aleph null

Aleph Null is an experimental audiovisual work that exists as a real-time performance, controlled by me. It is presented as an extremely early version of a complex performance system, in which an underlying abstract model generates both visual and sonic material. In the piece, I explore and construct visions based on simple mathematical processes, which lead to apparently complex and beguiling figures. Specifically, these can be seen as a limited examination of the thoughts and processes that I find interesting and compelling, and that drive me. This is especially revealed in the growth of simple patterns, in which variation can be replicated and built into more complex figures and fugues.

produced by: with-lasers / nathan.adams

Concept and background research

Aleph Null's origins are in my Research and Theory project, which resulted in the film Navagraha. For this work I moved from the fixed, pre-determined domain of a structured film to a real-time performance, in which the underlying abstract model drives the generation of both graphics and sound. The desire to create such a system comes from a dissatisfaction with the way graphics often accompany music in a live setting. They are somehow subservient to the musical performance, yet ironically they also constrain its timing. I wanted both graphics and sound to sit at the heart of the performance.

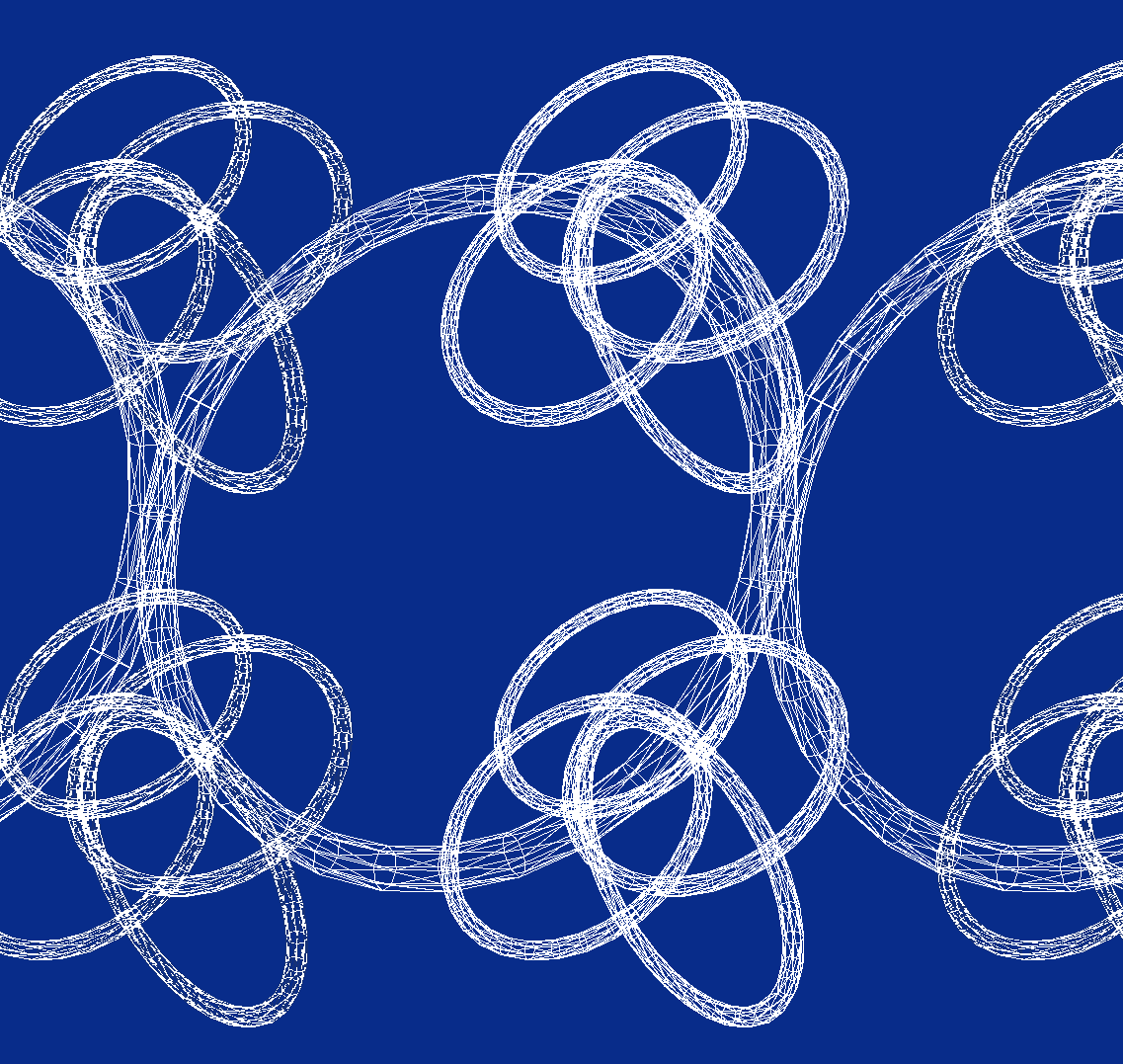

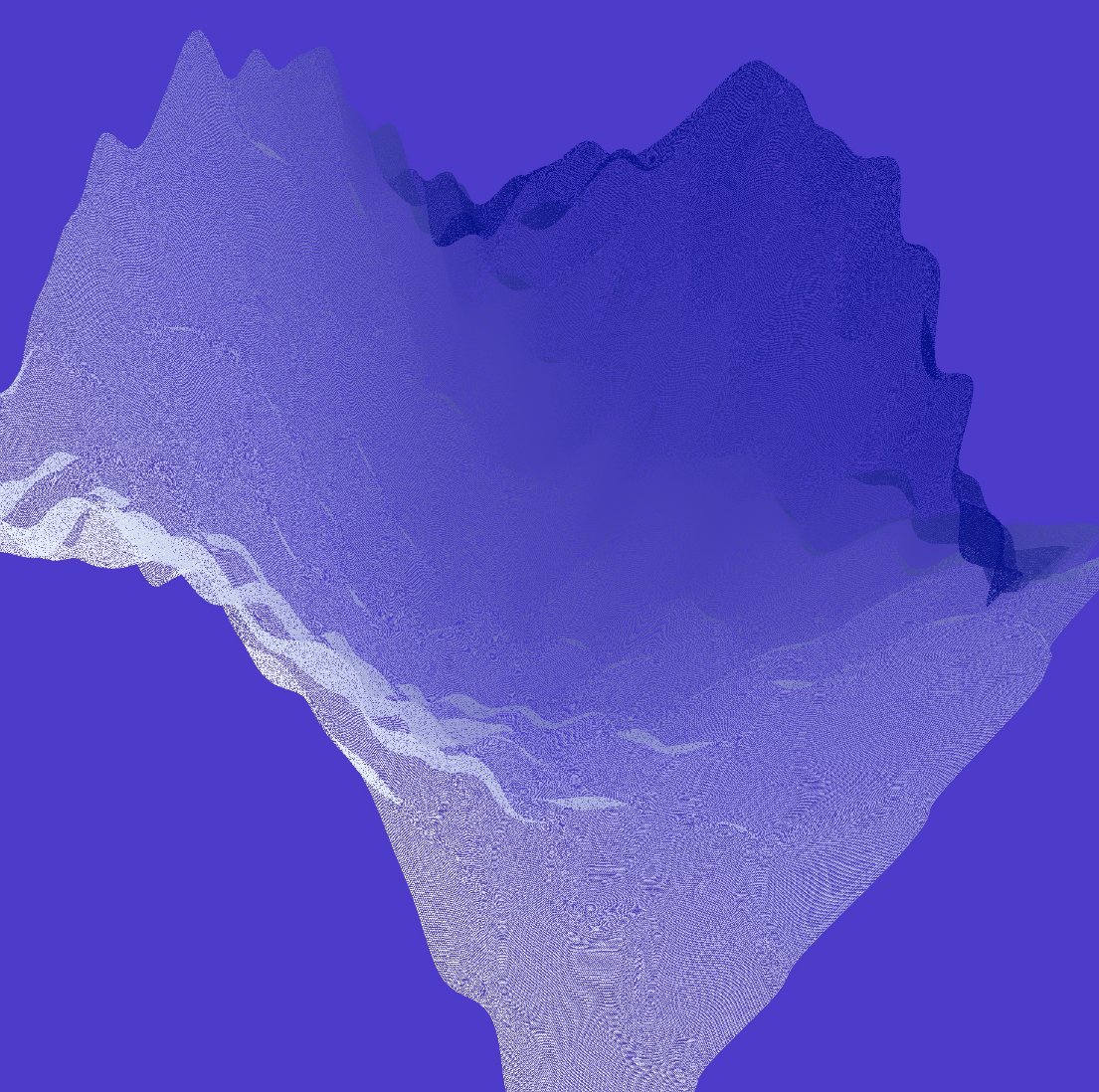

At the core of both Navagraha and Aleph Null is the Harmonograph, a system of rotating objects that can be linked to one another. Typically these are ‘rotors’. These are torus objects, and each can have one or more child rotors attached, as well as additional objects to be displayed. In Navagraha and Aleph Null, these additional objects are planes subdivided into meshes of triangular, square or diamond-shaped holes. The meshes are then deformed by trigonometric and noise functions, creating shapes that generate moire patterns and other interesting artefacts.
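To make the idea of linked rotors more concrete, here is a minimal sketch of how a chain of rotors might be evaluated. It assumes a 2D simplification in which each child is attached to the rim of its parent and its angle is measured relative to the parent, so the angles accumulate down the chain. It also includes a purely trigonometric vertex deformation. The names `Rotor`, `chainTip` and `deformZ` are hypothetical. This is not the Aleph Null code, which works in three dimensions and also uses noise.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical rotor description for a 2D sketch of a Harmonograph chain.
struct Rotor {
    double radius;  // distance from the parent's centre to this rotor's attachment point
    double speed;   // angular speed in radians per second, relative to the parent
    double phase;   // starting angle in radians
};

struct Vec2 { double x, y; };

// Returns the position of the tip of the last rotor at time t.
// Each child's angle is relative to its parent, so angles accumulate along the chain.
Vec2 chainTip(const std::vector<Rotor>& chain, double t) {
    Vec2 p{0.0, 0.0};
    double angle = 0.0;
    for (const Rotor& r : chain) {
        assert(r.radius >= 0.0);
        angle += r.speed * t + r.phase;
        p.x += r.radius * std::cos(angle);
        p.y += r.radius * std::sin(angle);
    }
    return p;
}

// Displaces a plane vertex at (x, y) along z with a simple trigonometric field.
// The noise term used in the actual work is deliberately omitted here.
double deformZ(double x, double y, double t, double amplitude, double frequency) {
    return amplitude * std::sin(frequency * x + t) * std::cos(frequency * y + t);
}
```

Because the angles accumulate down the chain, a slowly turning parent carries a fast child around with it. This is what produces the looping, harmonograph-like figures.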

This Harmonograph system is inspired by the work of John and James Whitney, early computer graphics pioneers. In particular, it draws on their early use of war-surplus gun computers to build the first motion control systems, which allowed for groundbreaking animation. Together with Bill Alves's paper "Digital Harmony of Sound and Light", about his work with John Whitney, this set the framework for a virtual system in three dimensions that could also be linked to sound generation.

Being diagnosed with dyspraxia and ADHD during my time at Goldsmiths has made me appreciate some of the limitations that, as a child, restricted my expression in the traditional arts. As an adult I have begun to use the computer, and code, as a prosthetic that allows me to create my own art. This performance system is another aspect of that. It allows me to consider performing, something I have not done for over 30 years.

Technical

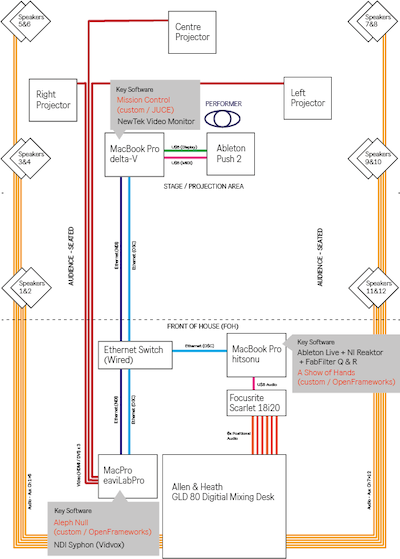

The system is composed of three main elements:

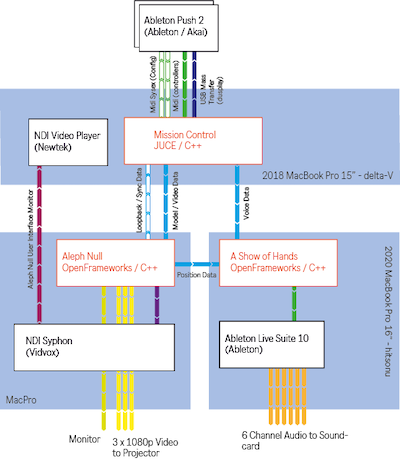

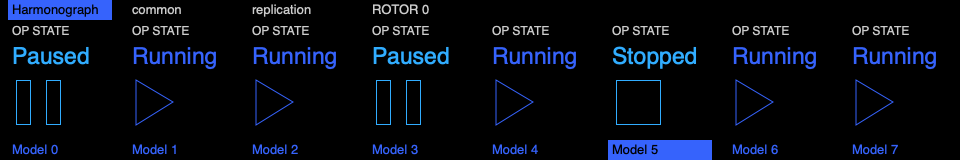

Mission Control / Ableton Push 2

A custom controller, written with the JUCE framework, that repurposes an Ableton Push 2 control surface. This allowed me to create a rich user interface alongside a multitude of pads, rotary encoders and other input devices. The application is responsible for sending OSC messages to the other components. It also manages a start-up sequence which ensures that all components start with their parameters in sync. It ran on a 15" MacBook Pro on stage with me.
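To show what "sending OSC messages" involves at the byte level, here is a minimal sketch that encodes single-float OSC 1.0 messages. Following the OSC spec, strings are NUL-terminated and padded to a multiple of 4 bytes, and floats are big-endian. The sketch also shows a hypothetical start-up snapshot that encodes every parameter so that all components begin from the same state. The function names and the idea of a flat address-to-value map are assumptions. In a JUCE application this would more likely be handled by the `juce::OSCSender` class from the juce_osc module.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <map>
#include <string>
#include <vector>

// Appends an OSC-string: the characters, a terminating NUL, then NUL padding
// so the total length (measured from the start of the message) is a multiple of 4.
void appendOscString(std::vector<std::uint8_t>& out, const std::string& s) {
    out.insert(out.end(), s.begin(), s.end());
    out.push_back(0);
    while (out.size() % 4 != 0) out.push_back(0);
}

// Encodes an OSC 1.0 message carrying a single float32 argument (type tag ",f").
std::vector<std::uint8_t> encodeFloatMessage(const std::string& address, float value) {
    assert(!address.empty() && address[0] == '/');  // OSC address patterns start with '/'
    std::vector<std::uint8_t> out;
    appendOscString(out, address);
    appendOscString(out, ",f");
    std::uint32_t bits;
    std::memcpy(&bits, &value, sizeof bits);        // reinterpret the IEEE 754 bit pattern
    for (int shift = 24; shift >= 0; shift -= 8)    // write the bytes in big-endian order
        out.push_back(static_cast<std::uint8_t>(bits >> shift));
    return out;
}

// Hypothetical start-up sync: one packet per known parameter, sent to every component
// so that all of them begin the performance with identical values.
std::vector<std::vector<std::uint8_t>> encodeStartupSnapshot(const std::map<std::string, float>& params) {
    std::vector<std::vector<std::uint8_t>> packets;
    for (const auto& kv : params)
        packets.push_back(encodeFloatMessage(kv.first, kv.second));
    return packets;
}
```

For example, `encodeFloatMessage("/a", 1.0f)` yields 12 bytes: `"/a"` padded to 4 bytes, `",f"` padded to 4 bytes, then `3F 80 00 00`.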

The application made direct use of Ableton's push2-display-with-juce project to drive the Push 2's 960 x 160 pixel display over USB.

Aleph Null

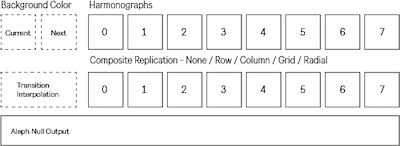

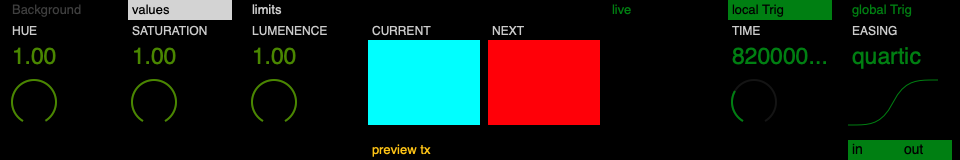

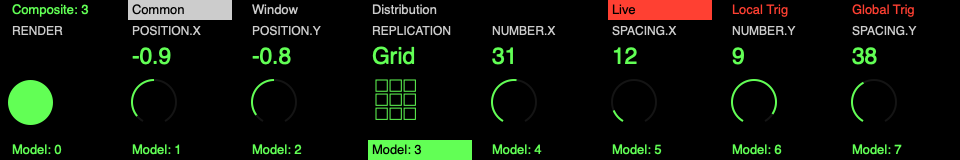

The main visualiser software, written in openFrameworks/C++, received OSC messages from Mission Control. It ran eight Harmonograph systems and composited them into the final displayed image. The compositing system allowed each individual Harmonograph frame to be replicated using a row, column, grid or radial method as desired. It also added a background colour that was fully controllable from Mission Control.
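As an illustration of the four replication methods, here is a minimal sketch that computes where each copy of a Harmonograph frame would be placed. The names `Replication`, `Placement` and `replicate` are hypothetical, and so are several layout choices: equal spacing, a near-square grid, and a radial ring at a quarter of the shorter canvas side. This is not the actual compositor.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

enum class Replication { Row, Column, Grid, Radial };

// Centre of one copy within the canvas, plus its rotation in radians.
struct Placement { double x, y, rotation; };

// Hypothetical layout of `count` copies of a Harmonograph frame within a width x height canvas.
std::vector<Placement> replicate(Replication mode, int count, double width, double height) {
    assert(count > 0);
    const double kPi = std::acos(-1.0);
    std::vector<Placement> out;
    switch (mode) {
    case Replication::Row:  // evenly spaced along the horizontal centre line
        for (int i = 0; i < count; ++i)
            out.push_back({width * (i + 0.5) / count, height / 2, 0.0});
        break;
    case Replication::Column:  // evenly spaced along the vertical centre line
        for (int i = 0; i < count; ++i)
            out.push_back({width / 2, height * (i + 0.5) / count, 0.0});
        break;
    case Replication::Grid: {  // near-square grid, filled row by row
        const int cols = static_cast<int>(std::ceil(std::sqrt(count)));
        const int rows = (count + cols - 1) / cols;
        for (int i = 0; i < count; ++i)
            out.push_back({width * (i % cols + 0.5) / cols, height * (i / cols + 0.5) / rows, 0.0});
        break;
    }
    case Replication::Radial: {  // ring around the centre, each copy rotated to face outwards
        const double radius = 0.25 * std::fmin(width, height);
        for (int i = 0; i < count; ++i) {
            const double a = 2.0 * kPi * i / count;
            out.push_back({width / 2 + radius * std::cos(a), height / 2 + radius * std::sin(a), a});
        }
        break;
    }
    }
    return out;
}
```

Each `Placement` would then be used to translate and rotate the Harmonograph's frame before it is drawn into the composite.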

The eight systems were hard-coded into an initial form, which could then be amended before and during the performance.

In addition to these key functions, it managed the output of a 5760 x 1080 pixel FBO across three windows, one for each projector. It also managed an overview window, which was sent back to the stage using Syphon and NDI. Finally, it sent OSC messages about Harmonograph positions to Ableton Live.
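The three projector windows each show one third of the 5760 x 1080 pixel FBO. Here is a minimal sketch of that arithmetic, assuming equal-width slices side by side. The names `Rect` and `projectorSlices` are hypothetical.

```cpp
#include <cassert>
#include <vector>

// Source rectangle within the wide canvas, in pixels.
struct Rect { int x, y, width, height; };

// Splits a wide canvas into equal vertical slices, one per projector.
std::vector<Rect> projectorSlices(int canvasWidth, int canvasHeight, int projectors) {
    assert(projectors > 0 && canvasWidth % projectors == 0);
    const int sliceWidth = canvasWidth / projectors;
    std::vector<Rect> slices;
    for (int i = 0; i < projectors; ++i)
        slices.push_back({i * sliceWidth, 0, sliceWidth, canvasHeight});
    return slices;
}
```

With `projectorSlices(5760, 1080, 3)`, each projector receives a 1920 x 1080 region starting at x = 0, 1920 and 3840. Each output window could then draw its region of the FBO's texture, for example with openFrameworks' `ofTexture::drawSubsection`.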

A Show of Hands / Ableton Live / Reaktor

The sound was generated in Native Instruments Reaktor 6, using blocks of free-running oscillator modules. These were managed by Ableton Live, which provided one voice for the background colour and one for each of the eight Harmonographs. Routed through the Ableton Surround Panner, these voices formed a six-channel positional mix.

A Show of Hands, an openFrameworks/C++ application, acted as an OSC-to-MIDI bridge, transferring parameters to Live from Mission Control and Aleph Null.

Although very limited compared with my initial goals, the basic sound control system was effective. The size of each rotor in a Harmonograph controlled either the base pitch or a relative pitch within the chord. The background hue, saturation and luminance did the same, which led to delightful effects when changes in the background colour produced divergent or convergent tones. The position of specified rotors placed each Harmonograph in the sound field.
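These mappings (size to pitch, position to place in the sound field) can be sketched as follows, passing through the 7-bit values that a MIDI bridge carries. All the ranges and function names here are hypothetical. The sketch assumes a linear size-to-note mapping, with larger rotors giving higher notes, and an azimuth measured counter-clockwise from the positive x axis. It illustrates the kind of scaling involved, not the actual mapping used in A Show of Hands.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Maps a normalised value in [0, 1] to a 7-bit MIDI controller value (0-127),
// clamping out-of-range input.
int toMidiCC(double normalised) {
    const double clamped = std::clamp(normalised, 0.0, 1.0);
    return static_cast<int>(std::lround(clamped * 127.0));
}

// Hypothetical mapping: a rotor size in [minSize, maxSize] selects a MIDI note in
// [lowNote, highNote]. Larger rotors give higher notes here, which is an assumption.
int sizeToNote(double size, double minSize, double maxSize, int lowNote, int highNote) {
    assert(maxSize > minSize && highNote >= lowNote);
    const double t = std::clamp((size - minSize) / (maxSize - minSize), 0.0, 1.0);
    return lowNote + static_cast<int>(std::lround(t * (highNote - lowNote)));
}

// Converts a rotor position relative to the canvas centre into an azimuth in [0, 360) degrees,
// which could then be scaled (azimuth / 360) and sent to a surround panner as a control value.
double azimuthDegrees(double x, double y) {
    const double kPi = std::acos(-1.0);
    const double deg = std::atan2(y, x) * 180.0 / kPi;
    return deg < 0.0 ? deg + 360.0 : deg;
}
```

For example, `sizeToNote(0.5, 0.0, 1.0, 48, 72)` gives note 60, and `toMidiCC(0.5)` gives 64.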

Technical Challenges

Aside from the sheer complexity of the undertaking (both the software itself and finding a setup that worked in the Goldsmiths SIML), the main challenge was that ofxSyphon would not work with the Harmonograph FBO output. This prevented me from running a separate application for each Harmonograph and compositing them in VidVox's VDMX. Instead, Aleph Null had to be developed as a single monolithic application.

Future development

I feel that this project and performance have only begun to scratch the surface of what is possible, and it is exciting to think about the future paths this work may take.

There are numerous artistic elements which can and will be developed. One is the most effective format for this as a performance, ranging from longer forms, such as a sound-bath-style meditative experience, to shorter and more musical forms. Another exciting prospect is an earlier idea that was dropped due to lack of time: triggering events from positions on a rotor. In effect, this would create a Euclidean sequencer that also has permutations in three dimensions.
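The rotor-driven Euclidean sequencer idea can be sketched as two parts. The first is a Euclidean rhythm, which spreads k onsets as evenly as possible over n steps. The second is a trigger test that fires when a rotor's angle crosses an onset step on its circumference. The rhythm below uses the Bresenham-style formulation rather than Bjorklund's algorithm. It gives a maximally even pattern, possibly a rotation of the one Bjorklund's algorithm produces. The trigger logic assumes an unwrapped, increasing rotor angle, and the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Euclidean rhythm via the Bresenham-style formula: step i is an onset when (i * pulses) mod steps < pulses.
std::vector<bool> euclidean(int pulses, int steps) {
    assert(steps > 0 && pulses >= 0 && pulses <= steps);
    std::vector<bool> pattern(steps);
    for (int i = 0; i < steps; ++i)
        pattern[i] = (i * pulses) % steps < pulses;
    return pattern;
}

// Hypothetical trigger logic: the rotor's circumference is divided into pattern.size() equal steps.
// Given the rotor's unwrapped angle on the previous and current frame (assumed to increase),
// return the indices of onset steps whose boundary was crossed during this frame.
std::vector<int> triggersCrossed(const std::vector<bool>& pattern, double prevAngle, double angle) {
    assert(!pattern.empty());
    const double kTwoPi = 2.0 * std::acos(-1.0);
    const int n = static_cast<int>(pattern.size());
    const double stepSize = kTwoPi / n;
    std::vector<int> fired;
    const long first = static_cast<long>(std::floor(prevAngle / stepSize)) + 1;
    const long last = static_cast<long>(std::floor(angle / stepSize));
    for (long s = first; s <= last; ++s) {
        const int index = static_cast<int>(((s % n) + n) % n);  // wrap into [0, n), also for negative s
        if (pattern[index]) fired.push_back(index);
    }
    return fired;
}
```

`euclidean(3, 8)` gives the familiar `x..x..x.` pattern. A rotor completing one full turn crosses all three onsets, so its rotation speed directly sets the tempo of the sequence.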

I look forward to spending more time developing the visual and sonic elements, as they took a back seat to developing the control system. Now that the control system is in place, there should be room for more research and development in these areas.

In addition, I plan to use my remaining time at Goldsmiths, during the rest of my MFA, to continue researching not just this project but also how to get the best out of a venue like the SIML to create a more immersive experience. For example, rather than treating the space as one large, wide canvas, I could create specific views from virtual cameras that represent the actual positions of the screens in the virtual environment.

Self evaluation

Atau Tanaka stated in a summer session in 2020 that "if your project involves creating a controller, then it will be about building that controller, and not so much what it creates". This work has proved that he is, of course, absolutely correct. A large share of the time spent on this project (perhaps 50%) went into developing the Mission Control application. As a result, it's hard for me not to feel that the visual and sonic elements of this piece were poorly served, and that many of the features I wished to implement were either not completed or not started.

Doing this within the SIML environment created additional challenges, particularly in deciding where best to locate computing resources: near the performer, or near the audio and video inputs.

Reviewing the various recordings of the performances, it's hard for me not to see the many areas which needed more time and development. For example, the compositor had no ability to fade a Harmonograph in or out, and its binary on/off behaviour was not ideal. Similarly, there were issues with the base parameters of the replication system that created odd, difficult-to-control effects. Most disappointingly, my ideas for modifying the sound as a result of replication could not be realised at this time, an element I look forward to adding in the future.
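One way to replace the binary on/off behaviour is a per-Harmonograph fader whose opacity moves toward its target at a fixed rate each frame. Here is a minimal sketch of that approach. The class name `LayerFader` and the linear ramp are assumptions, not part of the existing compositor.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical per-Harmonograph fader: instead of switching a layer on or off,
// its opacity ramps linearly toward a target, so toggles become timed fades.
class LayerFader {
public:
    explicit LayerFader(double fadeSeconds) : fadeSeconds_(fadeSeconds) { assert(fadeSeconds > 0.0); }

    // Sets the target opacity: 1 when visible, 0 when hidden.
    void setVisible(bool visible) { target_ = visible ? 1.0 : 0.0; }

    // Advances the fade by dt seconds and returns the opacity to draw the layer with.
    double update(double dt) {
        const double step = dt / fadeSeconds_;
        if (opacity_ < target_) opacity_ = std::min(target_, opacity_ + step);
        else opacity_ = std::max(target_, opacity_ - step);
        return opacity_;
    }

    double opacity() const { return opacity_; }

private:
    double fadeSeconds_;
    double opacity_ = 0.0;
    double target_ = 0.0;
};
```

With a 2-second fade, calling `update(1.0)` after `setVisible(true)` returns 0.5, and a second call returns 1.0. The returned value could be applied, for example, as the alpha component passed to `ofSetColor` before drawing that Harmonograph's FBO.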

That being said, it's hard not to think of this work as a triumph. The goal was to demonstrate that it is possible to create an interesting, relevant and thought-provoking performance using the elements I already had in place, but running in real time (not forgetting that the final minutes of the Navagraha film took 10 hours to render). This I achieved.

One aspect I'm particularly pleased by is just how responsive the system was to how I was feeling. The most telling example was the Saturday night performance. Just before show time, I encountered network issues which stopped OSC messages from reaching A Show of Hands, leaving the audio unresponsive. Thankfully I was able to resolve this, though I never found out why it happened. The tension carried into the performance, resulting in what felt like an angrier version of the work. I pushed things harder, and those who had seen multiple iterations said it was the best so far.

Not only am I proud of achieving this goal of creating a truly performable work, but I also learnt how to take the work to new levels. In subsequent performances I was able to be a little less tame and restrained, which I think added to the impact of the work.

References

https://github.com/Ableton/push2-display-with-juce - reference for using the display on the Ableton Push 2 device

https://github.com/Ableton/push-interface - reference for the MIDI and USB implementation of the Ableton Push 2 device

https://scholarship.claremont.edu/hmc_fac_pub/1068/ - Bill Alves - Digital Harmony of Sound and Light

https://www.youtube.com/watch?v=kzniaKxMr2g - James Whitney - Lapis

https://www.youtube.com/watch?v=w7h0ppnUQhE - John Whitney - Arabesque

https://www.awn.com/mag/issue2.5/2.5pages/2.5moritzwhitney.html - William Moritz - Digital Harmony: The Life of John Whitney, Computer Animation Pioneer

https://www.atariarchives.org/artist/sec23.php - John Whitney - Computational Periodics

http://www.centerforvisualmusic.org/WMEnlightenment.html - William Moritz - Enlightenment

The above is the source of the comment "Between 1950 and 1955, James constructed an astonishing masterpiece, Yantra, by punching grid patterns in 5" x 7" cards with a pin, and then painting through these pinholes onto other 5" x 7" cards images of rich complexity and dynamism". I misread this comment, and the misreading led to the creation of the deformed meshes that produce the moire effects in Navagraha and Aleph Null.

The following are from my project website https://harmonograph.with-lasers.studio, which collects together my developments of the Harmonograph. A longer version of this text will be posted there as an essay in the near future.

https://harmonograph.with-lasers.studio/blog/20210509_navagrahaDiscussion - Nathan Adams, 2021

https://harmonograph.with-lasers.studio/blog/20210316_definingATestBed - Nathan Adams, 2021

Below are a recording of a complete performance and the audio from the same performance. The audio was recorded in Ableton Live and has been binaurally encoded, so listening with headphones is recommended.