Computer Science & Robotics Research

Research at the Goldsmiths Atelier

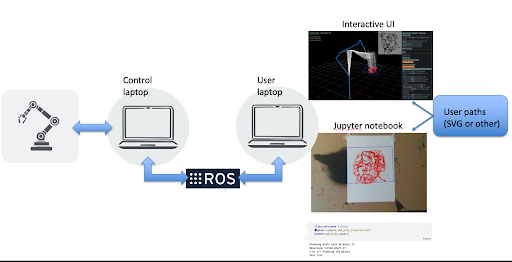

We have been focused on preparing the Franka Emika robot for collaborative work with artists, alongside developing technologies for image generation and stylization to be used by them.

On the fabrication side, we have equipped the robot for painting applications, including the design of (i) a pen-holding system similar to that used by the AxiDraw pen-plotter, allowing for easy testing of different brushes and pens, and (ii) an orientable drawing pad that supports various drawing configurations depending on the medium used.

On the software side, we have implemented (i) a path-planning system that enables the robot to draw vector files (SVG) or follow custom trajectories with specific kinematic requirements, and (ii) a Python API that allows for easy interfacing with the robot over a network (e.g., through Jupyter notebooks) and for programming motions through a combination of human demonstration and scripting (e.g., picking tools, mixing paint). The system includes an interactive UI for monitoring and programming the robot.

On the research side, we are currently focused on developing methods that enable the generation of smooth “calligraphic” strokes with variable width profiles. The system can be easily integrated into generative imaging pipelines (e.g., Stable Diffusion), allowing for a flexible combination of criteria that guide stroke generation, such as image similarity, semantics, and structural/stylistic constraints.
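To illustrate what drawing an SVG path involves at the lowest level, the following is a minimal sketch, not the project's actual path planner. It evaluates a single cubic Bézier segment (the SVG `C` command) and resamples it into waypoints that are evenly spaced along the curve. The function names, the `spacing` parameter, and the `dense` sampling count are all assumptions made for this example. Kinematic constraints such as velocity and acceleration limits are left out entirely.

```python
import math


def cubic_bezier(p0, p1, p2, p3, t):
    """Evaluate a cubic Bezier segment (SVG 'C' command) at t in [0, 1]."""
    u = 1.0 - t
    b0, b1, b2, b3 = u ** 3, 3 * u * u * t, 3 * u * t * t, t ** 3
    return (
        b0 * p0[0] + b1 * p1[0] + b2 * p2[0] + b3 * p3[0],
        b0 * p0[1] + b1 * p1[1] + b2 * p2[1] + b3 * p3[1],
    )


def resample_by_arc_length(points, spacing):
    """Walk along a polyline and emit a waypoint every `spacing` units."""
    out = [points[0]]
    carried = 0.0  # distance travelled since the last emitted waypoint
    for a, b in zip(points, points[1:]):
        seg = math.dist(a, b)
        if seg == 0.0:
            continue  # skip duplicate points
        d = spacing - carried  # offset of the next waypoint on this segment
        while d <= seg:
            s = d / seg
            out.append((a[0] + s * (b[0] - a[0]), a[1] + s * (b[1] - a[1])))
            d += spacing
        carried = seg - (d - spacing)
    return out


def bezier_to_waypoints(p0, p1, p2, p3, spacing, dense=200):
    """Hypothetical helper: densely sample the curve, then space it evenly."""
    dense_pts = [cubic_bezier(p0, p1, p2, p3, i / dense) for i in range(dense + 1)]
    return resample_by_arc_length(dense_pts, spacing)


if __name__ == "__main__":
    # Units are arbitrary here (e.g., millimetres on the drawing pad).
    waypoints = bezier_to_waypoints((0, 0), (0, 50), (100, 50), (100, 0), spacing=5.0)
    print(len(waypoints), "waypoints, first:", waypoints[0])
```

Even spacing along the curve, rather than even steps in the curve parameter, keeps the tool speed roughly constant when waypoints are sent at a fixed rate. A real planner would also need to map these 2D points onto the drawing pad's pose and handle pen-up and pen-down moves.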

Research at the Konstanz Atelier

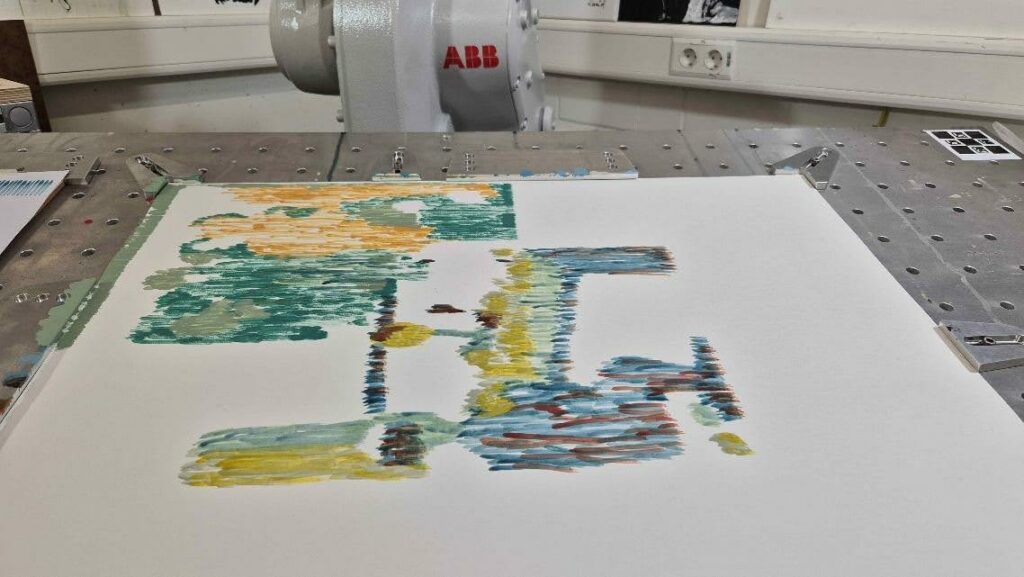

Starting from an arbitrary image, often used by artists as a reference or motif, we developed a framework to transform the image into an abstract vector representation. This abstraction highlights semantically significant objects and key structures of the original. A dynamic filling algorithm then paints the vectorized regions onto a physical canvas, working in conjunction with a visual feedback system and a painting robot.

Over the past few months, this system has undergone iterative improvements, evolving from early results with indistinct shapes to increasingly recognizable and refined outputs.

Visits

The Atelier in Konstanz has been a meeting point for the project's computer scientists and artists. Daniel Berio, a computer scientist and artist on the team, and two of the project's resident artists, Anna Mirkin and Gretta Louw, visited the atelier.

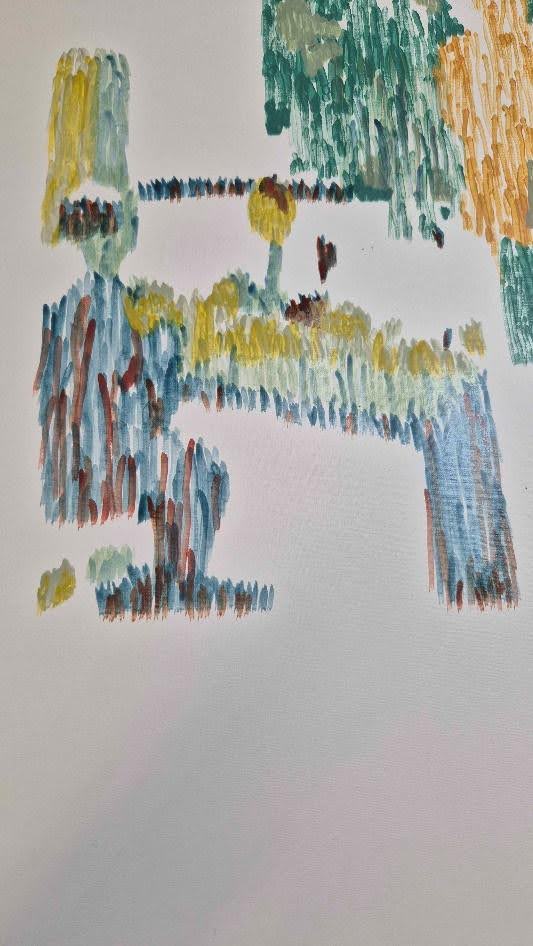

Anna visited us for a few days at the end of June, and we tested our abstraction framework on AI-generated images that suited her historical narrative. We then ran early tests with a simplified painting agent that fills the abstract regions of the picture on the canvas by fitting constrained vertical strokes into the shapes.

At that time, the shape-filling algorithm was still in the early stages of development. Nevertheless, we produced early results for this constrained setting while clarifying the general framework for realizing Anna’s project.
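The idea of fitting constrained vertical strokes into a shape can be sketched as follows. This is a simplified illustration under stated assumptions, not the painting agent used in the atelier. It assumes the region is given as a binary pixel mask (a list of rows of 0/1 values), that strokes are placed in columns one brush width apart, and that runs shorter than `min_length` are dropped. The function name and both parameters are hypothetical.

```python
def vertical_strokes(mask, brush_width=2, min_length=2):
    """Cover a binary mask with vertical strokes.

    Returns a list of (x, y_start, y_end) tuples in pixel coordinates,
    one per contiguous vertical run of filled pixels in each sampled column.
    """
    height = len(mask)
    width = len(mask[0]) if height else 0
    strokes = []
    # Sample one column per brush width, centred within each band.
    for x in range(brush_width // 2, width, brush_width):
        run_start = None
        # Iterate one step past the bottom so a run touching the edge is closed.
        for y in range(height + 1):
            inside = y < height and bool(mask[y][x])
            if inside and run_start is None:
                run_start = y
            elif not inside and run_start is not None:
                if y - run_start >= min_length:
                    strokes.append((x, run_start, y - 1))
                run_start = None
    return strokes


if __name__ == "__main__":
    region = [
        [0, 1, 1, 0],
        [0, 1, 1, 0],
        [0, 1, 1, 0],
        [0, 0, 1, 0],
    ]
    for stroke in vertical_strokes(region, brush_width=1):
        print(stroke)
```

Restricting strokes to a single direction keeps the planning problem simple, but it covers elongated or curved regions poorly, since only the sampled column decides where a stroke starts and ends.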

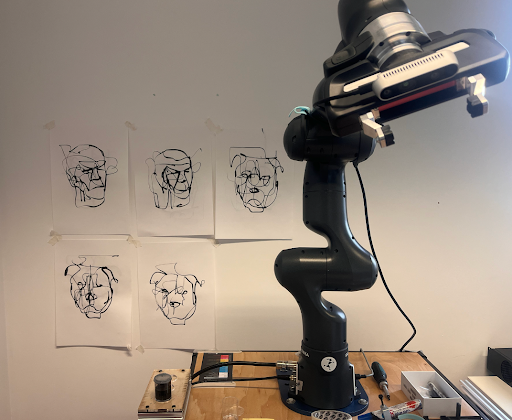

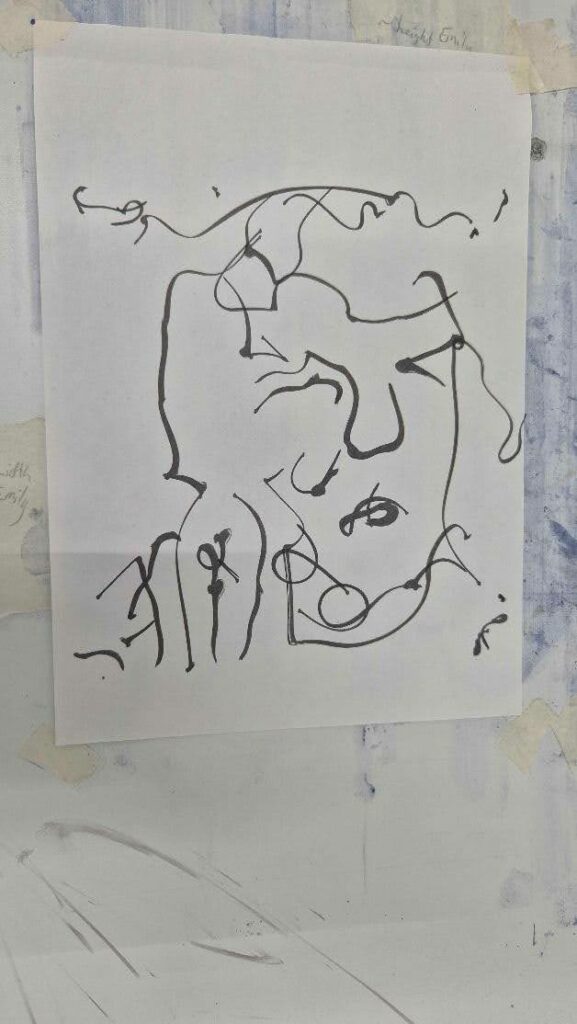

During Daniel Berio’s visit, we ran his optimization-based image line work on one of our robots and obtained good results.

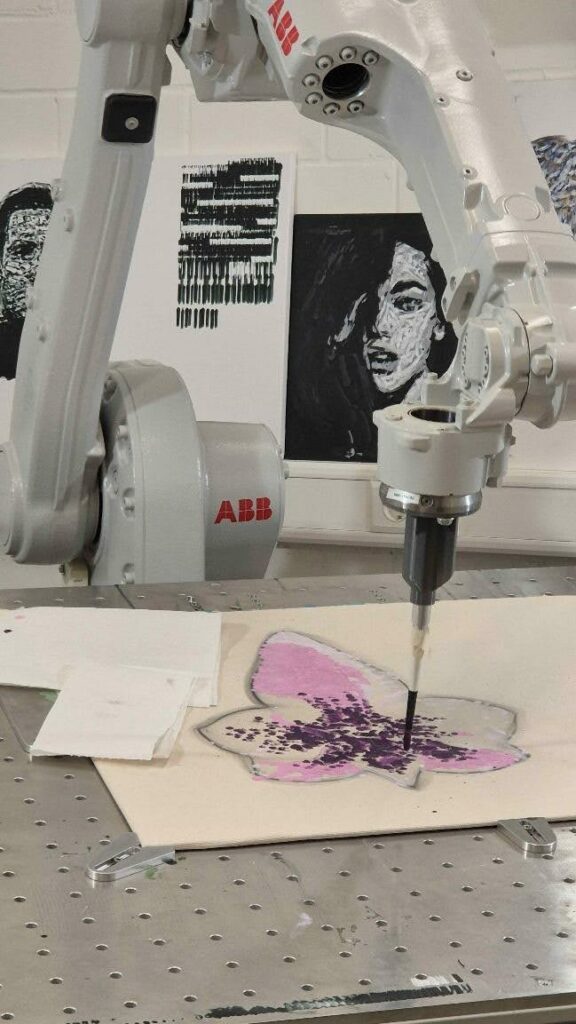

During Gretta Louw’s visit in early July, which overlapped with Daniel Berio’s, we also settled on the general abstraction framework we want to use to realize her project proposal. During her stay, we focused on improving the robot's filling technique, because at that point the region-filling system could not yet produce the elongated, curvy strokes that would suit the flowery patterns of the orchids. Despite these limitations, we painted two of her AI-generated orchid images onto stretched linen.