Ground

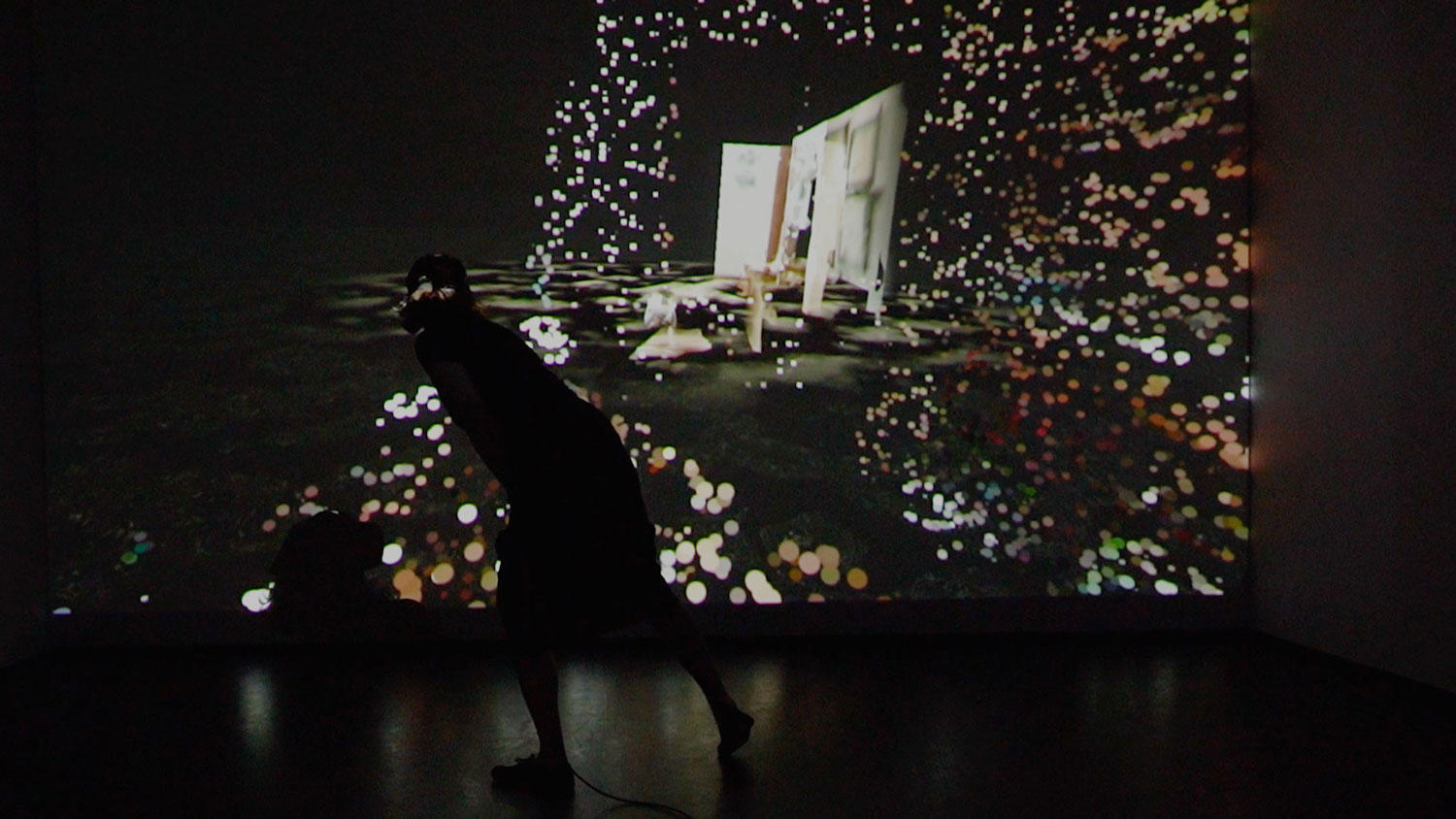

A performative Virtual Reality piece exploring memory and embodied perception through the visual language of abstraction and animation.

produced by: Rebecca Aston

Introduction

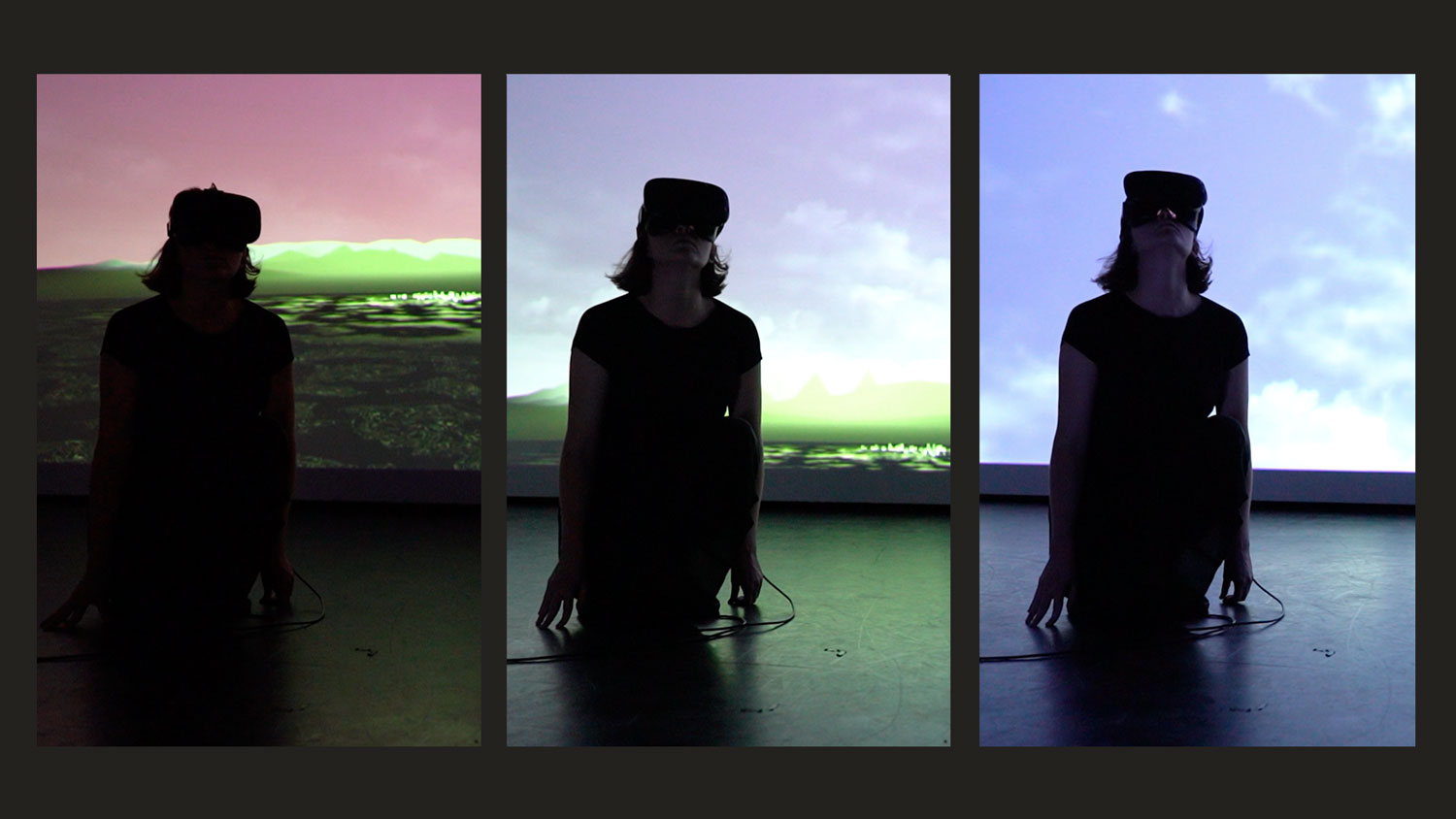

Grounded in a shifting terrain, living in the sediments of the past, disorientation becomes the only thing to hold onto. Ground is a Virtual Reality piece that celebrates groundlessness and change, claiming disorientation as a positive state to feel your way into or through. Within the work, texture is used as a way to rethink a ground—the present moment—that is in constant flux, steeped in history and memory. The perception of texture exists at the border of sight, touch and sound, and so it becomes a device to recall and remix time and space. Ground uses the medium of Virtual Reality to think about perception and embodiment. Following a meandering line through abstract painting, color theory, minimalist sculpture and light art, Ground makes use of abstraction, light and the relationship between bodies and the worlds they are perceiving. A gaze controller allows a user to change and animate the virtual world by moving their head and looking around, which results in visual and aural textures that build up to a synaesthetic and embodied experience. The work became a performative piece presented in the G05 performance space at Goldsmiths as a response to the health and safety limitations brought about by the pandemic. This work is a part of my larger MFA study of perception and VR.

Concept and background research

“Recent 3D animation technologies incorporate multiple perspectives, which are deliberately manipulated to create multifocal and nonlinear imagery. Cinematic space is twisted in any way imaginable, organized around heterogeneous, curved, and collaged perspectives.” (Hito Steyerl, “In Free Fall”).

In a 2011 essay, Hito Steyerl talks about the loss of the horizon brought about by new technological ways of seeing. Animation exaggerates what film began with the invention of the close-up and Sergei Eisenstein’s invention of montage (editing): these twisted image spaces break realistic perceptions of space. Narrative in VR, however, has been described as a problem, in part because the loss of the horizon in montage makes it difficult to translate the technique into VR. Rapid transitions in the spirit of montage cause disorientation, dizziness and, at worst, nausea. Within VR the primacy of the human perceptual system has necessitated a return of the ground or horizon in order to orient and anchor bodies, which at first glance seems to leave us stuck with realism and coherent perceptual spaces. However, within my work I wanted to rescue the ground from realism, to bring ground and the horizon into abstraction. I create spaces that are constantly changing and fractured, while insisting that new ways of seeing will always be anchored in the human body.

Within Ground I used texture as a way to rethink the ground. I primarily studied the psychologist James Gibson’s work on ecological perception; Gibson describes the ambient optic array or "optical texture" as the structure of light entering the eye. I am interested, however, in a spectrum of texture that runs from the embodied perception of space to the particularities of lived experience, including the zone of narrative, memory and history. Renu Bora captures the two types of texture that I am interested in: “texxture is the kind of texture that is dense with offered information about how, substantively, historically, materially, it came into being… But there is also the texture—one x this time—that defiantly or even invisibly blocks or refuses such information; …that insists instead on the polarity between substance and surface” (qtd. in Sedgwick 15). The sediments of history and memory in the texture of the world are felt in different ways according to different lived experiences. This fact is central to my work as a white Zimbabwean coming to terms with the colonial history of my country. A study of light and perception could perhaps be seen as an investigation into the universality of perception. However, I don't claim that all bodies and minds react the same way, let alone emote or “read” or decode in the same way. Deborah Levitt, in her writing on VR, states that VR is not an empathy machine, as Chris Milk called it (“Five Theses on Virtual Reality”). Similarly, I claim that VR is not a transparent medium; it is no different from other art forms where the maker or author invites you into their subjectivity, into their framing of the world.

Ground is part of an ongoing set of works that use 3D scans of places I have lived in Zimbabwe. It is thus directly situated in personal history and confrontations with history. The other part of my background research was into the idea of disorientation and making things strange. I primarily studied Sara Ahmed’s work, Queer Phenomenology, where she describes coming to phenomenology through an interest in thinking about lived experience. Her project is to think through the possibilities for the new orientations that come out of disorientation or losing one’s grounding. Another line of study was Bertolt Brecht’s “defamiliarization effect”, also known as the “distancing effect”. Brecht’s tactics, for example actors breaking the fourth wall by addressing the audience, are used to encourage self-reflexivity in the audience by creating a distance from the medium, by making things strange. Within my artwork, a feeling of being disoriented has largely come about through a process of learning history. My interests in archives or the historical record are firmly rooted in a personal engagement with the history of Zimbabwe. I am “reading Zimbabwe” to uncover incomplete histories, in similar ways to artists like Black Chalk and Co. through their project readingzimbabwe.com. In my case, this means confronting history that was either not told to me when I was young, or history in which whiteness has simultaneously dominated and been made invisible.

Technical

I made this work in Unreal Engine. I had initially been thinking about texture in relation to touch and haptics, though when I started to adapt the work to become a performance piece in response to the pandemic, I switched to focusing on sight. The central mechanic is, therefore, a gaze controller. I used a VR plugin, but wrote all the code for the gaze controller myself, using ray casting (line traces along the direction of the user's gaze) to detect where things were. Different sounds and animations are triggered depending on the distance between the user and where they look.
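As a rough illustration of the idea (not the Unreal Engine code used in the piece), the sketch below casts a gaze ray from the head onto a flat ground plane and maps the resulting distance to a cue. The function names, the flat ground at `z = 0`, the distance bands and the cue names are all assumptions made for the example.

```python
import math

# Hypothetical distance bands (metres) and cue names; the real piece
# triggers its own sounds and animations.
BANDS = [(1.0, "near"), (4.0, "mid"), (math.inf, "far")]


def normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("gaze direction must be non-zero")
    return tuple(c / length for c in v)


def gaze_hit_on_ground(head_pos, gaze_dir, ground_z=0.0):
    """Intersect the gaze ray with the plane z = ground_z.

    Returns the hit point, or None when the ray is parallel to the
    ground or points away from it.
    """
    d = normalize(gaze_dir)
    if d[2] == 0.0:
        return None
    t = (ground_z - head_pos[2]) / d[2]
    if t <= 0.0:
        return None
    return tuple(p + t * c for p, c in zip(head_pos, d))


def cue_for_gaze(head_pos, gaze_dir):
    """Pick a cue from the distance between the head and the gaze hit."""
    hit = gaze_hit_on_ground(head_pos, gaze_dir)
    if hit is None:
        return None
    distance = math.dist(head_pos, hit)
    for limit, name in BANDS:
        if distance <= limit:
            return name
```

With these assumed bands, a crouched user looking straight down at their feet would fall in the "near" band, while looking up at the sky returns no ground hit at all.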

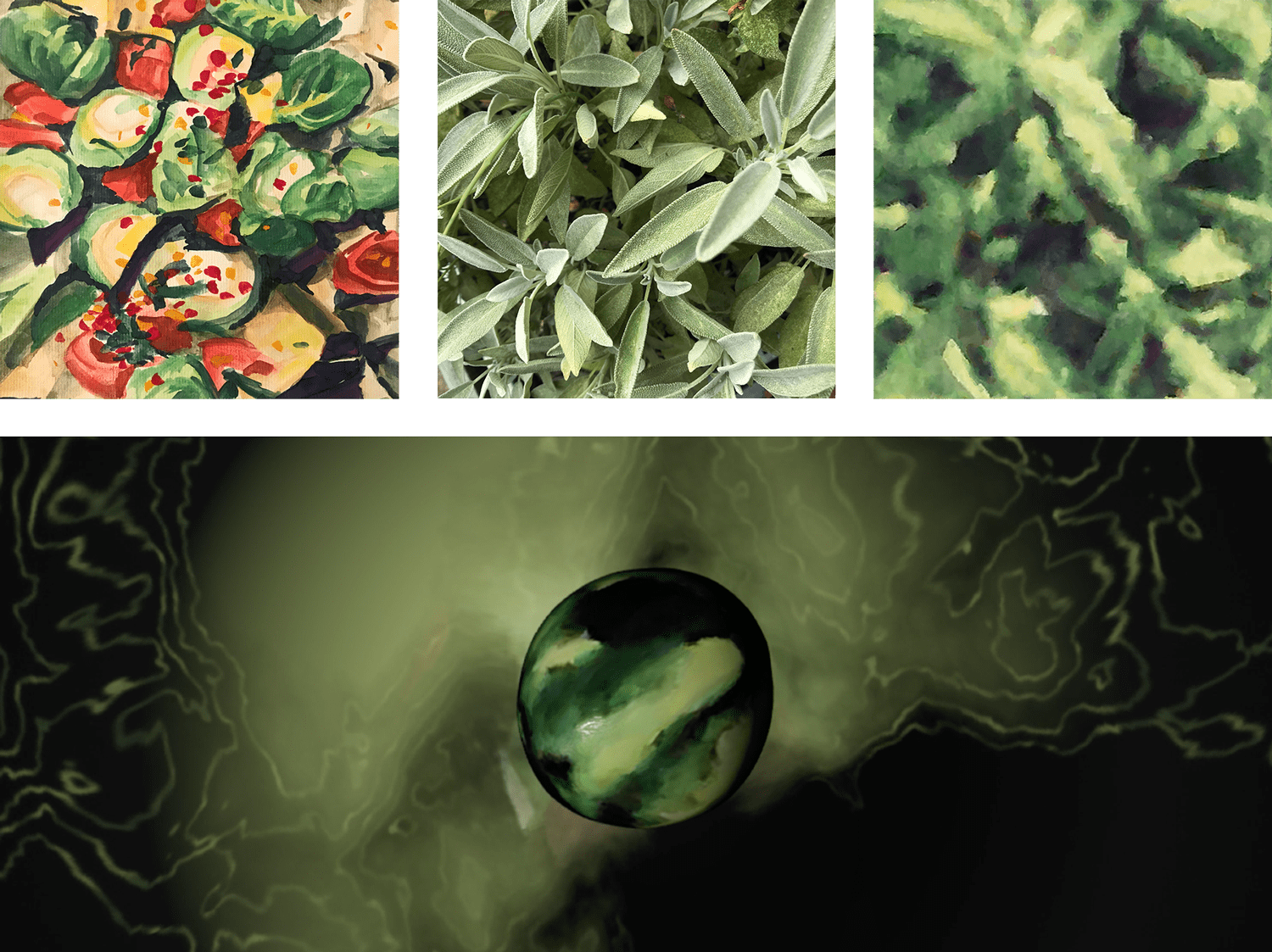

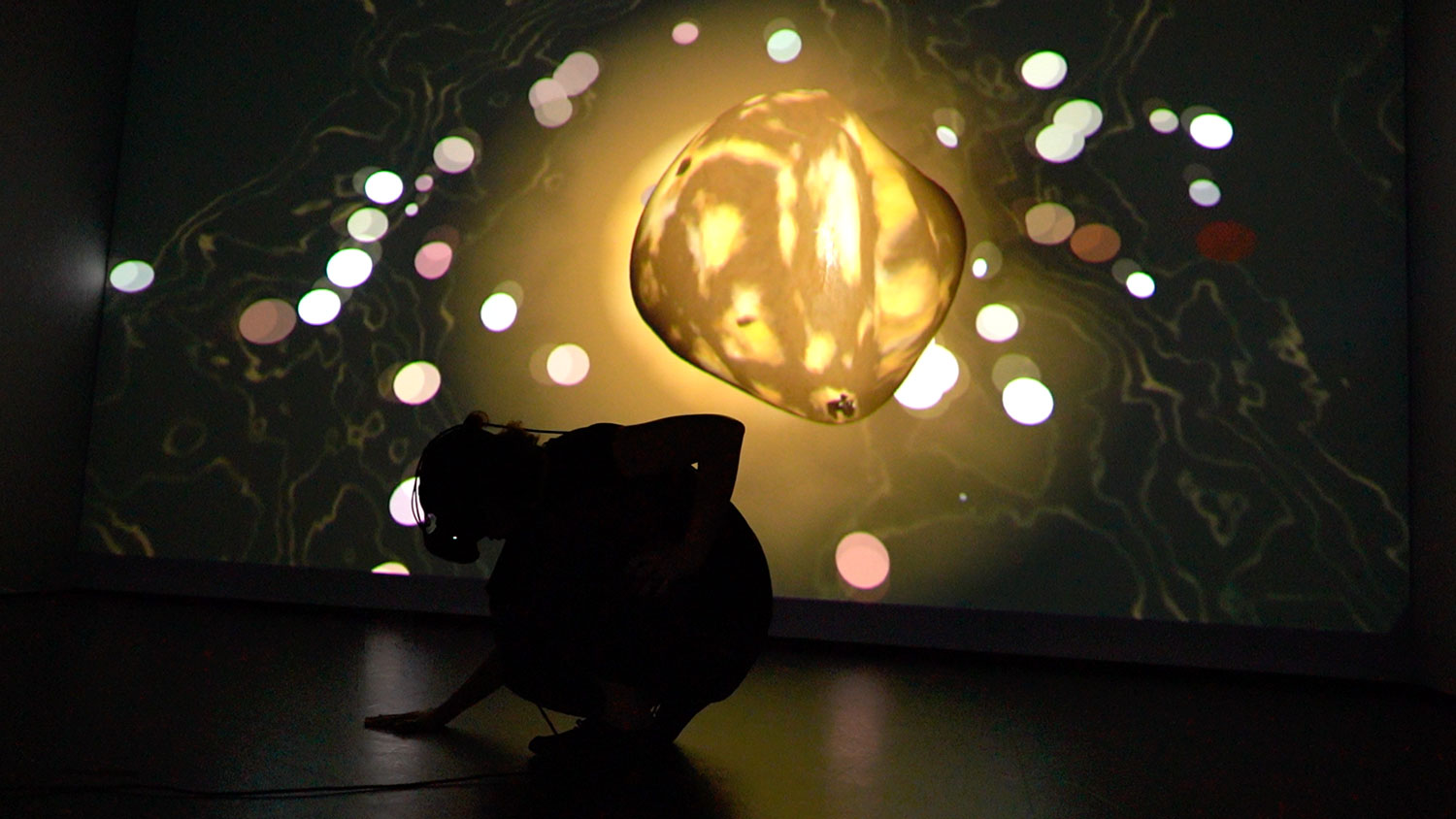

I was thinking about texture and sound change as a way to transport the user in time and space. The texture ball that appears when the user looks at their feet is the mechanic to move between spaces. Once the user is crouched down low to the ground with the ball in sight, change begins to happen, transitioning slowly between sounds and textures. At the end of each cycle of change a new texture is randomly chosen from an array of textures before the next animation cycle begins. The texture change was achieved by using render targets at run-time: I dynamically mixed two textures together using a noisy mask, rendering the result on each frame of the transition to a target that was then sampled by many other materials and particle effects. I gave each texture the association of being either an indoors or an outdoors texture. When the user looks up to the horizon, the current texture determines whether they are transported outdoors or indoors. Outdoors is an open space with the horizon line, with ambient textural sounds drawn from a selection of sounds that I associate with being outdoors. Indoors, the user is transported into a room in the house; each room is a point cloud made from a 3D scan. Similarly, the indoor sounds are ones I chose to evoke being indoors. Color changes to the ground brought about by the texture allude to different floors or surfaces depending on where the user looks. The changing sounds were also a tool that I used to evoke the sensation of different space-times or memories.
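The transition described above can be sketched in plain Python as a stand-in for the render target and material setup. This is a minimal sketch under several assumptions: textures are small grayscale grids, the noise mask is uniform random noise, the `softness` parameter and the texture names are hypothetical, and the next texture is drawn from the whole array (the current one is not excluded).

```python
import random

# Hypothetical texture array; each entry carries its indoors/outdoors tag.
TEXTURES = [
    ("painting_a", "outdoors"),
    ("floor_scan", "indoors"),
    ("grass_photo", "outdoors"),
]


def make_noise_mask(width, height, seed=0):
    """Grid of noise values in [0, 1) used to stagger the transition."""
    rng = random.Random(seed)
    return [[rng.random() for _ in range(width)] for _ in range(height)]


def blend_with_mask(tex_a, tex_b, mask, progress, softness=0.1):
    """Mix two grayscale textures; pixels with low noise switch first.

    progress runs from 0.0 (all tex_a) to 1.0 (all tex_b).
    """
    out = []
    for row_a, row_b, row_m in zip(tex_a, tex_b, mask):
        row = []
        for a, b, n in zip(row_a, row_b, row_m):
            w = (progress * (1.0 + softness) - n) / softness
            w = max(0.0, min(1.0, w))  # clamp the blend weight to [0, 1]
            row.append(a * (1.0 - w) + b * w)
        out.append(row)
    return out


def pick_next_texture(rng):
    """Choose the texture for the next cycle at random."""
    return rng.choice(TEXTURES)


def destination_for(texture):
    """The texture's tag decides where looking up takes the user."""
    return texture[1]
```

In the piece itself the blended result is written to a render target each frame so that many materials and particle effects can sample the same image; here `blend_with_mask` simply returns a new grid for a given `progress` value.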

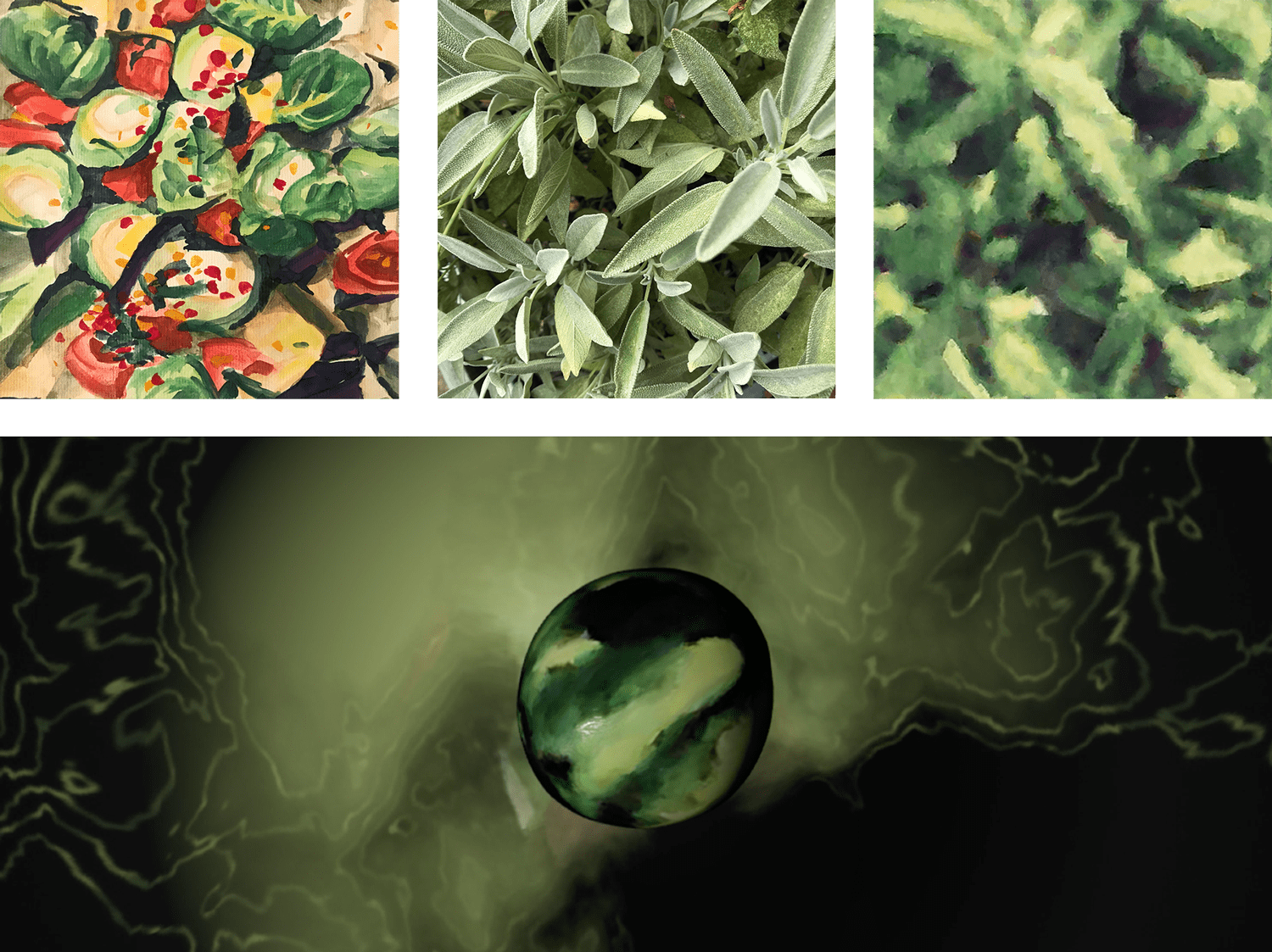

Within my study of texture this year I was looking up various algorithms to generate repeating textures. This led me to Anastasia Opara, who works at a creative studio called Embark in Stockholm, and to her lecture on texture synthesis. Unfortunately I didn’t have the time to write my own algorithms or grapple with texture synthesis algorithms directly; instead I used the open source tools that Anastasia and her studio have created in Rust. I hope to continue this line of investigation, learning more about both texture synthesis and Rust. All of the textures I used in Ground were generated from paintings of mine and photographs I took. I will detail how I made one texture as an example. For this one I used the style transfer algorithm in the Embark texture synthesis toolkit. I applied the style of one of my paintings to an image and set the texture synthesis command line flags to tile the output in order to get a seamless repeating texture tile. Below you first see an image of my painting, next the photograph to which the painting style was applied, then the resulting texture tile and beneath that a screen capture of the texture in use inside my VR work.

There were various other mechanics that made the environment reactive to the user’s body. Many of the effects were done in materials (graphical shaders); I used vertex displacement, masking based on world locations and animated noise, to name a few. I used Niagara particle systems in Unreal Engine for the particle effects: particles within a certain range of where the user was looking would be attracted to that central point, triggering a textural sound that depended on how fast the user moved their head. In the outdoors scene the horizon emerges out of the darkness when you are looking at it, and when you have your head tilted all the way back, a blue sky fills your vision. In the indoor scenes there was always a more solid room through one of the doors that the user could only peer into by tilting their body; this was achieved by using the viewing angle between the user and the door frame to rotate a box mask on the 3D mesh.
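The gaze-driven particle behaviour can be approximated outside of Niagara with a few lines of vector math. The following is only a sketch of the idea: the function names, the constant-speed attraction, the speed threshold and the sound names are assumptions, not the values or logic used in the piece.

```python
import math


def attract_particles(particles, gaze_point, radius, strength, dt):
    """Pull particles within `radius` of the gaze point toward it.

    Each affected particle moves at `strength` units per second and
    never overshoots the gaze point; other particles are left alone.
    """
    moved = []
    for p in particles:
        offset = tuple(g - c for g, c in zip(gaze_point, p))
        dist = math.sqrt(sum(o * o for o in offset))
        if 0.0 < dist <= radius:
            step = min(strength * dt, dist)
            p = tuple(c + o / dist * step for c, o in zip(p, offset))
        moved.append(p)
    return moved


def head_speed(prev_dir, curr_dir, dt):
    """Angular speed (radians/second) between two unit gaze directions."""
    dot = sum(a * b for a, b in zip(prev_dir, curr_dir))
    dot = max(-1.0, min(1.0, dot))  # guard acos against rounding error
    return math.acos(dot) / dt


def sound_for_speed(speed, threshold=1.0):
    """Hypothetical mapping from head speed to a textural sound."""
    return "fast_texture" if speed > threshold else "slow_texture"
```

Calling `attract_particles` once per frame with the current gaze hit point, and feeding `head_speed` the previous and current gaze directions, gives the basic loop: nearby particles drift toward where the user looks, and quicker head movements select a different sound.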

Future development

I was not able to finish the work in the ways I would have liked to, due largely to the constraints the pandemic placed on access to equipment. I plan to continue working on versions of this piece. I am interested in including more narrative elements: I want to add voice and bring in more of my personal source material and writing, which would make the source inspiration of the work more transparent.

Self evaluation

I enjoyed making this work as it brought together many different threads of interest. Firstly, my interest in immersive interactive art that involves the body and the mechanics of seeing, including light art, color-field painting and large-scale sculpture. Secondly, an interest in narrative and history; making non-linear generative sequences of voice or text or images has been a feature of many of my recent works. Lastly, an interest in 3D technologies, which is perhaps the more obvious bridge into VR. Of course, when you look at your own work again and again you see all the glitches, the good and the bad. There are many improvements that I would like to make. I had plans for smoothing things out and for further features, as well as content that I wanted to include in this version of the work.

The constraint of having to perform this piece ended up pushing the work in an interesting direction; it became a tool for performance more than a VR piece, the work becoming about my body in relation to the virtual. That being said, some elements don’t work so well when watched on a big screen: for example, head movement makes things look a lot more shaky, and tilting the head produces strange angles. I had to move slowly so that it would not look sickening for the audience. The screen experience loses a lot of the bodily effects of being in the headset, and some of the color decisions I made were based on G05 and projected light rather than on the best effect in the headset. If I were to continue developing this as a performance piece I would probably move things further into abstraction, fine-tuning the experience to what is occurring on-screen rather than in the headset. I would have loved to be able to use all three screens in G05, for example, but the VR preview could not output a high enough resolution of the semi-realistic graphics for a 4K wrap-around. If I were to develop this only for the VR experience I would go back to including haptics and touch, as the particle effects based on the gaze were less effective than being able to reach your hand into them. Overall it ended up being a piece that sat somewhere halfway between an immersive performance piece and a VR work.

References

Theory:

- Ahmed, Sara. Queer Phenomenology: Orientations, Objects, Others. Duke University Press, 2006.

- Gibson, James Jerome. “The Implications of Experiments on the Perception of Space and Motion.” US Department of Commerce, National Technical Information Service, 1975.

- Levitt, Deborah. “Five Theses on Virtual Reality and Sociality.” Public Seminar, 10 May 2018, https://publicseminar.org/2018/05/five-theses-on-virtual-reality-and-sociality/.

- Milk, Chris. How Virtual Reality Can Create the Ultimate Empathy Machine.

- Reading Zimbabwe. https://readingzimbabwe.com/.

- Sedgwick, Eve Kosofsky, and Adam Frank. Touching Feeling: Affect, Pedagogy, Performativity. Duke University Press, 2003.

- Steyerl, Hito. In Free Fall: A Thought Experiment on Vertical Perspective. https://www.e-flux.com/journal/24/67860/in-free-fall-a-thought-experiment-on-vertical-perspective/.

Code:

- VR Expansion Plugin for Unreal Engine.

- Inspiration and a code snippet taken from Art Hiteca, a YouTube channel for all things Niagara particle effects.

- Anastasia Opara’s lecture at a games convention and Embark studio's open source texture synthesis tooling in Rust.

- Inspiration taken from Audio Training for Unreal Engine.

- Countless small YouTube videos about Unreal Engine, answers on forums over the past two years, the Unreal Engine documentation and some great on-the-job training from Angel Flores.