237

“237” is a prototype of a sound/light piece and interactive installation for six fluorescent CFL light bulbs (300W each), which are triggered by sounds (various oscillators that modulate each other) generated in oF (ofxMaxim addon: https://github.com/micknoise/Maximilian).

Room 237 is the room in Stanley Kubrick's “The Shining” where all of the crazy, spooky events happen.

Produced by: Ewa Justka

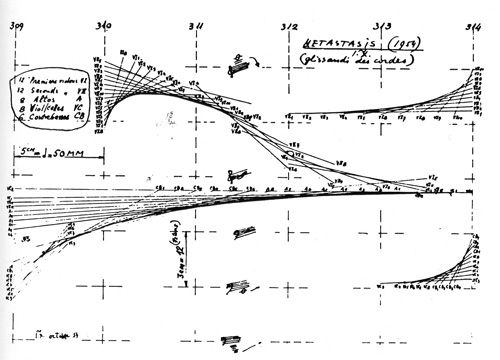

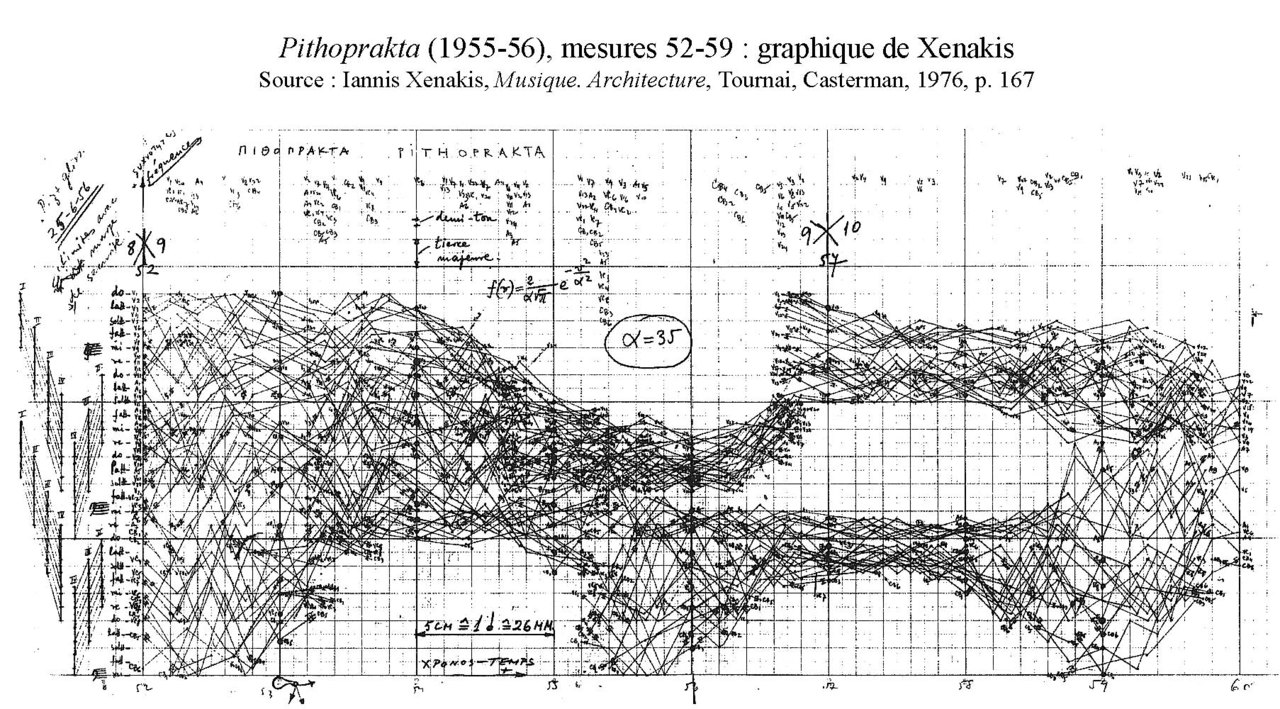

In my art practice I work with light and sound because I am interested in extreme psychophysical environments, i.e. very intense physical stimuli and their relation to the mental state of the observer/participant. I also wanted to spend more time studying sound synthesis in depth, along with various ways of producing algorithmic compositions, especially in the work of Iannis Xenakis (who also worked with light and was an architect as well) and his stochastic synthesis techniques. I was particularly interested in his use of glissandi in his algorithmic compositions; a good example is the piece “Gendy3”, produced entirely by a computer program.

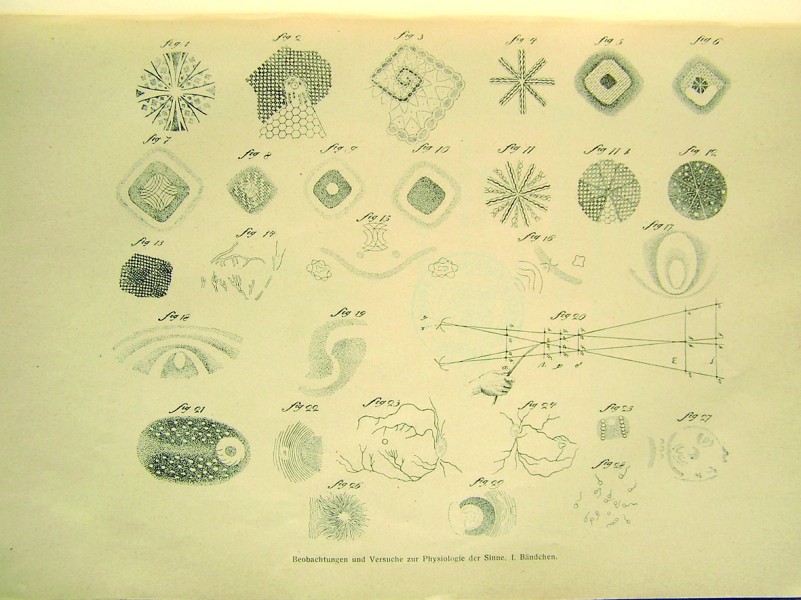

I was also researching the scientific work of Jan Evangelista Purkinje (1787-1869), whose main field was the investigation of subjective visual phenomena and accounting for them in objective terms. For example, he studied the patterns produced by flicker: while looking at the bright sky and waving his fingers in front of one eye, he reported “seeing checkerboard, zigzags, spirals and ray patterns. When the eye is stimulated by an unpatterned flickering light, patterns of bewildering complexity become visible. They are called stroboscopic patterns (…)” (Wade and Brozek: 42). This is just one of Purkinje's many vision experiments.

I am very much interested in the immersive aspect of this piece. I wanted to keep the 'interactive' aspect (interactive in the literal sense: participant's movement mapped to sound/light, etc.) to a minimum, because I believe it would otherwise distract the observer from the actual piece, which is in itself an interactive piece and, I believe, does not need any extra additions.

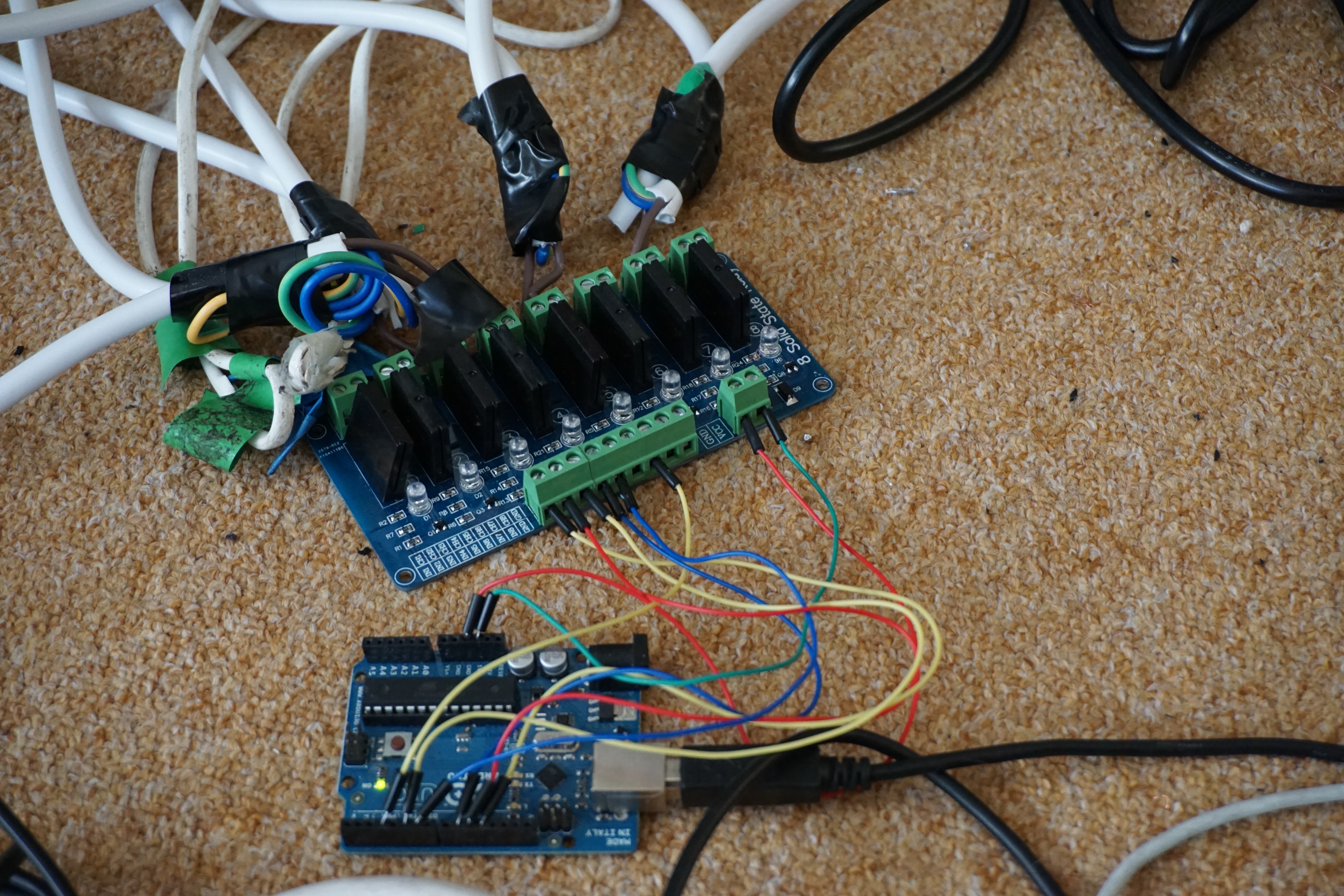

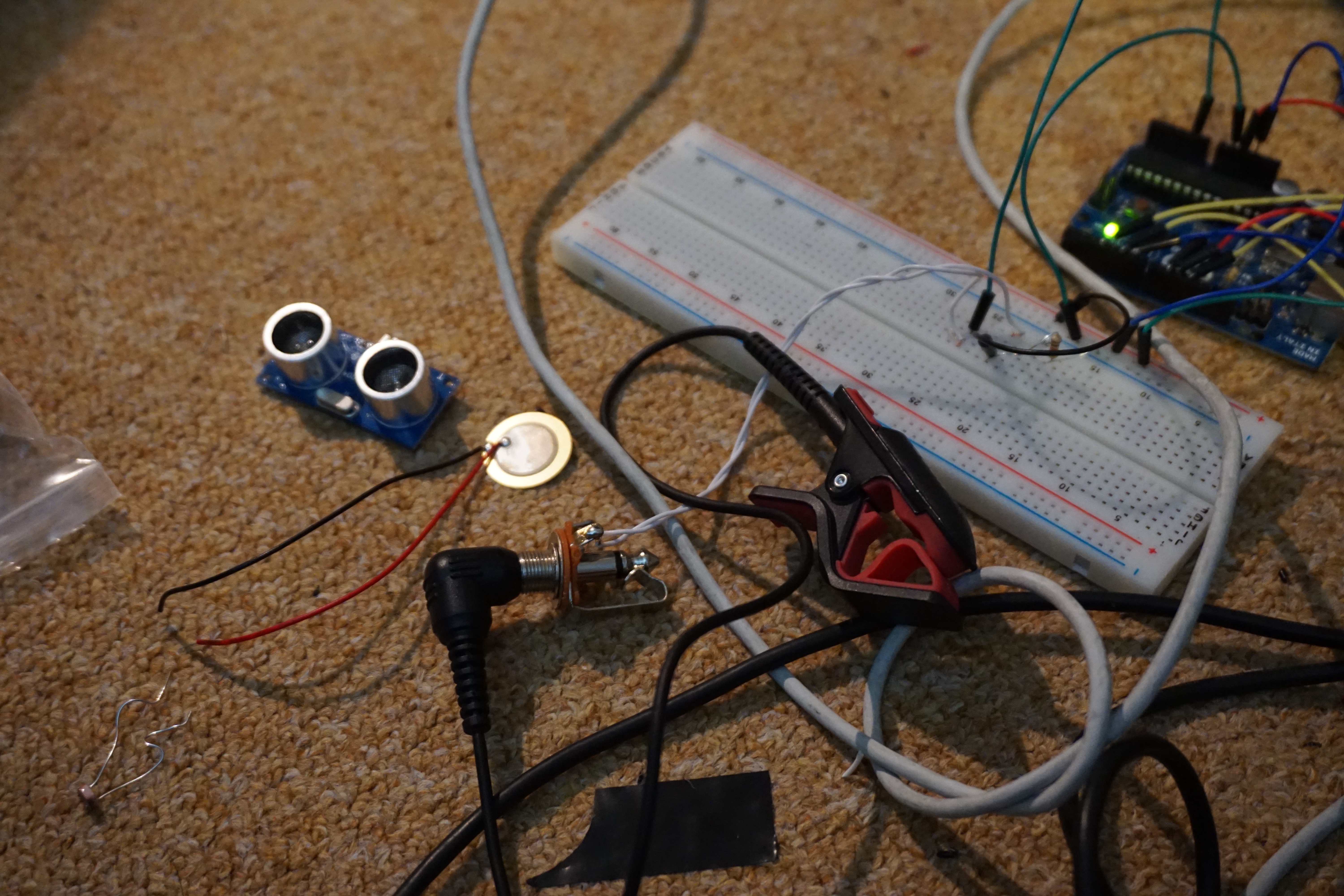

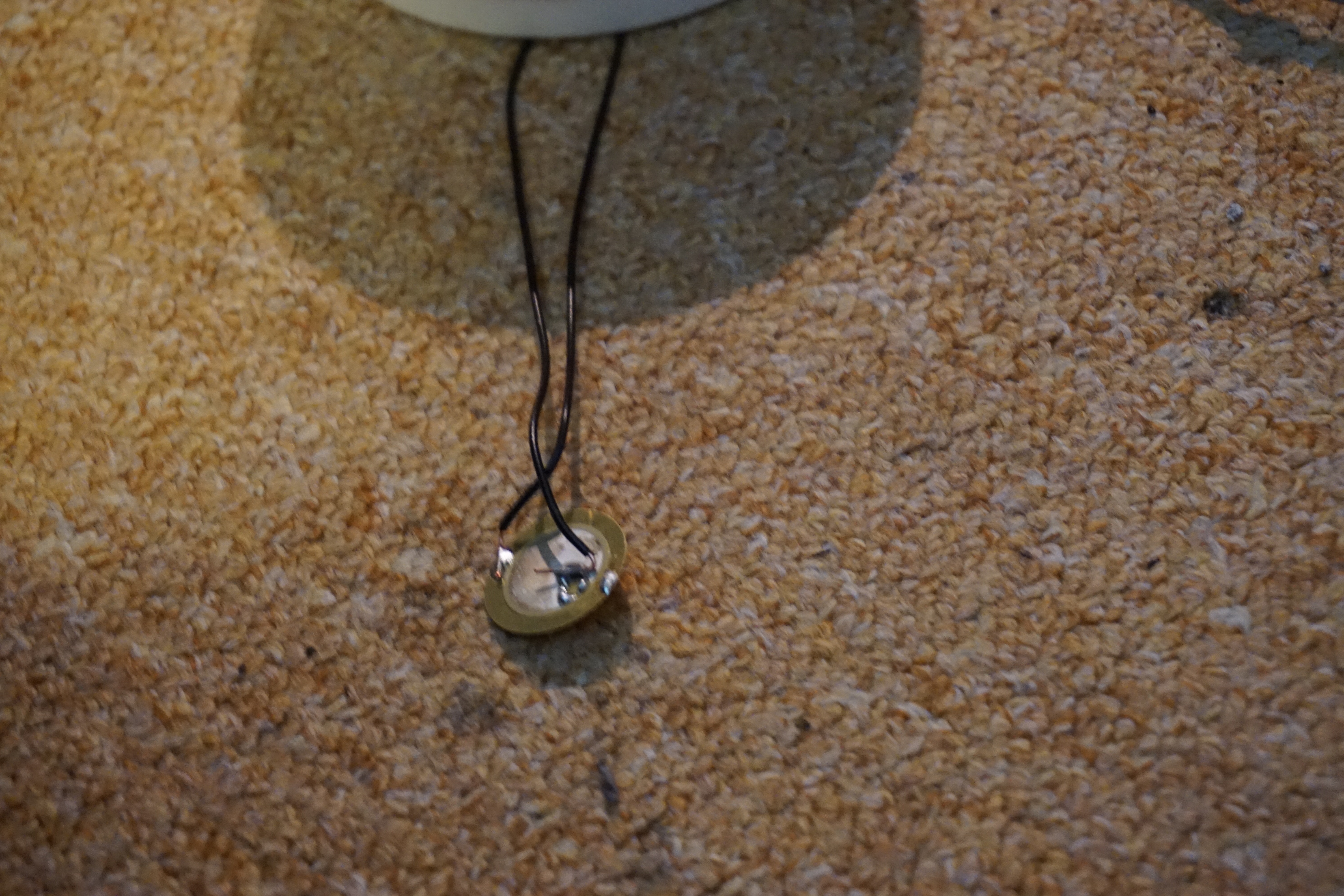

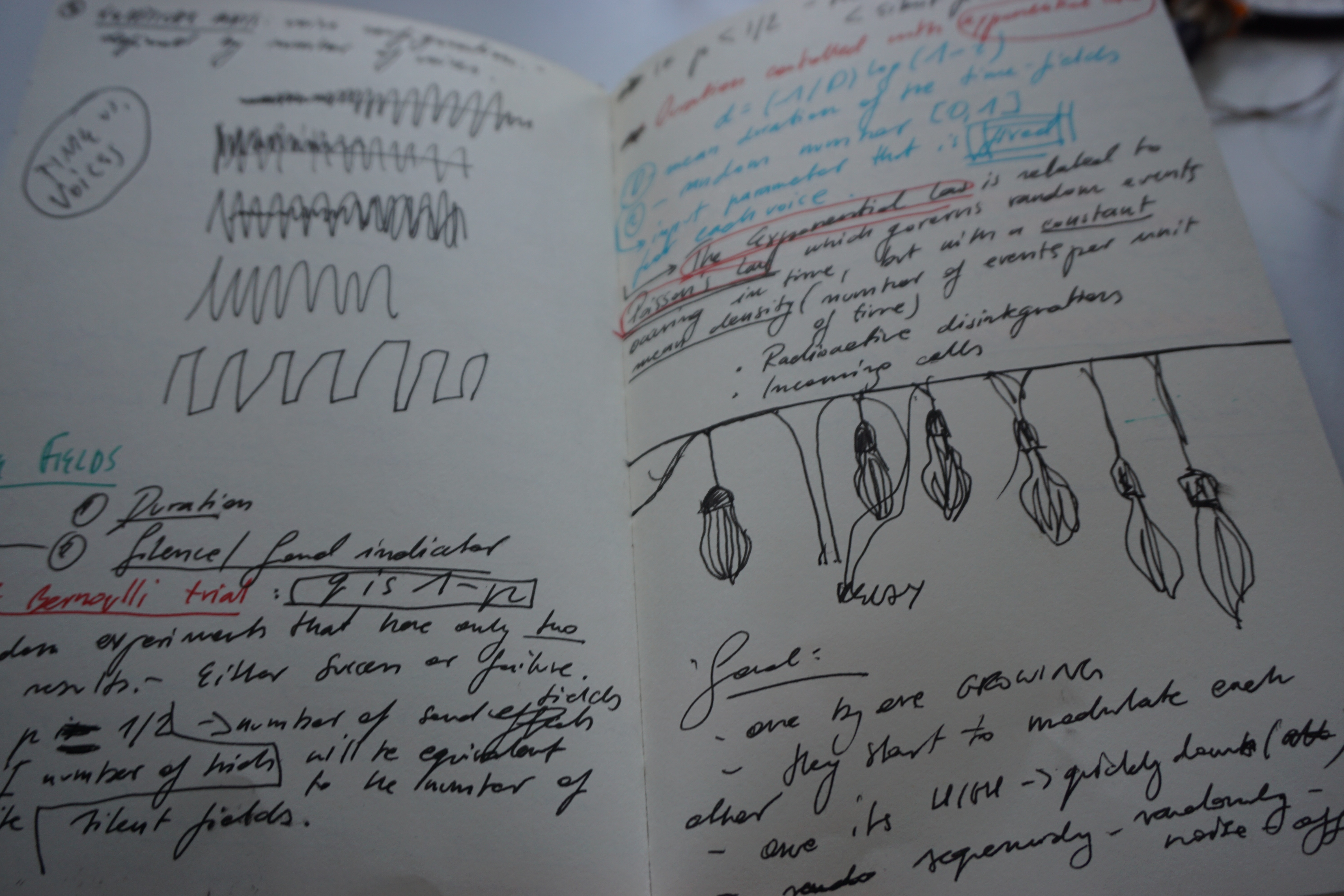

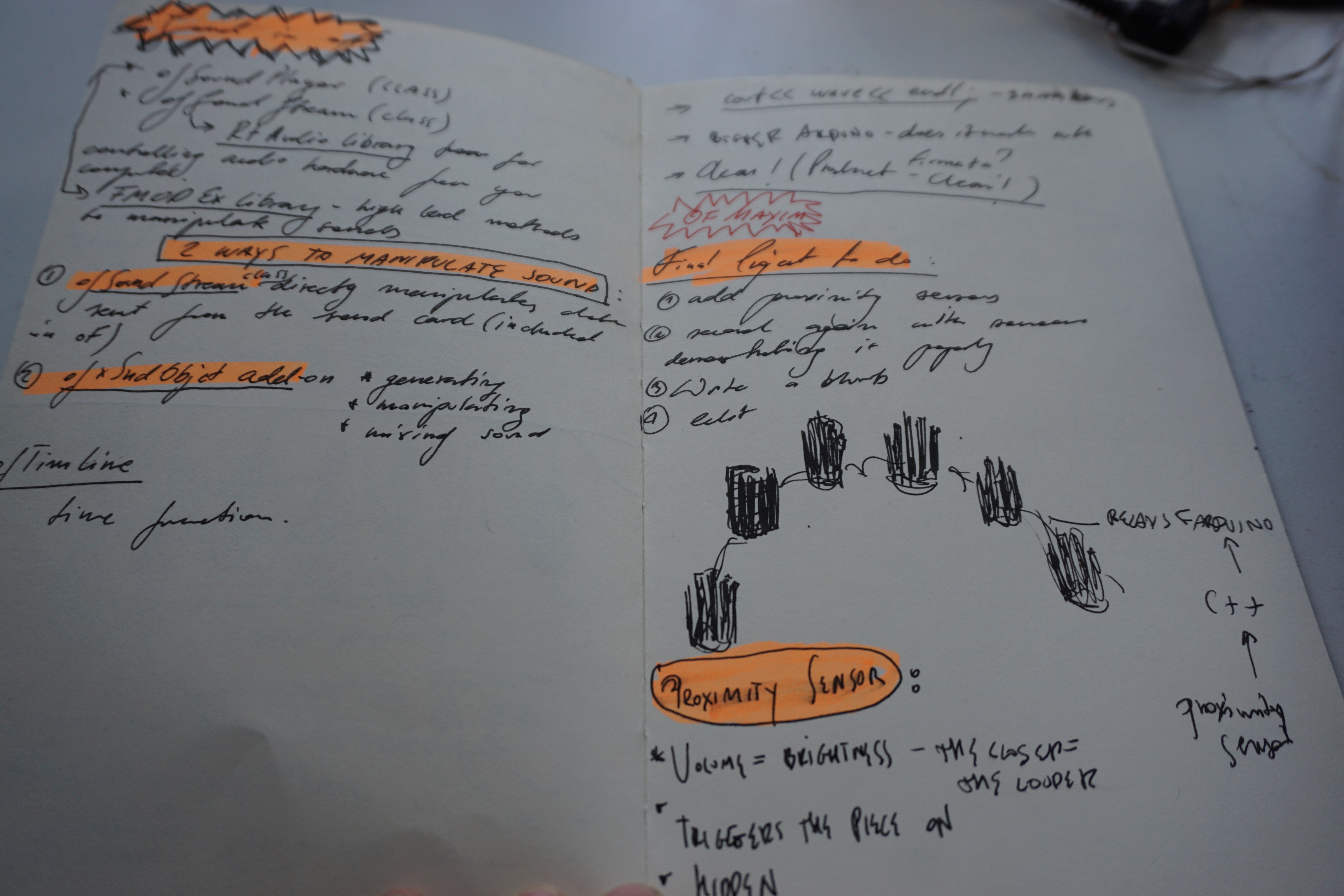

The code is based on the simple firmata example from the openFrameworks library, which is used for communicating with Arduino. It relies mainly on the ofxMaxim addon to create different layers of oscillators, which trigger six fluorescent CFL 300W light bulbs via Arduino and six solid state relays. The whole piece is triggered by the presence of a participant: an analog input (a contact mic) detects vibration when a participant/observer enters the room and turns the composition on. There are different musical sections, each with its own duration. Each section of sounds triggers each light. The whole piece is 'composed' for 5 minutes (by adding different oscillators, square, sawtooth and sine waves, depending on the time factor), but because I used variable frequencies that either ascend or descend, it evolves forever. This means that once it is triggered, it won't stop playing until there is no one in the room. When another participant/observer enters the room, the piece starts again, but not from the beginning: it is being played constantly, and the audio only turns on when the Arduino detects vibration. This was a conscious decision: I wanted the piece to be constantly evolving and never to sound the same.
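The gating behaviour described above can be illustrated with a small, language-neutral sketch. It is written in plain Python and is not the actual openFrameworks/Arduino code. The threshold, the hold time, the oscillator frequencies and every name (`Installation`, `light_states`, `VIBRATION_THRESHOLD`, `HOLD_SECONDS`) are hypothetical, as is the rule that a relay is closed while its square wave is positive. The point it shows is that the composition clock always advances, while the output is only enabled after the contact mic reports vibration.

```python
NUM_LIGHTS = 6               # six bulbs, one relay each (from the text)
VIBRATION_THRESHOLD = 0.2    # hypothetical normalised contact-mic level
HOLD_SECONDS = 10.0          # hypothetical: go silent after this long without vibration


def square(freq_hz, t):
    """Square wave at time t (seconds): +1.0 in the first half of each cycle, else -1.0."""
    return 1.0 if (freq_hz * t) % 1.0 < 0.5 else -1.0


def light_states(t, base_freq_hz=1.0):
    """Assumed mapping: relay i is closed while a square wave at (i + 1) * base_freq_hz is positive."""
    return [square(base_freq_hz * (i + 1), t) > 0.0 for i in range(NUM_LIGHTS)]


class Installation:
    def __init__(self):
        self.clock = 0.0      # composition time; keeps running and is never reset
        self.quiet_for = 0.0  # seconds since the last detected vibration
        self.audible = False  # whether the output is currently enabled

    def update(self, dt, mic_level):
        """Advance the composition by dt seconds and return the six relay states."""
        self.clock += dt      # the piece evolves even when nobody is in the room
        if mic_level >= VIBRATION_THRESHOLD:
            self.quiet_for = 0.0
            self.audible = True
        else:
            self.quiet_for += dt
            if self.quiet_for >= HOLD_SECONDS:
                self.audible = False
        if not self.audible:
            return [False] * NUM_LIGHTS
        return light_states(self.clock)
```

Because `clock` is never reset, a visitor who arrives later hears and sees the piece at whatever point it has reached, not from the start.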

I mainly worked with amplitude and frequency modulation. I spent a long time experimenting with different types of sound synthesis, deciding in the end that I wanted the sound to build up slowly until it becomes, in a way, a wall of noise. The second part of the composition is more dynamic: it uses on/off signals (square and sawtooth waves) that also slowly evolve over time.
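For readers unfamiliar with the two techniques, here is a minimal textbook sketch of amplitude and frequency modulation in plain Python. It is not taken from the piece. The function names, the parameter values and the sample rate are assumptions, and the FM variant shown is the common phase-modulation form.

```python
import math

SAMPLE_RATE = 44100  # assumed audio sample rate in Hz


def am_sample(t, carrier_hz, mod_hz, depth=1.0):
    """Amplitude modulation: a sine carrier scaled by a slower sine envelope.

    The envelope moves between (1 - depth) and 1, so depth=0 leaves the carrier untouched.
    """
    carrier = math.sin(2.0 * math.pi * carrier_hz * t)
    envelope = (1.0 - depth) + depth * 0.5 * (1.0 + math.sin(2.0 * math.pi * mod_hz * t))
    return carrier * envelope


def fm_sample(t, carrier_hz, mod_hz, index):
    """Frequency modulation (phase-modulation form): the modulator bends the carrier's phase.

    index controls how far the pitch swings; index=0 gives a plain sine wave.
    """
    modulator = math.sin(2.0 * math.pi * mod_hz * t)
    return math.sin(2.0 * math.pi * carrier_hz * t + index * modulator)


def render(sample_fn, num_samples, **params):
    """Evaluate sample_fn at num_samples consecutive sample times."""
    return [sample_fn(n / SAMPLE_RATE, **params) for n in range(num_samples)]


# Hypothetical settings: a 220 Hz carrier with slow tremolo, and the same carrier with vibrato.
tremolo = render(am_sample, 441, carrier_hz=220.0, mod_hz=2.0, depth=0.8)
vibrato = render(fm_sample, 441, carrier_hz=220.0, mod_hz=5.0, index=3.0)
```

Raising `depth` or `index` over time is one simple way to make a sound grow denser, in the spirit of the slow build-up described above.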

In the future I would like to use either Pure Data or Max/MSP together with Arduino to control the sound/light interaction, as I think those programs are more suitable for this kind of thing. I also want to have more sound sources and lights. Each light would have a corresponding speaker, so I could create a very intense and dynamic multichannel environment. I would like to work on more complex multichannel sound/light synthesis and create more complex patterns between them.

The code was written with some technical advice from Jayson Haebich.

Inspirations:

Marije Baalman's and Chris Salter's N-Polytope https://www.marijebaalman.eu/with_chris_salter/2015/10/03/n-polytope.html http://archive.aec.at/showmode/prix/?id=48292#48292

Mark Fell's Get Out of Defensive Position https://www.youtube.com/watch?v=A56zQuLkrQE

Phillip Stearns's Fluorescence https://phillipstearns.wordpress.com/fluorescene-2011-ongoing/

Iannis Xenakis – Gendy3 https://www.youtube.com/watch?v=Q8suVSzQmwA

Bibliography:

Brozek, Josef and Wade, Nicholas, Purkinje's Vision: The Dawning of Neuroscience, Lawrence Erlbaum Associates, London, 2011

Dobrian, Christopher, Realtime Stochastic Decision Making for Music Composition and Improvisation

Luque, Sergio, Stochastic synthesis: An overview, Department of Music, University of Birmingham, U.K, 2011

Serra, Marie-Hélène, Stochastic Composition and Stochastic Timbre: GENDY3 by Iannis Xenakis

Xenakis, Iannis, Formalized Music, Indiana University Press, 1972