A Digital Pond

A morphogenetic installation. A digital pond is filled with 'creatures' governed by a genetic algorithm and is surrounded by a digital forest and illuminated artefacts. The installation is driven either by a non-human process (smoke bubbles) or by people, who add 'food' to the pond, feed the 'creatures', create 'trees' and change the forest 'leaves'. The sounds created react to movement in the pond.

produced by: Colin Higgs

The Concept

The work pays homage to two of the founding fathers of Morphogenesis:

Frei Otto (a RIBA gold medalist) and Alan Turing (a mathematician). Both worked in the morphogenetic arena. Frei Otto formed a partnership with Nature in a form-finding expedition, using bubbles and magnets in water to study bubble surfaces and to run a natural distance experiment on "cellular" objects and the forms they construct (Voronoi patterns). He set out the Voronoi ideas in his book Occupying and Connecting: Thoughts on Territories and Spheres of Influence with Particular Reference to Human Settlement. (ref 1)

Alan Turing, although famous as a mathematician, was also interested in biological cell formations. In 1952 he published a paper, The Chemical Basis of Morphogenesis (ref 2), on the spontaneous formation of patterns in systems undergoing reaction and diffusion of their ingredients. Turing devised a mathematical model that explained how random fluctuations can drive the emergence of pattern and structure from initial uniformity.

Frei Otto thrived on collaborating with Nature to make architectural forms (the most famous being The Olympic Pavilion, Munich), while Alan Turing focused on algorithms of difference and repetition (using the Laplacian operator to model diffusion). Both were interested in the emergence of patterns and structure.

My idea was to merge a natural collaboration with Nature with digital processes governed by algorithms of growth, repetition and change, following in the footsteps of both Frei Otto and Alan Turing and the philosophy of Morphogenesis as outlined by Manuel DeLanda. (ref 3)

Background Research

In forming a partnership with Nature I looked at various works; the ones that directly influenced my own are laid out below. The first, Funky Forest by design-io, is an interactive ecosystem in which people create trees with their bodies and then divert the water flowing from a waterfall to the trees to keep them alive. (Figure 1.0)

Figure 1.0

The aspects that I liked about this installation were the feeling of immersion (you were surrounded by projections) and the way the darkness helped the feeling of an inhabited forest. The artwork was not to my own taste and the interaction seemed too child-like. I wanted to feel something minimal but natural.

I decided upon a three-tiered projection system (2 projections of the forest and 1 of the pond) to help with the feeling of immersion in darkness. My forest trees held to a simple, minimal graphic aesthetic but were detailed like a real tree: the more the trees grew, the more 'leaves' they had.

I also liked teamLab's Shimogamo light festival in Japan, where they installed a series of lights in a temple that changed colour on touch and proximity. I loved the aesthetic and the lighting (massive spherical lights). (Figure 2.0)

Figure 2.0

I felt their giant lights and my spherical bubbles of smoke resonated well in philosophical harmony: both seemed out of place but somehow felt natural as well. I also took on board their use of proximity: each of my clay light artefacts triggered a change in the next light when someone came close to it.

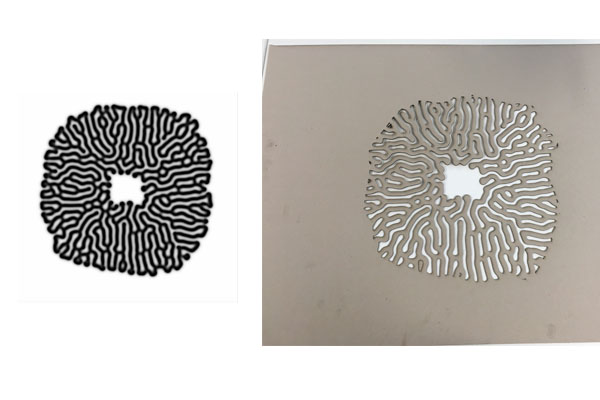

My clay lights were also inspired by Prof. Neri Oxman of the MIT Media Lab and by the Hyphae lights from Nervous System. I made my own artefacts of Frei Otto and Alan Turing using real algorithms that output patterns I could use as masks over clay. (See Figure 3.0 and Figure 4.0)

Figure 3.0

Figure 4.0

Interaction

The primary source of interaction was blob detection (Ref.4) for both the people and the bubbles. For people, blob detection was straightforward, but I also programmed gestures into the blobs so that any sudden movement would trigger the forest 'leaves' to change colour. The bubbles could trigger this gestural movement as well.

The blob detection (Ref.4) used a Kinect 1.0 for depth recognition and worked immediately with opaque objects (people). Detecting bubbles was far harder as they were transparent. Initially, I tried dyeing the bubble solution but the results were poor and unreliable, and the bubbles wouldn't hold up well, bursting within the first 8-10 seconds. Next, I saw people vaping into bubbles so that the bubbles were filled with smoke. I tried this method and the smoke-filled bubbles were detected by the Kinect. See the movie below.

Smoke filled bubble detection

Food Detection

The blob detection was used as food for both the pond and the forest. When people stood in the pond they would feed it, and the food would appear with yellow ripples surrounding it. The feeding times were chosen randomly around the blob detection so that a continuous stream of food was not possible; the feeding would be somewhat sporadic.

Moreover, when blobs were detected small swarms of fly-like creatures would follow all the blobs and group around them; another indicator that the pond had recognised a blob and a further interaction with a blob. This detail is shown in the movie below.

The number of blobs was also directly proportional to the number of 'creation' trees (the forest had ecotrees and creation trees; ecotrees were independent of the blobs). If there were more people than trees, new creation trees would appear. (Ecotrees were coloured green and creation trees were coloured orange-brown.)

Either the bubbles or people would create food for the pond. See the movie below.

Food detection in the pond

Leaves changing colour (Gestures on Blobs)

So far the blob detection has been used for making food in the pond and for creating trees. A third form of interaction was also programmed: gestures on blobs. If a blob moved faster than a set threshold it would trigger a tree to change the colour of its leaves from multicolour to cyan over a period of 2 seconds. The more blob movement, the more tree leaves changed colour. This was also true for the bubbles: if their velocity was measured over a certain amount they would also change the leaves' colour. See the movie below.
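The gesture trigger could be sketched roughly as follows. This is a minimal illustration in Python rather than the Processing used for the installation; the threshold value, its units and all function names are assumptions, not the project's actual code.

```python
import math

SPEED_THRESHOLD = 40.0  # assumed value, in pixels per frame (hypothetical)


def blob_speed(prev_centre, centre):
    """Distance a blob centre moved between two consecutive frames."""
    return math.hypot(centre[0] - prev_centre[0], centre[1] - prev_centre[1])


def count_gestures(prev_centres, centres, threshold=SPEED_THRESHOLD):
    """Count the blobs whose frame-to-frame speed exceeds the threshold."""
    return sum(
        1
        for prev, cur in zip(prev_centres, centres)
        if blob_speed(prev, cur) > threshold
    )


def fade_to_cyan(start_rgb, elapsed_s, duration_s=2.0):
    """Linearly blend a leaf colour towards cyan over duration_s seconds."""
    t = max(0.0, min(1.0, elapsed_s / duration_s))
    cyan = (0, 255, 255)
    return tuple(round(s + (c - s) * t) for s, c in zip(start_rgb, cyan))
```

Here `count_gestures` would decide how many trees start changing in a frame, and `fade_to_cyan` would be called each frame with the time elapsed since the trigger.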

Leaves changing colour

Light detection of clay artefacts

The clay artefacts used a wifi chip to send messages to the next light to change its pattern if a close proximity change was detected. This was used to highlight the masks cut out on the lights. A movie showing this process is shown below.

The use of the wifi lights was meant to be minimal: occasionally changing the pattern on the lights, which had ceramic patterned lampshades. It was not programmed to be intrusive and was only activated when people were in close proximity to the lights.

light detection

Sound Detection

The sound was programmed in Max/MSP and used OSC to communicate between the sound interaction and the controlling 'Pond' sketch.

The interaction between the two was based on the position of a blob (x,y) and on its velocity and acceleration. As a person moved, this would affect either the frequency of the synth sound or the selected sample played, and any sudden movement would engage another sound. This interaction was immediate and programmed to be very obvious to any person moving in the pond.
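As a rough illustration of this kind of mapping, the Python sketch below turns a blob's position and speed into three control values. The frequency range, frame size, speed trigger and the choice of x for pitch and y for sample selection are all assumptions made for the example; the actual mapping lived in the Processing sketch and the Max/MSP patch.

```python
def map_range(value, in_lo, in_hi, out_lo, out_hi):
    """Linear re-mapping (similar in spirit to Processing's map()), clamped."""
    t = (value - in_lo) / (in_hi - in_lo)
    t = max(0.0, min(1.0, t))
    return out_lo + t * (out_hi - out_lo)


def sound_controls(x, y, speed, width=640, height=480, speed_trigger=40.0):
    """Turn a blob's position and speed into hypothetical synth controls."""
    return {
        # Horizontal position sweeps the synth frequency (assumed range).
        "frequency_hz": map_range(x, 0, width, 110.0, 880.0),
        # Vertical position picks one of 9 samples, indexed 0 to 8.
        "sample_index": int(map_range(y, 0, height, 0, 8)),
        # A sudden movement engages the extra sound.
        "accent": speed > speed_trigger,
    }
```

Each frame, values like these would be sent over OSC to the sound patch.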

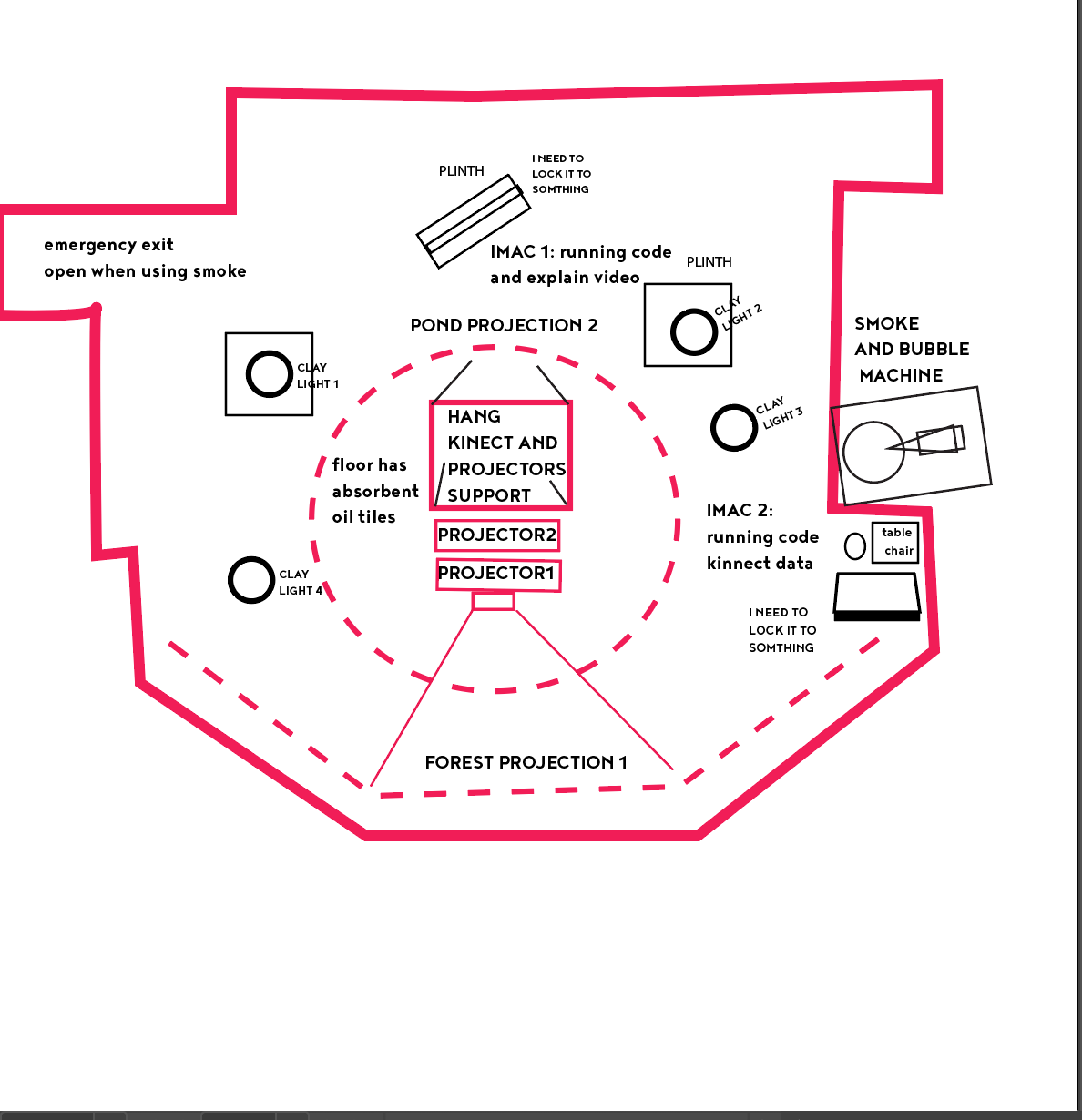

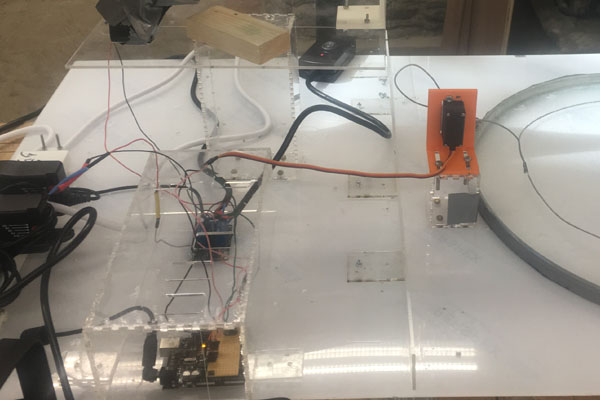

Design

The overall design is shown in Figure 5. Surrounded by the clay artefacts that communicate with each other, the person entering the room sees two projections in front of them: the digital forest projected on the wall and the digital pond projected onto the floor. At the entrance to the room an iMac loops an explanatory video that explains the concept. See the movie below. The smoke machine is mounted high above them on a shelf to the left of the room. The Kinect is in the middle of the room, mounted with a projector pointing downwards, and another mounted projector points forwards. As the person enters the pond, a soundscape reacts to their position and velocity.

Overall Design Figure 5.

The design is split into physical design and digital design. The physical design covers the smoke machine that creates the bubbles, the clay artefacts and the soundscape; the digital design covers the forest and the pond.

Physical Design

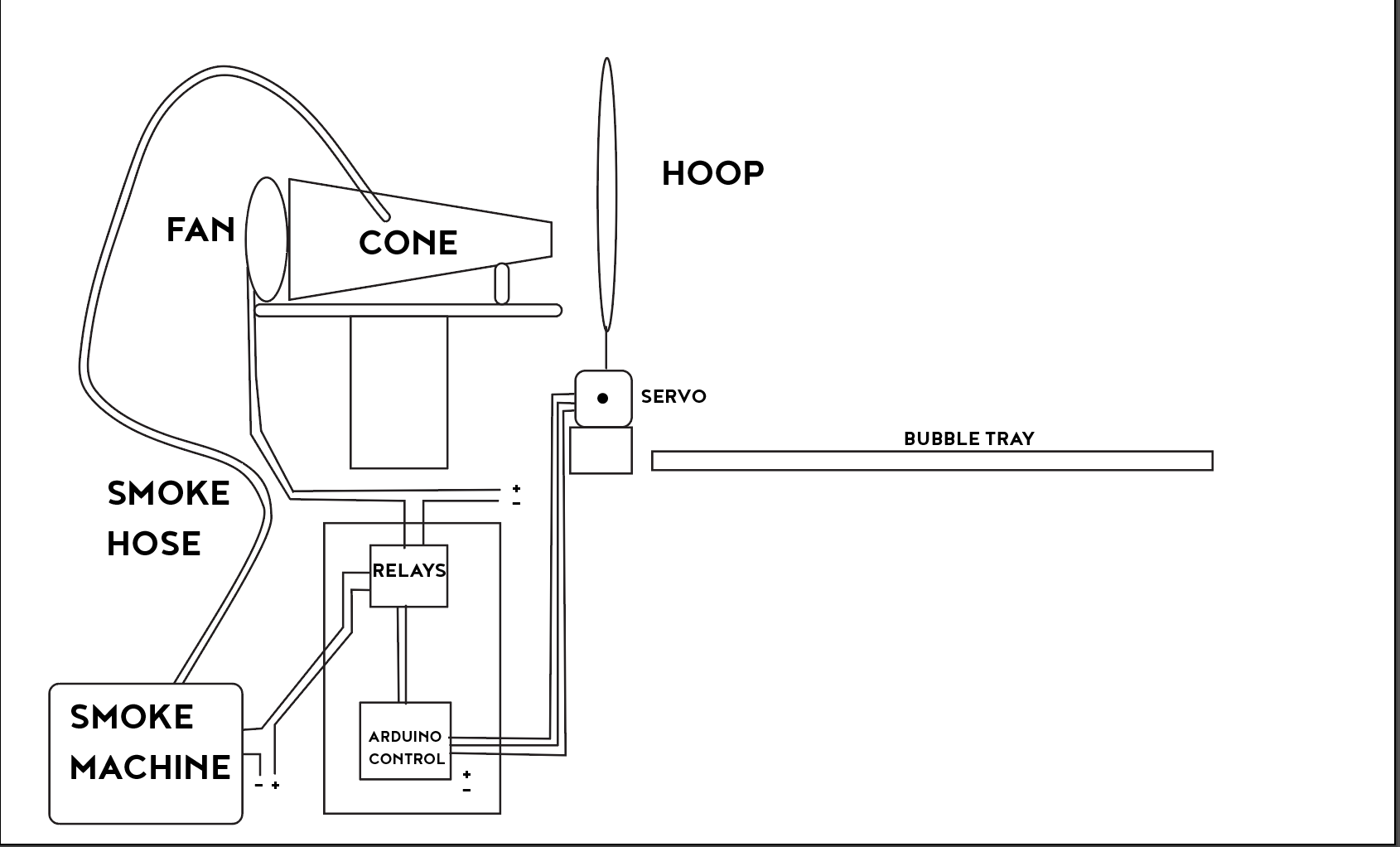

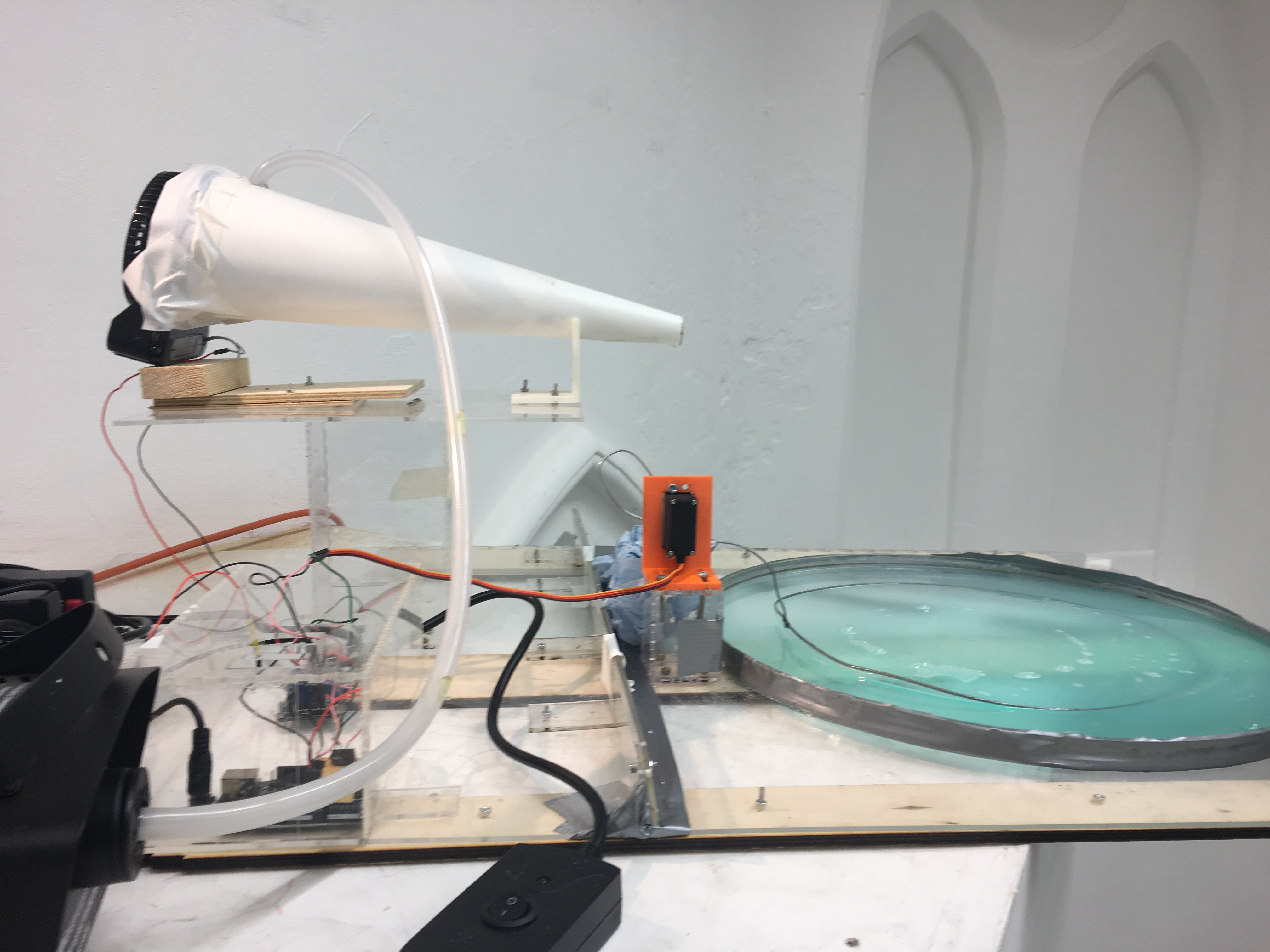

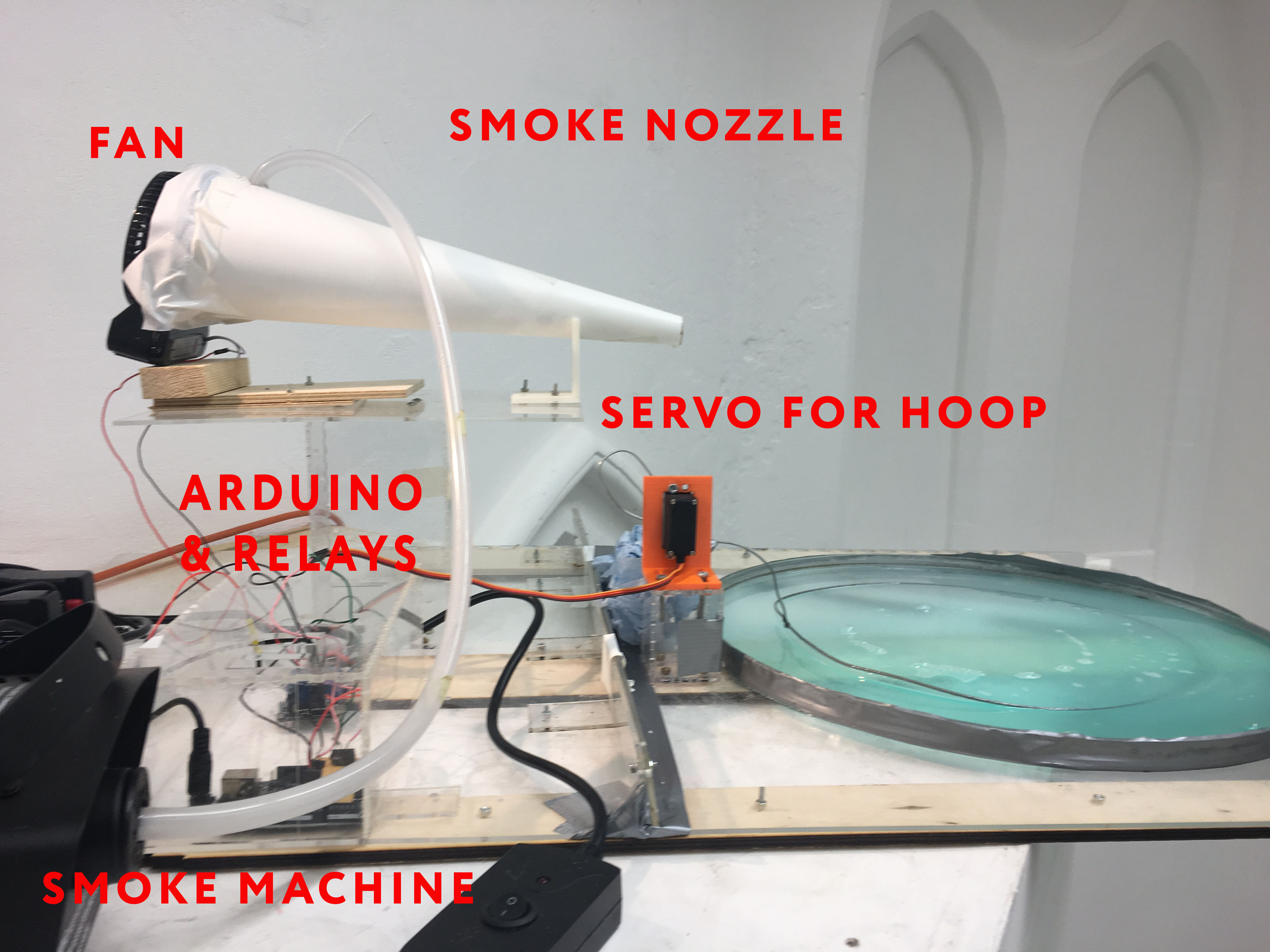

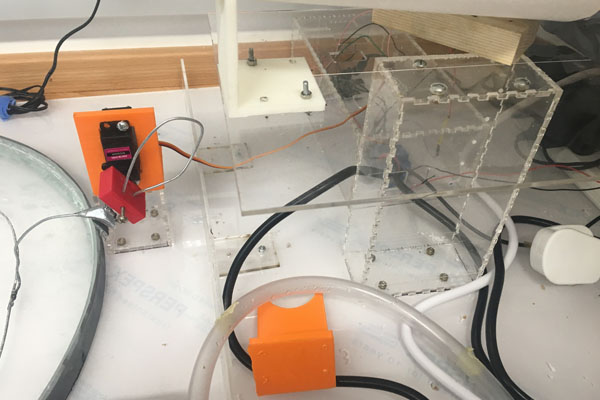

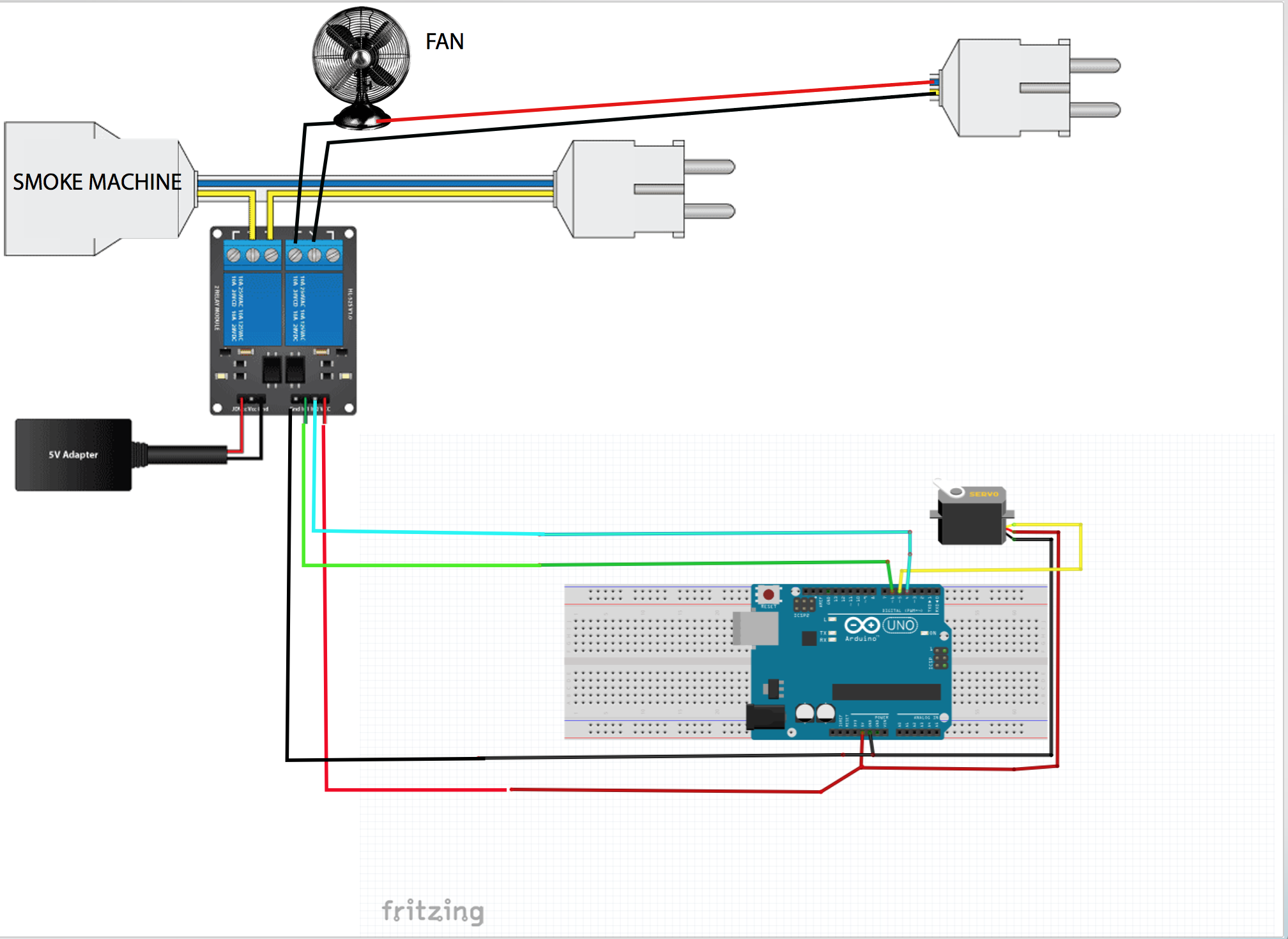

The Smoke Machine. See Figure 6

The Smoke Machine was built with an Arduino, relays and a servo. The Arduino controlled the relay triggering and the timing of the bubble-making, using the servo to control the bubble hoop. When a relay switched the smoke machine on, it pumped smoke out towards the fan, which then blew the air and smoke through the hoop and into the bubble.

This took over 1 month to finesse: a combination of the solution, the position of the cone, the power of the fan and the timing. When I did the testing by hand it seemed impossible to control well; under machine control, however, the fan and the pressure of the smoke were consistent in force, and that was a crucial factor in getting it to work. The solution mix also took over a month: finding the right ingredients that could hold a lot of smoke and would last between 20-30 seconds before bursting. Amazed it worked!! Very difficult.

The smoke machine was designed with the minimum of movement needed. The servo lifted a hoop that had been immersed in the liquid, and a fan then blew air and smoke into the liquid film on the hoop.

The Smoke Machine Figure 6

Sound Design

The soundscape was designed in Max/MSP and driven by messages from the 'pond' Processing sketch. It used the blobs' acceleration and velocity values to dynamically change the generated synth sounds. It used a conductor event variable and a time generated by the pond to play a variety of sample sounds and generative sounds that changed as a blob or person moved through the pond. The sample-based patches contained 9 samples each and were dynamically controlled by a person's position. Every time food was generated, a sonar blip sound was generated using a fourier noise transform.

The sounds were reactive to people's movements and changed dynamically.

See the movie below, which shows me moving across the screen and the tonal range changing as I do so. Also, as I wave my arm above me the acceleration sound kicks in.

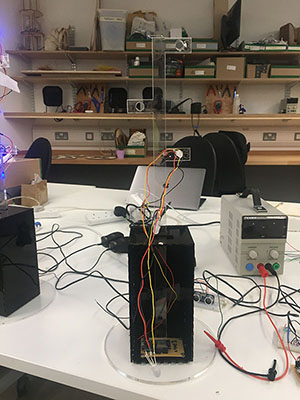

Clay Artefacts

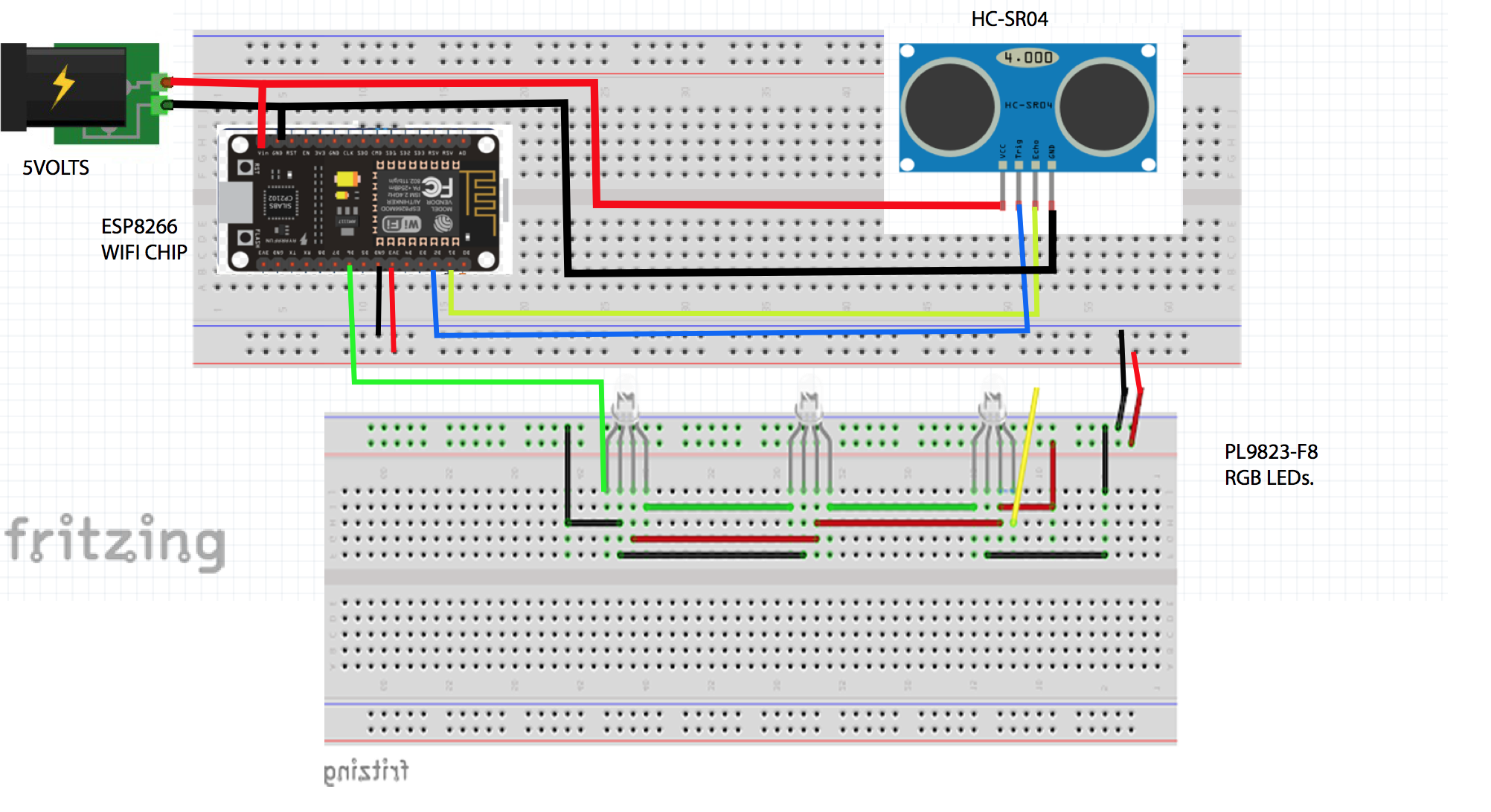

The clay artefacts were designed with an electrical wifi chip that communicated with each light separately. See Figure 7. They used neopixel LEDs for the lights and a proximity sensor, sending data via OSC over wifi to change the display sequence of the next light. The lights were housed inside both hand built clay and 3d printed clay lampshades that used Voronoi artefacts (as used by Frei Otto) and Reaction Diffusion artefacts (as used by Alan Turing).

The circuits were housed in black boxes with the neopixels sticking out of them. The proximity sensor was stuck to the top of them and then restuck on top of the clay artefacts.

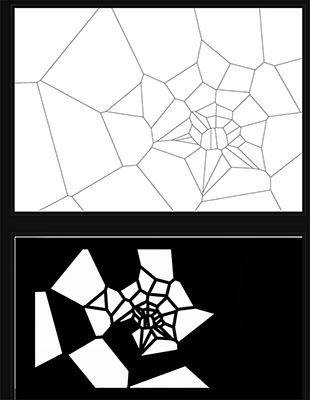

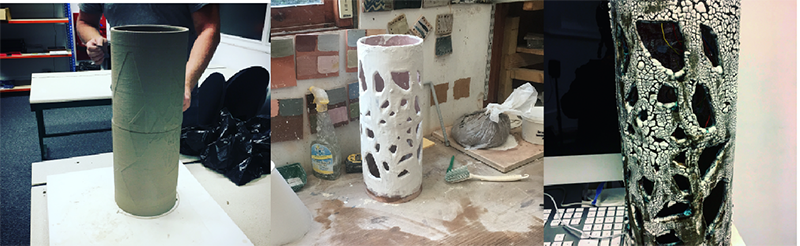

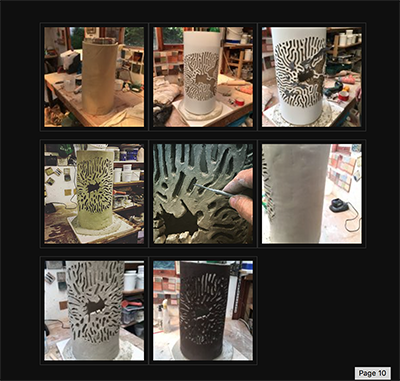

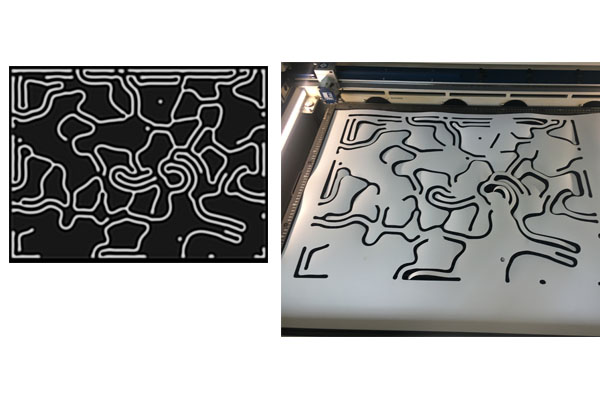

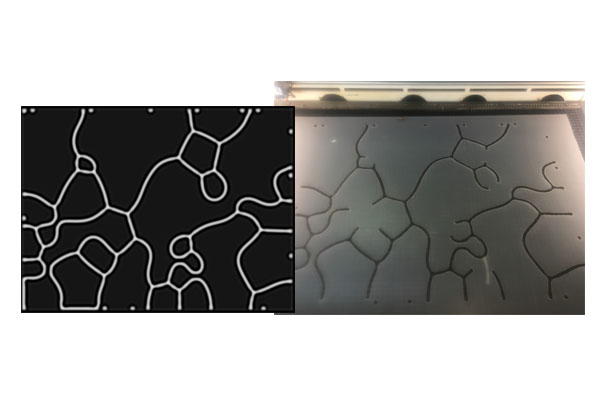

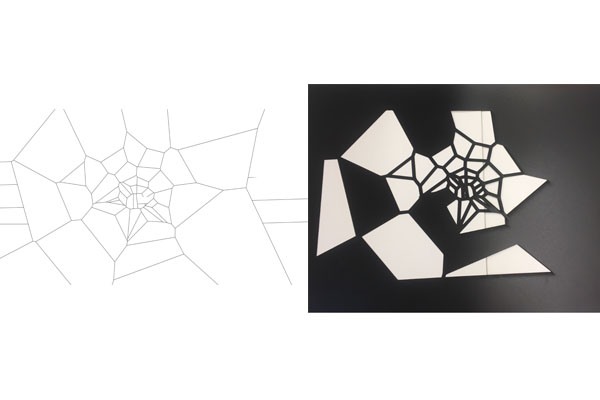

The clay lampshades were hand built (reaction diffusion) and 3d printed (Voronoi). See Figures 8 and 9 and the movie below. Figure 8 shows the Voronoi design and Figure 9 shows the reaction diffusion design, both made using generative algorithms.

The images show generative patterns of Voronoi (saved as an svg) and reaction diffusion algorithms (saved as screen shots, as they were generated from RGB pixel values). The images also show that the models were initially 3D printed in plastic unsuccessfully; the same models were eventually printed successfully on a 3D clay printer.

They also show the clay processes for both the 3D printed clay and the hand built clay.

Clay Artefact Electrical housing. See Figure 7. and 3d design clay movie

Voronoi Clay Artefact (3D built) Figure 8

Reaction Diffusion Clay Artefact (Hand built) Figure 9

Digital Design

The Digital designs were focused on two algorithms: an ecology using a genetic algorithm to develop the pond and a forest grown using L-systems.

The Pond Design

The 'organisms' for the pond ecology used a recursive algorithm to change their form, which was linked to their lifetime. The algorithms mimicked real life by having the 'creatures' look for food to live; if they didn't eat they would die out. They could procreate by themselves if they lived long enough, and their genes had built-in potential for mutation. The pond released food naturally, and this was combined with the external food made by the bubbles and/or people entering the pond.
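The life cycle described here (eat or starve, reproduce alone once mature, mutate on reproduction) can be sketched in a few lines. The Python below is only an illustration under assumed values: the gene names, energy amounts, maturity age and mutation rate are all hypothetical, and the installation itself was written in Processing.

```python
import random
from dataclasses import dataclass


@dataclass
class Creature:
    genes: dict            # e.g. {"speed": 1.0}; gene names are hypothetical
    energy: float = 100.0
    age: int = 0

    def step(self, ate_food, rng, mature_age=50, mutation_rate=0.1):
        """Advance one tick; return a child if the creature reproduces."""
        self.age += 1
        # Eating restores energy; every tick without food costs a little.
        self.energy += 30.0 if ate_food else -1.0
        # Mature creatures occasionally reproduce on their own.
        if self.age >= mature_age and rng.random() < 0.01:
            return Creature(genes=mutate(self.genes, rng, mutation_rate))
        return None

    @property
    def alive(self):
        return self.energy > 0


def mutate(genes, rng, rate):
    """Copy the genes, nudging each one by up to 20% with probability rate."""
    return {
        name: value * rng.uniform(0.8, 1.2) if rng.random() < rate else value
        for name, value in genes.items()
    }
```

A pond loop would call `step` on every creature each frame, remove the ones that are no longer `alive`, and add any children returned.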

The movies below show two examples of the genetic pond. The first shows a mouse pointer passing over the creatures: when the creatures detected it they would gradually increase their attraction to it (this was implemented to make the creatures gradually follow the blobs). The second shows the recursive design of the creatures as they start very simply and gradually increase in complexity.

See movies below.

The Forest Design

The Forest ecology was self-sustaining but could also create trees depending on the number of blobs. The self-sustaining trees were green and the blob-created trees were orange-brown. If the algorithm detected movement the trees' 'leaves' would change to a cyan colour. The trees used a simple L-system to grow from branch to branch, using a line function that continuously changed its start and end positions until the growth time had finished for that branch. The process was then repeated for the next branch at a different start angle. Each branch's data would then be stored in an ArrayList. Complications were added by putting leaves on different branches and making them fall off at different speeds, with different colours and in different directions. A further complication was added by making the trees die and fall over, with the whole process repeating.
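For readers unfamiliar with L-systems, the sketch below shows the textbook string-rewriting form and a turtle interpretation that turns the result into branch segments. It is written in Python for illustration; the rule, step length and turn angle are assumptions, and the forest's own Processing code grew its branches over time rather than all at once.

```python
import math


def expand(axiom, rules, generations):
    """Apply the L-system rewriting rules to the axiom a number of times."""
    current = axiom
    for _ in range(generations):
        current = "".join(rules.get(symbol, symbol) for symbol in current)
    return current


def to_segments(commands, step=10.0, turn_deg=25.0):
    """Interpret F, +, -, [ and ] as turtle moves; return line segments."""
    x, y, heading = 0.0, 0.0, 90.0   # start at the origin, pointing up
    stack, segments = [], []
    for symbol in commands:
        if symbol == "F":            # draw one branch segment forwards
            nx = x + step * math.cos(math.radians(heading))
            ny = y + step * math.sin(math.radians(heading))
            segments.append(((x, y), (nx, ny)))
            x, y = nx, ny
        elif symbol == "+":          # turn left
            heading += turn_deg
        elif symbol == "-":          # turn right
            heading -= turn_deg
        elif symbol == "[":          # remember where a side branch starts
            stack.append((x, y, heading))
        elif symbol == "]":          # return to where the side branch started
            x, y, heading = stack.pop()
    return segments


tree = expand("F", {"F": "F[+F]F[-F]"}, 2)   # hypothetical rule
branches = to_segments(tree)
```

Storing `branches` in a list plays the same role as the ArrayList of branch data mentioned above; leaves could then be attached to selected segment end points.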

The movie below shows this process.

Technical

The Kinect

A Kinect was used for blob detection. It hung above the pond, fixed with cable ties onto a block of wood together with a mini projector (APEMAN Mini Portable Projector). The mini projector projected the pond onto the floor. A further BENQ Short Throw HD projector was mounted against the wall to project the forest onto the wall using the iMac. This setup is shown in the movie below.

The blob detection algorithm was based on Daniel Shiffman's code for basic blob detection (Ref.4). I then extended it to detect gestures from changes in the blobs' velocity and acceleration.
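To give a feel for blob detection on a depth image, here is a small Python sketch. Note that it uses connected-component labelling (a flood fill over pixels inside a depth band), which is a swapped-in technique chosen for brevity and not necessarily the approach of the referenced tutorial or of the installation; the depth band and minimum size are assumed parameters.

```python
from collections import deque


def find_blobs(depth, near, far, min_pixels=3):
    """Return the centre (x, y) of each 4-connected region within [near, far]."""
    rows, cols = len(depth), len(depth[0])
    seen = [[False] * cols for _ in range(rows)]
    centres = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or not (near <= depth[r][c] <= far):
                continue
            # Flood fill outwards from this pixel to collect one blob.
            queue, pixels = deque([(r, c)]), []
            seen[r][c] = True
            while queue:
                pr, pc = queue.popleft()
                pixels.append((pr, pc))
                for nr, nc in ((pr + 1, pc), (pr - 1, pc), (pr, pc + 1), (pr, pc - 1)):
                    if (0 <= nr < rows and 0 <= nc < cols and not seen[nr][nc]
                            and near <= depth[nr][nc] <= far):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            # Ignore specks that are too small to be a person or a bubble.
            if len(pixels) >= min_pixels:
                cx = sum(p[1] for p in pixels) / len(pixels)
                cy = sum(p[0] for p in pixels) / len(pixels)
                centres.append((cx, cy))
    return centres
```

Comparing the centres from one frame to the next gives the velocity, and comparing successive velocities gives the acceleration used for gestures.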

The Smoke Machine

The smoke machine used 3D printed and laser cut components. The hoop was MIG welded from steel wire. The actual smoke machine was very basic so that I could hack the circuit: a 1byone Halloween Fog Machine with Wired Remote Control, 400-Watt Fog Machine, Black.

The smoke machine was controlled with a servo and relay switches, which were timed to produce a good result: a bubble filled with smoke. The servo rotates a metal hoop between the fluid and the fan (between 30 and 130 degrees) to create a bubble film. Two relays then switch the fan and smoke machine on and off, so that the fan pushes the smoke into the bubble and slowly pushes the bubble out. The only library used was a "servo" library. The fan was a KooPower 3 Speed Adjustable USB Mini Desktop Fan, which I hacked so that its speed could be controlled with a variable voltage supply.

Components used:

kwmobile 2 Channel Relay Module - 5V Relay

MG996R Servos used.

Failures:

The first motors I used were stepper motors, and they failed completely to turn the hoop; the LEORX NEMA 17 2 Phase 4 steppers were not up to the job. The hoop was initially made in 8mm steel and MIG welded; however, I couldn't use it as no motor had the torque to lift it. Instead a thin 2 mm wire hoop was made using a MIG welder.

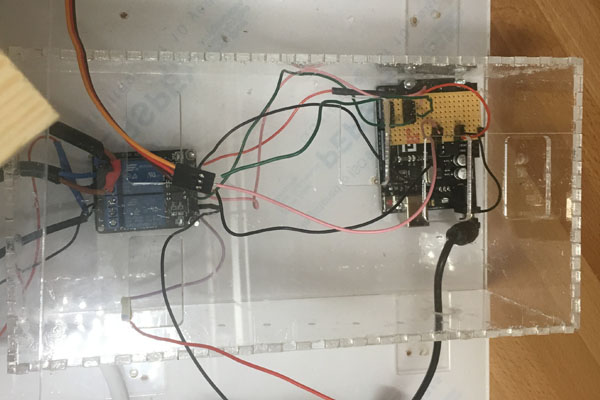

Schematics shown in figure 10.

smoke machine electronics figure 10

The Light communication

The lights communicate with each other through ESP8266 wifi chips. Each light uses a HC-SR04 ultrasonic sensor and passes a message to the next light to communicate the presence of someone in its immediate range. All wireless messages use an OSC library. Each message carries a header/tag with a data value and is broadcast across all IP addresses using broadcast code.

The lights are PL9823-F8 RGB LEDs, controlled with the NeoPixel library made by Adafruit.

The circuit for the communication is shown in Figure 11.
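To show what such a message looks like on the wire, the Python sketch below builds a single OSC 1.0 message by hand: an address string, a type tag string and one 32-bit integer, each padded to a multiple of four bytes. The address `/light/near` and the value are hypothetical; the lights themselves ran an OSC library on the ESP8266 rather than this code.

```python
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_int_message(address: str, value: int) -> bytes:
    """Encode an OSC message carrying a single big-endian 32-bit integer."""
    return (
        osc_pad(address.encode("ascii"))   # header/tag, e.g. "/light/near"
        + osc_pad(b",i")                   # type tag string: one int32 argument
        + struct.pack(">i", value)         # the data value
    )


packet = osc_int_message("/light/near", 1)  # hypothetical address and value
```

A packet like this is typically sent as one UDP datagram; sending it to the network's broadcast address would reach every light, though whether the installation used UDP in exactly this way is an assumption.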

The Light communication Figure 11

The Clay Artefacts Tech Spec

The Clay Artefacts were twofold: hand-made 3d cylinders using laser cut templates from a reaction diffusion algorithm (the Gray Scott Model, Ref 5),

and 3d Voronoi patterns made in Processing using toxiclibs (Ref 6). The second process was output using a 3D clay printer, The Lutum.

Figures 11 and 12 below show both processes.

Hand Made Tech

The reaction diffusion hand made clay lampshades used stills generated from a reaction diffusion algorithm (based on a Gray Scott model Ref. 5). They were further modified so that the clay would not fall off the model. These formed masks that would be wrapped around the clay cylinders to be cut out.
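For context, one update step of a Gray Scott reaction diffusion model and a simple threshold to turn the result into a cut/keep mask can be sketched as below. This is plain Python for illustration only; the diffusion rates, feed and kill values, and the threshold are commonly used example values assumed here, not the settings used for the lampshades.

```python
def laplacian(grid, r, c):
    """Five-point Laplacian with wrap-around edges."""
    rows, cols = len(grid), len(grid[0])
    return (grid[(r - 1) % rows][c] + grid[(r + 1) % rows][c]
            + grid[r][(c - 1) % cols] + grid[r][(c + 1) % cols]
            - 4.0 * grid[r][c])


def gray_scott_step(u, v, du=0.16, dv=0.08, feed=0.035, kill=0.065, dt=1.0):
    """Advance the two chemical concentrations u and v by one time step."""
    rows, cols = len(u), len(u[0])
    new_u = [[0.0] * cols for _ in range(rows)]
    new_v = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            reaction = u[r][c] * v[r][c] ** 2   # u is consumed, v is produced
            new_u[r][c] = u[r][c] + dt * (
                du * laplacian(u, r, c) - reaction + feed * (1.0 - u[r][c]))
            new_v[r][c] = v[r][c] + dt * (
                dv * laplacian(v, r, c) + reaction - (feed + kill) * v[r][c])
    return new_u, new_v


def to_mask(v, threshold=0.2):
    """Threshold the v concentration into a binary cut/keep mask."""
    return [[1 if cell > threshold else 0 for cell in row] for row in v]
```

Running `gray_scott_step` many times from a grid of u = 1 with a small seeded patch of v produces the spots and stripes typical of the model; a still from that run, thresholded with `to_mask`, is the kind of image that could serve as a template.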

Failures

Two of the clay models blew up in the kiln because air pockets had not been removed. This was due to the complexity of the job. The clay needed to be of a certain thickness and 'age' to be ready to support 16kg of weight. Using newspaper and the laser cut plastic templates to hold the initial shape was practical but led to this issue. Removing the newspaper solved it.

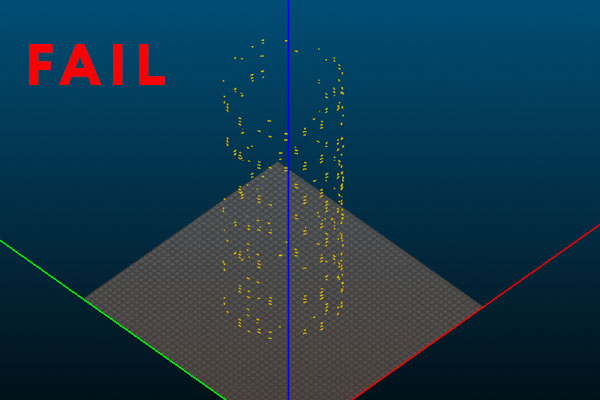

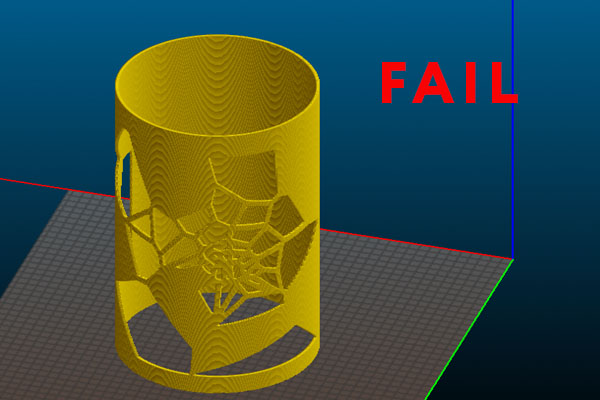

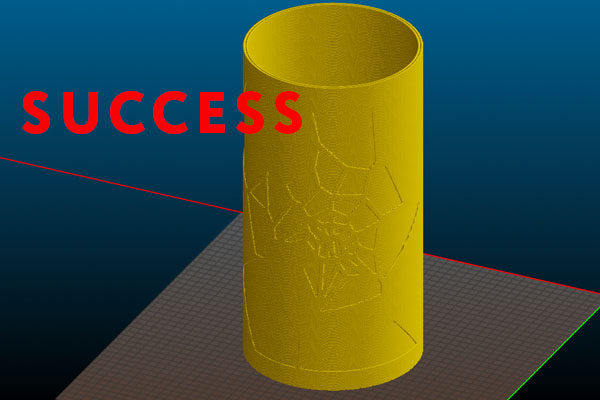

3D Clay Tech

The 3d clay printer was The Lutum and needed very precise Slic3r settings. Slic3r was used to export the G-code. The settings were difficult to get right so that the Lutum would print properly. Eventually it did, but not before a series of failures.

The 3D models were constructed as a boolean addition of a cylinder and the vector 3D Voronoi shape, which added an additional layer showing the cuts on the 3D print. 3D clay printers do not have any supports, and holes cannot be made without the clay collapsing, so an outline was printed to use as a cutting guide for holes made by hand. The movie below shows the guides drawn on the cylinder.

Failures

It was very difficult to get the G-code to work with the Lutum settings; this was only overcome with a mass output of different 3d setups and thicknesses.

2d and 3d clay process images Figure 11 & 12

Future development

The bubbles lasted between 20-30 secs before bursting. If I could extend this by 10 secs the bubbles would hit the floor and a further pond reaction could then be programmed. 60% of the bubbles burst between 8-10ft above the pond; the rest managed to get close to the floor but few actually hit it. Extending the time would mean experimenting further with the bubble mixture.

Changing the colour of the bubbles as they came out of the smoke machine would be good to implement. The hard way to achieve this would be to use multiple colours of smoke; an easier way would be to track the bubbles and have another projector project coloured graphics on top of them. This would also mean rearranging the projectors so that they would not interfere with each other.

Having the wifi clay lights react to the pond blob excitement would also be a good step forward making every object join together and react to each other.

Changing the feeding of the pond so that the food first falls into the pond and then ripples would also be good to try out.

Making the pond creatures investigate all blobs entering the pond (just as the fly-like creatures do) and then carry out their normal routine in search of food would also be another good interaction.

Having the forest create new trees whenever a blob entered or left the pond would also be good.

Having the pond creatures radically change their shape and evolution would also be another good development.

Changing the shape of the leaves as people moved in the pond would be a nice addition.

Self evaluation

Overall I felt the project was successful. The bubbles were a 100% success. Children and adults would not leave the installation while the bubbles were active. In particular, children would never leave unless I switched off the machine.

Feeding the pond was moderately successful and needed to be recoded to make the interaction easier to understand. When blobs were detected, small swarms of fly-like creatures would follow all the blobs and group around them; however, the large creatures would ignore them. Making the creatures explore all new blobs for food and then go back to their search would make the interaction in the pond easier to see. Also, making the food fall into the water and then making the ripples appear would be one thing to explore in the future.

Making the forest create a new tree when people came in and out of the pond would be another thing to explore. Changing the shapes of the tree blooms when people moved in the pond would also be a good thing to explore.

The complexity of the creatures' evolution needed to change radically, but it required a lot of computational power and I was heavily restricted by processing power. It would be good to split the processes across separate machines to gain more processing power.

Having better projectors for the pond display would definitely help, as I was restricted to using my own projector with limited definition and lumen power.

I felt the goal of conveying the value that Frei Otto and Alan Turing had in the field of Morphogenesis would have been better served if I had shown extracts of their algorithms and videos, and also shown my process of linking their patterns onto the clay artefacts.

The clay lights perhaps needed to be linked to the pond more to gain people's attention (react to people's movement).

I felt my goal of creating an immersive installation was successful. People tended to stay between 10-15 minutes on average.

What surprised me most was how many people came up to me to compliment the installation. Also, people would not only come back twice in the same day but would return the next day with other people to show them the work.

In summary the main changes:

1. The tree growth and death worked very well, but the tree blossoms could change on people's interaction as well.

2. The digital pond gained interest but needs better interaction.

3. The clay lights needed to be linked to the pond.

References

Ref 1. Otto, Frei. Occupying and Connecting: Thoughts on Territories and Spheres of Influence with Particular Reference to Human Settlement. Stuttgart: Ed. Menges, 2011.

Ref 2. Nanjundiah, Vidyanand. "Alan Turing and “The Chemical Basis of Morphogenesis”." Morphogenesis and Pattern Formation in Biological Systems, 2003, 33-44. doi:10.1007/978-4-431-65958-7_3.

Ref 3. De Landa, Manuel. Deleuze and the Use of the Genetic Algorithm in Architecture.

http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.453.2327&rep=rep1&type=pdf

Ref 4. Shiffman, Daniel. "11.7: Computer Vision: Blob Detection - Processing Tutorial." YouTube. July 07, 2016. Accessed September 20, 2018.

https://www.youtube.com/watch?v=ce-2l2wRqO8.

Ref 5. "Gray Scott Model of Reaction Diffusion." Weiss Lab for Synthetic Biology. Accessed September 16, 2018. https://groups.csail.mit.edu/mac/projects/amorphous/GrayScott/

Ref 6. "Toxiclibs." Toxiclibs RSS. Accessed September 16, 2018.

http://toxiclibs.org/.