And Then They Were Gone

Inviting the audience into a monochrome space, And Then They Were Gone is an audio-visual film that explores abstraction, sound, and image. Using limited visual and aural resources, the piece presents an abstract narrative, giving the audience room to either find meaning or embrace meaninglessness.

produced by: Armando González Sosto

Introduction

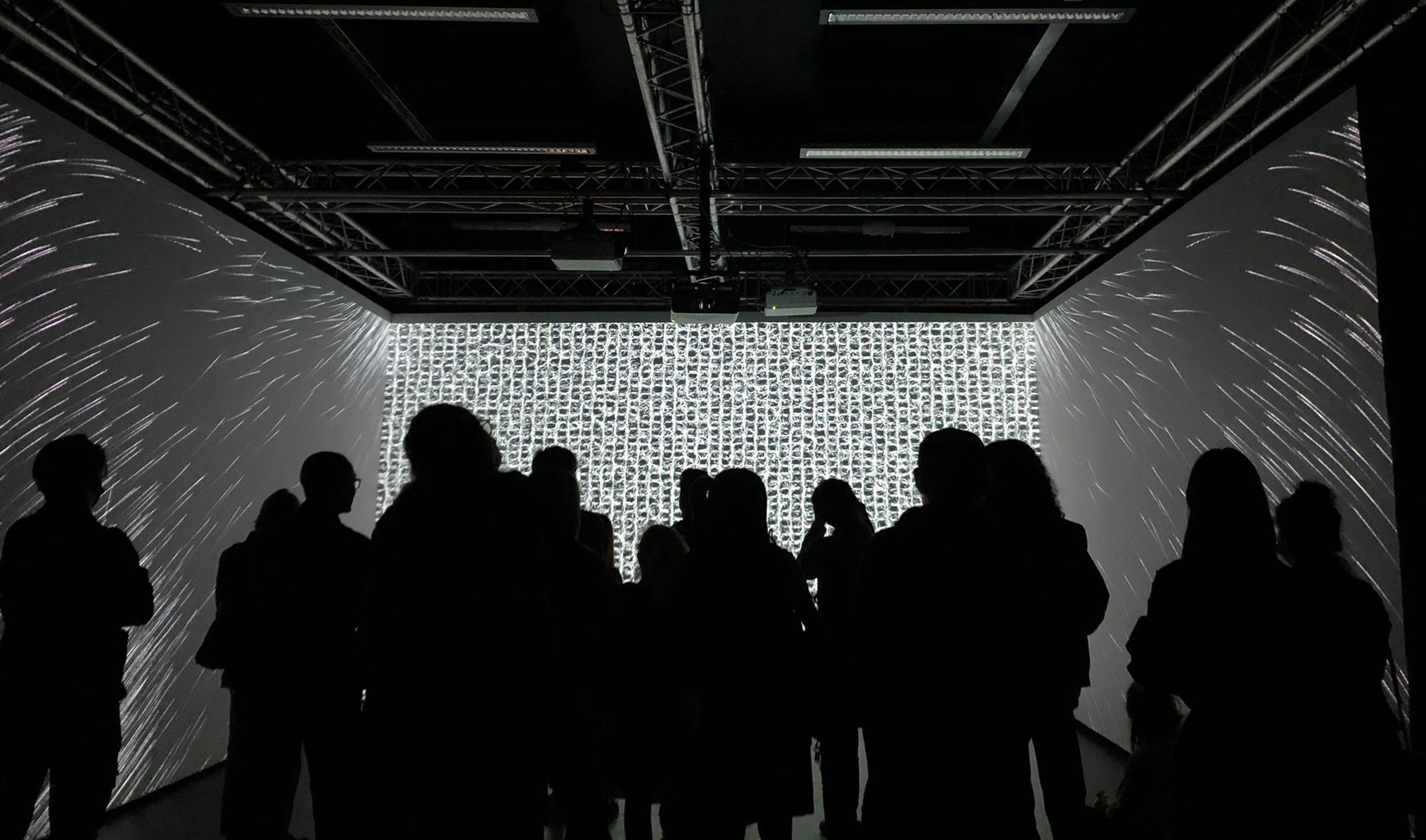

The piece is an audio-visual film featuring animations developed and rendered using a variety of creative coding tools. Visuals are projected on three different screens laid out in a U-shaped arrangement accompanied by music played through a 12-speaker surround sound system.

The piece navigates through a series of scenes which create moments of reflection in conjunction with the through-composed score. These scenes explore a variety of aesthetic and technical ideas while maintaining narrative coherence by adhering to a series of core visual motifs and patterns.

Figure 1 - Audience viewing a section of the piece which explores stigmergy and boids

Concept and background research

The idea with this project was to develop a cohesive audiovisual piece that would take advantage of the unique facilities at Goldsmiths’ Sonics Immersive Media Lab, which meant the outcome would be presented with surround sound and multiple projectors in mind. I saw three potential creative outlets that interested me: a live audiovisual performance, an installation, or a film.

I chose to proceed with a film for a variety of reasons. For starters, it allowed me to explore some of the more computationally expensive ideas I had been researching, including agent-based systems, boids, and stigmergy. Because I could render visuals at a high frame-rate and resolution by working offline (in non-real time), this approach would let me meet my high production standards.

In addition to the technical considerations, working with fixed media also helped me address my current artistic interests. My musical work has increasingly been shifting towards composing and producing complex electronic music that features a lot of editing. Having less experience in developing cohesive visual time-bound artworks, I wanted to draw from my work as a music producer to influence and inform my visual work. This would allow me to expand and draw parallels between different media, and would provide a sense of reference and direction when attempting to achieve a large scale project in a new format.

Lastly, I believe that working with film would allow me to articulate the fundamental artistic interests I currently have. I want to build arguments and narratives that produce emotions in audiences that engage with my work. I want to embrace chance, chaos, and generative procedures, but at the end of the day, I want to be the one who decides what the outcome of an artwork is. This piece contains many generative and emergent systems, but by working through a process of editing, I would determine the final outcome. Creating a film would allow me to have the degree of control I was looking for.

Influences

A variety of artists across multiple fields influenced and inspired this piece. Some of my biggest influences came from 20th Century painters, including Frank Stella, Josef Albers, and Anni Albers. The works of Stella were of particular importance to me, especially the idea of utilizing concentric squares. The minimalist and patterned nature of the Albers’ work was also constantly in mind when developing the piece.

Figure 2 - Posing in front of the work of Frank Stella in 2018

I also drew influence from other practitioners in the field of audiovisual art. An important influence comes from Robert Henke, an artist working with sound, image and technology. His piece Lumière II is inspiring for showing the ways in which sound and image can work together when creating a fixed piece for audiences to view in a theatrical context. The presentation and format of his work are highly appealing to me, and inspired me to work with the constraint of film as a format. Additionally, his attitude towards the creative process, as shown through lectures and talks at Ableton Loop and at CIRMMT, influenced my decision to approach the process of the piece as one of constant experimentation within the constraints of a given presentation format.

The works of Ryoji Ikeda were also relevant, particularly due to the presentation of his work at 180 The Strand in London. I had the privilege to see this exhibition firsthand, which influenced the direction in which I wanted to take my own audiovisual work. Seeing the variety of approaches he and his team explored helped me contextualize the work I would be doing, and seeing a production as ambitious and as well-executed as Ikeda's in person had a palpable effect.

Goldsmiths professor Andy Lomas was also an important influence, both through what I learnt as his student during the MA and through viewing his audiovisual work with Max Cooper. I was first exposed to the ideas of stigmergy, which are central to the development of this piece, through Andy’s lectures, and it was through an appreciation of his works with Max Cooper that I began to see how well some of these ideas could interact with sound.

Other educators in the field of computational art also have a lingering influence on my own work, including Zach Lieberman, Dan Shiffman, and Matt Pearson. They are worth mentioning as fragments of their approaches inevitably linger, as they were my first guides as I investigated books, video lectures, and online forums at various stages prior to and during the MA.

Finally, another important influence came from filmmaking as a concept. Given I was developing a piece that would be screened and experienced as a film, it is impossible to deny the influence that film and television had. Particular films such as Ex Machina, and the work of screenwriter Charlie Kaufman, served as a spiritual inspiration for this piece. Additionally, the film work done by John Whitney (and his work in general) is also an important inspiration.

In summation, a combination of minimalist painting, established audio-visual artists, film, and electronic music processes influenced and propelled me towards the completion of this work.

A recording of an entire screening of the film can be seen below:

Video - A screening of the piece

Technical

The music was composed using Ableton Live. A Max for Live package called Envelop was used to handle specific spatial audio needs. This package allows engineers to mix spatial audio with various ambisonic decoders, including binaural and multi-channel decoders. This means that a home-mixed binaural track can be easily adapted to a multi-speaker system in a different room simply by changing the ambisonic decoder used at the output stage. I developed a custom ambisonic decoder in Max to accommodate the needs of Goldsmiths' Sonics Immersive Media Lab.
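As a rough illustration of why swapping the decoder is enough, the sketch below encodes a mono source into horizontal-only first-order ambisonics and decodes the same encoded signal to two different speaker rings. It is not the Envelop implementation or the custom Max decoder described above: the ring layouts, the encoding convention, and the basic sampling decoder are all assumptions chosen for brevity.

```python
import math

def encode_first_order(sample, azimuth):
    """Encode a mono sample at `azimuth` (radians) into horizontal B-format (W, X, Y)."""
    return (sample, sample * math.cos(azimuth), sample * math.sin(azimuth))

def ring_layout(num_speakers):
    """Azimuths of speakers equally spaced on a ring (an assumed layout)."""
    return [2.0 * math.pi * i / num_speakers for i in range(num_speakers)]

def decode_to_ring(bformat, speaker_azimuths):
    """Basic sampling decoder: project the encoded field onto each speaker direction."""
    w, x, y = bformat
    n = len(speaker_azimuths)
    return [(w + 2.0 * (x * math.cos(a) + y * math.sin(a))) / n
            for a in speaker_azimuths]

# The mix is encoded once; only the decoding stage depends on the room.
encoded = encode_first_order(1.0, math.radians(30))
feeds_12 = decode_to_ring(encoded, ring_layout(12))  # e.g. a 12-speaker ring
feeds_4 = decode_to_ring(encoded, ring_layout(4))    # e.g. a small quad setup
```

The encoded signal never changes between the two calls, which is the property that lets a mix made on headphones at home be re-targeted to a larger system.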

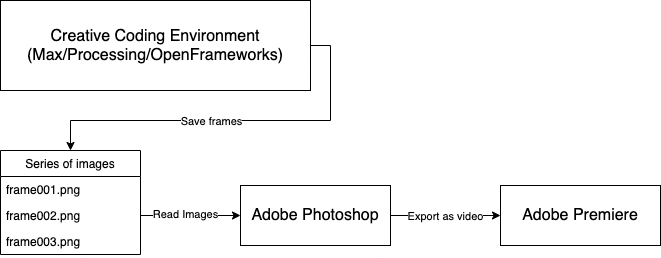

The visuals were developed using a combination of creative coding technologies, including Max, Processing, and openFrameworks. Each programming environment was used with the express intent of leveraging its own strengths and idioms, which are explored separately per tool in a later section.

Regardless of the tool used, visuals were recorded by capturing high-resolution images of non-realtime rendered frames. These images (either in PNG or JPEG format) would then be merged together as a video using Adobe Photoshop. This process yielded high-resolution videos with smooth frame-rates, albeit through a slow process of capturing and combining assets. Videos created through this process were later edited together and arranged with the music using Adobe Premiere.

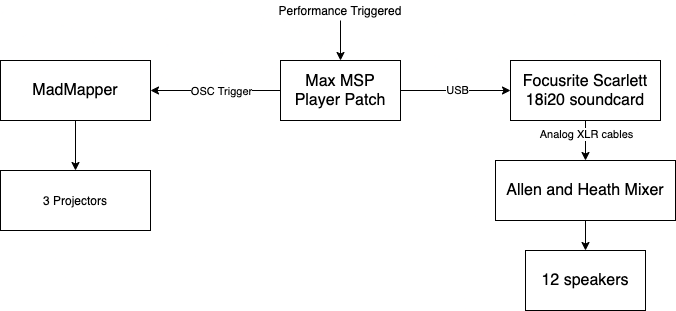

A diagram illustrating this rendering pipeline is displayed below:

Figure 3 - Diagram showing pipeline from code to video

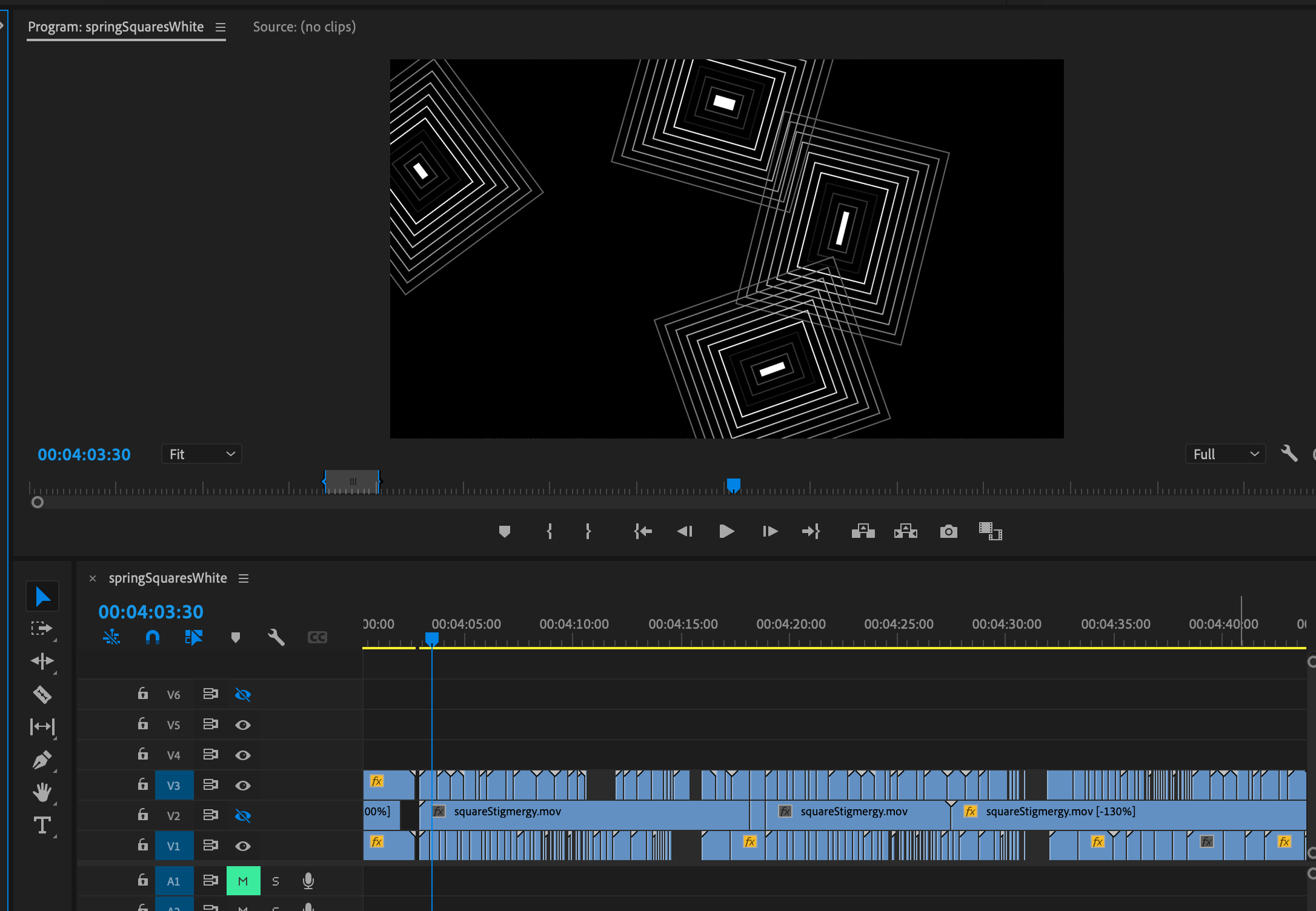

Adobe Premiere was used to arrange and structure the contents of the film by thinking of each screen as a separate 1920x1080 video track. Figure 4 shows a screenshot of the Premiere project where clips of computationally-generated visuals were arranged for different screens using different tracks. The three video tracks named V1, V2, and V3 represent the Left, Center, and Right screen respectively. The visuals were edited, arranged, and joined with the music through this process.

Figure 4 - Editing material in Adobe Premiere

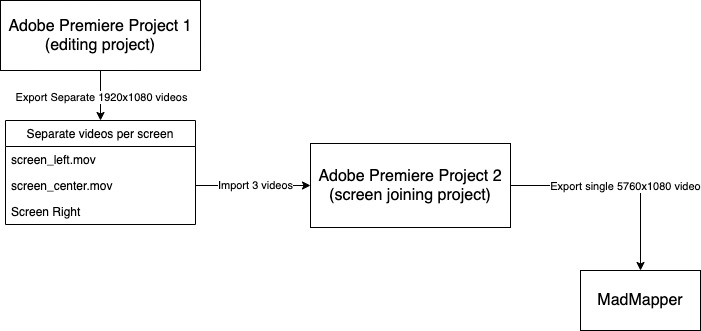

Each video track in this Premiere project is analogous to a specific screen in the Sonics Immersive Media Lab. Once the content for each screen was determined, it would be exported separately as a video. After completing this stage and having three separate video files, a second Premiere project was used to join them into one large single 5760x1080 video. This large video was extended over the three screens when showing the film to ensure that every screen was always in sync, as MadMapper would simply have to play a single video instead of three.
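The arithmetic behind the combined video is simple enough to sketch. The snippet below only computes the canvas size and where each screen's 1920x1080 region starts; the function and variable names are illustrative and do not come from the Premiere or MadMapper projects.

```python
SCREEN_W, SCREEN_H = 1920, 1080
SCREENS = ["left", "center", "right"]  # tracks V1, V2, V3 respectively

def canvas_layout(screens, width, height):
    """Return the combined canvas size and each screen's x offset within it."""
    offsets = {name: i * width for i, name in enumerate(screens)}
    return (len(screens) * width, height), offsets

canvas_size, x_offsets = canvas_layout(SCREENS, SCREEN_W, SCREEN_H)
```

Because all three regions live in one file, they share a single playhead, so there is nothing to keep in sync at playback time.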

A diagram describing this process is presented below:

Figure 5 - Diagram showing pipeline from edit to screening. Videos are edited together in Premiere, then fused into a large file and projected using MadMapper

A simple Max patch was used to orchestrate the different parts of the film during screenings. This patch was used to trigger the beginning of the performance through a simple mouse click, which would then play the rendered multi-channel audio and trigger the playback of the video in MadMapper by sending an OSC message. The diagram below illustrates the setup used during screenings, with the left path being the video path, and the right path being the audio path:
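For readers unfamiliar with OSC, the sketch below shows what such a trigger message looks like on the wire, written in Python rather than Max. The address `/film/play`, the host, and the port are placeholders invented for illustration; the actual address depends on how MadMapper is configured and is not stated here.

```python
import socket
import struct

def osc_string(text):
    """Encode an OSC string: ASCII bytes, null-terminated, padded to a multiple of 4."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def osc_message(address, *int_args):
    """Build an OSC message carrying zero or more 32-bit integer arguments."""
    type_tags = "," + "i" * len(int_args)
    payload = b"".join(struct.pack(">i", value) for value in int_args)
    return osc_string(address) + osc_string(type_tags) + payload

def send_osc(packet, host, port):
    """Send one OSC packet over UDP."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))

# Hypothetical address; the real one depends on the MadMapper setup.
PLAY_ADDRESS = "/film/play"
packet = osc_message(PLAY_ADDRESS, 1)
# send_osc(packet, "127.0.0.1", 8010)  # host and port are placeholders
```

A single click can then start audio playback locally and send this one packet, so both paths begin from the same event.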

Figure 6 - Diagram showing screening setup

An Orchestra of Creative Coding

A variety of creative coding tools were used in the development of this project. Just like an orchestra, where different instruments have unique features that complement and balance each other, the tools used in this project allowed me to explore different perspectives.

I place high importance on the developmental experience as a determining factor in the outcome of an idea. The interaction between an artist and their code has an effect on their psychology and perception as ideas are explored, which means that ideas can be articulated differently depending on the tool used. For example, given Max never requires compilation, code developed with it tends to have a more playful developmental process. OpenFrameworks, on the other hand, tends to have a slow compilation process, which means ideas tend to come, at least in my own personal case, less from interactions and more from observations. Also, each tool performs tasks with varying degrees of reliability, which has an effect on the type of ideas that tend to emerge when using it.

The implications of these tools depend on the personality of the artist using them. The observations I had when working with different tools are presented in the following section, but it's worth mentioning that these effects are personal, and might be substantially different for other artists.

Max

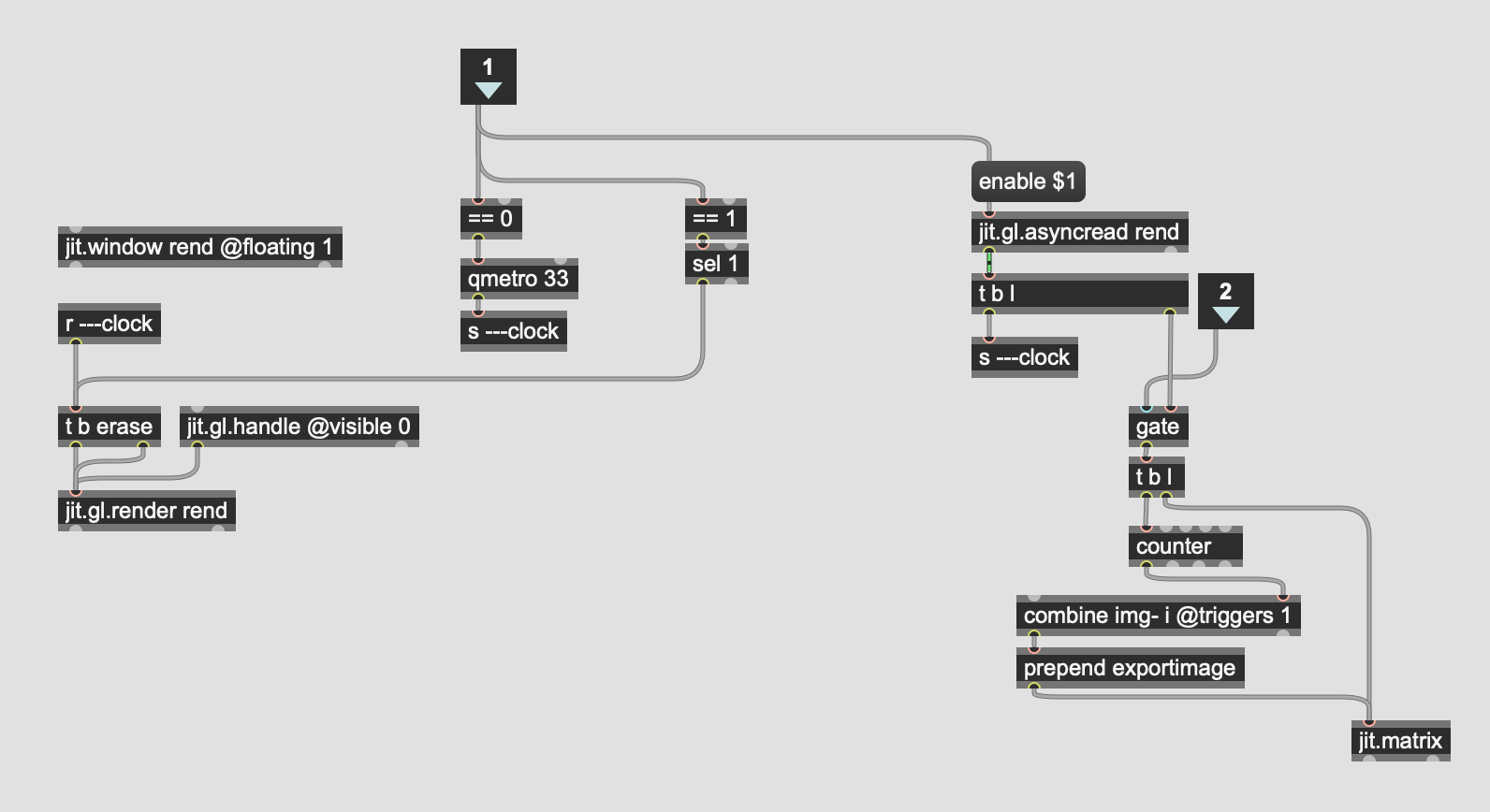

Figure 7 - Example of a Max patch used to record visuals

Max has a unique integration of OpenGL which combines standard OpenGL conventions with Max’s idiosyncratic patching environment. Visuals can be developed using Max GL objects, each object encapsulating different layers of OpenGL code. This makes certain OpenGL features accessible through ways that may be different from other languages. This encapsulation has many effects. On one hand it brings efficiency to the development experience by allowing artists to focus on their artwork, and not on tedious or repetitive OpenGL scaffolding. At the same time, it obfuscates the inner workings of the library, which somewhat limits the scope of possible developments.

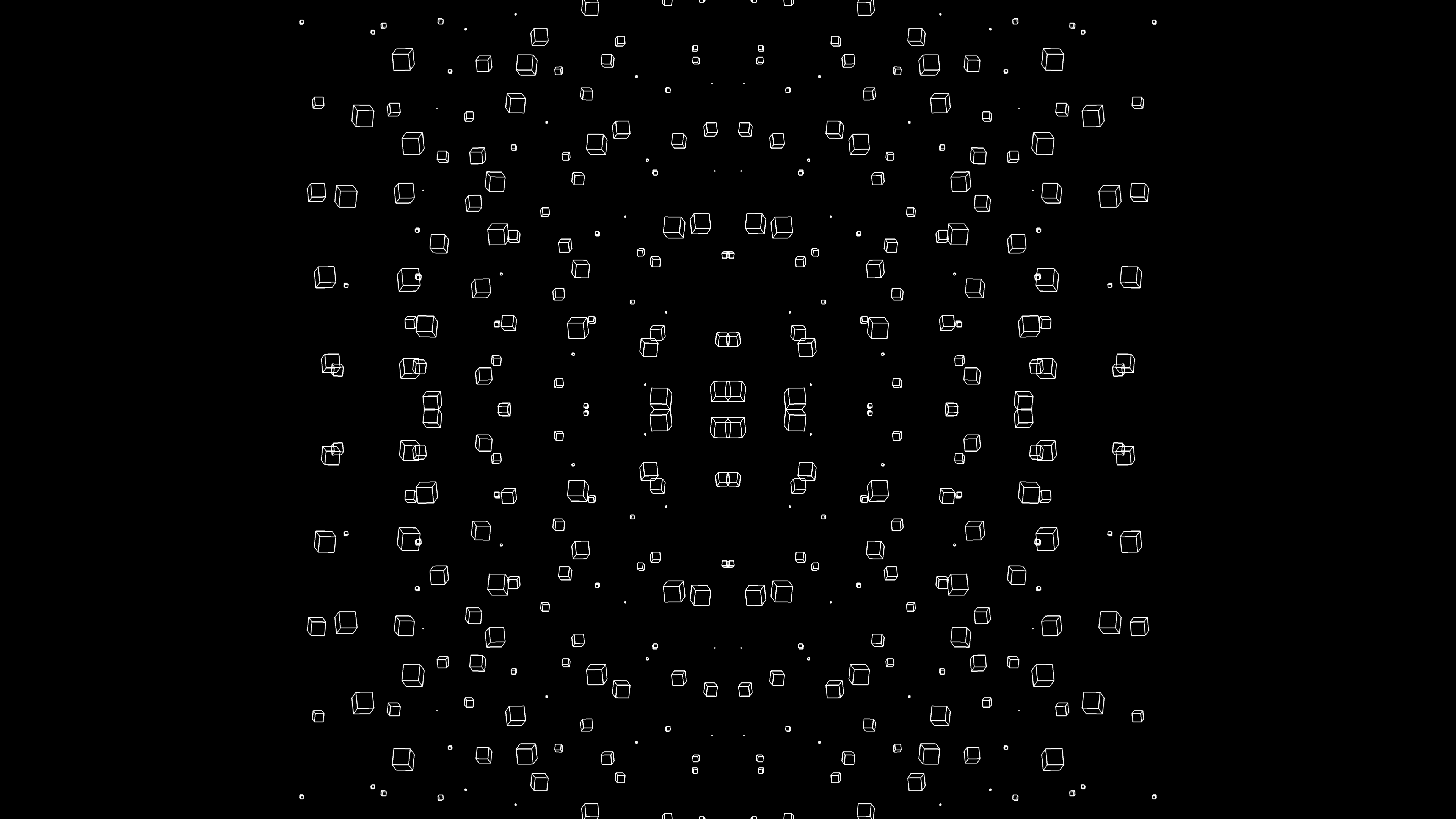

An example of visuals done in Max for this piece is shown in figure 8. In this example, the jit.gl.multiple object takes in complex matrices to instantiate a variety of 3D objects. Instead of thinking in terms of loops and complex algorithms, developers can think in terms of procedural matrix generation and manipulation in a manner not unlike CV signals in modular synthesizers. This thinking process has a palpable effect on the types of patterns that can be created.

Another important object used was jit.gl.pix. This object creates a sub-patching environment which allows the development of fragment shaders using a simple node-based approach called gen, allowing artists to write GLSL shaders through patching, rather than writing code. Max also makes it easy to capture GL scenes and process them in an effects-based signal path. Using objects like jit.gl.node, GL scenes can be captured and processed by a series of quickly-developed fragment shaders, so ideas can be tried and implemented quickly. An idea can be triggered simply by setting a value a particular way, and these moments are easy to find when there is no need to compile to see results.

Unfortunately, one of the limitations found in Max is recording high quality footage. In order to record content, I ended up developing my own rendering engine (shown in figure 7). This custom engine allowed me to make sure that I would only render the following frame once the current frame had been safely saved into a high-resolution file, which is something openFrameworks and Processing can do by default.
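The principle of rendering the next frame only after the current one is saved can be sketched outside of Max. The Python below is not the patch shown in figure 7: the frame rate, file naming, tiny resolution, and the stand-in "renderer" are assumptions, and it writes greyscale PGM files only to stay dependency-free.

```python
import os
import tempfile

FPS = 60  # assumed target frame rate

def render_frame(frame_index, width, height):
    """Stand-in renderer: grey level derived from simulated time, not wall-clock time."""
    t = frame_index / FPS
    level = int(255 * (t % 1.0))
    return bytes([level]) * (width * height)

def save_pgm(path, pixels, width, height):
    """Write a binary PGM image and flush it to disk before returning."""
    with open(path, "wb") as f:
        f.write(b"P5\n%d %d\n255\n" % (width, height))
        f.write(pixels)
        f.flush()
        os.fsync(f.fileno())

def render_sequence(out_dir, num_frames, width=64, height=36):
    """Render frames one at a time; the next frame starts only after the save returns."""
    paths = []
    for i in range(num_frames):
        pixels = render_frame(i, width, height)
        path = os.path.join(out_dir, "frame_%05d.pgm" % i)
        save_pgm(path, pixels, width, height)  # blocks until the file is written
        paths.append(path)
    return paths

out_dir = tempfile.mkdtemp()
frames = render_sequence(out_dir, 5)
```

Since time advances by exactly `1 / FPS` per saved frame, the result plays back smoothly no matter how long each frame took to render or write.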

Figure 8 - An example of visuals generated using Max's jit.gl.multiple and jit.gl.pix

Processing

Similar to Max, Processing enables a quick development process due to its architecture. Processing sketches are written in Java and compiled to bytecode that runs on a virtual machine (VM), so compilation times are relatively short. However, this also means that Processing is not optimal for performance-heavy visuals, as code running on Java's VM is generally not as efficient as natively compiled code.

Ultimately, the quick compilation times mean that ideas can be quickly sketched out, and observations can influence a quick iterative series of developments. This is exactly what I did when working with Processing in this project - I would iterate very fast over ideas, attempting to emulate a process similar to free-writing, starting at one end with one idea and ending at another end with a substantially different idea. Processing lends itself very well to ideation stages, but does not perform so well when more complex ideas begin to take hold.

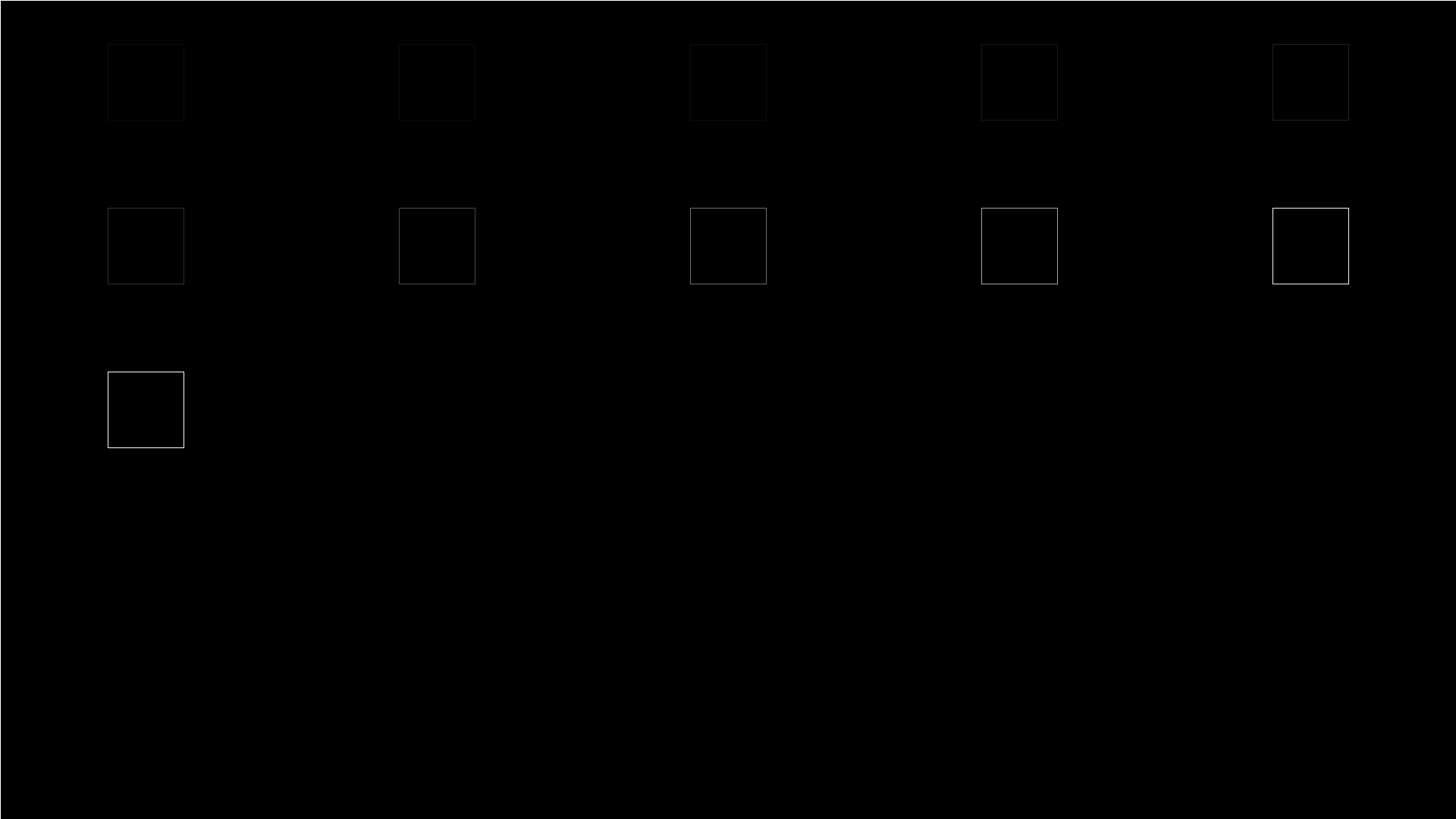

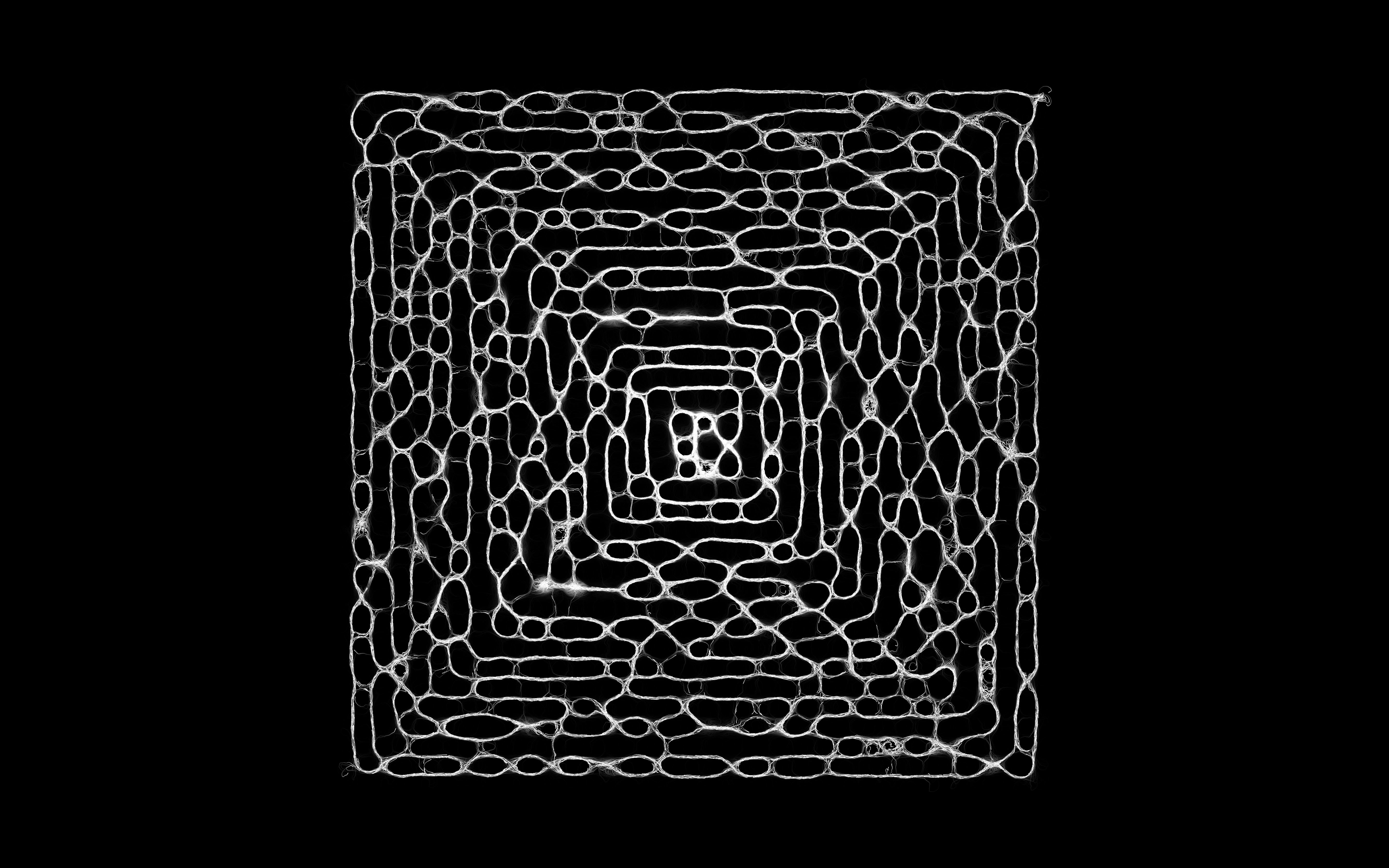

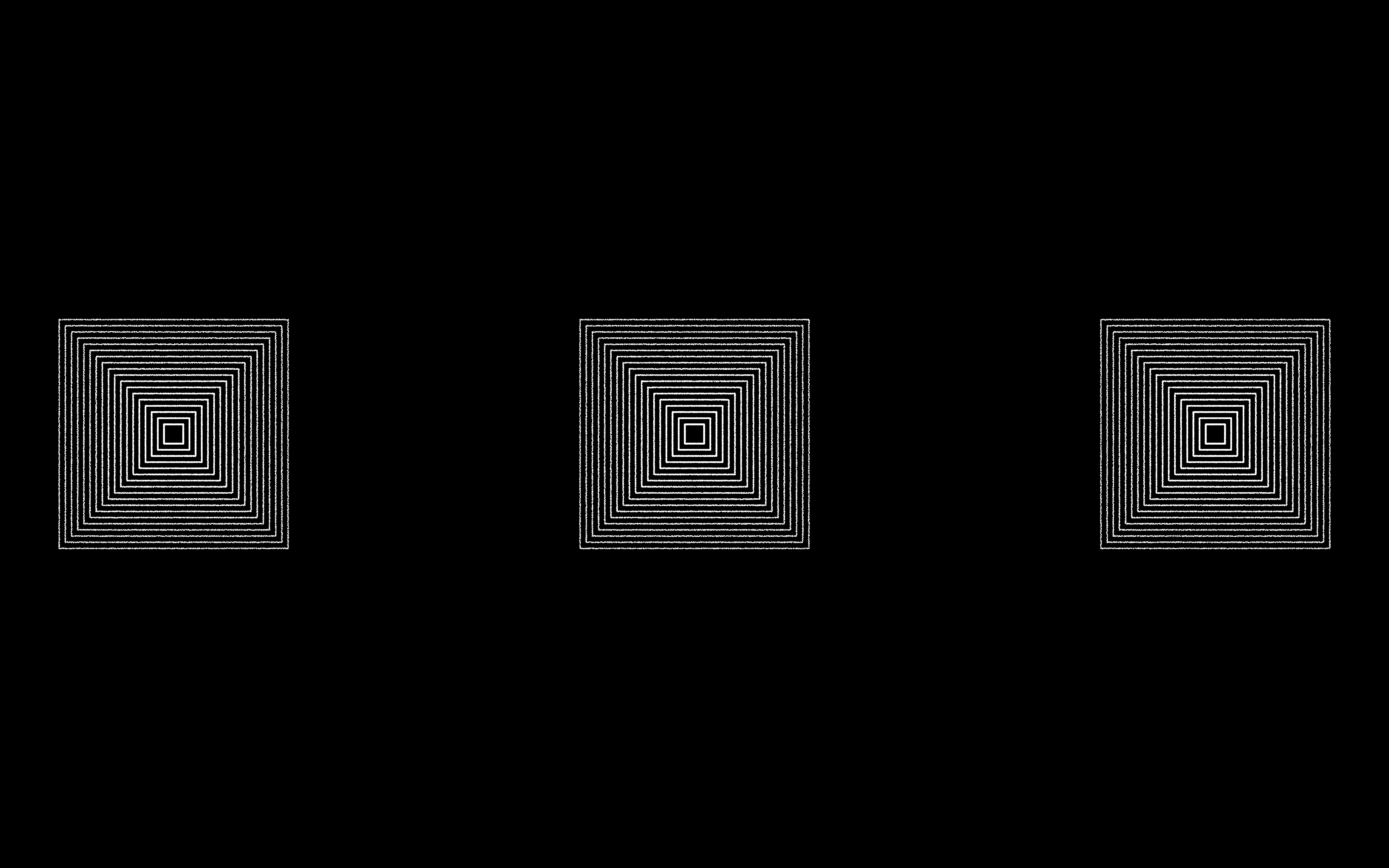

The gallery below shows images produced through a quick series of iterations in Processing. The simple idea of a row of squares developed into various permutations, some of which are used in the final version of the film.

Image Gallery 1 - Examples of iteratively developed sketches in Processing

openFrameworks

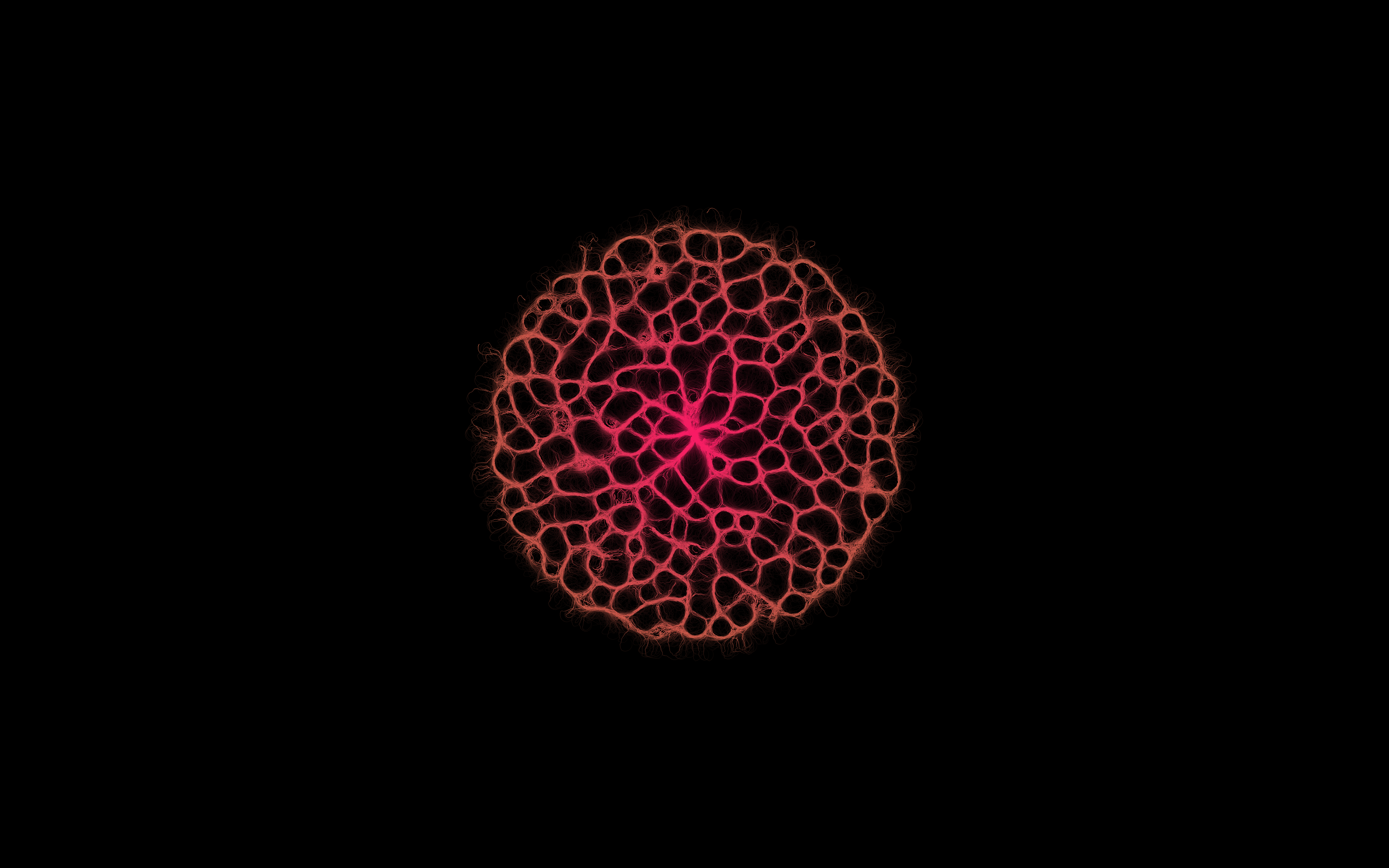

openFrameworks is the most performant and robust of all tools used in this project. openFrameworks is slow to compile, but once executed it performs much better than the other creative coding environments I used. This means more ambitious and complex ideas can be explored by using it, especially ideas involving emergent systems and agent-based systems, which is precisely what it was used for in this project. Additionally, the usage of frame buffer objects (FBOs) in this project was of high importance, particularly in the development of stigmergy and branching processes. Frame-buffers allow agent-based systems to look back at previous generations of rendered visuals, which enables the development of stigmergy.
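The feedback loop between agents and a frame-buffer can be shown with a small CPU-only sketch. This is not the openFrameworks code used in the film: a 2D list stands in for the FBO, and the grid size, decay rate, and sensing rule are assumptions picked to keep the example short.

```python
import random

SIZE = 32        # grid stands in for the frame-buffer
DECAY = 0.95     # how quickly old trails fade each frame
DEPOSIT = 1.0    # amount each agent adds where it lands
DIRECTIONS = [(1, 0), (-1, 0), (0, 1), (0, -1)]

def step(trail, agents, rng):
    """Advance one frame: agents read the previous buffer, move, then deposit."""
    # The new buffer starts as a faded copy of the previous frame.
    new_trail = [[value * DECAY for value in row] for row in trail]
    moved = []
    for x, y in agents:
        # Sense the previous frame's trail in the four neighbouring cells.
        options = [((x + dx) % SIZE, (y + dy) % SIZE) for dx, dy in DIRECTIONS]
        weights = [trail[ny][nx] + 0.1 for nx, ny in options]
        # Stronger trails are more likely to be followed; 0.1 keeps exploration alive.
        nx, ny = rng.choices(options, weights=weights)[0]
        new_trail[ny][nx] += DEPOSIT
        moved.append((nx, ny))
    return new_trail, moved

rng = random.Random(1)
trail = [[0.0] * SIZE for _ in range(SIZE)]
agents = [(rng.randrange(SIZE), rng.randrange(SIZE)) for _ in range(20)]
for _ in range(100):
    trail, agents = step(trail, agents, rng)
```

Agents never communicate directly; each one only reads what earlier frames left behind, which is the indirect coordination that stigmergy refers to.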

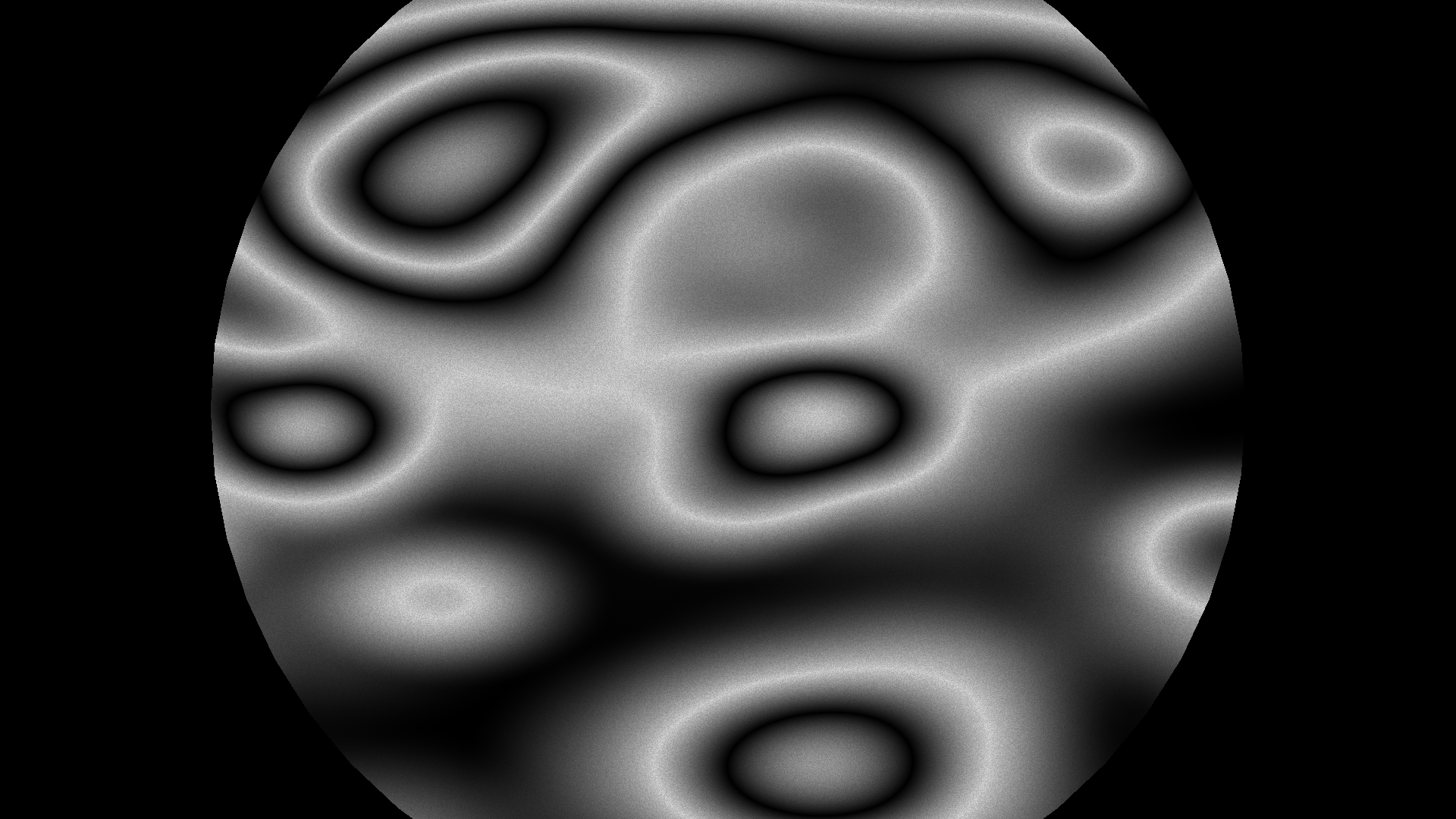

More complex fragment shaders were also developed in openFrameworks. Although fragment shader performance shouldn’t vary that much between the environments used in this project (as this is delegated to the GPU), it's worth noting that openFrameworks allows for CPU-scoped code to be more ambitious than what could be done in Processing or Max. In this piece, I developed metaball sketches which were rendered using fragment shaders, but used agent-based systems to determine the position of the centroids.
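The split between CPU-side agents and a per-pixel metaball evaluation can be sketched as follows. This is a plain Python stand-in, not the shader or openFrameworks code from the piece: the inverse-square falloff, the threshold, and the bouncing agents are common choices assumed here for illustration.

```python
def metaball_field(x, y, centroids, radius):
    """Sum of inverse-square falloffs, as a fragment shader would evaluate per pixel."""
    total = 0.0
    for cx, cy in centroids:
        dist_sq = (x - cx) ** 2 + (y - cy) ** 2
        total += (radius * radius) / max(dist_sq, 1e-9)
    return total

def render_mask(width, height, centroids, radius, threshold=1.0):
    """Evaluate the field at every pixel centre; 1 means inside the surface."""
    return [[1 if metaball_field(x + 0.5, y + 0.5, centroids, radius) >= threshold else 0
             for x in range(width)] for y in range(height)]

def step_agents(agents, width, height):
    """CPU-side update: each agent (x, y, vx, vy) drifts and bounces off the edges."""
    moved = []
    for x, y, vx, vy in agents:
        x, y = x + vx, y + vy
        if x < 0 or x > width:
            vx, x = -vx, min(max(x, 0), width)
        if y < 0 or y > height:
            vy, y = -vy, min(max(y, 0), height)
        moved.append((x, y, vx, vy))
    return moved

agents = [(10.0, 10.0, 1.0, 0.0), (17.0, 10.0, -1.0, 0.0)]
centroids = [(x, y) for x, y, _, _ in agents]
mask = render_mask(32, 20, centroids, 3.0)
next_agents = step_agents(agents, 32, 20)
```

Only the list of centroid positions would need to cross from CPU to GPU each frame, which keeps the agent logic free to be as elaborate as the CPU allows.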

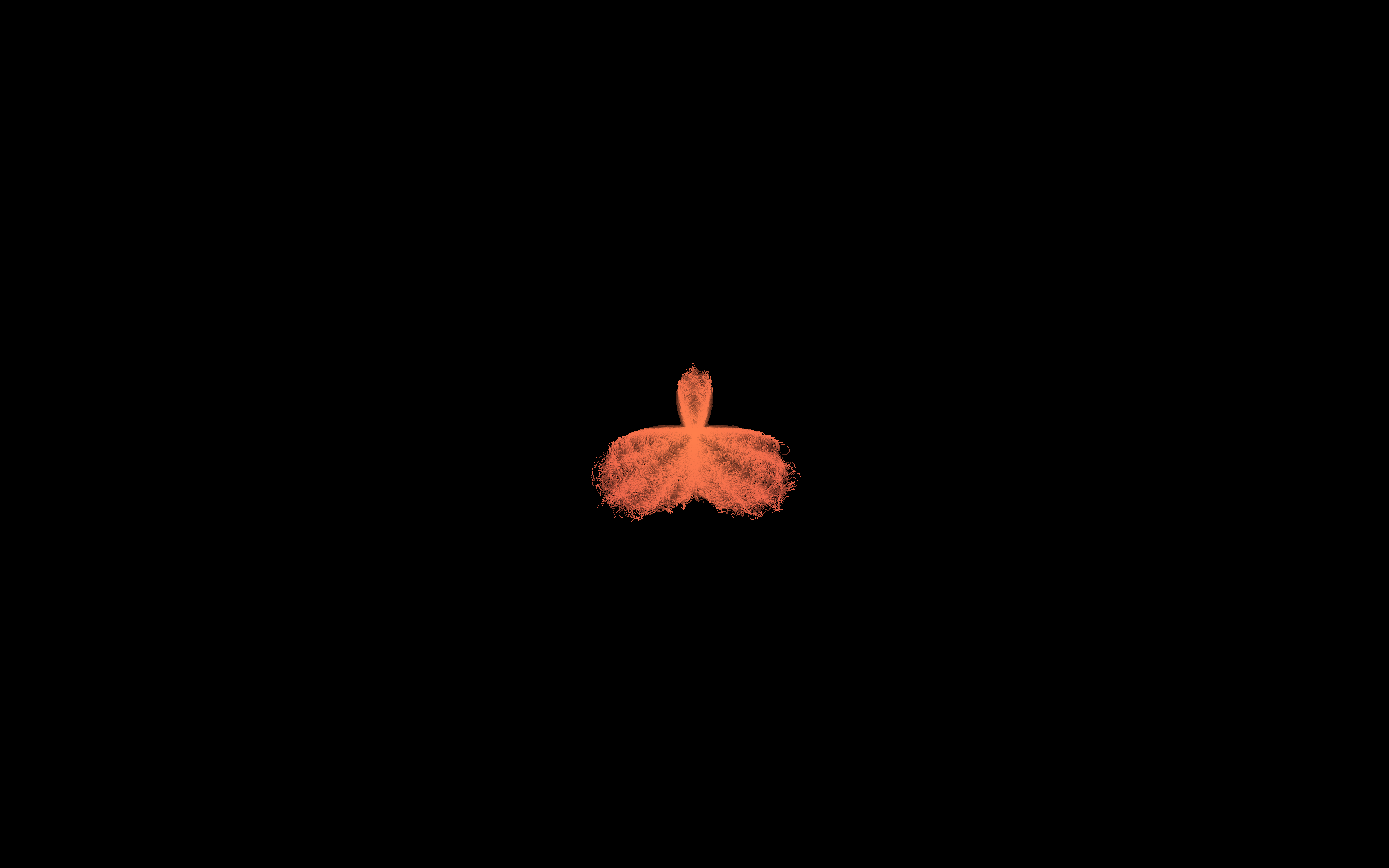

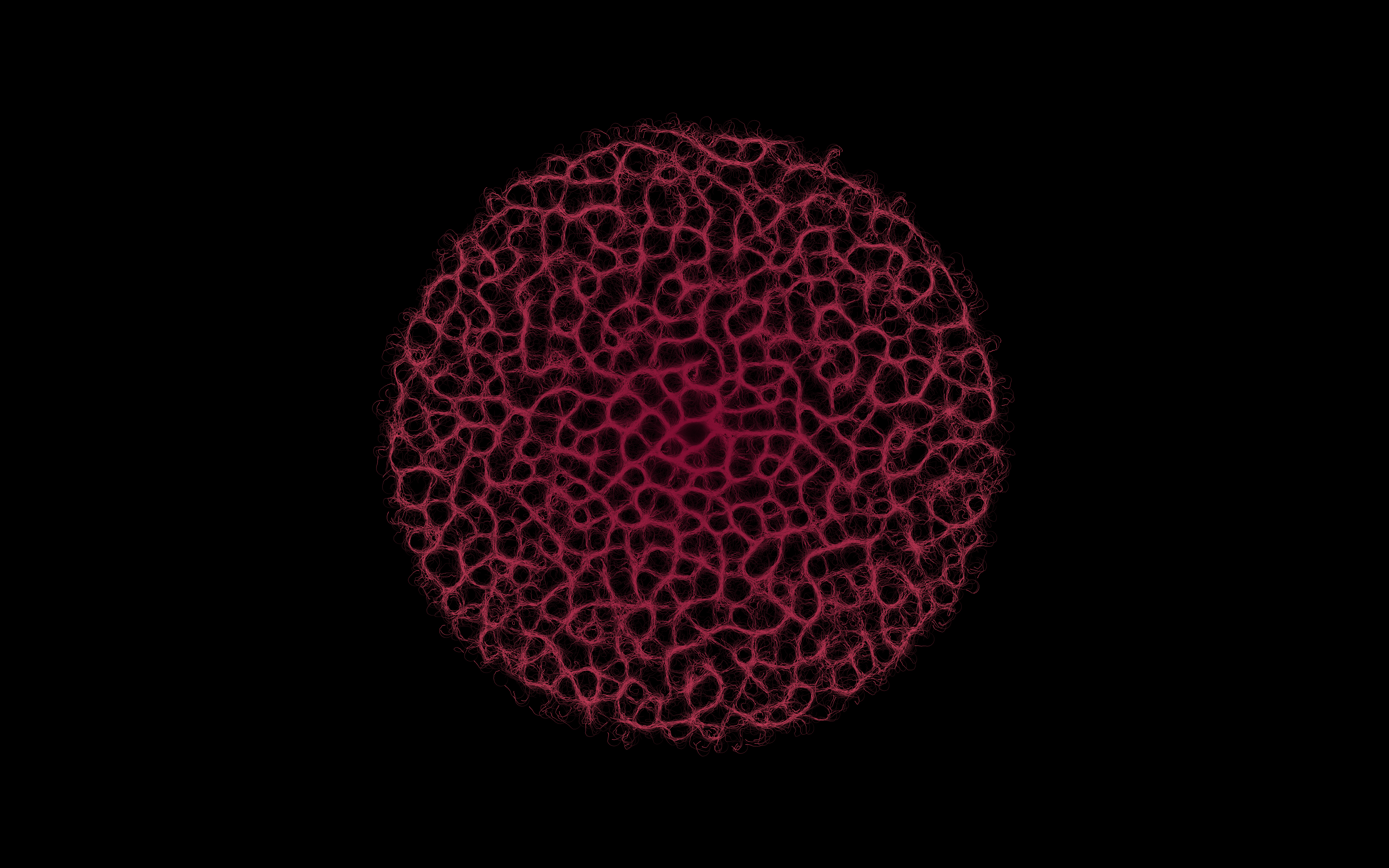

A gallery of examples of stigmergy, branching structures, boids, and metaballs is displayed below. All of these images were developed and rendered using openFrameworks.

Technical Conclusion

Working with a variety of creative coding tools was interesting to me as it allowed me to explore a variety of visual textures and concepts through different approaches. It's important to remember that projects like these are developed over a multi-month period, which means there needs to be a way to maintain engagement with the concepts being explored. Mixing and combining different tools allowed me to keep myself interested and engaged, as development on one tool would inform what I would do with a different tool. This is similar to my approach to music production, but instead of editing audio in Ableton, I edited computational visuals with Adobe Premiere.

Future Development

Decisions were made at the beginning of the piece to limit the scope of the work, which I believe was conducive to bringing clarity to what was essentially an open-ended project. As Robert Henke suggested in his talk at CIRMMT, limits can be useful tools for artists working in experimental media, as they help focus priorities within the established constraints. However, now that this particular piece has been completed, future iterations could play with the definitions of the constraints that were set for this piece. I could take my current configuration as a point of departure, but gradually bend the definitions of my constraints and introduce new concepts, maintaining coherence but also expanding into new territory.

For instance, this piece has strictly no real-time processing. While I believe that pre-rendered material would be at the core of future iterations, it'd be interesting to explore how real-time interaction and variation could figure in the piece. For instance, a new film could be structured and developed similarly, but instead of simply playing back a video, a layer of code could process or add simple animations on top of pre-rendered material. A simple particle system could be added on top of pre-rendered visuals in order to bring a degree of liveness to the performance. Also, a degree of interaction could be added to the work. Minimal audience interactions could be added in order to invite participation from audiences through the usage of a variety of human-computer interfaces.

Given the conclusion of my studies at Goldsmiths, future versions would be made for other spaces. This means new versions would have to adapt to different display/projector realities, or it could mean adopting alternative presentation methods altogether. 360/VR video environments could be used to create immersive film experiences, or single-display versions could be used to ensure a larger degree of accessibility. My personal interests and previous projects point towards the use of the web as a medium, but I wonder how this edit-heavy approach could be implemented natively in web browsers in a way that performs efficiently on average computers. This technical inquiry could be the beginning of interesting new research.

There are many routes this idea can take, but I believe that working with audio, visuals and code is going to be a consistently important part of my practice, and the conclusion of this piece leaves me inspired and motivated to continue.

Self evaluation

I believe this project was very successful. I was able to create what I set out to do: a film which would link sound and visuals to tell a story that conveyed emotion to the audience. I had the pleasure of speaking with audience members after screenings and their responses were overwhelmingly positive, with some people remarking on their perceived emotionality of the piece, and others sharing their diverse interpretations of the motifs I was exploring.

I was also satisfied with the production standard that was achieved. The quality of the projections and the sound mix was very high. Frame-rates were consistent, resolution was high, and the editing was generally very tight and coupled well with sound in the sections where there was a one-to-one relation between the two media. So in summary, I believe the piece was technically successful.

However, this doesn’t mean that there weren’t issues - certainly there are things that could've been done better. I believe there were moments in the piece where the development of ideas and motifs wasn’t as clear as I had hoped for. Upon receiving feedback during my viva, a professor commented he was unsure when the piece would end. On one hand, temporal dissociation and uncertainty was part of the experience I was looking for. However, this comment suggests that more attention could be paid to how the piece is structured so that more subtle cues are presented to indicate and highlight the structure of the piece, or that the uncertainty of the timeline could've been expressed with more clarity.

The pipeline to develop this piece was incredibly inefficient, and I would look to improve upon that in future versions. I somewhat counterintuitively believe that the inefficiency in my processes was an important part that defined positive elements in the development of this piece, as it allowed me to gain perspective and insight into the decisions that I was making. However, I also believe that this inefficiency somewhat tied my hands at times, as the rendering processes were so slow and tedious that my ability to do quick last minute edits was limited. I believe optimizing my pipeline in a variety of ways is highly important for future versions.

One of the biggest technical inefficiencies came from generating videos from images. I firmly believe that the process of using offline frame-capturing is ideal for high quality renders, but I think that the process of taking these frame captures and turning them into videos could be optimized. Through the usage of custom developed utilities (potentially using the ffmpeg library), I could run a batch process that automatically takes all of the generated images and turns them into videos without my intervention. This process could be executed when I’m not using the computer, so this way I don’t spend time waiting for renderings to complete.
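A batch utility along these lines could be quite small. The sketch below drives the ffmpeg command-line tool from Python rather than linking against the library; the folder layout (one sub-folder of numbered PNGs per scene), the file naming pattern, and the H.264 settings are all assumptions, and a higher-quality codec may be preferable for final masters.

```python
import subprocess
from pathlib import Path

def ffmpeg_command(sequence_dir, output_path, fps=60, pattern="frame_%05d.png"):
    """Build the ffmpeg call that turns a numbered image sequence into a video."""
    return [
        "ffmpeg", "-y",                 # overwrite existing output without asking
        "-framerate", str(fps),         # input rate of the image sequence
        "-i", str(Path(sequence_dir) / pattern),
        "-c:v", "libx264",              # placeholder codec choice
        "-pix_fmt", "yuv420p",          # widely compatible pixel format
        str(output_path),
    ]

def batch_commands(render_root, output_dir, fps=60):
    """One command per sub-folder of rendered frames (hypothetical layout)."""
    root = Path(render_root)
    return [
        ffmpeg_command(folder, Path(output_dir) / (folder.name + ".mp4"), fps)
        for folder in sorted(root.iterdir()) if folder.is_dir()
    ]

def run_batch(commands):
    """Run each conversion in turn, stopping if ffmpeg reports an error."""
    for command in commands:
        subprocess.run(command, check=True)

example = ffmpeg_command("renders/scene_a", "out/scene_a.mp4", fps=30)
```

Calling `run_batch(batch_commands("renders", "out"))` overnight would then convert every scene without supervision, provided ffmpeg is installed and on the path.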

I believe I’m only getting started in developing my own vocabulary for this format. This vocabulary develops by iterating through projects that are produced to as high a standard as this one, and I believe my capacity to create engaging pieces of audiovisual work is only going to get better. I feel like I achieved what I set out to achieve, which makes me grateful and happy for this project. The process itself was also immensely rewarding and I learnt a lot about myself as an artist - what ways of working suit me, and which don’t, for example. I am looking forward to future iterations that take some of the ideas even further.

References

Failure = Success, Robert Henke. Presented at Ableton Loop: https://www.youtube.com/watch?v=bVAGnGWFTNM

Give Me Limits!, Robert Henke. Presented at CIRMMT: https://www.youtube.com/watch?v=iwOaYxSJGqI

On Writing, Charlie Kaufman. Presented at BAFTA: https://www.youtube.com/watch?v=eRfXcWT_oFs

The Nature of Code, Daniel Shiffman.

Generative Art, Matt Pearson.