The Artefact

blitzAR

The first night of the London Blitz in a situated AR experience.

The Theory

The City through the eyes of Augmented Reality,

A shift of representation and perception.

Produced by: Eden Chahal

blitzAR

The artefact is an Augmented Reality experience situated in the city of London. Using computational tools, it reveals historical layers that are part of the city but escape the human eye, and at times the collective consciousness.

It is a 24-hour experience, anchored in space and time, that proposes a narration of the first 24 hours of the Blitz bombings over London on the 7th of September 1940.

Our relation to our phones has become intimate, and for a large portion of urbanised citizens, the attention we grant them might be greater than the attention we pay to details in our physical environment, such as commemorative or informative plaques. This object that fits in our hand could be replaced by other interfaces that would fade into our bodies, becoming their seamless extensions. That could call for a redefinition not only of Human Computer Interaction, but also of the way we think about, perceive and interact with the built environment. In this case, the first change could concern our attention to our surroundings. Once you have experienced and felt places through augmented reality, how will your perception of the physical world be affected by this additional understanding? Your curiosity might be roused; you might look closer, search for details, and remember them as you pass, because you have absorbed them through your body, in a specific place and time. Reading a map and extensive descriptions informs us widely about those events, which can in this way be understood and memorized. What would happen if you could also feel and see them while physically passing by the place where they happened?

The main point of this work is to evaluate the impact of a shift in the way information is absorbed by a user. How does reading a map of London, looking at pictures, or reading a text describing the impact of the bombings differ from receiving those same pieces of information through chosen computational tools merged into the user's daily journey in the city? We will explore the added value of the AR medium and the features unique to this specific tool, while keeping a critical approach to what we might lose by modifying the way we represent, and therefore think about and perceive, our environment.

The result shown in the demo video is not a fully functioning application, but a proof of concept using a combination of available AR techniques. The elements were tested on a portion of Grove Road, Bow, London, between Roman Road and Mile End, including Mile End Park. The app was tested at different times of the day.

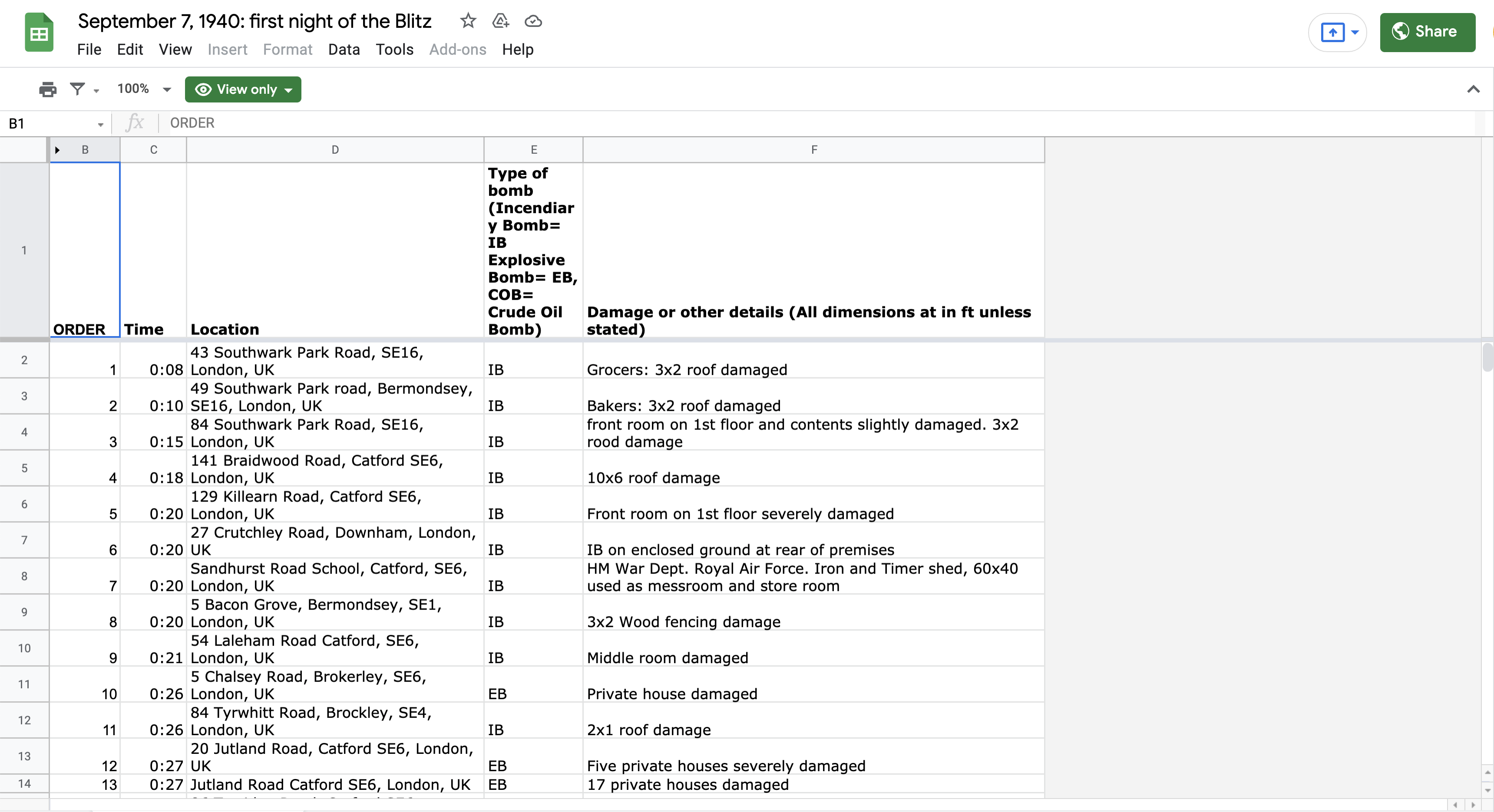

The data used was already accessible through a combination of resources listed in the bibliography; the focus of the research was not historical.

In order to conduct research based on a practical application, the technical implementations all rely on currently available tools. The chosen framework is mobile AR, as it is the one currently accessible to a large portion of smartphone users. Nevertheless, in the theoretical part, we intend to expand on this study with a prospective approach, laying out possible outcomes of an evolution towards other devices such as AR glasses or even AR lenses.

The experience: building a narration through a mobile application

Markerless AR: GPS-based

Placing augmented reality objects at specific “real world” locations was a central part of the work and proved more complex than expected. Markerless AR (AR that does not rely on a tracked image or surface to make content appear) is regarded as one of the most promising evolutions of Augmented Reality. It is still at an early stage of development and requires advanced coding skills. After experimenting with different strategies, including MapBox and coding with Vuforia for Unity, I decided to use an add-on that seemed to give the best results for the project, AR+GPS Location by Daniel Fortes, which I adapted to my project.

This makes it possible to assign GPS coordinates to each object, or to instantiate objects from a set of coordinates. The accuracy is still limited, with a margin of error of 10 to 20 meters.
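The add-on handles placement internally, and its code is not reproduced here. As an illustration of the general idea only, the sketch below converts an object's GPS coordinates into an east/north offset in meters from the user's position, which an engine could then use to position the object relative to the camera. It assumes a spherical Earth and an equirectangular approximation, which is reasonable over the few hundred meters of a walk but not over long distances; the coordinates in the example are hypothetical.

```python
import math

# Mean Earth radius in metres (spherical-Earth assumption).
EARTH_RADIUS_M = 6_371_000.0


def gps_to_local_offset(ref_lat, ref_lon, lat, lon):
    """Return the (east, north) offset in metres of (lat, lon) from (ref_lat, ref_lon).

    Equirectangular approximation: treats the Earth as locally flat, which is
    adequate over a few hundred metres but not over long distances.
    """
    d_lat = math.radians(lat - ref_lat)
    d_lon = math.radians(lon - ref_lon)
    # A degree of longitude shrinks with latitude, so scale by cos(latitude).
    mean_lat = math.radians((lat + ref_lat) / 2.0)
    east = EARTH_RADIUS_M * d_lon * math.cos(mean_lat)
    north = EARTH_RADIUS_M * d_lat
    return east, north


# Hypothetical example: an object 0.001 degrees of latitude north of the user.
east, north = gps_to_local_offset(51.530, -0.035, 51.531, -0.035)
print(f"east={east:.1f} m, north={north:.1f} m")
```

A shift of 0.001 degrees of latitude comes out at roughly 111 meters, which puts the stated 10 to 20 meter GPS error in perspective: it is about a tenth of that displacement.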

The major issue is that it doesn't take the environment into account. This is not specific to this code but stems from the lack of AI tools that would enable an understanding of the surroundings. Overcoming those graphical glitches would require powerful Artificial Intelligence applications that are not yet accessible to a broader audience.

Graphical elements

Different objects are scattered at the positions of the impacts. Each of them signals a location to the user and gives a sense of the time elapsed between bombings.

When running into a burning fire, one will understand that an explosion has happened at this location within the past 3 hours.

If the user sees smoke, the explosion happened earlier, between 3 and 5 hours ago.

For the explosions that are about to happen, a timer runs live, counting down the time remaining before the impact.

These animated objects were made using the add-on package “Particle elements” in Unity.
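The rules above amount to choosing a marker from the time elapsed since an impact. The following is a minimal sketch of that selection, not the app's Unity script. Two points are assumptions not stated in the text: impacts more than 5 hours old show nothing, and the window during which a countdown is shown (`timer_window`, one hour by default here) is a placeholder value.

```python
from datetime import datetime, timedelta
from enum import Enum


class Marker(Enum):
    NONE = "none"
    TIMER = "timer"  # impact still to come: show a countdown
    FIRE = "fire"    # impact within the past 3 hours
    SMOKE = "smoke"  # impact between 3 and 5 hours ago


FIRE_WINDOW = timedelta(hours=3)
SMOKE_WINDOW = timedelta(hours=5)


def marker_for(impact_time: datetime, now: datetime,
               timer_window: timedelta = timedelta(hours=1)) -> Marker:
    """Pick the marker to display for one impact at the current time."""
    elapsed = now - impact_time
    if elapsed < timedelta(0):
        # Impact lies in the future: show a countdown only if it is close enough.
        return Marker.TIMER if -elapsed <= timer_window else Marker.NONE
    if elapsed < FIRE_WINDOW:
        return Marker.FIRE
    if elapsed < SMOKE_WINDOW:
        return Marker.SMOKE
    # Hypothetical choice: older impacts are no longer marked.
    return Marker.NONE
```

Keeping the thresholds as named constants would make it easy to add further states, for instance the different explosion types mentioned among the limits below.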

When the user comes within 50 meters of a high-risk zone, one that has just been impacted or will be in the coming hour, a filter modifies the appearance of the environment. This is meant to draw the user's attention and contribute to the spatial narration through a visual trigger.
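The trigger for the filter can be expressed as a geofence test: is the user within 50 meters of an impact that is recent or imminent? The sketch below uses the haversine great-circle distance on latitude/longitude in degrees. It is an illustration, not the app's implementation; in particular, reading "just been impacted" as "within the past hour" (`RISK_WINDOW`) is an assumption.

```python
import math
from datetime import datetime, timedelta

EARTH_RADIUS_M = 6_371_000.0
ALERT_RADIUS_M = 50.0             # radius stated in the text
RISK_WINDOW = timedelta(hours=1)  # assumption: "just impacted" or "in the coming hour"


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points (spherical Earth)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def in_high_risk_zone(user_lat, user_lon, impacts, now: datetime) -> bool:
    """True if any impact within RISK_WINDOW of `now` lies within ALERT_RADIUS_M.

    `impacts` is an iterable of (lat, lon, impact_time) tuples.
    """
    for lat, lon, impact_time in impacts:
        recent_or_imminent = abs(now - impact_time) <= RISK_WINDOW
        if recent_or_imminent and haversine_m(user_lat, user_lon, lat, lon) <= ALERT_RADIUS_M:
            return True
    return False
```

Given the 10 to 20 meter GPS error noted earlier, a 50 meter radius leaves some margin, although the filter may still switch on or off a few meters away from where it should.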

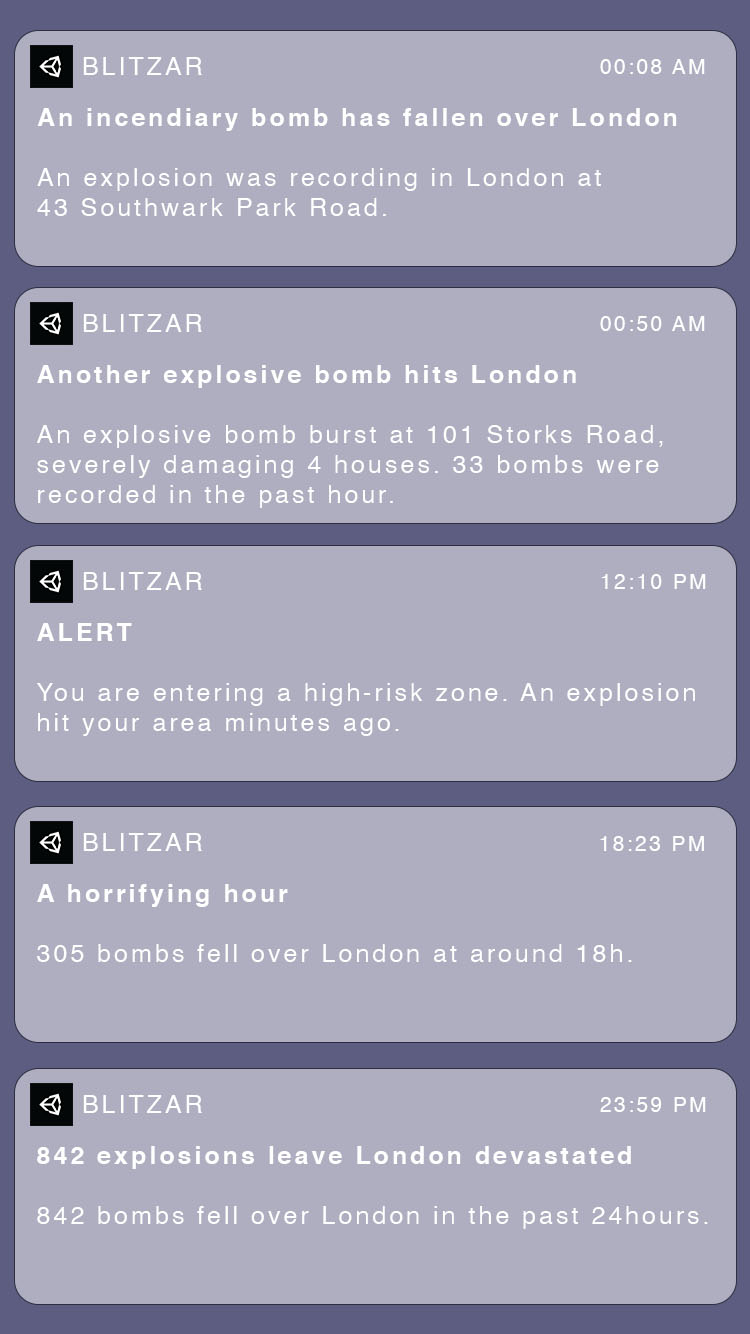

Notifications

Once the application is activated, the user receives notifications. They start appearing at 00:08 the following night, alerting them to the first recorded explosion.

The notifications relate to the events, giving a real-time feel of the rhythm of the explosions; when the app is open, they give information relative to what the user is perceiving in space. This is achieved through a script in Unity that detects the GPS position of the phone.
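The text does not detail how notifications are scheduled, so the following is only a sketch of the time mapping under stated assumptions. Each historical event is represented by its time of day, and the replay is anchored to the date of the next 00:08 after activation. The function names and the event list are hypothetical, and the actual delivery would go through the platform's local notification system, which is not shown.

```python
from datetime import date, datetime, time, timedelta

FIRST_EVENT = time(0, 8)  # first recorded explosion, per the text


def replay_date(activated_at: datetime) -> date:
    """Date of the first 00:08 strictly after activation."""
    candidate = datetime.combine(activated_at.date(), FIRST_EVENT)
    if candidate <= activated_at:
        candidate += timedelta(days=1)
    return candidate.date()


def notification_schedule(activated_at: datetime, event_times: list[time]) -> list[datetime]:
    """Map historical times of day onto present-day datetimes, in order."""
    day = replay_date(activated_at)
    return sorted(datetime.combine(day, t) for t in event_times)
```

Anchoring the whole replay to a single date keeps the intervals between notifications identical to the intervals between the recorded explosions, which is what conveys their rhythm.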

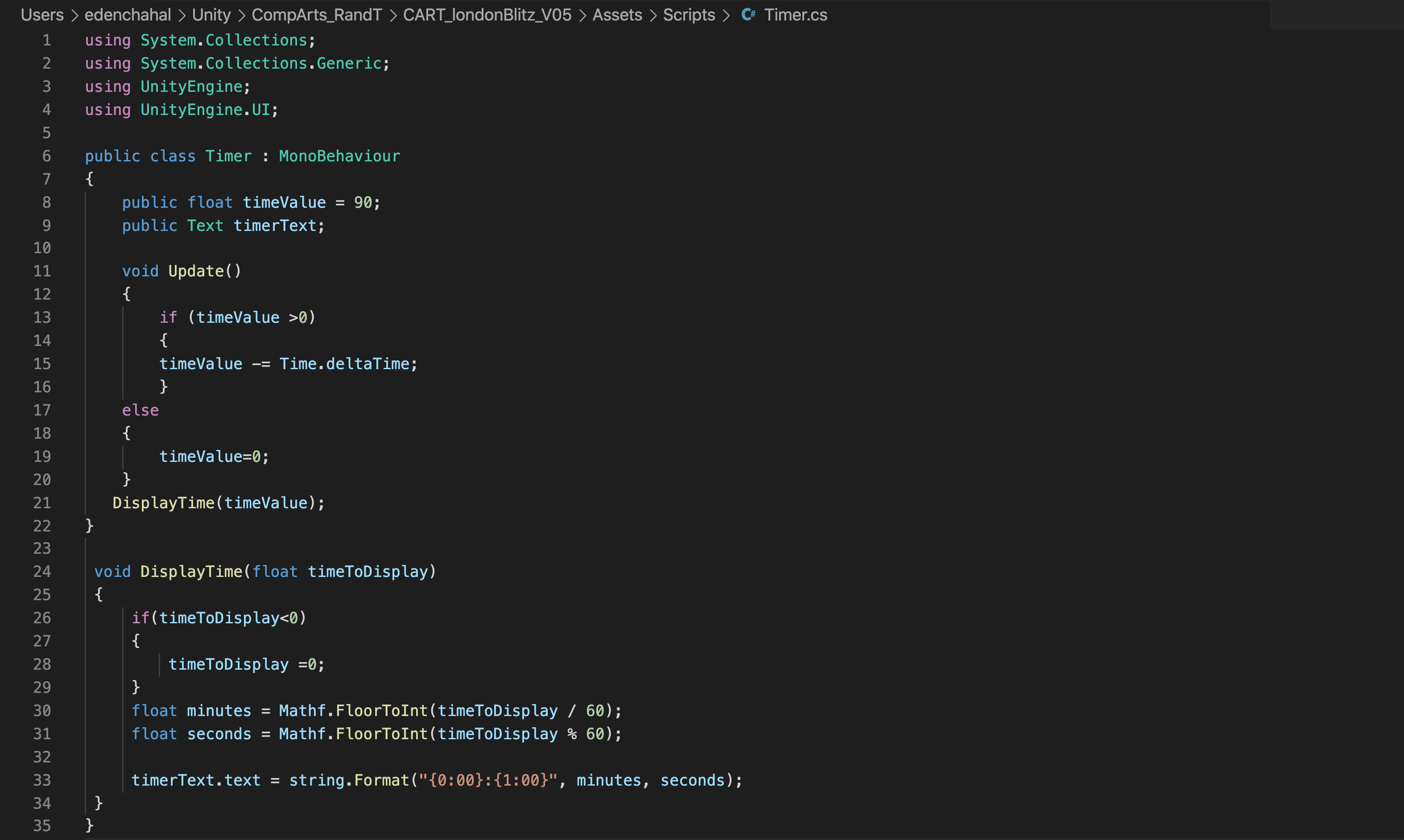

A time-based experience

In addition to the spatial dimension of the experience, this medium can convey a sense of time, which could not be achieved through a map or a text.

This is implemented through the notifications, but also through the timers that can be encountered during walks, each of which has a script attached that counts down the time remaining before a blast.

It is also embedded in the fire and smoke objects as mentioned previously.

The aggregation of those elements contributes to building a sense of the elapsed time between bombings for the user.
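The countdown attached to each timer boils down to formatting the remaining time. A minimal sketch follows, assuming the label is recomputed on every update and clamped at zero once the impact time has passed; the function name and the HH:MM:SS format are illustrative choices, not taken from the app.

```python
from datetime import datetime, timedelta


def countdown_label(impact_time: datetime, now: datetime) -> str:
    """Format the time left before an impact as HH:MM:SS (never negative)."""
    remaining = max(impact_time - now, timedelta(0))
    total = int(remaining.total_seconds())  # drops sub-second remainder
    hours, rest = divmod(total, 3600)
    minutes, seconds = divmod(rest, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}"
```

Clamping at zero avoids negative counts between the moment of impact and the moment the timer is swapped for a fire object.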

Commemorative blue plaques

In addition to the application, an experiment interacting with the physical environment was made. When a commemorative Blue Plaque is encountered, it can be scanned and will expand, giving more information in the form of photographs and text. This was achieved using the AR Foundation package with ARKit and AR image tracking.

This is an example of AR that uses a marker, here the blue plaque, as opposed to the one used for the rest of the app that doesn’t require a graphical element to be triggered.

Limits and how to go further

- The graphical aspect of these elements was not satisfactory in my opinion. It is a literal interpretation, aiming towards a realistic representation, whereas I would have liked to develop a visual language specific to the app. Nevertheless, the technical implementation was difficult to achieve, and I prioritised it, to provide a proof of concept, over the look of the animations.

- Another improvement that wasn't explored and could contribute to the narration would be spatial sound. It would use the user's position to adjust the intensity of sound, possibly leading them to a point of interest without giving specific directions or relying solely on visuals.

- As stated previously, the accuracy of the GPS is limited, and the app doesn't incorporate an AI technology that would allow it to understand the environment. This can be misleading, as objects can appear on top of buildings, cars, or people passing by.

- Another important step would be to find a way to scale up the app computationally, instead of manually entering the GPS coordinates and attaching a timer to each object, which wouldn't be efficient if the app were to be expanded to the whole city of London.

- A wider variety of graphics could give more information about the types of explosions which aren’t detailed in this demonstration.
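One way to approach the scaling problem above is to generate the objects from the dataset instead of entering them by hand. The sketch below parses events from CSV text. It is hypothetical: the column names `time`, `lat`, `lon` and `type` are assumptions, and since the London Fire Brigade records describe locations as text, coordinates would first have to be geocoded or taken from a source such as the bomb census maps.

```python
import csv
import io
from dataclasses import dataclass
from datetime import time


@dataclass
class BombEvent:
    time_of_day: time
    lat: float
    lon: float
    bomb_type: str


def load_events(csv_text: str) -> list[BombEvent]:
    """Parse events from CSV with hypothetical columns time (HH:MM), lat, lon, type."""
    events = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        hh, mm = (int(part) for part in row["time"].split(":"))
        events.append(BombEvent(
            time_of_day=time(hh, mm),
            lat=float(row["lat"]),
            lon=float(row["lon"]),
            bomb_type=row["type"].strip(),
        ))
    # Chronological order makes it easy to schedule timers and notifications.
    events.sort(key=lambda e: e.time_of_day)
    return events
```

Each parsed event could then be instantiated at its coordinates with its marker and timer derived from `time_of_day`, so extending the experience would mean adding rows rather than placing objects manually. Keeping `bomb_type` would also support a wider variety of graphics per explosion type.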

The city through the eyes of Augmented Reality, a shift of representation and perception

Introduction

French philosopher Michel Serres points out that in the world we live in, technology hasn't reduced distances; it has cancelled them. This research intends to explore how we could have different experiences of space while physically being in a shared one.

Augmented and mixed reality could modify our perception and become extensions of our senses in a seamless way. The condition of sharing a moment might no longer be related to standing in the same physical space. What effects could that induce? If AR were democratised, could it replace the digital objects that are part of our intimate environments? Those objects have been the mobile receptacles of our digital lives; what if the next interface was our physical environment? Could our cities become an interface for augmented reality? How would we build and plan cities within this new paradigm? If it came to merge seamlessly into our bodies and our everyday life, in what ways would it affect our interaction with the city?

“The most profound technologies are those that disappear. They weave themselves into the fabric of everyday life until they are indistinguishable from it.”

With this sentence, Mark Weiser opened his article “The Computer for the 21st Century” in Scientific American, in September 1991. He was a computer scientist known for his role as CTO (Chief Technology Officer) of Xerox PARC (Palo Alto Research Center) and for his work on ubiquitous computing, a term that he coined in 1988.

Coming from computer science, he draws an analogy with writing, which he refers to as perhaps the first “information technology”: it introduced a representation of spoken language and is now ubiquitous, “a constant background presence in industrialized countries”.

According to him, the key lies in the fact that it doesn't require our “active attention” and can be absorbed at a glance. The aim of computing would be to reach the same level of literacy, leading populations to use it without being aware of it.

The article explicitly mentions VR, but not AR, although its description of the desired achievement of computing applies to what we now know as Augmented Reality.

The article gives an extensive insight into strategies and aims that we have seen spread and develop widely over the past thirty years. What we consider new technologies changing our behaviors today already existed decades ago, and were being studied and directed within those research labs. That should invite us to consider the research undertaken by technology companies in 2021 without dismissing it as purely commercial announcements.

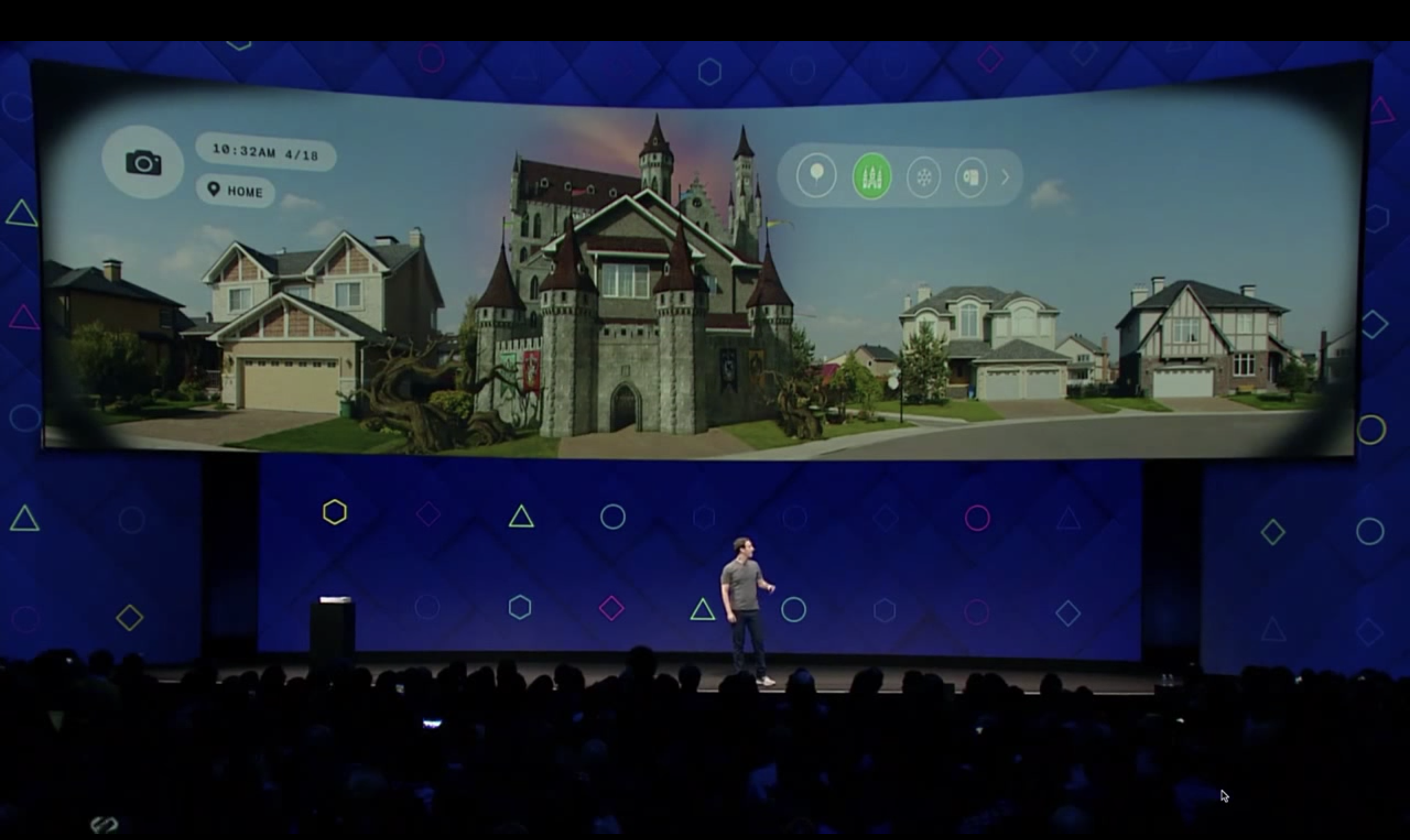

Learning from Facebook?

In line with other leading tech giants such as Google and Apple, Facebook has invested massively in research and development for VR and AR. In 2017, a fifth of its employees were dedicated to this sector. The same year, at the annual F8 conference, CEO Mark Zuckerberg presented his vision of what our built environment could evolve towards as his company's projects expand.

He describes the disappearance of the ecosystem of objects that surrounds us. Appliances such as televisions, or even mobile phones, would lose their utility once AR glasses make every experience tailored.

This would also be true for cities and architecture. Environments would be customizable, limiting their visual and cultural dimension in the physical world.

As the limits of the interfaces we use today, the screens between our physical and digital worlds, fade away, the changes would spread into all aspects of our lives, blurring the boundary between the two dimensions.

It would also be a major shift in the way humans come together, and in what shared, common spaces signify. If Facebook, like others, is looking at how to get closer to ubiquity by enabling seamless shared experiences at a distance, then being physically together might not mean sharing the same perception of the environment. This is already true without the digital dimension, but it could be exponentially enhanced.

The vision deployed at Facebook allows a flexible, fluid environment. It could open up artistic expression and even, unintentionally, allow people to choose what they want to see in the city they live in, free from oppressive signs of power.

At the same time, if our realities no longer primarily meet in the physical world, the power that crystallizes in public space could also be reinvented and might change hands. To whose benefit is yet to be defined, although the investments and research efforts of the companies previously mentioned give a direction.

If we can choose our realities in a customizable, fluid manner, would we still fight to change the manifestations of power in the physical world? If this technology is likely to expand, possibly at a fast pace, to what extent is it desirable?

Nothing left to burn: Cities as the place of contestation of power, and common social struggles.

In the book Rebel Cities, David Harvey describes how cities have been the receptacle of social changes, revolutionary politics, and utopian projections. He also highlights the extent to which they are the center of “capital accumulation and the front line for struggles over who controls access to urban resources and who dictates the quality and organization of daily life.” Through his reflection he asks who holds the power, “the financiers and developers or the people?”, and concludes that cities are the place of both capital and class struggles.

The possible changes evoked in this text could modify those mechanisms. The disappearance of physicality would modify the ways of confronting it collectively.

The opacity of possible systems to come would also reserve disruption to a very limited part of the population, one with access to technical languages and literacy, to financial means, and to time, in unprecedented proportions.

In his book “La Dynamique de la Révolte” (The dynamics of Revolt), writer Eric Hazan analyses 220 years of uprisings, describing how in revolutionary history, the biggest changes don’t come from the rise of political ideas but from the rage of the people. Where does rage burst in the cities of Facebook? How do you put pressure on those who hold this power?

Conclusion

The research was intended as an observation on how technologies have been exponentially merging into our daily lives, into our bodies and senses, redefining the ways in which we build common spaces, create and maintain a social structure.

The radicality of the subject makes critical research appear fundamental. It seems all the more relevant to conduct it through practice, by using and building experiences with the tools we are reflecting on. Working alongside computation, art can act as a revealing agent, highlighting the possible human and socio-political changes induced.

Inviting current actors and makers of cities, such as architects, planners and engineers, to the table could add complexity to the discussion. Without holding up the current system as an ideal, a balance of possible futures should include more varied propositions, built collectively, that might challenge and disrupt powerful technology giants.

Bibliography

On the imageability of cities and the construction of their representation

- Lynch, Kevin. The Image of the City. Cambridge, Mass.: The MIT Press, 1960.

Ubiquitous computing and the redefinition of HCI

- Weiser, Mark. The Computer for the 21st Century, Scientific American, September 1991.

Perception of space and human senses

- Serres, Michel. Les Cinq Sens (The Five Senses). Paris, France: Hachette Pluriel Editions, 2014.

- Magritte, René. Les mots et les images (The Words and the Images). Brussels, Belgium: Espace Nord, 2017.

- Bachelard, Gaston. La Poétique de l’Espace (The Poetics of Space). Paris, France: PUF, 2012.

Focused on the medium of Augmented Reality

- Schmalstieg, Dieter, and Höllerer, Tobias. Augmented Reality: Principles and Practice. Boston, Massachusetts: Addison-Wesley, 2016.

- Aukstakalnis, Steve. Practical Augmented Reality: A Guide to the Technologies, Applications, and Human Factors for AR and VR, 2017.

- Benford, Steve. Performing Mixed Reality. Cambridge, Mass: MIT Press, 2011.

- Zuckerberg, Mark. Conference at the F8 summit, 2017.

Possible changes that AR/XR could induce to the practice of architecture and more widely to the way we conceive and build our physical environment.

- Hopkins, Owen. Augmented reality heralds the abolition of architectural practice as we know it, 2017, Dezeen, https://www.dezeen.com/2017/01/31/owen-hopkins-opinion-augmented-reality-heralds-abolition-current-architecture-practice/. Accessed 18th of February 2021.

On public space and the materialisation of power.

- Harvey, David. Rebel Cities: From the Right to the City to the Urban Revolution. New York: Verso, 2012.

- Hazan, Eric. La Dynamique de la révolte (The Dynamics of Revolt). Paris: La Fabrique éditions, 2015.

- Lefebvre, Henri. La Production de l’espace (The Production of Space). Paris, France, 1974.

- Lefebvre, Henri. Le Droit à la Ville (The Right to the City). Paris, France: Economica, 2009 (3rd edition).

References of art practices making use of Augmented/Mixed Reality by bringing situated additional layers to our direct environment:

- Studio Above&Below

Digital Atmosphere “The piece looks at how technology and art can illuminate the quality of our air, usually invisible to the naked eye, and bring us an urgent step closer to a sustainable, zero-emissions future.” “(...) Atmo Sensor picks up the invisible change of air quality of the immediate environment, which is translated into an evocative visual simulation, visible to the viewer through the AR headset.” https://www.studioaboveandbelow.com/work/digital-atmosphere

- BLAM, History Bites

https://blamhistorybites.org/

History Bites is an AR experience by BLAM, a non-profit organisation based in London. It allows users to visualise sculptures from Black history.

- Olafur Eliasson

The Wunderkammer project. Wunderkammer is German for “cabinet of curiosities”. Danish artist Olafur Eliasson developed this AR project, which places realistic-looking visuals of animals, objects and weather phenomena into one’s home.

- Jakob Kudsk Steensen

His work focuses on Augmented Reality and emotion; I would look in more detail at the way he uses storytelling to create a sense of immersion in other worlds.

- Catharsis, Serpentine Gallery London.

- The Deep Listener, Serpentine Gallery London.

- Yasaman Sheri

Works on how AR allows us to sense beyond our human capabilities.

References specific to the making of the artefact application and data on the city of London under the Blitz.

Mapping the WW2 Bomb Census

http://bombsight.org/#14/51.5301/-0.0070

24 hours of Blitz Sept 7th 1940

Guardian Data Store & London Fire Brigade Records

“Data previously transcribed from the London Metropolitan Archives from the London Fire Brigade Records, recording: time bomb fell, location, type of Bomb and Damage or other details. Made available via the Guardian public data store.”

https://docs.google.com/spreadsheet/ccc?key=0AonYZs4MzlZbdGZyNDJtd0d0ZFhvRTBxOFAyMkRFeUE&hl=en

Weekly Bomb Census for 7th to 14th October 1940

The National Archives

“This background map records the locations of bombs which fell shown in different colours to show different days of the week.

For the period of the Blitz, there are 559 weekly map sheets for region 5 alone.

Due to time and cost constraints the project we are using weekly bomb plots for the first week of the reporting period- for Central and East London (7th to 14th October 1940) (HO193 / 01).This will provide an overview of daily intensity and proof of concept to hopefully justify the remaining weekly maps to be integrated.”

Add-ons, packages and scripts

Unity3D with AR Foundation; ARKit; AR Image Tracker; Particle System Package

AR+GPS Location by Daniel Fortes