FaceTime

FaceTime is an interactive timepiece that incorporates the viewer's face into the time display. It is built using OpenFrameworks and uses face tracking on live webcam video.

produced by: Julian Burgess

Introduction

FaceTime has two scenes. The first shows a black screen with the current time drawn as a series of straight lines connected to a bright red mouth shape. The mouth follows the viewer's mouth, and when they open it very wide the lines become thicker and brighter. The second scene tracks the viewer's face, crops the image around it, and redraws this crop on screen multiple times with a delay, as well as behind each numeral of the clock display.
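Scene two's delayed copies can be sketched as a fixed-size buffer of recent face crops. This is an illustrative C++ sketch, not the project's code: `FrameDelay` is a hypothetical name, and `Frame` stands in for whatever image type holds a crop (such as an `ofImage`).

```cpp
#include <cassert>
#include <cstddef>
#include <deque>

// Hypothetical helper: keeps the most recent `capacity` frames so older
// face crops can be redrawn alongside newer ones. `Frame` stands in for
// the scene's image type (for example an ofImage of the cropped face).
template <typename Frame>
class FrameDelay {
public:
    explicit FrameDelay(std::size_t capacity) : capacity_(capacity) {
        assert(capacity_ > 0);
    }

    // Store the newest crop, dropping the oldest once the buffer is full.
    void push(const Frame& frame) {
        frames_.push_back(frame);
        if (frames_.size() > capacity_) {
            frames_.pop_front();
        }
    }

    // Frame from `delay` pushes ago (0 = newest). While the buffer is
    // still filling up, larger delays clamp to the oldest stored frame.
    const Frame& delayed(std::size_t delay) const {
        assert(!frames_.empty());
        if (delay >= frames_.size()) {
            delay = frames_.size() - 1;
        }
        return frames_[frames_.size() - 1 - delay];
    }

    std::size_t size() const { return frames_.size(); }

private:
    std::size_t capacity_;
    std::deque<Frame> frames_;
};
```

Each frame the scene would `push` the new crop and then draw `delayed(0)`, `delayed(10)`, `delayed(20)` and so on at different positions; the capacity bounds how far back the echoes can reach.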

Concept and background research

The work of Zach Lieberman inspired me greatly. He has produced a large body of work around face tracking, in which the viewer's face, or parts of it, are remixed and altered in real time, and some of these pieces have recently been released on Instagram as filters that are now very popular. I am also really interested in time as a concept, in how we relate to it, and in timepieces that make the passing of time an integral part of the artwork. I wanted this work to bring those ideas together. The core of my idea was that as people viewed the timepiece, they would become integrated into it. Ideally, the time spent looking at the work would be reflected back in how long their face continues to appear in it after they have left.

Technical

This project was built using OpenFrameworks. I initially used the ofxFaceTracker addon but switched to ofxFaceTracker2, as I found it performed better. ofxFaceTracker2 can also recognise more than one face at a time, but I didn't end up using this feature, and it had a severe performance impact when I tried it out. I also applied CLAHE (Contrast Limited Adaptive Histogram Equalization) to improve the contrast of the image before running face detection. I learned about CLAHE from a helpful blog post by Zach Lieberman about one of his face-tracking projects. Adjusting contrast is especially important if the work is installed somewhere with natural lighting, since the contrast of faces can vary enormously with lighting conditions.
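The contrast step can be illustrated with a simplified version of what CLAHE does. This is a sketch under stated assumptions, not the adapted gist code: it clips and redistributes the histogram of an 8-bit greyscale image treated as a single tile, whereas full CLAHE (for example OpenCV's `cv::createCLAHE`) works on a grid of tiles and interpolates between their mappings. `equaliseContrastLimited` and `clipFactor` are hypothetical names.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Contrast-limited histogram equalisation over the whole image (one tile).
// Full CLAHE runs this per tile and blends neighbouring tiles' lookup tables.
std::vector<std::uint8_t> equaliseContrastLimited(
    const std::vector<std::uint8_t>& gray, double clipFactor) {
    assert(!gray.empty());
    const std::size_t bins = 256;
    std::array<std::size_t, 256> hist{};
    for (std::uint8_t v : gray) ++hist[v];

    // Clip limit in pixel counts: clipFactor times the average bin height.
    const std::size_t limit = std::max<std::size_t>(
        1, static_cast<std::size_t>(clipFactor * gray.size() / bins));

    // Cut every bin down to the limit and count the excess pixels.
    std::size_t excess = 0;
    for (auto& h : hist) {
        if (h > limit) {
            excess += h - limit;
            h = limit;
        }
    }

    // Spread the excess evenly so the histogram total is unchanged.
    const std::size_t perBin = excess / bins;
    const std::size_t remainder = excess % bins;
    for (std::size_t i = 0; i < bins; ++i) {
        hist[i] += perBin + (i < remainder ? 1 : 0);
    }

    // Cumulative histogram becomes a lookup table from old to new grey level.
    std::array<std::uint8_t, 256> lut{};
    std::size_t cdf = 0;
    for (std::size_t i = 0; i < bins; ++i) {
        cdf += hist[i];
        lut[i] = static_cast<std::uint8_t>(
            std::lround(255.0 * cdf / gray.size()));
    }

    std::vector<std::uint8_t> out(gray.size());
    std::transform(gray.begin(), gray.end(), out.begin(),
                   [&](std::uint8_t v) { return lut[v]; });
    return out;
}
```

A large `clipFactor` behaves like plain histogram equalisation; a small one limits how strongly a narrow band of grey levels, such as a dimly lit face, gets stretched, which keeps noise from being amplified.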

I used the ofxDatGui addon extensively to control variables in the code at run time. This was really helpful because compiling takes quite a long time, so adjusting values live made tuning and experimenting much simpler. ofxDatGui also supports folders, which I used by passing a reference to a folder to each scene on startup; this made it much easier to work on a single scene or one part of the code.
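Passing each scene a reference to its own group of GUI values can be sketched with plain C++ types. `ParamFolder` is a hypothetical stand-in for an ofxDatGui folder (no ofxDatGui calls are used here), and the scene and app class names are illustrative only.

```cpp
#include <map>
#include <string>

// Hypothetical stand-in for one GUI folder: named float values that the
// GUI would edit at run time.
struct ParamFolder {
    std::map<std::string, float> values;

    float get(const std::string& name, float fallback) const {
        auto it = values.find(name);
        return it == values.end() ? fallback : it->second;
    }
};

// Each scene stores a reference to its folder, so it always reads the
// current values without copying them. The folder is not owned by the
// scene and must outlive it.
class MouthClockScene {
public:
    explicit MouthClockScene(ParamFolder& params) : params_(params) {}
    float lineWidth() const { return params_.get("lineWidth", 1.0f); }

private:
    ParamFolder& params_;
};

class FaceDelayScene {
public:
    explicit FaceDelayScene(ParamFolder& params) : params_(params) {}
    int delayFrames() const {
        return static_cast<int>(params_.get("delayFrames", 10.0f));
    }

private:
    ParamFolder& params_;
};

// The app owns the folders. They are declared before the scenes, so they
// are constructed first and destroyed last.
struct App {
    App() = default;
    // Copying would leave the copy's scenes pointing at the original's folders.
    App(const App&) = delete;
    App& operator=(const App&) = delete;

    ParamFolder mouthParams;
    ParamFolder faceParams;
    MouthClockScene mouthScene{mouthParams};
    FaceDelayScene faceScene{faceParams};
};
```

Because the scenes hold references, a value changed through the GUI is visible to the scene immediately; the trade-off is that the folders must outlive the scenes, which declaring them first in `App` guarantees.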

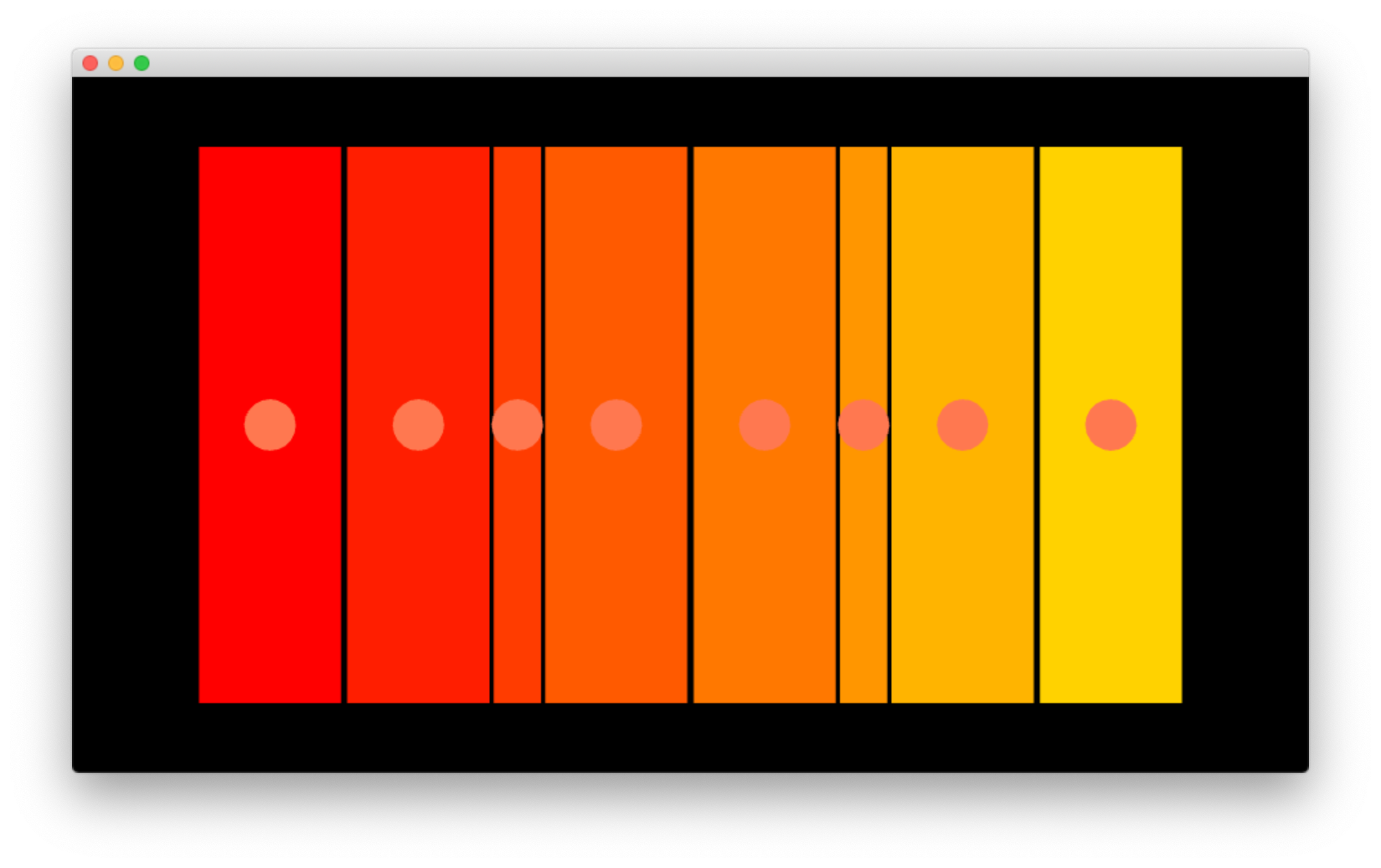

I found writing the code quite challenging, as I'm not very familiar with C++ and how it differs from JavaScript, which I use much more. I spent a lot of time getting the clock numerals to align so that they didn't jump around, since a "1" is narrower than an "8". In the end, I made equal-sized blocks with each numeral centred in its block, plus an adjustable width for the block containing the colon (pictured below). It was a huge amount of coding for something that looks very simple, so perhaps I missed a trick, or there is a library that could have done most of this for me.
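The equal-width block layout can be expressed as a small function that returns the x position of each character. This is a sketch under assumptions: `layoutClock` is a hypothetical helper, and `glyphWidth` is whatever measures a single character (in OpenFrameworks it might wrap `ofTrueTypeFont::stringWidth`).

```cpp
#include <cassert>
#include <string>
#include <vector>

// Fixed-pitch layout for a clock string such as "12:08:45": every digit
// gets a block of the same width and is centred in it, while colons get
// their own (usually narrower) block. glyphWidth is a hypothetical
// callable returning one character's rendered width.
template <typename GlyphWidth>
std::vector<float> layoutClock(const std::string& text, float digitBlock,
                               float colonBlock, GlyphWidth glyphWidth) {
    assert(digitBlock > 0.0f && colonBlock > 0.0f);
    std::vector<float> xs;  // left x of each glyph, starting from 0
    float cursor = 0.0f;
    for (char c : text) {
        const float block = (c == ':') ? colonBlock : digitBlock;
        // Centre the glyph in its block so a thin "1" doesn't shift its neighbours.
        xs.push_back(cursor + (block - glyphWidth(c)) / 2.0f);
        cursor += block;
    }
    return xs;
}
```

Setting `digitBlock` to the widest digit's width keeps every numeral fixed in place as the seconds tick over, and `colonBlock` plays the role of the adjustable colon width.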

I also had problems with the text in the mouth clock scene, where I based my code on the OrganicText example we developed in Term 1. The trouble was that the code for creating points measures the rendered width of each glyph, so again an "8" is wider than a "1", and I then wanted to render the same text again over the top. In the end, I stored the width of the "8" in the setup code and reused it, although it still needed a small offset that varied with screen size. I used an external Logitech webcam, as I found it had better resolution and contrast than the built-in iMac webcam.
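Caching a digit width once in setup, so that point generation and the overlaid text share the same spacing every frame, might look like the following. `DigitWidthCache` and the `measure` callback are hypothetical names. The approach described above stores the width of "8"; this sketch instead checks all ten digits, so it does not depend on which digit is widest in a given font.

```cpp
#include <algorithm>
#include <cassert>
#include <string>

// Measures digit widths once at setup and reuses the widest one, so the
// point-generation pass and the text drawn on top use identical spacing.
// `measure` is a hypothetical callable taking a std::string and returning
// its rendered width.
class DigitWidthCache {
public:
    template <typename Measure>
    void setup(Measure measure) {
        widest_ = 0.0f;
        // Check every digit rather than assuming which one is widest.
        for (char d = '0'; d <= '9'; ++d) {
            widest_ = std::max(widest_,
                               static_cast<float>(measure(std::string(1, d))));
        }
        assert(widest_ > 0.0f);
    }

    float width() const { return widest_; }

    // Left edge of the n-th character when all digits share the cached width.
    float xFor(int n, float originX) const { return originX + n * widest_; }

private:
    float widest_ = 0.0f;
};
```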

Future development

There's a lot more I could add to this work. I started building the ability to switch scenes, but only had time to implement two. Ideally, I would add many more scenes, with more dynamic variation within each one. I'd also like to vary the scene parameters with the time, so that the piece reflects when the person is viewing it: at night it would offer a different style of interaction from the daytime, and perhaps a different style in winter from summer.
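Choosing a style from the current time could start from the standard C++ time functions. This is a hypothetical sketch of the idea rather than implemented behaviour: the 07:00-19:00 daytime window and the northern-hemisphere season mapping are arbitrary assumptions, and `styleFor` and `currentStyle` are illustrative names.

```cpp
#include <ctime>

// Hypothetical style switch driven by the local clock.
enum class DayPart { Day, Night };
enum class Season { Winter, Spring, Summer, Autumn };

struct TimeStyle {
    DayPart dayPart;
    Season season;
};

TimeStyle styleFor(const std::tm& t) {
    // tm_hour is 0-23; 07:00-18:59 counts as daytime (an arbitrary cut-off).
    const DayPart part =
        (t.tm_hour >= 7 && t.tm_hour < 19) ? DayPart::Day : DayPart::Night;

    // tm_mon is 0-11 (0 = January); seasons assume the northern hemisphere.
    Season season;
    switch (t.tm_mon) {
        case 11: case 0: case 1: season = Season::Winter; break;
        case 2: case 3: case 4: season = Season::Spring; break;
        case 5: case 6: case 7: season = Season::Summer; break;
        default: season = Season::Autumn; break;
    }
    return {part, season};
}

// Style for the current local time. std::localtime returns a pointer to
// shared static storage, so the result is used immediately.
TimeStyle currentStyle() {
    const std::time_t now = std::time(nullptr);
    return styleFor(*std::localtime(&now));
}
```

Each scene could then read `currentStyle()` once per frame and pick colours, line weights or delay lengths from it.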

Self evaluation

Overall I was pleased with the work. At the exhibition, it was really enjoyable to watch people interact with the piece without knowing that I was the author, and to see how they understood and engaged with it. The mouth clock scene seemed more popular than the webcam delay scene, so I left it playing that scene most of the time. People understood the mode of interaction quickly, but the face tracking itself wasn't very reliable. Another library, OpenFace, might be more reliable, but it wasn't clear how easily I could integrate it with my existing OpenFrameworks code, so I didn't try it.

My code was quite well structured, but I still struggle with passing references around in C++, which limited the modularity of the code and made it harder to reuse code between scenes.

References

- ofxFaceTracker - https://github.com/kylemcdonald/ofxFaceTracker

- ofxFaceTracker2 - https://github.com/HalfdanJ/ofxFaceTracker2

- ofxDatGui - http://braitsch.github.io/ofxDatGui/

- CLAHE implementation code adapted from https://gist.github.com/gu-ma/eae2f72e740631a31b20eb8b2810c370