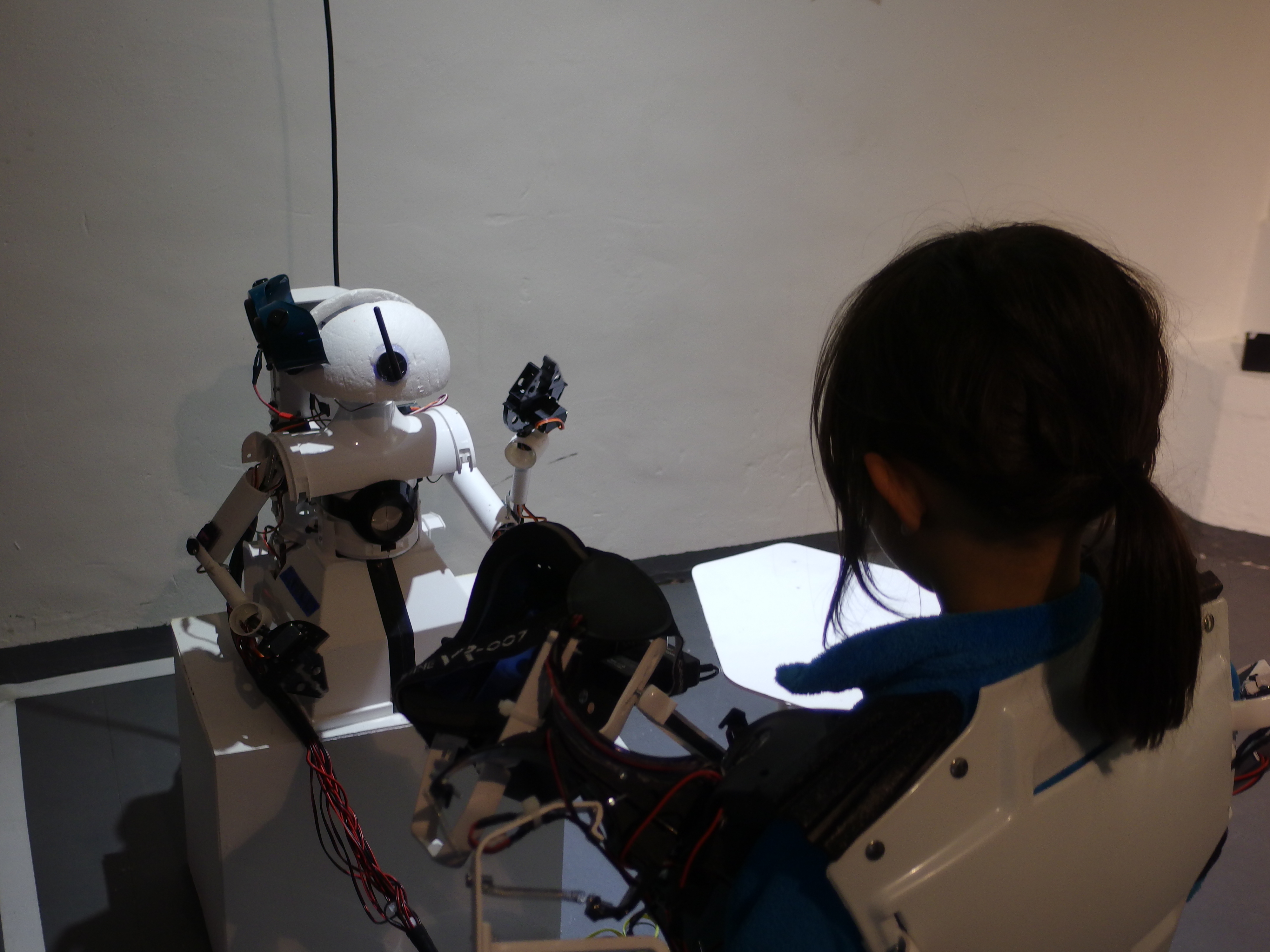

CH4RL13

CH4RL13 is a play on play, mimicry and transhumanism, reflecting both our dependency on technology and the de-personalization it brings. CH4RL13 is both a companion in play (as much technology is nowadays) and an exploration of disembodiment and embodiment.

produced by: Luis Rubim

Introduction

Through technology, CH4RL13 sets out to disembody the user while acting as both an active and a passive medium. It is active in that it is a medium through which the user interacts with the world in the present, and also a symbol and actualisation of a technology to come: it allows the user to transcend the human, even if only to a small extent, raising questions of identity in a technological world as the device absorbs the user. Its connection to the user via wires, symbolic of an umbilical cord, raises questions not only about our dependency on technology but also about our present and future in an increasingly technological society: we become technology and technology becomes us. CH4RL13 is passive in that the user becomes a spectator, and because one technology is encased in another (CH4RL13 will, for example, tweet photos of what it sees). It also alludes to the role of passivity in interaction, such as the constraints imposed on a user's physical actions so that the interaction can occur.

I. Concept and Research

CH4RL13 was born out of my own fascination with robots and their role in popular sci-fi culture, as well as contextual studies and papers on posthumanism and transhumanism, the latter of which is often seen as a vehicle for the former.

Having grown up in the 80s and 90s as an avid sci-fi fan, I was always fascinated by robots, which in the 80s were heavily featured on screen and had a strong companionship element to them. Then came the 90s, when the concept of fusing humans with machines gained more of a presence in sci-fi, while the transhumanist movement gained traction, becoming formalised at the end of that decade based on the work of transhumanists such as Max More, Natasha Vita-More, Nick Bostrom and Alexander Chislenko. In fact, Natasha Vita-More's Transhumanist Arts Manifesto was an integral part of the inspiration for this project, alongside other elements of the popular culture of the time, such as the game One Must Fall 2097, which approached transhumanist concepts in its storyline:

“(...)The attendants begin checking your suit. You can feel the small needles in the helmet pressing into your skull. Fifteen minutes to show time. You feel a slight burning sensation as the drugs which connect you to the super computer seep into your spine. A cute attendant leans over the bed and gives you a wink.

"Nighty night... Remember me when you're famous."

Your eyes begin to shut. You blink a few times, trying not to fight the medication. Finally, your eyes begin to close as you lose consciousness.

METAL! You can't believe the feeling! Your eyes open, but they're not your own. You look at your hand and flex it into a fist. You strike the fist against your opposite palm and the sound is like two trains smashing together. You realize you'll never get used to "jacking in," the feeling of power you get from suddenly becoming a few hundred tons of dangerous equipment.

"You there, kid?" says a voice inside your head.

You now hear several voices in the background,

"Physical attributes steady. All systems mark."

You turn your head over to the body lying on the bed ten meters away and almost 30 meters below.

You speak, your voice amplified a hundred times,

"I'm slice, Plug. Let's do some crushin'!"

It is no longer blood you feel pumping, but Synthoil. Your eyes now show you heat dissipation factors, metal strength, weapon power, damage scales, and some other figures so complicated you know to ignore them. Even though you no longer have blood, it feels like your pulse is climbing as the clock over the door nears 00:00.

"Zero hour. Let 'er roar!(...)"

- from the storyline script of One Must Fall 2097, Ryan Elam 1996

Other inspiration came from the “Robots” exhibition in the Science Museum and the “Into the Unknown” exhibition at the Barbican, both exploring robots and the world of the future.

Another central aspect of CH4RL13 is that it was made largely from found materials, namely plumbing materials. It is as much an exploration of transhumanist themes as it is an objet d'art, taking a page from Natasha Vita-More's Transhumanist Arts Manifesto as well as Buckminster Fuller's concepts of DIY culture, reflecting transhumanist initiatives through the transhumanist arts with the aim of problem-solving through creativity (which later became quite literally the case with the sensor vest).

CH4RL13 is also an object symbolising the actualisation of a technology to come and, intrinsically, our quest to break the boundaries of our fleshly bodies.

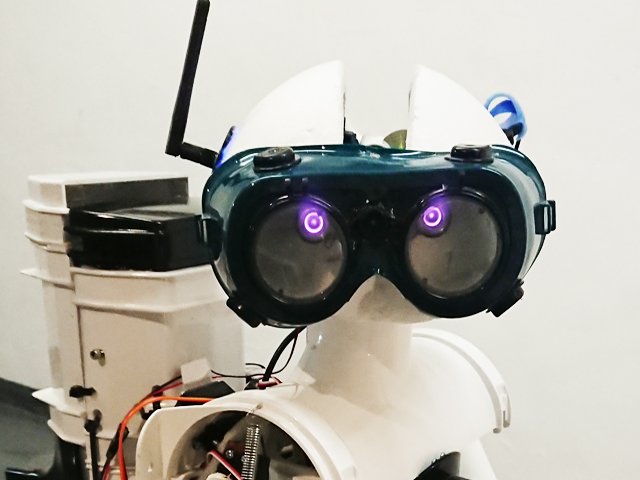

In terms of aesthetics, I aimed for CH4RL13 to look a little like a typical 1980s sci-fi movie robot.

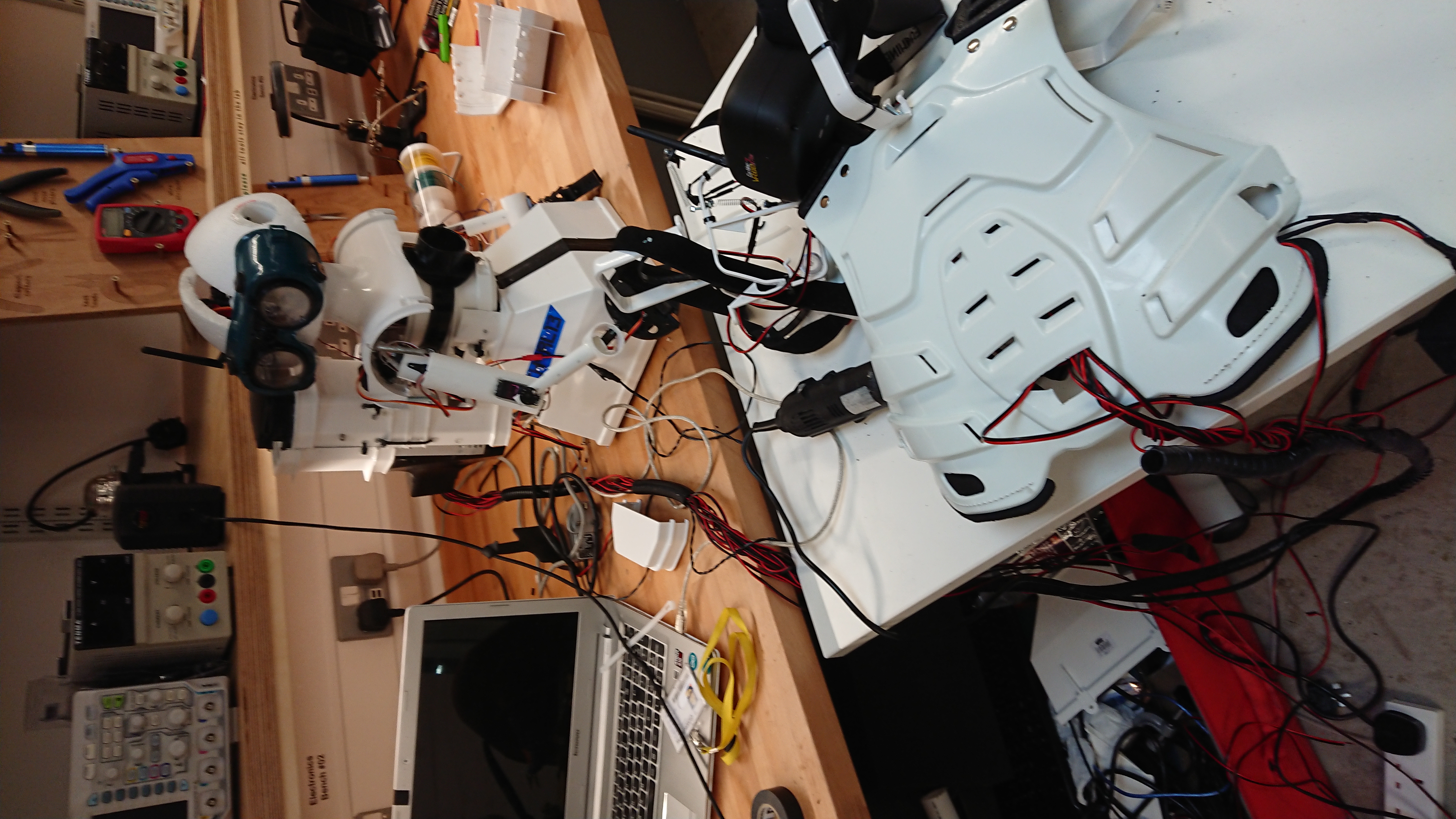

II. Development & Technical Details

IIa. CH4RL13 (the robot)

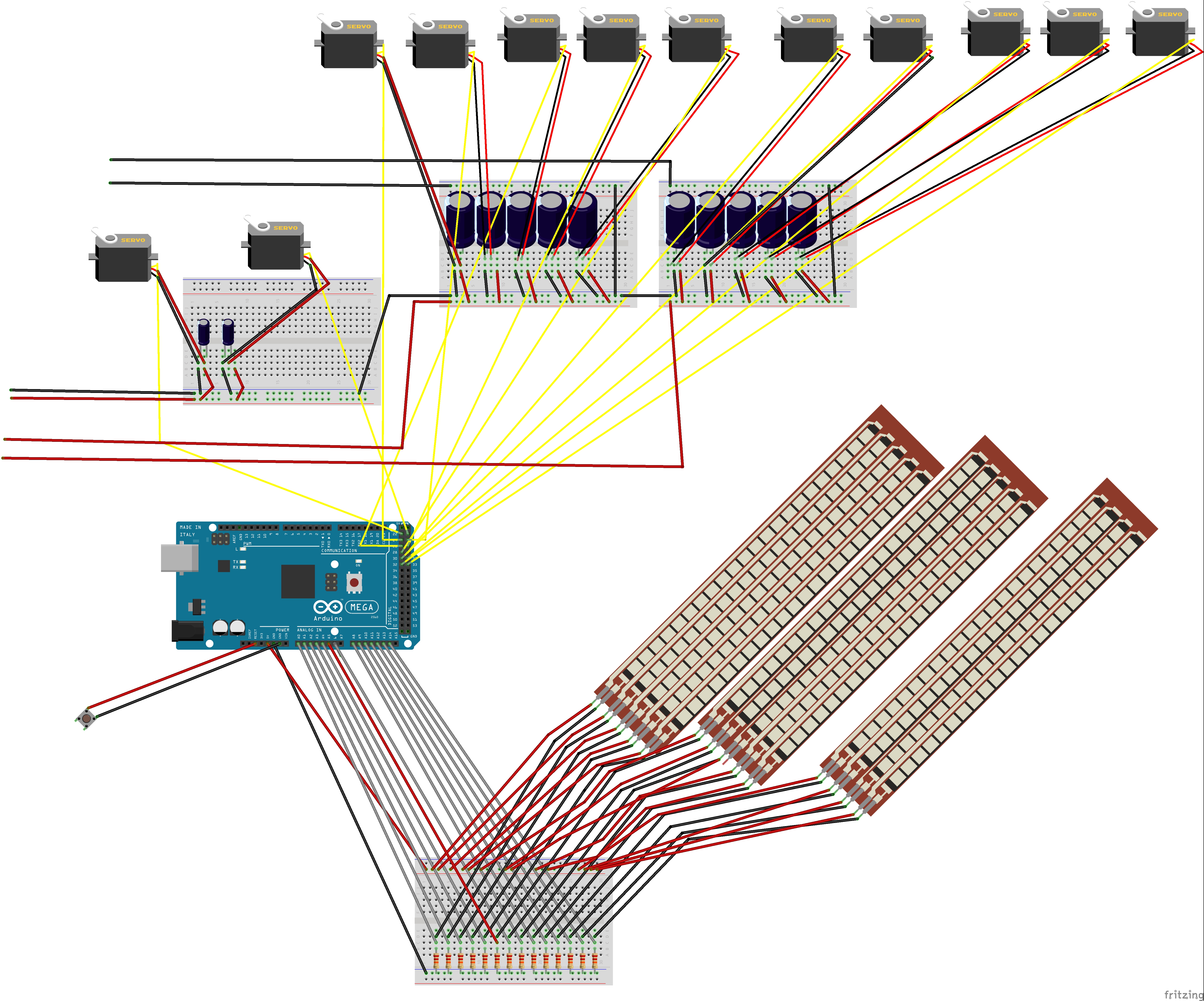

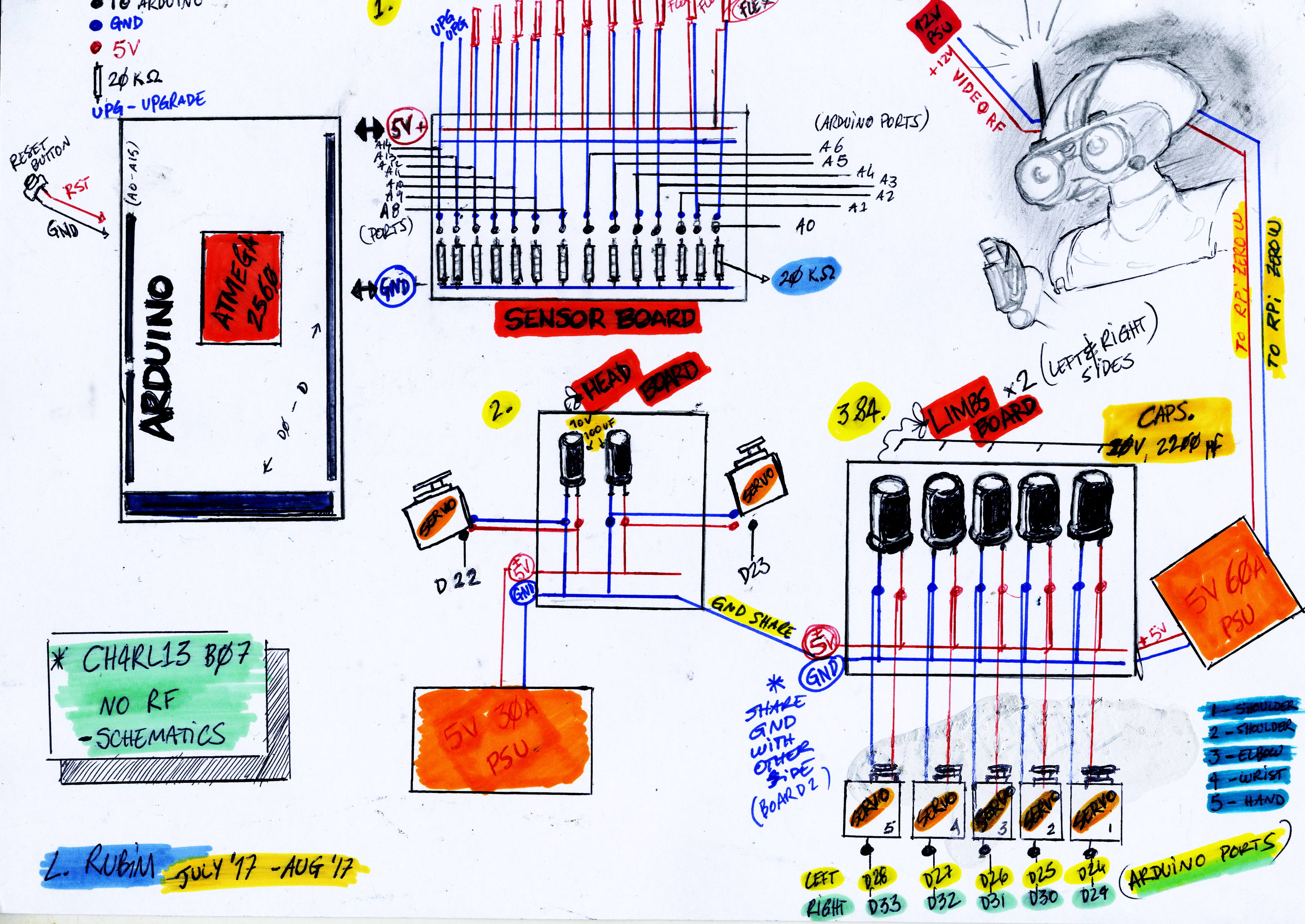

CH4RL13 currently uses simple mapping code to perform its movements. An Arduino reads values from homemade flex sensors installed on a wearable vest and maps them to servo positions. Only five off-the-shelf sensors are used, and only out of necessity, because of damage during testing. The homemade flex sensors are made of Velostat sandwiched between copper tape strips. Each is connected to a 20K Ohm resistor and read through its assigned analog port; the readings are then mapped to servo values, with upper and lower limits defined in the code. Due to the power requirements of the servos, two separate 5V power supply units power CH4RL13, one rated at 30A and one at 60A, with the more powerful unit driving the arms. Large 2200 µF capacitors are installed in the arm controller units and smaller 100 µF ones in the head unit. Vision and imaging are handled by a Raspberry Pi Zero running Python, with a bash script that boots straight to the IR camera used for the eyes.
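The mapping step can be sketched in Python to show the arithmetic the Arduino performs. This is a minimal sketch under stated assumptions, not the project's code: it assumes each flex sensor and its 20K Ohm resistor form a voltage divider with the sensor on the 5V side, a 10-bit ADC (0 to 1023, as on an Arduino Uno), and hypothetical calibration limits. `arduino_map` reproduces the integer formula behind Arduino's `map()`, and the result is clamped the way `constrain()` would clamp it.

```python
ADC_MAX = 1023      # 10-bit analogRead() range on boards such as the Arduino Uno (assumed)
R_FIXED = 20_000    # the 20K Ohm resistor paired with each flex sensor


def divider_reading(r_sensor: float, r_fixed: float = R_FIXED) -> int:
    """Approximate ADC count for an assumed divider: 5V -> sensor -> pin -> resistor -> GND."""
    return round(ADC_MAX * r_fixed / (r_sensor + r_fixed))


def arduino_map(x: int, in_min: int, in_max: int, out_min: int, out_max: int) -> int:
    """Integer re-mapping as done by Arduino's map(); C division truncates toward zero."""
    num = (x - in_min) * (out_max - out_min)
    den = in_max - in_min
    quotient = abs(num) // abs(den)
    if (num < 0) != (den < 0):
        quotient = -quotient
    return quotient + out_min


def servo_angle(raw: int, raw_min: int, raw_max: int,
                angle_min: int, angle_max: int) -> int:
    """Map a raw sensor reading to a servo angle, clamped to the servo's limits."""
    angle = arduino_map(raw, raw_min, raw_max, angle_min, angle_max)
    low, high = min(angle_min, angle_max), max(angle_min, angle_max)
    return max(low, min(high, angle))  # equivalent of constrain()


# Hypothetical calibration: raw readings 300..700 drive a servo between 20 and 160 degrees.
print(servo_angle(500, 300, 700, 20, 160))   # -> 90
print(servo_angle(1000, 300, 700, 20, 160))  # -> 160 (clamped at the upper limit)
```

Lower sensor resistance yields a higher reading in this wiring, and swapping `angle_min` and `angle_max` reverses a servo's direction, which may help when the same code drives mirrored limbs.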

CH4RL13 was originally intended to use long-range radio with Arduino, with a Raspberry Pi Zero handling imaging and vision in Python and future-proofing the robot for further development. It was also intended to be mobile, allowing the user to roam somewhat freely within the body of the robot. This approach met a few obstacles on the Arduino side which, although it was successful to an extent (further documented here), led to a different approach in development.

Incompatibilities between the VirtualWire library (which enables RF communication) and the Servo library were adding complexity deemed unnecessary for the project, and would have required further resources from a somewhat limited budget. The incompatibility lay in the fact that both the VirtualWire and Servo libraries try to use the same hardware timer (Timer1 on ATmega328-based boards such as the Uno).

Under the RF model, there were two Arduino units. The sender, installed on the sensor vest, read values from sensors placed on different parts of the user's torso, smoothed them with an algorithm based on the Arduino example for smoothing analog readings, and packed them into a data structure. The receiver, in the robot, received the data structure and mapped the values accordingly.
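The sender's pipeline (smooth each reading, then pack the values into a single structure for the radio) can be illustrated with a minimal Python sketch. The running average follows the approach of the Arduino Smoothing example: a ring buffer of the last N readings plus a running total, initially filled with zeros. The byte layout (little-endian signed 16-bit integers, matching a 2-byte AVR `int`), the window size, the sample values and the helper names `pack_frame`/`unpack_frame` are all hypothetical.

```python
import struct
from typing import List


class RunningAverage:
    """Moving average over the last `size` readings, as in the Arduino Smoothing example."""

    def __init__(self, size: int = 10):
        self.readings = [0] * size  # buffer starts at zero, so early averages read low
        self.index = 0
        self.total = 0

    def update(self, value: int) -> int:
        self.total -= self.readings[self.index]  # drop the oldest reading
        self.readings[self.index] = value
        self.total += value
        self.index = (self.index + 1) % len(self.readings)
        return self.total // len(self.readings)  # integer average, like on the Arduino


def pack_frame(values: List[int]) -> bytes:
    """Hypothetical wire format: one little-endian signed 16-bit integer per sensor."""
    return struct.pack(f"<{len(values)}h", *values)


def unpack_frame(payload: bytes) -> List[int]:
    """Receiver side: recover the sensor values from a packed frame."""
    return list(struct.unpack(f"<{len(payload) // 2}h", payload))


# Sender: smooth three (hypothetical) sensors, then pack them for transmission.
filters = [RunningAverage(4) for _ in range(3)]
for sample in ([400, 512, 600], [420, 500, 610], [410, 520, 590], [430, 510, 600]):
    smoothed = [f.update(v) for f, v in zip(filters, sample)]
frame = pack_frame(smoothed)
print(unpack_frame(frame))  # -> [415, 510, 600]
```

A larger window gives steadier values at the cost of lag, since a movement only shows fully in the average once the buffer has refilled.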

Sending sensor values over RF in a data structure, written in the Arduino language, was successful; however, a multi-servo controller hat using Pulse Width Modulation (PWM) couldn't quite cope with several instructions (sensor data) arriving at once. This servo hat had seemed a solution to the incompatibility between the VirtualWire and Servo libraries, but it presented its own issues. Given the time frame, a simplified approach was adopted and the servo hat removed. The other alternatives were the RadioHead library, which required a longer time frame, or three Arduinos running the VirtualWire and ServoTimer2 libraries. Both options were vetoed, the latter in particular, as it would increase hardware complexity and take up space in the robot's frame. The ServoTimer2 library also supports only up to 8 servos per microcontroller, while the robot required a minimum of 14. Issues with smoothing the sensor data then further justified a simplified approach within the time frame.
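The hardware arithmetic behind vetoing the ServoTimer2 option can be checked quickly. A short Python sketch, assuming (the text does not spell this out) that the three Arduinos would have been one sender on the vest plus enough receivers in the robot to drive 14 servos at up to 8 per board; `boards_needed` is a hypothetical helper:

```python
import math


def boards_needed(servo_count: int, servos_per_board: int = 8, senders: int = 1) -> int:
    """Receivers needed to drive every servo (ServoTimer2 limit per board), plus the sender(s)."""
    receivers = math.ceil(servo_count / servos_per_board)
    return receivers + senders


print(boards_needed(14))  # -> 3: two receivers (8 + 6 servos) and one sender on the vest
```

Under the same assumption, every servo added beyond 16 would require yet another receiver board in the robot's frame.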

This simplified approach, a bundle of wires running through a tube that connects the sensor vest to CH4RL13, in fact ended up adding to the conceptual side of the piece: it symbolises an umbilical connection with technology, which is very much part of our human condition nowadays.

The vision/imaging unit is based on a Raspberry Pi running a Python script that starts the camera and takes a photo every 10 seconds. The script was made executable with the chmod command under the Pi's operating system and added to the boot-up script.
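A minimal sketch of such a capture loop, assuming the legacy `picamera` library (the one used in the Raspberry Pi worksheet listed in the references); the output folder and file naming are hypothetical. The loop takes the capture function as a parameter so it can be tried without camera hardware. To run at boot as described, the file needs the shebang line and `chmod +x`, and could then be launched from a boot script such as `/etc/rc.local` (an assumed setup, not necessarily the one used here).

```python
#!/usr/bin/env python3
"""Take a photo every 10 seconds (sketch; paths and names are hypothetical)."""
import time
from pathlib import Path
from typing import Callable, Optional

INTERVAL_S = 10  # seconds between photos, as described in the text


def timelapse(capture: Callable[[str], None], out_dir: str,
              count: Optional[int] = None, interval: float = INTERVAL_S,
              sleep: Callable[[float], None] = time.sleep) -> int:
    """Call capture(path) every `interval` seconds; run forever if count is None."""
    taken = 0
    while count is None or taken < count:
        path = Path(out_dir) / f"image_{taken:05d}.jpg"
        capture(str(path))
        taken += 1
        if count is None or taken < count:
            sleep(interval)  # no need to wait after the final photo
    return taken


def main() -> None:
    try:
        from picamera import PiCamera  # legacy camera library on Raspberry Pi OS
    except ImportError:
        print("picamera is not installed; run this on the Raspberry Pi")
        return
    camera = PiCamera()
    try:
        timelapse(camera.capture, "/home/pi/Pictures")  # runs until the Pi shuts down
    finally:
        camera.close()


if __name__ == "__main__":
    main()
```

If launched from `/etc/rc.local`, the command should end with `&` so that the endless loop does not block the rest of the boot sequence.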

IIb. The Sensor Vest with FPV (First Person View) headset

Beyond its function, the sensor vest had to make the user feel and move like a robot, somewhat limiting their range of movement, and help the user identify with the robot, visually as well. Hence the choice of a white ABS plastic motorbike vest in a small/average size.

Originally, the vest was meant to use gyros installed on the FPV headset (see here) for head swivel movements, and for some shoulder rotation movements, but experimentation soon showed that this would consume much of the time frame. While raw data was easily pulled from the gyros, translating it into practical movement would have taken time from other project tasks. Flex sensors were therefore used for these movements instead, and the resulting problem-solving led to the development of the leverage system in the vest.

This system means the sensors need to flex only minimally to produce significant changes in the readings, which are then translated into servo positions. This protects the sensors from becoming severely misaligned and losing calibration with use, although some minimal recalibration is still needed after a relatively high number of uses. Using a series of plumbing brackets and springs, the shoulder-mounted flex sensors register vertical raising of the shoulders, with some adjustment for different users' shoulders made possible by small, movable cylindrical plastic tubes. The system also allows the arms to swing without affecting the shoulder-mounted sensors, and therefore without disturbing how shoulder movement is translated to the servos. A flexible bracket system was then devised at the back of the vest, in the shoulder blade area, so that arm swings are translated into the corresponding movement on the robot.
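Because the sensors slowly drift out of calibration, a software calibration pass could complement the mechanical adjustment. The sketch below is a hypothetical illustration, not the project's code: it follows the idea of the Arduino Calibration example, recording the lowest and highest raw readings while the wearer moves through their full range, then scaling later readings into a servo range. The class name, the 0 to 180 degree default and the sample readings are assumptions.

```python
from typing import Iterable


class SensorCalibration:
    """Min/max calibration for one flex sensor (10-bit readings assumed)."""

    def __init__(self) -> None:
        self.low = 1023  # start inverted so the first readings overwrite both limits
        self.high = 0

    def observe(self, readings: Iterable[int]) -> None:
        """Widen the limits with readings taken while the user moves through their range."""
        for raw in readings:
            self.low = min(self.low, raw)
            self.high = max(self.high, raw)

    def scale(self, raw: int, out_min: int = 0, out_max: int = 180) -> int:
        """Clamp to the calibrated range, then map it linearly onto out_min..out_max."""
        if self.high <= self.low:
            raise ValueError("sensor has not been calibrated yet")
        raw = max(self.low, min(self.high, raw))
        span = (raw - self.low) * (out_max - out_min)
        step = abs(span) // (self.high - self.low)  # truncate toward zero, like map()
        return out_min + (step if span >= 0 else -step)


cal = SensorCalibration()
cal.observe([410, 395, 620, 580])  # hypothetical readings from one calibration pass
print(cal.scale(508))              # -> 90, roughly halfway through the calibrated range
```

Recalibrating after heavy use then amounts to creating a fresh `SensorCalibration` and repeating the pass with the current wearer.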

Vision was relayed to the RF receiver headset by adding an adjustable-power video transmitter soldered to the Raspberry Pi's TV-out pins. A dedicated power supply was added because the transmitter's voltage differed from the robot's: its input ranges from 7 to 24V, with higher voltages corresponding to greater area coverage. The headset also served as the base for head movements, being attached to the head flex sensors mounted on the left shoulder and chest.

IIc. Stress testing the Servos

Testing the servos was, and always is, paramount, all the more so in this case because the servos were bought in bulk, so some sample variance in performance and durability was to be expected, even among servos of the same brand and model. The servos were tested for durability while integrated, with temperatures monitored using a FLIR camera. Temperatures averaged around 35°C over a 15-minute period, but some servos reached high temperatures and had to be replaced during testing. All circuits were first tested repeatedly to ensure they were safe, not shorting and not affecting servo performance.

III. Further Development

CH4RL13 was built with further development in mind: as a platform for an exploration/telepresence robot, in addition to what it conveys as an objet d'art. A USB port in the back of its head will in future allow for A.I. and computer vision, with the Raspberry Pi as the brain behind everything it does, connected to the Arduino that controls its limbs. The intent is to give it a degree of autonomy, with the possibility of a human user override. It will also eventually revert to its original wireless design, using the RadioHead library with smoothing algorithms to increase movement precision.

IV. Self Evaluation

It has been an extremely educational journey, and I learned a lot while developing this project, which became very close to my heart. Although the technical outcomes were not quite what I had projected in terms of features and code, I am very pleased with CH4RL13 and with visitors' reactions. The interactions were all very positive, and it was interesting to see how the concept actually translated, particularly in terms of embodiment: the longer people used CH4RL13, the more closely their movements came to resemble a robot's, raising many questions about the themes explored. Interestingly, the sensor vest, and therefore CH4RL13, worked better with children, despite having been built around an adult's frame (my own). This brought to mind studies (as well as sci-fi pop culture references) that seem to indicate children make better robot pilots or interfacers. However, I'd like to develop a way of calibrating the vest to a user that goes beyond mere physical/mechanical calibration.

It was equally satisfying to receive very positive feedback on its aesthetics. Nevertheless, I wish I had achieved smoother movement and made the headset more comfortable to use, but that is now for future development. The code had to be simplified to the minimum required for interaction, but I believe the result is an example of the power of simple code, and of how much can be done with it and with some alternative thinking. However, better research into the limitations of certain technologies, and into how they are implemented in practice, could have saved me a lot of time and would likely have greatly improved the outcomes.

References

Colgate, E., Hogan, N. (1989). The Interaction of Robots with Passive Environments: Application to Force Feedback Control. Available: http://colgate.mech.northwestern.edu/Website_Articles/Book_Chapters/Colgate_1989_TheInteractionOfRobotsWithPassiveEnvironmentsApplicationToForceFeedbackControl.pdf. Last accessed 27 July 2017.

Haraway, D. (1986). A Cyborg Manifesto. Available: http://faculty.georgetown.edu/irvinem/theory/Haraway-CyborgManifesto-1.pdf. Last accessed 19 July 2017.

LaGrandeur, K. (2014). What is the Difference between Posthumanism and Transhumanism? Available: https://ieet.org/index.php/IEET2/more/lagrandeur20140729. Last accessed 19 July 2017.

Vita-More, N. (2000). The Transhumanist Culture. Available: http://natasha.cc/transhumanistculture.htm. Last accessed 22 July 2017.

Raspicam code based on: https://www.raspberrypi.org/learning/getting-started-with-picamera/worksheet/

Other

One Must Fall 2097, 1994 [Game]. By Rob & Ryan Elam, Diversions Entertainment/Epic MegaGames.

Short Circuit, 1986 [Film]. By John Badham, TriStar Pictures.

Robots Exhibition @ The Science Museum - https://beta.sciencemuseum.org.uk/robots/

Into The Unknown @ The Barbican - https://www.barbican.org.uk/whats-on/2017/event/into-the-unknown-a-journey-through-science-fiction