ShanHaiJing Reimagined + Protean Visions

Two approaches to exploring the imaginary visions of Machine Learning.

Produced by: Yuting Zhu

AI learns, identifies, simulates – but can it imagine the surreal? What does an AI dream of? Is there something an AI can envision but a human cannot?

To explore these questions, I put selected Machine Learning techniques to the test. Whether inventing mythical creatures, giving shape to emotions, or visualising self-evolving scenarios based on pseudo storylines, these ML models never failed to produce new surprises. By provoking and interrogating the imaginary visions of machines, we gain unconventional perspectives for seeing and reconstructing our own realities.

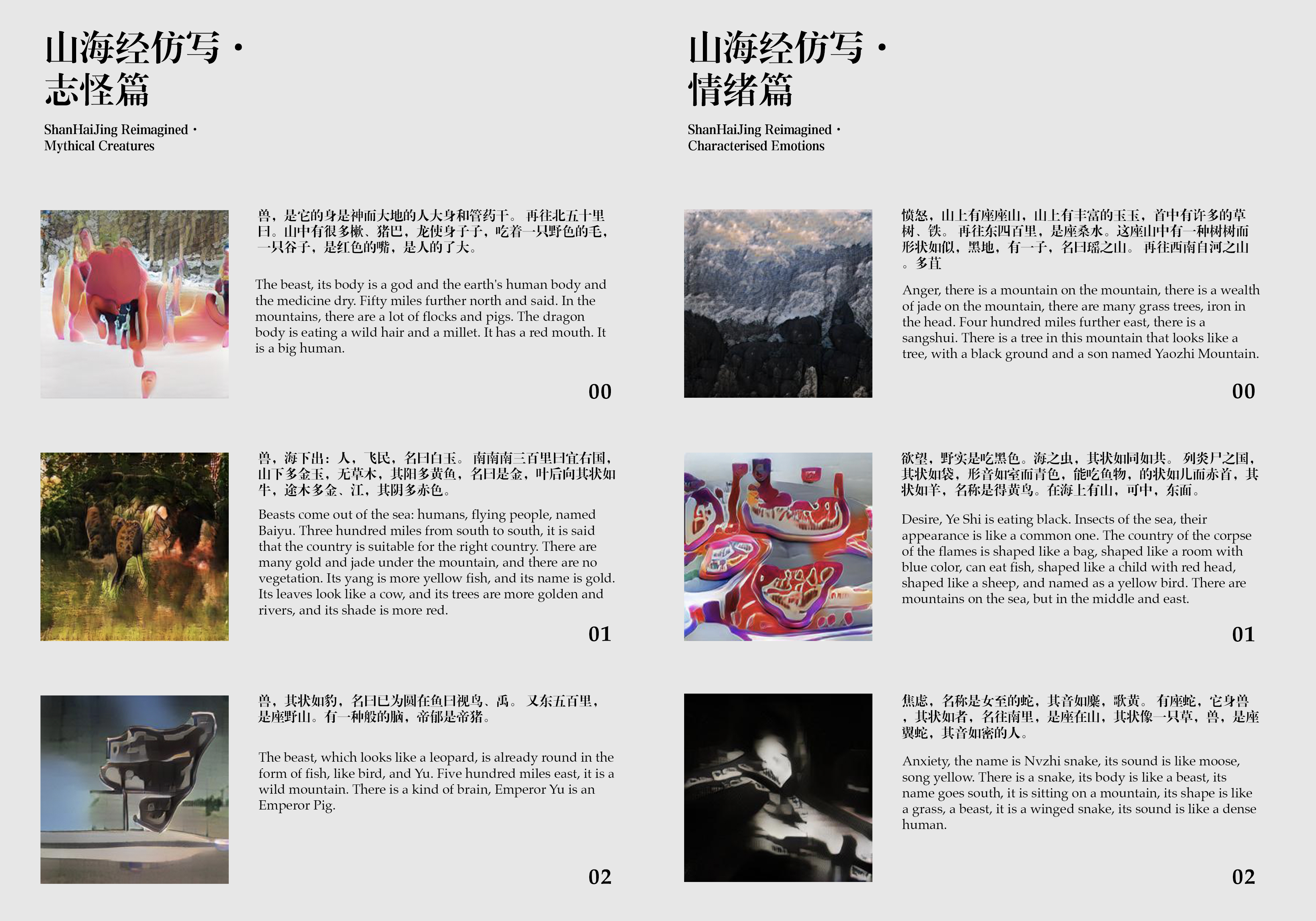

Part 1. ShanHaiJing Reimagined

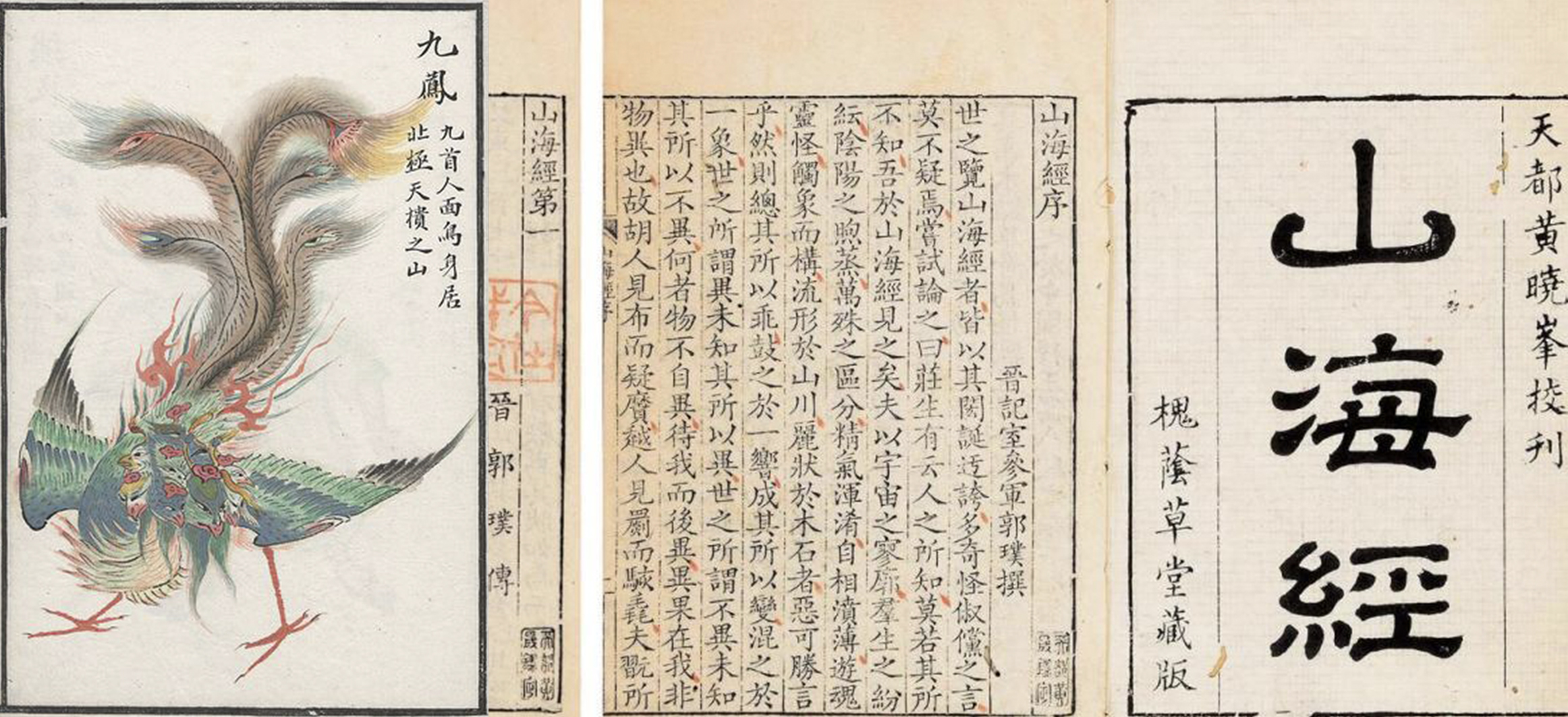

《山海经》(Shan Hai Jing), or The Classic of Mountains and Seas, is an ancient collection of Chinese mythology and mythic geography compiled around the 4th century BC. Its documentary-style accounts of fanciful creatures (famous ones include the Nine-tailed Fox) and vast natural landscapes have fascinated the Chinese people and inspired folklore for centuries.

In this part of the project, I wanted to enable an application to invent its own descriptions of mythical creatures and visualise them.

I started by training a text-predicting charRNN model locally on Shan Hai Jing, following ml5's training instructions [2]. Before training, I carefully cleaned out a large number of archaic characters and footnote symbols.
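The write-up does not list the exact cleaning rules, so here is a minimal sketch under stated assumptions: keep only the basic CJK Unified Ideographs block (U+4E00 to U+9FFF), common full-width Chinese punctuation and newlines, and drop everything else. Under that rule, footnote markers such as `[12]` or `①`, Latin text, and rare characters encoded outside the basic block all fall away. The helper `cleanCorpus` and its optional `blocklist` parameter are hypothetical and are not part of ml5 or its training scripts.

```typescript
// Hypothetical cleaning pass for a Shan Hai Jing corpus before charRNN training.
// Assumed whitelist: basic CJK Unified Ideographs (U+4E00 to U+9FFF), common
// full-width Chinese punctuation, and newlines. Everything else is stripped,
// including footnote markers like "[12]" or "①" and astral-plane characters
// (their surrogate halves are outside the whitelist, so both halves go).
const NOT_ALLOWED = /[^\u4E00-\u9FFF，。、；：？！「」『』《》（）\n]/g;

// `blocklist` lets you drop specific archaic characters that do sit inside the
// basic CJK block but are too rare for the model to learn anything useful.
function cleanCorpus(raw: string, blocklist: string = ""): string {
  let text = raw.replace(NOT_ALLOWED, "");
  for (let i = 0; i < blocklist.length; i++) {
    text = text.split(blocklist.charAt(i)).join("");
  }
  // Collapse the blank lines left behind by removed footnotes.
  return text.replace(/\n{2,}/g, "\n").trim();
}
```

After cleaning, it is worth printing the set of distinct characters that remain. A character-level model like charRNN learns a distribution over every distinct character in its training text, so stray symbols inflate the vocabulary.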

Then I found a fantastic image-generation model on GitHub: AttnGAN [3]. It performs fine-grained text-to-image generation with Attentional Generative Adversarial Networks and was pre-trained by Tao Xu et al. on the COCO image dataset. It analyses a given description, works out which features to prioritise, then patches those features together into a coherent sketch, much like how a kid would "imagine"!
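The "priority of features" comes from AttnGAN's word-level attention. What follows is a reference sketch in the notation of Xu et al.'s AttnGAN paper; it is not something this project configures. The $T$ word features $e_i$ of the description are projected into the image feature space by a learned layer $U$. Each image sub-region feature $h_j$ scores every word, and a softmax turns those scores into weights $\beta_{j,i}$. The weights then form the word-context vector $c_j$ used to refine that region:

$$
e'_i = U e_i, \qquad
s'_{j,i} = h_j^{\top} e'_i, \qquad
\beta_{j,i} = \frac{\exp(s'_{j,i})}{\sum_{k=0}^{T-1} \exp(s'_{j,k})}, \qquad
c_j = \sum_{i=0}^{T-1} \beta_{j,i}\, e'_i
$$

A large $\beta_{j,i}$ means word $i$ dominates what is drawn in sub-region $j$.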

I hosted this model on Runway [4]. To connect it with charRNN, I built an HTML interface and embedded the Google Translate API [5], because AttnGAN only supports English at the moment. The resulting workflow was far from smooth, so I noted it down as a key optimisation task for the second part of the project.
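The Part 1 flow can be sketched as three chained async steps: generate Chinese text, translate it to English, then request a sketch. This is a minimal sketch, assuming the three services are wrapped as functions and passed in. `GenerateText`, `Translate`, `TextToImage` and `inventCreature` are hypothetical names, deliberately not real ml5, Google Translate or Runway client calls. The `trimToLastStop` step, which drops an unfinished trailing clause before translation, is also an assumption.

```typescript
// Hypothetical service signatures; wrap the real ml5 / translation / Runway
// calls to match these rather than assuming their exact APIs.
type GenerateText = (seed: string, length: number) => Promise<string>;
type Translate = (text: string, target: string) => Promise<string>;
type TextToImage = (caption: string) => Promise<string>; // e.g. an image data URL

interface CreatureResult {
  chinese: string;
  english: string;
  image: string;
}

// charRNN stops after a fixed length, often mid-sentence; keep whole sentences.
function trimToLastStop(text: string): string {
  const end = text.lastIndexOf("。");
  return end >= 0 ? text.slice(0, end + 1) : text;
}

async function inventCreature(
  seed: string,
  generate: GenerateText,
  translate: Translate,
  textToImage: TextToImage,
  length: number = 60
): Promise<CreatureResult> {
  const chinese = trimToLastStop(await generate(seed, length));
  // AttnGAN only accepts English input, so translate before generating.
  const english = await translate(chinese, "en");
  const image = await textToImage(english);
  return { chinese, english, image };
}
```

Injecting the services keeps the ordering logic testable with stubs and makes it easy to swap the translation service later.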

Here is a GIF demo of the interface:

And here is one of the results it generated on the theme "Animals":

It is interesting to see how often the AI imagines a mythical creature that combines the features of animals with those of landscapes, as if the two were fused, which makes you think: why not? It offers a refreshing perspective that contradicts humans' conventionally categorical views of non-human beings. And why stop there? If the machine can see "animals" in ways we do not, how about asking it to characterise "Emotions" as mythical creatures as well:

These emotion seed words did not exist in the original Shan Hai Jing of around the 4th century BC, so the AI generates these visions on its own, without relying on the conventional metaphors and associations of human culture. The results read and look as fresh and bold as a foreigner's poetry. Eventually, I'd love to make a printed collection of these mythical creatures and landscapes as a zine or a bookmark series that showcases a machine's imaginary visions of the surreal and the intangible.

Part 2. Protean Visions

The second application focused more on the dynamic side. What would an AI's dream look like? Would it also follow an imaginative storyline endlessly built upon itself, often strange and ridiculous but somehow linked together as it progresses?

I was excited to build an automated generator of self-evolving scenarios driven by the AI's visions. To do that, I chained Machine Learning models into a text-image-text feedback loop.

First, I asked charRNN to write a pseudo "storyline". Unlike the simple next-word predictor on a phone keyboard, charRNN uses a Long Short-Term Memory (LSTM) network. This lets it carry context across a much longer passage and refer back to earlier text when needed, which was exactly what I needed.
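For readers curious where the longer memory comes from, these are the standard LSTM cell equations. They are the textbook formulation, not taken from ml5's source. At step $t$, $x_t$ is the current character's input vector, $h_{t-1}$ is the previous hidden state, $\sigma$ is the logistic sigmoid and $\odot$ is element-wise multiplication:

$$
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

The cell state $c_t$ is updated additively, and the forget gate $f_t$ can stay close to 1. Together these let information from many characters back survive, which helps a generated storyline stay loosely linked as it progresses.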

Then AttnGAN visualised the evolution of this pseudo "storyline", turning it into a "storyboard". AttnGAN was originally trained on an image dataset of everyday objects. When it meets more abstract subjects, or sentences that don't make full grammatical sense, its output "sketches" start to look eerie, fluid, and surreal, and I fully embrace this aesthetic. The only drawback is that when the text gets too long, the sketch becomes crowded with cells and stops progressing. So I had the programme regularly clear out old text before sending it to AttnGAN, like turning a page.
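The "turning a page" behaviour can be sketched as a small text buffer. It keeps appending generated text for AttnGAN and starts a fresh page once the next chunk would push it past a length limit. The class name `StoryPage` and the 200-character default are illustrative assumptions, not values from the project.

```typescript
// Hypothetical "page" of storyline text used as the AttnGAN prompt.
// Long prompts made the sketch crowded, so when the next chunk would push
// the page past maxChars, the old text is discarded and a new page begins.
class StoryPage {
  private text: string = "";
  private pageNumber: number = 1;
  private maxChars: number;

  constructor(maxChars: number = 200) {
    this.maxChars = maxChars;
  }

  // Adds a chunk of generated text; returns true if a new page was started.
  // A single chunk longer than maxChars is still accepted on an empty page.
  append(chunk: string): boolean {
    const candidate = this.text ? this.text + " " + chunk : chunk;
    if (this.text && candidate.length > this.maxChars) {
      this.text = chunk;
      this.pageNumber += 1;
      return true;
    }
    this.text = candidate;
    return false;
  }

  // The current prompt to send to the text-to-image model.
  prompt(): string {
    return this.text;
  }

  page(): number {
    return this.pageNumber;
  }
}
```

The boolean returned by `append` is a convenient hook for clearing the sketch area whenever a new page starts.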

To further highlight the notion of autopoiesis, I added a third model, Im2txt, for image captioning (also hosted on Runway). It simulates "reactions" within a generated scenario, so that even the tiniest move in the current scenario affects the next. The programme regularly interprets the current sketch as text and feeds it back into the soil of the text generator. Thus a text-image-text feedback loop is formed.
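One way to sketch the loop runs through four stages. The storyline grows from a seed, the new text is drawn, the drawing is captioned, and the caption becomes part of the next seed. The three model calls are injected as hypothetical async functions rather than real ml5 or Runway calls. The rule for building the next seed (the tail of the current text plus the caption) is an assumption for illustration.

```typescript
// Hypothetical model signatures; wrap charRNN, AttnGAN and Im2txt to match.
type Generate = (seed: string) => Promise<string>;
type Draw = (text: string) => Promise<string>; // returns an image handle
type Caption = (image: string) => Promise<string>;

interface LoopStep {
  text: string;
  image: string;
  caption: string;
}

// Runs `steps` iterations of text -> image -> caption -> text.
async function dreamLoop(
  firstSeed: string,
  steps: number,
  generate: Generate,
  draw: Draw,
  caption: Caption,
  tailChars: number = 40
): Promise<LoopStep[]> {
  const history: LoopStep[] = [];
  let seed = firstSeed;
  for (let s = 0; s < steps; s++) {
    const text = await generate(seed);
    const image = await draw(text);
    const said = await caption(image);
    history.push({ text, image, caption: said });
    // The caption is the machine's "reaction" to its own sketch. Mixing it
    // with the end of the current text keeps the storyline linked while
    // letting the picture steer what comes next.
    seed = text.slice(-tailChars) + " " + said;
  }
  return history;
}
```

Keeping a short tail of the previous text, rather than only the caption, is a design choice: a caption on its own would make each scene restart almost from scratch.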

Connecting the three models meant spending a lot of time configuring asynchronous calls. Each model took a different amount of time to respond, so I set regular intervals and conditional pauses to let the models catch up with each other. I also created separate image buffers to display each model's results on a different part of the canvas, so that their different refresh rates wouldn't cancel each other out. Eventually the programme could run entirely on its own after a single click to start.
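The regular intervals and conditional pauses can be sketched as a polling helper. It fires a model call on a timer but skips any tick where the previous call to that model is still pending, so a slow model never receives overlapping requests. `everyWhenIdle` is a hypothetical helper, not an ml5 or Runway function.

```typescript
// Hypothetical helper: calls `task` every `intervalMs`, but skips a tick while
// the previous call is still in flight, so models with different response
// times never pile up overlapping requests.
function everyWhenIdle(
  task: () => Promise<void>,
  intervalMs: number
): { stop: () => void; isBusy: () => boolean } {
  let busy = false;
  const timer = setInterval(() => {
    if (busy) return; // previous call not finished yet: wait for the next tick
    busy = true;
    task()
      .catch((err) => console.error("model call failed:", err))
      .then(() => {
        busy = false; // reset even if the call failed
      });
  }, intervalMs);
  return {
    stop: () => clearInterval(timer),
    isBusy: () => busy,
  };
}
```

Each model would get its own `everyWhenIdle` handle, and `isBusy()` lets one model hold off while another is still responding. The canvas is likely a p5.js sketch, though that is an assumption. In that setting, each callback could draw into its own offscreen buffer created with `createGraphics`, and `draw()` would composite the buffers, so a slow model never blanks out a faster one.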

This is a demo of a trial run. I seeded the programme with the first sentence of Franz Kafka's The Metamorphosis and set off a text generator trained on Roberto Bolaño's writing style (provided by ml5). Here it goes:

Finally, I saved the sequence of generated images and added transitions to record its "dream" as a film:
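The write-up does not say how the transitions were made. One simple option is a linear crossfade between consecutive frames, computed directly on RGBA pixel arrays such as `ImageData.data`. `crossfade` and `transitionFrames` are hypothetical helpers, and both frames are assumed to have the same dimensions.

```typescript
// Linear crossfade between two same-sized RGBA frames (e.g. ImageData.data).
// t = 0 returns frame a, t = 1 returns frame b; t is clamped to [0, 1].
function crossfade(
  a: Uint8ClampedArray,
  b: Uint8ClampedArray,
  t: number
): Uint8ClampedArray {
  if (a.length !== b.length) {
    throw new Error("frames must have the same dimensions");
  }
  const w = Math.min(1, Math.max(0, t));
  const out = new Uint8ClampedArray(a.length);
  for (let i = 0; i < a.length; i++) {
    // Uint8ClampedArray rounds and clamps to 0..255 on assignment.
    out[i] = (1 - w) * a[i] + w * b[i];
  }
  return out;
}

// Builds `n` in-between frames for a transition from frame a to frame b.
function transitionFrames(
  a: Uint8ClampedArray,
  b: Uint8ClampedArray,
  n: number
): Uint8ClampedArray[] {
  const frames: Uint8ClampedArray[] = [];
  for (let k = 1; k <= n; k++) {
    frames.push(crossfade(a, b, k / (n + 1)));
  }
  return frames;
}
```

Inserting the in-between frames between each pair of saved sketches gives a dissolve whose length is set by `n`.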

References

[1] Joe McAllister's code examples for charRNN (Week 8)

[2] ml5's charRNN training instructions: https://github.com/ml5js/training-charRNN

[3] AttnGAN's GitHub repository: https://github.com/taoxugit/AttnGAN

[4] Runway's code examples for Hosted Models: https://learn.runwayml.com/#/how-to/hosted-models

[5] This thread on how to use the Google Translate API: https://stackoverflow.com/questions/12243818/adding-google-translate-to-a-web-site