C O S M O S

An iris sonification piece that converts one’s biometric data to digitized sound.

produced by: Sarah Song Xiaran

Introduction

Have you ever thought about listening to your own eyes? Did you know that the unique patterns and structures of your iris contain your secrets?

Cosmos is an installation and sound-generating piece which demonstrates interactive, generative and procedural techniques. It invites the audience on an unexpected journey in which their eyes tell them a little story and give each person a tailor-made sound piece. This work aims to find an equal presence of order and beauty in chaos.

The whole process takes 3 minutes. The audience member is invited to sit in front of a microscope and asked to wear a pair of headphones, then "Dr. Sarah" captures their iris manually. The image is then scanned and analyzed for sonification. The mapping algorithm is based on ideas from iridology and the harmonic series.

Concept and background research

The first inspiration came from a poster for an American scientific docuseries, Cosmos: A Spacetime Odyssey. The nebula on it looks like an “eye” in the universe. Every one of us has a unique structure of lines, dots and colours in our own iris. Because of these unique patterns, the iris is a type of biometric data, like our fingerprints, that can be used to identify people. Many scientists and doctors have devoted a lot of time and effort to the study of iridology. It is believed that the iris is connected to every organ and tissue of the body by way of the brain and nervous system. I have been fascinated by this and have become really interested in iridology over the past year. An idea then popped into my mind: why not sonify the patterns of the iris? Sonifying the iris with the help of iridology can be an attractive and unique way to express the close relationship between an iris and the human body.

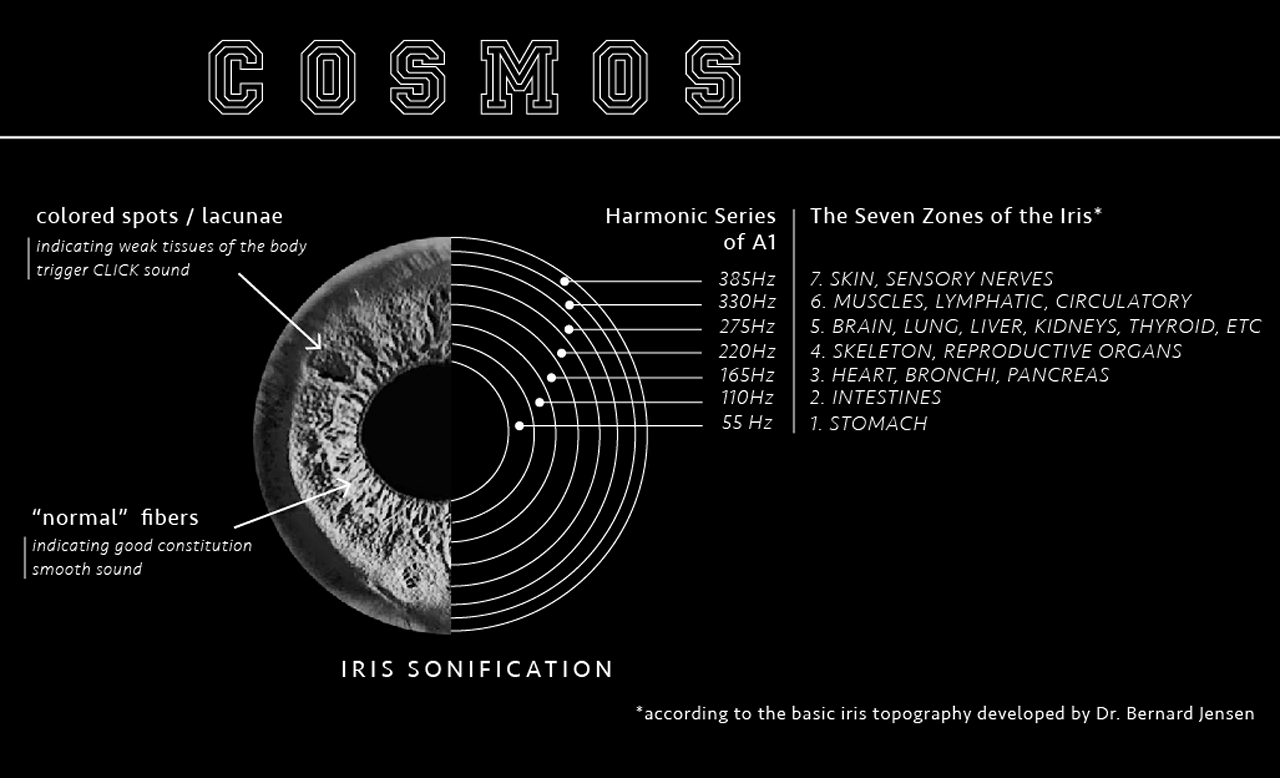

There have been many studies and experiments on biological data sonification, although it is still a rather young discipline: one identifies protein folds from sequence data and sonifies them as melodic piano music; another is the fingerprint sonification piece Digiti Sonus. I started to look for tools to capture the human iris and for software to process the images and sonify them. Here is the research paper I wrote in Computational Arts-based Research & Theory classes earlier this year. I came up with two mapping strategies, but in COSMOS I chose to adapt "The Seven Zones of the Iris", a theory from iridology, and combine it with the harmonic series. Theoretically, a healthy iris should generate a melodic and harmonic sound, as its fibres are supposed to be very smooth and of good constitution. In practice, no one has a "perfectly healthy" iris, so there will be lots of clicking and noisy sounds in the soundtrack. My sonic inspiration comes from the music of Alva Noto and Ryoji Ikeda. I really like their concept of using the mathematical language of music, binary numbers and data as raw material for art.

“Within the time variable, we are very sensitive to rhythm and its changes.” - Thomas Hermann

It is a good attempt at linking the sound with its owner, the data, since the best criterion of sonification is that “the data speak for itself”. Without a doubt, that is the most challenging part of this iris sonification. The core theme of COSMOS is to create a contrast between Colour and B&W, Natural and Digital, Glitchy beats and Harmonic sound, the knowable and the unknowable. The term cosmos was first used by the philosopher Pythagoras for the order of the universe. I really want to bring those beautiful little "cosmos" into a digital world and translate them into another sensory perception.

Technical

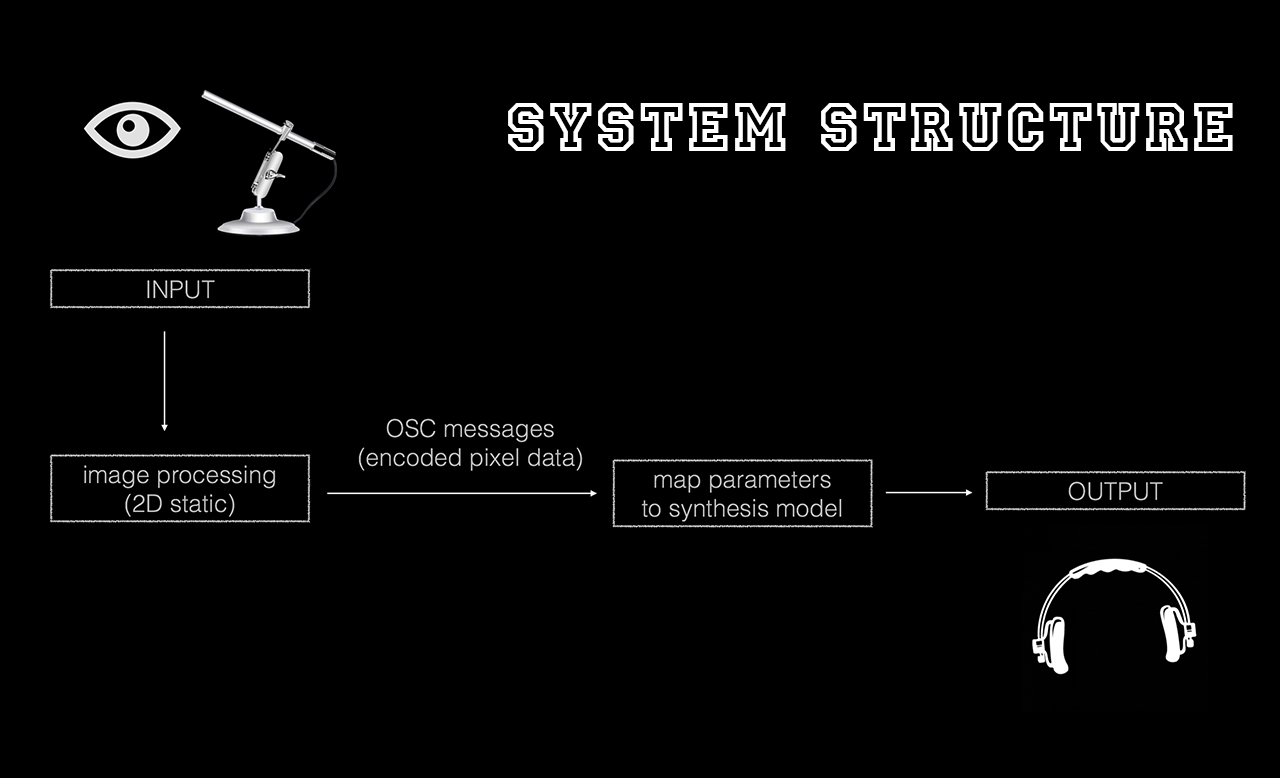

The system structure is presented in the pictures below:

Visual

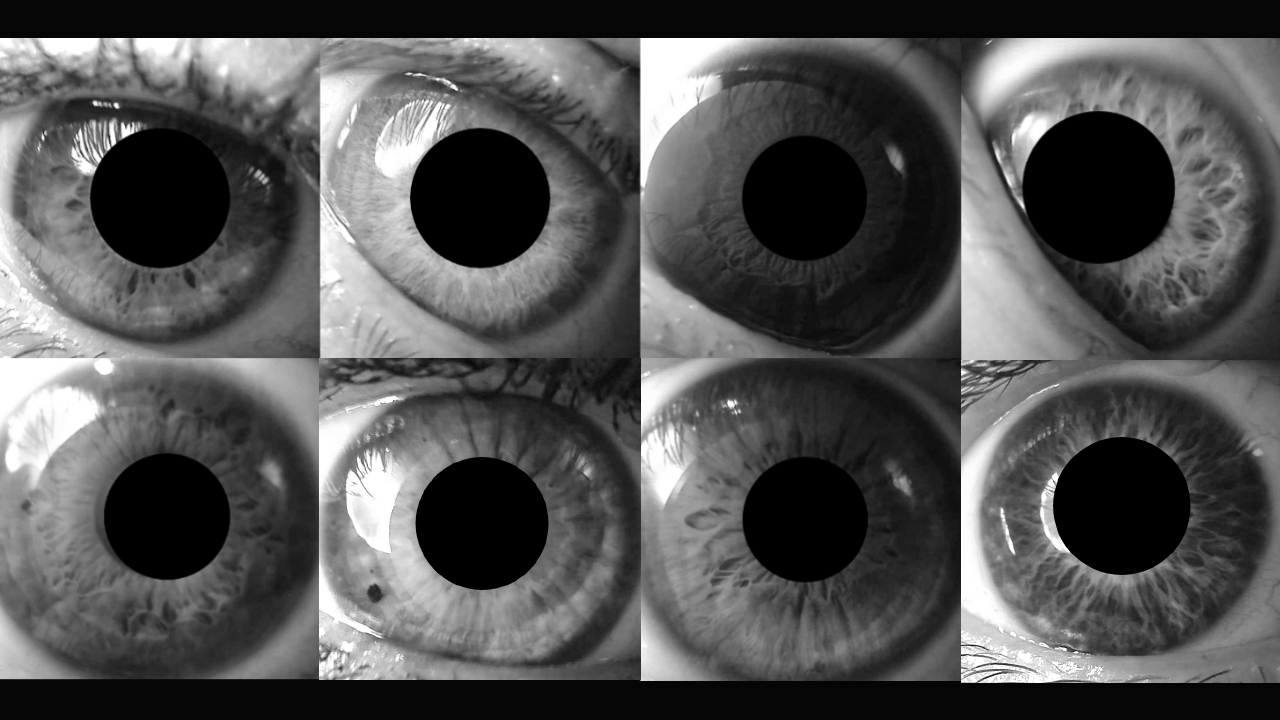

All the image processing is done in openFrameworks. First, I grab an image from the live camera (a digital microscope) with a mouse click; the image is converted to grayscale and saved into the data folder. Here I add a black dot which represents the pupil and helps me position every eye in the middle of the window for scanning. Second, a scanning line rotates through 360 degrees and extracts the colour values of 7 pixels (r, g and b have the same value in grayscale mode), then sends these data in an OSC bundle every 30 frames (this also creates the rhythm).
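The scanning step can be sketched outside openFrameworks as follows. This is a minimal illustration in Python, not the installation's code: the function name `sample_scan_line`, the even spacing of the 7 samples along the radius, and the clamping at the image border are all assumptions made for the example.

```python
import math

def sample_scan_line(gray, cx, cy, angle_deg, max_radius, n_samples=7):
    """Return n_samples grayscale values along a line that starts at the
    pupil centre (cx, cy) and points in the direction angle_deg.

    gray is a list of rows, each a list of 0-255 integers (hypothetical
    stand-in for the grayscale image saved by the capture step).
    """
    height = len(gray)
    width = len(gray[0])
    angle = math.radians(angle_deg)
    values = []
    for i in range(1, n_samples + 1):
        # Assumption: samples are evenly spaced from the centre outward.
        r = max_radius * i / n_samples
        x = int(round(cx + r * math.cos(angle)))
        y = int(round(cy + r * math.sin(angle)))
        # Clamp so samples near the border stay inside the image.
        x = min(max(x, 0), width - 1)
        y = min(max(y, 0), height - 1)
        values.append(gray[y][x])
    return values
```

Calling this once per step while `angle_deg` advances from 0 to 360 gives one group of 7 values per step, which is the kind of payload the text describes sending over OSC.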

Sound

When I was making a prototype, I found image sonification source code from Sonic-Code-Workshops; it uses Processing for image analysis and PureData for sonification. However, I decided to use Max/MSP, a program I have been learning for half a year, because it is nicer and more stable for real-time audio synthesis and processing. Instead of using many sound samples, I try to make sound that feels both machine-made and rhythmic. As mentioned before, the pixel values of the iris are sent through OSC to Max and filtered to trigger specific sounds. If the value is below 20, it triggers an impulse (using "Click"); if the value is between 20 and 100, it plays a noisy audio buffer at particular frequencies in a harmonic series (the fundamental pitch is 55 Hz, note A1); if the value is bigger than 100, it triggers another oscillator at particular frequencies. To add more texture to the whole soundtrack, I added a sine wave at 30 Hz with a frequency modulator. After 1 minute of scanning, it stops, and the soundtrack is recorded and saved in the specified directory with a serial number (the same as the image saved in OF).
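The threshold mapping described above can be written down as a small sketch. This is Python for illustration only; the actual patch is built in Max/MSP. The names `classify_pixel`, `harmonic_series` and `map_scan` are hypothetical, and pairing the n-th sample with the n-th harmonic is an assumption, since the text does not say how frequencies are assigned.

```python
FUNDAMENTAL_HZ = 55.0  # A1, the fundamental pitch given in the text

def harmonic_series(fundamental_hz, count):
    """Return the first `count` harmonics: f, 2f, 3f, ..."""
    return [fundamental_hz * n for n in range(1, count + 1)]

def classify_pixel(value):
    """Map a grayscale value (0-255) to the sound type it triggers."""
    if value < 20:
        return "click"          # impulse
    if value <= 100:
        return "noise_buffer"   # noisy buffer at a harmonic frequency
    return "oscillator"         # second oscillator

def map_scan(values):
    """Pair each sample with a sound type and a harmonic frequency.

    Assumption: sample n (counted from the pupil outward) uses the
    n-th harmonic of 55 Hz.
    """
    freqs = harmonic_series(FUNDAMENTAL_HZ, len(values))
    return [(classify_pixel(v), f) for v, f in zip(values, freqs)]
```

With 7 samples per scan step, the harmonics run from 55 Hz to 385 Hz, so darker regions produce clicks while brighter fibres produce pitched material.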

Interaction

The idea is to provide a clinic-like environment and an "eye test" for people. Each audience member becomes a patient and has their iris scanned under my instruction. They can then see their iris in a mini projection on a box and listen to it for 1 minute. As the optician, I explain the whole process and the idea of iridology to "my patient" after the “eye test” and ask them to leave their email if they want to receive a “sonic report” (their iris photograph and an audio file of their iris). I designed the piece to be more like a performance: people are invited to participate in it and may feel a sense of insecurity, as it still takes a bit of time to have their eye scanned properly and they have to be very close to the "scanner". It should be an unusual yet memorable experience for them.

I also have a wall-mounted "eye clock" (40cm x 40cm), an acrylic engraving of an outlined iris that I made in earlier research, alongside pre-recorded audio from an mp3 player (the sound of the outlined iris). If the "clinic" is closed, people can still listen to this backup piece and get an idea of iris sonification.

Setting up video:

Future development

There are several ideas to further develop COSMOS:

- To build a better "iris scan" machine. Currently I only have a USB microscope and a support stand to help me take an iris picture, and it still takes time to find the best height and position for scanning. A better instrument would make this easier.

- To count colour as a factor for sonification, because other research has found that eye colour might reveal something about one’s health and personality. Accordingly, using colour is one way to add more texture to the whole track. The extraction of relevant features from iris images, for data analysis and visualization, also needs further experimentation.

- To experiment more with different timbres and try to map the pixel data extracted from the iris to an ADSR (attack, decay, sustain, release) envelope.

- To create an online "IRIS Library" (or another installation) with sonic data, as many good-quality iris pictures were captured and archived during the exhibition (see below).

Self-evaluation

It has been a while since this idea came to my mind, but I did not have the chance to try it out until now. I was therefore very excited about this iris sonification project and about how COSMOS turned out overall.

The early version of my iris sonification was implemented with MetaSynth’s Image Synth, with the image processing done in ImageJ, so this time I decided to challenge myself technically and make an installation that can sonify the iris in real time. Due to my lack of expertise, the sonic results were not what I had expected, so it is a little hard for others to find the relationship between the visuals and the sound. However, as a person who does not come from a music background, I am proud that I wrote the code from scratch and learned a lot while experimenting with different programming platforms.

To my surprise, this piece attracted a large crowd. After taking the "eye test", various people told me that they would like to know more about iridology and were really amazed by the picture of their own iris. Nevertheless, getting an ideal image of one's iris is the most difficult part of this piece, and it is something I will endeavour to improve in the future. The presentation of the iris in digital art today is still limited and underexplored. For me, revealing the mystery in the iris of the eye is an interesting research field. I would like COSMOS to be a unique piece of biological art, and it is interesting for me to discover the meaning of data through sound.

References

- Lindlahr, H. (1919). Iridiagnosis and other diagnostic methods. Chicago: The Lindlahr Publishing Co.

- Hermann, T. (2002). Sonification for Exploratory Data Analysis. Bielefeld, Germany: Bielefeld University.

- Han, Y. C. & Han, B. (2014). Skin Pattern Sonification as a New Timbral Expression. Leonardo Music Journal 24(1), 41–43. The MIT Press. Retrieved September 15, 2018, from Project MUSE database.

- Jensen, B. (1980). Iridology Simplified. United States: Healthy Living Publications.

- Scaletti, C. (1994). Sound synthesis algorithms for auditory data representations. In G. Kramer (Ed.) Auditory Display: Sonification, Audification, and Auditory Interfaces. Addison-Wesley. 223--251.

- Arslan, B., Brouse, A., Castet, J., Filatriau, J.-J., Léhembre, R., et al. (2005). From biological signals to music. 2nd International Conference on Enactive Interfaces.

- OSC addon: https://openframeworks.cc/documentation/ofxOsc/

- Max/MSP tutorial: https://zhuanlan.zhihu.com/dataFlow

Acknowledgements

Some code is adapted from Theo Papatheodorou:

- pixelAccess - From week 6 of Workshops in Creative Coding Term 2

- oscSend - From week 18 of Workshops in Creative Coding Term 2

Written in openFrameworks (OF) version 0.10.0 with the ofxOsc addon, using Xcode 7.0.1.