Mimicry

2019

Print

Video work

Loop

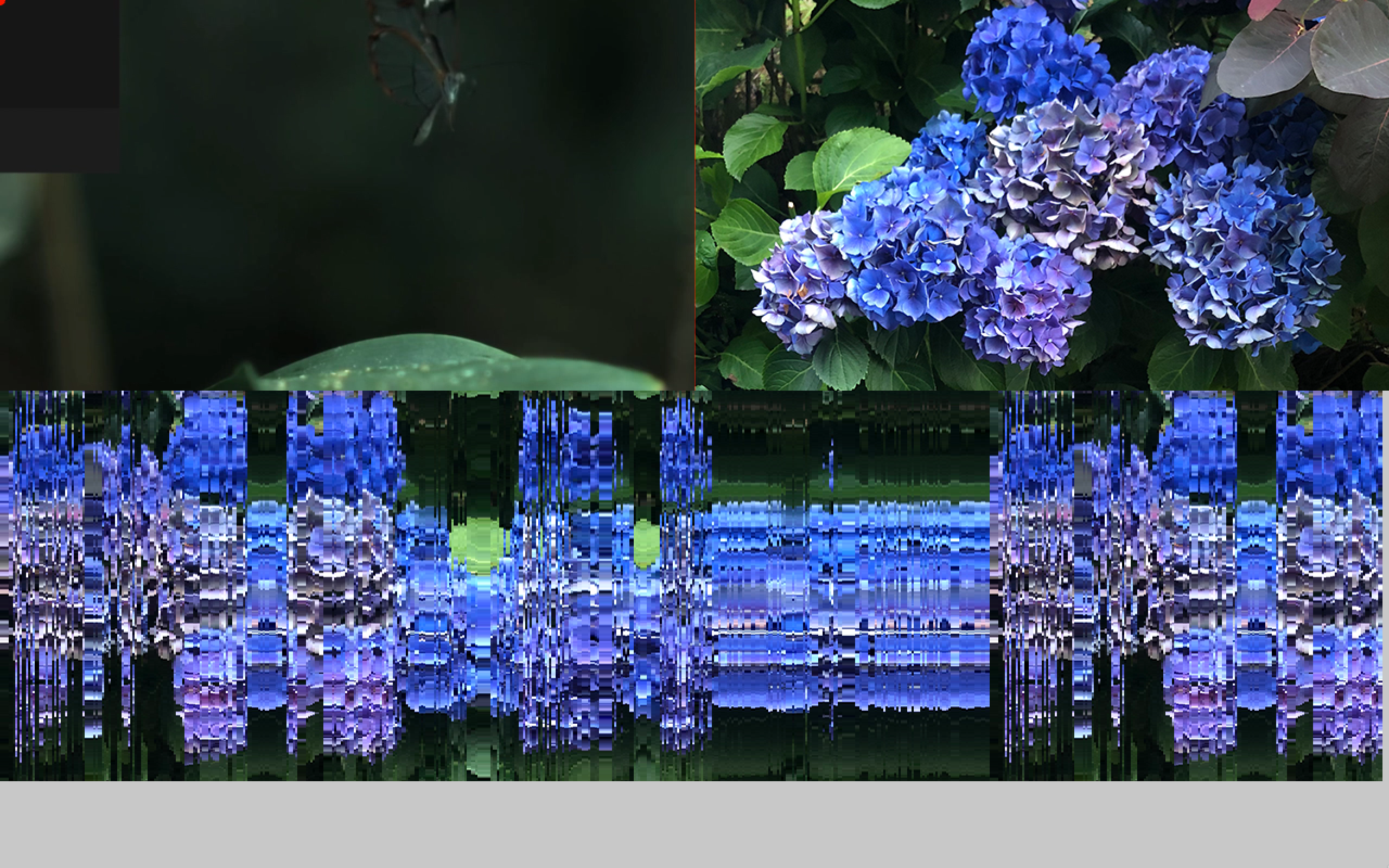

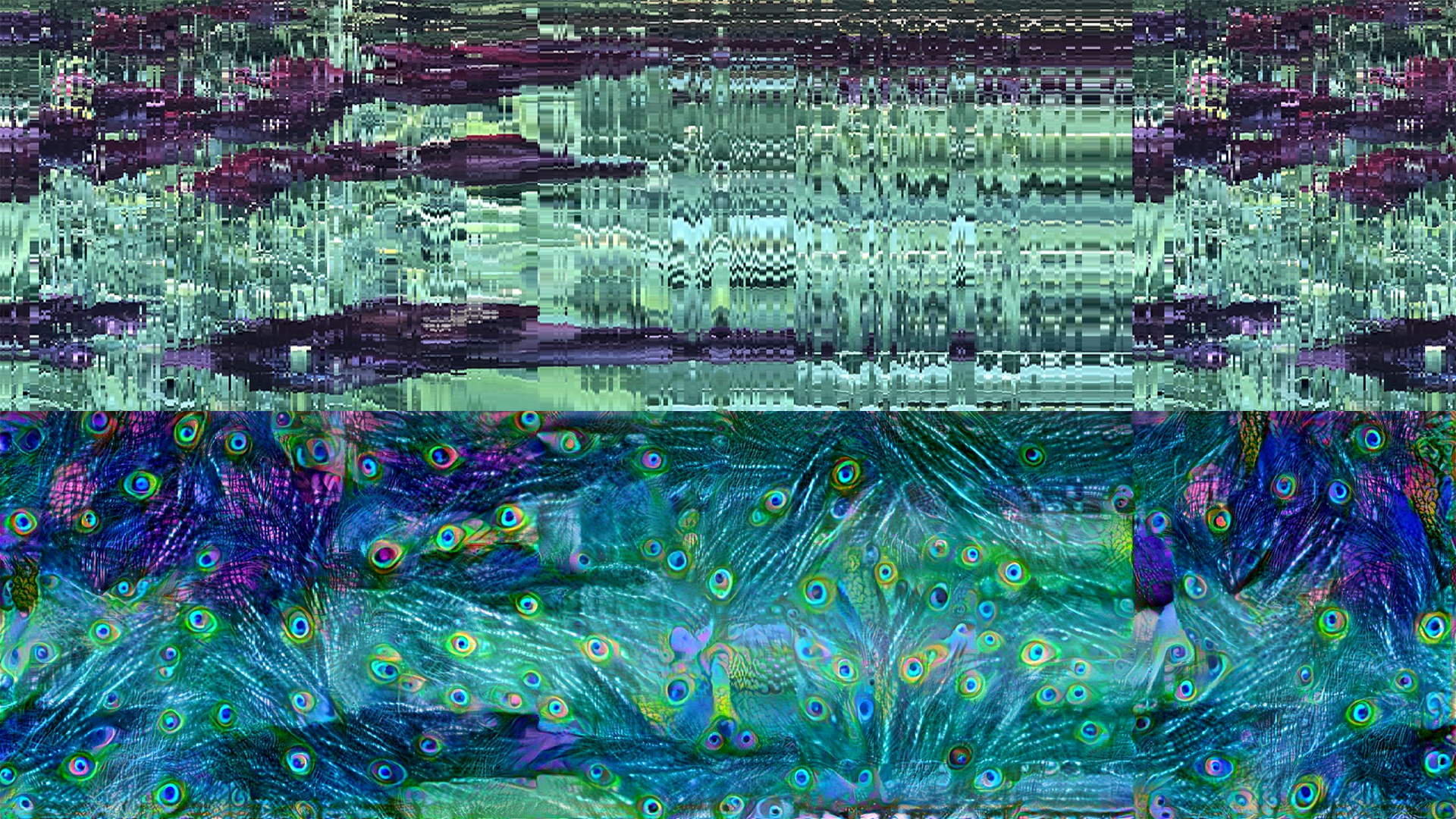

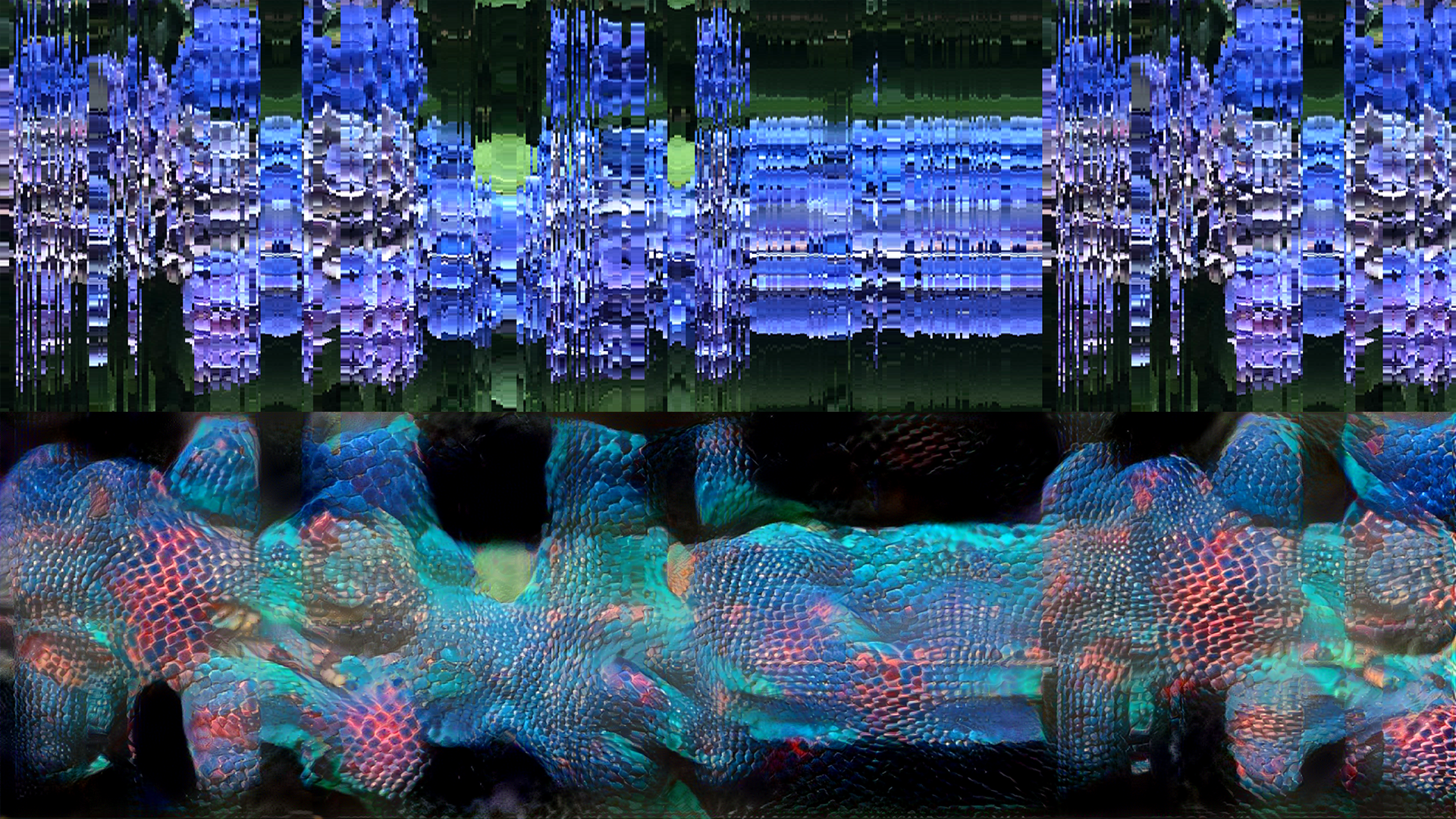

Insect slow motion video, flower image, animal pattern, machine learning with style transfer

Variable size

produced by: Ziwei Wu

Introduction

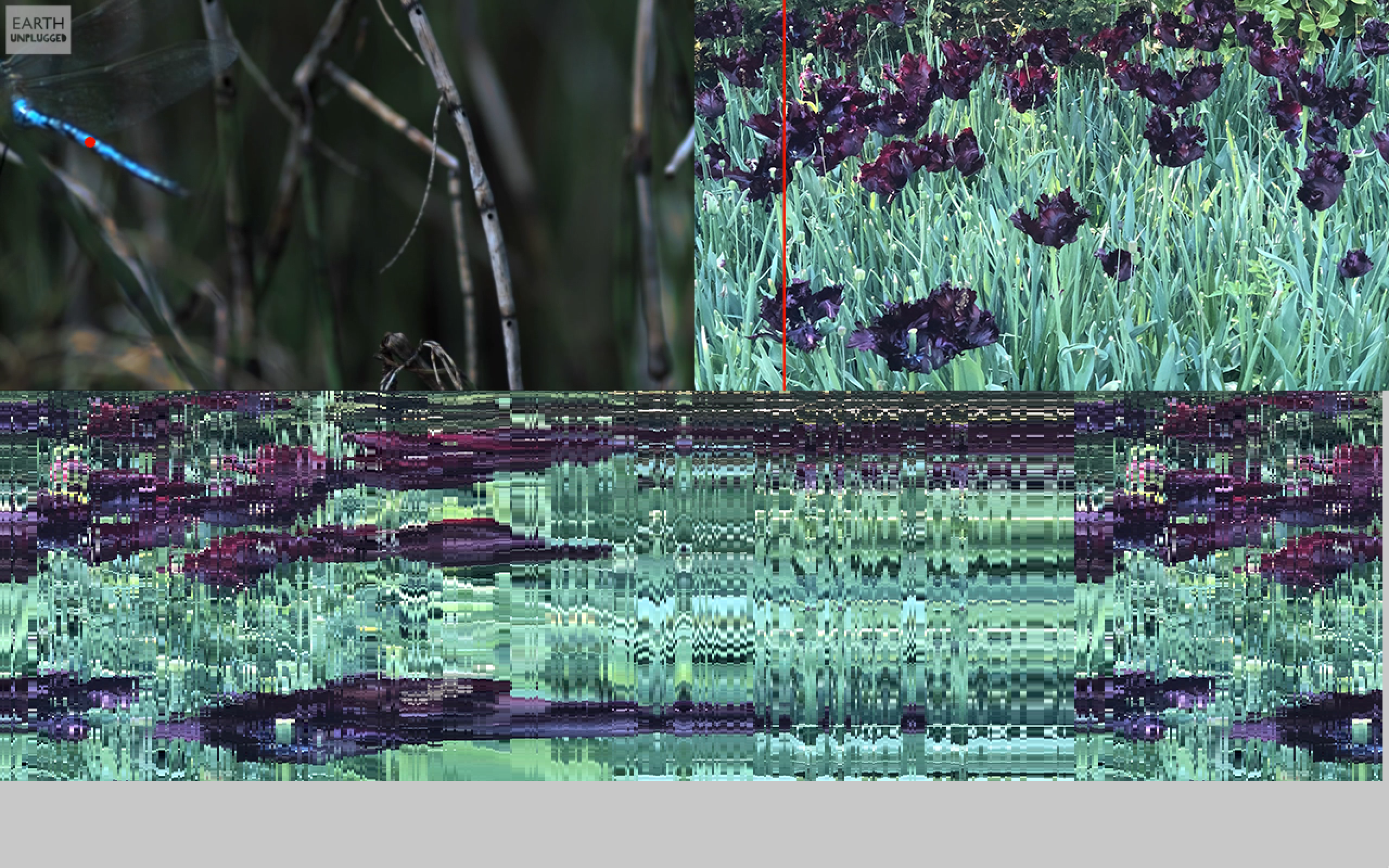

“Mimicry” is a multi-screen video installation inspired by mimicry, a distinctive way in which species protect themselves: sometimes a species can change its colour and pattern in response to its environment. The work is based on nature, but it is not the original nature.

This work is therefore an experiment. It uses computational methods to collect animal movement data and mix it with photographs of a real environment, simulating the process of mimicry to generate new patterns. It also uses style transfer, a machine learning technique, to try to alter nature.

My motivation: my previous work Mimicry and the pseudo-environment

The motivation for this work comes from two sources. First, it is a further development of my previous work Mimicry. Second, I was curious to see what kind of ‘different’ nature machine learning can create from nature.

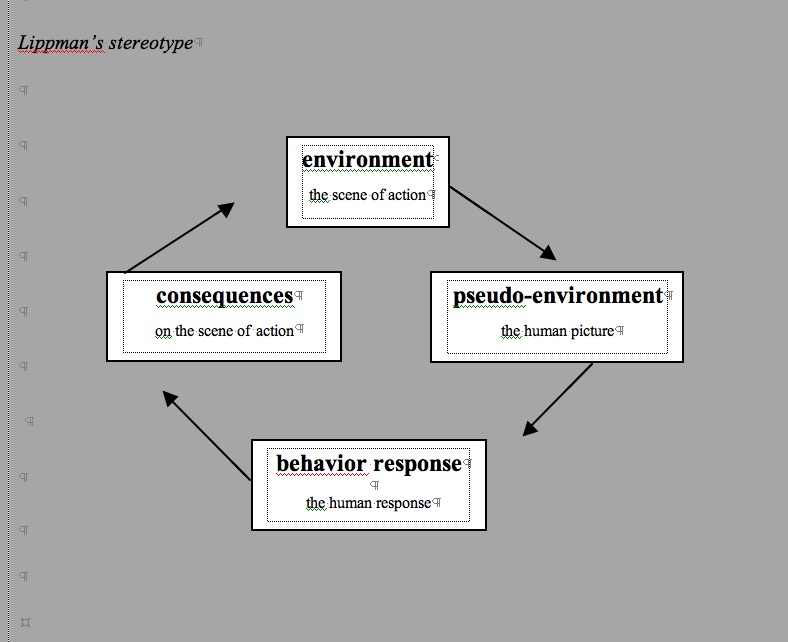

My previous work Mimicry (2019) was shown in the exhibition ‘In The Dark’ (2019) at the Cello Factory. It is a mapping work with four screens on the second floor, each showing different fictional moving fur. Its inspiration comes from mimicry, a distinctive way in which species protect themselves: sometimes a species can change its colour and pattern in response to its environment. The result is based on nature, but it is not the original nature; it is more like a fake, changeable skin controlled and influenced by nature. Human society has an equivalent. In his book ‘Public Opinion’, Walter Lippmann describes how people construct a pseudo-environment, a subjective, biased, and necessarily abridged mental image of the world, and to a degree everyone’s pseudo-environment is a fiction. People ‘live in the same world, but they think and feel in different ones’.

This work therefore tries to build a protected, warm, fantasy pseudo-environment from my own mind, through a partial reorganization of the real environment. The concept of the exhibition ‘In The Dark’ (2019) also inspired me to generate colourful, tender moving images and to create an illusory, mysterious atmosphere in the dark room.

After that exhibition, I started to think about how to link the real environment and my work more closely. Images produced by 3D rendering are clearly easier to make, but they convey less real emotion and a less authentic feeling. Another question was how to make my work more biological. I am interested in vivid bio art, but I did not know how to learn from nature, translate it into new media art, and then express it in my own work. How do some artworks imitate animal behaviour or nature? What kind of mimicry is suitable for presentation through new media art? Based on these questions, I decided to analyse one of my favourite artists to find some clues.

Collecting data from biology and using it to generate shapes or patterns

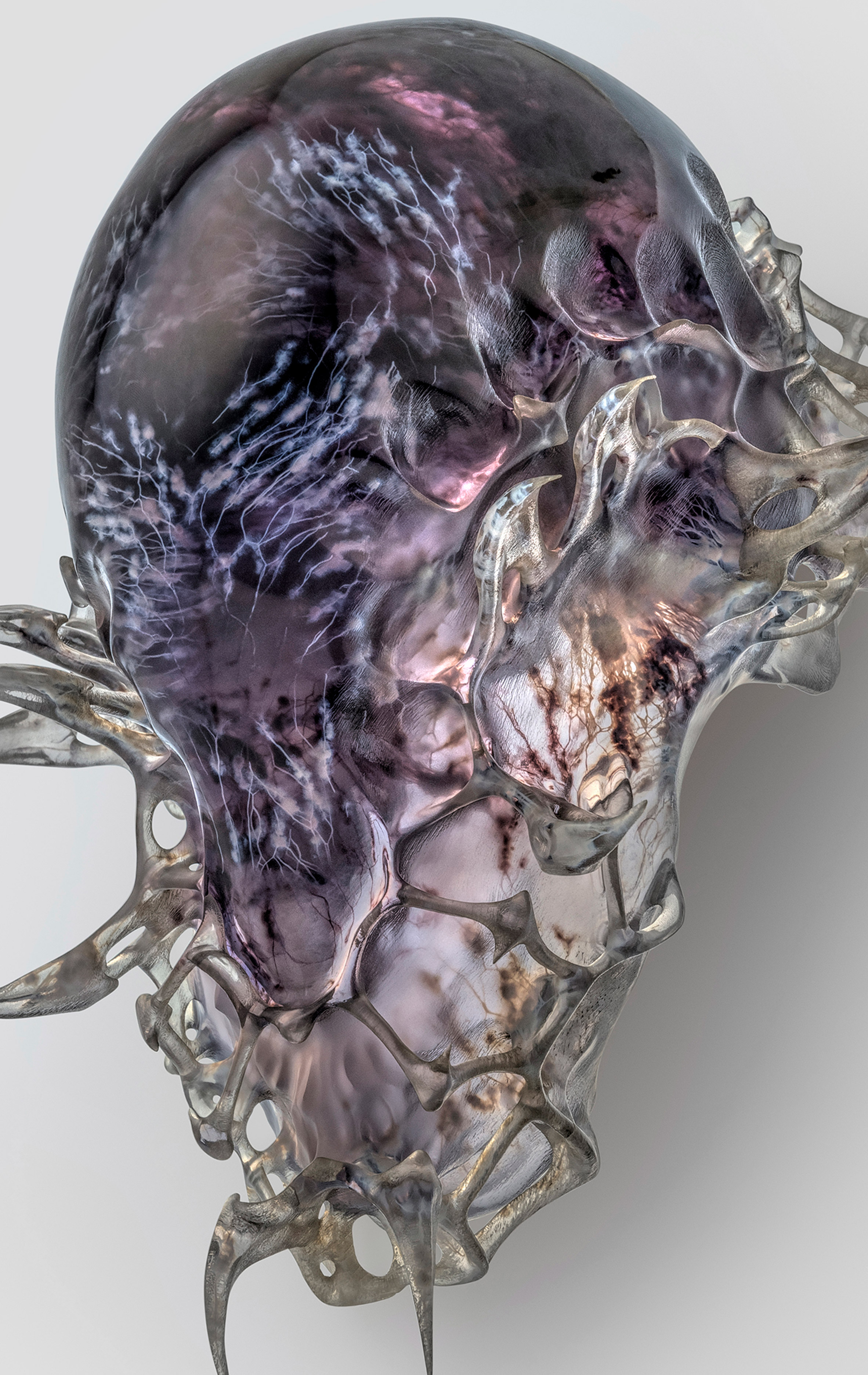

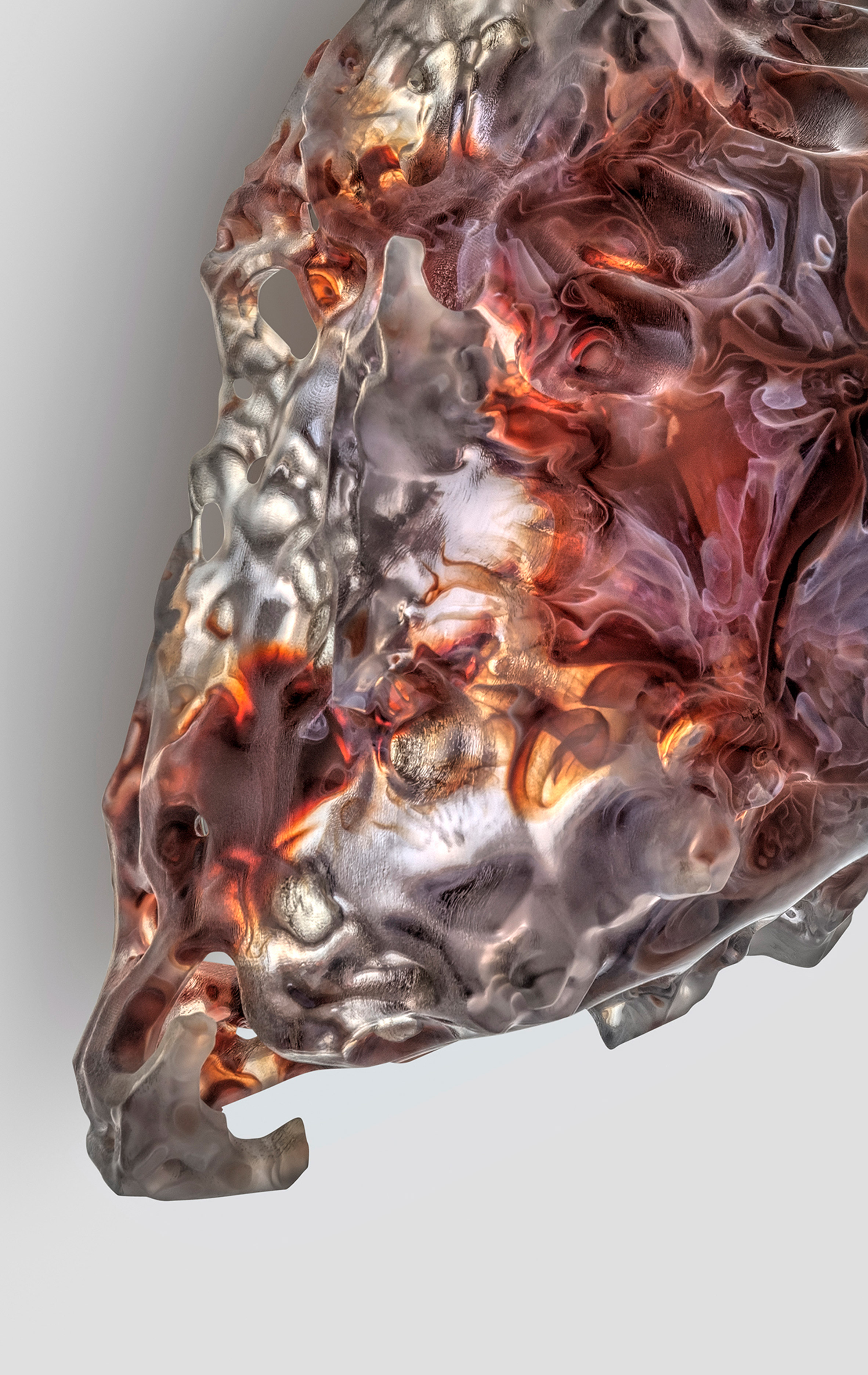

Describing her collection of 3D-printed death masks, Neri Oxman said: ‘Combined, the three series represent the transition from life to death, or death to life depending on one’s reading of the collection.’ She added: ‘The inner structures are entirely data driven and are designed to match the resolution of structures found in nature’ (Morby, 2016).

First, data are generated for three main areas: a heat map of the last breath, a map of the wearer’s face, and the path that air takes as it flows across the face. Software then applies the data to the death-mask shape, which is turned into a three-dimensional design before being 3D-printed. Real biological material, such as cells and pigment, is also incorporated into the work.

Altered nature

During my research, I also found another artist’s series of works: Davide Quayola’s Landscape Paintings (2015).

I think this work is a great example of altering nature. I believe he uses some form of frame differencing to turn the movement of the wind into different images and videos. The result looks like a gorgeous old oil painting: the image itself is beautiful enough, and the artist does not need to say anything! I also find the work more intriguing when it is exhibited in nature, because the audience can see and feel real nature combined with the ‘altered nature’ in the work. This is very like mimicry in nature, and also like the pseudo-environment in human society: real nature and ‘fake’ nature combined.
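The frame-based approach guessed at above is commonly called frame differencing: subtract one video frame from the next and keep the pixels that changed. The minimal Python/NumPy sketch below only illustrates that general technique. It is not Quayola’s actual method, which this text does not document. The function name `frame_difference` and the default `threshold` of 30 are hypothetical choices.

```python
import numpy as np


def frame_difference(prev_frame: np.ndarray, next_frame: np.ndarray,
                     threshold: float = 30.0) -> np.ndarray:
    """Return a boolean mask of pixels whose brightness changed by more than `threshold`.

    Frames may be 2-D grayscale or 3-D (height, width, channels) uint8 arrays.
    The default threshold is a hypothetical value, not taken from any artwork.
    """
    # Convert to float so subtracting uint8 values cannot wrap around.
    prev = prev_frame.astype(np.float64)
    nxt = next_frame.astype(np.float64)
    if prev.ndim == 3:
        # Average the colour channels into one brightness value per pixel.
        prev = prev.mean(axis=2)
        nxt = nxt.mean(axis=2)
    return np.abs(nxt - prev) > threshold


if __name__ == "__main__":
    a = np.zeros((4, 4), dtype=np.uint8)
    b = a.copy()
    b[1, 2] = 200  # one pixel "moves"
    print(int(frame_difference(a, b).sum()))  # 1
```

The resulting mask marks only what moved between frames. A piece driven by wind could, for example, use it to decide where an image is allowed to change.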

My own practice with bio data

First, I downloaded some insect slow-motion videos from the Internet and photographed some flowers myself.

Second, I resized the insect video and the flower image to the same dimensions, tracked the insect’s colour in each frame, and used the same positions on the flower image.

Third, I generated a new slit-scan image that contains the insect’s movement data.
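The three steps above can be sketched in Python with NumPy, assuming each video frame and the flower photo are already loaded as RGB arrays of the same size. The project itself was written in Xcode, adapting the slit scanner from Workshops in Creative Coding. This is therefore a re-expression of the idea, not the author’s code. The function names, the Euclidean RGB `tolerance` of 40, and the choice of a single fixed slit column are all hypothetical.

```python
import numpy as np


def color_mask(frame, target_rgb, tolerance=40.0):
    """True where a pixel lies within `tolerance` (Euclidean RGB distance) of target_rgb."""
    diff = frame.astype(np.float64) - np.asarray(target_rgb, dtype=np.float64)
    return np.linalg.norm(diff, axis=2) < tolerance


def flower_at_insect(frame, flower, target_rgb, tolerance=40.0):
    """Copy flower pixels only at the positions where the insect colour was tracked."""
    if frame.shape != flower.shape:
        raise ValueError("insect frame and flower image must be the same size")
    out = np.zeros_like(flower)
    mask = color_mask(frame, target_rgb, tolerance)
    out[mask] = flower[mask]
    return out


def slit_scan(frames, column):
    """Take one vertical slit (pixel column) from every frame and lay them side by side.

    The result has shape (height, number_of_frames, 3), so x now encodes time.
    """
    return np.stack([f[:, column] for f in frames], axis=1)


if __name__ == "__main__":
    h, w = 4, 6
    flower = np.zeros((h, w, 3), dtype=np.uint8)
    flower[:] = (30, 160, 60)  # stand-in for a flower photo
    insect = (200, 50, 50)     # hypothetical tracked insect colour
    frames = []
    for t in range(w):
        frame = np.zeros((h, w, 3), dtype=np.uint8)
        frame[:, t] = insect   # the "insect" moves one column per frame
        frames.append(frame)
    composites = [flower_at_insect(f, flower, insect) for f in frames]
    scan = slit_scan(composites, column=2)
    print(scan.shape)  # (4, 6, 3)
```

Each output column is one moment in time. The finished image therefore records when the insect’s colour crossed the chosen slit, filled with the flower’s colours at those positions.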

My own practice with style transfer

At first I was really looking forward to seeing what my new image would be. But the result was not to my taste, and not really successful. I then changed the style to another image, and the result was similar. I started to suspect that the reason was that the style image’s colours were very different from the original’s, so the new image resembled neither the original image nor the style image. Then I tried using two images with similar colours to generate a new one, and it worked! I could see both images in the result, and I really liked it.

This is the original code I used: https://colab.research.google.com/github/tensorflow/models/blob/master/research/nst_blogpost/4_Neural_Style_Transfer_with_Eager_Execution.ipynb#scrollTo=SSH6OpyyQn7w

Because the notebook runs in an online coding environment, I used https://imgbb.com/ to upload my photos and get a link for each one. I then pasted each image link into the notebook and referred to the image by its name.
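The notebook linked above follows Gatys-style neural style transfer. In this method, “style” is measured by Gram matrices: the correlations between feature channels, averaged over every position in the image. In the notebook the features come from VGG19 layers. The NumPy sketch below applies the same Gram-matrix style loss directly to RGB pixels as a toy stand-in, where the Gram matrix reduces to how the colour channels co-occur. This is one plausible reading of the colour problem described above, not a diagnosis of the original runs. The helper names and toy images are hypothetical.

```python
import numpy as np


def gram_matrix(features):
    """(height, width, channels) feature map -> (channels, channels) Gram matrix.

    Each entry is the average product of two channels over all positions, so it
    keeps which channels co-occur but discards where they occur.
    """
    h, w, c = features.shape
    flat = features.reshape(h * w, c)
    return flat.T @ flat / (h * w)


def style_loss(generated_features, style_features):
    """Mean squared difference between the Gram matrices of two feature maps."""
    g = gram_matrix(generated_features)
    s = gram_matrix(style_features)
    return float(np.mean((g - s) ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Toy "images" in [0, 1]: one warm (red-heavy), one cool (blue-heavy).
    warm = rng.uniform(0.6, 1.0, size=(8, 8, 3)) * np.array([1.0, 0.4, 0.2])
    cool = rng.uniform(0.6, 1.0, size=(8, 8, 3)) * np.array([0.2, 0.4, 1.0])
    print(style_loss(warm, warm))  # 0.0
    # A slightly darker copy of the same palette stays much closer in "style".
    print(style_loss(warm, warm * 0.95) < style_loss(warm, cool))  # True
```

When the style image’s palette is far from the content image’s, the style term pulls the result towards very different channel statistics. That fits the observation that the output looked like neither source, while two images with similar colours let both remain visible.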

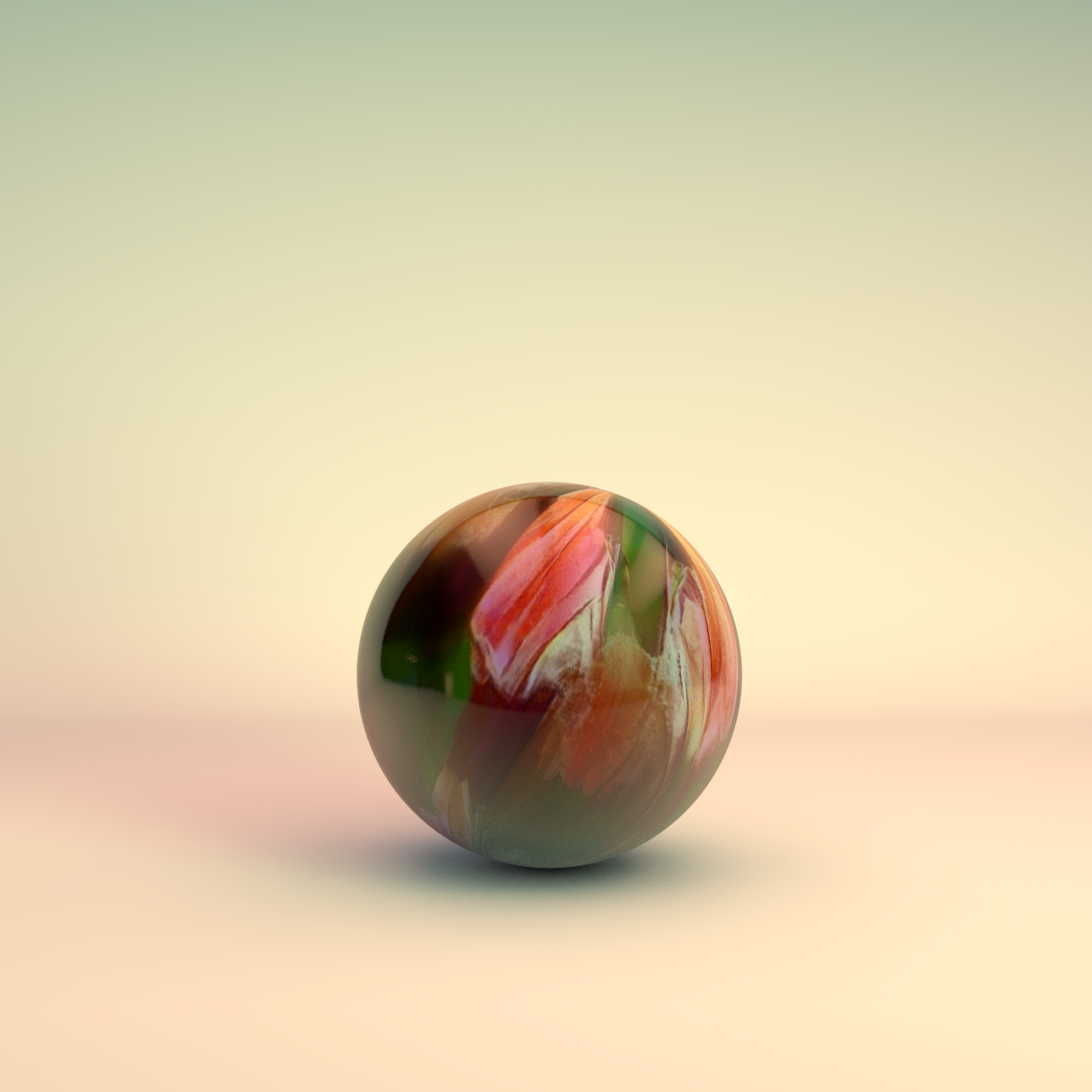

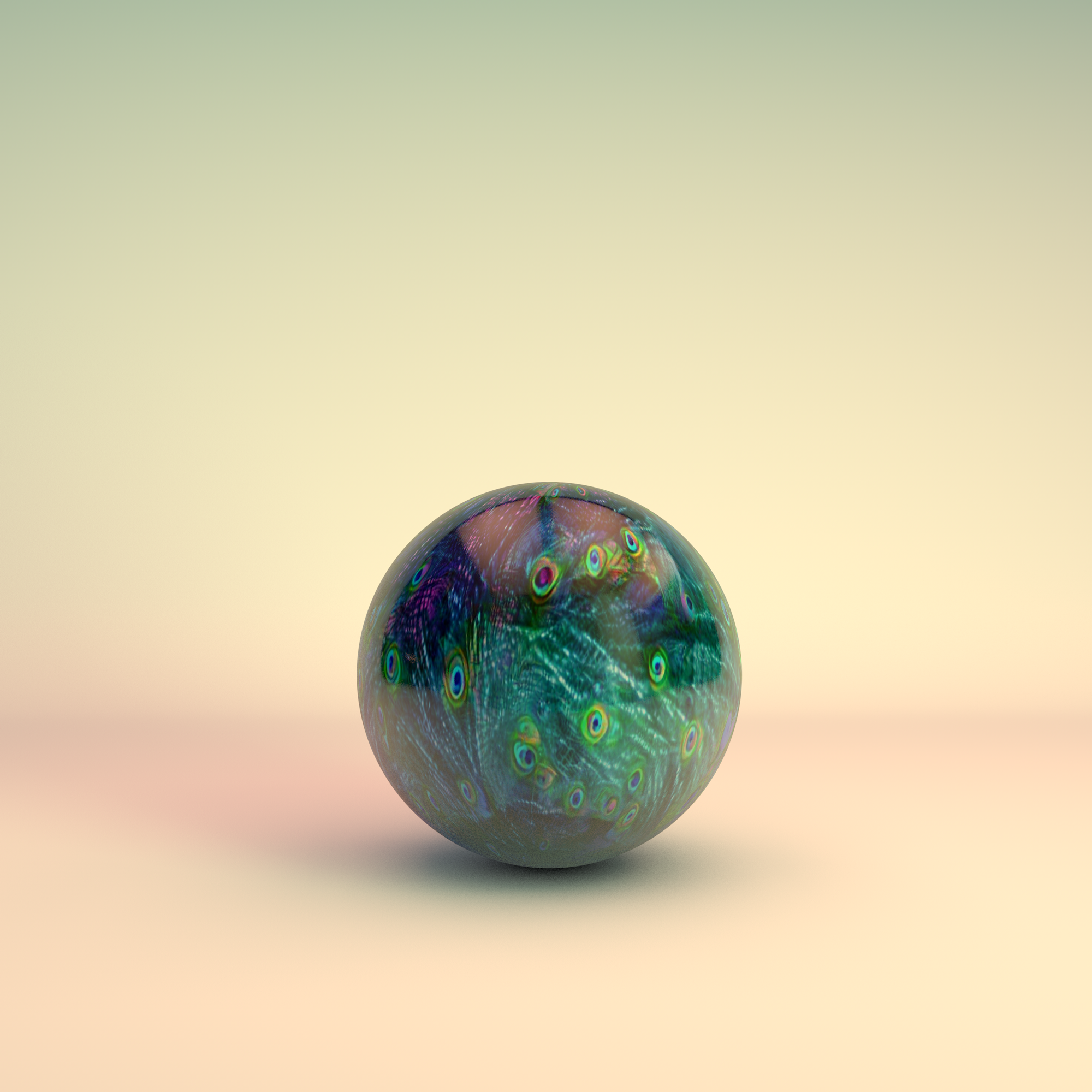

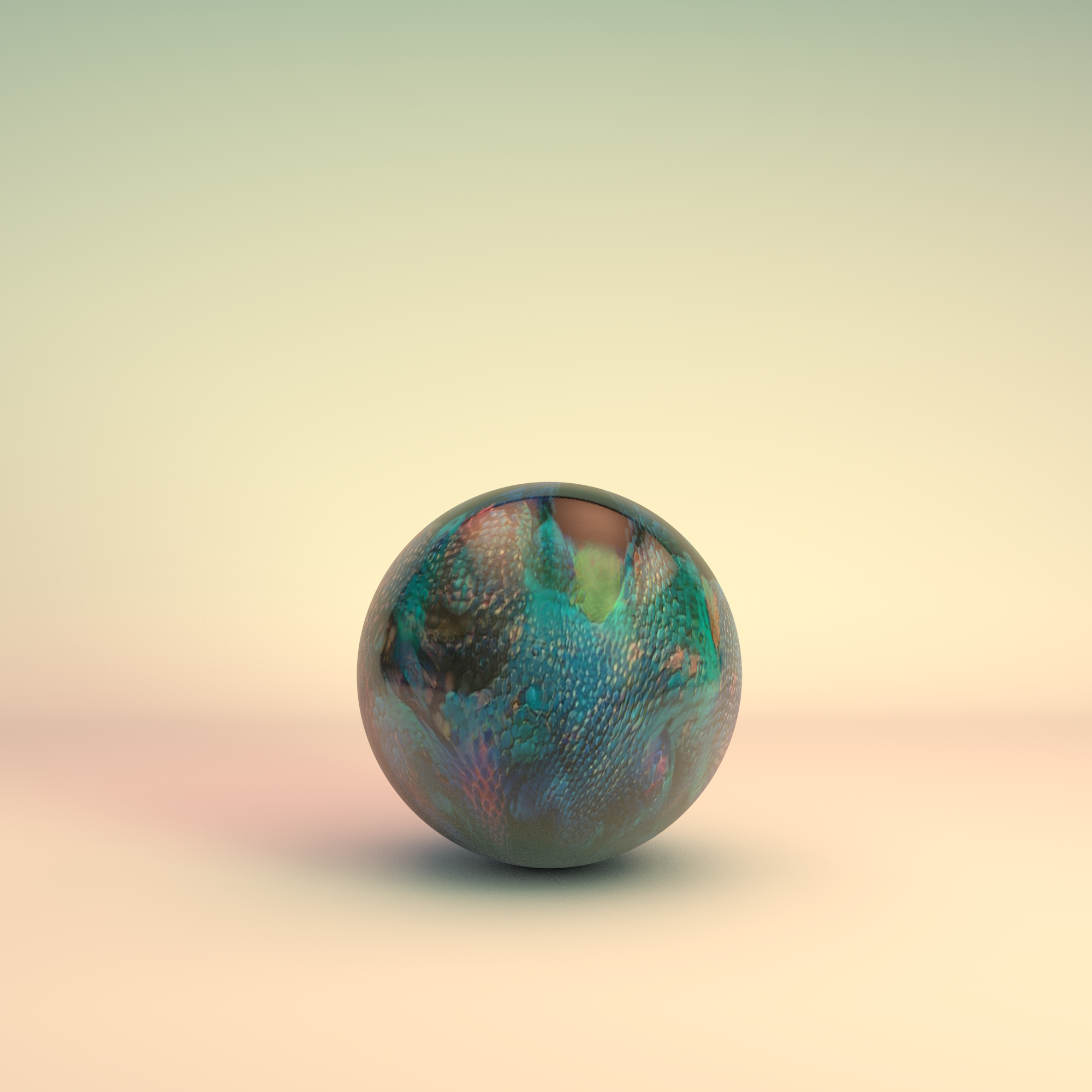

My practice with 3D models: texture

I took the image produced by style transfer and applied it to a 3D model as a texture.

See the video below for more details.

Next step: back to nature

Overall, my practice can be separated into three parts:

1. Collect data from real nature: collect data from biology and use it to generate shapes or patterns.

2. Altered nature: use digital methods to combine real nature with ‘fake’ nature.

3. Return to nature: deal with the relationship with nature, and influence nature.

I have now finished parts 1 and 2, so my next step is to put the results back into nature. I may 3D-print them or place a small screen inside a real flower, to find a relationship between the ‘fake nature’ and real nature.

Future development

This is just the beginning of my project. I have now found a way to handle the whole process by myself, but there are some points I still need to practise a lot.

1. Try to process video with machine learning

I have filmed a lot of video, but so far I have not found a way to process my own footage!

2. Try to process sound with machine learning

3. Make my own 3D models

The 3D model I used in this practice is from www.c4d.cn, and I used it only for testing. For my own work, I want a more abstract 3D model that I make myself. I also need to research it further, because the shape has to link to mimicry.

Some related artworks by Andy Lomas and Nick Ervinck

References

1. Lippmann, Walter. Public Opinion. New York: Harcourt, Brace and Company, 1922.

2. Morby, A. (2016), ‘Neri Oxman creates 3D-printed versions of ancient death masks’. Dezeen. https://www.dezeen.com/2016/11/29/neri-oxman-design-3d-printed-ancient-death-masks-vespers-collection-stratasys/ [Accessed on 7th May 2019].

3. https://ml5js.org/docs/style-transfer-image-example/ [Accessed on 9th May 2019].

4. https://colab.research.google.com/github/tensorflow/models/blob/master/research/nst_blogpost/4_Neural_Style_Transfer_with_Eager_Execution.ipynb#scrollTo=SSH6OpyyQn7w [Accessed on 9th May 2019].

5. https://www.tumblr.com/dashboard/blog/iwuziwei [Accessed on 10th May 2019].

6. https://research.google.com/seedbank/seed/d_feature_visualization [Accessed on 10th May 2019].

7. https://youtu.be/WrJ0ayfAUNo [Accessed on 10th May 2019].

8. https://www.mitpressjournals.org/doi/pdf/10.1162/isal_a_00215 [Accessed on 2019].

9. http://nickervinck.com/en/works/detail-2/noitals [Accessed on 2016].

Acknowledgements

Written in Xcode 10.3

Some code is adapted from Theo Papatheodorou:

- Slit scanner: from week 6 of Workshops in Creative Coding

- Good features to track: from week 13 of Workshops in Creative Coding
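“Good features to track” refers to the Shi-Tomasi corner detector. It scores each pixel by the smaller eigenvalue of the local gradient structure tensor, and OpenCV exposes it as `cv2.goodFeaturesToTrack`. The self-contained NumPy sketch below computes that score, assuming a 2-D grayscale array and a 3×3 summing window. Unlike OpenCV, it does no minimum-distance filtering or non-maximum suppression. The function names are hypothetical, and this is not the workshop code.

```python
import numpy as np


def shi_tomasi_response(gray, window=1):
    """Shi-Tomasi score: the smaller eigenvalue of the local structure tensor.

    `gray` is a 2-D array; the tensor is summed over a (2*window+1)^2 neighbourhood.
    """
    gy, gx = np.gradient(gray.astype(np.float64))  # derivatives along rows, then columns
    ixx, iyy, ixy = gx * gx, gy * gy, gx * gy

    def box_sum(a):
        # Sum each tensor entry over the neighbourhood (border pixels are repeated).
        padded = np.pad(a, window, mode="edge")
        out = np.zeros_like(a)
        size = 2 * window + 1
        for dy in range(size):
            for dx in range(size):
                out += padded[dy:dy + a.shape[0], dx:dx + a.shape[1]]
        return out

    sxx, syy, sxy = box_sum(ixx), box_sum(iyy), box_sum(ixy)
    # Smaller eigenvalue of the 2x2 matrix [[sxx, sxy], [sxy, syy]].
    return (sxx + syy) / 2 - np.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)


def good_features(gray, max_corners=10, quality=0.1, window=1):
    """(row, col) of the strongest corners whose score is >= quality * best score."""
    score = shi_tomasi_response(gray, window)
    best = score.max()
    if best <= 0:
        return []  # flat image: no corners
    rows, cols = np.nonzero(score >= quality * best)
    order = np.argsort(-score[rows, cols])[:max_corners]
    return list(zip(rows[order].tolist(), cols[order].tolist()))


if __name__ == "__main__":
    img = np.zeros((20, 20))
    img[5:15, 5:15] = 1.0  # bright square with corners at rows/cols 5 and 14
    print(sorted(good_features(img, max_corners=4)))
    # [(5, 5), (5, 14), (14, 5), (14, 14)]
```

Straight edges score close to zero because only one gradient direction is present. Corners score highly because both directions change, which is why these points are stable to follow from frame to frame.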

Machine learning with style transfer

Google seedbank: https://research.google.com/seedbank/seed/neural_style_transfer_with_tfkeras

Some online experiments: https://deepart.io/

Special thanks to:

- Pete Mackenzie

- Taoran Xu

- Theo Papatheodorou

- Rebecca Fiebrink

- Xinyu Ma

- Andy Lomas

- Atau Tanaka