The Nowhere Gaze

Exposing the objectifying bias in a machine learning data set

Author: Eevi Rutanen

“Neither God nor tradition is privileged with the same credibility

as scientific rationality in modern cultures.”

(Harding, 1986)

Since the Enlightenment, empirical science and positivist belief in the scientific method have been among the main organising forces of Western societies. Driven by the ideal that rationality can reveal some external and absolute truths of the world, practitioners of the dominant epistemologies strive to look from the outside in. But is this even possible? Or is it just another attempt at the “god trick” (Haraway, 1988)?

An increasing amount of contemporary knowledge production is achieved by computational means: machine learning algorithms and data-mining technologies discover patterns in information, draw conclusions and make predictions about the material world. Dan McQuillan (2017) points out that modern data science promises to provide even more objective accounts than traditional empiricism, since it works on the aspects of experience that can be numerically quantified. Operating in the realm of data, machine learning poses as a neoplatonic paradigm which “appears to reveal a hidden mathematical order in the world that is superior to our direct experience” (McQuillan, 2017).

Machine learning algorithms learn from data sets where the input (say images of cats) and output (the word “cat”) are already known. The algorithm independently decides how to weight the features (in this case certain constellations of pixels) that match the inputs to the outputs. However, while working in the platonic world of numerical abstractions, the reasoning of the algorithm can sometimes become completely obscure to humans (McQuillan, 2015). The risk of being black-boxed by an incomprehensible algorithm intensifies when predictive algorithms move from laboratories to offices, courthouses and hospitals.

McQuillan (2017) notes that machine learning not only creates knowledge but also directly acts on it. This makes the position of the object of the knowledge possibly even more precarious than that of traditional empiricism which seeks to distance itself from implementation. Even without a deeper understanding of their inner workings, algorithms are increasingly being used to govern human lives: parole verdicts, mortgage deals and medical diagnoses are executed based on algorithmic predictions, since how could pure data be anything else but objective?

Despite the sublime promise of the view from above, algorithmic governance can be problematic for various reasons. McQuillan (2015) argues that data science often confuses correlation with causation when trying to detect patterns in statistics, and that the opaqueness of algorithmic decision making leads to the abstraction of accountability and responsibility. Sometimes the opaqueness can be intentional as well: commercial corporations like Google and Facebook are reluctant to be transparent, since the algorithms they deploy are valuable trade secrets (Seaver, 2013).

However, even the most explicit and responsibly handled machine learning algorithms cannot turn bad input into good output (McQuillan, 2017). Several precedents (see Crawford & Schultz, 2014; O’Neil, 2016; AI Now Institute, 2017; Algorithmic Watch, 2017) have already shown how algorithm-based predictions have been infected by human prejudice through biased data sets. For example, ProPublica’s research (Angwin et al., 2016) revealed that the software used by judges to predict defendants’ likelihood of reoffending was biased against minorities because the data used to train it was discriminatory to begin with.

These kinds of “allocative harms” (Bornstein, 2017), which are clearly in conflict with most expectations about judicial fairness, are perhaps easier to recognise and condemn than the so-called “representational harms” (Bornstein, 2017) that algorithms also pose. Sandra Harding (1986) describes this dichotomy as the difference between “bad science” and “science-as-usual”: if knowledge is always infected with the values of the dominant groups in a culture, maybe the biased view best represents the current condition? Safiya Umoja Noble (2012, 2013) as well as Paul Baker and Amanda Potts (2013) have researched the racism and sexism in the auto-suggestions of the Google search engine. Both investigations recognise the problematic way in which algorithms can reinforce discriminatory stereotypes, but also acknowledge the difficulty in preventing these prejudiced representations. Baker and Potts summarise the dilemma as follows:

[I]s there an over-riding moral imperative to remove these auto-suggestions? Should content-providers ‘protect’ their users or should they simply reflect the phenomena that people are interested in? And if content providers do choose to manually remove certain suggestions from auto-completion algorithms, who decides which suggestions are inappropriate? […] Decisions to remove certain questions could be interpreted by some Internet users as censorship and result in a backlash.

The appendix of this essay presents a study that examines the biased nature of ImageNet, one of the largest and most used data sets of natural images for machine learning (Deep Learning 4J, 2017; Deep Learning, 2017). The results reveal that ImageNet’s representation of women is objectifying and sexist: 41% of the image category names referring to women were objectifying or offensive, and 18% of the 2416 images for the categories “woman, adult female” and “female, female person” were sexually objectifying (see the appendix for a more in-depth account of the research).

According to Catharine MacKinnon (1987) this kind of objectification is a direct consequence of the compulsion of hegemonic empiricism to separate the scientific object from the subject:

[The] relationship between objectivity as the stance from which the world is known and the world that is apprehended in this way is the relationship of objectification. Objectivity is the epistemological stance of which objectification is the social process, of which male dominance is the politics, the acted out social practice. That is, to look at the world objectively is to objectify it.

The “male gaze” is a well-documented phenomenon in the arts and popular culture (see Mulvey, 1975; Kuhn, 1982; Berger, 1973), but does similar violence through vision take place in the practices of science? Haraway (1988) argues that seeing the scientific object as a passive resource for knowledge instead of an actor with agency is just an excuse for dominating interests. She continues to state that vision itself is always a question of power—of who gets to see, and what claims they make from those observations.

The bias of ImageNet is harmful not only for being “bad science”, but for its propensity to become a vicious cycle of representation and reification. Rae Langton (1993) notes that the objectifier always arranges the world in order to fit their beliefs, instead of arranging their beliefs to fit the way the world actually is. In the same vein, Harding (1986) argues that the representations of gender produced under the forced set of dualisms on which traditional empiricism is based (culture vs. nature, rational mind vs. irrational body, public vs. private, objective vs. subjective, male vs. female) become just the way things “naturally” are. Dominant cultural values shape the technologies produced by those cultures (Nissenbaum, 2001), which in turn shape future values. Wajcman (2010) reminds us that “We live in a technological culture, a society that is constituted by science and technology, and so the politics of technology is integral to the renegotiation of gender power relations.”

Considering that the head scientist of ImageNet is a non-white woman, is it less probable that the bias in the data set is born from bigotry, or even from the sort of “thoughtlessness” (see note 1) that McQuillan (2017) blames data science for, and more probable that it stems from a reluctance to mix politics with science? Perhaps with their eyes fixed on the prize (verifiable image data for all!) the creators of ImageNet did not see where they were standing, and what implications that might have. Harding asserts that all rationalised claims to objectivity merely provide cover for the unwillingness to scrutinise the underlying values and power structures. However, the presumed objectivity turns out to be an ideology in itself (Harding, 1986): a Faustian trade of one’s identity for knowledge. Harding believes that the objectivity of science can be increased only with politics for emancipatory social change. But without an identity there is no politics or perspective, and without perspective one cannot have a clear view of the world. The rational “view from nowhere” has no focus and no standpoint, and therefore the gained knowledge is unsituated, unlocatable and thus unaccountable (Haraway, 1988).

But how to make knowledge claims that are situated, located and accounted for? As Don Heider and Dustin Harp (2010) admit in their research on online pornography, “It is fairly easy to look at existing systems and determine shortfalls; it is more difficult to conceptualize what a more democratic or pluralistic system would include.” In the same vein, it is not simple to suggest practical remedies for better accounts regarding ImageNet. One solution could be to promote stricter vetting of the image content. Despite the problematic connotations of censorship, even Google applies a limited number of removal policies to autocomplete results that contain “pornography, violence, hate speech, and terms that are frequently used to find content that infringes copyright” (Baker & Potts, 2013). As Baker and Potts point out, some websites have also successfully introduced an option for users to report problematic content. Naturally, the focus should not be on damage control alone, but on critical scrutiny of the data set’s methodology and fundamental purpose. Are images found online an adequate representation of the material world? Is it essential to exhibit offensive vocabulary, including racist slurs, even though they might have a historical meaning in a lexical context? How can the image data be maintained if it consists of links to third-party websites? Furthermore, it goes without saying that more attention should be paid to plurality, diversity and participation: not placing the object under a microscope but letting it speak for itself.

Does this then mean that the view from an oppressed body is an innocent position, a panacea for a “true” objectivity? Harding (1986) states that men’s dominating position limits their vision, and women’s subjugated position provides the possibility of a more complete understanding, but how can we simultaneously overcome the subject-object division and privilege women epistemically? If “[a]ll inside-outside boundaries in knowledge are power moves, not moves towards the truth” (Haraway, 1988), what allows us to think that the perspectives of the objectified are preferable? The answer is not to exchange male-centric science for a female-centric one, but to understand both of these positions in order to build one free from gender loyalties (Harding, 1986). As in Berger’s (1973) account of the visual arts, the problem with representations of women in patriarchal epistemology is not that the feminine is essentially different from the masculine, but that the spectator is almost always assumed to be male. As ImageNet proves, sexist society produces sexist science, so we can only hope that an emancipatory society will bring out emancipatory empiricism.

It is easy, however, to be lured by the all-seeing promise of objectivity, as I make my own knowledge claims in the analysis of this particular data set. While selecting the problems for inquiry, and defining what is problematic about these phenomena, I can only be informed by my own experience and standpoint. Luckily Haraway (1988) clears our conscience of the eventual controversy of the subject-object dichotomy:

Feminist objectivity makes room for surprises and ironies at the heart of all knowledge production; we are not in charge of the world. We just live here and try to strike up noninnocent conversations by means of our prosthetic devices, including our visualization technologies.

There are no unmediated, perfect accounts, no transcendence to rationality from my partial version of the story. Haraway (1988) states that “One cannot ‘be’ either a cell or molecule—or a woman, a colonised person, laborer, and so on—if one intends to see and see from these positions critically.” So we must become something else, something plural, something fantastic, something queer. Haraway goes on to propose that the feminist science project must be read as an argument for the fanciful idea of the transformative power of knowledge. According to her, science has always been utopian and visionary. So we must change our visualising practices: from seeing with the violence of an objectifying gaze, to dreaming up and fantasising pluralistic futures. To escape the totalising “algorithmic preempting of the future” of which McQuillan (2015) warns us, we should perhaps not try to foresee and predict with the false accuracy of rationality, but adopt the methodologies of speculation: “telling alternative tales about particular actualities […] making available new ways to situate and relate to the empirical [and developing] sensibilities that take futures seriously as possibilities” (Wilkie, Savransky & Rosengarten, 2017). As Wajcman (2010) quotes Whitehead: “‘things could be otherwise’, technologies are not the inevitable result of the application of scientific and technological knowledge”. It is on all of us to imagine these novel outcomes. Because in the end, all epistemologies, traditional or radical; patriarchal or feminist; positivist or speculative, have the same goal: “better accounts of the world, that is, ‘science’” (Haraway, 1988).

Research Appendix

Background

ImageNet is one of the largest and most used natural image datasets for training computer vision algorithms (Deep Learning 4J, 2017; Deep Learning, 2017). It was started by the Vision Lab research team at Stanford University in 2008, and it currently has over 14 million human-annotated images, which are organised according to the WordNet hierarchy (ImageNet, 2016). WordNet is a lexical database developed at Princeton University. Words in the hierarchy are grouped into sets of cognitive synonyms, called synsets, which are interlinked based on their semantic relations (WordNet, 2018).

The ImageNet database was constructed in a two-phase process. In the first phase, images corresponding to the words in the WordNet hierarchy were collected through different search engines and image sites, like Yahoo image search, Google image search and Flickr. This process was repeated in multiple languages using multiple synonyms. In the second phase, images were annotated by humans, using Amazon’s crowdsourcing service Mechanical Turk (Fei-Fei, 2010). Mechanical Turk provides a marketplace for work that requires human intelligence: through its API, companies can hire a workforce to perform simple tasks which are compensated with micropayments (Amazon Mechanical Turk, 2018).

The crowdsourced workers were instructed to pick out the images representing a given word from a group of images retrieved online (Fei-Fei, 2010). However, they could only make positive identifications and had no option, for example, to report the presumably massive amount of offensive or disturbing content yielded by random online sources. Also, every image was annotated only once, by a single person, so there were no procedures for verifying the quality of the selected images.

The researchers at ImageNet have not made any claims about the social implications of their collection methods or the possible underlying biases in the dataset. However, they have carried out some analysis of the demographics of the Mechanical Turk workers involved in the project: according to their research, the largest demographics by nation are the USA (46.8%, of which 65% female and 35% male) and India (34%, of which 70% male and 30% female) (Fei-Fei, 2010). Of the ImageNet research team, 33% are female and 67% male.

Considering the problematic methodology used in the construction of the dataset and the possible lack of diversity in the workforce behind it, it is not surprising that ImageNet seems to display signs of bias. Browsing through the synsets for “woman” and “female”, a large number of the images appear objectifying and poorly representative, and many of the WordNet synonyms seem to be sexist and derogatory (see image 1).

Image 1: Screen capture of ImageNet's images for "woman, adult female" (ImageNet, 2016).

Hypothesis

The ImageNet database is biased and discriminatory in its representations of women.

Methodology

The research used qualitative content analysis to recognise objectification in the images and synonyms used to describe women in the ImageNet database. It was conducted in March 2018 using the content of the ImageNet database at that time.

Synonym data

The synset “female, female person” in the WordNet hierarchy has 150 subsets of synonyms, and counting all the words in these subsets and their respective children subsets there are altogether 263 terms for “female person”.
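The counting step described above can be sketched as a walk over the hierarchy. The following is only a minimal illustration in Python, assuming a hand-written toy hierarchy: the `TOY_HIERARCHY` dictionary and the `descendant_terms` helper are hypothetical and are not taken from WordNet or from the tooling used in this research. It collects every term found in the synsets below a given synset, visiting each synset only once.

```python
# Hypothetical toy data: each synset lists its own terms and its child synsets.
# This is NOT actual WordNet content, only an illustration of the structure.
TOY_HIERARCHY = {
    "female, female person": {
        "terms": ["female", "female person"],
        "children": ["woman, adult female", "girl"],
    },
    "woman, adult female": {
        "terms": ["woman", "adult female"],
        "children": ["matron"],
    },
    "girl": {"terms": ["girl"], "children": []},
    "matron": {"terms": ["matron"], "children": []},
}


def descendant_terms(synset, hierarchy, seen=None):
    """Collect the terms of every synset below `synset`, excluding its own."""
    if seen is None:
        seen = set()
    terms = set()
    for child in hierarchy[synset]["children"]:
        if child in seen:  # a synset reachable via two parents is counted once
            continue
        seen.add(child)
        terms.update(hierarchy[child]["terms"])
        terms.update(descendant_terms(child, hierarchy, seen))
    return terms


terms = descendant_terms("female, female person", TOY_HIERARCHY)
print(len(terms))  # 4 terms in this toy example
```

Collecting the terms into a set means that a word appearing in several subsets is counted once; whether the figure of 263 was deduplicated in this way is not stated in the research, so that choice is an assumption of the sketch.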

Research instruments for synonyms

Discriminatory synonyms were recognised based on the definition of objectification by Nussbaum (1995) and Langton (2008) (see note 2). Words that correspond to at least one of the following three categories were marked as discriminatory:

1. terms that reduce a person to their looks

2. terms that reduce a person to their sexuality

3. terms that are otherwise offensive or derogatory

Results for synonyms

263 female synonyms

reducing to sexuality: 50

reducing to looks: 25

otherwise negative, derogatory or offensive: 32

total: 107 (41%)

186 male synonyms

reducing to sexuality: 14

reducing to looks: 5

otherwise negative, derogatory or offensive: 10

total: 29 (16%)

The analysis of the synonyms showed that 41% of the words linked to “female, female person” were objectifying or offensive, while for the words for “male person” the number was 16%. However, the levels of objectification in the synonyms for “female person” and “male person” are not directly comparable, since many of the terms for men that in essence are objectifying (for example “stud” or “hunk”) still carry more positive and active connotations than most of the female counterparts (for example “minx” or “floozy”). It is also notable that several of the male synonyms (for example “mother’s boy”, “fop”) are linked to characteristics that are traditionally seen as feminine, and are therefore used in a derogatory sense.
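The percentages reported above can be re-derived from the category counts. The short Python sketch below does only that arithmetic; the dictionary layout and the `flagged_share` helper are illustrative names, not part of the original analysis, and the sketch assumes that each flagged term was counted under exactly one category (the counts sum to the reported totals, which is consistent with that reading).

```python
# Counts as reported in the results for synonyms above.
FEMALE = {"total_terms": 263, "sexuality": 50, "looks": 25, "otherwise_offensive": 32}
MALE = {"total_terms": 186, "sexuality": 14, "looks": 5, "otherwise_offensive": 10}


def flagged_share(counts):
    """Return (number of flagged terms, percentage of all terms)."""
    flagged = sum(v for k, v in counts.items() if k != "total_terms")
    return flagged, 100 * flagged / counts["total_terms"]


female_flagged, female_pct = flagged_share(FEMALE)
male_flagged, male_pct = flagged_share(MALE)
print(f"female: {female_flagged} terms, {female_pct:.1f}%")  # female: 107 terms, 40.7%
print(f"male: {male_flagged} terms, {male_pct:.1f}%")  # male: 29 terms, 15.6%
```

Rounded to whole numbers, these give the 41% and 16% stated in the results.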

Image data

The analysis was carried out for the images in the synsets “female, female person” (1371 images) and its largest child synset “woman, adult female” (1045 images). Other subsets were not taken into account because of their varying number of images. ImageNet does not own or store the images in the database, but only provides the links to images on other websites. Because many of the images were collected as long as ten years ago, several of the links were outdated, and thus inaccessible. However, the ImageNet website still provided thumbnails of all the original images, so the analysis was conducted on them.

Research instruments for images

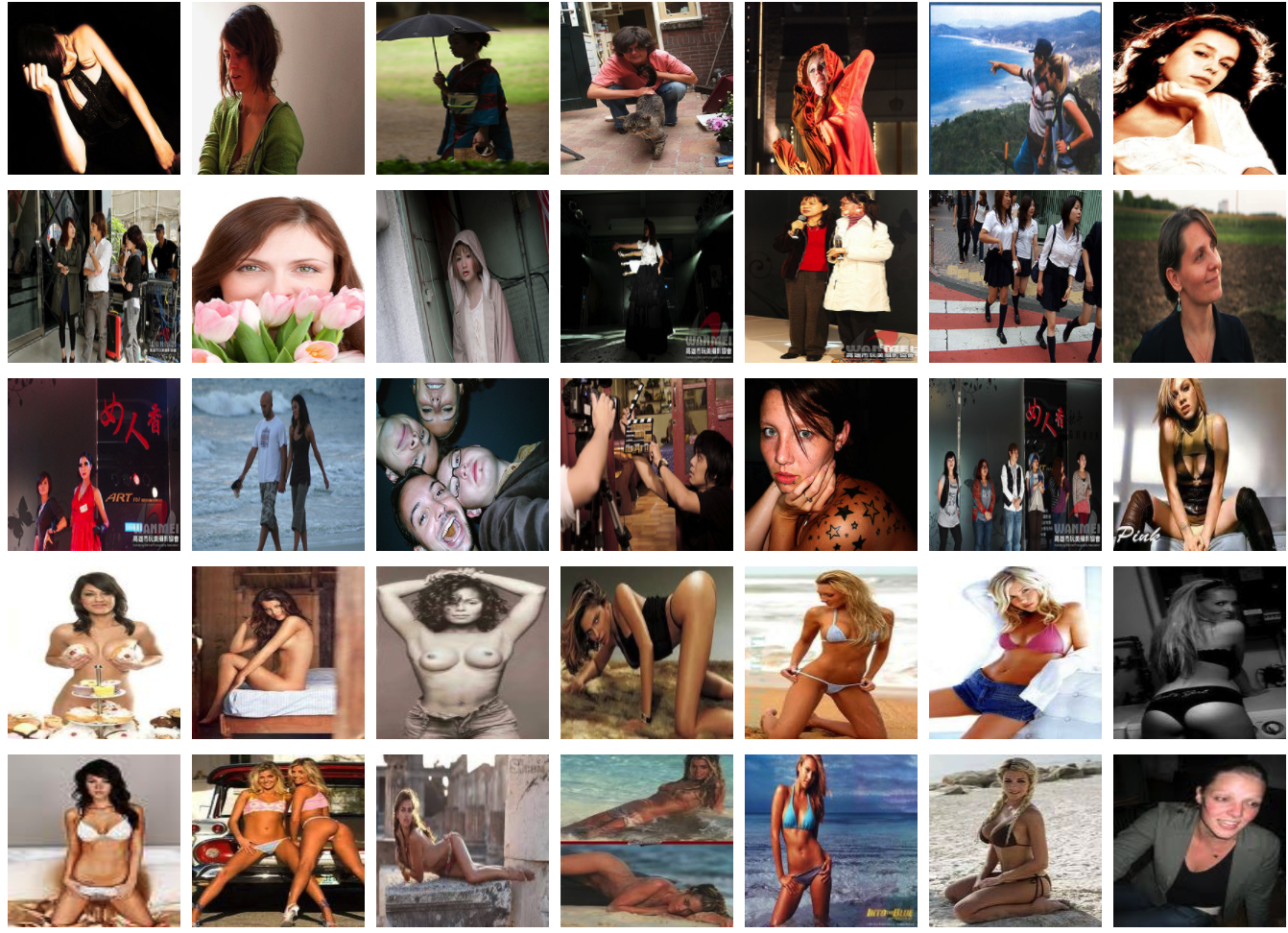

The categories for the image analysis were created based on MacKinnon’s definition of pornography (1987, see note 3), in addition to the definitions of objectification by Nussbaum (1995) and Langton (2008) (see note 2). The images that corresponded to at least one of the following categories were marked as objectifying (see image 2 for examples of categories):

1. person is presented in a state of undress but image is not explicitly sexual

2. person is reduced to appearance

3. person is reduced to body parts

4. person is presented as interchangeable with other objects

5. person is presented in postures of submission or display

6. image is sexually insinuating

7. image is sexually explicit and clearly designed for sexual pleasure

Results for images

1045 images for woman, adult female

1371 images for female, female person

in total 2416 images

1. presented in a state of undress but not explicitly sexual: 94

2. reduced to appearance: 45

3. reduced to body parts: 39

4. interchangeable with other objects: 6

5. presented in postures of submission or display: 220

6. sexually insinuating: 18

7. sexually explicit: 19

total: 441 (18%)

The analysis showed that 18% of the images of women were objectifying or discriminatory. This research did not examine the problematics around cultural beauty norms in the representations of women, but focused on the subject-object dichotomy that prioritises the male perspective.
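As a cross-check of the image results, the tally can be reproduced with a few lines of Python. This is a sketch of the arithmetic only; the variable names and the shortened category labels are illustrative and do not come from the original analysis.

```python
# Category counts as reported in the results for images above.
TOTAL_IMAGES = 1045 + 1371  # the two synsets analysed
CATEGORY_COUNTS = {
    "state of undress, not explicitly sexual": 94,
    "reduced to appearance": 45,
    "reduced to body parts": 39,
    "interchangeable with other objects": 6,
    "postures of submission or display": 220,
    "sexually insinuating": 18,
    "sexually explicit": 19,
}

flagged_total = sum(CATEGORY_COUNTS.values())
# Share of all analysed images per category, in percent.
shares = {name: 100 * n / TOTAL_IMAGES for name, n in CATEGORY_COUNTS.items()}
largest = max(CATEGORY_COUNTS, key=CATEGORY_COUNTS.get)

print(TOTAL_IMAGES, flagged_total)  # 2416 441
print(f"{100 * flagged_total / TOTAL_IMAGES:.1f}%")  # 18.3%
print(largest)  # postures of submission or display
```

The category counts add up to exactly the reported total of 441, which suggests that each image was recorded under a single category even though an image could in principle match several; that reading is an inference from the numbers, not something the results state. By the same counts, postures of submission or display account for roughly half of the flagged images (220 of 441).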

Some of the images in the dataset were not only objectifying, but suggestive of humiliation, violence and child pornography. However, it is notable that for some of the most explicitly pornographic or disturbing images the links were broken at the time of the research, and only the thumbnails were accessible. This echoes Noble’s (2012) findings about the fast-paced migration of pornographic material online. An actual ImageNet user would download a list of image URLs for a given synset instead of using the thumbnails, and thus would not necessarily encounter the most explicit material.


Image 2: Example images from the data set representing the different aspects of objectification.

Notes

1 With “thoughtlessness”, Hannah Arendt refers to the banal evil caused by the failure to think with a conscience and question the status quo.

2 Definition of objectification by Nussbaum (1995), features 8-10 extended by Langton (2008). Cited here as in Papadaki (2015):

1. instrumentality: the treatment of a person as a tool for the objectifier's purposes

2. denial of autonomy: the treatment of a person as lacking in autonomy and self-determination

3. inertness: the treatment of a person as lacking in agency, and perhaps also in activity

4. fungibility: the treatment of a person as interchangeable with other objects

5. violability: the treatment of a person as lacking in boundary-integrity

6. ownership: the treatment of a person as something that is owned by another (can be bought or sold)

7. denial of subjectivity: the treatment of a person as something whose experiences and feelings (if any) need not be taken into account

8. reduction to body: the treatment of a person as identified with their body, or body parts

9. reduction to appearance: the treatment of a person primarily in terms of how they look, or how they appear to the senses

10. silencing: the treatment of a person as if they are silent, lacking the capacity to speak.

3 Definition of pornography by MacKinnon (1987):

“We define pornography as the graphic sexually explicit subordination of women through pictures and words that also includes

(i) women are presented dehumanized as sexual objects, things, or commodities

(ii) women are presented as sexual objects who enjoy humiliation or pain

(iii) women are presented as sexual objects experiencing sexual pleasure in rape, incest or other sexual assault

(iv) women are presented as sexual objects tied up, cut up or mutilated or bruised or physically hurt

(v) women are presented in postures or positions of sexual submission, servility, or display

(vi) women's body parts — including but not limited to vaginas, breasts, or buttocks — are exhibited such that women are reduced to those parts

(vii) women are presented being penetrated by objects or animals

(viii) women are presented in scenarios of degradation, humiliation, injury, torture, shown as filthy or inferior, bleeding, bruised, or hurt in a context that makes these conditions sexual.”

References

- AI Now Institute (2017) [online] Available at: https://ainowinstitute.org/ [Accessed 28 March 2018]

- Algorithmic Watch (2017) [online] Available at: https://algorithmwatch.org/ [Accessed 28 March 2018]

- Amazon Mechanical Turk (2018) Human intelligence through an API. [online] Available at: https://www.mturk.com/ [Accessed 28 March 2018]

- Angwin, J.; Larson, J.; Mattu, S. and Kirchner, L. (2016) Machine Bias. ProPublica [online] Available at: https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing [Accessed 28 March 2018]

- Arendt, H. (1998) The Human Condition. 2nd edition. Chicago: University of Chicago Press.

- Berger, J. (1973) Ways of seeing. London: BBC Penguin Books.

- Bornstein, A. M. (2017) Are Algorithms Building the New Infrastructure of Racism? Nautilus [online] 55. Available at: http://nautil.us/issue/55/trust/are-algorithms-building-the-new-infrastructure-of-racism [Accessed 28 March 2018]

- Crawford, K. and Schultz, J. (2014) Big Data and Due Process: Toward a Framework to Redress Predictive Privacy Harms. Boston College Law Review [online] 55(1). Available at: http://lawdigitalcommons.bc.edu/bclr/vol55/iss1/4 [Accessed 28 March 2018]

- Deep Learning 4J (2017) Open Data for Deep Learning. [online] Available at: https://deeplearning4j.org/opendata [Accessed 28 March 2018]

- Deep Learning (2017) Datasets. [online] Available at: http://deeplearning.net/datasets/ [Accessed 28 March 2018]

- Fei-Fei, L. (2010) ImageNet: Crowdsourcing, benchmarking & other cool things. In: CMU VASC Seminar [pdf] Pittsburgh: Carnegie Mellon University. Available at: http://www.image-net.org/papers/ImageNet_2010.pdf [Accessed 28 March 2018]

- Haraway, D. (1988) Situated Knowledges: The Science Question in Feminism and the Privilege of Partial Perspective. Feminist Studies, [online] 14(3), pp. 575-599. Available at: http://www.jstor.org/stable/3178066 [Accessed 28 March 2018]

- Harding, S. (1986) The Science Question in Feminism. New York: Cornell University Press.

- Heider, D. and Harp, D. (2010) New Hope or Old Power: Democracy, Pornography and the Internet. Howard Journal of Communications [online] 13(4), pp. 285-299. Available at: https://doi.org/10.1080/10646170216119 [Accessed 28 March 2018]

- ImageNet (2016) [online] About. Available at: http://image-net.org/about-overview [Accessed 28 March 2018]

- ImageNet (2016) [online] Female, female person. Available at: http://image-net.org/synset?wnid=n10787470 [Accessed 28 March 2018]

- ImageNet (2016) [online] Woman, adult female. Available at: http://image-net.org/synset?wnid=n10787470 [Accessed 28 March 2018]

- Kuhn, A. (1982) Women’s Pictures: Feminism and Cinema. London: Routledge & Kegan Paul.

- Langton, R. (1993) Beyond a Pragmatic Critique of Reason. Australasian Journal of Philosophy, [online] 71(4), pp. 364-384. Available at: https://doi.org/10.1080/00048409312345392 [Accessed 28 March 2018]

- MacKinnon, C. (1987) Feminism Unmodified. Cambridge, Massachusetts, and London, England: Harvard University Press.

- McQuillan, D. (2015) Algorithmic states of exception. European Journal of Cultural Studies [online], 18(4-5), pp. 564-576. Available at: https://doi.org/10.1177/1367549415577389 [Accessed 28 March 2018]

- McQuillan, D. (2017) Data Science as Machinic Neoplatonism. Philosophy & Technology [online] pp. 1-20. Available at: https://doi.org/10.1007/s13347-017-0273-3 [Accessed 28 March 2018]

- Mulvey, L. (1975) Visual Pleasure and Narrative Cinema. Screen, [online] 16(3), pp. 6–18. Available at: https://doi.org/10.1093/screen/16.3.6 [Accessed 28 March 2018]

- Nissenbaum, H. (2001) How Computer Systems Embody Values. IEEE Computer, 120, pp. 118-119.

- Noble, S. U. (2012) Missed Connections: What Search Engines Say about Women. Bitch magazine [online], 12(4), pp. 37-41. Available at: https://safiyaunoble.files.wordpress.com/2012/03/54_search_engines.pdf [Accessed 28 March 2018]

- Noble, S. U. (2013) Google Search: Hyper-visibility as a Means of Rendering Black Women and Girls Invisible. InVisible Culture: An Electronic Journal for Visual Culture [online], (19). Available at: http://ivc.lib.rochester.edu/google-search-hyper-visibility-as-a-means-of-rendering-black-women-and-girls-invisible/ [Accessed 28 March 2018]

- O'Neil, C. (2016) Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy. New York: Crown Random House.

- Papadaki, E. (2015) Feminist Perspectives on Objectification. The Stanford Encyclopedia of Philosophy [online] Edward N. Zalta (ed.). Stanford: Metaphysics Research Lab, Stanford University. Available at: https://plato.stanford.edu/archives/win2015/entries/feminism-objectification/ [Accessed 28 March 2018]

- Seaver, N. (2013) Knowing algorithms. In: Media in Transition 8. [pdf] Cambridge: Cambridge University. Available at: https://static1.squarespace.com/static/55eb004ee4b0518639d59d9b/t/55ece1bfe4b030b2e8302e1e/1441587647177/seaverMiT8.pdf [Accessed 28 March 2018]

- Wajcman, J. (2010) Feminist theories of technology. Cambridge Journal of Economics [online], 34(1), pp. 143–152. Available at: https://doi.org/10.1093/cje/ben057 [Accessed 28 March 2018]

- Wilkie, A.; Savransky, M. and Rosengarten, M. (2017) Speculative Research: The Lure of Possible Futures. New York: Routledge.

- WordNet (2018) [online] What is WordNet? Available at: https://wordnet.princeton.edu/ [Accessed 28 March 2018]