'In Time'

This installation challenges the notion of linear time by blurring the boundaries between past, present and future, disrupting the rules of time relative to oneself. A network of twelve cameras positioned around a rig records a time slice of a participant, creating a computational time bubble. The images are then activated by walking around the perimeter of the installation, creating an interaction ‘in time’ with oneself.

produced by: Jonny Fuller-Rowell

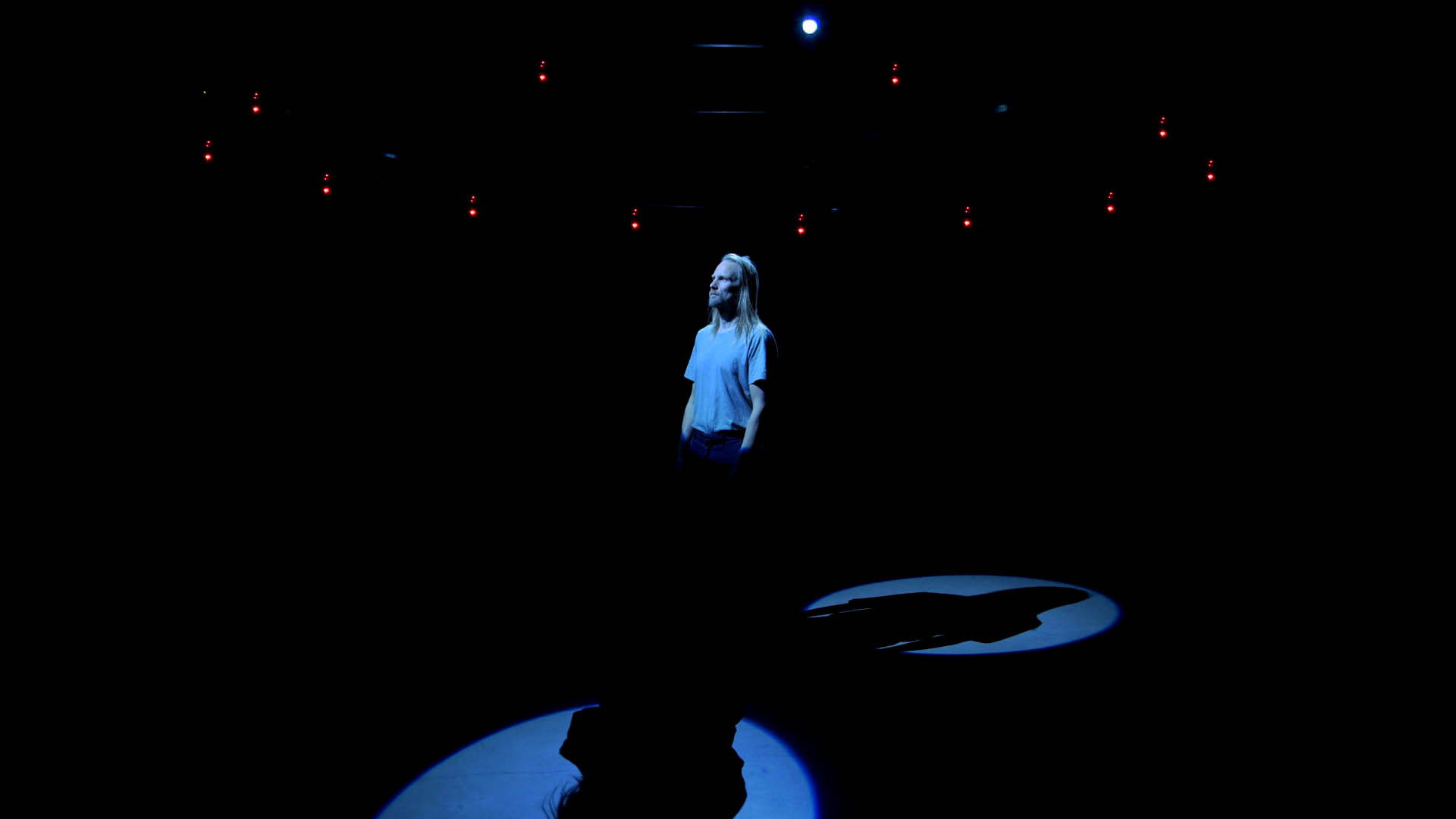

Video documentation of installation, 'In Time', 03:11.

Introduction

Our idea of time is a constructed bubble. Although it works very well in daily life, it is not fixed or absolute, only relative to the world that we construct. This artwork sits at the intersection of physics, neuroscience and philosophy, exploring the relationship between how we understand time scientifically and how we experience it subjectively.

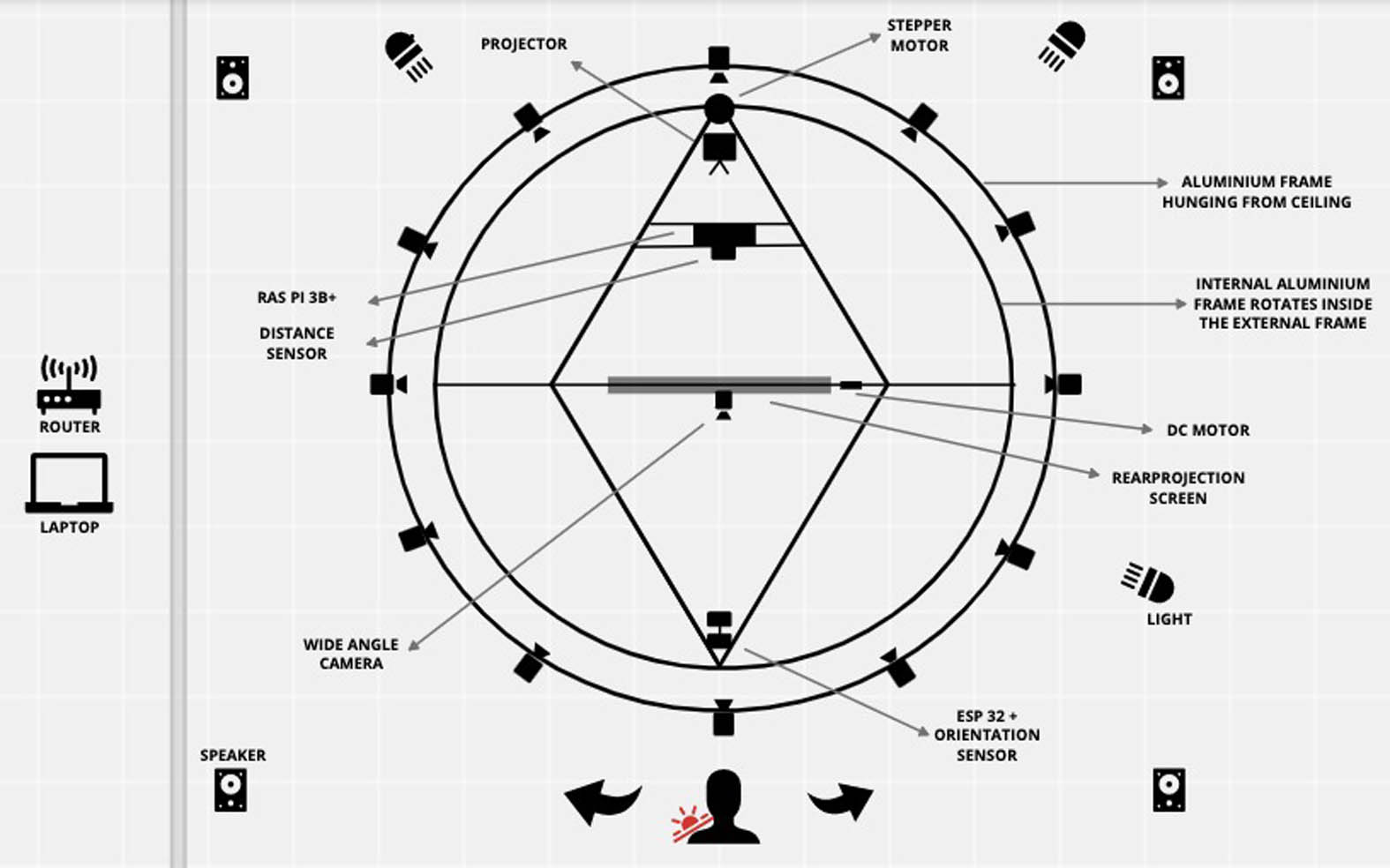

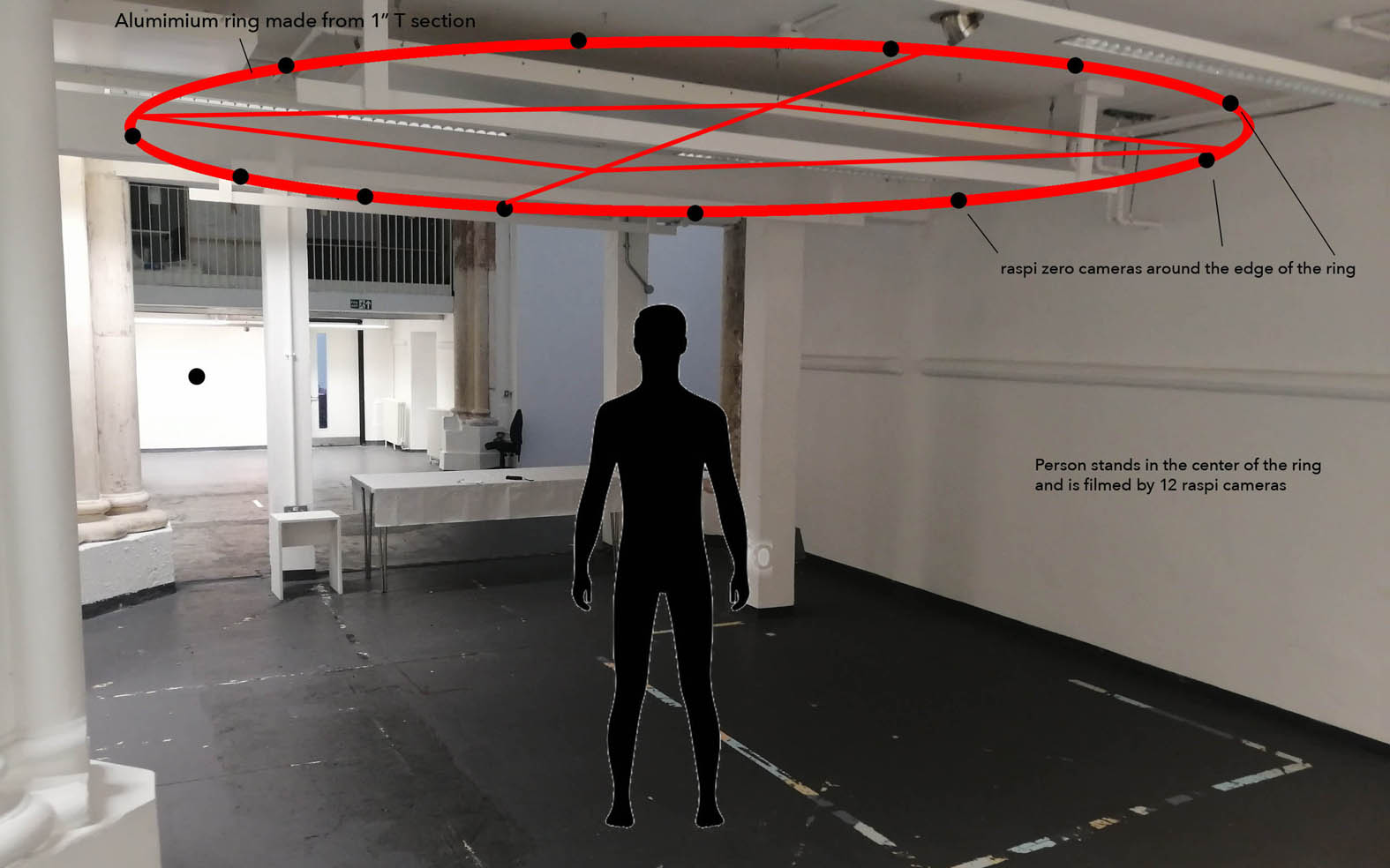

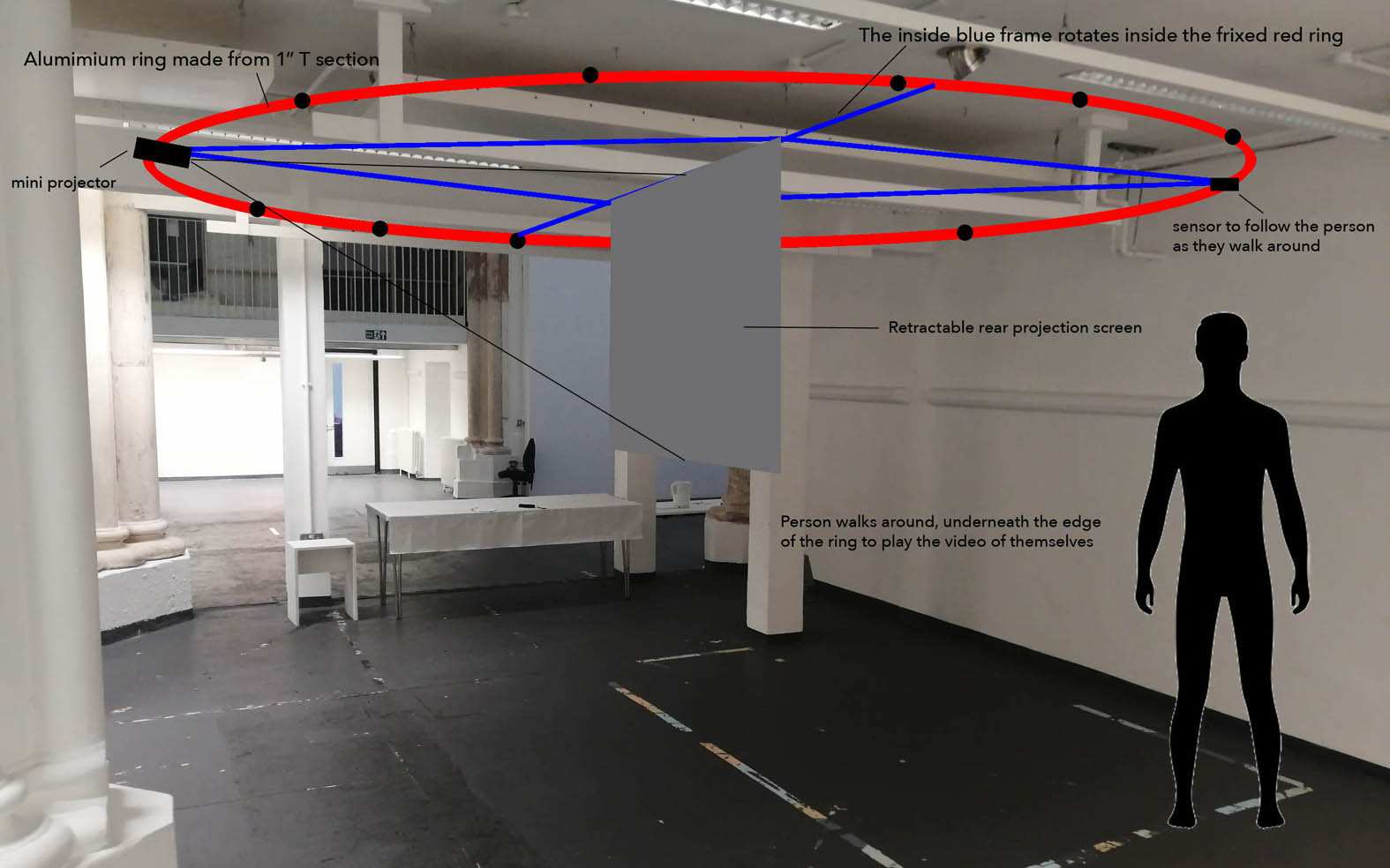

The installation is designed to be experienced by one person at a time. The participant is first asked to stand in the centre of the installation and perform a slow 360-degree rotation. This act is recorded by 12 Raspberry Pi cameras positioned equidistantly around the installation, which is essentially a large automated rig built around a 4 m diameter aluminium ring hung from the ceiling. The recordings are sent over Wi-Fi to a main computer, where they are processed and sent back over Wi-Fi to a portable projector mounted on the same rig. The projector travels around the perimeter of the aluminium ring and projects onto a retracted rear projection screen in the centre of the space, so that the participant is faced with a 1:1 image of themselves. As the viewer moves around the installation, a stepper motor orientates the screen so that it always faces them. The participant's location in space triggers the respective filmed angle to be projected, so that the participant can effectively walk around themselves.

Philosopher Henri Bergson argued that time is always measured through the prism of space. Our present notion of time is based upon the revolutions of the earth around the sun; therefore time is relative to space.[1] This installation acts as an analogy of this idea: the participant 'orbits' themselves, experiencing the prerecorded version of themselves through their movement in space.

The installation does not attempt to answer questions; it offers an experiential dichotomy, encouraging the viewer to question how they perceive themselves in time and space. The work asks whether it is possible to experience two ‘time bubbles’ relative to each other at the same time, producing a paradoxical experience in which the initial recorded ‘slow’ rotation now feels very fast as the viewer attempts to face themselves through 360 degrees. The work also investigates how we interact with the extension of ourselves. The projected self appears as a mirror, except that the roles are strangely switched: you become the reflection following the recording. Who is the object? Who is the subject?

Installation images of 'In Time': four meter diameter aluminium ring, twelve Raspberry Pi cameras, retracting rear projection screen.

Concept and background research

There is no objective ‘now’. We intuitively feel that time flows, that it is part of the fundamental structure of human existence. We can think about reality without space, without things, but it is very hard to think about reality without time. As theoretical cosmologist Andreas Albrecht explains:

"The essence of relativity is that there is no absolute time, no absolute space. Everything is relative. When you try to discuss time in the context of the universe, you need the simple idea that you isolate part of the universe and call it your clock, and time evolution is only about the relationship between some parts of the universe and that thing you called your clock." [1]

I want to play with the idea that ‘time’ is always just a perception of time. The installation plays with the paradoxical experience of two acts: the participant's initial (filmed) slow rotation, and the act of walking around this projected performance. During user testing I found that there seemed to be an instinctive response to try to face oneself through the 360-degree rotation. This leads to an experiential uncanniness: to stay in 'sync' (to stay facing oneself through 360 degrees) you have to travel at a much higher speed than the initial rotation. When synchronisation is achieved, the two movements seem to cancel each other out. Even though the screen, the participant and the film are all rotating (at various speeds), the experience is that nothing is moving, because the image being activated is always the one that faces you.

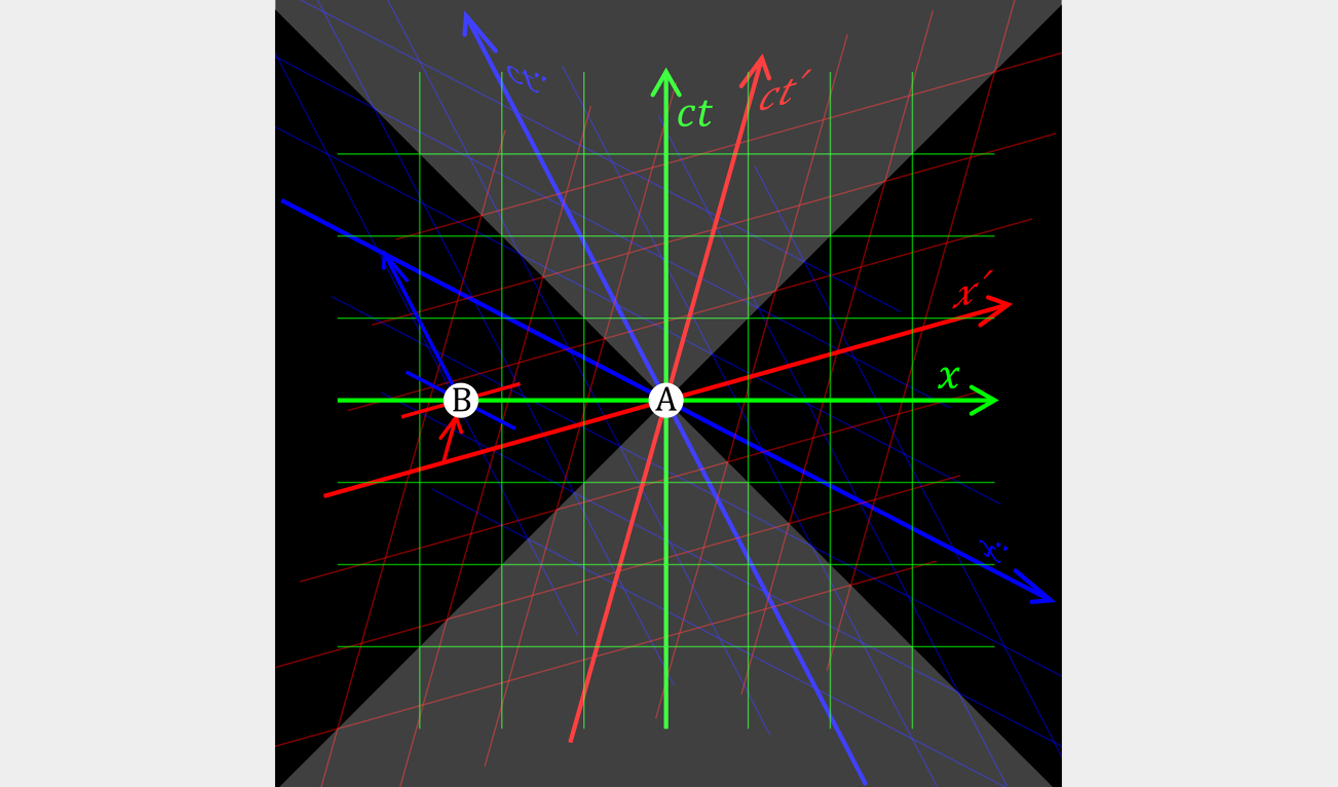

The idea that time might be experienced in different ways was inspired by my research into Lorentz 'time dilation' (Lorentz transformations), which is the difference in the elapsed time measured by two clocks, due either to their having a velocity relative to each other or to a gravitational potential difference between their locations. Time dilation explains why two working clocks will report different times after different accelerations.

For example, time on the International Space Station runs slower, lagging approximately 0.01 seconds behind for every 12 'earth' months that pass. This time differential must also be compensated for in order for GPS satellites to work: "they must adjust for the bending of spacetime to coordinate properly with systems on Earth."[2]
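The figure of roughly 0.01 seconds per year can be checked with a short calculation. The sketch below is illustrative only: the orbital speed (about 7.66 km/s) and altitude (about 400 km) of the International Space Station are approximate values assumed here, not taken from the project, and the gravitational term uses the usual weak-field approximation.

```python
import math

C = 299_792_458.0            # speed of light, m/s
GM_EARTH = 3.986004418e14    # Earth's gravitational parameter, m^3/s^2
R_EARTH = 6.371e6            # mean Earth radius, m
ISS_SPEED = 7.66e3           # assumed orbital speed, m/s
ISS_ALTITUDE = 4.0e5         # assumed altitude, m
SECONDS_PER_YEAR = 365.25 * 86_400


def velocity_rate(v: float) -> float:
    """Fraction by which a moving clock runs slow: 1 - sqrt(1 - v^2/c^2)."""
    return 1.0 - math.sqrt(1.0 - (v / C) ** 2)


def gravity_rate(altitude: float) -> float:
    """Fraction by which a raised clock runs fast (weak-field approximation)."""
    r = R_EARTH + altitude
    return GM_EARTH * (1.0 / R_EARTH - 1.0 / r) / C ** 2


# Seconds lost per year due to speed, gained due to weaker gravity, and the net lag.
lag_velocity = velocity_rate(ISS_SPEED) * SECONDS_PER_YEAR
gain_gravity = gravity_rate(ISS_ALTITUDE) * SECONDS_PER_YEAR
net_lag = lag_velocity - gain_gravity

print(f"velocity effect: {lag_velocity:.4f} s/year slower")
print(f"gravity effect:  {gain_gravity:.4f} s/year faster")
print(f"net lag:         {net_lag:.4f} s/year")
```

Under these assumptions the speed of the station dominates: the velocity term alone comes to about 0.01 seconds per year, and the weaker gravity at altitude offsets a little over a tenth of it.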

Relativity of simultaneity diagram: Event B is simultaneous with A in the green reference frame, but it occurred before in the blue frame, and will occur later in the red frame.

The 'Twin Paradox' is a thought experiment often raised as an apparent challenge to the Lorentz transformations, which are central to Einstein's special theory of relativity. According to the transformations, time slows down the faster you go. One twin stays on the earth while the other travels very fast on a rocket for a long period of time, turns around and comes back again. The equations predict that when the travelling twin returns they are younger than the one on earth. There is no paradox in this, except that some ask how you can tell which twin is the one moving. From their own perspective the twin in the rocket might be stationary, and it is the twin on earth who is travelling away from them, in which case the twin on earth would be the younger when they meet again. This is resolved by further equations, thought experiments and theories. What was more relevant for this installation, however, was the idea of using twins to communicate conflicting experiences of time: not only as a device that starts two events at the same moment (two people being born together), but also as a suggestion that you might have some sort of physical connection to another person, and so have a dual experience.

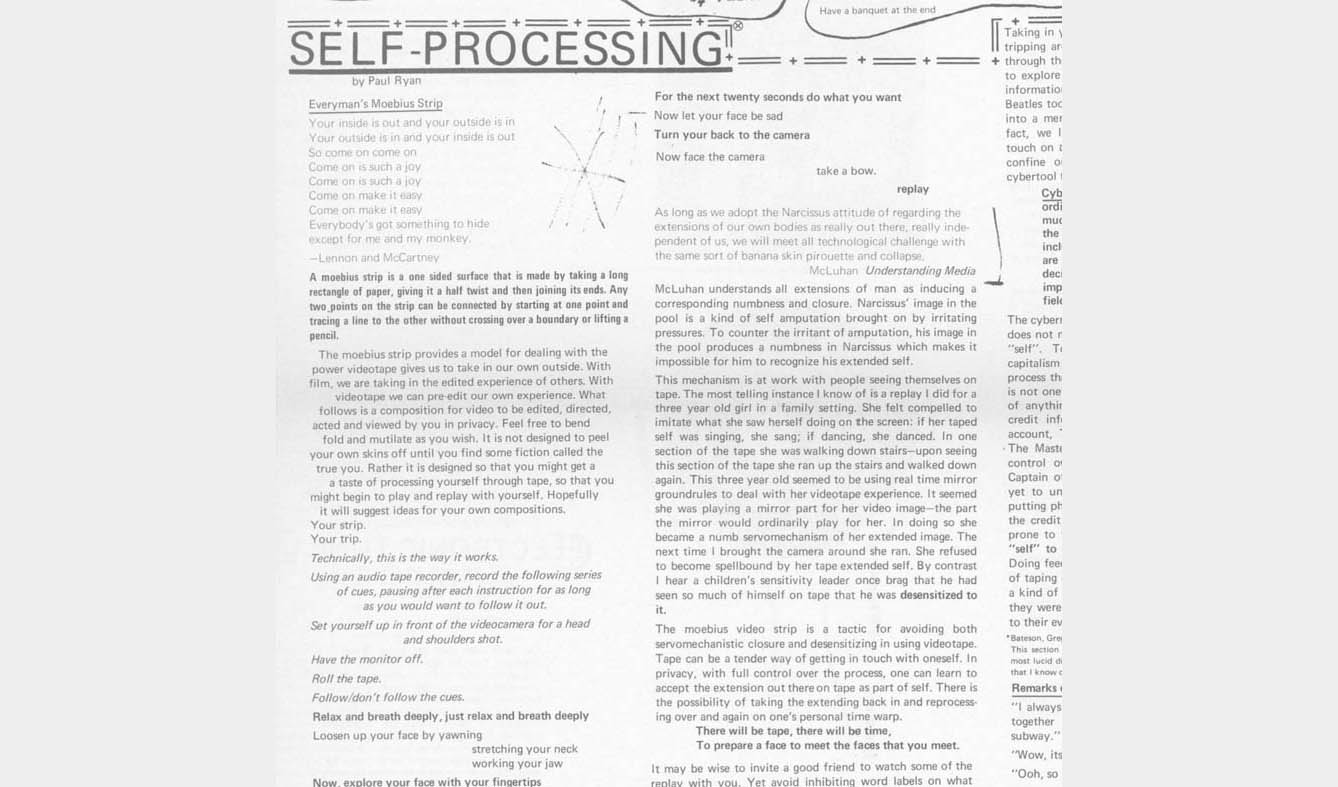

This idea of an experience of the self was explored by Paul Ryan in the 1970s, with the introduction of 'videotape' to a mainstream public:

"With videotape, the performer and the audience can be one and the same, either simultaneously or sequentially." [3]

The artwork Self-Processing by Paul Ryan uses the idea of a Möbius strip, the simplest non-orientable surface, which 'provides a model for dealing with the power videotape gives us to take in our own outside'. [4] Although the film camera existed in the 1970s, and indeed was a ‘home’ commodity, the video camera was a far more immediate technology and allowed users to edit their own experience, “so that you might begin to play and replay with yourself.” Self-Processing as an artwork is merely a set of instructions that takes advantage of this new technology and asks what it might help to reveal about ourselves.

Paul Ryan, Self-Processing, Radical Software, №2, 1971, p.15.

“Tape can be a tender way of getting in touch with oneself. In privacy, with full control over the process, one can learn to accept the extension out there on tape as part of self. There is the possibility of taking the extending back in and reprocessing over and again on one's personal time warp.”[5]

'In Time' extends this power…

We are now very used to a virtual presence of our bodies and invest more or less of our ‘self’ in them. Instagram and Facebook offer useful templates in which to store and titivate our identities. 'In Time' offers the ability to view yourself in 360 degrees, which adds a further layer of realness to the extended self.

The success of 'In Time' perhaps relies in part on the somewhat normalised narcissistic attitude that the extension of our body is really ‘out there’, independent of ourselves. The initial 360-degree recorded rotation, especially as an exact 1:1 image of ourselves standing blinking in front of us, has power. As the recorded self turns you are compelled to imitate. Standing in front of your reflection the roles switch: you become a numb servomechanism of your extended image, circling at the directed speed.

The instinctive tendency to face yourself is explored in Austrian video artist Peter Weibel’s installation Observation of the Observation: Uncertainty (1973), in which he arranged cameras and monitors in such a way that viewers were unable to see themselves from the front. He noted that viewers would always try to see themselves. The self-observers see different parts of their bodies, but never their faces, “no matter how much they twist and turn”. [6]

Peter Weibel, Observation of the Observation: Uncertainty, 1973.

‘In Time’ plays with this research. The computation in the system allows the elastication of time by varying the playback speed of the central rotation, so that at different points it is more or less easy to remain in sync with oneself through space. The synchronization of the two rotating ‘selves’ produces a strange harmony: the two movements cancel out the experience of motion. A similar effect is present in the artwork Beijing Accelerator by Marnix de Nijs. A participant takes position in a racing chair secured on a motorized structure equipped with a joystick, with which they control both the direction and speed of the rotating chair they are sat in. A 160x120cm screen is positioned in front of them, on which rotating panoramic images are projected. The aim is to synchronize the moving image with the rotation of the chair. Once this is achieved, the participant is able to view the images properly and the disorientation associated with the uncoordinated spinning is blocked. 'In Time' substitutes the moving image of the building with the image of the self. The question ‘In Time’ asks is how much invested experience can be embodied in the recording, and whether this can be manifested at the same time as re-experiencing it. Is it possible to have a dual experience, or does the extension become an independent entity?

Marnix de Nijs, Beijing Accelerator, 2006.

The 'Time-Slice' camera was first devised in 1980 by Tim Macmillan, and the technique was later developed, most famously, for 'The Matrix' trilogy, where it was rebranded 'bullet time'. 'In Time' uses the same technique. However, in much the same way that Paul Ryan's artwork Self-Processing used the video camera as a tool for self-reflection, 'In Time' allows the participant to go 'inside' the technology, to become both the subject and the object. Utilizing the power of computation and physical computing, this hardware- and software-heavy process is made 'user friendly' and transformed into a tool for self-reflection and self-processing. 'In Time' develops the medium by repositioning the experience from a 2D screen to a spatial stage. As the participant activates the images by walking around themselves they are 'self-processing' not only in time but also in space. The installation uses spotlights to control the participant's perception of space, cancelling out the background with darkness and illuminating only the subject (yourself as you are being filmed) and the object (the projection), so that you are faced solely with your reflection and your relationship to it, of looking and being looked at. It becomes a phenomenological tool for the study of conscious awareness, asking what it means to live ‘in time’ as opposed to focusing on the experiences we have while living in time. This is the difference between thinking about the content of a thought and attending to the act of thinking itself. By turning away from our immersion in the outer world and reflecting back onto our consciousness within it, can we have a greater understanding of how we experience ourselves in time?

In his book The Phenomenology of Internal Time-Consciousness, Edmund Husserl, the principal founder of phenomenology, attempts to account for the way things appear to us as temporal: how we experience time. He explains that there is an important dimension to perceptual experience called 'time-consciousness'. [7]

"This seemingly unconscious structure consists, at a given time, of both retentions, i.e., acts of immediate memory of what has been perceived “just a moment ago”, original impressions, i.e., acts of awareness of what is perceived “right now”, and protentions, i.e., immediate anticipations of what will be perceived “in a moment”. It is by such momentary structures of retentions, original impressions and protentions that moments of time are continuously constituted (and reconstituted) as past, present and future, respectively, so that it looks to the experiencing subject as if time were permanently flowing off." [7]

The suggestion that the present moment is always a combination of the past, present and future is explored literally in this installation. The past act of oneself rotating is replayed in the present, at the same time and in the same space. The 'drop-off' in experiential memory after the first act is finished is moved forward to the present in an effort to intertwine these two experiences.

Technical

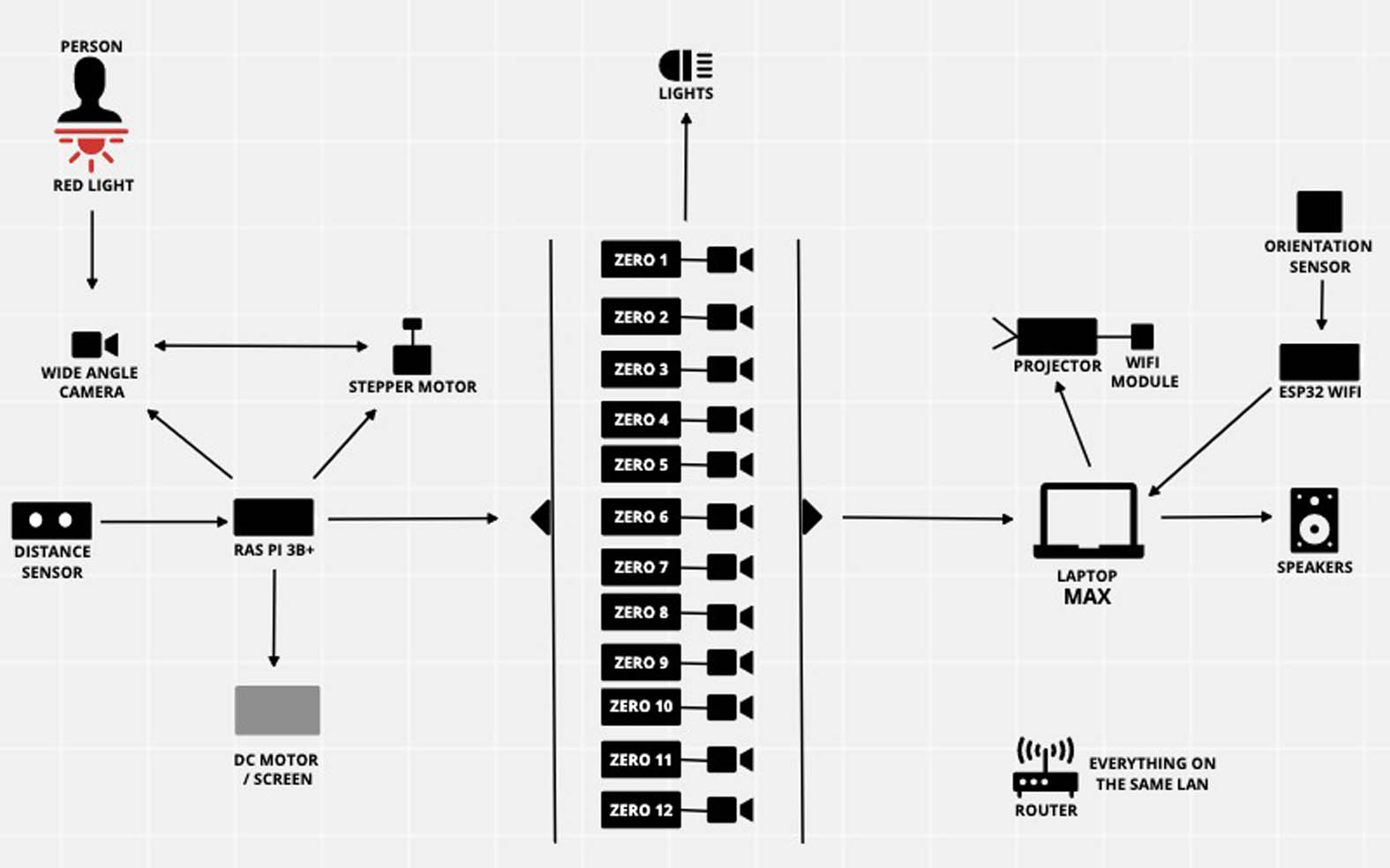

Instructions: The participant is asked to stand in the centre of the installation, where a spotlight lights the ground. Once they are in position, twelve Raspberry Pi cameras around them record their movement while they are instructed to turn slowly through 360 degrees. After 14.4 seconds (one frame for every degree of rotation, assuming a frame rate of 25 frames per second) the films are automatically loaded into a MAX patch, which projects them onto a retracted rear projection screen. The viewer must turn on a small red LED badge so that a camera connected to a central Raspberry Pi 3B+ can track their position. As the viewer moves around the installation, a stepper motor controlled by the same central Raspberry Pi (using the positional data) orientates the screen so that it always faces them. Their location in space triggers the respective filmed angle, so that they can effectively walk around themselves.

Computation: Automation, to synchronise events. / Computational speed of processing. / Machine vision to track a person's position. / Tracks the position of the projector to control playback order. / Controls the measure of time, playback speed of the recordings.

What technologies did you use?

The artwork is essentially a large automated rig consisting of a 4 m diameter aluminium ring, hung from the ceiling, with 12 fixed cameras positioned evenly around the circumference. The sequence of operation and the associated technologies are listed below:

1. A spotlight lights the ground in the centre of the installation, controlled by a Raspberry Pi Zero and relay.

2. The person stands in the light, which triggers another set of spotlights (directed at their torso) and triggers the cameras to start recording. The lights are controlled by a Raspberry Pi Zero and relay, triggered by a distance sensor on a Raspberry Pi 3B+ which communicates via Wi-Fi using python socketsio. A message is also sent to all twelve Raspberry Pi Zeros with cameras to start the filming.

3. The person turns 360 degrees. After 14.4 seconds the lights are turned off and the recordings are sent to a main laptop running MAX. The Raspberry Pi Zeros send their respective film files automatically via FTP, using a script written in Python. The files are sent directly to the folder that MAX is using, from which the films are loaded into the patch. A further spotlight is lit to direct the participant to the centre of the installation.

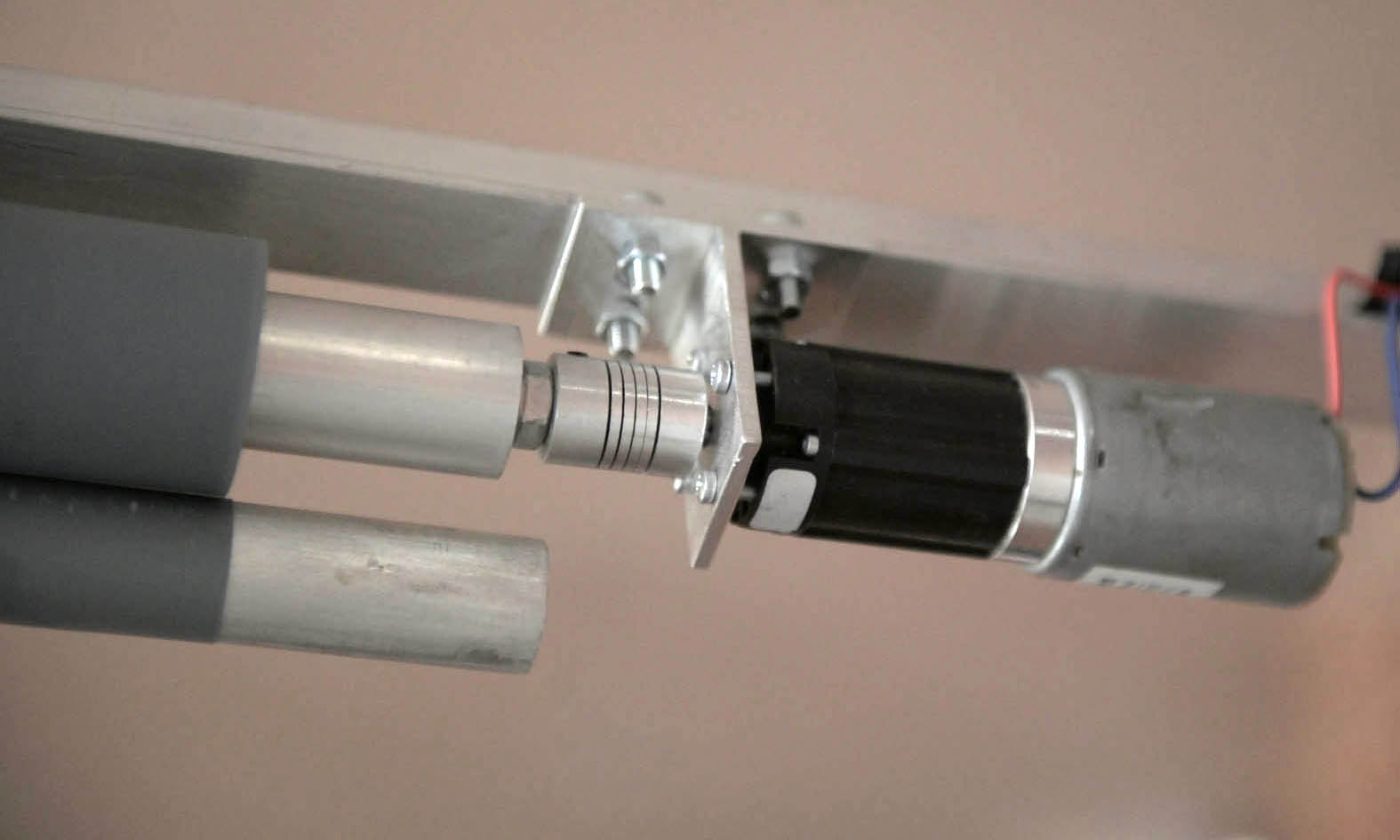

4. The rear projection screen is retracted using a 12 V DC motor controlled by the central Raspberry Pi and an L298N H-bridge.

5. The main computer screen is cast to a portable projector via Wi-Fi.

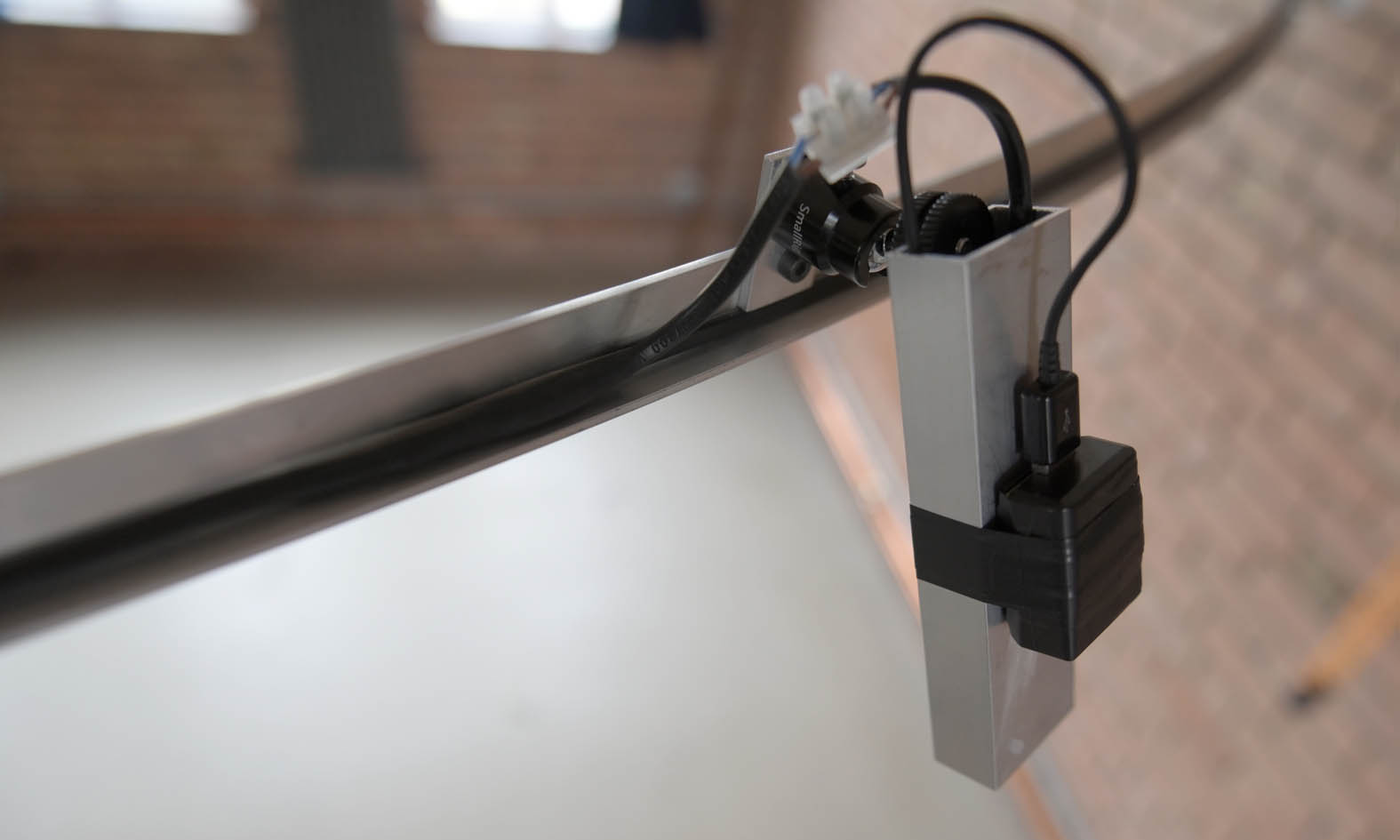

6. The participant activates an LED badge, which is used to locate their position relative to the screen. The central Raspberry Pi 3B+ has a wide-angle camera connected, mounted above the projection screen and looking at the participant. Using OpenCV (cv2) in Python, the Raspberry Pi measures how far the red LED light is from the centre of the camera frame. This distance is mapped to the speed of the stepper motor (also controlled by the central Raspberry Pi), so that the further the person is from the centre of the frame the faster the motor and projection screen turn, i.e. the screen follows the person around the space.

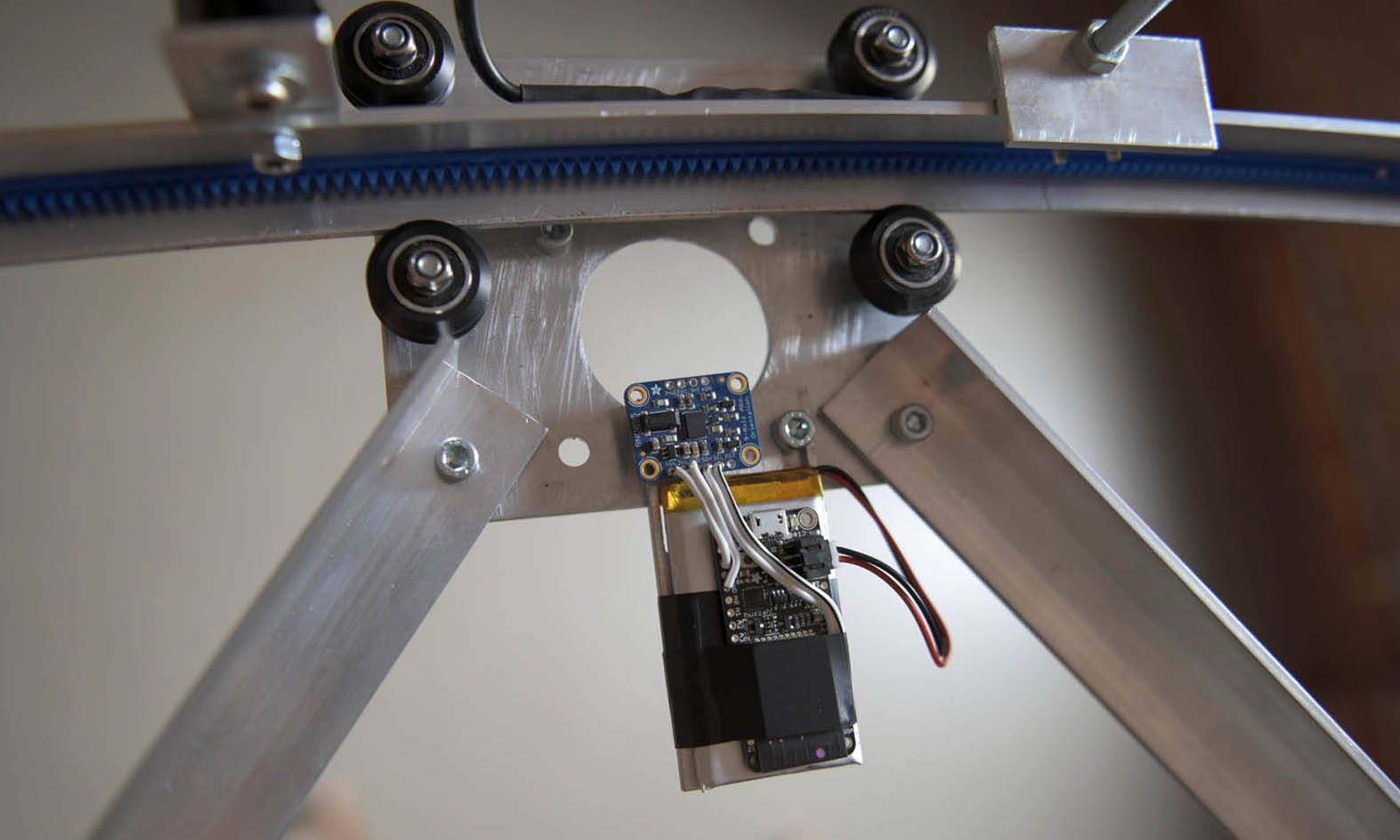

7. The projector and MAX patch present the film relative to where the person is standing, i.e. the film shown is the one recorded by the camera closest to that position. An orientation sensor is positioned above the person's head, directly opposite the projector's position. Its data is sent via Wi-Fi to the MAX patch using an ESP32 module. The data ranges from 0 to 359 (degrees), and MAX uses this positional data to select which of the twelve films should be displayed on the screen.

8. The MAX patch also controls the measure of time, starting at real time, then slowing the play rate down to half and then speeding up. Audio of a ticking clock, whose speed varies with the recording's playback rate, is sent to four speakers surrounding the ring.

After 90 seconds of interaction, the process is reset and starts again.
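The film selection in step 7 amounts to dividing the circle into twelve 30-degree sectors, one per camera. The project does this inside the MAX patch; the Python sketch below only illustrates the mapping. It assumes, hypothetically, that camera 0 sits at a heading of 0 degrees and that the camera indices increase with the heading.

```python
NUM_CAMERAS = 12
SECTOR_DEG = 360 / NUM_CAMERAS  # 30 degrees between neighbouring cameras


def film_index(heading_deg: float) -> int:
    """Return the index (0-11) of the camera nearest to the given heading.

    Assumes camera 0 is at 0 degrees and cameras are evenly spaced.
    """
    # Shift by half a sector so each camera owns the 15 degrees either side of it.
    shifted = (heading_deg % 360) + SECTOR_DEG / 2
    return int(shifted // SECTOR_DEG) % NUM_CAMERAS


if __name__ == "__main__":
    for heading in (0, 14, 15, 180, 345, 359):
        print(heading, "->", film_index(heading))
```

Centring each sector on its camera means the displayed film switches halfway between two cameras, which keeps the jump between neighbouring viewpoints as small as the twelve-camera layout allows.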

n.b. As the installation depends heavily on Wi-Fi, a router was used to set up a separate local area network and all IP addresses (12 x Raspberry Pi Zero, 1 x Raspberry Pi 3B+, 1 x laptop, 1 x ESP32 module, 1 x projector Wi-Fi module) were made static.

Why did you use these (technologies)?

As Raspberry Pis were used heavily in this project, I decided to use Python as the main coding language, even though I had no previous experience with it. I would have preferred to use openFrameworks for some aspects, mainly the machine vision. However, because of the multiple operations the central Raspberry Pi had to perform, I thought it would make sense overall to use Python. The same block of code could then connect to other devices using the python-socket and ftp libraries, control a stepper motor, incorporate machine vision using OpenCV (cv2), and control other physical computing components such as an H-bridge and relays.
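As an illustration of the FTP transfer mentioned above, the following is a minimal sketch using Python's standard `ftplib`. It is not the project's actual script: the file naming scheme, the `.h264` extension, the function names and the idea that the laptop runs an FTP server exposing the MAX folder are all assumptions made for the example.

```python
from ftplib import FTP
from pathlib import Path


def remote_name(camera_id: int) -> str:
    """Hypothetical naming scheme: one file per camera, e.g. 'cam03.h264'."""
    return f"cam{camera_id:02d}.h264"


def upload_recording(ftp, local_path, camera_id: int) -> str:
    """Send one recording to the FTP server's current directory."""
    name = remote_name(camera_id)
    with open(local_path, "rb") as fh:
        # STOR uploads the file in binary mode under the given remote name.
        ftp.storbinary(f"STOR {name}", fh)
    return name


def send_to_max_folder(host, user, password, folder, local_path, camera_id):
    """Connect, change into the folder MAX is watching, and upload the file."""
    with FTP(host) as ftp:
        ftp.login(user=user, passwd=password)
        ftp.cwd(folder)
        return upload_recording(ftp, Path(local_path), camera_id)
```

Giving every camera its own fixed file name means each new recording overwrites the previous participant's file, so the folder always holds exactly twelve films for the patch to load.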

I decided to use a small red LED light to track a person's position. As it needed to be dark for the projection, I could not use face recognition or frame differencing, especially as the rig rotates. I also wanted to make sure that it tracked just one person. Due to Covid-19 restrictions I didn't want there to be any contact with the installation, so I decided to make small LED badges which a person could take with them.
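The tracking logic can be sketched as two steps: find the red LED in the camera frame, then turn its horizontal distance from the centre into a motor speed. The project uses OpenCV for this; the version below deliberately uses plain Python lists so that it runs without dependencies. The colour thresholds, maximum speed and dead band are hypothetical values chosen for illustration.

```python
def led_offset(frame, min_red=200, max_other=80):
    """Horizontal offset of the red LED from the frame centre, in -1.0..1.0.

    `frame` is a list of rows, each row a list of (r, g, b) tuples.
    Returns None when no sufficiently red pixel is found.
    """
    width = len(frame[0])
    xs = [
        x
        for row in frame
        for x, (r, g, b) in enumerate(row)
        if r >= min_red and g <= max_other and b <= max_other
    ]
    if not xs:
        return None
    centre = (width - 1) / 2
    # Mean column of the red pixels, normalised by the half-width of the frame.
    return (sum(xs) / len(xs) - centre) / centre


def stepper_speed(offset, max_speed=400.0, deadband=0.05):
    """Map the offset to a signed speed: further from centre means faster."""
    if offset is None or abs(offset) < deadband:
        return 0.0  # LED lost or already centred: hold the screen still
    return max_speed * offset
```

The sign of the result gives the direction of rotation, and the small dead band stops the screen from hunting back and forth when the participant is standing almost directly in front of it.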

What challenges did you overcome?

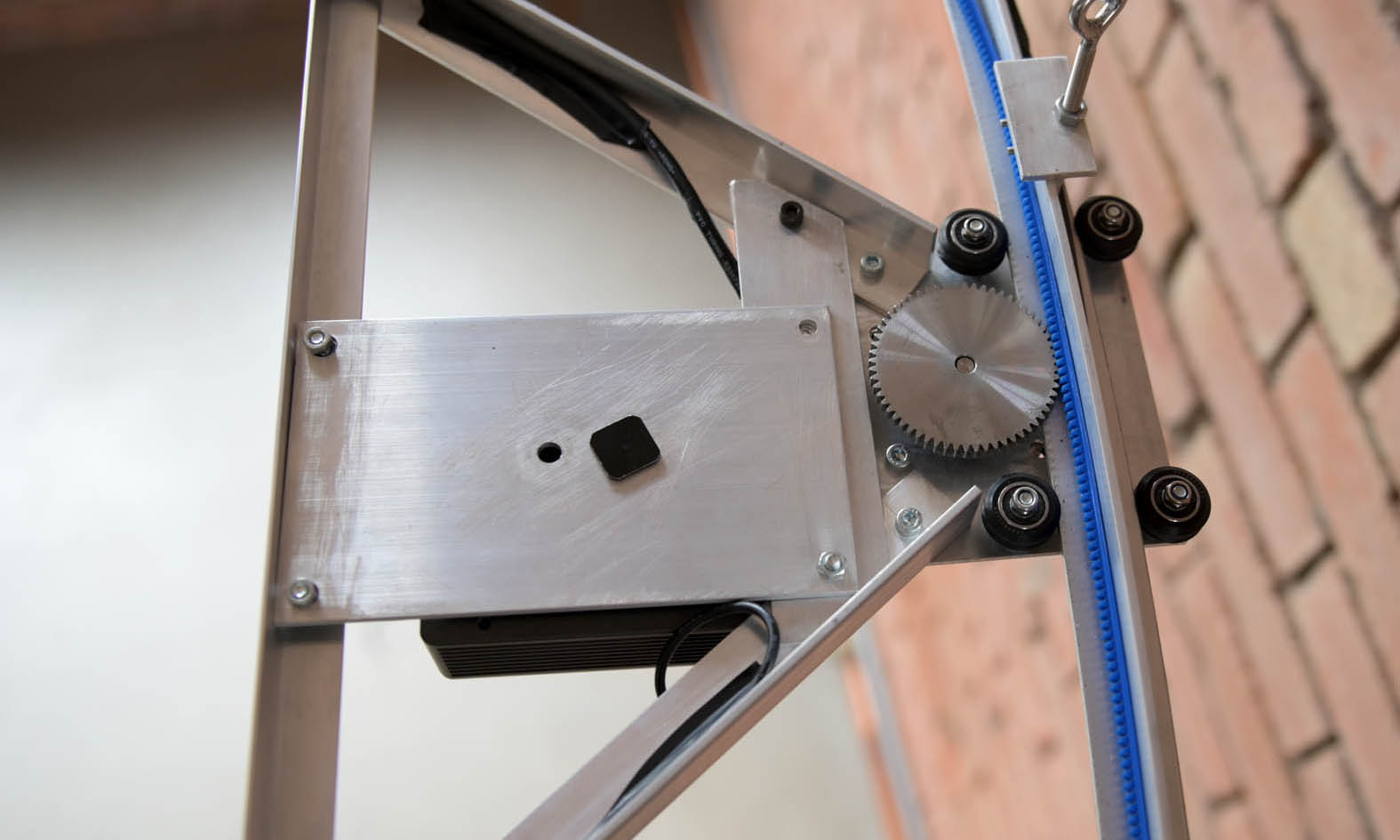

A main challenge was the construction of the aluminium ring. It is 4 meters in diameter and had to be perfectly round in order for an internal structure to travel around the inside of the circle. It also needed to be dismantlable so that it could be installed in other locations. I designed the ring in five separate 2.5 meter curves that together make up the full circle. I made custom joins and hanging brackets so that it could be assembled and hung easily from the ceiling using wire rope. A flexible rack was mounted around the inside of the ring for a stepper motor with a spur gear to engage with, in order to turn the internal structure, which carries the retractable rear projection screen and projector, around the inside of the aluminium ring.

I had not programmed in Python before so had to get used to this language.

A large challenge was connecting all the components together over Wi-Fi so that the process could be automated. I decided to use a separate router to set up a dedicated LAN; however, the number of elements involved made the network setup complicated. I had to learn how to set up static IP addresses and understand the difference between servers and clients to get this to operate.

The processing power the laptop needed to play twelve films at the same time was also a challenge. Using low-resolution (5 megapixel) Raspberry Pi cameras and filming against a black background greatly reduced the individual film sizes. Each 14.4 second recording was only 2 MB in the end, which meant the MAX patch could play them all together smoothly.
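A quick calculation from the figures above gives a sense of the load. It treats 1 MB as one million bytes and assumes every film is exactly 2 MB, so the results are rough estimates rather than measurements.

```python
NUM_FILMS = 12
FILM_SIZE_MB = 2.0   # size of each recording, from the text
DURATION_S = 14.4    # length of each recording, from the text

# Total data transferred to the laptop for one participant.
total_mb = NUM_FILMS * FILM_SIZE_MB

# Average bitrate of one film, and of all twelve decoded side by side.
bitrate_mbit_s = FILM_SIZE_MB * 8 / DURATION_S
combined_mbit_s = NUM_FILMS * bitrate_mbit_s

print(f"total per session: {total_mb:.0f} MB")
print(f"per film:          {bitrate_mbit_s:.2f} Mbit/s")
print(f"all twelve:        {combined_mbit_s:.2f} Mbit/s")
```

On these assumptions the twelve films together average a little over 13 Mbit/s, which is in the range of a single high-definition video stream.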

The configuration of the cameras was also very important. If they were not aligned to the centre of the installation, the recorded images of the person would not transition smoothly, jumping in space depending on whether a camera was pointing too far up or down, or to the left or right. I used an external monitor with a red spot to match to a spot on the screen. The cameras were adjustable, as they were mounted on ball joints.

fig.1 network diagram / fig.2 system diagram / fig.3 'filming' proposed exhibition setup / fig.4 'playback' proposed exhibition setup

Video clip of the 4 meter diameter aluminium ring with its concentric counterpart rotating inside it.

Installation stills: fig.1 four meter diameter aluminum ring / fig.2 portable wifi projector / fig.3 stepper motor rack and pinion / fig.4 esp32 wifi module with orientation sensor / fig.5 center mounted wide angle camera / fig.6 one of twelve raspberry pi cameras (front view) / fig.7 one of twelve raspberry pi cameras (back view) + wiring / fig.8 12v dc motor powered screen.

Future development

I would like to develop the work further by showing it to a wider audience; because of Covid-19 restrictions the numbers were very limited. Audience feedback has been an important aspect of the work to date, but this could certainly be extended, as essentially this is a project investigating how we perceive ourselves in time and space. Although the installation is currently designed to be experienced by one person at a time (social distancing measures), a future development would be to open up the piece to a gallery audience, creating another layer: an audience watching the participant interact. Perhaps a monitor outside the installation space for an audience to view would add an extra dimension? A participant would then know what they were about to experience. How would this affect the work?

A future development of the piece would be to investigate different amounts of control the participant has over the playback of the films themselves, for example by using their movement to control the playback speed rather than using predetermined rates. Maybe this would heighten the connection to the literal projected self. I could do this by utilizing the positional data from the ESP32 / orientation sensor, programmed in such a way that if the person moved by one degree the MAX patch would advance the corresponding film by one or several frames.
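The movement-driven playback proposed here could work along the following lines. This is a speculative sketch of an idea that has not been built: the function names are hypothetical, and the film length of 360 frames assumes 14.4 seconds recorded at 25 frames per second.

```python
def signed_delta(prev_deg: float, curr_deg: float) -> float:
    """Smallest signed change in heading, handling the 359 -> 0 wrap-around."""
    delta = (curr_deg - prev_deg) % 360
    return delta - 360 if delta > 180 else delta


def advance_frame(frame, prev_deg, curr_deg, frames_per_degree=1, total_frames=360):
    """Move the playhead in proportion to how far the participant has walked."""
    step = round(signed_delta(prev_deg, curr_deg) * frames_per_degree)
    return (frame + step) % total_frames


if __name__ == "__main__":
    frame = 0
    headings = [358, 359, 1, 3, 2]  # example readings from the orientation sensor
    for prev, curr in zip(headings, headings[1:]):
        frame = advance_frame(frame, prev, curr)
        print(f"{prev:>3} -> {curr:>3}: frame {frame}")
```

One consequence of this design is that walking backwards plays the recording in reverse and standing still freezes it, so the pace of the projected self would be set entirely by the participant.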

The use of sound is another element I would like to develop further. The playback of a physical movement may still look ‘normal’ when sped up or slowed down, but the spoken word must be replayed at a specific rate. Manipulating sound might communicate a change in the measure of time in a more effective manner than vision.

I would also like to investigate what would happen if the installation was installed in a site-specific manner. The initial recording needs to be in complete darkness to isolate the figure, but the playback could be presented so that the viewer is more conscious of their surroundings. How would this affect the experience, and could it then be used as more of a tool to investigate social or political interventions?

Self evaluation

I used a lot of what I had learnt from the course and this helped me to solidify my knowledge. I had made a prototype of the installation in the 'Special Topics In Programming for Performance and Installation' (MAX) module, but to make this development work I had to use skills from creative coding, physical computing, Programming for Performance and Installation and theory, along with my pre-existing skill base in rig making and photography. I think the project does develop the pre-existing 'time slice' technology in that it moves from a 2D experience to one which can be spatially interacted with. It now becomes a tool for self-reflection, developing investigations in perception, cognition, experience, all longstanding themes in art and philosophy. I think the artwork needs a lot of development both technically and conceptually but I feel it is an interesting and worthwhile path of investigation.

I understood from the beginning that it might not be possible to ‘physically’ exhibit this work in the final group show, due to COVID-19 restrictions. In light of this I decided to make the piece in my studio in Birmingham, so that I could open the work up to a test audience throughout the development. In the end, for practical reasons, it was not possible to install the work in the final exhibition, but I did open it up from my studio space in Birmingham, where I could control viewings one at a time. The artwork was ‘Covid friendly’, essentially a machine-human interface. Participants were asked to wear an LED badge, used for the machine vision to operate. The badge was a disposable element which the participant could take away with them, and which ended up as a marketing tool!

I have utilised some of the benefits of computation in speed of processing, communication between elements, machine vision, which all help automate the artwork, but the system itself has little authorship over the experience it produces. I think I could easily extend the research past the MA, focusing on a whole different set of skills such as machine learning, which could potentially take this artwork on a different path. In its current iteration I am pleased with my final project and feel that it is the culmination of two years learning. I feel I have grown in confidence and have developed the computation foundations equipping me with the skills and knowledge to develop future projects.

References

1. Podcast discussing Henri Bergson's 'Time and Free Will' (1889), https://open.spotify.com/episode/6ZdndCpk4RHWYVbWYHvcwv?si=DN37p3DtTnOYv2MDvLJRVQ

2. Theoretical cosmologist Andreas Albrecht, University of California, The Illusion of Time: What's Real? by Robert Lawrence Kuhn, July 2015, (https://www.space.com/29859-the-illusion-of-time.html)

3. Callender, C.; Edney, R. (2001). Introducing Time. Icon Books.

4. Video Mind, Earth Mind: Art, Communications, and Ecology: 5 (Semiotics and the Human Sciences) May 1993, p.19

5/6. Paul Ryan , Self-Processing, Radical Software, Volume I, Number 2 (The Electromagnetic Spectrum), Autumn 1970, p.15

7. Emergence in Interactive Art, Jennifer Seevinck, p.41, 2017

8. Husserliana, vol. X, XXXIII.

9. The Stanford Encyclopedia of Philosophy, first published Feb, 2003; substantive revision Nov, 2016, Metaphysics Research Lab, California, US, by Christian Beyer: https://plato.stanford.edu/entries/husserl/#EpoPerNoeHylTimConPheRed

Other references:

Martin Heidegger, Being and Time , 1927

Henri Bergson, Duration and Simultaneity, 1922

Peter Weibel, Chronocracy (essay), Machine Times, 2000.

Atau Tanaka, Speed of Sound (essay), Machine Times, 2000

"Machine Times" is a collection of essays, interviews and art projects that explores the relationship between time and technology in the context of art, science and philosophy.

Ingarden, Roman; Michejda, Helen R. (trans.), Time and Modes of Being, Charles C. Thomas, Jan, 1964