Lockdown_

Lockdown_ is an augmented reality (AR) installation that continues the artist's research into family history, memory, and the way digital technology may provide another lens through which to examine past experiences.

produced by: Nathan Bayliss

Concept & Context

This project is a response to current events. In this work I’m attempting to gain a new perspective on this strange time, and to give both myself as an artist and anyone who interacts with the work a way of looking at “lockdown” from multiple angles.

At the beginning of the first lockdown, I found it an intense struggle to focus on any deep thought for a sustained length of time. Within moments I would find myself back on my phone, “doomscrolling” through the news and trading whispered electronic messages with friends as we all remotely attempted to check in on each other and keep track.

Days passed, time blurred, and the domestic environment seemed more and more unreal as the number of “loops” started to pile up. New rituals emerged: new ways of marking time, completely untethered from any day/month notation. Any wider understanding of the moment came via a screen, be it a news alert, a government broadcast, or a chat with a family member on another continent.

I am interested in the phenomenology of this moment: how it feels to know you are in a moment of transition, where it is clear that no matter what comes next, things will never be the same again. Franco “Bifo” Berardi describes this moment with the Spanish word umbral, meaning threshold.

This work coalesces around the desire to explore this moment, to gain a new perspective on it, and to archive it with an eye to the future.

I’m inspired by the work of Forensic Architecture and their application of digital forensic methodologies to re-examine “settled” narratives. In this work I employ a similar approach to explore a familiar environment, building a multi-sensory mise en abyme.

This work is also influenced by Karen Barad’s concept of spacetimemattering: the idea that space, time and matter are inextricably entangled with each other, and that in the act of remembering we re-create the event anew.

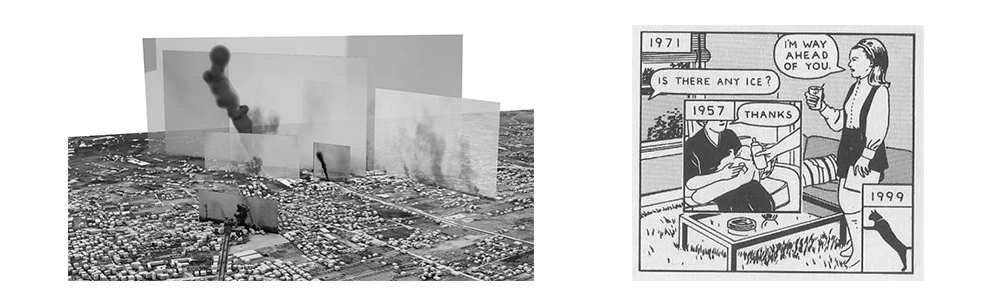

The Bombing of Rafah - Forensic Architecture, Here - Richard McGuire

Another key inspiration is the graphic novel Here, by Richard McGuire. In this story McGuire uses the fixed frame of a single room as a lens through which to view many moments throughout history, and their overlapping narratives give the reader a perspective and understanding that spans epochs.

A final inspiration comes from cinema, in particular the cinematography of Alfred Hitchcock. This moment carries with it a sense of anxiety and paranoia, and I have found the films Rear Window and Psycho to be elegant depictions of this, through their restricted narratives and forced perspectives.

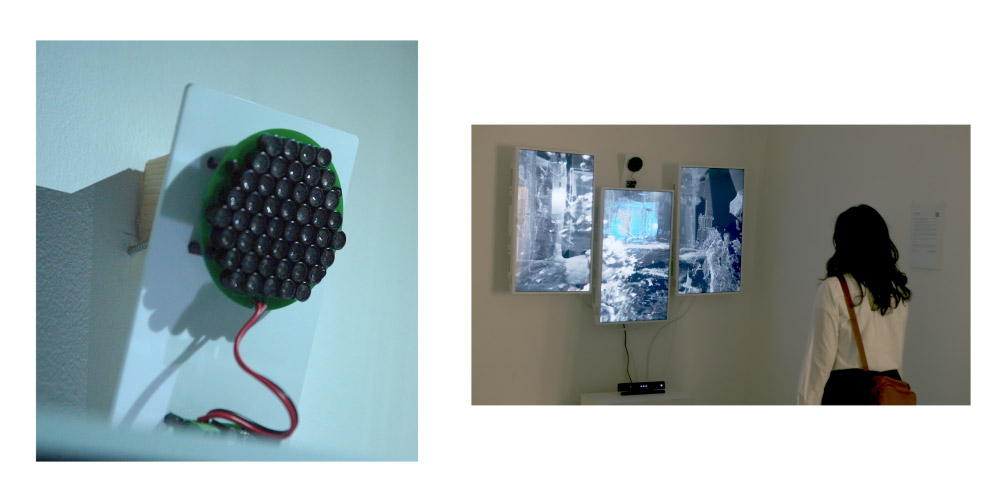

The work is designed as an ambient, screen-based installation. It lives as fragmented aspects orbiting around and through a domestic environment. As a viewer approaches the work, it loops them in and presents a unified window into the space, with their physical position translated into a temporal POV within the work: the participant becomes “time.”

Technical

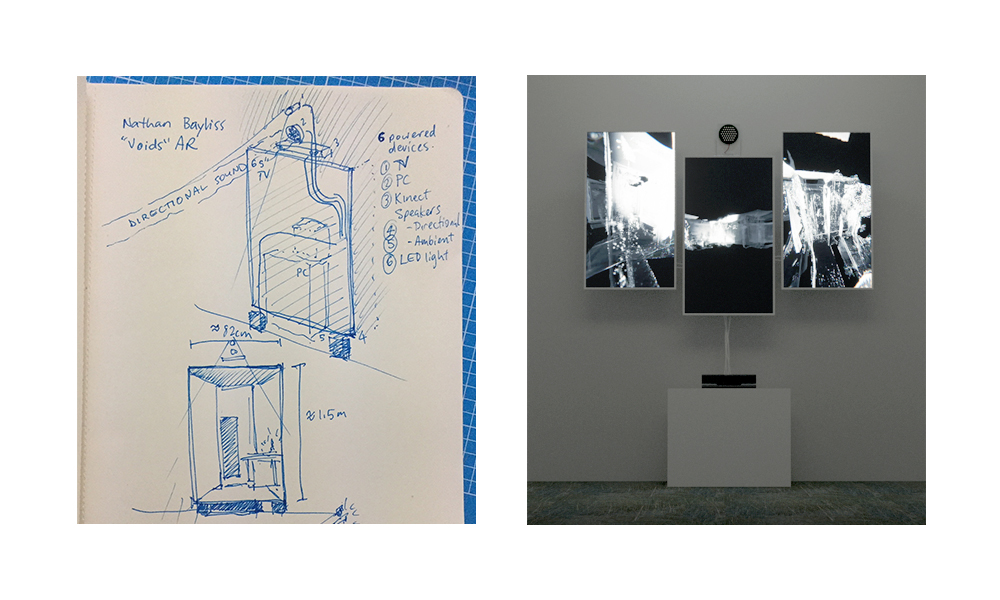

Lockdown_ is built with Unity and was developed on Windows. Unity allowed me to make rapid design changes and to leverage the powerful Kinect v2 Examples with MS-SDK asset (developed by Rumen Filkov), which handled the head tracking. I adapted a head-tracking example to work with three cameras for the triptych arrangement, and added smoothed “follow” cameras that continuously lerp (linearly interpolate) towards their target position. This smoothed the head motion and also allowed me to animate between the "default" orbiting position and the user’s POV.
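The smoothed follow cameras can be illustrated with a short sketch. It is written in Python rather than the project’s Unity C#, and the names (`FollowCamera`, `sharpness`, `blended_target`, `engagement`) are hypothetical. It assumes an exponential form of lerp smoothing, where each frame moves the camera a fraction of the remaining distance scaled by frame time, so the motion doesn’t depend on frame rate; the original scripts may have used a plain per-frame lerp.

```python
import math
from dataclasses import dataclass


@dataclass
class Vec3:
    x: float
    y: float
    z: float


def lerp(a: Vec3, b: Vec3, t: float) -> Vec3:
    """Linear interpolation: t = 0 returns a, t = 1 returns b."""
    return Vec3(a.x + (b.x - a.x) * t,
                a.y + (b.y - a.y) * t,
                a.z + (b.z - a.z) * t)


class FollowCamera:
    """Hypothetical camera that eases towards its target instead of snapping to it."""

    def __init__(self, start: Vec3, sharpness: float = 5.0):
        self.position = start
        self.sharpness = sharpness  # assumed tuning value: higher follows more tightly

    def update(self, target: Vec3, dt: float) -> Vec3:
        # Fraction of the remaining distance to cover this frame; using exp()
        # makes the result independent of frame rate.
        t = 1.0 - math.exp(-self.sharpness * dt)
        self.position = lerp(self.position, target, t)
        return self.position


def blended_target(orbit_pos: Vec3, head_pos: Vec3, engagement: float) -> Vec3:
    """Target between the default orbit (engagement 0) and the viewer's POV (engagement 1)."""
    return lerp(orbit_pos, head_pos, max(0.0, min(1.0, engagement)))
```

Ramping `engagement` from 0 to 1 once a viewer is detected, and passing `blended_target` to `update` every frame, gives a gradual hand-over from the orbiting camera to the head-tracked view while also filtering out tracking jitter.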

I decided early on that it was important to make this an AR experience, with the screen remaining static and forming a portal for the user to look through. AR provides a restricted point of view, as opposed to VR which places the viewer within the virtual world. As so much of the experience through lockdown was screen-based, the installation had to make use of screens to tell this story.

In my initial tests and mock-ups I envisaged using a single huge 4K screen, almost 1.6 metres high and a metre wide. Budget constraints ultimately led me to use three smaller screens instead, but this helped conceptually, as it allowed me to fragment the perspective and show the scene from multiple angles at once.
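Making a static screen behave like a window onto the scene is commonly done with head-coupled, off-axis (asymmetric frustum) projection. The source doesn’t say whether the head-tracking example works this way, so the Python sketch below is an assumption rather than the project’s code. It assumes a screen lying in the plane z = 0, sized like the original 1 m by 1.6 m mock-up, with the viewer’s head at (ex, ey, ez) in metres, and derives the frustum bounds by similar triangles. In Unity, a matrix of this form could be assigned to `Camera.projectionMatrix`, with the camera placed at the tracked head position and facing the screen.

```python
def off_axis_bounds(screen_min, screen_max, eye, near):
    """Asymmetric frustum bounds (left, right, bottom, top) on the near plane.

    Assumes the screen lies in the plane z = 0, spanning screen_min to screen_max
    in (x, y) metres, and the eye is at (ex, ey, ez) with ez > 0, looking along -z.
    """
    (x0, y0), (x1, y1) = screen_min, screen_max
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("the eye must be in front of the screen (ez > 0)")
    # Similar triangles: project the screen edges onto the near plane.
    scale = near / ez
    return ((x0 - ex) * scale, (x1 - ex) * scale,
            (y0 - ey) * scale, (y1 - ey) * scale)


def frustum_matrix(left, right, bottom, top, near, far):
    """OpenGL-style perspective matrix for an asymmetric frustum (row-major lists)."""
    return [
        [2 * near / (right - left), 0.0, (right + left) / (right - left), 0.0],
        [0.0, 2 * near / (top - bottom), (top + bottom) / (top - bottom), 0.0],
        [0.0, 0.0, -(far + near) / (far - near), -2 * far * near / (far - near)],
        [0.0, 0.0, -1.0, 0.0],
    ]


# Example: a 1 m x 1.6 m screen centred on the origin, viewer 2 m away and 0.5 m to the right.
SCREEN_MIN, SCREEN_MAX = (-0.5, -0.8), (0.5, 0.8)
bounds = off_axis_bounds(SCREEN_MIN, SCREEN_MAX, eye=(0.5, 0.0, 2.0), near=0.1)
projection = frustum_matrix(*bounds, near=0.1, far=50.0)
```

When the viewer steps to the right, the frustum skews to the left, so the virtual room appears to stay fixed behind the glass rather than following the head. With three screens, each would get its own bounds computed from its own rectangle.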

The focal point of the installation is the 3D reconstruction of the apartment and the figures within it. As I was already using the Kinect for user tracking, I was also able to employ it for depth-based 3D scanning using Kinect Fusion, which is included in the Kinect SDK. This turns the Kinect into a powerful 3D scanner that uses a voxel-based method to build and refine a surface mesh.
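Kinect Fusion’s voxel-based reconstruction is based on the KinectFusion approach of fusing depth frames into a truncated signed distance function (TSDF). The Python sketch below reduces the idea to a single camera ray through a row of voxels: each depth reading updates the voxels near the observed surface with a weighted running average, and the surface is recovered where the averaged distance changes sign. It does not use the Kinect SDK API; the function names, truncation distance and weight cap are illustrative assumptions.

```python
def integrate_depth(tsdf, weights, voxel_depths, measured_depth, trunc, max_weight=64.0):
    """Fuse one depth reading into the voxels along a single camera ray.

    tsdf and weights are updated in place. Distances are in metres along the ray.
    """
    for i, z in enumerate(voxel_depths):
        sdf = measured_depth - z  # > 0 in front of the surface, < 0 behind it
        if sdf < -trunc:
            continue  # well behind the observed surface: occluded, so no information
        d = min(1.0, sdf / trunc)  # truncate and normalise to [-1, 1]
        w = weights[i]
        # Weighted running average: each new reading carries weight 1.
        tsdf[i] = (tsdf[i] * w + d) / (w + 1.0)
        weights[i] = min(w + 1.0, max_weight)


def find_surface(tsdf, weights, voxel_depths):
    """Return the first depth where the fused distance crosses zero, or None."""
    for i in range(len(tsdf) - 1):
        a, b = tsdf[i], tsdf[i + 1]
        if weights[i] > 0 and weights[i + 1] > 0 and a > 0 >= b:
            t = a / (a - b)  # linear interpolation of the zero crossing
            return voxel_depths[i] + t * (voxel_depths[i + 1] - voxel_depths[i])
    return None


# Example: voxels every 1 cm along a 2 m ray, three noisy readings of a wall at ~1 m.
voxel_depths = [i * 0.01 for i in range(200)]
tsdf = [0.0] * len(voxel_depths)
weights = [0.0] * len(voxel_depths)
for reading in (1.00, 1.02, 0.98):
    integrate_depth(tsdf, weights, voxel_depths, reading, trunc=0.05)
surface = find_surface(tsdf, weights, voxel_depths)
```

Averaging the three noisy readings places the surface at roughly 1 m, which is how repeated passes refine the mesh. In 3D the zero crossing is typically extracted as a mesh with marching cubes, and any noise that survives the averaging stays in the result, as in the rough scans described below.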

In production work this kind of scan is usually cleaned up and re-topologised; for this piece, however, I kept each scanned mesh in its original state. The rough edges and noise are intrinsic to the process, and including them in the end result gives the work an honest, factual, documentary quality.

The captured slices of the environment were aligned and arranged in Cinema 4D, and saved as a single large (600 MB) FBX file which was imported into Unity.

Next, I scanned in all the characters. Each was scanned in the appropriate room and, where possible, parts of their surroundings were included in the mesh to add to the sense of surfaces blending together. Multiple actions were captured for each figure, allowing stop-frame animation within the scenes.
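Stop-frame animation from a set of scanned meshes amounts to showing exactly one captured pose at a time and swapping between poses rather than blending them. A minimal Python sketch, assuming each pose is held for a fixed time (`hold_s`, a hypothetical parameter) and that the caller toggles each mesh’s visibility from the returned flags:

```python
def stop_frame_index(time_s, hold_s, num_poses, loop=True):
    """Index of the scanned pose to show at time_s, holding each pose for hold_s seconds."""
    if num_poses <= 0 or hold_s <= 0:
        raise ValueError("need at least one pose and a positive hold time")
    step = int(time_s // hold_s)  # floor, so negative times (scrubbing back) also work
    if loop:
        return step % num_poses
    return max(0, min(step, num_poses - 1))


def visibility_flags(active_index, num_poses):
    """Exactly one captured mesh is visible at a time; no blending between poses."""
    return [i == active_index for i in range(num_poses)]
```

Because the index is a pure function of time, the same logic would also work when time comes from a scrubbed play-head and runs backwards.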

Once in Unity, most of the development time was spent working out an effective mode of interaction. In my initial tests I used collider objects to trigger fade states on various parts of the scene, but this proved very tricky to get working well. The collision objects had fixed XYZ locations in the “real world”, and because I was testing in my room, which is only 2 metres deep, they didn’t reflect the scale of the exhibition space. There was also little feedback to tell users what they were triggering, or even that they were triggering anything. Unless the fades were instantaneous the effect was too subtle, and when they were instantaneous, objects appeared to pop on and off screen.

The second iteration of the project made the connection between user position and scene interaction much more direct through the use of animation timelines. Timelines let me choreograph many overlapping layers of animation and then use the user’s X position to scrub the timeline’s play-head. As the user moves left and right, they move time back and forth, and because a timeline can control any animatable property of any object, I could choreograph lighting, material and object changes on the fly.
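The scrubbing relationship can be written as a clamped linear map from the tracked X position to timeline time. This Python sketch assumes a hypothetical interaction range in metres (`x_min` to `x_max`) and a timeline `duration` in seconds, and it doesn’t claim to reproduce the project’s scripts. In Unity, the result would typically be written to a `PlayableDirector`’s `time` property, followed by a call to `Evaluate()`.

```python
def x_to_timeline_time(x, x_min, x_max, duration, invert=False):
    """Map a tracked X position (metres) to a play-head time (seconds), clamped to the timeline."""
    if x_max <= x_min:
        raise ValueError("x_max must be greater than x_min")
    u = (x - x_min) / (x_max - x_min)  # 0 at the left edge of the range, 1 at the right
    u = max(0.0, min(1.0, u))
    if invert:
        u = 1.0 - u  # e.g. if the sensor's X axis runs opposite to the screen's
    return u * duration


# Hypothetical setup: a 3 m wide tracked zone in front of a 30-second timeline.
play_head = x_to_timeline_time(0.0, x_min=-1.5, x_max=1.5, duration=30.0)
```

Clamping keeps the play-head on the first or last frame when the viewer steps outside the tracked range, instead of running off either end of the timeline.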

This mode of interaction let me rapidly build scenes and experiment with different visual effects. I used Unity’s High Definition Render Pipeline (HDRP) for its additional visual controls: depth of field, materials with transparent and refractive qualities, environmental fog and so on. Shaders can be a bit of a wormhole; there are so many options that it can be overwhelming. In the end I kept things fairly simple, aiming for an X-ray effect and using single-sided shaders that make objects disappear when seen from their reverse side, heightening the sense of eerie weirdness.
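The single-sided look relies on back-face culling: a face is drawn only when its normal points towards the camera, so an object seen from behind vanishes. The Python sketch below shows that test, plus a Fresnel-style rim term as one common way of producing an X-ray look. The source doesn’t describe how its X-ray shader was built, so the rim term and its `power` parameter are assumptions, and in practice both calculations would run per pixel on the GPU rather than in a script.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _normalise(v):
    length = math.sqrt(_dot(v, v))
    if length == 0:
        raise ValueError("cannot normalise a zero-length vector")
    return tuple(x / length for x in v)


def is_front_facing(point, normal, camera_pos):
    """Back-face culling: draw the face only if its normal points towards the camera."""
    return _dot(normal, _sub(camera_pos, point)) > 0.0


def xray_alpha(point, normal, camera_pos, power=2.0):
    """Fresnel-style rim opacity: edge-on surfaces stay opaque, head-on surfaces fade out."""
    n = _normalise(normal)
    v = _normalise(_sub(camera_pos, point))
    facing = max(0.0, _dot(n, v))  # 1 when looking straight at the face, 0 when edge-on
    return (1.0 - facing) ** power
```

Combining the two leaves only the silhouettes of objects visible from the front, while everything seen from behind disappears entirely.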

Scene objects were controlled via Unity C# scripts. I designed the scripts with public variables that could be edited in the Unity Inspector, allowing me to experiment with different values at runtime. The master script rotates the scene through the various “viewpoints” and turns the corresponding timelines on and off, which drive the lighting and animation effects.
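The master script’s job of rotating through viewpoints and switching timelines can be sketched as a simple timed state machine. In this Python version, `Viewpoint`, `SceneDirector` and the per-viewpoint `duration_s` are hypothetical stand-ins: in Unity the durations would be public fields editable in the Inspector, and the `timeline_enabled` flags would enable or disable the corresponding timeline components. Exactly one viewpoint’s timeline is active at any time.

```python
from dataclasses import dataclass


@dataclass
class Viewpoint:
    name: str
    duration_s: float  # seconds to hold this viewpoint (hypothetical Inspector value)
    timeline_enabled: bool = False


class SceneDirector:
    """Hypothetical master controller: cycles viewpoints and enables one timeline at a time."""

    def __init__(self, viewpoints):
        if not viewpoints or any(vp.duration_s <= 0 for vp in viewpoints):
            raise ValueError("need at least one viewpoint, each with a positive duration")
        self.viewpoints = viewpoints
        self.elapsed = 0.0
        self._activate(0)

    def _activate(self, index):
        self.index = index
        for i, vp in enumerate(self.viewpoints):
            vp.timeline_enabled = (i == index)

    @property
    def current(self):
        return self.viewpoints[self.index]

    def update(self, dt):
        """Advance by dt seconds, moving on to the next viewpoint(s) as durations expire."""
        self.elapsed += dt
        while self.elapsed >= self.current.duration_s:
            self.elapsed -= self.current.duration_s
            self._activate((self.index + 1) % len(self.viewpoints))
        return self.current
```

Carrying the leftover time into the next viewpoint, rather than resetting it to zero, keeps the cycle from drifting when frame times are uneven.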

The audio was designed to offer an earthy, textural counterpoint to the scientific visuals. Early in development I acquired a parametric ultrasonic speaker array, which creates an eerie, highly directional “beam” of sound. Placed above the triptych, it created a sweet-spot audio zone into which the textural effects were projected; the result sounds like whispering directly into the ear. This serves two functions: first, it breaks down the here/there threshold of the AR; second, it encourages the user to spend more time in the best viewing position, where the sound is loudest.

I used a Roland R-26 recorder to capture environmental sound effects in various places throughout the house. These recordings were trimmed and normalised in Audacity and then imported into Unity. By placing them in the 3D scene and adjusting their falloff distances, I was able to create an ambient soundscape that gives the installation a constant soundtrack even when no viewer is present.
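Two of these audio steps can be sketched numerically in Python. Peak normalisation scales a recording so that its loudest sample reaches a target level in dBFS, the kind of adjustment Audacity’s Normalize effect makes to peak amplitude. Distance falloff is shown as an inverse-distance gain that stays at full volume inside a minimum distance and stops attenuating beyond a maximum distance, similar in shape to the logarithmic rolloff on Unity’s `AudioSource`; Unity’s exact curve may differ, and the function names and parameters here are illustrative.

```python
def peak_normalise(samples, target_db=-1.0):
    """Scale samples so the largest absolute value reaches target_db (dBFS)."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # silence: nothing to scale
    target = 10 ** (target_db / 20.0)  # dBFS to linear amplitude
    gain = target / peak
    return [s * gain for s in samples]


def inverse_distance_gain(distance, min_distance, max_distance):
    """Full volume inside min_distance, 1/distance falloff, no further attenuation past max_distance."""
    if not 0 < min_distance <= max_distance:
        raise ValueError("require 0 < min_distance <= max_distance")
    d = min(max(distance, min_distance), max_distance)
    return min_distance / d
```

Normalising every recording to the same peak first means the falloff distances alone decide how prominent each sound is in the room, which makes the soundscape easier to balance.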

A second layer of audio was added to each animation timeline, and I wrote a script that takes the velocity of the user’s motion and shifts the pitch of the sound up and down. This created a direct, responsive link between the user’s behaviour and the installation, while remaining subtle enough to encourage exploration rather than seeming overtly game-like.
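The velocity-to-pitch script might look something like the following Python sketch. It estimates velocity from successive tracked X positions, smooths it with an exponential moving average to damp tracking jitter, and maps it onto a pitch multiplier around 1.0, the normal-speed value of Unity’s `AudioSource.pitch`. Having movement in one direction raise the pitch and the other lower it, rather than letting speed alone drive it, is an assumption, as are the `sensitivity`, smoothing and clamp values.

```python
class PitchFromVelocity:
    """Hypothetical mapping from the viewer's sideways velocity to an audio pitch multiplier."""

    def __init__(self, sensitivity=0.5, smoothing=0.2, min_pitch=0.8, max_pitch=1.2):
        self.sensitivity = sensitivity  # pitch change per metre/second (assumed)
        self.smoothing = smoothing      # 0..1, fraction of each new estimate blended in
        self.min_pitch = min_pitch
        self.max_pitch = max_pitch
        self._last_x = None
        self._velocity = 0.0

    def update(self, x, dt):
        """Feed the latest tracked X position (metres) and frame time (seconds); return pitch."""
        if dt <= 0:
            raise ValueError("dt must be positive")
        if self._last_x is None:
            self._last_x = x
            return 1.0  # no velocity estimate yet: normal pitch
        raw_velocity = (x - self._last_x) / dt
        self._last_x = x
        # Exponential moving average damps jitter from the skeleton tracker.
        self._velocity += (raw_velocity - self._velocity) * self.smoothing
        pitch = 1.0 + self.sensitivity * self._velocity
        return max(self.min_pitch, min(self.max_pitch, pitch))
```

The narrow clamp range is what keeps the effect subtle: even a fast sideways step only bends the pitch a little, so it reads as the room responding rather than as a game mechanic.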

Future development

In a future iteration of this project I aim to use Unity’s mobile AR capabilities together with ARKit so that mobile users can examine this work in their own location. My initial concept included a multi-user dimension to the experience, and it would be interesting to let users scan in their own environments and add them to the main scene through AR geometry scanning.

During the viva I was asked about the use of screens for the installation, and it was suggested that using G05 might have been interesting. During testing I was able to get the system working on my home projector, turning my room into a sort of holodeck. I deliberately used the screens as part of the overall “story” of the work; however, it would be interesting to use larger screens at different angles to create a more compelling threshold between the AR world and the user’s environment.

I would like to add more nuance to both the user’s control and the detail in the scenes. Currently the only input is the X-axis of the user’s position. I would push this further with depth-related controls, for example adding extra content to the scenes, or using visual effects to show users when they are too close to the screen.
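The proposed “too close to the screen” feedback could be driven by a single intensity value computed from the tracked depth. This Python sketch is purely hypothetical: the thresholds (`warn_start_m`, `min_distance_m`) are invented, and the returned value would feed whichever visual effect is chosen. A smoothstep curve eases the effect in, avoiding the abrupt popping seen with the earlier collider-based fades.

```python
def proximity_warning(distance_m, warn_start_m=1.2, min_distance_m=0.6):
    """Warning intensity in [0, 1]: 0 beyond warn_start_m, 1 at or inside min_distance_m."""
    if warn_start_m <= min_distance_m:
        raise ValueError("warn_start_m must be greater than min_distance_m")
    u = (warn_start_m - distance_m) / (warn_start_m - min_distance_m)
    u = max(0.0, min(1.0, u))
    return u * u * (3.0 - 2.0 * u)  # smoothstep: eases in and out, no sudden pop
```

The same depth reading could also drive a second timeline alongside the existing X-axis scrub, which would be one way to add the extra depth-dependent content.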

Self Evaluation & Covid Interruptions

By now the word “unprecedented” is almost a meme, but it continues to be a very accurate summary of the year’s events. Against that backdrop, I’m very proud of this project. In normal times there are aspects I would have liked to explore further (detailed in the section above), but right now I’m simply happy that I was able to complete this work to a level of polish that I feel stands up to anything I’ve made so far. Two weeks before the exhibition I was discussing deferral with my tutor, and it was only after that conversation that I properly began production on this piece.

All that being said, I did encounter some issues during the install that I would address in future. First, I would centre the triptych on its wall rather than placing it off to one side. It was placed there to accommodate another artist’s work nearby, but ultimately I think I could have centred it, and it would have made the work look stronger.

Secondly, it became clear during testing that my ultrasonic speaker completely broke one of the other installations in the room, which used ultrasonic depth sensors. We only found this out on the day of the exhibition, so there was no time to re-install the work elsewhere in the church, and as a result I had to use different speakers. This was unfortunate, and next time I will make sure I understand how my installation interacts with the pieces around it.

Ultimately though these were small bumps in the road - it was a minor miracle that we managed to have an exhibition at all. I think this work is timely, and will serve as an interesting document to revisit and expand on as the next chapter of this story unfolds.

References

- Versobooks.com. (n.d.). Life in Lockdown: Franco “Bifo” Berardi. [online] Available at: https://www.versobooks.com/blogs/4668-life-in-lockdown-franco-bifo-berardi [Accessed 28 Sep. 2020].

- Forensic-architecture.org. (2019). Forensic Architecture. [online] Available at: https://forensic-architecture.org/.

- Forensic Architecture. (n.d.). Forensic Architecture General Press Pack [image]. CC BY-SA 4.0. [online] Available at: https://commons.wikimedia.org/w/index.php?curid=76213501.

- Barad, K. (2010). Quantum Entanglements and Hauntological Relations of Inheritance: Dis/continuities, SpaceTime Enfoldings, and Justice-to-Come. Derrida Today, 3(2), pp.240–268.

- McGuire, R. (1989). Here [single panel from the comic]. RAW, Vol. 2, No. 1. [online] Available at: https://en.wikipedia.org/wiki/File:HereComic.jpg.

- The Quietus. (n.d.). A Cinematic Lockdown: Confinement In the Films Of Alfred Hitchcock. [online] Available at: https://thequietus.com/articles/28184-alfred-hitchcock-confinement-rear-window-coronavirus [Accessed 28 Sep. 2020].

- Filkov, R. (n.d.). Kinect v2 Examples with MS-SDK. [online] Unity Asset Store. Available at: https://assetstore.unity.com/packages/3d/characters/kinect-v2-examples-with-ms-sdk-18708.