How to Save a Species

As DNA is treated as editable code, the boundaries between extinct and existing species become blurry.

produced by: Veera Jussila

Introduction

How to Save a Species is an interactive installation that addresses de-extinction. With technologies like CRISPR-Cas9, humans are increasingly trying to edit existing species and revive ones that disappeared long ago. Like these gene editing technologies, the installation questions the linear concept of time. However, it also challenges our tendency to reduce other species to editable objects.

Concept and background research

As a result of gene editing, ideas that would have been dismissed as science fiction a while ago are now a topic for serious discussion. Suddenly it seems that even turning back time might be possible. Pieces of the DNA of the Yuka mammoth have already shown signs of biological activity when inserted into mouse eggs (Yamagata et al 2019). Much of the attention has recently focused on CRISPR-Cas9 technology, commonly referred to as “gene scissors”. With CRISPR-Cas9, researchers try to bring back woolly mammoths, passenger pigeons and other extinct species by copy-pasting the genome of the extinct species into living cell cultures of related species.

Before embarking on this project, I had already studied modern de-extinction technologies for my research project. I argue that the idea of reprogramming species can be seen as rhetorical software (Doyle 1997 cited by Chun et al 2011, p. 105) where these actions are justified with rhetorics of code and computing. According to Chun et al (2011, p. 97), computation can be seen as a means of regenerating archives. In CRISPR-Cas9 de-extinction projects, old data is retrieved from the memory in the hopes of producing the kind of software that is considered desirable.

This background work has directed me towards an installation where linear narratives and boundaries between phenotypes get distorted. With genetic resurrection practices, humans are looking to re-tell a story about the fate of a non-human species. Nature is seen as a system that can be edited with predictable consequences, even when trying to save species that no longer exist. When studying these themes, I was impressed by the artwork Asunder where Tega Brain, Julian Oliver and Bengt Sjölén (2019) present a fictional AI manager that calculates absurd fixes to planetary challenges. For my own project, time-based media and programming seemed to be fitting tools for addressing the very themes of time manipulation and the rhetorics of reprogramming nature. I was also interested in the way that Morehshin Allahyari (2020) plays with reimagining the past in her art and how Amy Karle (2019) has explored hypothetical evolutions based on 3D scan data of a Triceratops fossil.

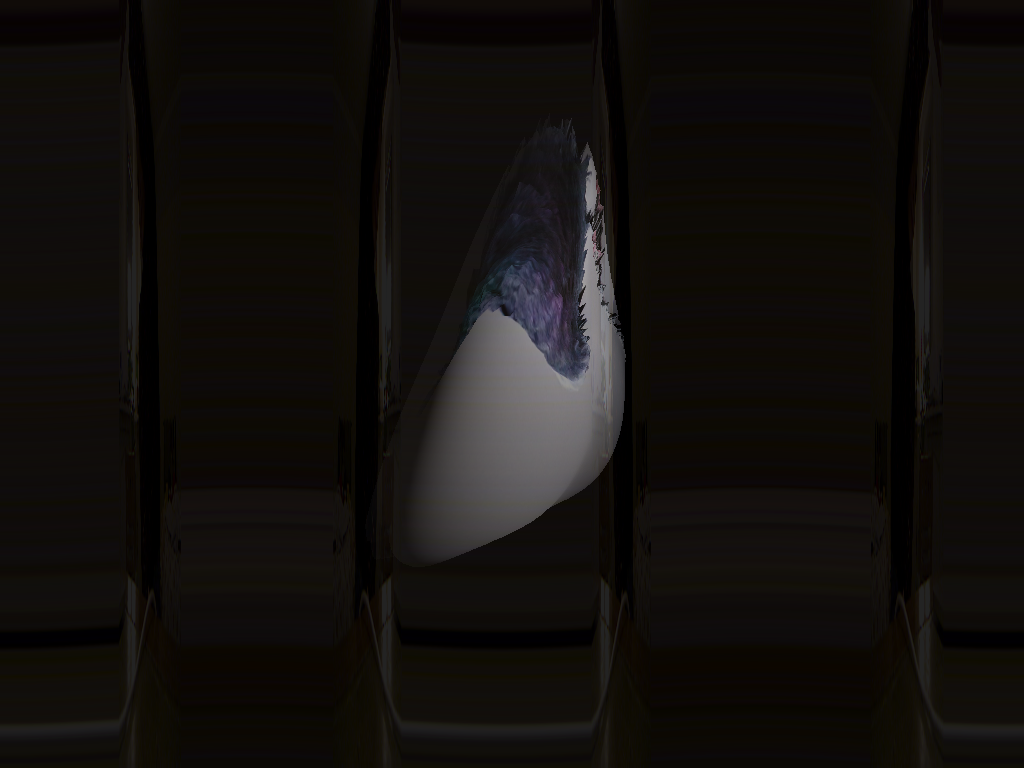

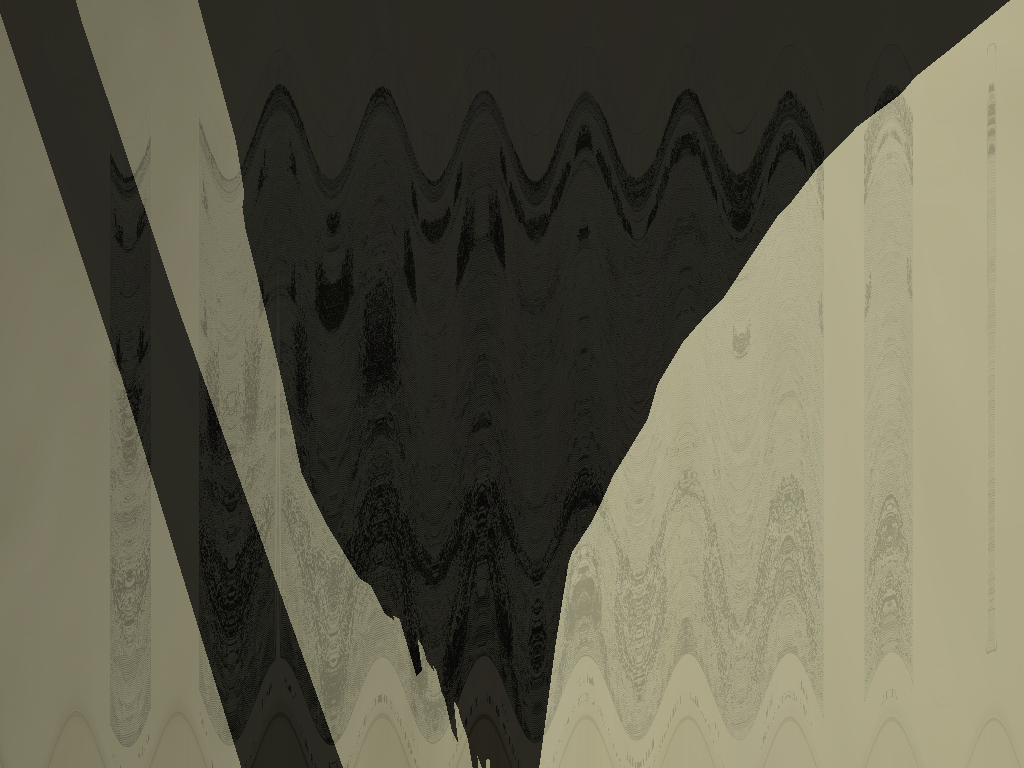

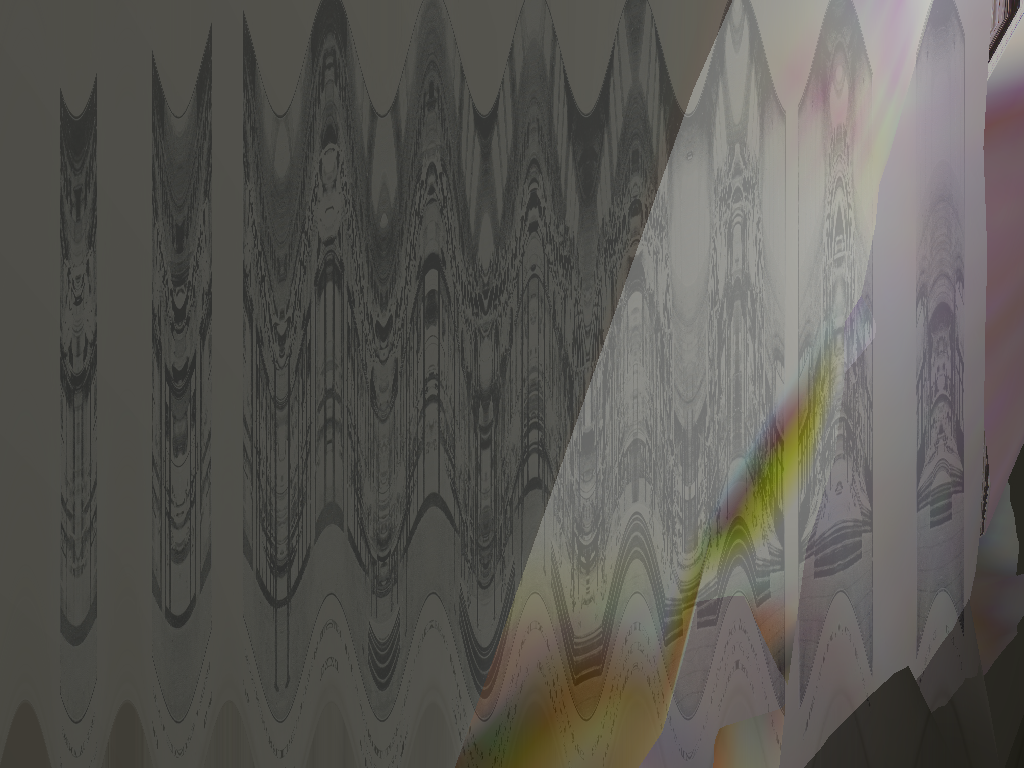

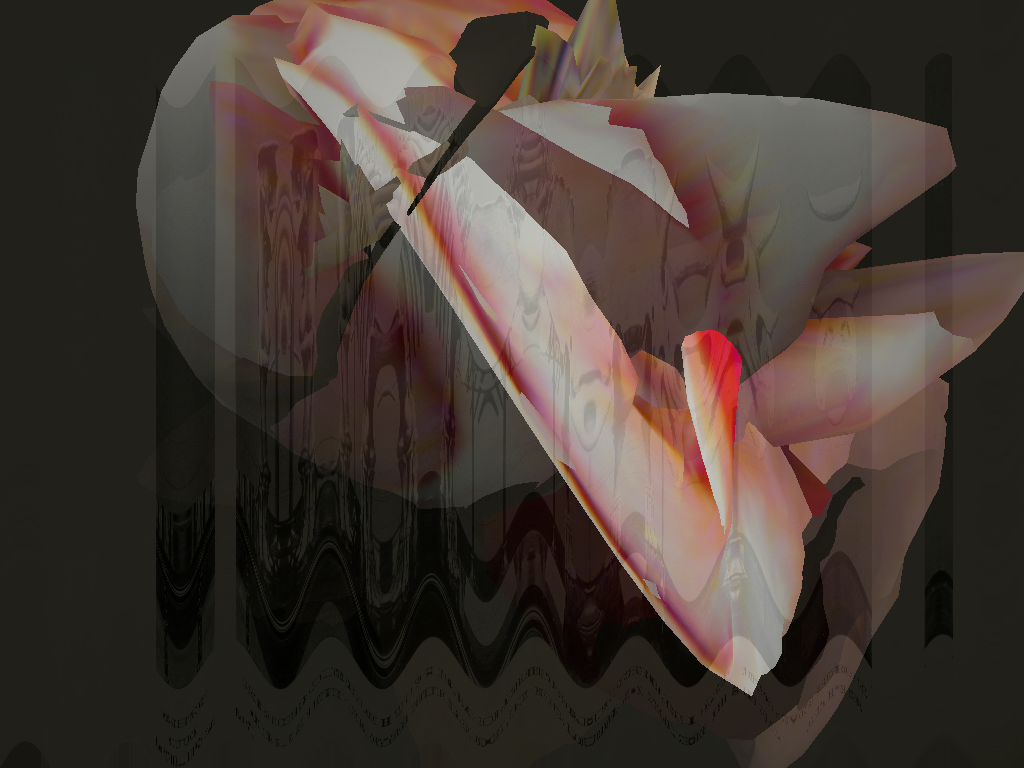

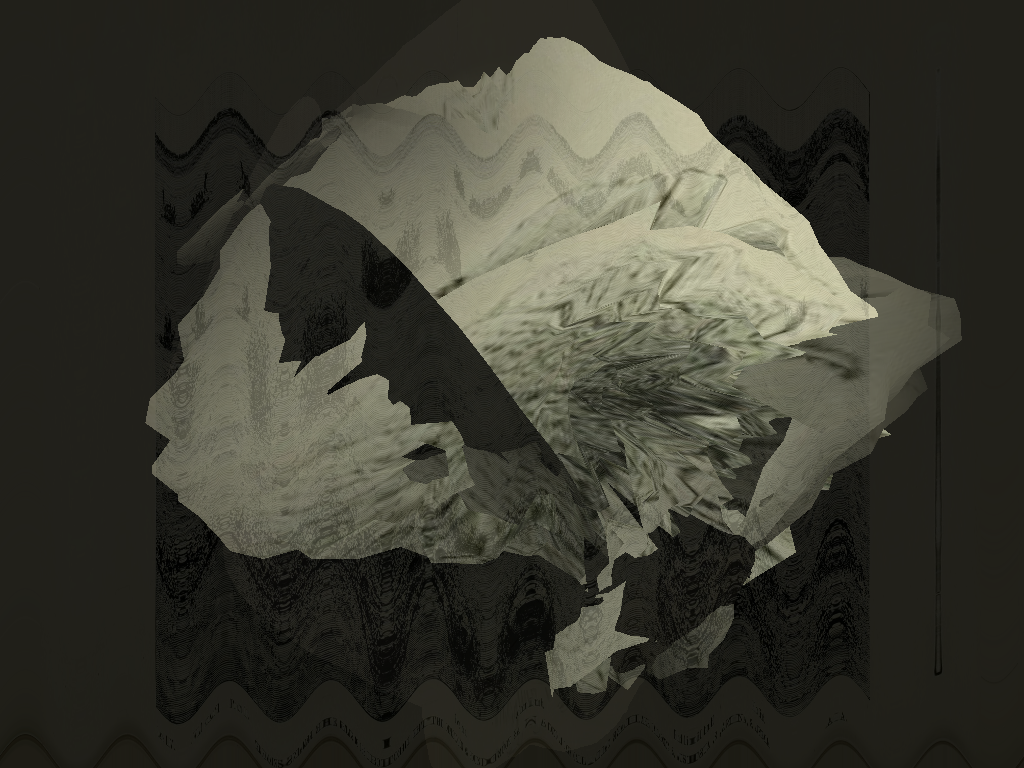

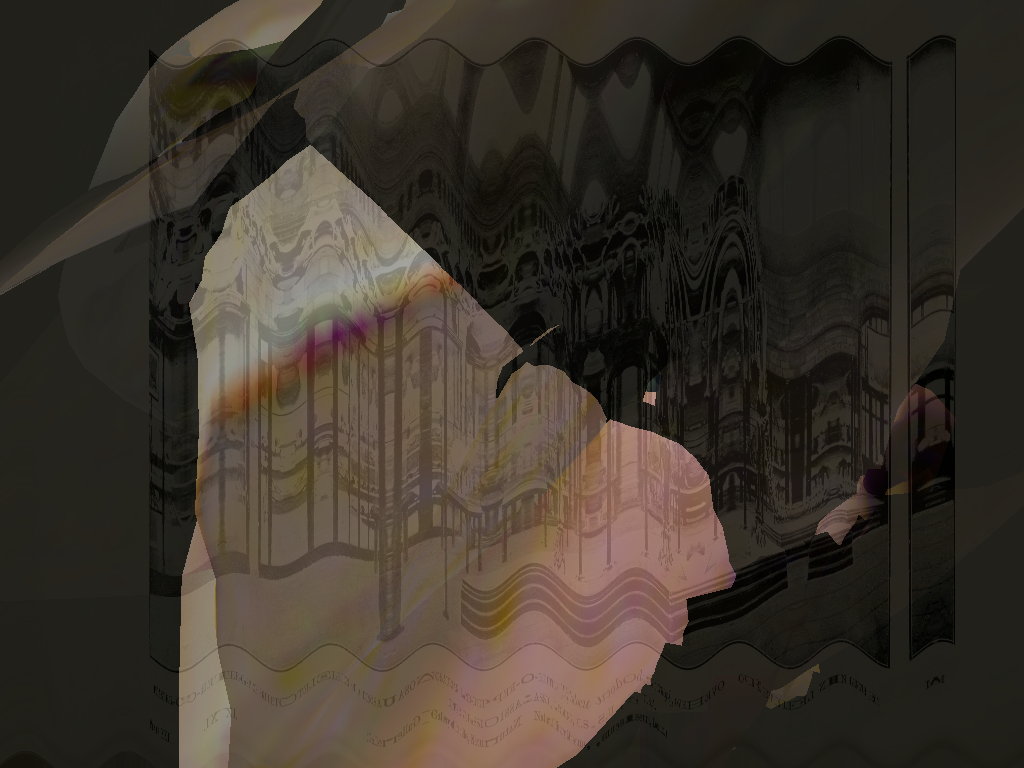

My aim was to create an interactive time machine – or a species generator – where the boundaries between future, past and present get blurred and where the divide between subjects and editable objects is increasingly difficult to maintain. The user gets one minute to interact with the abstract shape and its background, bringing up sights of unknown species and eerie environments. An important feature is that the program saves a screenshot of the result every 60 seconds. The title, How to Save a Species, thus has a double meaning. Via the video buffer, the user can end up captured in the abstract scene as well. These snapshots serve as alternative monuments: following Donna Haraway (2016, p. 25, 28), they are about re-membering and com-memorating “who lives and who dies and how in the string figures of naturalcultural history”.
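The 60-second snapshot cycle can be pictured with a small timer. The following is only a minimal sketch in plain C++, not the project's code: the class name `SnapshotTimer` and its interface are hypothetical, and the assumption is that the application passes in its elapsed running time once per frame.

```cpp
#include <cassert>

// Hypothetical helper (not from the project): reports when a fixed
// interval, e.g. 60 seconds, has passed since the last snapshot.
class SnapshotTimer {
public:
    explicit SnapshotTimer(double intervalSeconds)
        : interval(intervalSeconds), nextDue(intervalSeconds) {
        assert(intervalSeconds > 0.0);
    }

    // Call once per frame with the time since program start, in seconds.
    // Returns true on the frame where a snapshot is due.
    bool update(double elapsedSeconds) {
        if (elapsedSeconds < nextDue) return false;
        // Skip any missed intervals so a slow frame yields a single snapshot.
        while (nextDue <= elapsedSeconds) nextDue += interval;
        return true;
    }

private:
    double interval;  // seconds between snapshots
    double nextDue;   // elapsed time at which the next snapshot is due
};
```

In an openFrameworks app, one plausible use would be to call `update(ofGetElapsedTimef())` each frame and save the screen with `ofSaveScreen()` whenever it returns true; the same moment could also reset the scene for the next visitor.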

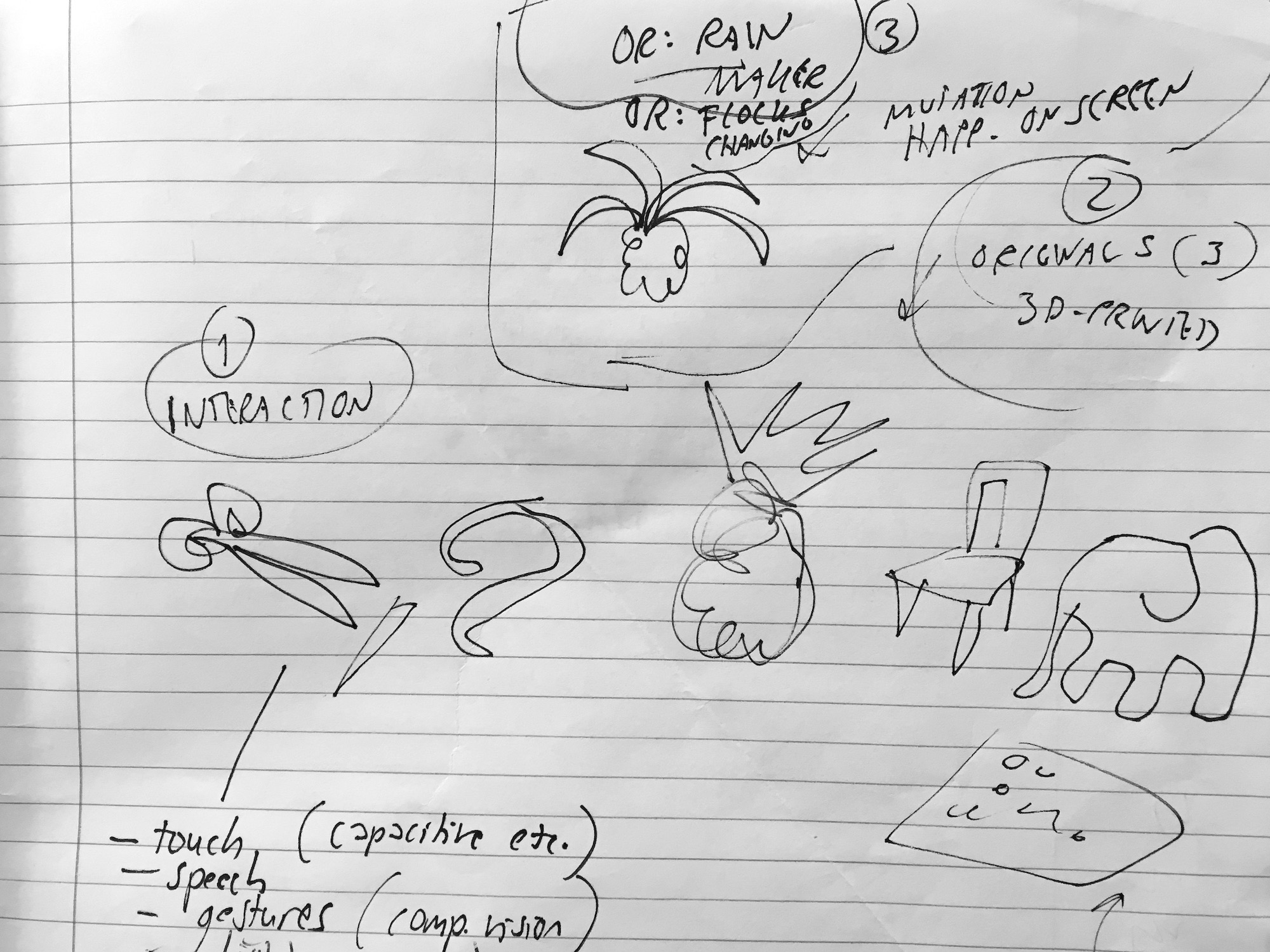

During the planning, it became clear that an installation that would require soldering, 3D printing and projectors would not be possible because of the Covid-19 situation. I decided to make a simple prototype that would present one approach to my themes on a laptop screen.

Technical

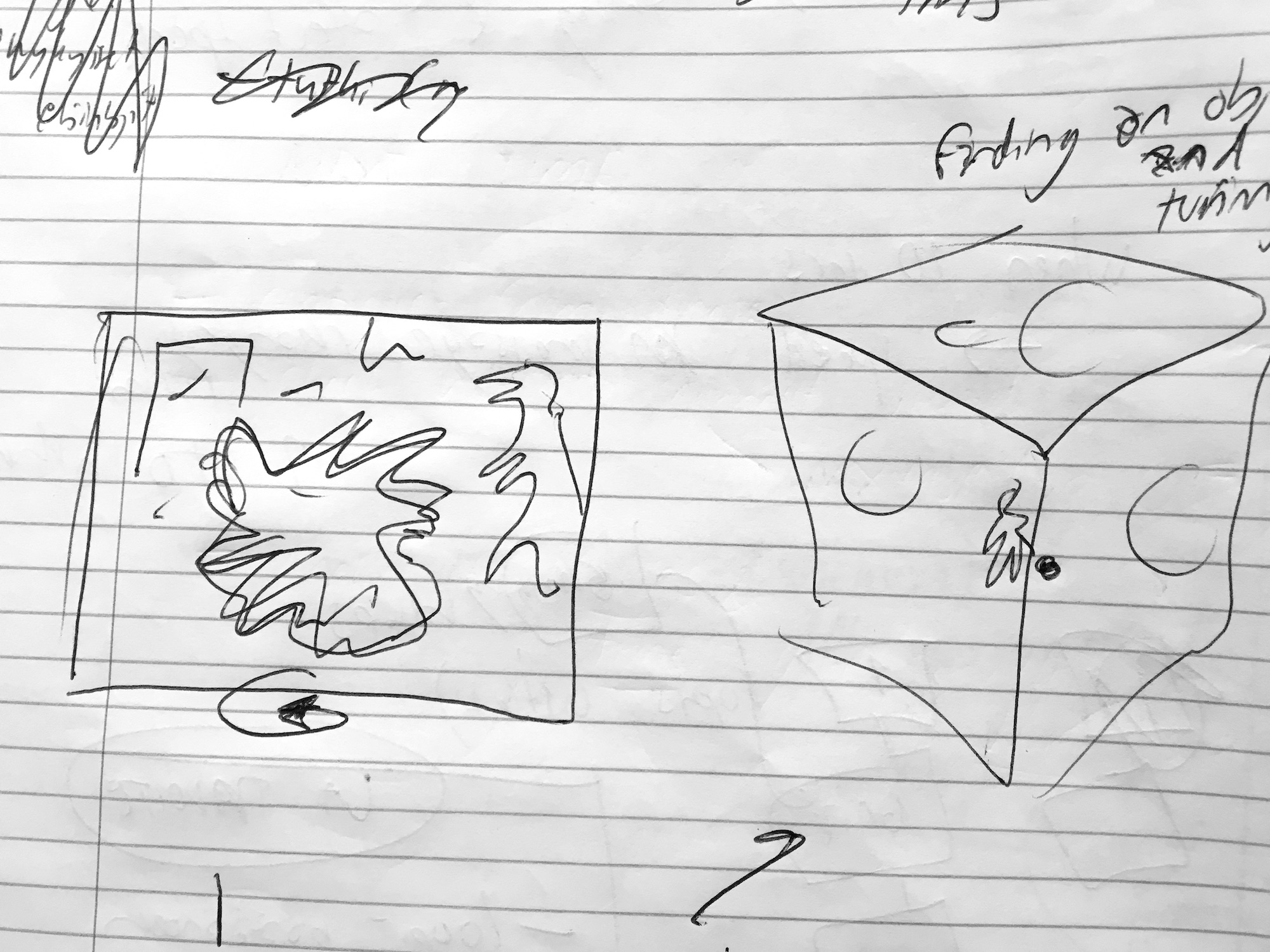

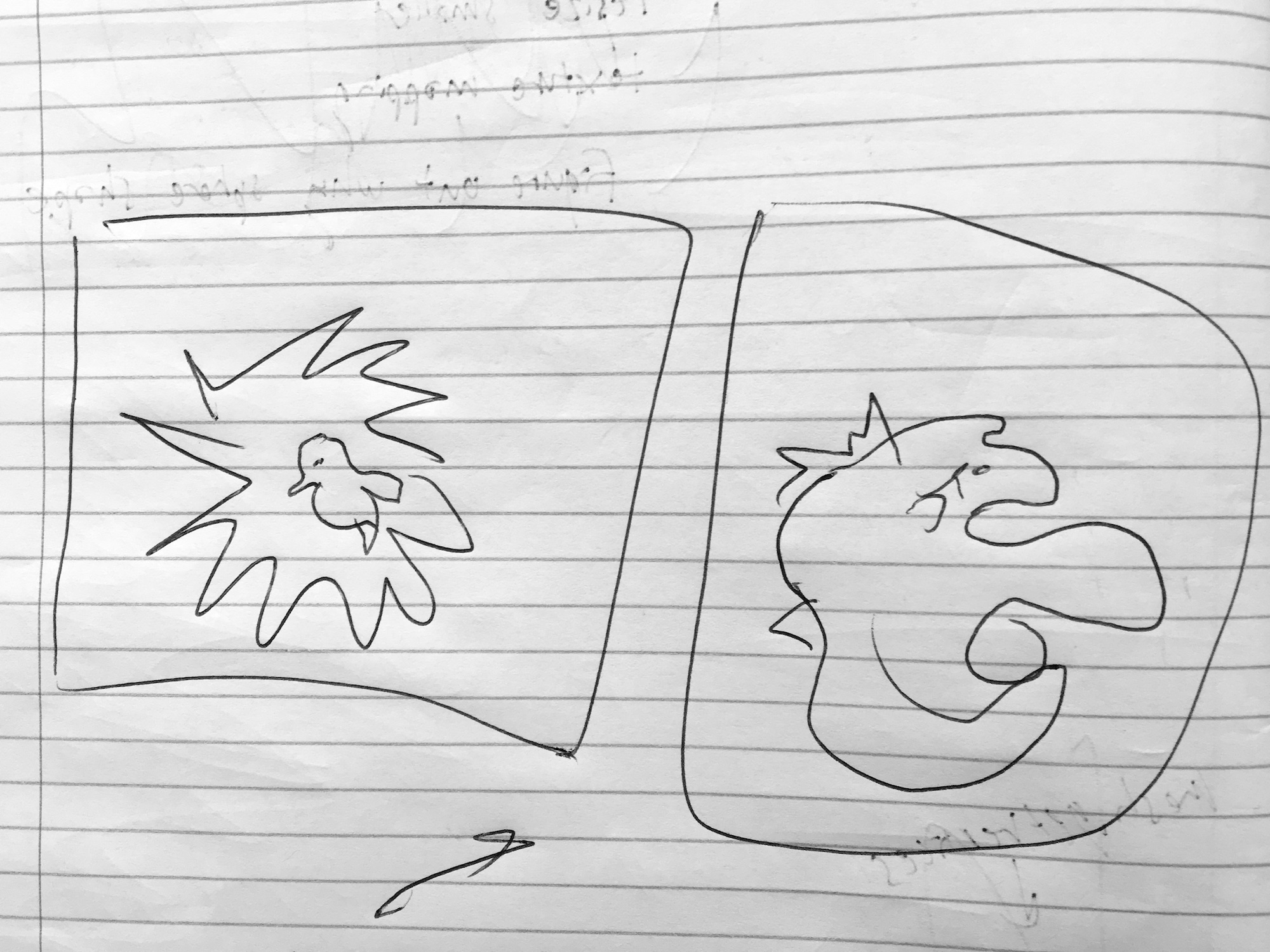

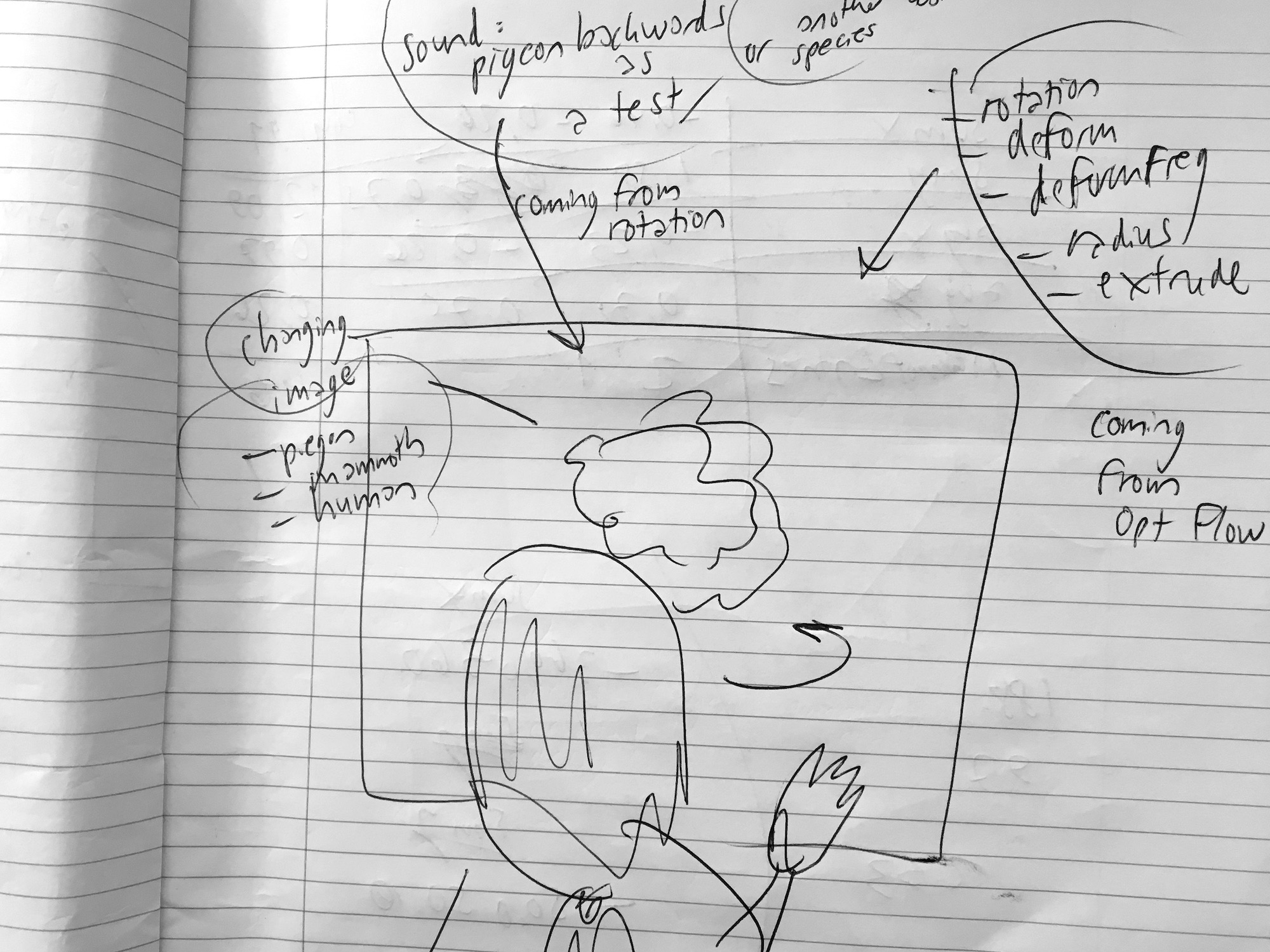

Visuals are coded with openFrameworks. Besides my artistic pursuits, I took this project as an opportunity to learn the basics of 3D animation and meshes. I soon realized that having several layers of FBOs and playing with transparency would give me the effect of different time epochs and eerie locations. I chose to play with a rotating sphere that would undergo transformations, a nod to crystal balls and predicting the future as well. Following the example codes from Perevalov and Tatarnikov (2015, pp. 108-122, 134-201), I used textures as the contents for my FBOs. Images of extinct species from the Biodiversity Heritage Library are mixed with photos of modern species and video buffer content. Like the changes of the contents, rotation and deformations are based on interaction. Inspired by the concept of fictioning by Burrows and O’Sullivan (2019), the user can also move up and down in time, seeing fragments of creatures and places that don’t exist in the present.
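The layering idea can be illustrated without any graphics library. The sketch below is an assumption-laden simplification rather than the installation's code: it treats each FBO layer as a single colour with an opacity and composites the layers back to front with ordinary alpha blending. The names `Rgb`, `Layer`, `blendOver` and `composite` are hypothetical.

```cpp
#include <cassert>
#include <vector>

struct Rgb { float r, g, b; };  // colour channels in the range [0, 1]

// Blend `top` over `bottom` with opacity `alpha` in [0, 1].
Rgb blendOver(const Rgb& bottom, const Rgb& top, float alpha) {
    assert(alpha >= 0.0f && alpha <= 1.0f);
    const float keep = 1.0f - alpha;  // share of the lower layer that stays visible
    return { top.r * alpha + bottom.r * keep,
             top.g * alpha + bottom.g * keep,
             top.b * alpha + bottom.b * keep };
}

// One semi-transparent layer, standing in for the contents of one FBO.
struct Layer { Rgb color; float alpha; };

// Composite the layers back to front onto a background colour.
Rgb composite(Rgb background, const std::vector<Layer>& layers) {
    Rgb out = background;
    for (const Layer& layer : layers) {
        out = blendOver(out, layer.color, layer.alpha);
    }
    return out;
}
```

With a black background, a half-transparent white layer and a half-transparent red layer on top, every earlier layer still shows through the later ones, which is the kind of overlap that lets several images stay visible at once.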

The major part of the development was choosing the best form of interaction. One tested concept was to hide a smartphone with gyrOSC inside an empty ball that the user would then rotate on a flat surface. However, as only one form of interaction was allowed, this option would not have easily enabled the use of the video buffer. I tested optical flow and machine learning solutions as well, each of them resulting in different visual outputs. For example, optical flow tended to result in very abstract textures.

I also considered using physical scissors and cutting as the input, referring to the idea of CRISPR-Cas9 as gene scissors. This could have been done by tracking the color of the scissors, for example. However, this approach started to feel too literal when I got eerie, abstract results with the visuals.

Finally, Kinect proved to be the most suitable sensor for this project. Its depth thresholds can be adjusted to react to hand movement while ignoring people who are just passing by the artwork. In the exhibition space, Kinect could be hidden from the user behind the screen.
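The thresholding can be sketched as follows. This is a hedged illustration in plain C++, not the code used in the prototype: it assumes the depth image arrives as a row-major array of distances in millimetres, and the function name `findHand` and the near and far limits are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct HandEstimate {
    bool found;  // false when no pixel falls inside the depth band
    float x;     // centroid column of the in-range pixels
    float y;     // centroid row of the in-range pixels
};

// Keep only pixels whose depth lies in [nearMm, farMm] and return their centroid.
// Anything closer or farther, such as a passer-by, is ignored.
HandEstimate findHand(const std::vector<std::uint16_t>& depthMm,
                      int width, int height,
                      std::uint16_t nearMm, std::uint16_t farMm) {
    assert(width > 0 && height > 0);
    assert(depthMm.size() == static_cast<std::size_t>(width) * height);
    long sumX = 0, sumY = 0, count = 0;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const std::uint16_t d = depthMm[static_cast<std::size_t>(y) * width + x];
            if (d >= nearMm && d <= farMm) {
                sumX += x;
                sumY += y;
                ++count;
            }
        }
    }
    if (count == 0) return { false, 0.0f, 0.0f };
    return { true, static_cast<float>(sumX) / count, static_cast<float>(sumY) / count };
}
```

The centroid could then drive the rotation or deformation of the sphere, while an empty result leaves the scene idle.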

During the project, I took time to learn about shaders and found that they would suit my purpose of presenting time as a liquid material. Developing a shader example by Perevalov (2013, pp. 219-225), I was able to create a slit scanning effect that captured the feeling of time travel.
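The principle of slit scanning is that different rows of the output come from different moments in time. The sketch below shows this on the CPU in plain C++ and should be read as an assumption, not as the shader used in the project: frames are small grids of integers, and the class name `SlitScan` is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

using Row = std::vector<int>;    // one row of pixel values
using Frame = std::vector<Row>;  // a frame is a stack of rows

// Keeps a short history of frames and builds an output in which
// lower rows are taken from progressively older frames.
class SlitScan {
public:
    explicit SlitScan(std::size_t maxFrames) : maxFrames(maxFrames) {
        assert(maxFrames > 0);
    }

    void addFrame(const Frame& frame) {
        // All frames are assumed to have the same number of rows.
        assert(history.empty() || frame.size() == history.front().size());
        history.push_front(frame);  // newest frame sits at index 0
        if (history.size() > maxFrames) history.pop_back();
    }

    Frame render() const {
        assert(!history.empty());
        const std::size_t rows = history.front().size();
        Frame out(rows);
        for (std::size_t y = 0; y < rows; ++y) {
            // Map the row index to a delay: top row newest, bottom row oldest.
            const std::size_t delay =
                rows > 1 ? y * (history.size() - 1) / (rows - 1) : 0;
            out[y] = history[delay][y];
        }
        return out;
    }

private:
    std::size_t maxFrames;
    std::deque<Frame> history;
};
```

A moving subject gets stretched and bent in the result, because the top of the image shows where it is now and the bottom shows where it was several frames ago.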

The sounds were created by sending OSC messages from openFrameworks to MaxMSP. For the prototype, I have tested this process with sounds of a pigeon cooing and a pigeon taking off. Changes in the buffer give feedback to the user who interacts.
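To show what such a message looks like on the wire, here is a minimal sketch in plain C++ that encodes a single-float OSC 1.0 message by hand. It is an illustration only: in openFrameworks this work is normally done by the ofxOsc addon, and the address `/buffer` used in the usage note is a hypothetical name, not one taken from the project.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Append an OSC string: its characters, a null terminator,
// then zero padding up to a multiple of four bytes.
void appendOscString(std::vector<std::uint8_t>& out, const std::string& s) {
    out.insert(out.end(), s.begin(), s.end());
    out.push_back(0);
    while (out.size() % 4 != 0) out.push_back(0);
}

// Append a 32-bit float in big-endian byte order, as OSC requires.
void appendOscFloat(std::vector<std::uint8_t>& out, float value) {
    static_assert(sizeof(float) == 4, "OSC float32 expects a 4-byte float");
    std::uint32_t bits = 0;
    std::memcpy(&bits, &value, sizeof bits);
    for (int shift = 24; shift >= 0; shift -= 8) {
        out.push_back(static_cast<std::uint8_t>((bits >> shift) & 0xFF));
    }
}

// Build a message consisting of an address pattern and one float argument.
std::vector<std::uint8_t> encodeOscFloatMessage(const std::string& address, float value) {
    assert(!address.empty() && address[0] == '/');
    std::vector<std::uint8_t> packet;
    appendOscString(packet, address);  // e.g. "/buffer"
    appendOscString(packet, ",f");     // type tag string: one float follows
    appendOscFloat(packet, value);
    return packet;
}
```

Calling `encodeOscFloatMessage("/buffer", 0.5f)` yields a 16-byte packet that could be sent over UDP to a patch listening on the chosen port; on the MaxMSP side, the float could then control a parameter such as the playback position in a sound buffer.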

Future development

I would like to develop the prototype into a larger-scale installation that would ideally have its own room. The screenshots could be projected on the walls as a living archive, and the animation itself could also take place on one wall instead of the screen. Several speakers would be placed around the room.

One natural extension for this project is to replace the sphere with 3D models. Then, for example, the user’s face could sometimes be reflected on the shape of a mammoth. For my own learning, I have already done some experiments with a 3D model of an Iguanodon statue in Crystal Palace Park (Carpenter and Gray 2017). As the dinosaur statues by Benjamin Waterhouse Hawkins have partly become known for their scientific inaccuracy and deteriorating condition (Brown 2020), this could add many layers to the themes of remembering and reviving.

It would also be interesting to replace the snapshots with 3D printed sculptures, resulting in tangible monuments of the interaction. However, producing a 3D print every 60 seconds would not be realistic – maybe a selection of sculptures could be printed after each exhibition day.

The sound part of the work could be developed further by, for example, imagining sounds that don’t yet exist. Artist Marguerite Humeau has reconstructed the vocal tracts of prehistoric creatures, including a woolly mammoth (Chalcraft 2012). It would be intriguing to try to create hybrid sonic landscapes that would represent both extinct and existing species, creating sounds in-between.

Self evaluation

I am content with the eerie landscapes and snapshots. I am also happy with my learning process during the project. The basics of 3D animation and meshes, shaders and FBOs will be useful in the future. I was also happy with my results with Kinect and see myself using the sensor in installations in the future. Obviously, I could have been more efficient by choosing one interaction in the beginning and sticking to that. However, I am happy that I compared different methods from optical flow to image classification with machine learning, as I ended up learning a lot. In hindsight, I would allocate more time to the audio part of the work to make it a fully-fledged part of the prototype instead of an initial test. All in all, the project served as a way to explore the concept and basic mechanics of a future installation.

References

Readings and artworks:

Allahyari, M. (2020) Morehshin Allahyari [online]. Available at: http://www.morehshin.com (Accessed: 14 May 2020)

Brain, T., Oliver, J. and Sjölén, B. (2019). Asunder [online]. Available at: https://asunder.earth (Accessed: 14 May 2020)

Brown, M. (2020) Crystal Palace's lifesize dinosaurs added to heritage at risk register. The Guardian, 28 February [online]. Available at: https://www.theguardian.com/culture/2020/feb/28/crystal-palaces-lifesize-dinosaurs-added-to-heritage-at-risk-register (Accessed: 4 May 2020)

Burrows, D. and O’Sullivan, S. (2019) Fictioning. The Myth-Functions of Contemporary Art and Philosophy. Edinburgh: Edinburgh University Press.

Carpenter, I. and Gray, S. (2017) 3D model in Crystal Palace Iguanodon condition survey [online]. Available at: https://archaeologydataservice.ac.uk/archives/view/iguanodon_2017/downloads.cfm?group=857 (Accessed: 14 May 2020)

Chalcraft, E. (2012) Proposal for Resuscitating Prehistoric Creatures by Marguerite Humeau. Dezeen, 11 July [online]. Available at: https://www.dezeen.com/2012/07/11/proposal-for-resuscitating-prehistoric-creatures-marguerite-humeau/ (Accessed: 14 May 2020)

Chun, W., Fuller, M., Manovich, L. and Wardrip-Fruin, N. (2011) Programmed Visions: Software and Memory. Cambridge: The MIT Press.

Haraway, D. (2016) Playing String Figures with Companion Species. In Staying With The Trouble: Making Kin in the Chthulucene, p. 9–29. Durham: Duke University Press. Available at: https://learn.gold.ac.uk/mod/resource/view.php?id=763020 (Accessed: 14 May 2020)

Karle, A. (2019) Amy Karle and the Smithsonian: Regeneration Through Technology [online]. Available at: https://www.amykarle.com/project/_smithsonian_collaboration/ (Accessed: 14 May 2020)

Perevalov, D. (2013) Mastering openFrameworks: Creative Coding Demystified. Birmingham: Packt Publishing. Available at: https://learn.gold.ac.uk/pluginfile.php/1265203/mod_folder/content/0/Mastering%20openFrameworks%20-%20Creative%20Coding%20Demystified%20%28Denis%20Perevalov%29.pdf?forcedownload=1 (Accessed: 14 May 2020)

Perevalov, D. and Tatarnikov, I. (2015) openFrameworks Essentials. Birmingham: Packt Publishing. Available at: https://learn.gold.ac.uk/pluginfile.php/1265203/mod_folder/content/0/openFrameworks%20Essentials.pdf?forcedownload=1 (Accessed: 14 May 2020)

Yamagata et al. (2019) Signs of biological activities of 28,000-year-old mammoth nuclei in mouse oocytes visualized by live-cell imaging. Sci Rep 9, 4050. Available at: https://doi.org/10.1038/s41598-019-40546-1 (Accessed: 4 May 2020)

Code (other than already credited):

Lepton, L. (2015) openFrameworks tutorial -005 loading font, 8 Apr [online]. Available at: https://www.youtube.com/watch?v=dzbJibA3vhc (Accessed: 14 May 2020)

Lepton, L. (2015) openFrameworks tutorial -044 timers, 7 May [online]. Available at: https://www.youtube.com/watch?v=8HR6KwxM4Gk (Accessed: 14 May 2020)

Papatheodorou, T. (2020) Kinect fiery comet. Lab assignment for IS71014B: Workshops In Creative Coding 1, week 16. Available at: https://learn.gold.ac.uk/mod/page/view.php?id=720896 (Accessed: 14 May 2020)

Papatheodorou, T. (2020) oscSend. Example code for IS71014B: Workshops In Creative Coding 1, week 15. Available at: https://learn.gold.ac.uk/course/view.php?id=12859&section=16 (Accessed: 14 May 2020)

Tanaka, A. (2020) Simple Granular Synth. An example patch for IS71086A: Special Topics In Programming for Performance and Installation, session 9. Available at: https://learn.gold.ac.uk/course/view.php?id=12882#section-12 (Accessed: 14 May 2020)