Questioning Temporality Within Procedural-Based Computer Music

This paper explores temporal questions that arise through the creation, archiving and experiencing of procedural-based computer music composition. It uses Jem Finer’s Longplayer as a case study from which to extract and analyse these concepts from a computer musician’s perspective. Longplayer is ‘a one thousand year long composition’ and sound artwork conceived by Jem Finer and commissioned by Artangel. The piece began playing at midnight on 31 December 1999 and was set to “play without repetition until the last moment of 2999”. Longplayer uses “simple and precise rules”[9] to determine the output of the composition and is composed for 234 Tibetan singing bowls. It is currently installed in the lighthouse at Trinity Buoy Wharf in London and is broadcast to ‘listening posts’ in Yorkshire Sculpture Park (UK), Kings Place (UK) and The Long Now Foundation Museum (USA).[8]

produced by: William Parry

Creation

To understand where and how Longplayer was created in relation to time, it is necessary to understand its social and artistic context at the time of its creation. Prior to the creation of Longplayer (between October 1995 and December 1999) there had been a broad mix of rule- and procedure-based artworks, ranging from early ‘pre-digital’ tools such as the canon, through Schoenberg’s twelve-tone serialism, to the later experimental works of John Cage and, arguably, the Fluxus movement with its restrained, improvisational approach to creating ‘Happenings’[7]. The arrival of the personal computer made calculating, sonifying and visualising procedural-based works far more efficient, and made space for art-programming software such as SuperCollider, Processing and MaxMSP. This software led to a new wave of artworks, including Longplayer, fixed multimedia works such as Brian Eno’s Bloom, and live algorithmic composition events such as Algorave. The term algorithmic composition covers three main systematic approaches: stochastic, deterministic rule-based, and artificially ‘intelligent’ systems.[11]
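To make the contrast between two of these approaches concrete, here is a minimal Python sketch (not drawn from any of the works above) in which a deterministic rule and a stochastic process choose notes from the same pitch set. The pitch set, the step-by-two rule and the function names are hypothetical choices made only for illustration; the third, AI-based approach is not modelled.

```python
import random

# Hypothetical pitch set (MIDI note numbers, C major pentatonic), chosen only for illustration.
PITCHES = [60, 62, 64, 67, 69]


def rule_based(length):
    """Deterministic rule: step through the pitch set two positions at a time.

    The same call always returns the same sequence.
    """
    return [PITCHES[(i * 2) % len(PITCHES)] for i in range(length)]


def stochastic(length, seed=None):
    """Stochastic approach: each note is drawn at random from the same pitch set.

    Without a seed the sequence differs on every run; a fixed seed makes it repeatable.
    """
    rng = random.Random(seed)
    return [rng.choice(PITCHES) for _ in range(length)]


print(rule_based(8))   # [60, 64, 69, 62, 67, 60, 64, 69]
print(stochastic(8))   # varies from run to run
```

Running the deterministic rule twice always gives the same melody, whereas the stochastic version changes on every run unless `seed` is fixed.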

It is common for the non-algorithmic composer to create a composition from start to finish, sometimes following a standard musical form (such as ABA). During creation, the non-algorithmic composer can stack musical phrases like building blocks, writing notes in an almost ‘stream of consciousness’ manner and ‘pencilling out’ blemishes. The rule-based algorithmic computer musician, by contrast, forms a set of rules into an executable procedure that performs the piece, and so must adopt a form of ‘algorithmic thinking’. It is this ‘algorithmic thinking’ that provokes a questioning of time and of the composer’s intentions, because the algorithm in its static form is unrepresentative of the piece it will perform. Unlike the non-algorithmic composer’s visually mapped musical score, the algorithm needs time, guided by the composer’s ‘future visioning’, to reveal its intended effect. Rule-based composition therefore reinforces music’s dependency on time: not only as a physical requirement for sonifying a piece, or for a macro perspective of a composition, but for building a composition that remains completely connected to its starting point. This distinguishes it from a composition built as a collage of musical phrases, or from an algorithmic process that the human composer does not fully determine, such as a stochastic[6] or AI approach[5].

When uncertainty is applied to algorithmic composition it allows emergence to develop over time. This theme has been explored using bio-inspired computing techniques such as genetic algorithms[10], cellular automata and AI. The vast number of potential variables, and the range of results these algorithms produce, raises questions about the balance between artistic creation and intervention on one side and computer agency on the other. Using these ‘indeterministic’ algorithms, the algorithmic composer can only ‘future vision’ the hard-coded aspects of the algorithm, not the emergence the algorithms are designed to produce. Following this logic, an artist’s decision to implement an emergent algorithm could be seen as a clear offering of creative agency to the resulting composition, which challenges ideas of authorship and creative ownership of an emergent composition. The Longplayer Trust appears to share this view of the value of the algorithm: when asked whether the piece was produced explicitly for singing bowls, Sarah Davies (producer and administrator for Longplayer) described it solely as “... a particular instance of an algorithm”.[1]
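As an illustration of emergence, the sketch below runs an elementary cellular automaton (Wolfram’s Rule 30) and reads each generation as a chord. Strictly speaking the automaton is deterministic, yet its pattern is hard to foresee without running it, which is the kind of emergence the composer cannot fully ‘future vision’. The eight-note scale mapping, the wrap-around edges and the choice of Rule 30 are assumptions for this sketch, not a description of any work cited here.

```python
# Hypothetical mapping: one cell per degree of a C major scale (MIDI note numbers).
PITCHES = [60, 62, 64, 65, 67, 69, 71, 72]


def step(cells, rule=30):
    """Apply an elementary cellular automaton rule once, wrapping at the edges."""
    n = len(cells)
    new = []
    for i in range(n):
        left, centre, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        neighbourhood = (left << 2) | (centre << 1) | right  # value 0..7
        new.append((rule >> neighbourhood) & 1)  # look up that bit of the rule number
    return new


def generate(generations, rule=30):
    """Start from a single live cell and turn each generation into a chord."""
    cells = [0] * len(PITCHES)
    cells[len(cells) // 2] = 1
    chords = []
    for _ in range(generations):
        chords.append([PITCHES[i] for i, alive in enumerate(cells) if alive])
        cells = step(cells, rule)
    return chords


for chord in generate(6):
    print(chord)
```

Only the rule and the starting cell are ‘hard-coded’; the sequence of chords that follows is found by running the process.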

Experiencing

Non-deterministic approaches to algorithmic composition, such as stochastic and AI methods, add an element of ‘randomness’ to their systems. Because of this randomness, uncertainty becomes almost a key attribute of the overall aesthetic when these compositions are experienced, and it is often presented timbrally through a single family of harmonious instruments, such as Longplayer’s singing bowls or the vibraphones and xylophones of Iannis Xenakis’s ‘Pléiades’. This keeps the focus on the emerging aesthetic of uncertainty without causing listening fatigue. By contrast, in a deterministic form of algorithmic art such as the fractal, where a clear structure is perceived, there is room for complexities (like a fractal’s broad use of colour) to be added while the overall structure remains clear. These constraints and complexities are an integral part of an artist’s creative process[1], and allow for artistic specificity and a composition’s identity.

“The notion of structure implies something recoverable, in the sense that it is possible to look at an end result and determine the structures that generate it.”[2] Longplayer challenges this idea: although it is itself deterministic, it will not present an evident structure to a human listener, because the algorithm takes far longer to unfold than a human life and will not reveal its full structure until it has completed its 1000-year cycle. Longplayer’s algorithm combines six ‘cycled’ compositions, each taking a different amount of time to complete. The shortest cycle lasts 3.7 days, so part of the structure could in principle be uncovered as repetition over two of these cycles. However, the other five cycles each modify the perceived composition, making that repetition impossible to recognise.
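The effect of combining cycles of different lengths can be sketched with a little modular arithmetic: a set of looping cycles only returns to its shared starting state after the least common multiple of their lengths, while shorter cycles may realign much earlier. The minimal Python sketch below assumes three simple loops with hypothetical lengths of 4, 6 and 9 steps; it illustrates the principle only and does not reproduce Longplayer’s six cycles or their actual rates.

```python
from functools import reduce
from math import gcd

# Hypothetical cycle lengths in arbitrary steps; these do NOT model Longplayer's actual rates.
CYCLES = [4, 6, 9]


def lcm(a, b):
    return a * b // gcd(a, b)


def combined_period(cycle_lengths):
    """Steps before every cycle is back at its starting point at the same time."""
    return reduce(lcm, cycle_lengths)


def state_at(step, cycle_lengths):
    """Position reached within each cycle after `step` steps."""
    return tuple(step % n for n in cycle_lengths)


print(combined_period(CYCLES))  # 36
print(state_at(12, CYCLES))     # (0, 0, 3): the two shorter cycles realign, the longest does not
print(state_at(36, CYCLES))     # (0, 0, 0): the whole combination repeats
```

In this toy case the two shorter cycles line up every 12 steps, but the 9-step cycle means the full combination does not recur until step 36, a small-scale version of how longer cycles can mask the repetition of shorter ones.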

When experiencing Longplayer, the listener hears a microscopic section of the composition relative to its whole, allowing only a partial reflection on the piece. This could be seen as a play on Ferneyhough’s suggestion of music and its ontological positioning, whereby “...the listening subject at moments “stands apart” and consciousness is engaged in an act of scanning and measuring outside of the immediate flow”[4]. In relation to Longplayer, the immediate flow could be seen as the listener’s reception of part of the composition, and the scanning as a reflection on the piece as a whole. If the length of Longplayer is scaled down to an average composition length of 10 minutes, 1 second of ‘Longplayer time’ becomes roughly 0.000000019 seconds (1.9 × 10⁻⁸ s) of the 10-minute composition.¹ Veit Erlmann describes the conclusion of the nineteenth-century physician Hermann von Helmholtz’s search for auditory perception: “... body and mind do not simply operate within a given spatial and temporal matrix, they are that matrix.”[3] This concept could, in a distorted, poetic way, help emphasise the vastness of ‘Longplayer time’ relative to our own, in that a 10-minute bodily perception of the composition is only a grain of the whole. Xenakis explores this topic of microsound perception through his pioneering of granular synthesis. Comparing these concepts in this way helps to uncover the almost egotistic element of mystery in trying to comprehend musical time, both within perception and beyond human life.
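The scaling in the footnote can be checked with a few lines of Python. This is a minimal sketch that assumes a Julian year of 365.25 days; with that assumption, 1000 years is 31,557,600,000 seconds and one second of ‘Longplayer time’ scales to about 1.9 × 10⁻⁸ seconds.

```python
SECONDS_PER_DAY = 24 * 60 * 60   # 86,400
DAYS_PER_YEAR = 365.25           # assumption: Julian year, averaging in leap years
LONGPLAYER_SECONDS = 1000 * DAYS_PER_YEAR * SECONDS_PER_DAY
TARGET_SECONDS = 10 * 60         # the 10-minute "average" composition used in the text

scale = TARGET_SECONDS / LONGPLAYER_SECONDS

print(f"{LONGPLAYER_SECONDS:,.0f} s in 1000 years")   # 31,557,600,000 s in 1000 years
print(f"1 s of Longplayer time -> {scale:.2e} s")     # 1 s of Longplayer time -> 1.90e-08 s
# Read the other way round: how much Longplayer time one second of the short version covers.
days = LONGPLAYER_SECONDS / TARGET_SECONDS / SECONDS_PER_DAY
print(f"1 s of the 10-minute piece -> {days:.2f} days")  # 608.75 days
```

Read the other way round, one second of the 10-minute version stands for 608.75 days, or about 1.67 years, of Longplayer.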

Archiving

Through its use of rule-based composition to create a piece of music intended to last one thousand years, Longplayer questions notions of human, social, computational and material finitude. To question these limits, one must look at how each of these domains decays, in order to set a threshold of obsolescence. Determining a threshold of decay for humanistic qualities, such as the survival of the piece’s concept, could lead to a lengthy and potentially arbitrary conclusion; there is, however, still room to explore the potential decay of Longplayer’s current materials and its strategies for sustainability during its one-thousand-year cycle. The Longplayer Trust has been established as a form of collaborative responsibility to prevent this decay and to support the sustainability of the piece’s first 1000-year duration. Observing Longplayer’s current installation, two elements are essential to realising this (and many other) artworks: the original artistic concept behind the piece and the physical materials needed to install it.

If Longplayer is regarded as “… a particular instance of an algorithm”[1], then the algorithm is what must survive. Longplayer’s algorithm is currently implemented in SuperCollider code, and given the succinct nature of computer code, any damage to that code or to the executable file would render the piece unplayable and potentially obsolete. To reduce this risk, the original SuperCollider code is published on the Longplayer website, spreading responsibility for the code communally beyond the Trust. The website also discusses materialising the code into “… alternative methods of performance, including mechanical, non-electrical and human-operated versions”.[8]

The idea of Longplayer adapting to its time in order to secure its survival is an interesting concept given the vast temporal space allowed for the piece, as it opens the way for many future implementations and mutations, living beyond its original designer and potentially beyond human existence. With this in mind, Longplayer could be regarded as a new form of neo-futuristic dialogical art, in which the exchange of Longplayer between present and future generations is as important as its initial implementation at the turn of the millennium. To help secure the concept of the piece, the Longplayer Trust website holds extensive information on the ‘conceptual background’ of Longplayer, including notes contributed by Jem Finer and Michael Morris (co-director of Artangel). The website also includes letters from thinkers and writers discussing concepts surrounding Longplayer and its existence. The Longplayer Trust also holds frequent events such as ‘The Artangel Longplayer Conversations’, where key thinkers and well-known practitioners gather to discuss socio-temporal topics. These conversations are archived and published on YouTube, and were created as “...a good chance to connect interested people to the artwork and talk about its themes. And ...are also a way to find donors who will pay towards its ongoing upkeep.”[2]

Digital

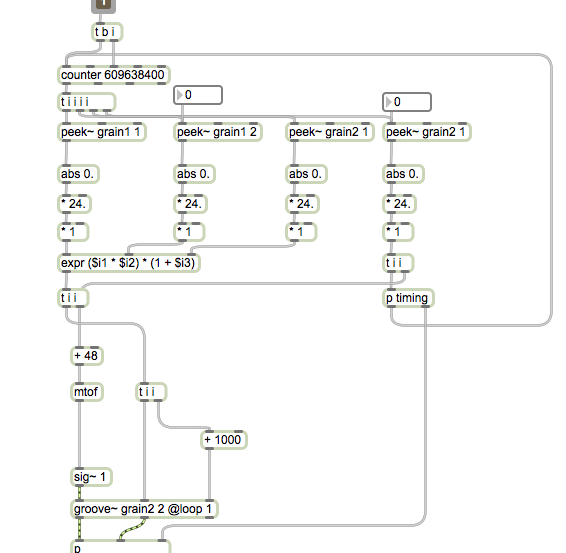

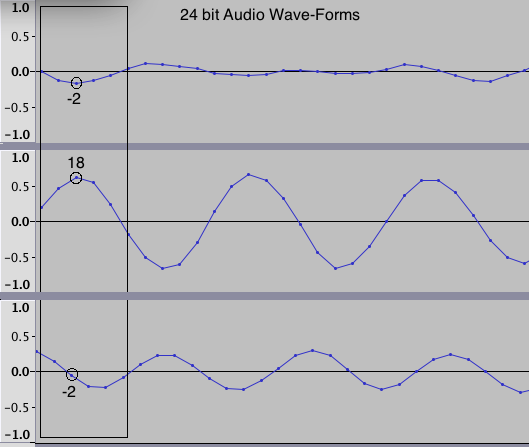

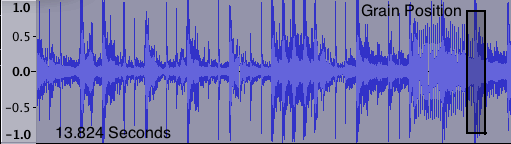

Music’s dependency on time has helped spark a wide range of philosophical thinking around sound and time perception. The use of algorithms in music has played a large part in revealing aspects of these topics, and digital implementations have made these methods more accessible, in turn helping to develop new forms of music composition and publishing such as ‘generative art apps’ and live ‘algorave’. When music is performed by digital means, a new aspect of sound and time perception is added, because for a sound to be recorded or reproduced digitally it has to be sampled in computer time. The sampling rate (typically 44.1 kHz or 48 kHz) is more than twice the commonly cited 20 kHz upper limit of human hearing, so each sample lasts only around 20 microseconds; this allows an insight into grains of unperceived sound. Experimenting with these concepts in my own work ‘Grains’ has helped to further expose concepts surrounding sound perception and authorship in music. Grains is a deterministic algorithmic composition that can potentially last roughly a week ‘without repetition’ using 13 seconds of 24-bit audio. It uses Xenakis’s technique of granular synthesis as its main form of sonification, and uses a combination of three values to determine the shape of the grain, the frequency at which it is cycled, and the timing and length of the audible grain.
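Granular synthesis of the kind described here can be sketched in a few lines: short windowed ‘grains’ are copied from a source buffer and overlap-added into an output buffer. The Python sketch below is a hypothetical, minimal illustration; it is not the MaxMSP patch used in Grains, and the Hann window, 50 ms grain size, 44.1 kHz rate, scattered read positions and the test tone standing in for the source recording are all assumptions.

```python
import math


def hann(length):
    """Hann window: fades a grain in and out so its edges do not click."""
    if length == 1:
        return [1.0]
    return [0.5 - 0.5 * math.cos(2 * math.pi * n / (length - 1)) for n in range(length)]


def granulate(source, plan, out_length):
    """Overlap-add windowed grains copied from `source` into a new buffer.

    `plan` is a list of (source_start, grain_length, output_start) tuples in samples.
    """
    out = [0.0] * out_length
    for src_start, length, out_start in plan:
        window = hann(length)
        for n in range(length):
            s, o = src_start + n, out_start + n
            if s < len(source) and o < out_length:
                out[o] += source[s] * window[n]
    return out


SAMPLE_RATE = 44_100
# A 1-second 440 Hz test tone stands in for the source recording.
source = [math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]
grain = SAMPLE_RATE // 20  # 50 ms grains (an arbitrary choice)
# 20 grains read from scattered points in the source, each overlapping the last by half.
plan = [((i * 7919) % (SAMPLE_RATE - grain), grain, i * grain // 2) for i in range(20)]
output = granulate(source, plan, 11 * grain)
print(len(output), max(abs(x) for x in output))
```

The window shape, grain length and read positions are the parameters a granular patch typically exposes; changing them alters the texture without touching the source material.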

Grains uses a 13-second sample from the Andrew Oldham Orchestra’s cover of The Rolling Stones’ ‘The Last Time’, a recording that famously caused a large copyright dispute over The Verve’s sampling of it in ‘Bitter Sweet Symphony’. The use of this sample again provokes a questioning of the authorship of Grains, in that it uses all audible parts of the sample but arranges them to avoid recognition of the original. The sample itself is also the source of the compositional structure, with sample value data determining pitch, timing and timbre. The intention of Grains was to challenge concepts of music sampling and audio perception and to de-localise compositional authorship. Like Longplayer, a one-week composition built from 13 seconds of audio will never be experienced as a whole, nor will its input sample be audibly recognisable through granular synthesis. Grains’ implementation of such a simple algorithm with a comparatively small input source could be seen as a form of deterministic composition with complete algorithmic agency, as its input is taken far beyond its intended context.
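The idea that the sample itself supplies the structure can be sketched as a deterministic mapping from sample values to grain parameters. In the hypothetical Python sketch below, each group of three consecutive sample values sets where a grain is read from, how long it lasts and how much silence follows it. This mapping, the value ranges, the 44.1 kHz rate and the function names are assumptions for illustration and do not describe Grains’ actual patch.

```python
def unit(x):
    """Map a sample value in [-1.0, 1.0] to the range [0.0, 1.0]."""
    return (x + 1.0) / 2.0


def grain_parameters(samples, index, sample_rate=44_100):
    """Derive one grain's (source_start, grain_length, gap) from three consecutive samples.

    Hypothetical mapping: the first value picks where the grain is read from, the second
    its length (10-100 ms), the third the silence before the next grain (0-200 ms).
    Indices wrap around, so the sample is read as a loop.
    """
    a, b, c = (samples[(index + k) % len(samples)] for k in range(3))
    source_start = int(unit(a) * (len(samples) - 1))
    grain_length = sample_rate // 100 + int(unit(b) * (sample_rate * 9 // 100))
    gap = int(unit(c) * (sample_rate // 5))
    return source_start, grain_length, gap


def schedule(samples, count, sample_rate=44_100):
    """Build a deterministic list of (source_start, grain_length, output_start) tuples."""
    plan, t = [], 0
    for i in range(count):
        start, length, gap = grain_parameters(samples, 3 * i, sample_rate)
        plan.append((start, length, t))
        t += length + gap
    return plan


# A tiny stand-in for the source sample; real audio would supply hundreds of thousands of values.
samples = [0.0, -1.0, 1.0, 0.5, -0.25, 0.75]
print(schedule(samples, 4))
```

Because nothing in the mapping is random, the same input always produces the same grain schedule, so the structure of the result comes entirely from the source data.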

¹ Rough workings:

(10 minutes = 600 seconds) / (1000 years = 1000 × 365.25 × 86,400 = 31,557,600,000 seconds) ≈ 0.000000019 (1.9 × 10⁻⁸)

Illustration 1: MaxMSP main patch screenshot.

Illustrations 2 and 3: Annotated screenshots from the Audacity audio editor.

2 × 18 × 2 = 72 (grain position)

Maximum: 24³ = 13,824 (13.824-second sample size)

References:

[0] Candy, L. (2007). Constraints and Creativity in the Digital Arts. Leonardo, Vol. 40, No. 4, pp. 366–367.

[1] Davies, S. (2018). The Longplayer Trust. Personal email interview, 7 March 2018.

[2] Edmunds, E. A. (2000). Art as Practice Augmented by Digital Agents. Digital Creativity, Vol. 11, No. 4, pp. 193–204.

[3] Erlmann, V. (2014). Reason and Resonance. New York, NY: Zone Books.

[4] Exarchos, D. (2014). Temporality in Xenakis and Ferneyhough. JMM: The Journal of Music and Meaning, Vol. 13.

[5] Experiments in Musical Intelligence (2018). [ONLINE] Available at: http://artsites.ucsc.edu/faculty/cope/experiments.htm [Accessed 13 March 2018].

[6] Holtzman, S. R. (1995). Digital Mantras. MIT Press.

[7] LaBelle, B. (2012). Background Noise: Perspectives on Sound Art. 2nd ed. London: Continuum International Publishing Group.

[8] Longplayer. [ONLINE] Available at: https://longplayer.org/ [Accessed 6 March 2018].

[9] Artangel (2009). Longplayer Live programme. London: Artangel.

[10] Nelson, G. L. Sonomorphs: An Application of Genetic Algorithms to the Growth and Development of Musical Organisms. TIMARA Department, Oberlin Conservatory of Music.

[11] The History of Algorithmic Composition (2018). [ONLINE] Available at: https://ccrma.stanford.edu/~blackrse/algorithm.html#AI [Accessed 8 March 2018].