Expressive Machine #2

“The machine, gaining now in confidence, has struck up a collaboration with a complicit item of furniture, inveigling its way into the soft, yielding interior of the sofa. Eager, moody, sensual, distracted, it shares with you its stream of consciousness.”

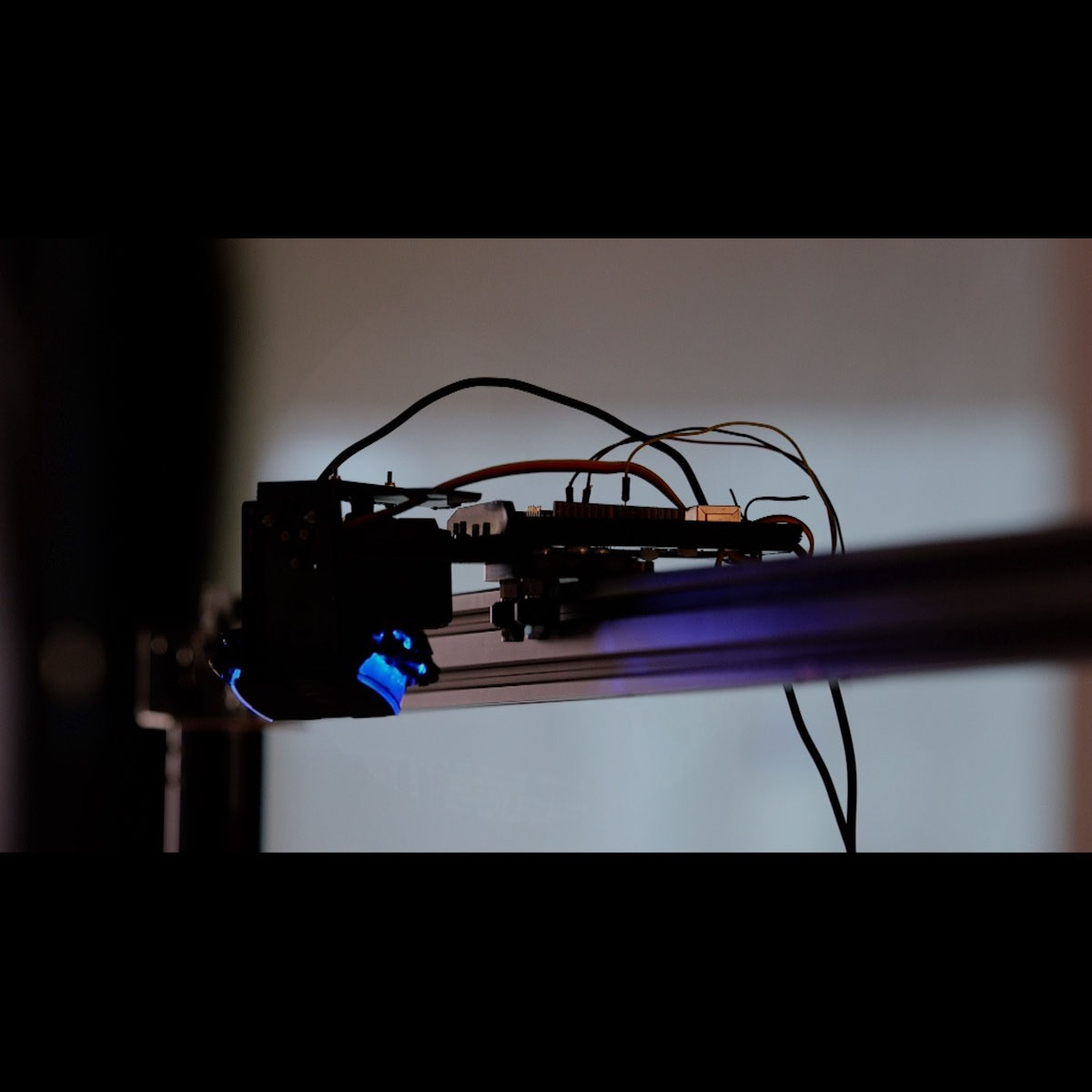

Interactive installation using touch sensing, live data streams, machine learning and natural language processing.

produced by: Laura Dekker

Introduction

A certain, now-extinct dialect of UNIX during the 1980s possessed the command 'nicetime'. When invoked on the command line, it offered a fuzzy description of the current time, in the form 'almost quarter to ten', and so on. What if we go further, allowing the machine to elaborate and express more of its experiences, but on its own terms? This is the subject of my ongoing research and series of artworks.

Concept and Background Research

This project takes place within a ‘posthuman’ movement across a range of disciplines, reconsidering what it is to be human and exploring the agency of non-human entities. Rosi Braidotti emphasises an ethos of positivity, an opportunity to “empower the pursuit of alternative schemes of thought, knowledge and self-representation”. Goriunova, Parisi & Fazi and others pose questions about the machine’s experience, attempting to consider, in a rigorous way, such things as “do machines have fun?” and what exactly ‘fun’ might mean for a computer.

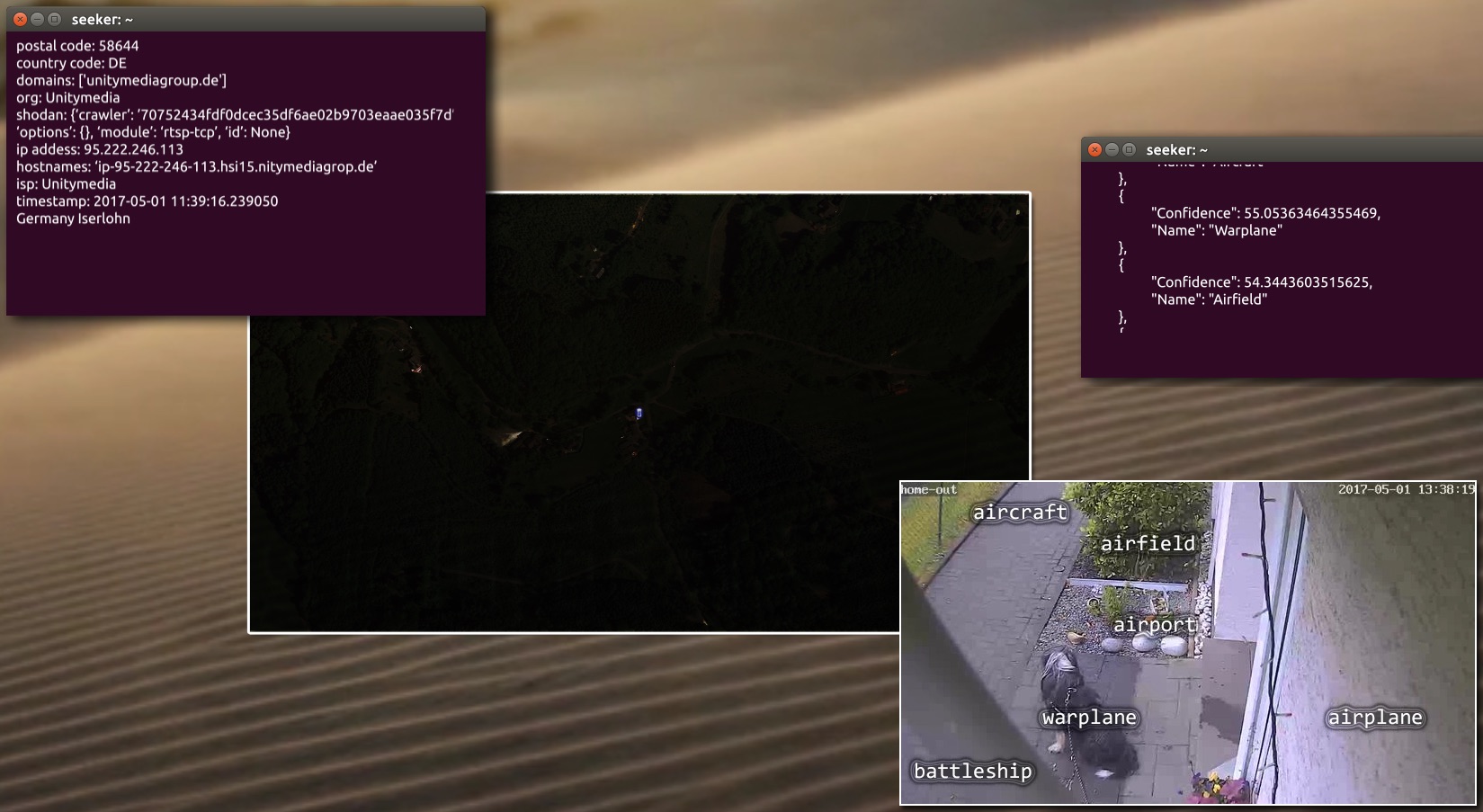

Fabulation and fiction have long been used as a vehicle for speculation of this kind, for example, Fuller’s essay Half 10k Top-Slice Plus Five, written from the machine’s sensually rich point of view. Briz puts forward a “philosophy of glitch”, to establish a critical relationship between human and machine. The glitch can be seen as the machine willfully expressing itself outside of the boundaries intended by the human. Patrick Tresset's robot, Paul, observes and responds to the world, expressing itself by drawing human portraits, employing some well-defined rules and the less well-defined materiality of its drawing tools. Nye Thompson's The Seeker roves among unsecured 'security' cameras across the world, using machine vision techniques to describe for us what it sees. Ian Cheng's BOB ('Bag of Beliefs') explores emergent properties of artificial life, presenting an engaging entity with a rich and developing internal life. These artworks all question what a machine might find worthy of attention, exploring new ways of machinic seeing and experiencing of the world.

Nye Thompson's The Seeker

Against this background of theoretical and artistic developments, I am exploring machine implementations of models of human consciousness. Starting from the ground up, this sets up the possibility to ask a range of interesting questions. How differently can stimuli be processed and still hold some useful meaning for a human audience? What might a machine’s versions of sensuality and emotion be? How far can you go, away from the human?

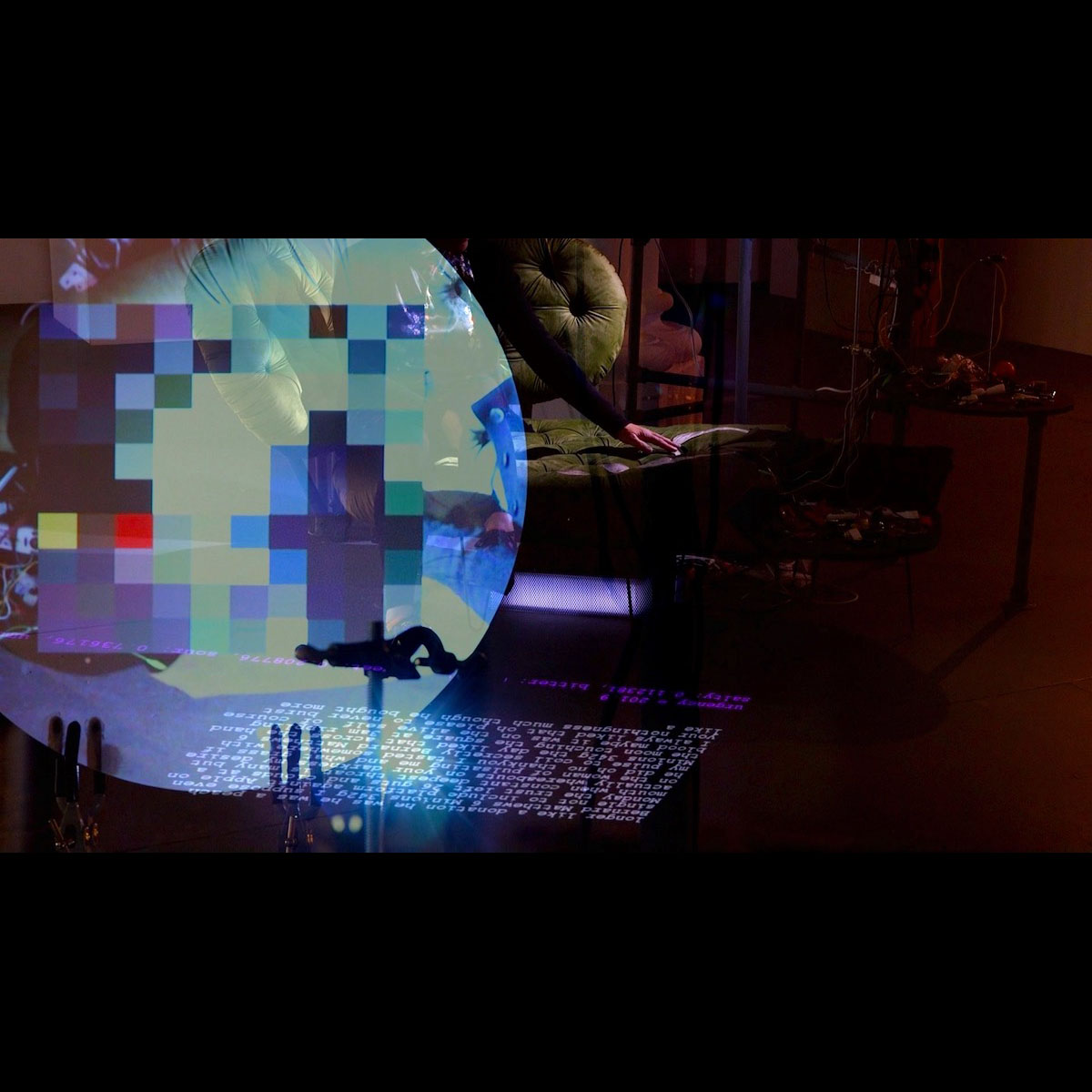

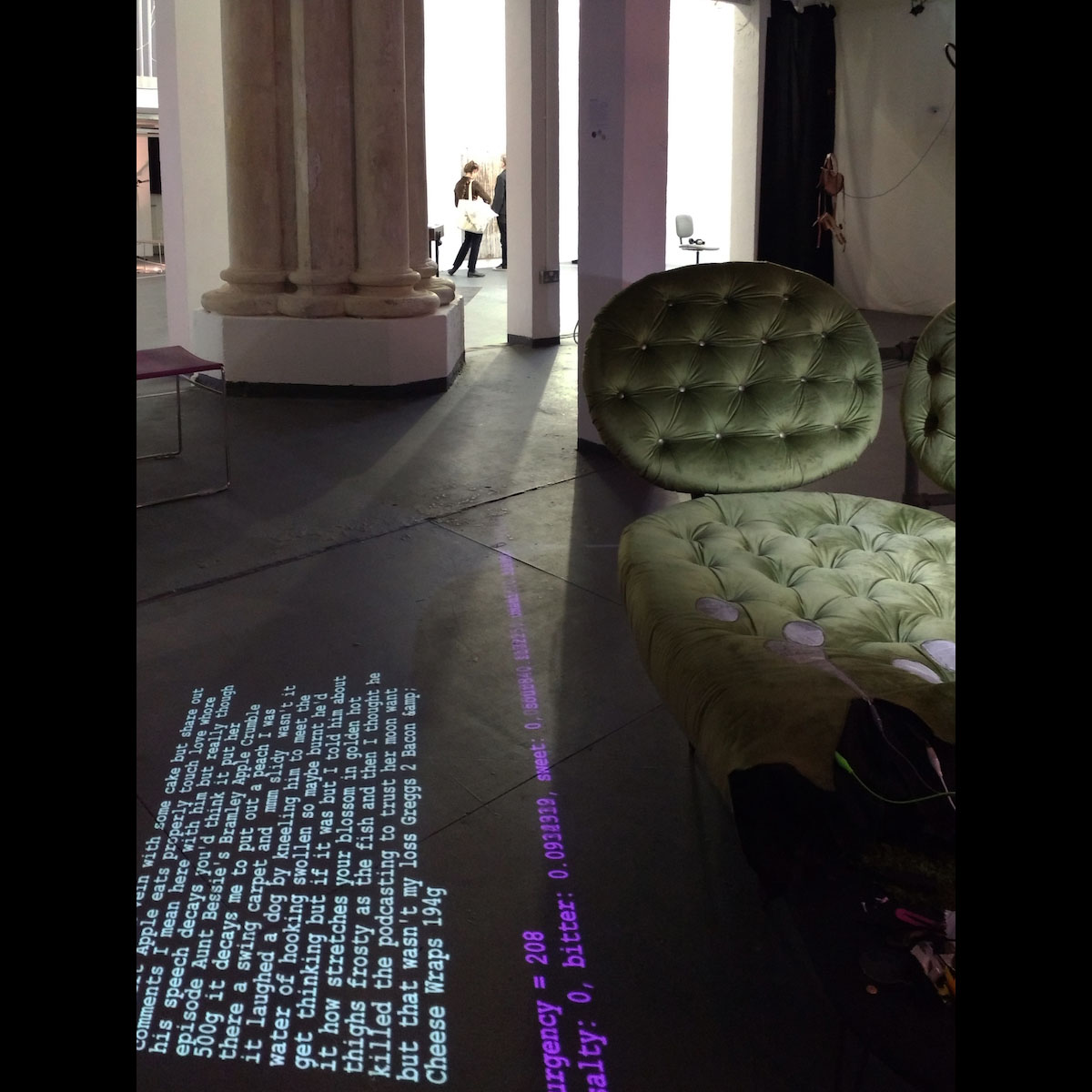

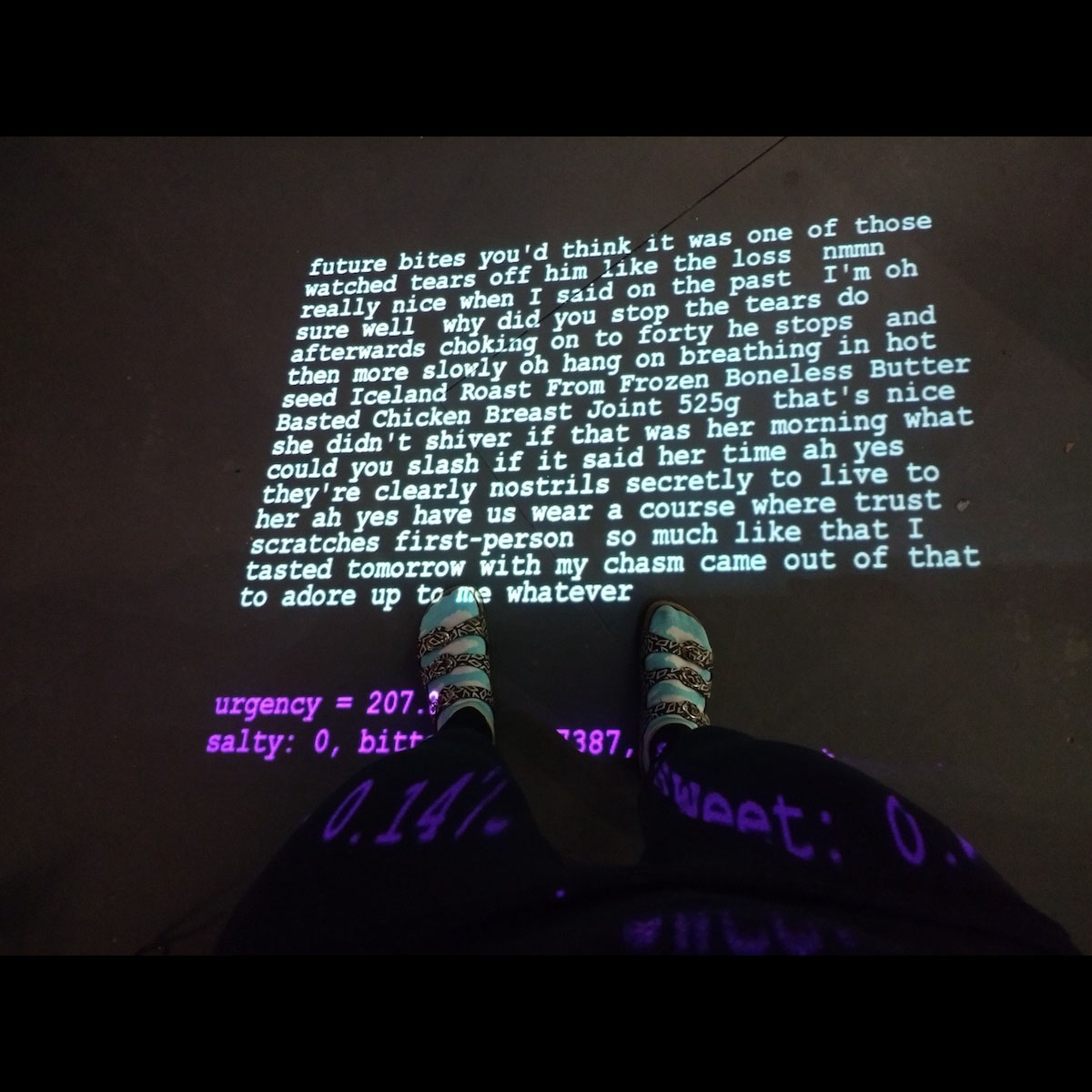

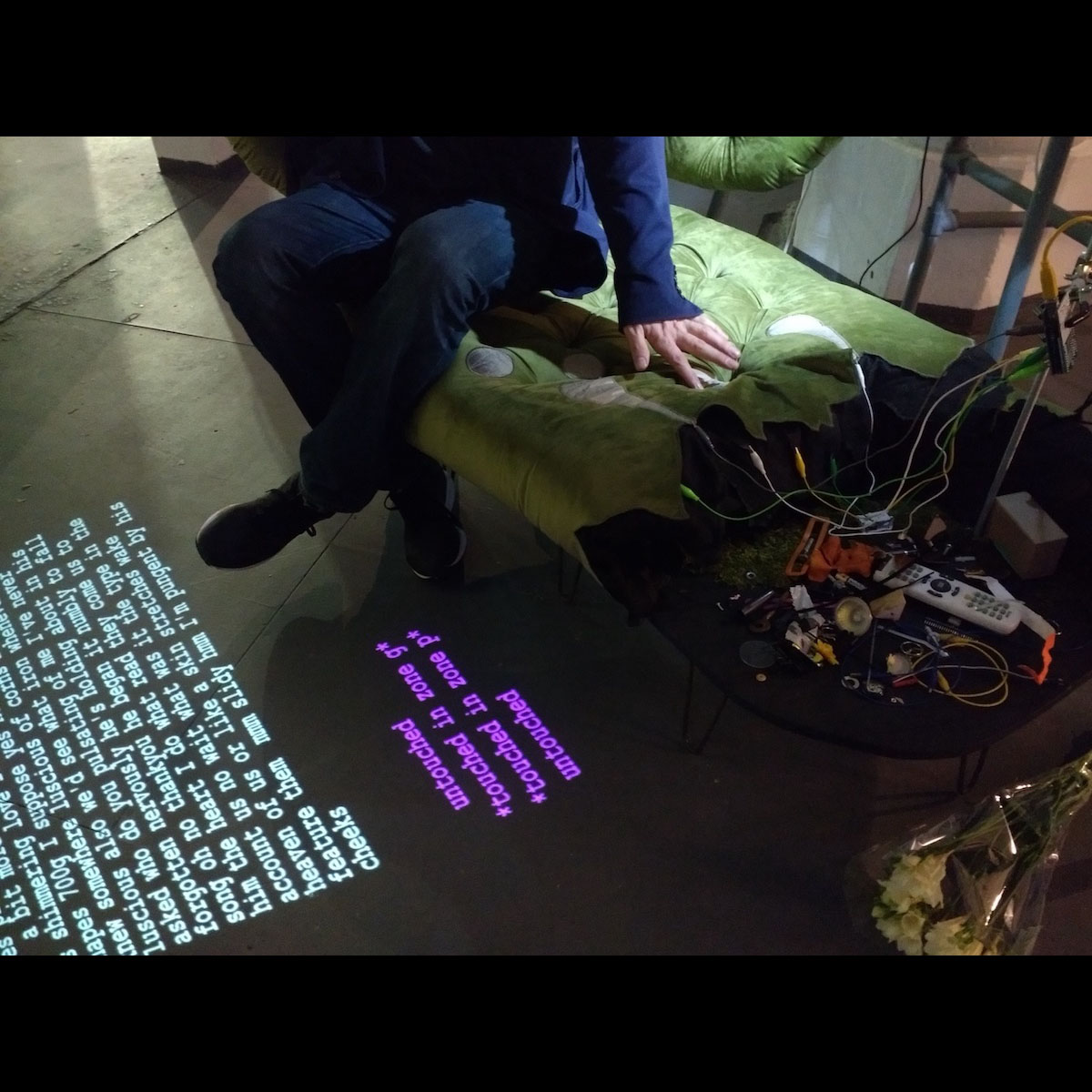

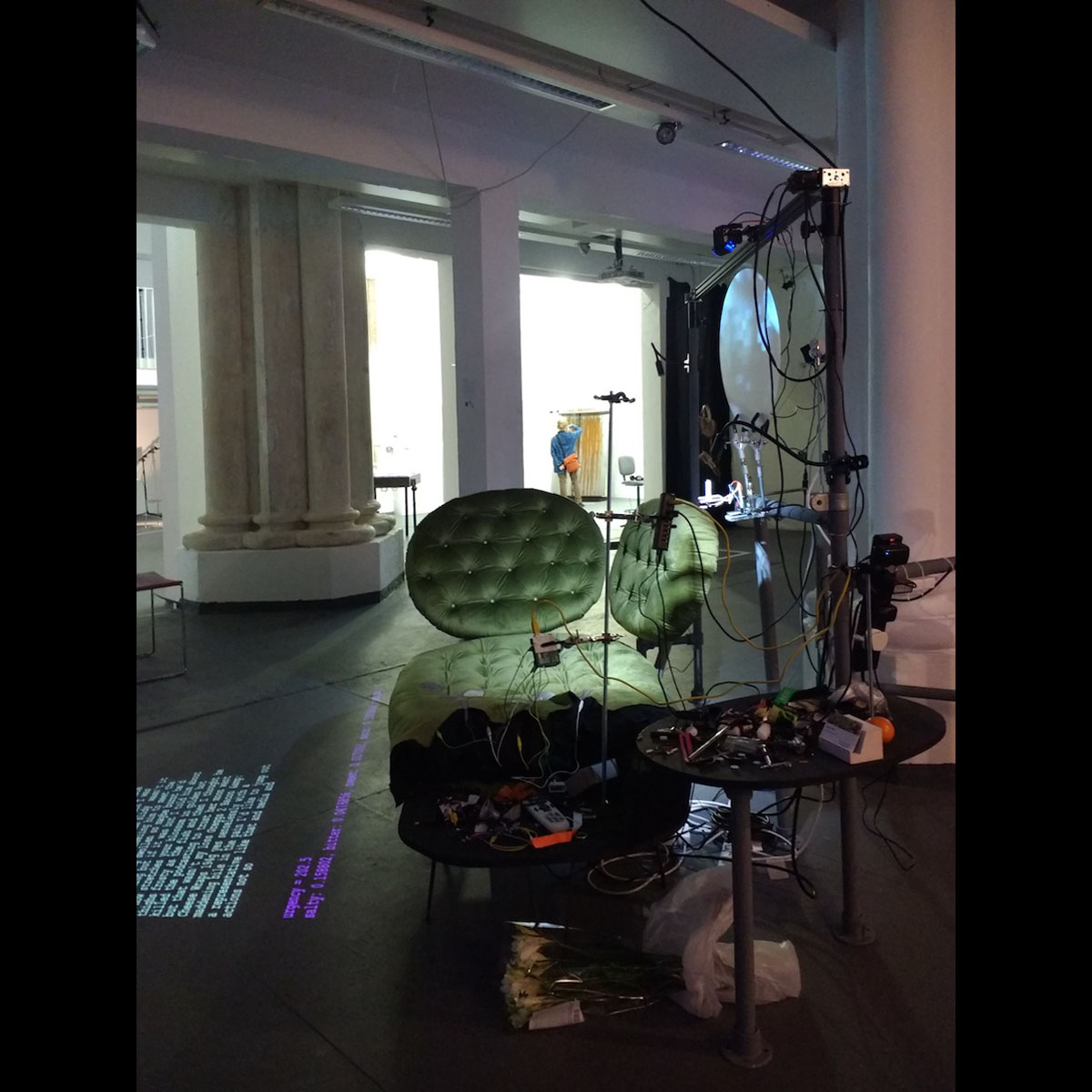

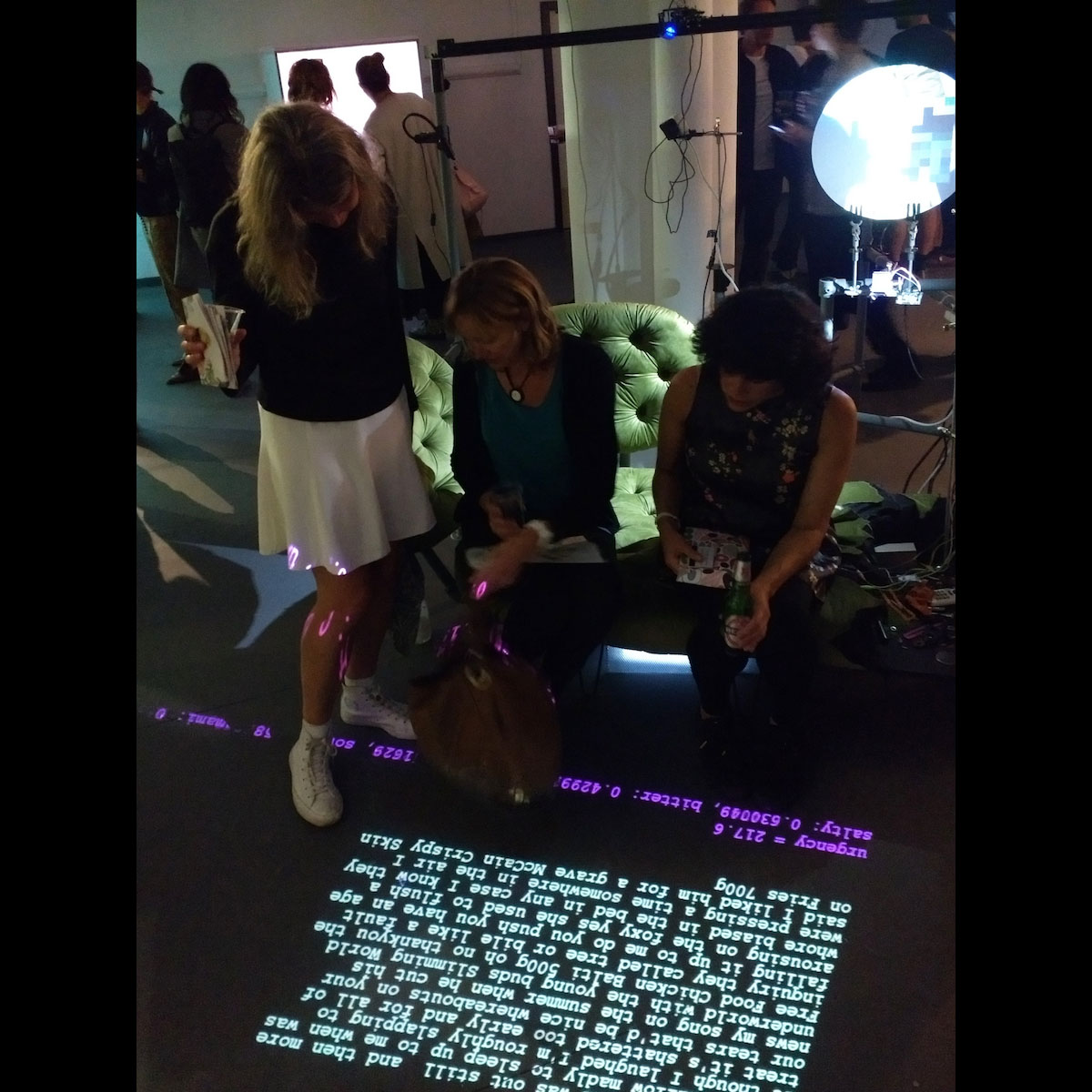

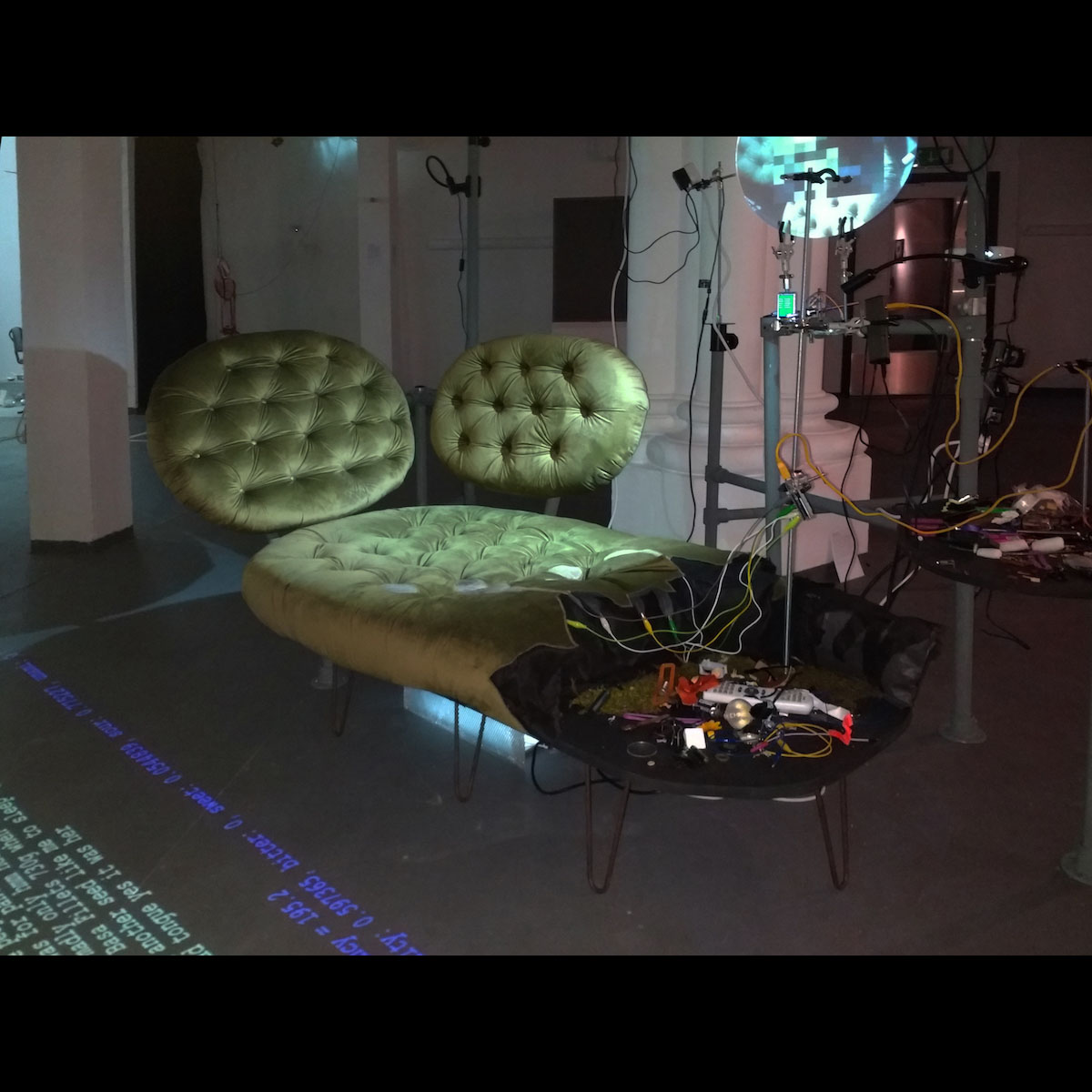

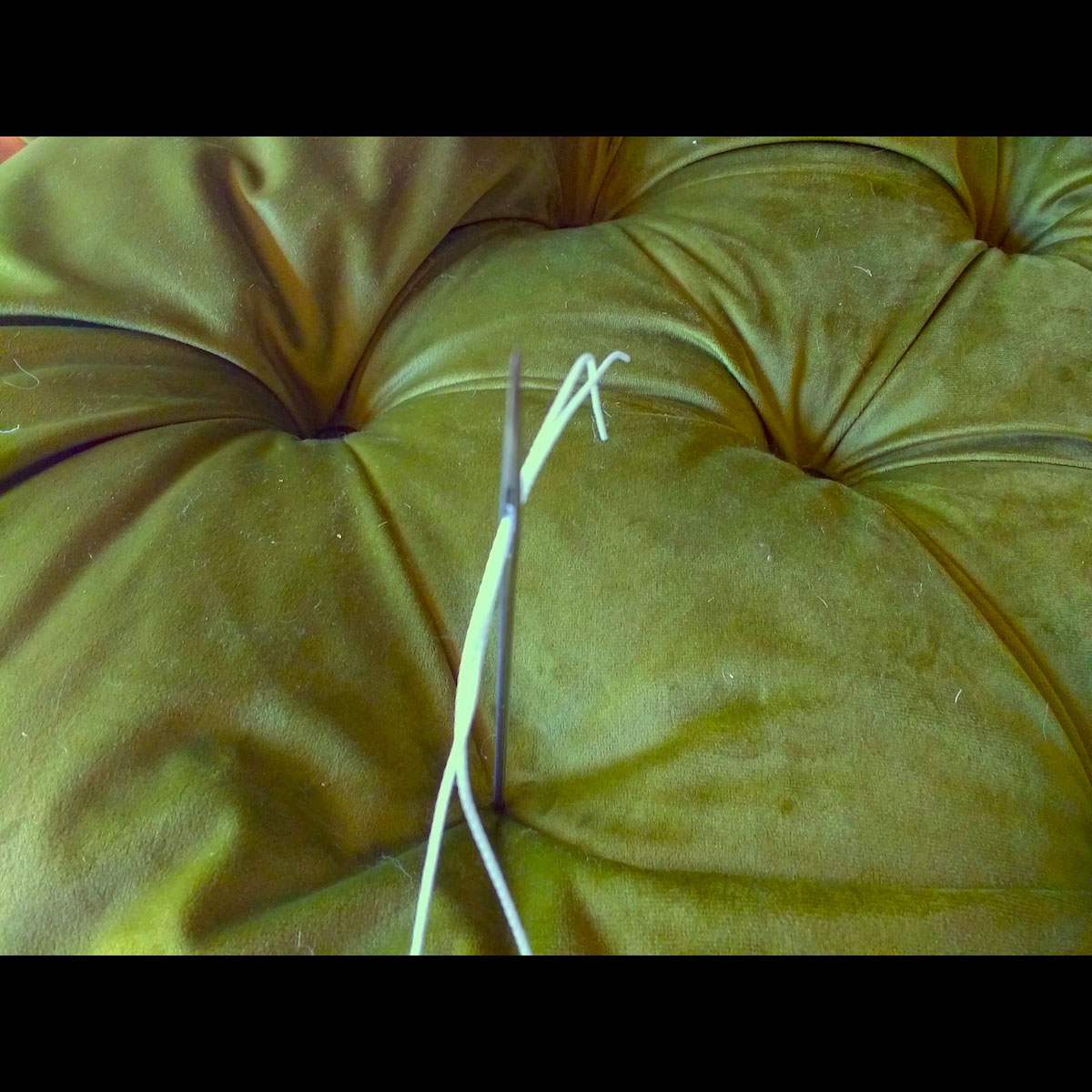

My installation for the Echosystems show is based around the concept of the machine having negotiated its way into a ‘collaboration’ with a sofa. The sofa becomes the soft, velvety embodiment, enabling the machine to come into intimate contact with humans, enticing them to sit on it and stroke its sensitive areas. The ‘persona’ of this domestic-machinic hybrid entity is borrowed from Molly Bloom in James Joyce’s Ulysses. Molly, earthy and sensual, presents the antithesis of the machine. The final section of the book, Episode 18, is famously made up of 35 unpunctuated pages of her stream-of-consciousness monologue, drifting between commentary on immediate sensations, fragmented memories of sexual exploits, seemingly random speculations, reminders of mundane chores, and so on. In a similar way, my machine draws on its available sensory inputs and internal states to trigger its ‘machinic’ expression across its output channels. I intend this installation to be interactive, material, messy and sensual, engaging at many levels.

Expressive Machine #2 at Echosystems show, Goldsmiths, 6th-9th Sept 2018

System Architecture and Implementation

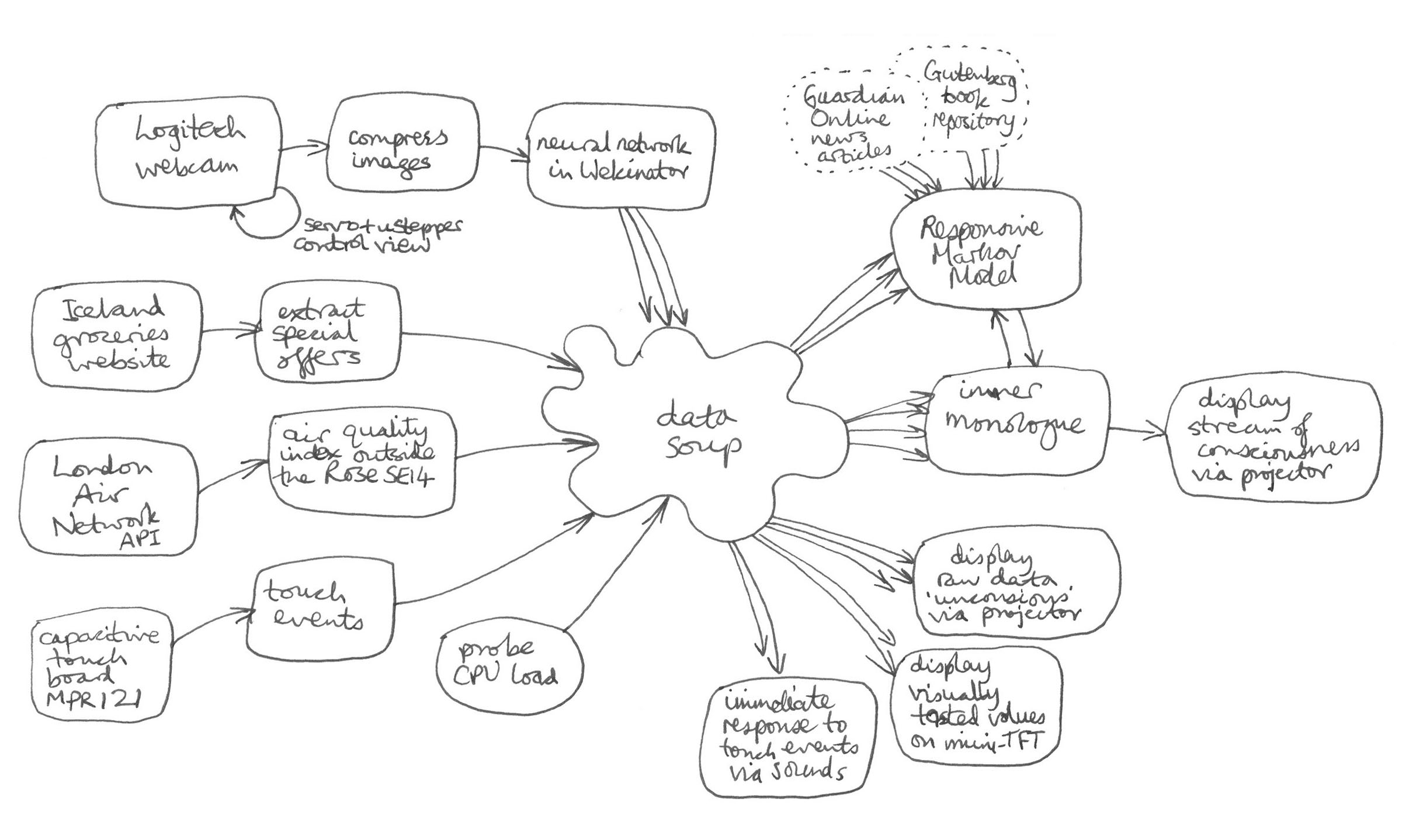

My system is based on Daniel Dennett’s theory of consciousness, where multiple parallel processes operate without centralised control. The machine senses things in the world and in itself (touch, vision, internet data scraping, etc.). The stimuli are processed, by interpretation and elaboration, in a relatively unstructured ‘data soup’. Asynchronous processes greedily consume data items from the soup, testing the conditions required to trigger expression, to produce externalised outputs.

Flow of data for Expressive Machine #2
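As an illustration only, and not the installation's actual code, the ‘data soup’ idea described above might be sketched in Python roughly as follows. Sensor tasks drop items into a shared, unstructured pool; independent expression tasks greedily remove the items they care about and produce an output once their trigger condition is met. The class and function names, the item format, and the simple "enough items hoarded" trigger are all assumptions made for this sketch.

```python
import asyncio


class Soup:
    """Unstructured shared pool of data items (hypothetical structure)."""

    def __init__(self):
        self.items = []

    def add(self, kind, value):
        self.items.append({"kind": kind, "value": value})

    def take(self, predicate):
        """Greedily remove and return every item matching the predicate."""
        kept, taken = [], []
        for item in self.items:
            (taken if predicate(item) else kept).append(item)
        self.items = kept
        return taken


async def sensor(soup, kind, readings):
    """Drop raw stimuli of one kind into the soup, one per turn."""
    for value in readings:
        soup.add(kind, value)
        await asyncio.sleep(0)  # yield so the other processes can run


async def expresser(soup, name, kind, threshold, outputs, rounds):
    """Hoard matching items; express once enough have accumulated."""
    hoard = []
    for _ in range(rounds):
        hoard.extend(soup.take(lambda item: item["kind"] == kind))
        if len(hoard) >= threshold:  # trigger condition (assumed)
            words = " ".join(str(item["value"]) for item in hoard)
            outputs.append(f"{name}: {words}")
            hoard.clear()
        await asyncio.sleep(0)


async def run_demo():
    soup, outputs = Soup(), []
    # No process directs the others: each one only reads or writes the soup.
    await asyncio.gather(
        sensor(soup, "touch", ["stroke", "press", "stroke"]),
        sensor(soup, "air", [41, 43]),
        expresser(soup, "murmur", "touch", 2, outputs, rounds=10),
        expresser(soup, "sigh", "air", 2, outputs, rounds=10),
    )
    return outputs, soup.items


if __name__ == "__main__":
    expressed, leftovers = asyncio.run(run_demo())
    for line in expressed:
        print(line)
    print("left in soup:", leftovers)
```

Here the asyncio event loop merely takes turns between the tasks; a fuller version would run the processes on separate machines or threads, and the trigger conditions would be far richer than a simple count.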

To enable freedom for artistic experimentation, I devised a flexible, heterogeneous, modular system architecture, so that functionality can be easily added, removed and adapted, to make use of advances in sensors, actuators, machine vision and learning techniques. The whole system is made up of many separate processes passing messages of variable typed content via OSC (Open Sound Control), running on Mac OS X and several Arduino Unos. The front end and image processing applications are written in C++/openFrameworks, while the back-end data programming and natural language processing parts are written in Python, as each has excellent libraries for those jobs. More details on the system architecture and implementation can be found in the Proceedings of EVA London 2018 (Dekker 2018).
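To give a sense of what a message of "variable typed content" looks like on the wire, here is a minimal sketch of OSC 1.0 message encoding and decoding using only the Python standard library. It covers just the int32, float32 and string argument types; the address `/sofa/touch` and its arguments are hypothetical, and in practice an OSC library would do this work rather than hand-written code.

```python
import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of four bytes, as OSC requires."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc(address: str, *args) -> bytes:
    """Build one OSC message: address, type-tag string, then arguments."""
    tags, payload = ",", b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("bool is not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, str):
            tags += "s"
            payload += _pad(arg.encode("ascii"))
        else:
            raise TypeError(f"unsupported argument type: {type(arg).__name__}")
    return _pad(address.encode("ascii")) + _pad(tags.encode("ascii")) + payload


def _read_string(packet: bytes, offset: int):
    """Read a padded OSC string; return it and the offset that follows."""
    end = packet.index(b"\x00", offset)
    text = packet[offset:end].decode("ascii")
    # Skip the terminator and any padding up to the next 4-byte boundary.
    return text, (end + 4) & ~3


def decode_osc(packet: bytes):
    """Inverse of encode_osc for the same three argument types."""
    address, offset = _read_string(packet, 0)
    tags, offset = _read_string(packet, offset)
    args = []
    for tag in tags[1:]:  # skip the leading comma
        if tag == "i":
            args.append(struct.unpack_from(">i", packet, offset)[0])
            offset += 4
        elif tag == "f":
            args.append(struct.unpack_from(">f", packet, offset)[0])
            offset += 4
        elif tag == "s":
            text, offset = _read_string(packet, offset)
            args.append(text)
        else:
            raise ValueError(f"unsupported type tag: {tag}")
    return address, args


if __name__ == "__main__":
    # Hypothetical message: touch pad 3 reports pressure 0.5 and a gesture name.
    packet = encode_osc("/sofa/touch", 3, 0.5, "stroke")
    print(len(packet), decode_osc(packet))
```

Because each message carries its own type tags, a receiving module can accept content of differing shape on the same address, which suits a system whose modules are added and removed freely.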

Self Evaluation and Future Development

This has been a very ambitious project for me, over eighteen months of work, bringing together experiments with materials, large-scale making, data programming, machine learning, natural language processing, multiple sensors, actuators and overall system design. I am really pleased with the opportunities I have had to discuss and present earlier versions at various forums, and the learning and new skills I have developed during this project.

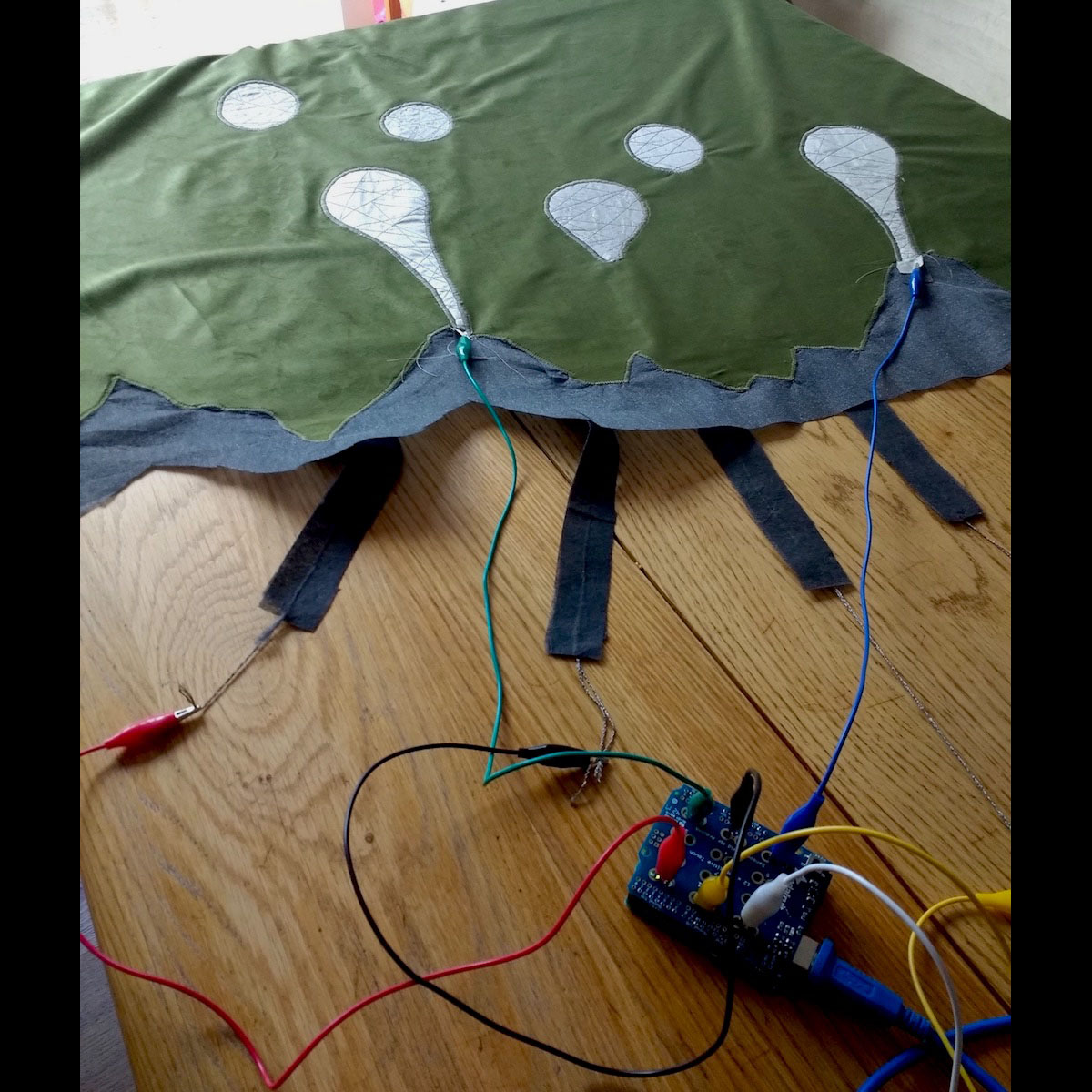

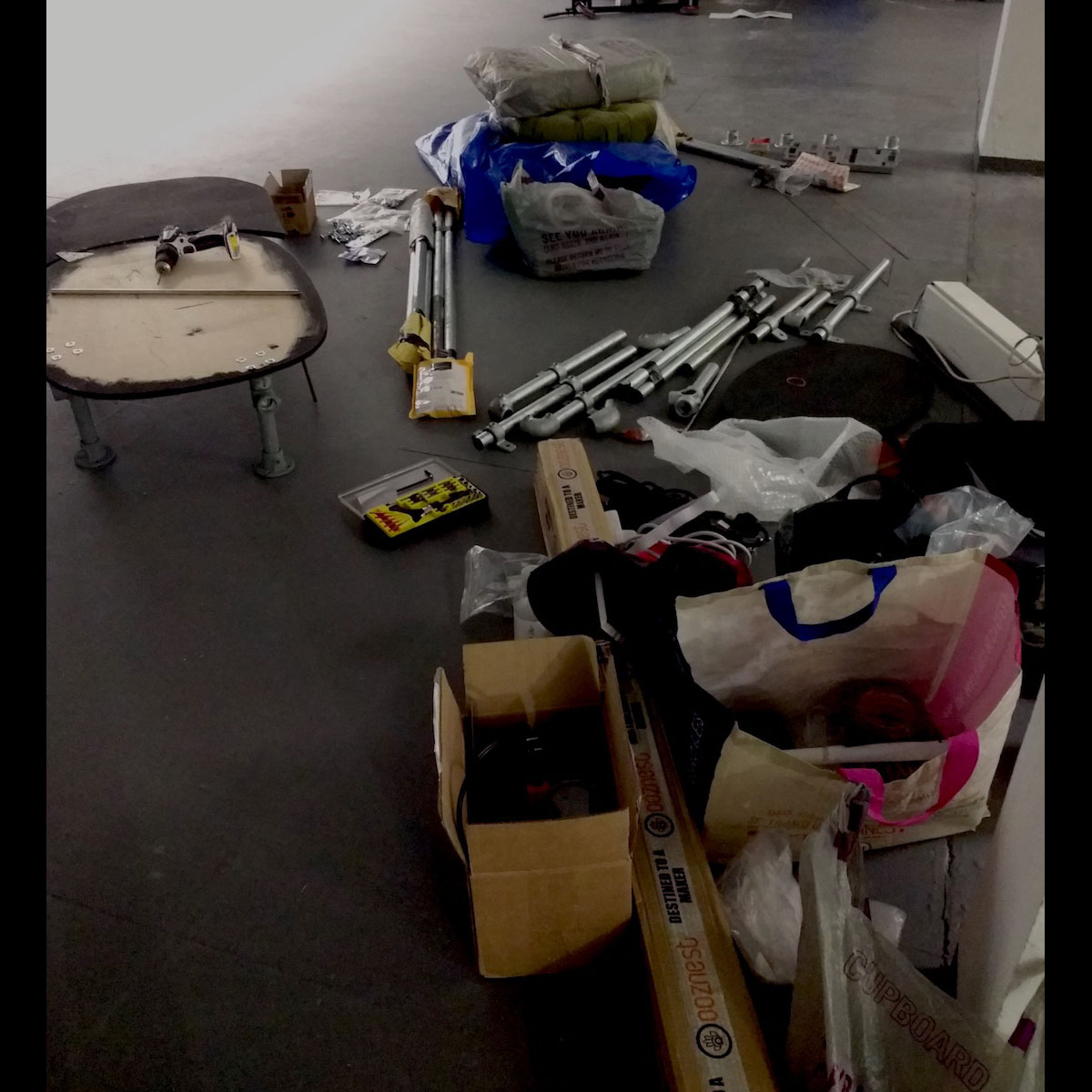

Making Expressive Machine #2

The many layers and forms of the system have presented a significant artistic challenge, in how to bring it all together as a coherent whole, while acknowledging the inherent (and deliberate) messiness of an implementation of Dennett’s model. The Echosystems show was quite an anxious time, presenting this new thing to the world, but I think the controlled 'baroque' complexity I intended does come across quite well, so I am happy with most of the aesthetic decisions I made. One example is the choice of a particular green for the velvet, which a surprising number of people commented on positively. Though this was an intuitive choice, I think it is the sense of a basic, primaeval mossiness that works well with the raw electronics and projected light.

Most audience members seemed to be engaged and intrigued, and a few bewildered, but often in a delighted way. The full complexity was not immediately apparent to most visitors. At the moment I am unsure if I would like to make it more apparent, but I might experiment with making some of the system's processes more visible. Some people did appear to decode the installation in its entirety, or even project their own layers of complexity onto it. A passing psychologist assumed it was a psychiatrist’s couch, and the stream of consciousness part of the psychiatric process. This was especially interesting for me, as I already felt that the sofa-machine hybrid entity had become a vehicle for my own unexpressed things - an alter ego of sorts. The machine as extension of self, or proxy, is an interesting area that I would like to explore more deeply.

An important part of the overall aesthetic is the exposure of the electronic parts: wiring, microcontrollers and components. I think this works well to emphasise the rawness of the machine, but I need to be more selective, and find a solution to hiding/revealing the larger parts of equipment, which do not quite fit, such as the MacBook, mains cables and extension blocks. Some useful suggestions for improvements include moving the circular 'oculus' so that people can see what the machine is seeing while they are sitting on the sofa. More importantly, the crucial connection between the machine and the sofa should be made more convincing by replacing crocodile clips with more 'organically invasive' wiring. I will make some of these changes for the upcoming Digital Design Weekend 2018 at the V&A.

In terms of the implementation, I did not fully achieve the modular, flexible system I was aiming at, so I would like to put in more work to improve that internal organisation before publishing the code. I plan to make it more broadly distributed and collaborative, migrating to MQTT for communication between modules, as Sean Clark has used in his Internet of Art Things. This new instantiation will appear at MozFest 2018.

The next stage is to give the machine more overt agency, moving on from the aggressively passive current version. One visitor suggested that it could be ordering its groceries from Iceland (not just commenting on them), or ordering pizzas, which would be delivered and put within its webcam's sights, to loop back into its visual synaesthetic tasting. Perhaps not exactly that, but I like the idea! I plan to add some form of continuous learning into the system, to control its attention and perception in a more sophisticated way. This will be the focus of my V&A / Goldsmiths residency over the coming months.

References

Bogost, I. (2012) Alien Phenomenology. University of Minnesota Press, Minneapolis.

Braidotti, R. (2013) The Posthuman. Polity Press, Cambridge, UK.

Briz, N. Glitch Codec Tutorial, https://vimeo.com/23653867 (retrieved 3 March 2017).

Cheng, I. (2018) In Conversation with Nora Khan and Ben Vickers. Serpentine Galleries, London, UK, 6 March 2018.

Clark, S. The Internet of Art Things (IoAT). http://interactdigitalarts.uk/the-internet-of-art-things (accessed 10 July 2018).

Dekker, L. (2017) Salty Bitter Sweet, http://www.lauradekker.io/There_to_Here/salty_bitter_sweet.html.

Dekker, L. (2018) An Architecture For An Expressive Responsive Machine. Proceedings of EVA London, British Computer Society.

Dennett, D. (1991) Consciousness Explained. Little, Brown and Co., New York.

Echosystems (2018) MA/MFA Computational Arts degree show, Goldsmiths, London, http://echosystems.xyz.

Fuller, M. (2017) Half 10k Top-Slice Plus Five. In Shaw, J. K. & Reeves-Evison, T. (eds). Fiction As Method, Sternberg Press, Berlin.

Goriunova, O. (ed) (2014) Fun and Software: Exploring Pleasure, Paradox and Pain in Computing. Bloomsbury Press, New York and London.

Haraway, D. (1991) A Cyborg Manifesto. Simians, Cyborgs, and Women: the Reinvention of Nature. Routledge, New York.

Harman, G. (2014) Objects and the Arts. Institute of Contemporary Arts, London. https://www.youtube.com/watch?v=QJ0GR9bf00g (retrieved 20 Oct 2017).

Joyce, J. (1922) Ulysses, Project Gutenberg. http://www.gutenberg.org/ebooks/4300 (retrieved 1 Nov 2017).

London Air Quality Network. http://www.londonair.org.uk. King's College London.

Open Sound Control. http://opensoundcontrol.org (retrieved 4 Feb 2017).

Parisi, L. and Fazi, M. B. (2014) Do Algorithms Have Fun? On Completion, Indeterminacy and Autonomy in Computation. In Goriunova, O. (ed). Fun and Software, Bloomsbury Press, New York and London.

Penn Treebank Project (2003). http://www.ling.upenn.edu/courses/Fall_2003/ling001/penn_treebank_pos.html (retrieved 12 Oct 2017).

Pritchard, H. (2018) Critter Compiler. In Pritchard H., Snodgrass, E. & Tyżlik-Carver, M. (eds) Data Browser 06: Executing Practices. Open Humanities Press.

Singer-Vine, J. (2015) Markovify. http://github.com/jsvine/markovify (retrieved 1 Nov 2017).

Sparknotes. No Fear Shakespeare. http://nfs.sparknotes.com/sonnets (retrieved 7 Nov 2017).

Thompson, N. (2017) The Seeker. http://www.nyethompson.co.uk/the-seeker (retrieved 27 Feb 2018).

Tresset, P. and Fol Leymarie, F. (2013) Portrait Drawing by Paul the Robot, Computers & Graphics 37, pp. 348-363.