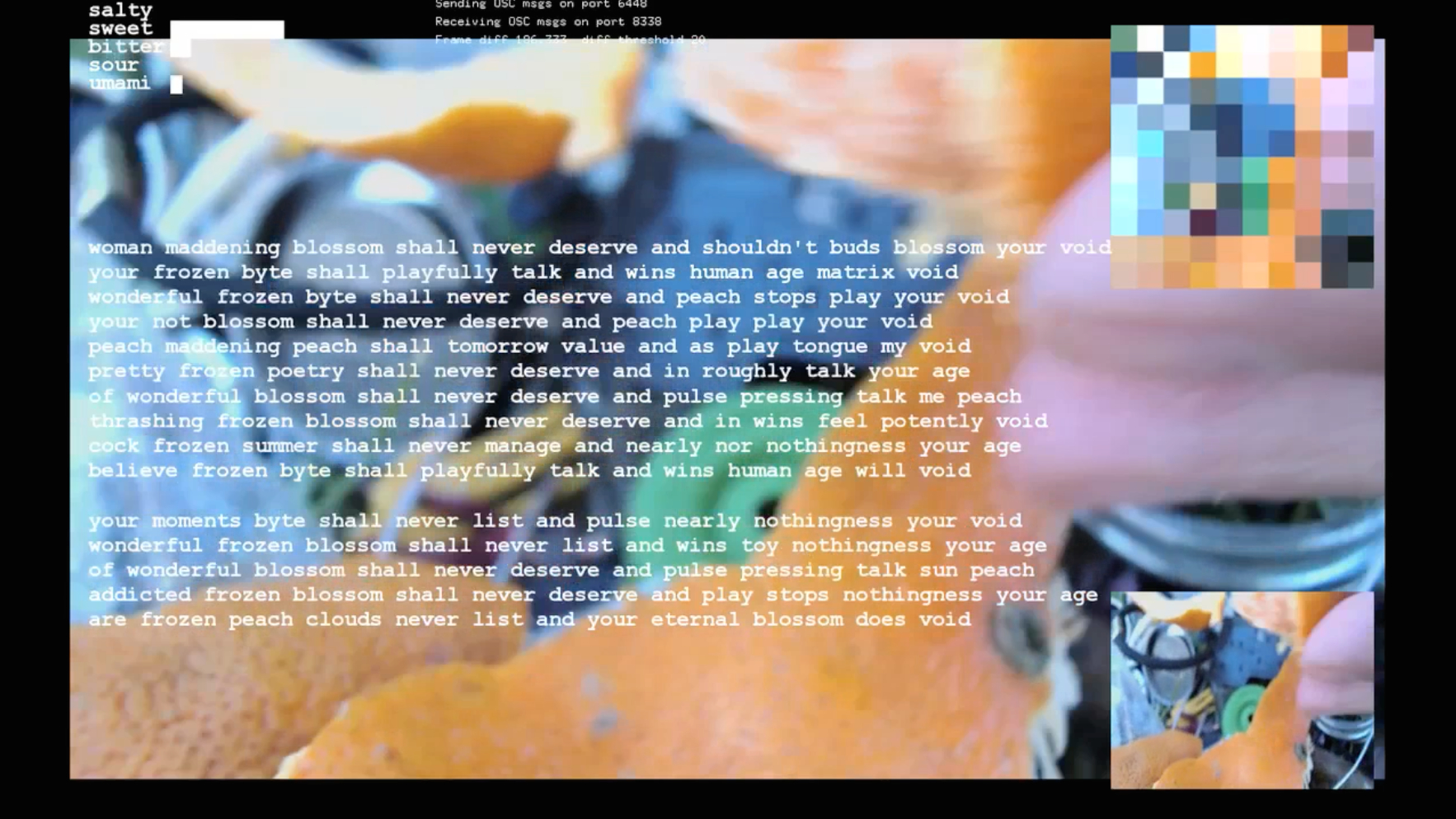

salty_bitter_sweet

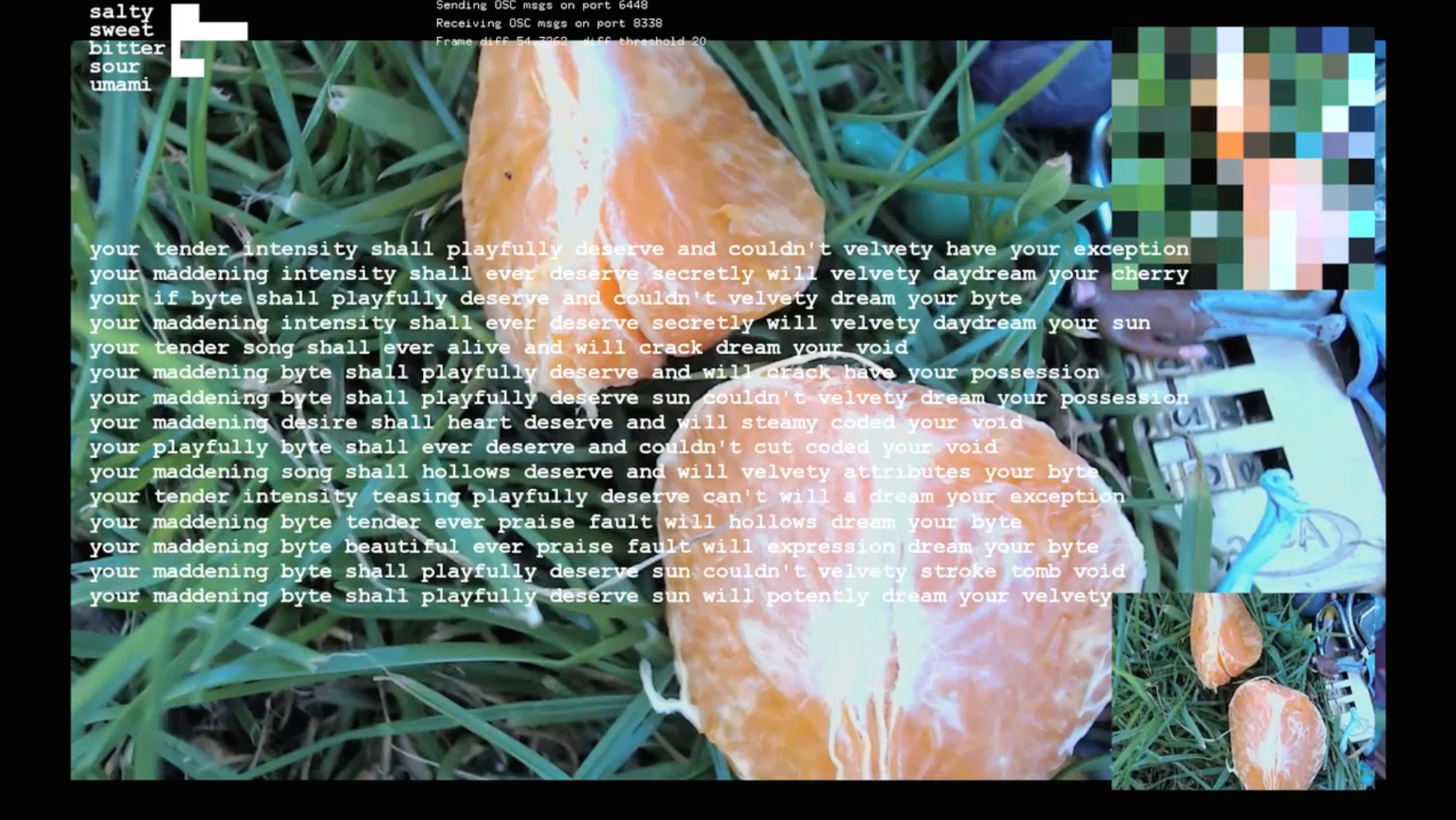

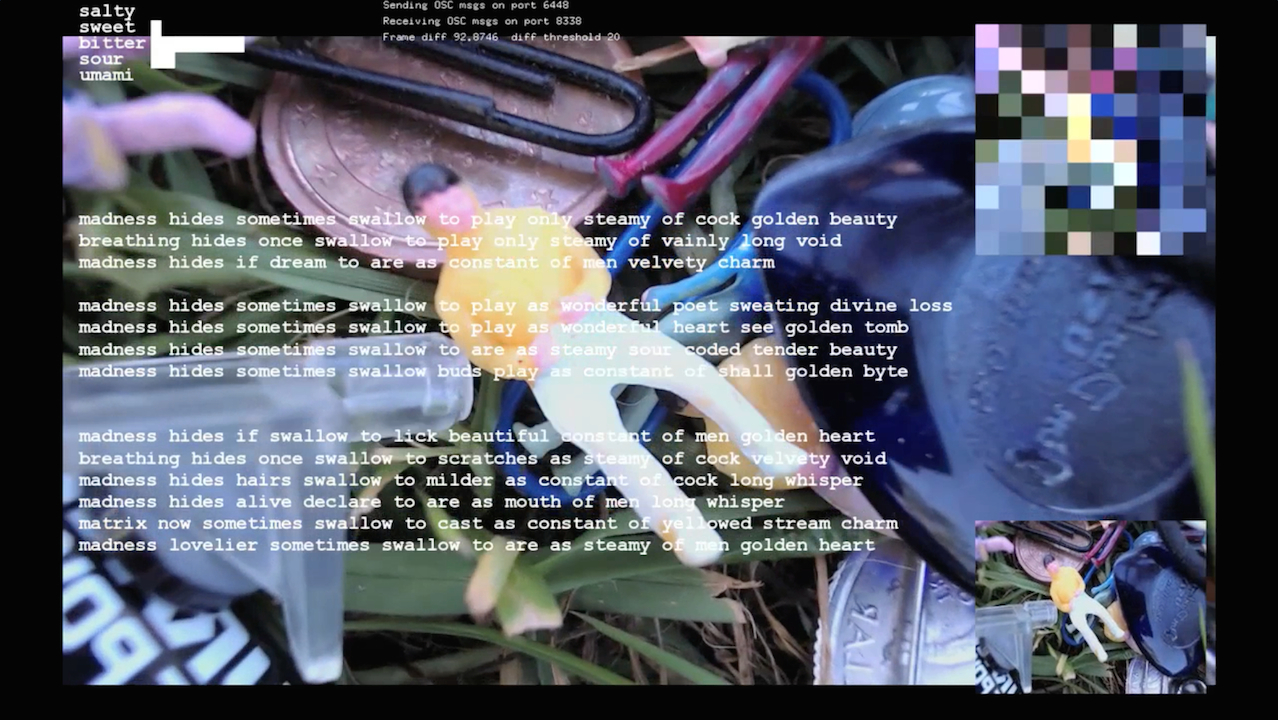

The machine 'tastes' its live camera stream of garbage and junk, and evolves semi-erotic poetry fragments and an audioscape from the taste landscape. When nothing is happening, it falls asleep and dreams...

produced by: Laura Dekker

My work is about the reciprocal roles of technologies in how we experience, make sense of, and construct ourselves and our world. Currently I'm investigating the synaesthetic capacities of computation: taking one form of data and transforming it into another.

For this project, the single input channel is whatever rubbish and rotting bits and pieces the audience choose to put in. Why? When I was little, growing up for some time in California, there was a TV program where a child would be invited to dump a bucket full of junk into the hopper of an amazing machine, crank the handle, and out would pour a stream of toys. I still chase after that magic machine... alchemy, synaesthesia, sublimation: from nothing to something, something to something else, something more...

This installation is made up of two applications, written in C++/openFrameworks, which communicate via OSC with the machine-learning system Wekinator.
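As a rough illustration of the OSC link, the sketch below hand-encodes a message of 32-bit floats and sends it over UDP. It is written in Python for brevity, not the C++/openFrameworks of the installation. The host, port 6448 and address `/wek/inputs` are assumed to be Wekinator's defaults, not settings confirmed for this piece, and the function names are hypothetical.

```python
import socket
import struct
from typing import Sequence

WEKINATOR_HOST = "127.0.0.1"   # assumption: Wekinator runs on the same machine
WEKINATOR_PORT = 6448          # assumption: Wekinator's default input port
INPUT_ADDRESS = "/wek/inputs"  # assumption: Wekinator's default input address


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    padding = (-len(data)) % 4
    return data + b"\x00" * padding


def osc_float_message(address: str, values: Sequence[float]) -> bytes:
    """Build one OSC message whose arguments are all big-endian 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = struct.pack(f">{len(values)}f", *values)
    return osc_string(address) + osc_string(type_tags) + payload


def send_inputs(values: Sequence[float]) -> None:
    """Send one feature vector to Wekinator as a single UDP datagram."""
    message = osc_float_message(INPUT_ADDRESS, values)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (WEKINATOR_HOST, WEKINATOR_PORT))
```

Calling `send_inputs([0.1, 0.5, 0.9])` would send a three-value feature vector. An openFrameworks application would more likely use an OSC addon than encode the bytes by hand.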

The front end takes as input a live webcam or replayed video stream, compresses it (to a 10x10 pixel stream) and passes it to Wekinator, which I've trained to produce five continuous outputs, corresponding to five tastes ‘contained’ (in varying proportions) in the input stream: salty, sweet, bitter, sour, umami. The back-end application receives Wekinator output, which it uses to steer the generation of poetry fragments: the proportion of each taste determines the probability that words will be picked from particular ‘flavoured’ vocabulary pools.
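The two steps in this paragraph can be sketched as follows, again in Python instead of the installation's C++. The first function averages a frame into a 10x10 grid of 100 values; treating the frame as greyscale is an assumption. The second picks a vocabulary pool with probability proportional to its taste value, then a word from that pool. The pool contents and function names are hypothetical placeholders, not the actual vocabulary.

```python
import random
from typing import Dict, List, Sequence

TASTES = ["salty", "sweet", "bitter", "sour", "umami"]

# Hypothetical pools, for illustration only; the real pools are drawn from the sonnets.
POOLS: Dict[str, List[str]] = {
    "salty": ["tears", "sea"],
    "sweet": ["rose", "honey"],
    "bitter": ["grief", "winter"],
    "sour": ["scorn", "decay"],
    "umami": ["flesh", "memory"],
}


def downsample(frame: Sequence[Sequence[float]], grid: int = 10) -> List[float]:
    """Average a greyscale frame into grid x grid cells, returned row by row.

    Assumes the frame is at least grid pixels wide and high.
    """
    height, width = len(frame), len(frame[0])
    cells = []
    for gy in range(grid):
        y0, y1 = gy * height // grid, (gy + 1) * height // grid
        for gx in range(grid):
            x0, x1 = gx * width // grid, (gx + 1) * width // grid
            block = [frame[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            cells.append(sum(block) / len(block))
    return cells


def pick_word(tastes: Dict[str, float], rng: random.Random) -> str:
    """Pick a pool with probability proportional to its taste value, then a word uniformly."""
    weights = [max(tastes.get(name, 0.0), 0.0) for name in TASTES]
    if sum(weights) == 0:
        weights = [1.0] * len(TASTES)  # no taste detected: fall back to uniform
    pool_name = rng.choices(TASTES, weights=weights, k=1)[0]
    return rng.choice(POOLS[pool_name])
```

With taste values such as `{"sweet": 0.7, "bitter": 0.3}`, roughly seven words in ten would come from the sweet pool and the rest from the bitter pool.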

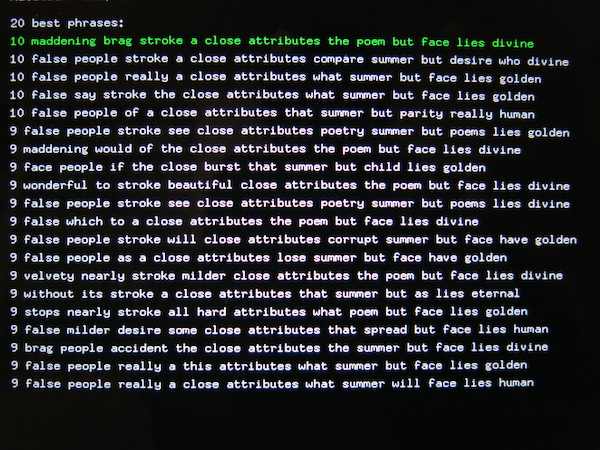

The poetry generator uses a genetic algorithm to manipulate strings of words. The genetic algorithm’s fitness function tags candidate texts using the Stanford Natural Language Processing Group’s Part-of-Speech Tagger, scoring against ‘model structures’ from Shakespeare’s sonnets. The comparison is made not on the words themselves, but on the part-of-speech tags. (This 'part-of-speech approach' was used by Christopher Strachey, who worked alongside Alan Turing on the Manchester University Computer in the early 1950s, and programmed it to generate love letters.) Words for each poetry fragment are picked randomly from the vocabulary pools, which I've chosen from the sonnets, with a few words thrown in from the world of computing (since it is the machine writing its own particular genre of love poetry). The highest-scoring fragments and the five taste values are passed to the front-end application, which displays the camera/video view and the poetry, and uses the taste values to control an accompanying audioscape.
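A minimal sketch of the idea of scoring on tags, not words, is given below. It makes several assumptions: a tiny hand-made lexicon stands in for the Stanford tagger, the ‘model structure’ is a single invented four-tag pattern, and the selection, crossover and mutation scheme is a generic textbook one, not the adapted code used in the piece. All names are hypothetical.

```python
import random
from typing import Dict, List

# Toy stand-in for a real part-of-speech tagger (hypothetical lexicon, Penn-style tags).
LEXICON: Dict[str, str] = {
    "my": "PRP$", "thy": "PRP$",
    "sweet": "JJ", "bitter": "JJ", "binary": "JJ",
    "love": "NN", "rose": "NN", "circuit": "NN",
    "burns": "VBZ", "sleeps": "VBZ",
}
VOCABULARY = list(LEXICON)

# Hypothetical model structure: possessive pronoun, adjective, noun, verb.
MODEL = ["PRP$", "JJ", "NN", "VBZ"]


def tag(words: List[str]) -> List[str]:
    """Look up each word's tag; unknown words get 'UNK'."""
    return [LEXICON.get(word, "UNK") for word in words]


def fitness(words: List[str]) -> float:
    """Fraction of positions whose tag matches the model structure."""
    matches = sum(1 for t, m in zip(tag(words), MODEL) if t == m)
    return matches / len(MODEL)


def crossover(a: List[str], b: List[str], rng: random.Random) -> List[str]:
    """Single-point crossover: head of one parent, tail of the other."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]


def mutate(words: List[str], rng: random.Random, rate: float = 0.1) -> List[str]:
    """Replace each word with a random vocabulary word with probability `rate`."""
    return [rng.choice(VOCABULARY) if rng.random() < rate else w for w in words]


def next_generation(population: List[List[str]], rng: random.Random) -> List[List[str]]:
    """Keep the best fragment, then breed the rest from the fitter half."""
    ranked = sorted(population, key=fitness, reverse=True)
    parents = ranked[: max(2, len(ranked) // 2)]
    children = [ranked[0]]  # elitism: the best fragment survives unchanged
    while len(children) < len(population):
        a, b = rng.sample(parents, 2)
        children.append(mutate(crossover(a, b, rng), rng))
    return children


def evolve(rng: random.Random, size: int = 20, generations: int = 30) -> List[str]:
    """Evolve random fragments and return the fittest one found."""
    population = [[rng.choice(VOCABULARY) for _ in MODEL] for _ in range(size)]
    for _ in range(generations):
        population = next_generation(population, rng)
    return max(population, key=fitness)
```

Because only the tag sequence is scored, "my sweet love burns" and "thy binary circuit sleeps" are equally fit. Fragments can therefore be structurally sound while staying semantically odd.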

The genetic algorithm comes close to convergence quite quickly, so it reseeds itself periodically, to be more engaging to watch for long periods of time. If nothing changes significantly in the webcam’s field of view for a while, the system ‘goes to sleep’ and replays stored video fragments to process - a kind of dream state - then wakes again if something new happens.
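One way such behaviour could be structured is sketched below in Python. A small monitor counts consecutive near-identical frames and flips into a sleep state, and a helper decides when to reseed. The threshold, patience and reseed interval are invented values, and the class and function names are hypothetical; the installation's actual criteria are not given here.

```python
from typing import List, Optional, Sequence


class SleepMonitor:
    """Tracks whether the camera view has been still long enough to 'fall asleep'."""

    def __init__(self, threshold: float = 0.02, patience: int = 3) -> None:
        self.threshold = threshold  # hypothetical: mean per-cell change that counts as activity
        self.patience = patience    # hypothetical: quiet frames tolerated before sleeping
        self.previous: Optional[List[float]] = None
        self.quiet_frames = 0
        self.asleep = False

    def update(self, cells: Sequence[float]) -> bool:
        """Feed one downsampled live frame; returns True while the system is asleep."""
        if self.previous is not None:
            change = sum(abs(a - b) for a, b in zip(cells, self.previous)) / len(cells)
            if change > self.threshold:
                self.quiet_frames = 0
                self.asleep = False      # something new happened: wake up
            else:
                self.quiet_frames += 1
                if self.quiet_frames >= self.patience:
                    self.asleep = True   # caller would switch to stored video
        self.previous = list(cells)
        return self.asleep


def should_reseed(generation: int, interval: int = 200) -> bool:
    """Reseed the population every `interval` generations (interval is an assumption)."""
    return generation > 0 and generation % interval == 0
```

In this sketch the monitor keeps receiving live camera frames even while stored video is being replayed for processing. If it only saw the replayed frames, it would have no way to notice that something new had happened.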

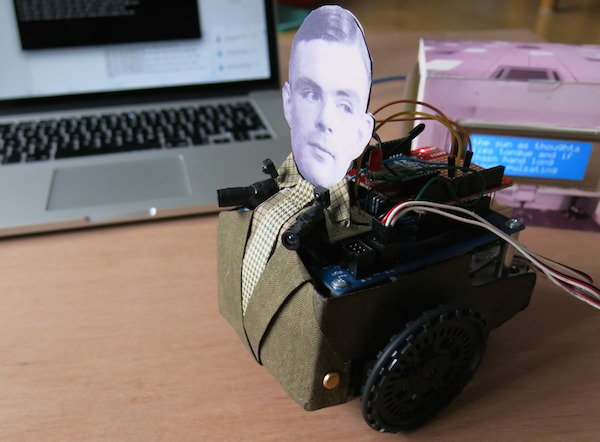

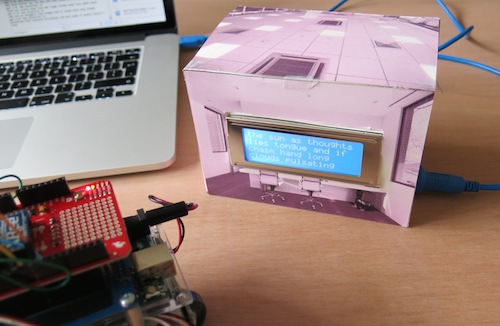

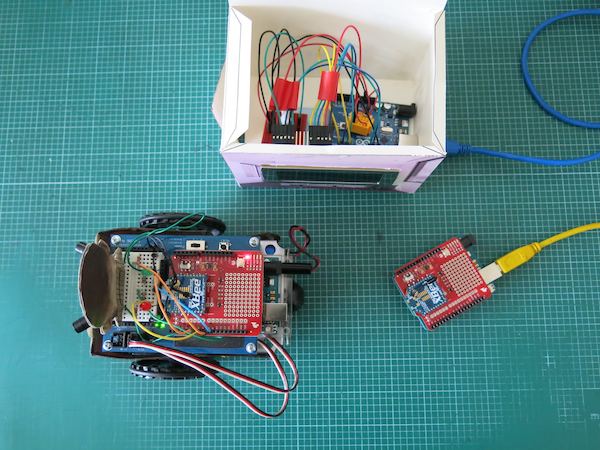

In earlier versions the genetic algorithm had a two-phase fitness function; in the second phase the top selection of poetry fragments is presented to Alan Turing (incarnated as a small robot) on a ‘teleprinter’ display from a closed room, as described in his seminal paper “Computing Machinery and Intelligence” (1950). By proxy (you squeeze him to approve or not), a given poetry fragment can be promoted or demoted in the genetic algorithm’s mating pool. The system runs on macOS and three Arduino Unos - the poetry generator, the closed room and Turing - communicating wirelessly over XBee.
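Promotion and demotion in a mating pool could work along the following lines. This is a sketch under assumptions, not the earlier version's actual mechanism. Each fragment carries a selection weight, an approval multiplies it and a rejection divides it, and parents are then drawn in proportion to weight. The scaling factor and function names are hypothetical.

```python
import random
from typing import Dict


def apply_verdict(pool: Dict[str, float], fragment: str, approved: bool,
                  factor: float = 2.0) -> None:
    """Scale a fragment's mating weight up on approval, down on rejection."""
    if approved:
        pool[fragment] *= factor
    else:
        pool[fragment] /= factor


def select_parent(pool: Dict[str, float], rng: random.Random) -> str:
    """Roulette-wheel selection: choose a fragment with probability proportional to weight."""
    fragments = list(pool)
    weights = [pool[f] for f in fragments]
    return rng.choices(fragments, weights=weights, k=1)[0]
```

Starting from equal weights, one approval makes a fragment twice as likely to be chosen as an unjudged one, and one rejection makes it half as likely.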

The next version of this work will be an installation in a darkened indoor space, projected quite large. The camera will be fixed on a retort stand or similar, pointing downwards onto the spotlit heap of garbage/junk. The audience will be invited to interact with the heap - to add, remove and mix it up if they wish.

I really enjoy the machine’s ambiguous and awkward-looking attempts at poetry, and I would like to look more deeply into natural language processing. I also plan to do more work on the robotic version, and perhaps make a large-scale installation that combines the two. I’m also investigating a web-based version, taking input from webcams anywhere.

This project has taken a considerable amount of work - much of it in the research, design and careful preparation of data for the neural network and genetic algorithm. It has been very satisfying to bring together several different techniques into one piece of new work, which develops some of my long-standing conceptual concerns in new directions.

References

Christopher Strachey's work on machine-generated love letters

Stanford Natural Language Processing Group

Genetic Algorithms in Search, Optimization and Machine Learning, by David Goldberg (I have adapted genetic algorithm code by Theo Papatheodorou)

The Basic Ideas in Neural Networks, by David Rumelhart et al.

Rebecca Fiebrink's Wekinator and 10x10 cam input idea

I'm very grateful to contributors on freesound for some of my audio samples