Evolution

Evolution is a multimedia dance performance that explores the relationship between the body and the virtual environment. It is delivered by a dancer (Iris Ath) on stage and a programmer (Andi Wang) backstage. It presents the evolution of the body as the dimension of its world grows. A camera and a Kinect sensor capture the dancer's movement and the visual effects are rendered in real time, taking the audience on a fictional journey. Technology has already changed our lifestyle, and it can also bring more possibilities to live performance on stage.

produced by: Andi Wang

Background and concept

Evolution is mainly inspired by Pix2pix, the video artist Bill Viola, 2001: A Space Odyssey by Stanley Kubrick and Tesseract by Charles Atlas, Rashaun Mitchell and Silas Riener. Bill Viola’s artwork gave me a new angle from which to see video. For him, the essential basis of video is movement - “something that exists at the moment and changes in the next moment.” If the fundamental elements of video are images and time, which together form movement and narration, then we can see video as another space, with the camera angle as the eye that observes it. Following this understanding, live video is a way to build a virtual environment in real time, and interaction becomes a bridge that links the virtual space with reality, the space we live in. My previous work explored this idea, and this time I took live performance as the medium for expressing the concept.

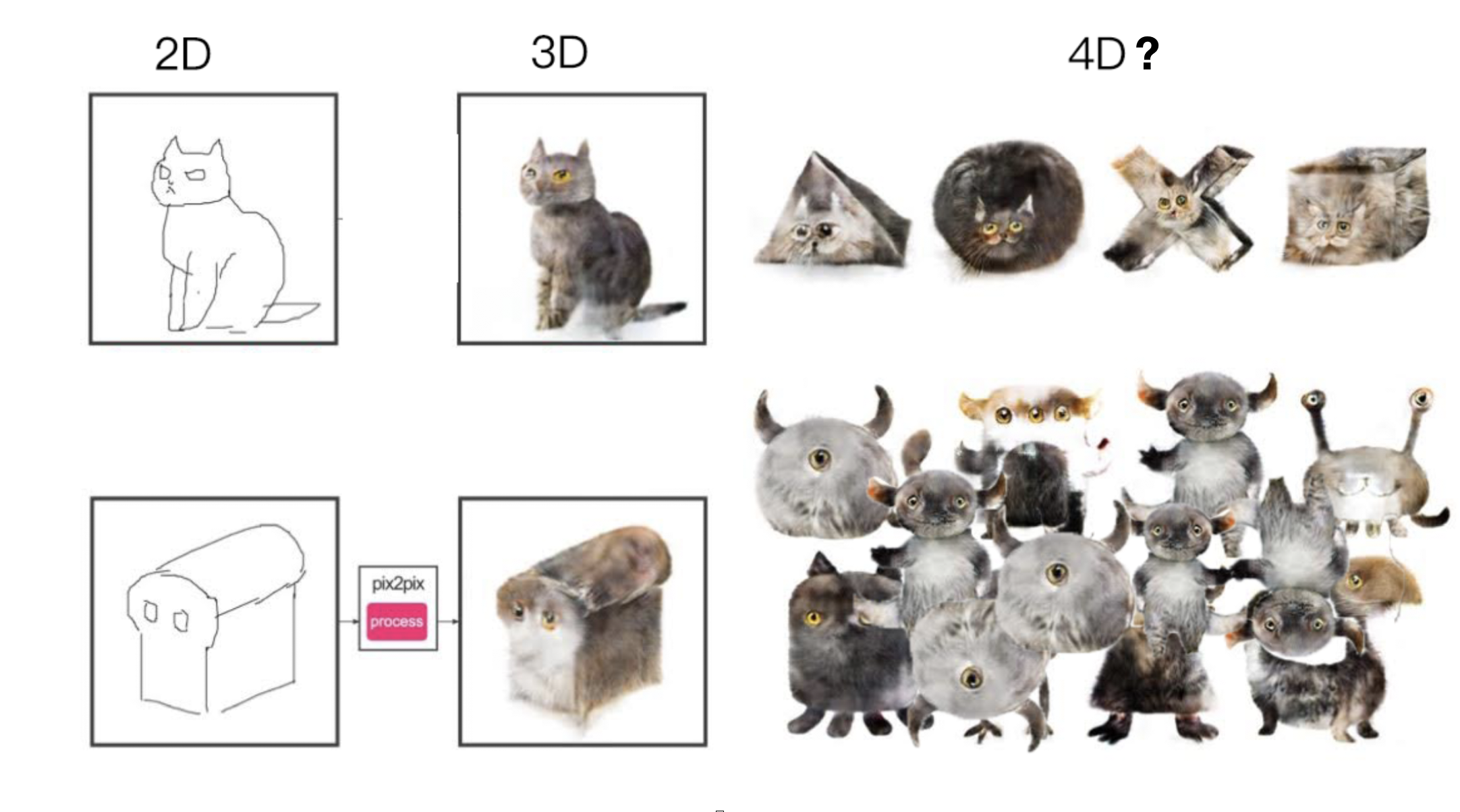

The concept of dimensional travel is mainly inspired by Pix2pix. The following image can help to explain my initial idea and inspiration.

2001: A Space Odyssey inspired my ideas about the 4th-dimensional world and the evolution of the creature. Other science fiction films such as Interstellar, as well as current theories about the 4th dimension and black holes, also strongly influenced the visual design. An infinite tunnel with distorted visuals is how I imagined a 4th-dimensional world would look. In addition, Tesseract inspired the way I use the camera and deliver visuals on stage.

The performance is divided into three parts. Each represents a different form of the body (the “creature”) and a progressive evolution of both body and identity. The first part is a short video of a drawing process, which represents the birth of the “creature”. The second part uses body mapping to represent the growth of the body. In this stage the creature grows from “2D” to “3D” and tries to figure out the material it is made of, so the visuals mapped onto the body use body elements such as eyes and hands. Gender fluidity and fluid identity were also considered in the video design, so I chose abstract patterns and left the interpretation to the audience. The third part moves to the 4th-dimensional world: the creature has a mature sense of identity and is willing to explore the environment surrounding it. This part uses edge drawing, a kaleidoscope and a tunnel slit-scan to represent 4th-dimensional space. All the visuals are captured by a live camera and processed in real time.

Following is the setup process of the performance.

Technical

Pix2pix - The opening video uses the pix2pix code by Memo Akten; I screen-recorded the drawing process. The training data are pictures of the dancer. I trained with three different datasets, each of which gave a different result, and in the end I used the last one.

Following is the recorded training process of three different datasets.

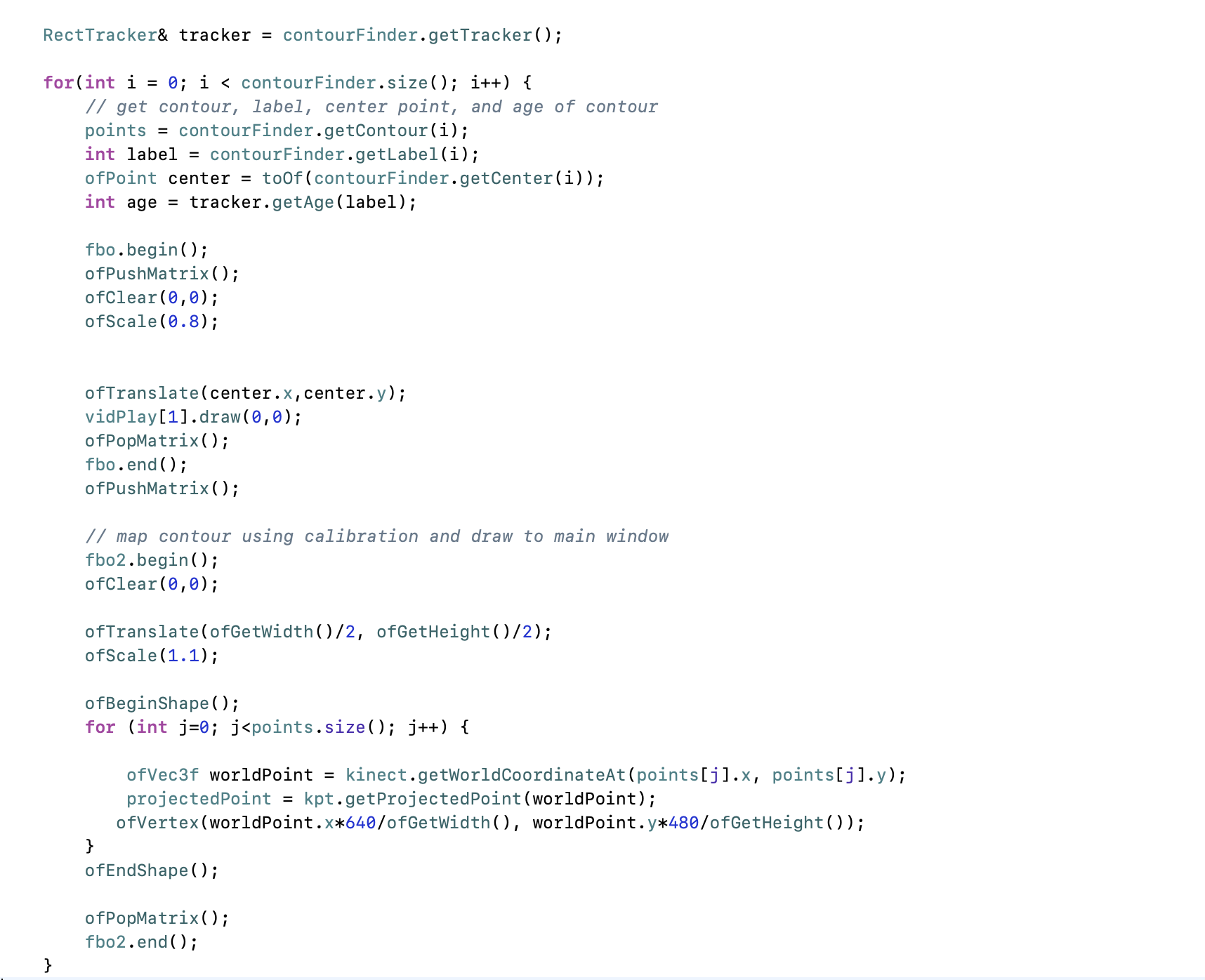

Body mapping - The body mapping part uses the ofxKinectProjectorToolkit addon, which converts the human body into a vertex shape. However, the calibration did not work on macOS, so I scaled the shape to the projector size instead of using the calibration. To map a video as a texture onto the shape, I used a mask shader and translated the video position to the center of the vertex shape.
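As a rough illustration of the scaling workaround, the sketch below maps contour points from the 640 x 480 Kinect image to projector pixels with a plain per-axis scale, then finds the center of the shape's bounding box so that a video can be centered on it. It is a minimal sketch written without openFrameworks, not the code used in the piece: `Point2`, `scaleToProjector`, `boundingBoxCenter` and `videoTopLeft` are hypothetical names, and using the bounding-box center as "the center of the vertex shape" is an assumption.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical 2D point type, standing in for the framework's own vector type.
struct Point2 {
    float x;
    float y;
};

// Scale contour points from the source image size to the projector size.
// This is a plain per-axis scale, used here in place of a real calibration.
std::vector<Point2> scaleToProjector(const std::vector<Point2>& contour,
                                     float srcW, float srcH,
                                     float dstW, float dstH) {
    assert(srcW > 0.0f && srcH > 0.0f);
    const float sx = dstW / srcW;
    const float sy = dstH / srcH;
    std::vector<Point2> out;
    out.reserve(contour.size());
    for (const Point2& p : contour) {
        out.push_back({p.x * sx, p.y * sy});
    }
    return out;
}

// Center of the axis-aligned bounding box of the shape
// (assumed here to be "the center of the vertex shape").
Point2 boundingBoxCenter(const std::vector<Point2>& shape) {
    assert(!shape.empty());
    float minX = shape[0].x, maxX = shape[0].x;
    float minY = shape[0].y, maxY = shape[0].y;
    for (const Point2& p : shape) {
        minX = std::min(minX, p.x);
        maxX = std::max(maxX, p.x);
        minY = std::min(minY, p.y);
        maxY = std::max(maxY, p.y);
    }
    return {(minX + maxX) * 0.5f, (minY + maxY) * 0.5f};
}

// Top-left corner at which a video of the given size must be drawn
// so that its middle lands on `center`.
Point2 videoTopLeft(Point2 center, float videoW, float videoH) {
    return {center.x - videoW * 0.5f, center.y - videoH * 0.5f};
}
```

In an openFrameworks app, the scaled contour would typically be drawn into a mask and the video drawn at the returned top-left position before the mask shader is applied.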

The edge - The edge part uses the edge detection function in the ofxCv addon. I mirrored the image, and when the camera stays at the same position as the projector it captures the projector's own output, which forms an infinite feedback loop.

Kaleidoscope - The kaleidoscope builds on the ofxKaleidoscope addon made by David Tracy. I modified the setup code and combined it with the edge image from ofxCv.
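The basic idea behind a kaleidoscope effect can be sketched as folding the angle of each pixel, measured around the image center, into one mirrored wedge, so that every wedge samples the same slice of the source image. The block below is a generic sketch of that idea and may differ from how ofxKaleidoscope works internally; `foldAngle` and its parameters are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

// Fold an angle (in radians) into the first half of a wedge.
// With `segments` wedges around the circle, each wedge spans 2*pi/segments;
// the second half of every wedge is mirrored back onto the first half.
double foldAngle(double theta, int segments) {
    assert(segments > 0);
    const double kPi = 3.14159265358979323846;
    const double wedge = 2.0 * kPi / segments;

    // Wrap the angle into [0, wedge).
    double a = std::fmod(theta, wedge);
    if (a < 0.0) {
        a += wedge;
    }

    // Mirror the second half of the wedge onto the first half.
    if (a > wedge * 0.5) {
        a = wedge - a;
    }
    return a;
}
```

To use it on an image, each output pixel would be converted to polar coordinates around the center, its angle passed through `foldAngle`, and the source image sampled at the same radius and the folded angle.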

Slit-scan - The infinite tunnel borrows part of its code from The Balloon of the Mind by Tim Curtis. The slit-scan and delay effect use the slit-scan code we learned in the first-term Workshop in Creative Coding lectures.
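For readers unfamiliar with the technique, a slit-scan can be sketched as a short history of frames in which each output row is taken from a different moment in time. The block below is a generic illustration on grayscale frames, not the workshop code or the tunnel code used in the piece; the `SlitScan` class and the choice to delay rows further down the image are assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <vector>

// Grayscale frame stored row by row: width * height bytes.
using Frame = std::vector<std::uint8_t>;

class SlitScan {
public:
    SlitScan(std::size_t width, std::size_t height, std::size_t maxFrames)
        : width_(width), height_(height), maxFrames_(maxFrames) {
        assert(width > 0 && height > 0 && maxFrames > 0);
    }

    // Store the newest frame at the front and drop the oldest
    // once the history holds more than maxFrames frames.
    void push(const Frame& frame) {
        assert(frame.size() == width_ * height_);
        history_.push_front(frame);
        if (history_.size() > maxFrames_) {
            history_.pop_back();
        }
    }

    // Build the output image: the top row comes from the newest frame,
    // and rows further down come from progressively older frames.
    Frame render() const {
        assert(!history_.empty());
        Frame out(width_ * height_);
        const std::size_t maxDelay = history_.size() - 1;
        for (std::size_t y = 0; y < height_; ++y) {
            const std::size_t delay =
                (height_ > 1) ? (y * maxDelay) / (height_ - 1) : 0;
            const Frame& src = history_[delay];
            for (std::size_t x = 0; x < width_; ++x) {
                out[y * width_ + x] = src[y * width_ + x];
            }
        }
        return out;
    }

private:
    std::size_t width_;
    std::size_t height_;
    std::size_t maxFrames_;
    std::deque<Frame> history_;
};
```

Calling `push` once per camera frame and drawing the result of `render` gives the characteristic time-smeared image; a longer history produces a stronger delay.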

Following is the code test for the final scene, the infinite tunnel.

Put them all together - My initial plan was to have the dancer perform on stage while I controlled all the scene switches backstage. However, since my dancer could not be present at all the performances, I sometimes had to perform myself with no one controlling the program backstage. I therefore timed each stage and used ofGetElapsedTimef() to make the program play automatically.
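The timing logic can be sketched as a lookup from elapsed seconds to a scene index. This is a minimal sketch, assuming a list of per-scene durations; `sceneAt` is a hypothetical helper, and the durations in the usage note are made up for illustration rather than the timings of the performance.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Return the index of the scene that is active `elapsed` seconds after
// playback started, given the duration of each scene in seconds.
// Once the total running time has passed, the last scene stays active.
std::size_t sceneAt(float elapsed, const std::vector<float>& durations) {
    assert(!durations.empty());
    float end = 0.0f;
    for (std::size_t i = 0; i < durations.size(); ++i) {
        end += durations[i];  // time at which scene i finishes
        if (elapsed < end) {
            return i;
        }
    }
    return durations.size() - 1;
}
```

In an openFrameworks app, `elapsed` would be `ofGetElapsedTimef()` minus the value it had when playback started, and `sceneAt(elapsed, {30.0f, 120.0f, 150.0f})` would be called each frame in `update()` to decide which scene to draw.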

There are several buttons I can press to control the program.

“Space” - The “play” button. After adjusting the Kinect threshold value, press space to run the whole program.

“g” - maps a white background onto the body; used to adjust the body mapping and make sure it is accurate.

“s” - saves the threshold value and quits the adjustment mode.

“h” - hide the cursor

“0-5” - individual scenes.
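The key handling above could be organized roughly as follows. This is a sketch under stated assumptions, not the program's actual code: `ControlState` and `handleKey` are hypothetical names, “g” is assumed to toggle the white mask, and saving the threshold is reduced to setting a flag.

```cpp
#include <cassert>

// Hypothetical state controlled by the keyboard.
struct ControlState {
    bool playing = false;         // set by Space
    bool adjusting = true;        // assumed: the program starts in adjustment mode
    bool whiteMask = false;       // white background mapped on the body
    bool cursorHidden = false;
    bool thresholdSaved = false;  // stands in for writing the value to disk
    int scene = 0;                // 0-5
};

// Update the state for one key press.
void handleKey(ControlState& state, int key) {
    switch (key) {
        case ' ':
            state.playing = true;
            break;
        case 'g':
            state.whiteMask = !state.whiteMask;  // toggling is an assumption
            break;
        case 's':
            state.thresholdSaved = true;
            state.adjusting = false;
            break;
        case 'h':
            state.cursorHidden = true;
            break;
        default:
            // Digits 0-5 jump directly to an individual scene.
            if (key >= '0' && key <= '5') {
                state.scene = key - '0';
            }
            break;
    }
    assert(state.scene >= 0 && state.scene <= 5);
}
```

In openFrameworks this logic would normally live in `ofApp::keyPressed(int key)`.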

Following is the code I used for mapping.

Difficulty

There were many bugs and difficulties in combining all the code into one program, and I will mention only some of them. When I used a USB camera in place of the laptop’s webcam alongside the Kinect, the depth image stalled and the depth tracking was no longer smooth or accurate. I therefore had to use the Kinect’s own camera, which is only 640 x 480.

Since the camera requires light and projection mapping requires a dark space, it was difficult to balance the lighting setup. An additional reading lamp attached to the Kinect and a spotlight helped to solve this problem. However, the dancer needs to pay attention to her position: if she moves outside the lit area, the camera view turns black.

It is also worth mentioning that ofVideoPlayer sometimes failed to load more than one video; this was fixed by compiling in release mode instead of debug mode.

Future development and self-evaluation

This piece met my expectations and I am satisfied with its quality, except for the poor lighting setup. It brought an experience we normally have only with screens and film to a live stage performance. The audience reacted to the piece in different ways: some focused only on the screen and ignored the performer, while others watched the dancer and the screen at the same time. G05 is better suited to work that uses three screens instead of one, but this piece was designed to be shown on a traditional stage facing one direction. It would have been better if I could have covered the other two walls with black curtains.

For future development, I would like to extend the duration of the performance and add more scenes. For now, because of time limitations and because other students were sharing G05, I had to limit it to 5 minutes. In addition, due to the limited budget, I had to use the Kinect 1, which has a low resolution. It would be great to replace it with another Kinect model and increase the resolution.

Finally, I would like to thank my dancer Iris Ath, who helped me with all the setup, rehearsal and choreography.

References

Background music - 11,600 years ago by Blear Moon

Choreographer / performer - Iris Ath

Addons:

ofxKaleidoscope by David Tracy - https://github.com/davidptracy/ofxKaleidoscope

ofxKinectProjectorToolkit by Gene Kogan - https://github.com/genekogan/ofxKinectProjectorToolkit

ofxKinect by Theodore Watson - https://github.com/ofTheo/ofxKinect

ofxSyphon by Anthony Stellato - https://github.com/astellato/ofxSyphon

ofxOpenCv, ofxCv, ofxGui, ofxXmlSettings, ofxSecondWindow

Borrowed code:

The Balloon of the Mind by Tim Curtis - https://www.openprocessing.org/sketch/168655

Webcam Pix2pix by Memo Akten - https://github.com/memo/webcam-pix2pix-tensorflow

Kubrick, S., & Clarke, A. C. (1968). 2001: A space odyssey.

Atlas, C., Mitchell, R., & Riener, S. (2019). Tesseract.

Video source: Eyes blink by Dale Brunning - https://www.youtube.com/watch?v=yQTa9UlZNUk