Division Occurrence

This piece depicts my own interpretation of sound through exploring the composability of space.

produced by: Yu Liao

Introduction and inspiration

Division Occurrence is an audiovisual project that explores the multiple definitions of space through sound. It is heavily inspired by experimental music notation, whose flexibility lets performers expand (vertically or horizontally), contract, or keep the space as it appears. Nodes are released from two dimensions to float freely right, left, back, forward, up, down, and to all points in between, without a fixed reading direction. Composers treat sounds as points scattered across the space of the notation, so performers can start from any point and connect the points freely; the space can expand, shrink, or remain as defined. Connecting points into a composition in this way adds another dimension, one that goes beyond Euclidean geometry.

Music is often defined as the science or art of ordering tones or sounds in succession, in combination, and in temporal relationships to produce a composition having unity and continuity. From my perspective, it is about how people divide and arrange a static or non-static space, and the same is true of visuals. Even a thin string cuts a space in half. Two parallel lines can trick the brain into perceiving depth. In this work, I want to interpret the idea of space by playing with audio and visuals using the simplest elements.

Concept and background research

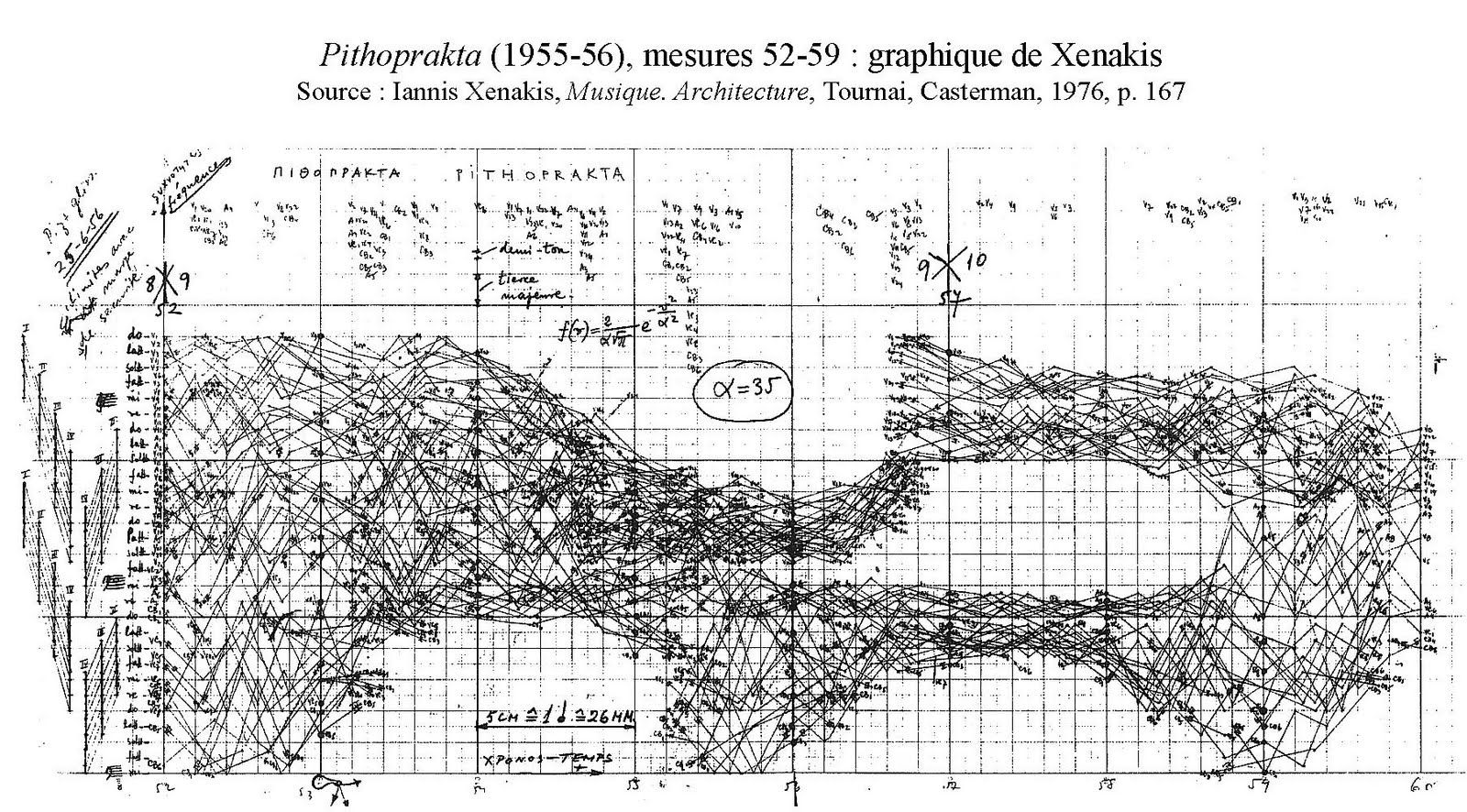

The notion of space itself, as it is used in music and in many other fields, has many senses; as the French writer Georges Perec would say, there are »spaces of space«. The biggest influence on my work is the electronic music pioneer Iannis Xenakis, who once said that proportion is something you can feel: you have to feel proportions in music, in architecture, and in art, wherever you use or manipulate them.

Frequency, by definition, is the number of occurrences of a repeating event per unit of time. It is an important parameter used in science and engineering to specify the rate of oscillatory and vibratory phenomena, such as mechanical vibrations, audio signals, radio waves, and light.

Space and time have long been important elements in music production. I found that experimental music notation expands these two ideas beyond traditional notation. The Greek avant-garde composer Anestis Logothetis uses his notation system in different ways to imprint contemporary sound on the score and to express a sense of space in musical notation. Many of his works involve a sense of space, and the theory of depth perception appears constantly in them, alongside the positioning of sound in three-dimensional space through graphics. According to Logothetis, the polymorphism of graphical notation has to do both with space and with the method by which it is read. The composer takes into account the divergences between performers, so that each retains a subjective definition of space.

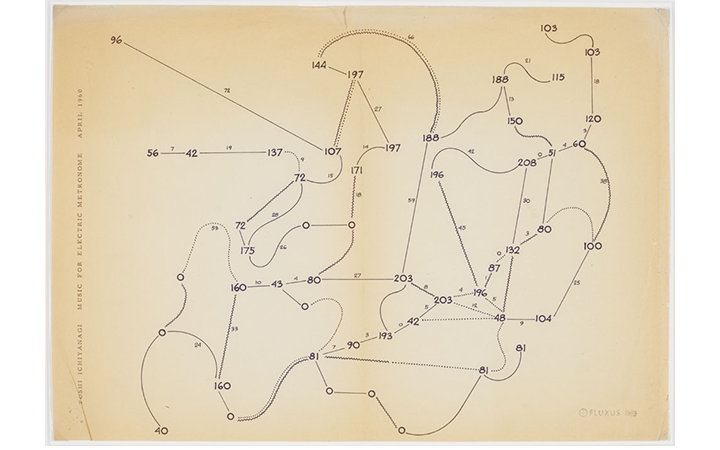

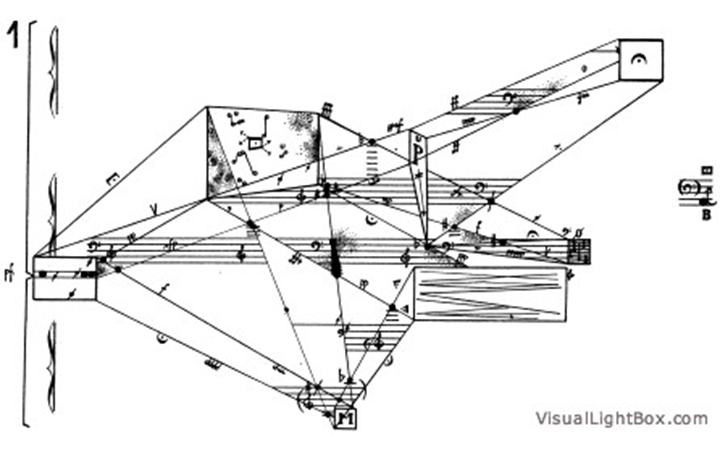

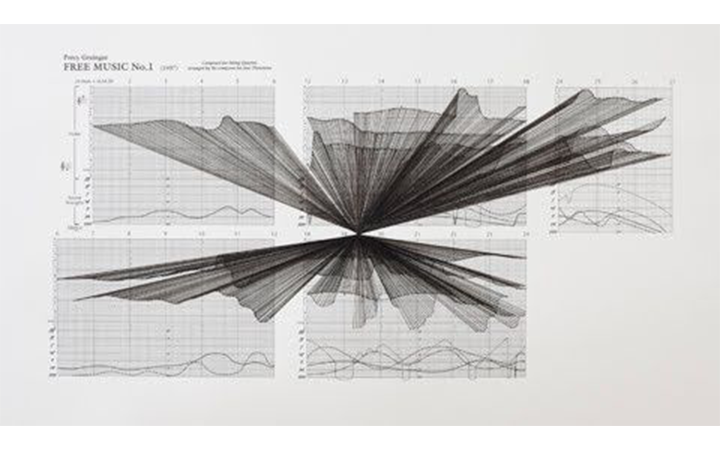

The images in this section are examples of experimental notation that interpret the idea of space in different ways.

Fields of indeterminacy - Toshi Ichiyanagi

Bussotti and Ferrari - Luc Ferrari

Sound Drawings - Marco Fusinato

Pithoprakta - Iannis Xenakis

Technical and process

This project aims to create music using only pure sine waves, shaped by effects such as envelopes, filters, and frequency modulation. The biggest challenge I encountered was mapping the sound data to the visuals. I tried many different mapping ranges to see whether the results matched the sound.

ofxOsc -

I use TouchOSC to send accelerometer data to openFrameworks and map the x and y values (-1 to 1) to a position on the screen. The raw data is very glitchy: without a low-pass filter, the point on the screen moves roughly and irregularly.
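The mapping and smoothing described above can be sketched in plain C++ as follows. This is a minimal illustration, not the project's actual code: the names `mapClamped` and `OnePoleLowPass` are hypothetical, and the one-pole (exponential) filter is only one common way to low-pass a noisy sensor stream.

```cpp
#include <algorithm>
#include <cassert>

// Linearly map v from [inMin, inMax] to [outMin, outMax], clamping the result
// to the output range so an out-of-range reading stays inside the target range.
float mapClamped(float v, float inMin, float inMax, float outMin, float outMax) {
    assert(inMax != inMin);  // avoid division by zero
    float t = (v - inMin) / (inMax - inMin);
    t = std::max(0.0f, std::min(1.0f, t));
    return outMin + t * (outMax - outMin);
}

// One-pole low-pass filter (exponential smoothing), assumed here as the
// smoothing method. alpha near 0 = heavy smoothing, alpha = 1 = no smoothing.
struct OnePoleLowPass {
    float alpha;
    float state = 0.0f;
    bool primed = false;

    explicit OnePoleLowPass(float a) : alpha(a) { assert(a > 0.0f && a <= 1.0f); }

    float process(float input) {
        if (!primed) {  // start at the first reading instead of at 0
            state = input;
            primed = true;
        } else {
            state += alpha * (input - state);  // move a fraction toward the input
        }
        return state;
    }
};
```

In an openFrameworks app, one filter per axis would typically run once per incoming OSC message, with the smoothed value then mapped from -1..1 to 0..`ofGetWidth()` or 0..`ofGetHeight()`.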

ofxMaxim -

The audio is based on sine waves at 8 different pitches. The metronome ticks once every 7 frames. The iteration count increases every 4 bars, and the pitch also changes based on the root chords of the arpeggio. The OSC messages are mapped to a physical position on the screen and also influence the audio output: the x position sets the cutoff of the low-pass filter, and the y position sets its resonance.

The video shows the test pattern I made at the beginning.
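The structure described above (a frame-based metronome stepping through 8 pitches, with the screen position driving the filter) can be sketched without any audio library. Everything specific here is an assumption for illustration: the note table, the 200 to 8000 Hz cutoff range, the 1 to 10 resonance range, and all names are hypothetical, and the project itself uses ofxMaxim rather than this hand-written oscillator.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

// Hypothetical 8-note table as MIDI note numbers (one octave of C major).
constexpr int kNumNotes = 8;
constexpr std::array<int, kNumNotes> kNotes = {60, 62, 64, 65, 67, 69, 71, 72};

// Standard equal-temperament conversion: A4 (MIDI 69) = 440 Hz.
double midiToHz(int note) { return 440.0 * std::pow(2.0, (note - 69) / 12.0); }

// Frame-based metronome: fires on frame 0, then once every framesPerTick frames,
// advancing to the next note in the table on each tick.
struct StepClock {
    int framesPerTick;
    long frameCount = 0;
    int step = -1;  // no note selected until the first tick

    explicit StepClock(int n) : framesPerTick(n) { assert(n > 0); }

    bool advance() {  // call once per frame; returns true when a tick fires
        bool tick = (frameCount % framesPerTick == 0);
        if (tick) step = (step + 1) % kNumNotes;
        ++frameCount;
        return tick;
    }
};

// Phase-accumulator sine oscillator; phase is kept in [0, 1).
struct SineOsc {
    double phase = 0.0;
    double next(double freqHz, double sampleRate) {
        const double kTwoPi = 6.283185307179586;
        double out = std::sin(kTwoPi * phase);
        phase += freqHz / sampleRate;
        if (phase >= 1.0) phase -= 1.0;
        return out;
    }
};

struct FilterParams { double cutoffHz; double resonance; };

// x and y are normalised screen positions in [0, 1]; the ranges are assumptions.
FilterParams filterFromPosition(double x, double y) {
    x = std::max(0.0, std::min(1.0, x));
    y = std::max(0.0, std::min(1.0, y));
    return {200.0 + x * (8000.0 - 200.0), 1.0 + y * (10.0 - 1.0)};
}
```

With `StepClock clock(7)`, calling `clock.advance()` once per frame selects `kNotes[clock.step]` as the current pitch, and `midiToHz` gives the frequency to feed the oscillator.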

Future development & self evaluation

With this experience, I would love to take this work to another level. Built as an audio-visual “system”, the work itself represents how I define the notion of space. This project is my first step in using simple geometric shapes and strings to create a sense of space by playing with depth perception. The iterating sine-wave audio is wrapped in equal portions to create the division occurrence, a way to divide static time into pieces.

I can imagine this work being used in audiovisual and choreographic performance. Different approaches to choreographing the space would make an interesting compilation. Dancers or viewers could wear sensors that send OSC messages and change the visuals based on their position. The visuals can trick people's perception by suggesting depth. The viewers' movements, synced with the visuals, would also create motion and so bring in the idea of space.

In terms of audio, the work could be much improved by composing it as a longer piece and adding more sound effects, and by extracting more data from the sound: the waveforms, ADSR envelopes, and the distribution of time and tempo. As for the visuals, instead of using if conditions to draw the different visuals, the program could be made more efficient with pointers or ofFbo. The content could also be more versatile, with different objects, characters, shader textures, lighting, and so on.

The data mapping could also be more diverse and smoother. The first difficulty I encountered was mapping the audio data to the visual parameters: each audio set has a different character, so I needed to map each one with different minimum and maximum values. I ran many tests to explore the possibilities and get better results. The OSC sender data is also very glitchy, so I had to store the data in arrays and calculate the difference between the previous frame and the current frame to smooth it.

To turn it into a performance or installation, I should also organise and bridge the different scenes more smoothly.
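One possible way to make this mapping less manual is sketched below, under stated assumptions. `AutoRange` (hypothetical) tracks the minimum and maximum seen so far for one audio feature, so each feature normalises itself to 0..1 instead of needing hand-tuned bounds. `MovingAverage` (also hypothetical) keeps the last few frames in an array and averages them; this is a swapped-in smoothing technique, not the frame-difference method described above.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Normalises a stream to [0, 1] using the min and max observed so far.
struct AutoRange {
    float lo = 0.0f, hi = 0.0f;
    bool seen = false;

    float normalize(float v) {
        if (!seen) { lo = hi = v; seen = true; }
        lo = std::min(lo, v);
        hi = std::max(hi, v);
        if (hi == lo) return 0.0f;  // range not established yet
        return (v - lo) / (hi - lo);
    }
};

// Averages the most recent `size` values, stored in a ring buffer.
class MovingAverage {
public:
    explicit MovingAverage(std::size_t size) : buf(size, 0.0f) { assert(size > 0); }

    float push(float v) {
        if (count == buf.size()) sum -= buf[head];  // drop the oldest value
        else ++count;
        buf[head] = v;
        sum += v;
        head = (head + 1) % buf.size();
        return sum / static_cast<float>(count);
    }

private:
    std::vector<float> buf;
    std::size_t head = 0, count = 0;
    float sum = 0.0f;
};
```

A larger window gives a steadier point on screen at the cost of more lag behind the sensor, so the window size would need tuning by eye, much like the mapping ranges.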

Reference code

[1] Animation - https://www.openprocessing.org/sketch/397343

[2] ofxMaxim library - https://github.com/micknoise/Maximilian

[3] ofxOsc library example