Robotic Shadow Puppetry

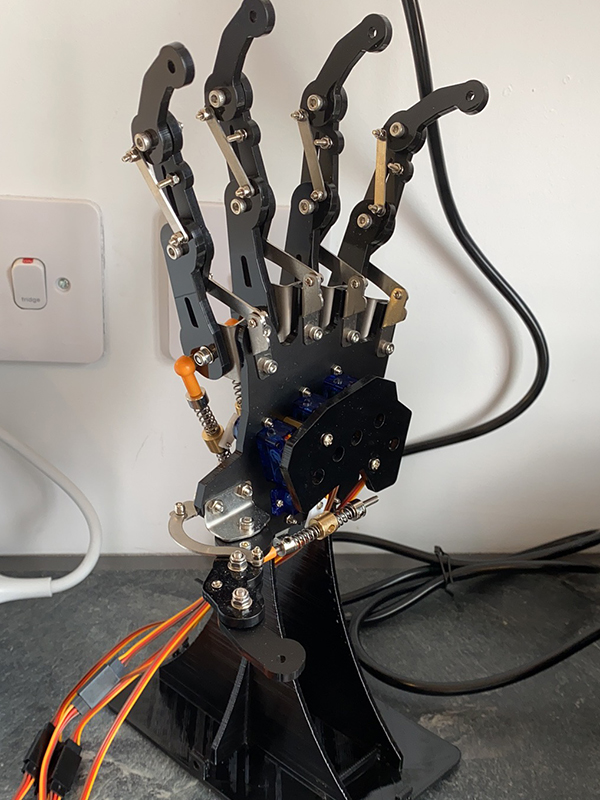

Robotic Shadow Puppetry is a shadowgraphy game in which two robotic hands form the shapes of animals such as a rabbit, a hound or an ox, which are then shown as virtual shadows. The installation is built in openFrameworks and combines robotic hands, voice control, image detection and Leap Motion.

produced by: Zhichen Gu

Introduction

Robotic Shadow Puppetry is a simulated shadow projection installation. The player controls two robotic hands, one through image detection and the other through a gesture sensor, to form particular shadow figures. It is the final project for Workshops in Creative Coding Two, whose brief was to design a work that reimagines a collaborative interaction between human and machine. The theme is inspired by traditional shadow play, which is popular with both children and adults in many cultures around the world.

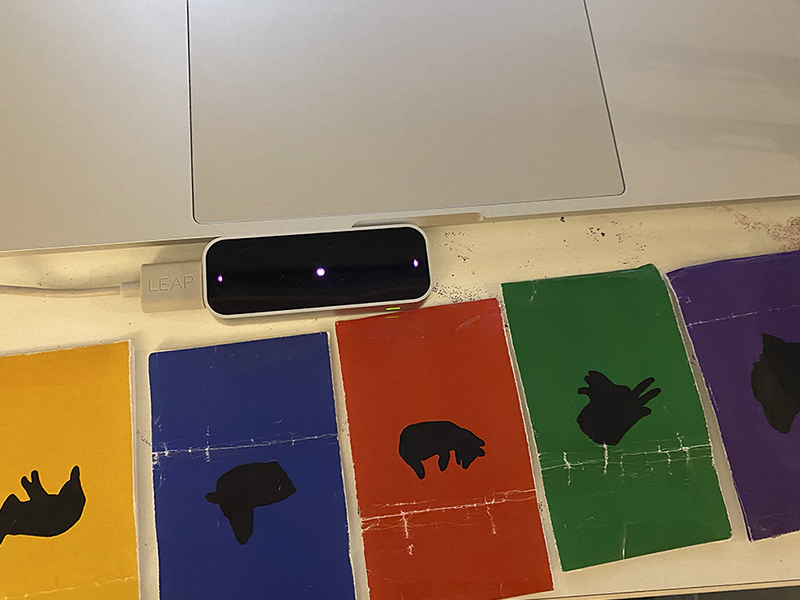

In the original plan, the shadow image was to be formed by one human hand and one robotic hand. Lighting is essential for shadow puppetry. However, under the Covid restrictions I found it hard to set up an environment for testing and developing that version. I therefore moved most of the exhibition into a virtual environment and ran two robotic hands simultaneously. Leap Motion detects and reports most of my hand's gestures in real time, and its data drives one robotic hand as the avatar of the real hand.

Concept and background research

The inspiration comes from shadowgraphy, also known as shadow puppetry, the traditional art of performing a story or show using images made by hand shadows. I remember seeing this kind of performance a few times as a child. A talented puppeteer can make the figures appear in different forms and walk, dance, fight, nod and laugh. So I wondered what it would be like to have robots perform the same plays as a form of virtual body interaction. It seemed a reasonable thing to attempt. When the body is “transformed,” composited or telematically transmitted into digital environments, it should also be remembered that despite what many say, it is not an actual transformation of the body, but of the coding command and pixelated composition of its recorded or computer-generated image.

Technical

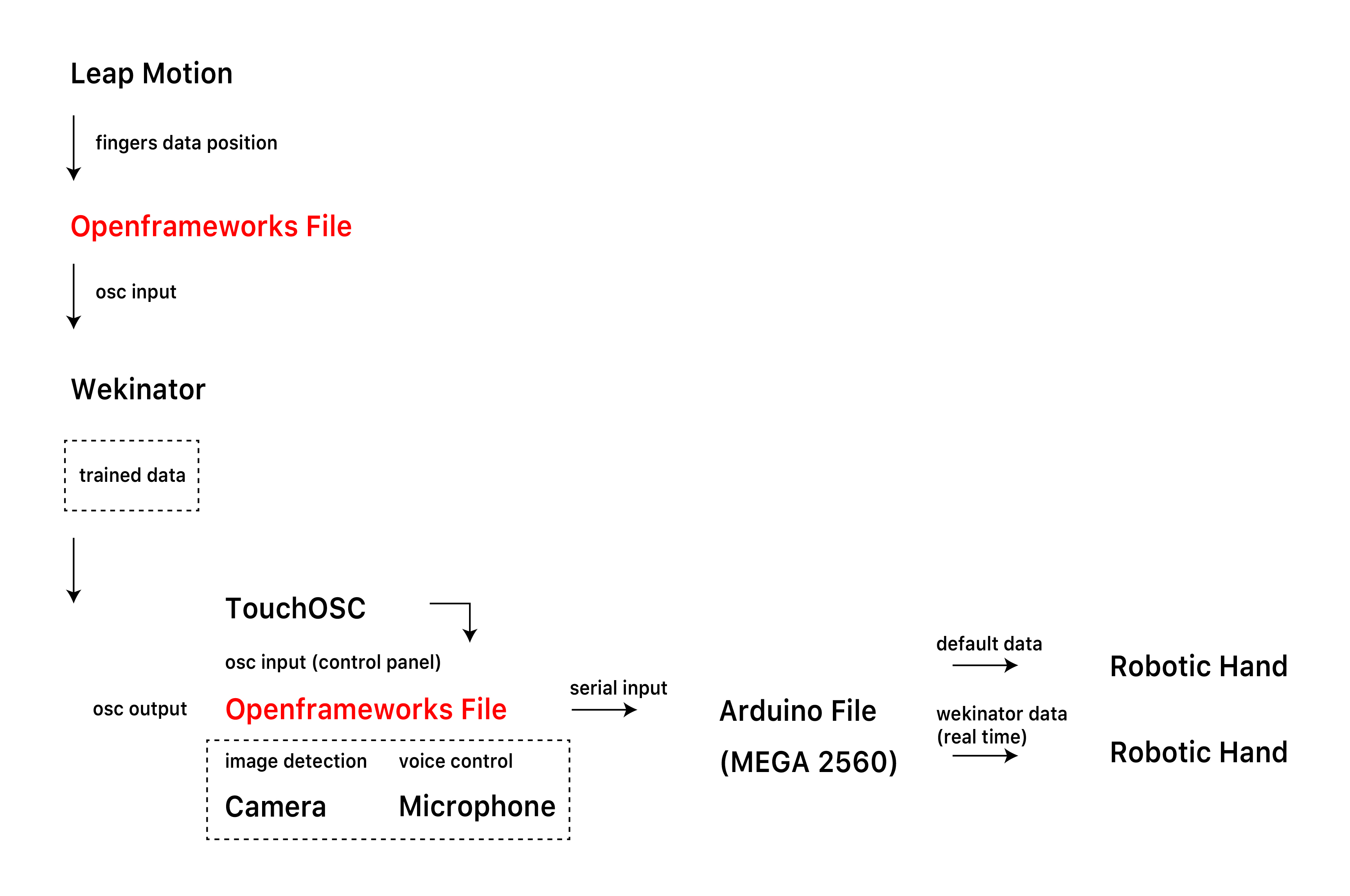

The work is written mainly in C++ using openFrameworks, with seven addons: ofxLeapMotion2, ofxMaxim, ofxOpenCv, ofxOsc, ofxRapidLib, ofxHandDetector and ofxVoiceController (the last two are custom addons created in the Week 17 lab work). Some of the data is trained in Wekinator, and an Arduino controls the robotic hands' servos.

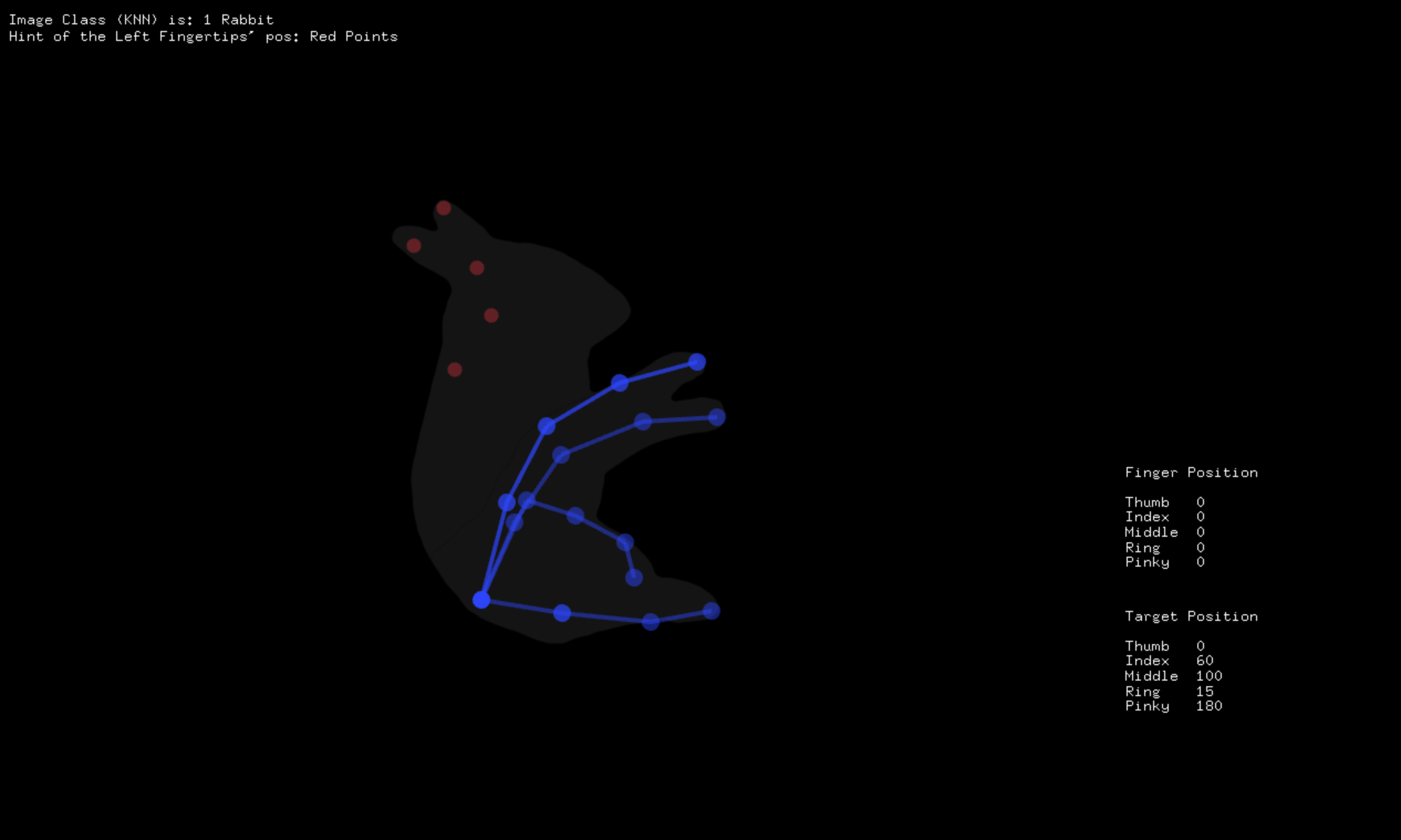

Because Leap Motion's accuracy and stability are limited, I use Wekinator to train a model on its gesture data. The project is therefore split into two openFrameworks apps: one sends Leap Motion data to Wekinator, and Wekinator's trained output is then sent to the other, main app.
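
The handoff between the openFrameworks apps and Wekinator travels as OSC messages, which ofxOsc builds inside the project. As a minimal sketch of what such a message looks like on the wire, the code below hand-packs an OSC 1.0 message of float arguments without any openFrameworks dependency. The address `/wek/inputs` is Wekinator's default input address (on port 6448); the project's actual address, port and number of features are not stated, so treat them as assumptions. `packOscFloats` and its helpers are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Append a string the way OSC requires: null-terminated and zero-padded
// so that its total length is a multiple of 4 bytes.
static void appendPaddedString(std::vector<std::uint8_t>& out, const std::string& s) {
    out.insert(out.end(), s.begin(), s.end());
    std::size_t pad = 4 - (s.size() % 4);  // always 1..4 zero bytes
    out.insert(out.end(), pad, 0);
}

// Append a 32-bit float in big-endian byte order (OSC type tag 'f').
static void appendFloat(std::vector<std::uint8_t>& out, float value) {
    static_assert(sizeof(float) == 4, "OSC 'f' arguments are 32-bit floats");
    std::uint32_t bits;
    std::memcpy(&bits, &value, sizeof bits);
    for (int shift = 24; shift >= 0; shift -= 8) {
        out.push_back(static_cast<std::uint8_t>((bits >> shift) & 0xFF));
    }
}

// Build one OSC message whose arguments are all floats,
// e.g. a vector of hand features (hypothetical feature layout).
std::vector<std::uint8_t> packOscFloats(const std::string& address,
                                        const std::vector<float>& values) {
    assert(!address.empty() && address[0] == '/');
    std::vector<std::uint8_t> out;
    appendPaddedString(out, address);                                // address pattern
    appendPaddedString(out, "," + std::string(values.size(), 'f'));  // type tag string
    for (float v : values) {
        appendFloat(out, v);                                         // arguments
    }
    return out;
}
```

Calling `packOscFloats("/wek/inputs", {1.0f, 0.5f, 0.0f})` yields a 32-byte datagram: 12 bytes of padded address, 8 bytes of padded type tags and 12 bytes of float data. In an openFrameworks app the same message would normally be built with ofxOsc rather than by hand.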

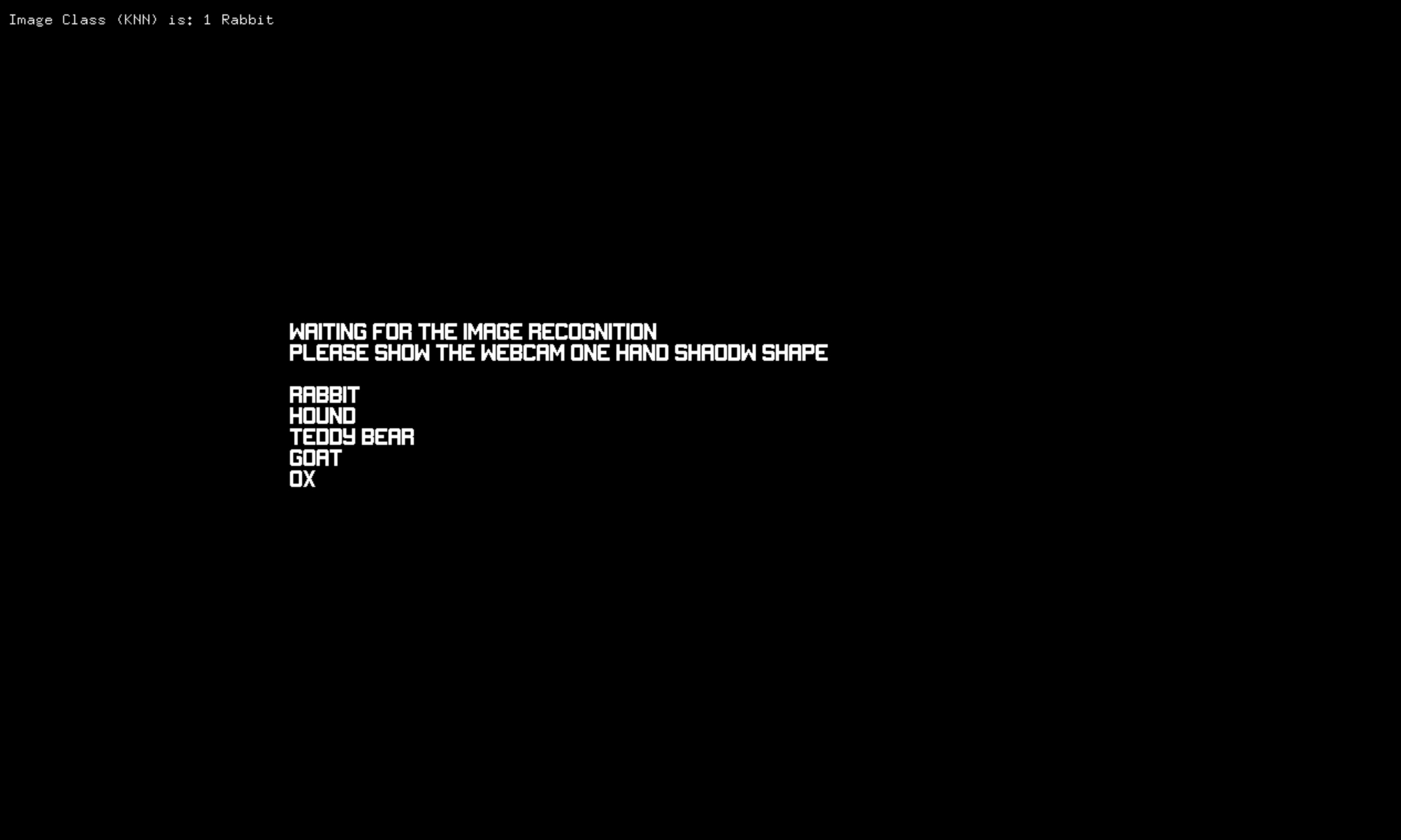

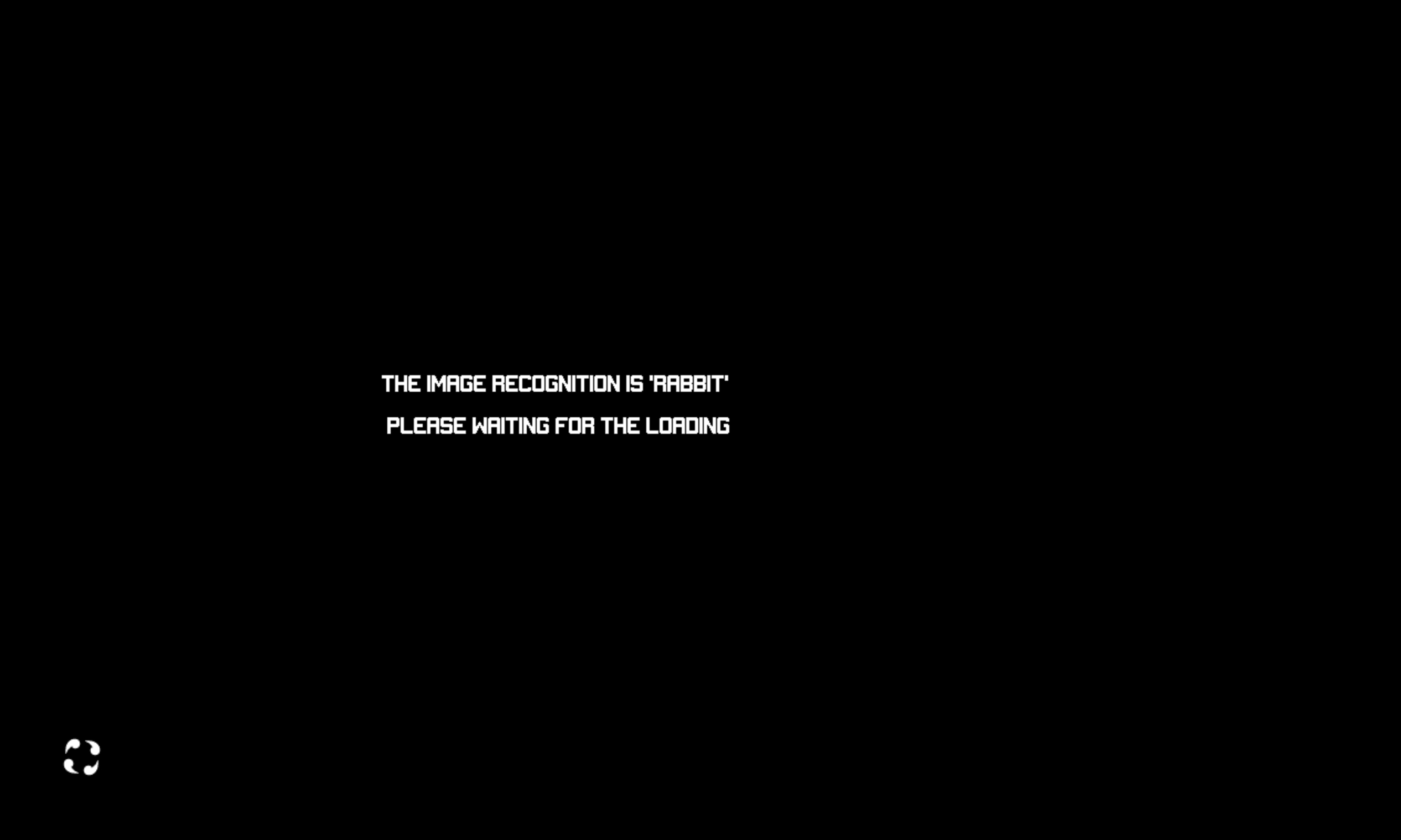

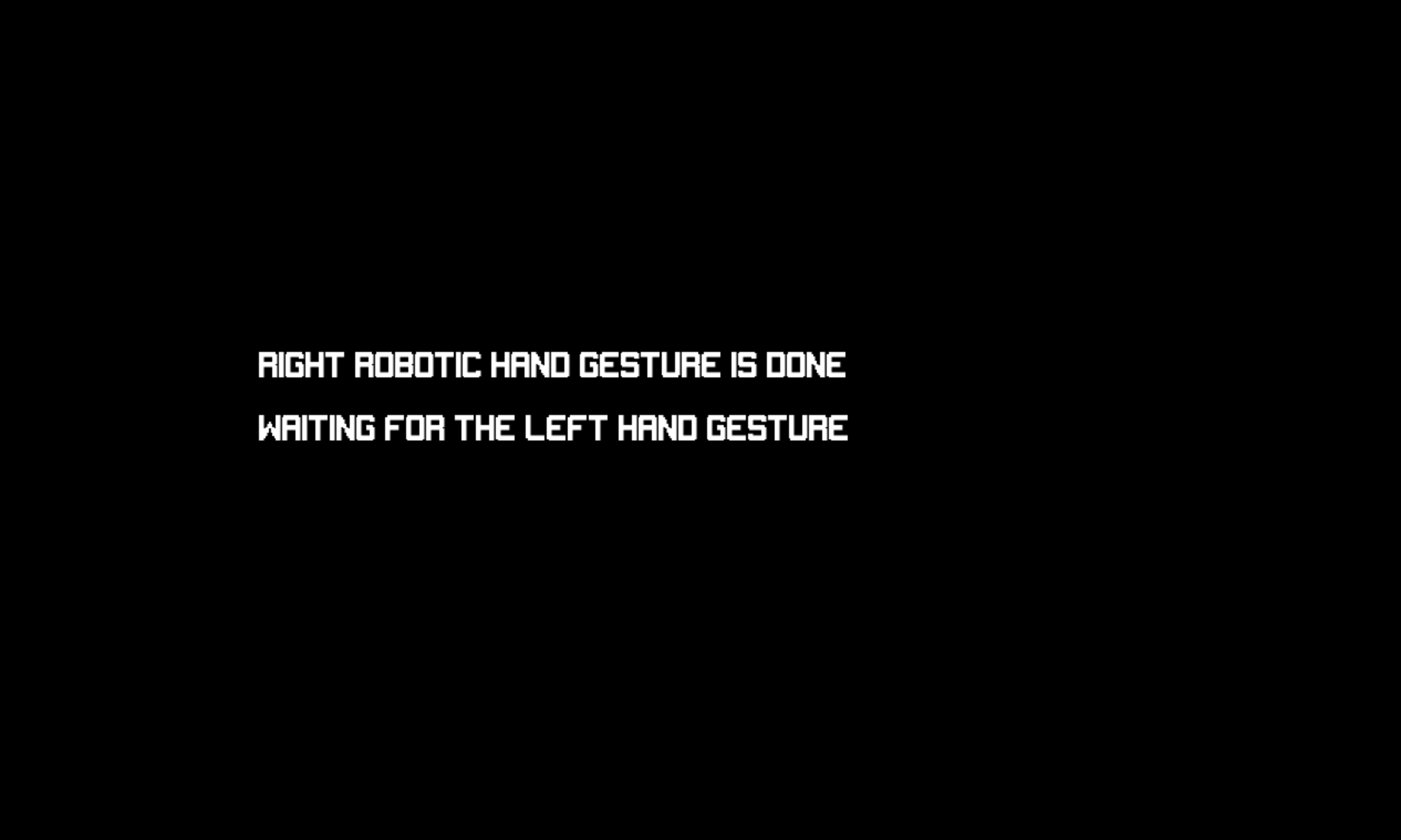

openFrameworks

The TouchOSC application controls the game panel. The start scene offers three options: Start (start the game directly), Shape (choose the shape without image detection) and Equipment (display the state of all tools). In the second scene, image detection and voice control are used to choose the theme. Once an animal shape is selected, the first manipulator starts moving and its robotic fingers move into the preset position for the corresponding shadow. After the first robotic hand stops, the other hand, which Leap Motion controls, starts up and waits for commands. Two real-time values, Target Angle and Cur Angle, are shown at the lower right corner of the panel and indicate how close the match is. When the two values are close enough, the evaluation (score) appears and the final shadow puppet is displayed.
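
The comparison between Target Angle and Cur Angle can be illustrated with a small sketch. The write-up does not say how the match or the score is computed, so everything here is an assumption: the angles are taken as one value per finger in degrees, closeness is measured as the mean absolute difference, and the score falls linearly from 100 to 0. The function names, the tolerance and the scoring rule are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Mean absolute difference, in degrees, between the target pose
// and the current pose (one angle per finger).
double meanAngleError(const std::vector<double>& target,
                      const std::vector<double>& current) {
    assert(!target.empty() && target.size() == current.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < target.size(); ++i) {
        sum += std::fabs(target[i] - current[i]);
    }
    return sum / static_cast<double>(target.size());
}

// True when the current pose is within toleranceDeg of the target on average.
bool isMatched(const std::vector<double>& target,
               const std::vector<double>& current,
               double toleranceDeg) {
    return meanAngleError(target, current) <= toleranceDeg;
}

// Score in 0..100: 100 for a perfect match, falling linearly to 0
// once the mean error reaches maxErrorDeg (assumed scoring rule).
int matchScore(const std::vector<double>& target,
               const std::vector<double>& current,
               double maxErrorDeg) {
    assert(maxErrorDeg > 0.0);
    double err = meanAngleError(target, current);
    double ratio = 1.0 - std::min(err / maxErrorDeg, 1.0);
    return static_cast<int>(std::lround(ratio * 100.0));
}
```

With a target of `{90, 45, 0, 30, 60}` and a current pose of `{88, 47, 0, 30, 61}`, the mean error is 1 degree, so `isMatched` with a 5 degree tolerance returns true. A per-finger tolerance would be stricter than this averaged one; the average is used here only because it is the simplest rule to show.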

Leap Motion, Arduino, Wekinator

Leap Motion detects finger and gesture data and sends it on. The Arduino controls the robotic hands' servos. Wekinator receives the Leap Motion data, trains on it and sends the results to the openFrameworks apps.
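
One way the computer side of this pipeline could turn finger values into servo commands is sketched below. The write-up does not describe the link between openFrameworks and the Arduino, so the framing is entirely hypothetical: five servos per hand, finger curl normalised to 0..1, servo angles of 0 to 180 degrees, and a six-byte frame that starts with a `0xFF` marker. None of these names or values come from the project.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>

constexpr std::size_t kFingerCount = 5;      // assumption: one servo per finger
constexpr std::uint8_t kFrameHeader = 0xFF;  // hypothetical start-of-frame marker

// Map a normalised curl (0 = open, 1 = closed) to a servo angle in degrees.
// Out-of-range input is clamped so the servo is never driven past its limits.
std::uint8_t curlToServoAngle(double curl,
                              std::uint8_t minDeg = 0,
                              std::uint8_t maxDeg = 180) {
    assert(minDeg <= maxDeg);
    double clamped = std::max(0.0, std::min(curl, 1.0));
    double angle = minDeg + clamped * (maxDeg - minDeg);
    return static_cast<std::uint8_t>(std::lround(angle));
}

// Pack one hand pose into a frame: header byte followed by one angle per finger.
// Angles never exceed 180, so the header value cannot appear in the payload.
std::array<std::uint8_t, kFingerCount + 1> packServoFrame(
        const std::array<double, kFingerCount>& curls) {
    std::array<std::uint8_t, kFingerCount + 1> frame{};
    frame[0] = kFrameHeader;
    for (std::size_t i = 0; i < kFingerCount; ++i) {
        frame[i + 1] = curlToServoAngle(curls[i]);
    }
    return frame;
}
```

On the Arduino side, a matching sketch would wait for the header byte, read the next five bytes and pass each one to the corresponding servo. Keeping the header outside the valid angle range lets the receiver resynchronise if a byte is dropped.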

Leap Motion & Wekinator & Robotic hand

Image Detection & Choose shape theme

Start Scene Panel

Display & Component print & Images for detection

Future development

Because I did not consider the manipulator's limitations at the outset, the shadowgraphy is presented in a virtual environment. In the future I would like to make a shadow puppetry collaboration that can be realised physically. I also did not finish some of my original ideas for custom panels and interactions with the machines. The original plan included some dialogue features, but these were removed due to time constraints.

Self evaluation

I am satisfied with most of what the work has achieved. The project also took me a stride forward in using openFrameworks addons and in interacting with other applications. I could feel the difference before and after the struggle with debugging, which forced me to keep going. I'm looking forward to what I will learn in term three, and I would like to make more work of this kind.

References

Week 17 Machine Learning Lab Assignment: https://learn.gold.ac.uk/course/view.php?id=16022&section=18

ofxLeapMotion2 addon: https://github.com/genekogan/ofxLeapMotion2

Hand Shadow Puppetry gallery: https://etc.usf.edu/clipart/galleries/266-hand-shadow-puppetry

Wekinator Leap Motion examples: http://www.wekinator.org/walkthrough/