Can You See What I Am Hearing?

A Critical Framework for Algorithmic Synesthesia and Multisensory Art

By Max Jala, Jinyuan Li, Cheng Yang, Byron Biroli

I. Abstract

We are interested in providing a critical framework for the synesthetic experience in non-synesthetes through algorithmic synesthesia and multisensory art, examining the role of culture in finding meaning and discerning sensory and aesthetic value. We also seek to identify any synergies or universalities across neurological and cultural boundaries.

Synesthesia takes input in one sense and translates it into a perceptual experience in another. It is a rare condition occurring in approximately 1 in 2,000 people (American Psychological Association). However, through algorithmic synesthesia, the synesthetic experience becomes ubiquitous.

We want to do more than translate one input into another, e.g. sound data into visuals: we want to articulate the complexity afforded us through the ecology of computational art. This means going beyond processes and procedures to examine the biases in how we perceive, experience and translate sensory inputs differently according to societal, technological, temporal and neurological phenomena.

We highlight the role culture plays in shaping our perception of sensory input, and how artists who are aware of this role can also use it to manipulate their audiences.

II. Concept

Synesthesia

The word synesthesia comes from the Greek for “to perceive together”. A synesthete is a person with an involuntary neurological condition in which the brain processes information in one form, giving way to a perceptual experience in another (Sagiv 2005). It is a rare condition occurring in approximately 1 in 2,000 people (American Psychological Association). People with synesthesia experience a “blending” of their senses when they see, smell, taste, touch or hear (Gaidos 2009). The experience ranges from the simple, such as perceiving sounds as visuals or tasting words, to the complex, such as hearing a trumpet and perceiving time projected in space, or feeling sensations on the skin when imagining something.

Multimodality & Cross-Modal Interaction

In non-synesthetes, input in one sensory modality complements or enhances perception in other modalities. Although non-synesthetes do not experience the transcoding of one sensory input into a different sensory output, they also do not experience sensory inputs in isolation. This universal experience of ‘perceiving together’ is known as a multimodal experience. For example, with our backs turned, in the dark, we can hear a fire crackling and feel its warmth; we know it is a fire and can imagine the flames in our mind. Cross-modal interaction describes the neurological processes of relaying sensory inputs and interpreting sensory outputs that take place in synesthetes and non-synesthetes alike.

Interestingly, visual information can override auditory information in localization tasks. For example, when watching a movie, we perceive speech as coming from the actor's mouth rather than from the speakers to the left and right of the screen (Sagiv). These examples point to what may be genetically inherent biases toward specific sensory modalities that dominate cross-modal experiences, particularly in non-synesthetes. We presume that, with sufficient research on synesthetes, similar markers toward particular synesthetic experiences would be identified.

Algorithmic or Digital Synesthesia

As computational artists, we are interested in the complexity afforded us through the medium of software art, and in how a genuine synesthetic experience is in fact made ubiquitous through human-machine interaction.

The ecology of algorithm, hardware, software and human imagination gives artists the opportunity for complex, multimodal expression. Here the neurological processes of cross-modal interaction are replaced by manipulations via interfaces: an input is captured by the chosen hardware and then transcoded into another output, or into multiple outputs simultaneously.

Sonification

Sonification has enlarged the multimodal palette, allowing us to take almost any information or sensory input and transcode it into almost any output. For example, text data from the diaries of World War Two ship captains could be transcoded into a chatbot, whose output could then be transmitted as haptic vibrations on a sensory device that resonate as an audible, abstract frequency.

Today, non-synesthetes can hear income inequality, experiencing abstract cross-modal interactions akin to those of a synesthete. New York City-based data visualization artist Brian Foo coded a song to life using median income numbers from the U.S. census. The piece renders audible the crescendos of class as the 2 train travels through boroughs like Brooklyn and the Bronx, and areas like Wall Street (Kelly Kasulis, mic.com).
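Foo's actual mapping is not described here, but a common sonification technique is parameter mapping, where each data value is rescaled onto a musical parameter such as pitch. The minimal sketch below assumes a linear mapping of values onto MIDI note numbers and equal-tempered tuning (A4 = MIDI note 69 = 440 Hz); the function names, note range and example values are hypothetical illustrations, not Foo's code or census data.

```python
def map_linear(value, lo, hi, out_lo, out_hi):
    """Linearly rescale value from the range [lo, hi] to [out_lo, out_hi]."""
    if hi == lo:
        # All data values are equal: place them in the middle of the output range.
        return (out_lo + out_hi) / 2
    return out_lo + (value - lo) * (out_hi - out_lo) / (hi - lo)


def midi_to_hz(note):
    """Convert a MIDI note number to a frequency in Hz (A4 = 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


def sonify(values, low_note=48, high_note=84):
    """Hypothetical parameter mapping: higher data values become higher pitches.

    Returns a list of (midi_note, frequency_hz) pairs, one per data value.
    """
    if not values:
        return []
    lo, hi = min(values), max(values)
    notes = [round(map_linear(v, lo, hi, low_note, high_note)) for v in values]
    return [(n, midi_to_hz(n)) for n in notes]
```

For instance, `sonify([20000, 50000, 80000])` yields MIDI notes 48, 66 and 84, so the highest value sounds three octaves above the lowest. Mapping linearly onto note numbers rather than onto raw frequency is a deliberate choice: equal steps in the data then sound like equal musical intervals.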

Cultural Difference in Perception

The central question for us as researchers and computational artists is not “how” to implement algorithmic synesthesia but “what” to implement (Dean et al. 2006).

The “what” is of crucial interest to us as artists. Beyond any notional genetic predispositions, we are acutely aware of the anthropology of perception and of the innate biases that differ between cultures. When we focus less on the dominance of one sense over another and instead analyse our central question of “what” the input or output is and “how” it is perceived by each individual, a further question emerges: “why” we interpret inputs as we do as they are relayed through our nervous system in a series of interpretations. Answering it reveals biases that reflect the complexity of how we experience the world depending on where we are situated.

Socio-Temporal Cultural Iconography

Historical conditions and technology influence human perception. We are conditioned both by an agreed set of cultural norms and by technological progression itself. It follows that the ways in which people perceive the world vary as cultures vary (Constance Classen).

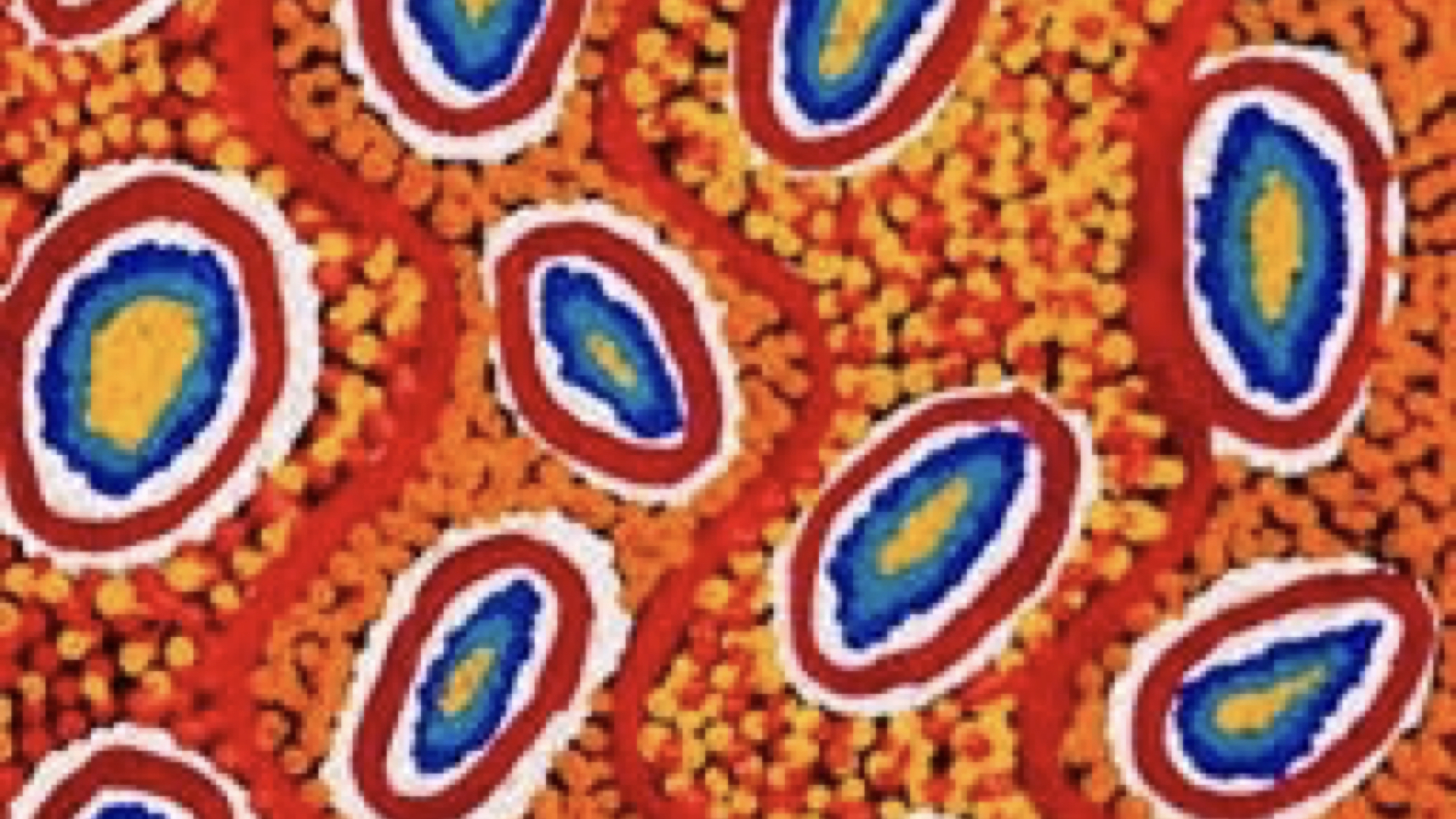

Over many millennia, each culture has carefully devised codes, symbols or iconography that have specific meanings. Many of these symbols developed in cultural isolation; however, because information travels, there are notable convergences in meaning between cultures. Take, for example, contemporary Indigenous Australasian and contemporary European cultures. Suppose we were to ask contemporary artists from both of these cultures to explain the meaning behind the image above.

It would be reasonable to predict that the European might refer to pointillism, molecular biology or red blood cells, whereas the Australasian might point to the red earth of the Australian bushland and Aboriginal Dreamtime paintings. If this image were then an output in a sonification artwork that also had low tones, what meaning would each of these two contemporary artists interpret? What would the low tones represent? Could they be a didgeridoo, or a container ship? It is reasonable to expect that the meaning would differ markedly between the two artists, and more so between peoples from different periods in history.

As software artists, we always balance our awareness of the possibilities offered by the seemingly endless processes, procedural formats and complexity of computational art against the meaning of inputs and outputs, and against how that meaning can be interpreted and manipulated. Knowledge of, and research into, the meaning of sensory inputs and outputs, counterbalanced by their purposeful manipulation, is the privilege given to us as multimodal designers.

III. Artefact

Our artefact is an audiovisual demonstration of the cultural bias that occurs in perceptual association, which also attempts to apply some of the known perceptual patterns that occur when hearing particular sounds. The visuals play with the idea of sensory binding, leaving clues to objects by distorting and partially concealing images.

Jinyuan Li and Adam Macpherson (a friend, not a group member) created the sound pieces, combining random, generic and ‘culturally specific’ audio samples that were distorted to different degrees.

Cheng Yang and Max Jala created the visuals in response to the sound pieces; the visuals are algorithmically synesthetic in that they are audio-reactive. During creation, neither visual artist knew what the samples were, inviting each individual’s personal cultural bias to contaminate the pieces.
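The tools used for the audio-reactive visuals are not specified here. As a minimal sketch of the kind of mapping the two samples describe (lower sound profiles with darker colours, higher ones with more vibrant colours), the code below assumes one frame of audio samples in the range [-1, 1], uses root-mean-square (RMS) amplitude as loudness and zero-crossing rate as a crude proxy for how “high” a sound is, and maps these to a hypothetical shape size and HSV colour. All function names and constants are illustrative assumptions.

```python
import colorsys
import math


def rms(frame):
    """Root-mean-square amplitude of one audio frame (samples in [-1, 1])."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs that change sign.

    A crude proxy for pitch or brightness: higher sounds cross zero more often.
    """
    if len(frame) < 2:
        return 0.0
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
    return crossings / (len(frame) - 1)


def frame_to_visual(frame):
    """Hypothetical mapping: loudness -> shape size, 'height' of sound -> colour.

    Low sounds give dark, muted blues; high sounds give brighter,
    more saturated purples and magentas.
    """
    loudness = min(rms(frame), 1.0)
    height = min(zero_crossing_rate(frame) * 4, 1.0)  # scale factor 4 is arbitrary
    hue = 0.6 + 0.3 * height          # 0.6 is blue, 0.9 is magenta
    saturation = 0.4 + 0.6 * height   # more vivid for higher sounds
    value = 0.2 + 0.8 * height        # darker for lower sounds
    r, g, b = colorsys.hsv_to_rgb(hue, saturation, value)
    size = 10 + 90 * loudness         # shape size in arbitrary units
    return {"rgb": (r, g, b), "size": size}
```

Called once per frame in a render loop, this would make a low 110 Hz tone produce a dim blue shape and a 1760 Hz tone a brighter, more saturated one. Because the colour choices are fixed by whoever writes the mapping, they carry that person's associations, which is the kind of bias the artefact sets out to expose.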

Audio-visual sample A:

This sample has a lower sound profile, which we related to darker colours and more fluid shapes. You can see a crowd of people who have finished work getting off the subway and hurrying from the underground platform toward the station exit, past walls of grey china tiles. Fireworks bloom in the deep blue sky, and you may see football hooligans on a big digital screen just across the street.

Audio-visual sample B:

This sample has a more East Asian character and is based on the style of city pop music. With a higher sound profile, it relates to more vibrant colours: neon, purple, a colourful night. It also contains more structured and sharper shapes. You can see a train running beside the sea, and people who, after a whole day of work, are going home or hanging out with their friends. It is a night that can endlessly absorb the day’s tiredness and offer a lasting, still space in which people can freely release stress. You can also hear different languages, English and Mandarin, as if immersed in a night in a city of diverse cultural backgrounds.

IV. Podcast

Bibliography

Carpenter, S. (2001). Everyday fantasia: The world of synesthesia. Monitor on Psychology, 32(3). http://www.apa.org/monitor/mar01/synesthesia

Classen, C. (1993). Worlds of sense: Exploring the senses in history and across cultures. London: Routledge.

Classen, C. (1997). Foundations of the anthropology of the senses. International Social Science Journal, 49(153), 401–412. https://doi.org/10.1111/j.1468-2451.1997.tb00032.x

Dean, R. T., Whitelaw, M., Smith, H., & Worrall, D. (2006). The mirage of algorithmic synaesthesia: Some compositional mechanisms and research agendas in computer music and sonification. Contemporary Music Review, 25(4), 311–327.

Gaidos, S. (2009). The colorful world of synesthesia. Science News for Students. https://www.sciencenewsforstudents.org/article/colorful-world-synesthesia

Marks, L. E. (1975). On coloured-hearing synaesthesia: Cross-modal translations of sensory dimensions. Psychological Bulletin, 82, 303–331.

Ramachandran, V. S., & Hirstein, W. (1999). The science of art: A neurological theory of aesthetic experience. Journal of Consciousness Studies, 6(6-7), 15–51.

Sagiv, N., Heer, J., & Robertson, L. (2006). Does binding of synesthetic color to the evoking grapheme require attention? Cortex; a Journal Devoted to the Study of the Nervous System and Behavior, 42(2), 232–242.

Sagiv, N., & Ward, J. (2006). Crossmodal interactions: Lessons from synesthesia. Progress in Brain Research, 155, 259–271.

Sosnowska, E. (2015). Touch, look and listen: The multisensory experience in digital art of Japan. Journal of Science and Technology of the Arts, 7(1), 63–73. https://doi.org/10.7559/citarj.v7i1.147

Ward, J., Huckstep, B., & Tsakanikos, E. (2006). Sound-colour synaesthesia: To what extent does it use cross-modal mechanisms common to us all? Cortex, 42, 264–280.

Ward, J., Moore, S., Thompson-Lake, D., Salih, S., & Beck, B. (2008). The aesthetic appeal of auditory-visual synaesthetic perceptions in people without synaesthesia. Perception, 37(8), 1285–1296. https://doi.org/10.1068/p5815

Whitelaw, M. (2008). Synesthesia and cross-modality in contemporary audiovisuals. The Senses and Society, 3(3), 259–276. https://doi.org/10.2752/174589308X331314