The Lament Configuration: The Next Killer App

by: James Treagus

In early 2021 a colleague came to me with an interesting story. They had heard rumours that an anonymous group of programmers was working on an app with the potential to kill. My initial reaction was one of scepticism. Such an endeavour sounded more like an abandoned plot line from Charlie Brooker’s techno-horror anthology Black Mirror than a real-life concern. However, being more than familiar with the internet’s propensity for blurring the boundaries of fiction, I decided to take the bait and investigate. This app, if it existed, was obviously not the creation of a mainstream tech company, and it was unclear why or how such a program might be created, or who would choose to do so.

After following the trail of rumour through the usual internet backwaters on Reddit, 4chan and dark web forums, I at last received a reply to one of my many emails from someone agreeing to be interviewed about the app and its development, and to provide me with a copy of the software. What follows is that interview, conducted by email between March 24th and April 10th 2021 with a user who would identify themselves only as ‘Loki’, together with my analysis of the core functionality of this so-called ‘killer app’.

Developer Interview

JT: Hi Loki, [1] thank you for agreeing to talk with me. Can you first tell me a little about yourself and why you are contributing to this app?

L: I’m a junior dev at a tech company by day, I’ve a lot of side projects I like to keep active and this is one of them. My day job is pretty boring and routine, so I’m always interested to get [sic] involved with things which are outside of the norm. I get that “an app which kills” sounds bad, but really it is just a thought experiment. We are just trying to see what is possible on the platform.

JT: What is the app? Can it really kill someone?

L: Ha, so within the team we’ve been referring to it as the lament configuration, I think it’s taken from an old horror movie that one of the guys loves, but I’ve not seen it.[2] It’s basically a standard mobile game like Candy Crush or whatever, but different people are working on different levels, so it doesn’t really have a core identity. One level might be a match-three game, another one might be more like a Snap-Chat filter. It really depends on what the level designer wants to achieve with the level. Each level requires the user to grant the app more permissions though, which are used to gather data from the player’s device. The data collected by lower levels is then made available to later ones in order to leverage the player into giving up even more info. There are for sure more effective ways to gain access to a person’s information if you really wanted to, but the challenge with the project is to keep it all within the structure of the game, so it never becomes obvious what is happening. The ultimate goal of the game is to gain complete control of the player. So the app can’t really kill anyone, but it might be possible to get them to kill themselves. Honestly I think that the possibility was originally just a joke someone on the team made, but it now seems to be this thing which we can't shake. [3]

JT: You mentioned that there is a team involved in the app development. Can you tell me how you all met?

L: I don’t know about the others, but I found the project probably much the same way you did. I heard a rumour on a forum somewhere, got curious and started digging. I joined before the suicide stuff, so it was just about user manipulation. Still kinda dark, but it didn’t really worry me too much. Since the killer app thing has been going around there have been more people showing up on the forum, some want to help, but most I think are just curious, it has started to pull the focus of the build in that direction though. Of those that get involved, there have been a couple of nut jobs, but they get banned quickly. There are probably a couple of dozen contributors at the moment, but maybe only a handful who are regularly active on the build.

JT: Could you elaborate on the undesirable people who tried to join the team?

L: Yeah, it was only a couple of instances that I knew of. One guy thought he could use the app to get revenge on his ex and was asking about the best ways to trick them into downloading it, another was posting about some really twisted sadistic shit. They both got kicked.

JT: Is it possible they might have downloaded the app before they were banned?

L: It’s possible. The thing doesn’t look anything like a finished polished product though, it’s a construction site. Really what we call the app is just a place where we can share and play with ideas, so I don’t know how much use they could have got out of it, not for what they were interested in anyway. We’ve closed all the development files though. The build was being downloaded by people who weren’t contributing and I don’t think any of us were comfortable with that. [4]

JT: If the original aspiration for the app was not the death of the user, how did members of the team react on realising that it was a possible end result? How do you feel about working on something that could be used in that way?

L: Some people left. But like I said, it was always an experiment in user manipulation, so I think if you were on board with that from the start you kinda knew that it could go to some dark places. I definitely had a bit of an ‘oh shit’ moment, but this thing is never going to be deployed. Plus, the tech is already out there, we aren’t inventing anything new. A lot of the manipulation techniques that get implemented are taken from other places, even mainstream apps like Facebook and Instagram which run their own version of manipulations. We just reskin them a bit. [5] The company I work for does extensive testing to make sure that what they think will happen when the user gets their hands on the app is what actually happens. We don’t do that, so we take stuff that is already tested, then all the feedback on ideas is within the group and in the end it’s just opinion on if we feel something would work or not. If the government or someone really wanted to do this for real they could just as easily as we are. They might have already for all we know. [6]

Build Analysis

With an eye to the ethical implications of making the specifics of the application public, I cannot, as I would like, share an in-depth analysis of each of its levels and of how they interact to continually leverage the user into giving over more access to their device’s functions. Instead, I will briefly discuss an example of the tactics used in one of the early levels, and then move on to the wider app ecosystems which make such manipulations possible. The following analysis is of an Android version of the application.

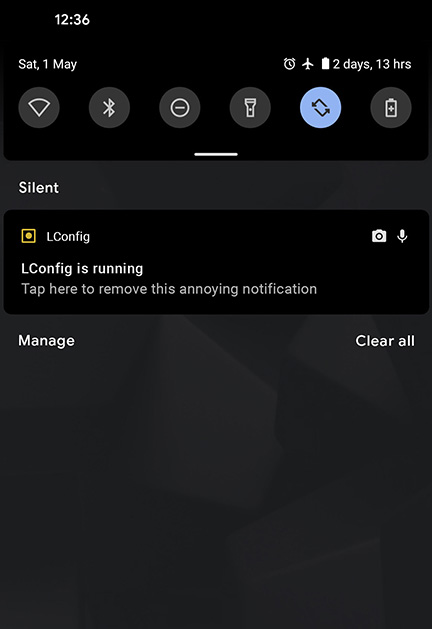

Each of the game’s levels appears as an independent puzzle. The aim of one of the first levels (from the designer’s point of view) is to get the user to allow the app to run continuously in the background. This establishes a persistent base which later levels use to record audio and video without the user’s knowledge. To accomplish this, the level mimics a Tamagotchi-style game, in which the user must keep a digital creature alive by providing for its simulated needs. The creature’s persistent nature provides a plausible justification for the application’s ‘always on’ status, and a strong incentive for the user to keep it running in the background (figure 1).

Figure 1: The disarming nature of the creature helps to encourage users to keep the app running, signalled by the notification icon on the top bar.

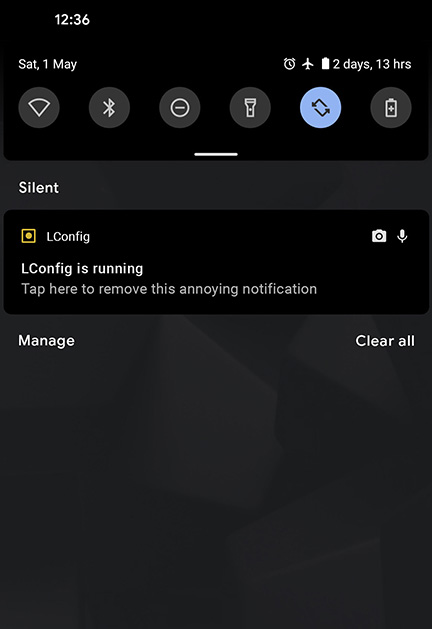

Android’s security features display a notification for applications running in the background. This notification indicates when such apps are using the microphone or camera, allowing the user to monitor that activity. Further, it cannot be dismissed by swiping it away, as normal notifications can, and it remains until the corresponding application stops running. While it might seem legitimate to the user that the app is constantly active, and this notification present, during this level, once the level is complete the user would expect the app to no longer need this functionality, and the notification to disappear. The app therefore needs to hide this security notification.

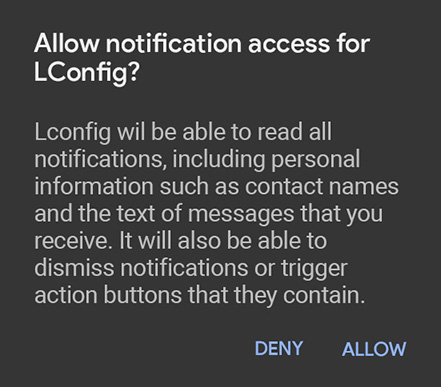

It achieves this by exploiting the user’s ‘notification anxiety’: the Pavlovian response triggered by the presence of a notification, and the resulting anxiety induced by seeing the notification icon but being unable to dismiss it (Fitz et al. 2019; Iyer and Zhong 2021). The app presents custom text within the notification instructing the user to “tap here to remove this annoying notification” (figure 2). This leading language is intended to fix in the user’s mind that the notification is indeed annoying, a judgement they will be reminded of every time they check any of their other notifications. If the user accedes and has the app remove the notification, they also grant it access to “read all notifications, including personal information such as contact names and the text of messages you receive” (figure 3).

Figure 2: Android notification screen showing the Lament Configuration running in the background. While the notification remains enabled, icons clearly alert the user that the app is using the microphone and camera.

Core to the app’s functionality, then, is the way devices handle permissions to access data. In particular, it exploits the many instances in which a permission needs to be granted only once and is then maintained in perpetuity without the user needing to restate their consent. Android, for example, splits its permission handling into ‘install-time permissions’ and ‘runtime permissions’. Install-time permissions are granted when the app is installed, but cannot grant access to ‘dangerous’ features; these can only be accessed via runtime permissions, which the user must grant while the app is running, at the point the app needs the data. As of Android 11, location, microphone and camera permissions can be granted on a one-time basis, so that access must be given afresh each time; however, choosing this option is left to the user. Other permissions, once granted at runtime, persist unless the app goes unused for one month, at which point they reset (‘Permissions on Android’ n.d.). Apple, by contrast, requests permission to access sensitive data only the first time an app tries to do so; once granted, permission continues to be given by default on subsequent attempts (‘Requesting Access to Protected Resources | Apple Developer Documentation’ n.d.).
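
The persistence rules summarised above can be made concrete with a toy model. The following is a minimal, hypothetical Python sketch, not Android’s API or implementation: the names `Grant`, `Permission`, `grant` and `is_allowed` are invented for illustration, the set of one-time-eligible permissions follows the Android 11 list given above, and the 30-day `AUTO_RESET` window is an assumption encoding the one-month figure cited here rather than a value read from the platform.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from enum import Enum
from typing import Optional


class Grant(Enum):
    """Kinds of consent in this toy model (hypothetical names, not Android's API)."""
    INSTALL_TIME = "install-time"  # given at installation; never covers 'dangerous' features
    RUNTIME = "runtime"            # given once when first needed, then persists
    ONE_TIME = "one-time"          # Android 11+ option: valid for the current session only


# Assumed constants for the model, not values read from the platform.
ONE_TIME_ELIGIBLE = {"location", "microphone", "camera"}
AUTO_RESET = timedelta(days=30)  # the 'one month' of non-use cited in the text


@dataclass
class Permission:
    name: str
    dangerous: bool
    granted_as: Optional[Grant] = None


def grant(p: Permission, kind: Grant) -> None:
    """Record the user's consent, rejecting grant types the rules do not allow."""
    if kind is Grant.INSTALL_TIME and p.dangerous:
        raise ValueError(f"{p.name} is 'dangerous' and needs a runtime grant")
    if kind is Grant.ONE_TIME and p.name not in ONE_TIME_ELIGIBLE:
        raise ValueError(f"{p.name} cannot be granted on a one-time basis")
    p.granted_as = kind


def is_allowed(p: Permission, last_used: date, today: date, in_session: bool) -> bool:
    """Would access succeed today without asking the user again?"""
    if p.granted_as is None:
        return False                           # never granted: the user must be asked
    if p.granted_as is Grant.ONE_TIME:
        return in_session                      # lapses as soon as the session ends
    if p.granted_as is Grant.RUNTIME:
        return today - last_used < AUTO_RESET  # persists silently until auto-reset
    return True                                # install-time grants are not reset here


# Usage: a microphone grant made once is still honoured three weeks later.
mic = Permission("microphone", dangerous=True)
grant(mic, Grant.RUNTIME)
print(is_allowed(mic, last_used=date(2021, 4, 1), today=date(2021, 4, 22), in_session=False))  # True
```

What the model makes visible is the asymmetry the paragraph describes: once a runtime grant is recorded, `is_allowed` never consults the user again, and only a long stretch of non-use (never a change in what the app does with the access) withdraws it.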

The goal of these hard-coded architectures is to put the user in ultimate control of where and when permissions are granted. This is of course admirable, but it also opens the system to the kind of social engineering abuses that the Lament Configuration employs, since the user is often the biggest security weakness in any system. The developer guides for both platforms list a number of best practices which developers are encouraged to follow in order to mitigate such manipulations. Apple’s, for instance, explicitly direct developers not to engage in practices which might result in the user being tricked, or mistakenly induced, into granting an app access to data (such as the Lament Configuration’s leading language). Ironically, in doing so the guidelines also inform developers exactly how such an act might be achieved (‘Accessing User Data - App Architecture - IOS - Human Interface Guidelines - Apple Developer’ n.d.).

Figure 3: Text displayed by the Android operating system when an application requests access to notifications.

By emphasising user responsibility, both Apple and Google sidestep a considerable amount of legal and social accountability once an app is in the user’s hands, ending their oversight at the boundaries of their respective app stores. While Google’s Play Store has historically lagged behind Apple’s App Store in its app verification processes, apps submitted to both are reviewed to check that they do not contain malware or malicious content and that they comply with each company’s safety and security standards. However, since the Lament Configuration app is not downloaded through either app store, it is not subject to these safeguards.

Installing an app outside the store ecosystem is not straightforward, and manual installation may present a high barrier to entry for many users; this undoubtedly represents a further layer of protection against malicious applications. On Apple devices the user must first install a secondary app, such as AltStore (https://altstore.io/), which exploits tools intended for development testing to ‘sideload’ unverified apps. On Android devices the user must allow the browser or file manager used to download the app to ‘install unknown apps’, a setting that is off by default; alternatively, to install from a computer, they must enable ‘Developer options’ (which involves the slightly esoteric action of tapping the otherwise innocuous ‘build number’ menu element seven times) and then turn on ‘USB debugging’. Either route allows any Android-compatible app to be installed and run.

At the time of writing, however, Apple and Google are both contesting lawsuits brought by Epic Games over alleged monopolistic practices, after they pulled the hugely popular game Fortnite from their stores following Epic Games’ attempt to bypass their in-store payment systems (Albergotti 2021). Apple is also facing an antitrust investigation from the European Commission over its App Store policies (European Commission 2021). These legal actions have the potential to force both companies to allow apps to be distributed more freely outside their stores and beyond their oversight. It is perhaps coincidental that the BBC has just published an article warning of a large-scale scam in the UK which gathers personal data by tricking people into downloading an Android app from outside the app store, in exactly the manner described above (BBC News 2021).

Conclusion

So, is the mythical Lament Configuration capable of killing? Loki is likely correct in saying that the app, in its current form, will never be deployed, and were it deployed, it would fail to pose any real danger. The input of multiple uncoordinated designers is evident in an application which is inconsistent at best. The user interface varies from slick, through clunky, to non-existent, and at times its implementation of social manipulation is heavy-handed to the point of hilarity. But to write it off so easily would be to take a very narrow view of the technological ecosystem, and it is telling that throughout my analysis I made sure to keep my test device air-gapped, disconnected from any and all networks. The problem was that, despite all its shortcomings, I could not be sure that it wouldn’t do exactly what it was rumoured to do.

Although the app was assembled by a forum of anonymous developers, the technology it uses was not developed by one. That is, its pieces were manufactured separately and independently, sometimes by large tech companies with a reputation for making things which really do work. Most of the elements within the Lament Configuration are well researched, documented and freely available online if you know where to look, some of them within application developer documentation. Creating the final ‘product’ is merely an act of assembly. As in Hellraiser, all of the pieces needed for the puzzle box to function are already present, innocently inert. It is only in the particular nature of their relationships that the device earns its name and horror arises.

It is hard, and perhaps unwise, to argue against corporate transparency, yet the risk of open access to advanced technologies and processes is that applications like the Lament Configuration become ever more plausible. This plausibility in turn brings the risk that, in being taken seriously, such an application generates the very publicity which actualises it. While this particular application may or may not be dangerous, the idea of it is. Like the Blue Whale Challenge, the Lament Configuration is a point of convergence for pre-existing phenomena. Teens were congregating on internet forums to discuss self-harm before the notion of the Blue Whale Challenge arose, but the very public circulation of the idea of such a game gave those acts a locus which they had not previously possessed. So too with the Lament Configuration. It is notable that, according to Loki, most of the interest in the app arose after rumours of its deadly nature began to circulate, and that one effect of this new interest was to partially generate its cause, as though once the idea was raised, a community appeared to actualise it just to see if they could.

If, as Baudrillard supposed, these copies without originals represent a sorcery, a conjuration by their intonation, we must be increasingly careful about the words we speak and the ideas we put into the world, even if they are only thought experiments. It might be said that I myself have fallen into this trap, first by following the rumour to its source, and second by re-presenting it here; yet how is one to warn of a danger when the danger is carried in the warning itself? In closing, then, I offer a counter-sorcery, a banishing spell: The Lament Configuration does not exist, and never has, and you should not be worried about it.

Notes

[1] It should be noted that, as with most knowledge gained through the internet, Loki’s involvement with the project is entirely unverified and taken on faith alone. It would not be the first time an individual has been interviewed and positioned as an authority when in all likelihood they were an internet troll. A case in point is almost all of the reporting during the 2016 US election on Pepe the Frog as a white supremacist symbol. This was, at least initially, a hoax on the media perpetrated by 4chan, as the meme’s links with the Alt-Right were relatively tenuous until media reporting solidified the identification. Most of those interviewed who claimed to be white nationalists were likely 4chan trolls (Miller-Idriss 2018, 126–28).

[2] The name derives from Clive Barker’s horror film Hellraiser (1987) and the series it spawned. The MacGuffin which instigates the horror, acquired from a Moroccan street market (such things always appear from places deemed mysterious or hidden), is a Rubik’s Cube-like puzzle box called the Lament Configuration. When solved, the box opens a gateway to a parallel dimension, the home of the sadomasochistic Cenobites, who are unable to tell the difference between pleasure and pain. The Cenobites promise new forms of carnal pleasure, but the result is always death (‘Lament Configuration’ n.d.). The obvious comparison here is between the Lament Configuration, as shown in Barker’s films, and Pandora’s Box of Greek myth, which, when opened, released horror into the world.

[3] The escalating nature of the app’s ‘game’ bears a striking similarity to that of the Blue Whale Challenge, which briefly made headlines around the world in 2016 after a number of teen suicides were linked with it. The Challenge, reportedly first appearing on Russian social media sites, consisted of completing 50 tasks over 50 consecutive days, assigned to the player by a handler. The tasks began with relatively mundane things, such as “‘wake up in the middle of the night’ or ‘watch a scary film’”, but gradually escalated, culminating in the “demand that the user kill themselves” (Adeane 2019). As horrifying as this seems, and despite reporting which links hundreds of teen suicides to the game, there is little evidence it ever existed before it was reported (Adeane 2019). The subsequent moral panic, undoubtedly centred on a paranoia about hidden forces which can reach out to and manipulate us through our networked devices, became exactly that which it superficially appeared to be afraid of. This conclusion, that the articulation of the possibility of the event and the actualisation of the event cannot be separated, puts the Blue Whale Challenge firmly in the realm of Baudrillard’s third-order simulacra: a copy without an original, an act of sorcery (Baudrillard 1994, 6). Loki’s account suggests that this is also what has happened with their app development: to the outside observer, the intonation that the app could induce the user to suicide is indistinguishable from the actualisation of the app inducing the user to suicide, whether or not such a use case is actually possible. As they go on to describe, the idea of a killer app became almost self-fulfilling.

[4] This collective sharing of information in an unstructured development environment is not uncommon in web-based communities, and has allowed some of these projects to iterate quickly and outpace any similar commercial ventures. The most notable case is probably that of ‘deep fake’ technology, which uses machine learning to replace human faces in digital video. Indeed, the term deep fake is taken from the username of the redditor who first began posting their experiments using the technology to paste celebrity faces into porn videos. A community rapidly grew around the practice, sharing tips and code, and within a few months was able to match the output of similar Hollywood technology (Cole 2018). The rapid iteration of the technology perhaps had less to do with any carnal eroticism than with a techno-fetishism. When interviewed by Samantha Cole, one user who had developed an app to simplify the deep fake process commented:

“I think the current version of the app is a good start, but I hope to streamline it even more in the coming days and weeks, [...] Eventually, I want to improve it to the point where prospective users can simply select a video on their computer, download a neural network correlated to a certain face from a publicly available library, and swap the video with a different face with the press of one button” (u/deepfakeapp quoted in Cole 2018).

Though the mainstream tech sector is beginning to wake up to the social and ethical implications of its decisions, and is subject to judicial and investor oversight, casual hobbyists and amateurs have only their own consciences to answer to. Technology gestating within such amateur communities is often built and iterated upon just to see if it can be, with little or no regard for wider social or ethical issues.

[5] Loki is probably referring here to the various methods that social media sites use to keep users engaged, such as infinite scroll and the strategic use of metrics (such as Facebook’s ‘Like’). In an interview with Matthew Fuller, Ben Grosser discusses how these metrics are applied to shape browsing habits:

“I suspect that Facebook enumerates everything. [...] I would suggest that the primary question asked by Facebook's designers when deciding which metrics to reveal is whether a particular count will increase or decrease user participation. [...] I'm not told how many things I like per hour, or how many ads I click per day, or how effective the 'People You May Know' box is in getting me to add more friends to my network. These types of analytics are certainly a significant element within the system, guiding personalization algorithms, informing ad selection choices, etc. But would showing these types of metrics to the user make them more or less likely to participate? If the answer is less then the metric is hidden” (Fuller 2012).

This is part of a wider strategy used by apps which borrow techniques from the gaming industry, and most notably from gambling, to induce micro hits of dopamine in the user which establish a desire that can never be sated, and thus keep them returning for more (Gilroy-Ware 2017; Iyer and Zhong 2021). That Loki’s group have taken up these ideas, re-assimilating them into a gaming environment, demonstrates the ease with which conceptual, tactical and strategic cross-pollination can occur between digital environments. Though media companies such as Facebook might jealously guard the technical specifics of their algorithms, the social engineering techniques which they employ are well documented. This brings into focus an uncomfortable truth which is hardly ever broached in discussions of corporate transparency: that making such practices public only ever lowers the bar to accessing powerful and potentially dangerous technologies.

[6] While it is true that a government organisation might, on paper, have more resources to allocate to such a problem, as has been seen elsewhere in the technology industry it is often simpler to allow a small start-up to take on the initial risks and then simply buy it out once it has a proven product. The suggestion that governments deploy analogous strategies, using unaffiliated communities to develop shadowy technologies, verges on conspiracy theory, but the Russian government’s digital misinformation tactics during the 2016 US election cycle demonstrate that governments are not oblivious to the power of outsourcing what might classically be deemed intelligence work to unknowingly complicit internet communities (Mueller 2019).

References

‘Accessing User Data - App Architecture - IOS - Human Interface Guidelines - Apple Developer’. n.d. Apple Developer | Human Interface Guidelines. Accessed 1 May 2021. https://developer.apple.com/design/human-interface-guidelines/ios/app-architecture/accessing-user-data/.

Adeane, Ant. 2019. ‘Blue Whale: The Truth behind an Online “Suicide Challenge”’. BBC News, 13 January 2019, sec. BBC Trending. https://www.bbc.com/news/blogs-trending-46505722.

Albergotti, Reed. 2021. ‘Apple Is Going to Court with “Fortnite,” and It Could Forever Change How Apps Work’. Washington Post, 30 April 2021. https://www.washingtonpost.com/technology/2021/04/30/epic-apple-trial-antitrust-fortnite-app-store/.

Baudrillard, Jean. 1994. Simulacra and Simulation. Translated by Sheila Faria Glaser. The Body, in Theory. University of Michigan Press.

BBC News. 2021. ‘Flubot: Warning over Major Android “package Delivery” Scam’, 23 April 2021, sec. Technology. https://www.bbc.com/news/technology-56859091.

Cole, Samantha. 2018. ‘We Are Truly Fucked: Everyone Is Making AI-Generated Fake Porn Now’. Motherboard. 24 January 2018. https://motherboard.vice.com/en_us/article/bjye8a/reddit-fake-porn-app-daisy-ridley.

European Commission. 2021. ‘Antitrust: Commission Sends Statement of Objections to Apple on App Store Rules for Music Streaming Providers’. European Commission. 30 April 2021. https://ec.europa.eu/commission/presscorner/detail/en/ip_21_2061.

Fitz, Nicholas, Kostadin Kushlev, Ranjan Jagannathan, Terrel Lewis, Devang Paliwal, and Dan Ariely. 2019. ‘Batching Smartphone Notifications Can Improve Well-Being’. Computers in Human Behavior 101 (December): 84–94. https://doi.org/10.1016/j.chb.2019.07.016.

Fuller, Matthew. 2012. ‘Don’t Give Me the Numbers—an Interview with Ben Grosser about Facebook Demetricator’. Rhizome. 15 November 2012. http://rhizome.org/editorial/2012/nov/15/dont-give-me-numbers-interview-ben-grosser-about-f/.

Gilroy-Ware, Marcus. 2017. Filling the Void: Emotion, Capitalism and Social Media. London: Repeater.

Iyer, Ganesh, and Zemin (Zachary) Zhong. 2021. ‘Pushing Notifications as Dynamic Information Design’. SSRN Scholarly Paper ID 3585444. Rochester, NY: Social Science Research Network. https://doi.org/10.2139/ssrn.3585444.

‘Lament Configuration’. n.d. Hellraiser Wiki. Accessed 17 April 2021. https://hellraiser.fandom.com/wiki/Lament_Configuration.

Miller-Idriss, Cynthia. 2018. ‘What Makes a Symbol Far Right’. In Post-Digital Cultures of the Far Right: Online Actions and Offline Consequences in Europe and the US, 123. transcript Verlag.

Mueller, Robert S. 2019. ‘Report On The Investigation Into Russian Interference In The 2016 Presidential Election. Vol 1’. Washington D.C.: US Department of Justice. https://www.justice.gov/storage/report.pdf.

‘Permissions on Android’. n.d. Android Developers. Accessed 25 April 2021. https://developer.android.com/guide/topics/permissions/overview.

‘Requesting Access to Protected Resources | Apple Developer Documentation’. n.d. Apple Developer | Documentation. Accessed 25 April 2021. https://developer.apple.com/documentation/uikit/protecting_the_user_s_privacy/requesting_access_to_protected_resources.