Cellphone

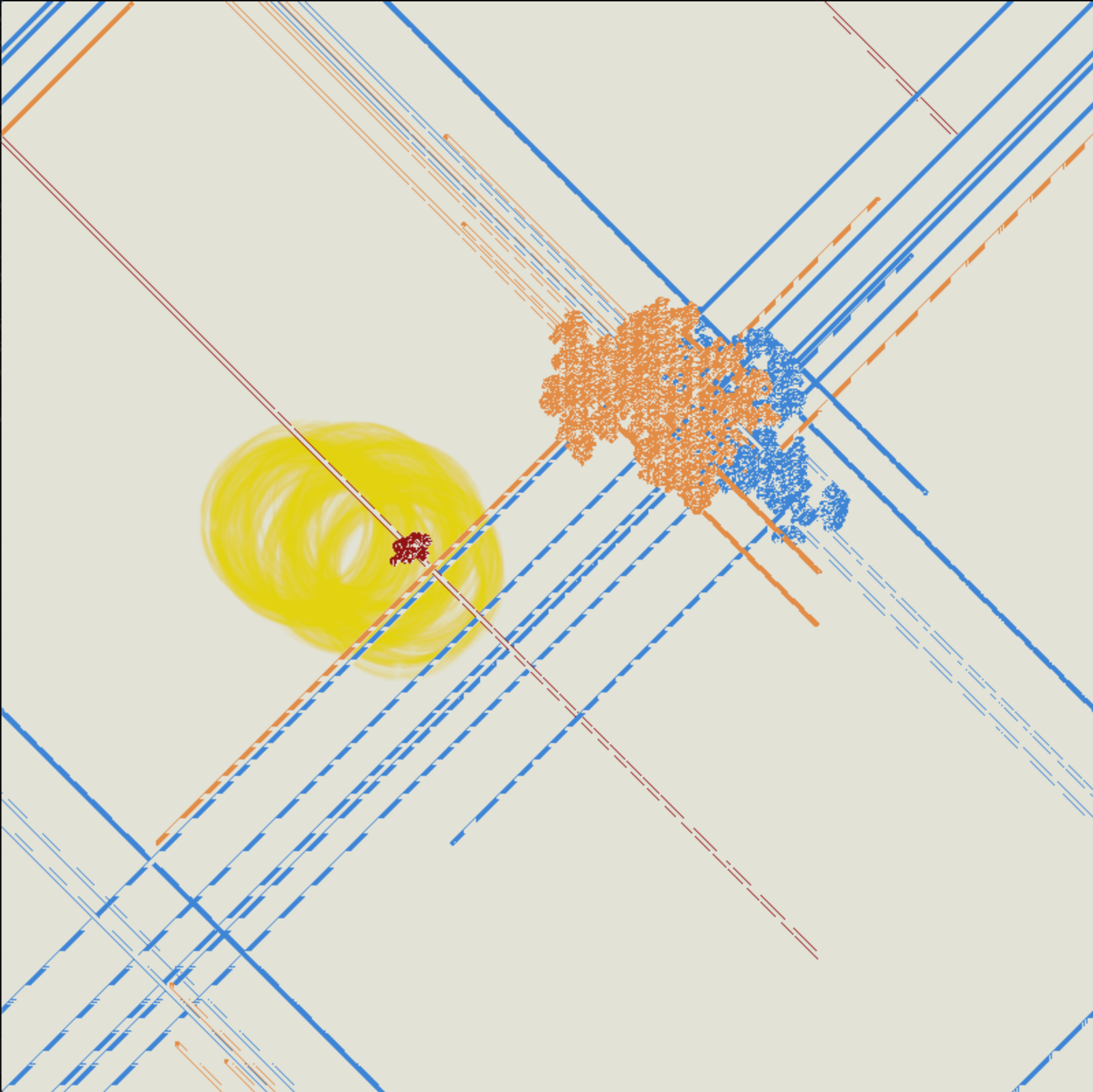

Interactive artwork whose visuals are affected by talk about the work itself. Using voice recognition and machine learning, the artwork detects when it is being spoken about.

produced by: Ankita Anand

Introduction

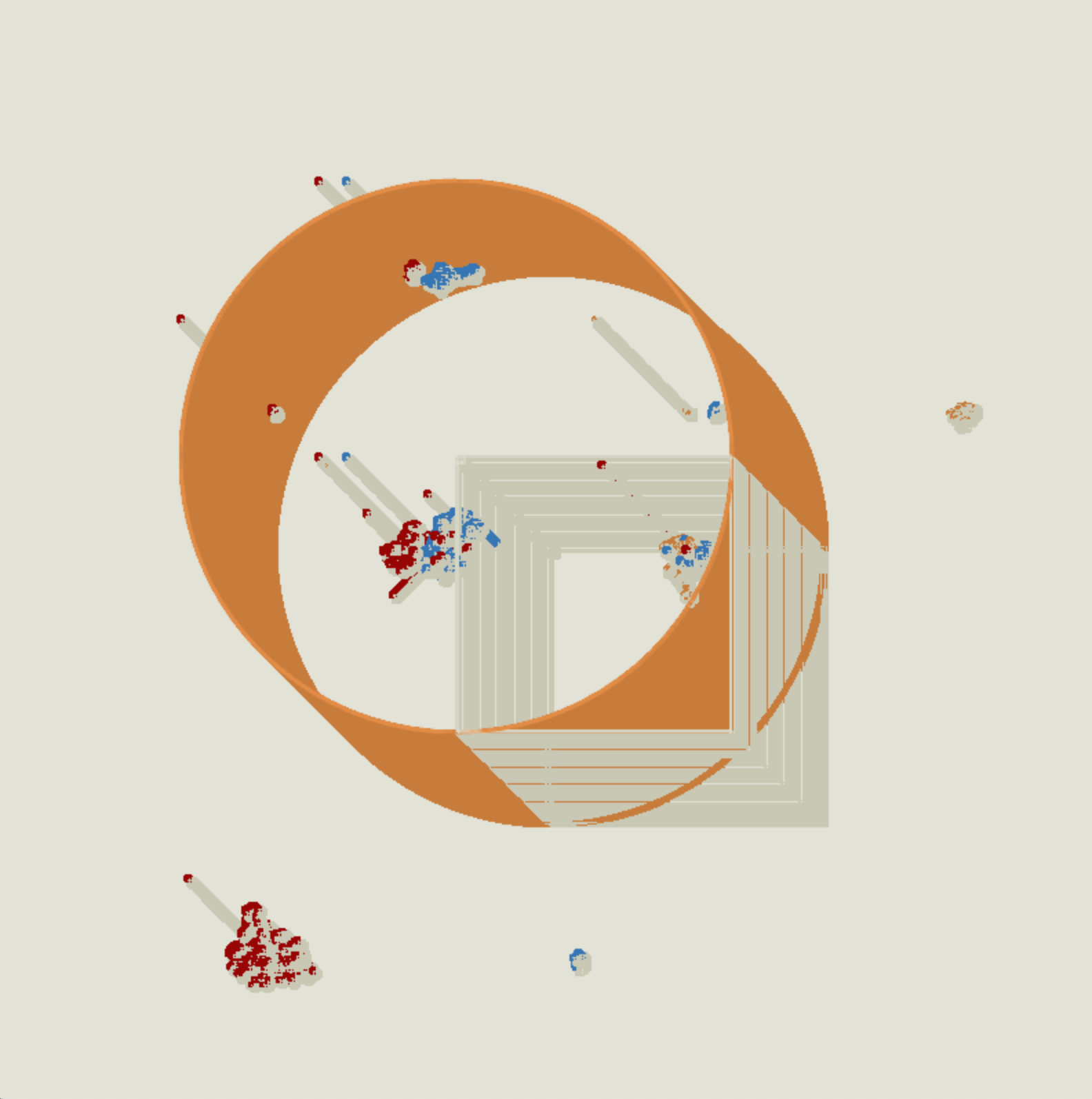

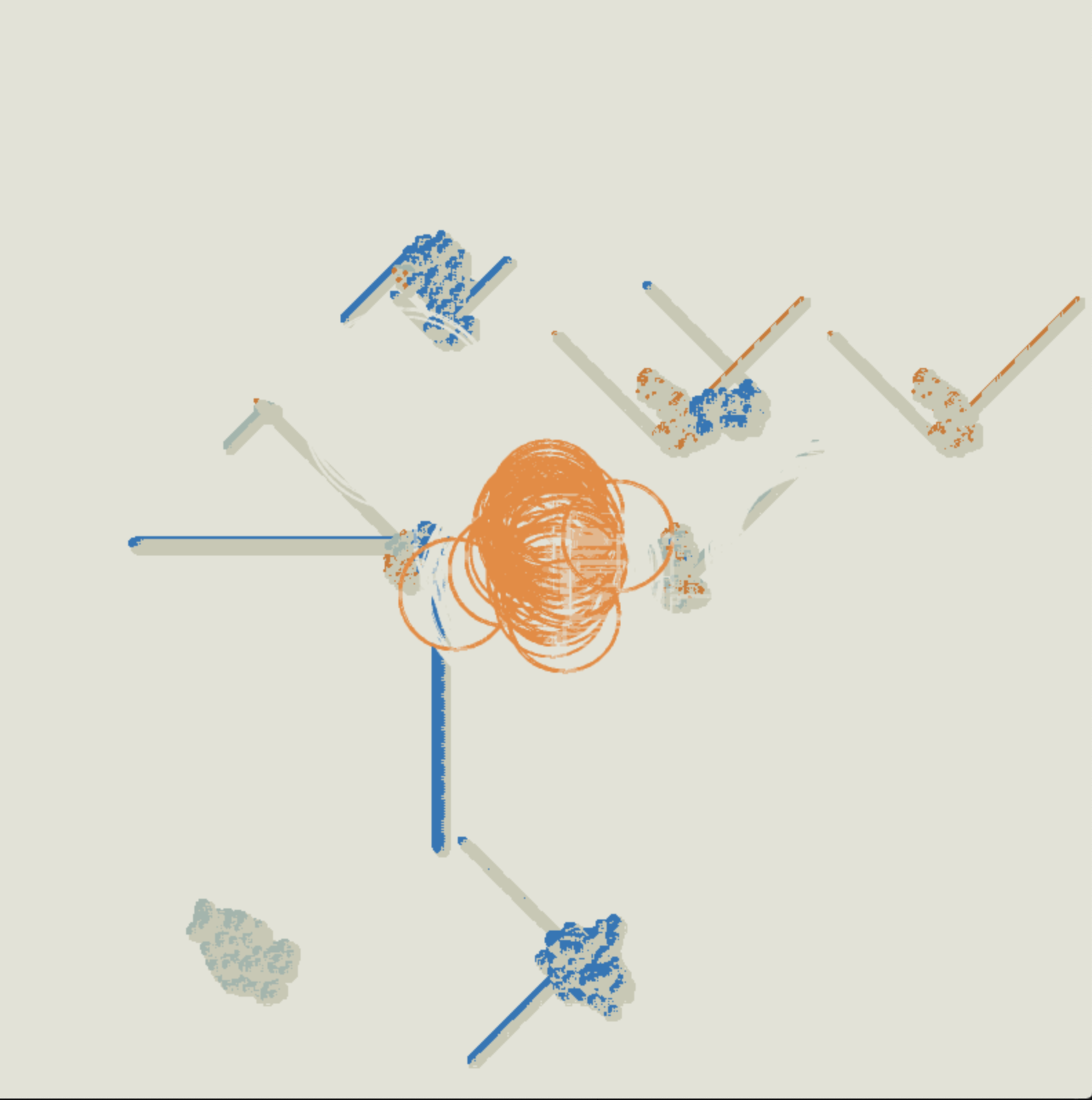

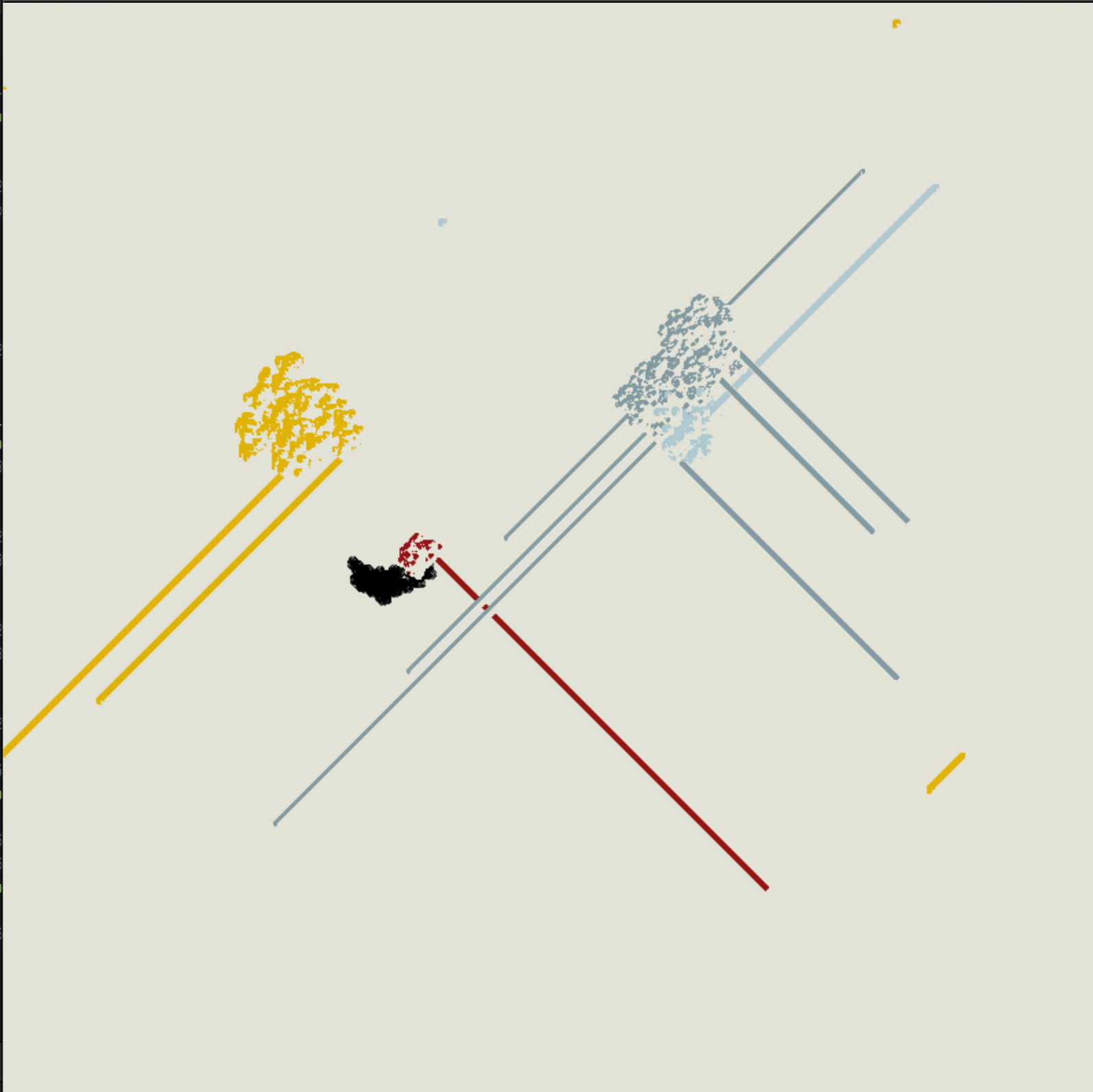

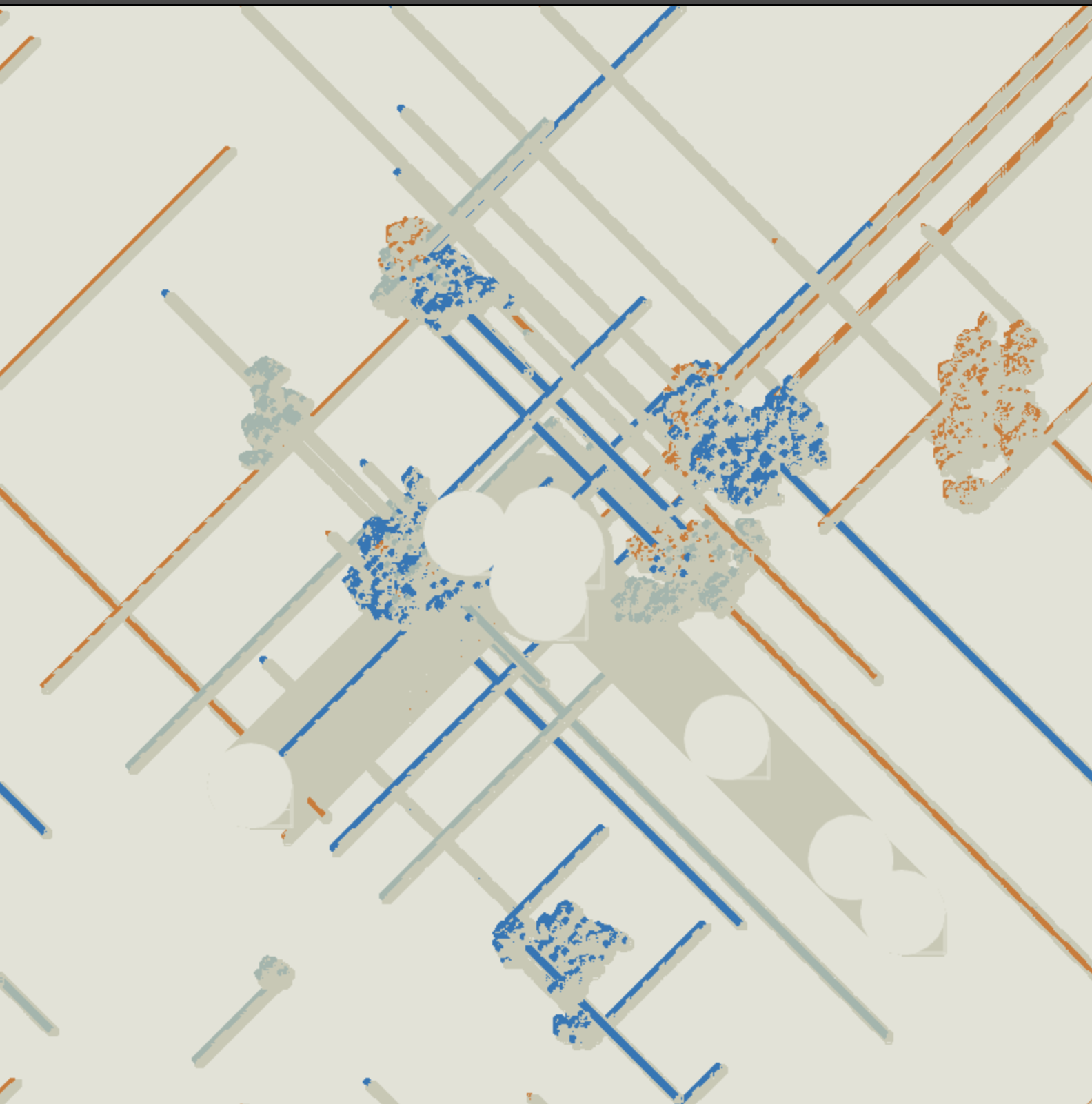

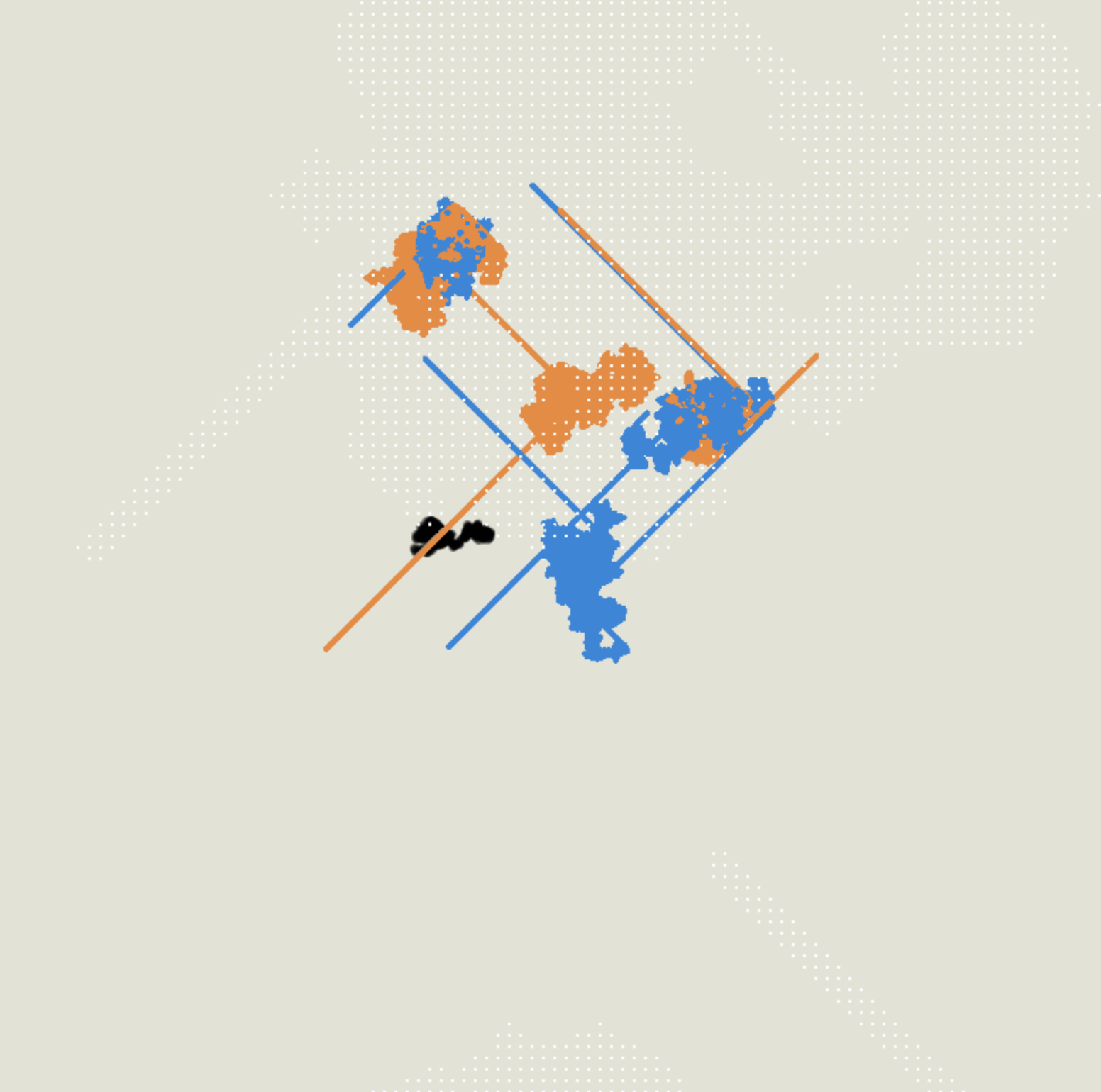

This project is visually based on John Conway's Game of Life, in which each cell on the screen lives, reproduces or dies according to a simple set of rules. New cells are triggered when particular words, often prevalent in gallery discourse, are spoken into the microphone. The audience at the exhibition therefore manipulates how the cells are generated, and in doing so dictates the aesthetic of 'cellphone'.
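
As a rough illustration of these rules, here is a minimal standard-library C++ sketch of one Game of Life generation under Conway's standard rules: a live cell survives with two or three live neighbours, and a dead cell is born with exactly three. The class name `LifeGrid`, the wrap-around edges and the two-buffer update are assumptions for illustration, not the project's actual code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch: Conway's Game of Life (rules B3/S23) on a grid whose
// edges wrap around (a torus). Not taken from the 'cellphone' source.
class LifeGrid {
public:
    LifeGrid(int w, int h)
        : w_(w), h_(h),
          cells_(static_cast<std::size_t>(w * h), 0),
          next_(static_cast<std::size_t>(w * h), 0) {
        assert(w > 0 && h > 0);
    }

    bool alive(int x, int y) const { return cells_[index(x, y)] != 0; }
    void set(int x, int y, bool on) { cells_[index(x, y)] = on ? 1 : 0; }

    // Count the eight surrounding cells that are alive.
    int liveNeighbours(int x, int y) const {
        int n = 0;
        for (int dy = -1; dy <= 1; ++dy)
            for (int dx = -1; dx <= 1; ++dx)
                if ((dx != 0 || dy != 0) && alive(x + dx, y + dy)) ++n;
        return n;
    }

    // Advance one generation. Every cell reads the old buffer and writes the
    // new one, so updates within a single step do not affect each other.
    void step() {
        for (int y = 0; y < h_; ++y)
            for (int x = 0; x < w_; ++x) {
                int n = liveNeighbours(x, y);
                bool on = alive(x, y);
                // Birth with exactly 3 neighbours, survival with 2 or 3.
                next_[index(x, y)] = (n == 3 || (on && n == 2)) ? 1 : 0;
            }
        cells_.swap(next_);
    }

private:
    // Wrap coordinates so that cells on one edge neighbour the opposite edge.
    std::size_t index(int x, int y) const {
        int wx = ((x % w_) + w_) % w_;
        int wy = ((y % h_) + h_) % h_;
        return static_cast<std::size_t>(wy * w_ + wx);
    }

    int w_, h_;
    std::vector<unsigned char> cells_, next_;
};
```

For example, a horizontal row of three live cells (a "blinker") becomes a vertical row after one call to `step()` and returns to horizontal after the next.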

Concept and background research

For this project I wanted to engage people in a more subtle and indirect interaction. I have always been attracted to self-referential art that is humorous and questions itself. My initial idea was that the visuals on the screen would be affected by their own light, creating a feedback loop. However, the readings from the photo-resistor did not fluctuate enough, so I began thinking about how I could involve the presence of the exhibition itself in the artwork. I was inspired by artworks that break 'the fourth wall' or reference themselves, one of the earliest examples being Botticelli's 'Adoration of the Magi' (1475), in which the painter himself looks out from the painting. Magritte's 'Ceci n'est pas une pipe' and Carl Andre's 'Equivalent VIII' were also inspirations, as they reference themselves.

Since the cells were an autonomous system, I wanted the system itself to be aware of its surroundings. I considered the visuals a work of art in their own right and spent most of my time on this aspect of the project. I was very enamoured with John Conway's Game of Life, which we explored in Term 2, and I played around with the code. I then came across a website (https://bitstorm.org/gameoflife) that gave me an easy way to experiment with different shapes, and this eventually led me to use gliders for my visuals, which leave a trail that accumulates over time. The aesthetics emerged while I was experimenting with various colours and shapes; the colours are loosely inspired by my Processing project from Term 1. I am fascinated by object-oriented programming and how objects can be given autonomous behaviours that result in unexpected visuals.
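
The idea of gliders leaving a trail can be sketched as follows. The hypothetical `TrailLife` struct runs ordinary Game of Life generations but also keeps a second buffer, `trail`, that marks every cell that has ever been alive and is never cleared, so a glider's diagonal path builds up over time. Keeping the trail in a separate array is an assumption made for this sketch; drawing into an FBO that is not cleared between frames could produce a similar look.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch: a wrap-around Game of Life grid plus a "trail" buffer
// recording every cell that has ever been alive.
struct TrailLife {
    int w, h;
    std::vector<unsigned char> cells, trail;

    TrailLife(int width, int height)
        : w(width), h(height),
          cells(static_cast<std::size_t>(width * height), 0),
          trail(static_cast<std::size_t>(width * height), 0) {
        assert(width > 0 && height > 0);
    }

    // Wrapped coordinates -> flat index.
    std::size_t idx(int x, int y) const {
        return static_cast<std::size_t>(((y % h + h) % h) * w + (x % w + w) % w);
    }

    void set(int x, int y) {
        cells[idx(x, y)] = 1;
        trail[idx(x, y)] = 1;
    }

    // Standard glider; with y pointing down it moves one cell right and one
    // cell down every 4 generations:
    //   . # .
    //   . . #
    //   # # #
    void seedGlider(int x, int y) {
        set(x + 1, y);
        set(x + 2, y + 1);
        set(x, y + 2);
        set(x + 1, y + 2);
        set(x + 2, y + 2);
    }

    // One generation of Conway's rules (B3/S23); live cells also mark the trail.
    void step() {
        std::vector<unsigned char> next(cells.size(), 0);
        for (int y = 0; y < h; ++y)
            for (int x = 0; x < w; ++x) {
                int n = 0;
                for (int dy = -1; dy <= 1; ++dy)
                    for (int dx = -1; dx <= 1; ++dx)
                        if ((dx || dy) && cells[idx(x + dx, y + dy)]) ++n;
                bool on = cells[idx(x, y)] != 0;
                if (n == 3 || (on && n == 2)) {
                    next[idx(x, y)] = 1;
                    trail[idx(x, y)] = 1;  // the trail is never cleared
                }
            }
        cells.swap(next);
    }

    // Number of set entries in a buffer (cells or trail).
    std::size_t count(const std::vector<unsigned char>& v) const {
        std::size_t c = 0;
        for (unsigned char b : v) c += b;
        return c;
    }
};
```

Seeded with `seedGlider(1, 1)` on a 10 by 10 grid, the glider reappears shifted one cell right and one cell down after four calls to `step()`, still made of five live cells, while `count(trail)` keeps growing.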

Technical

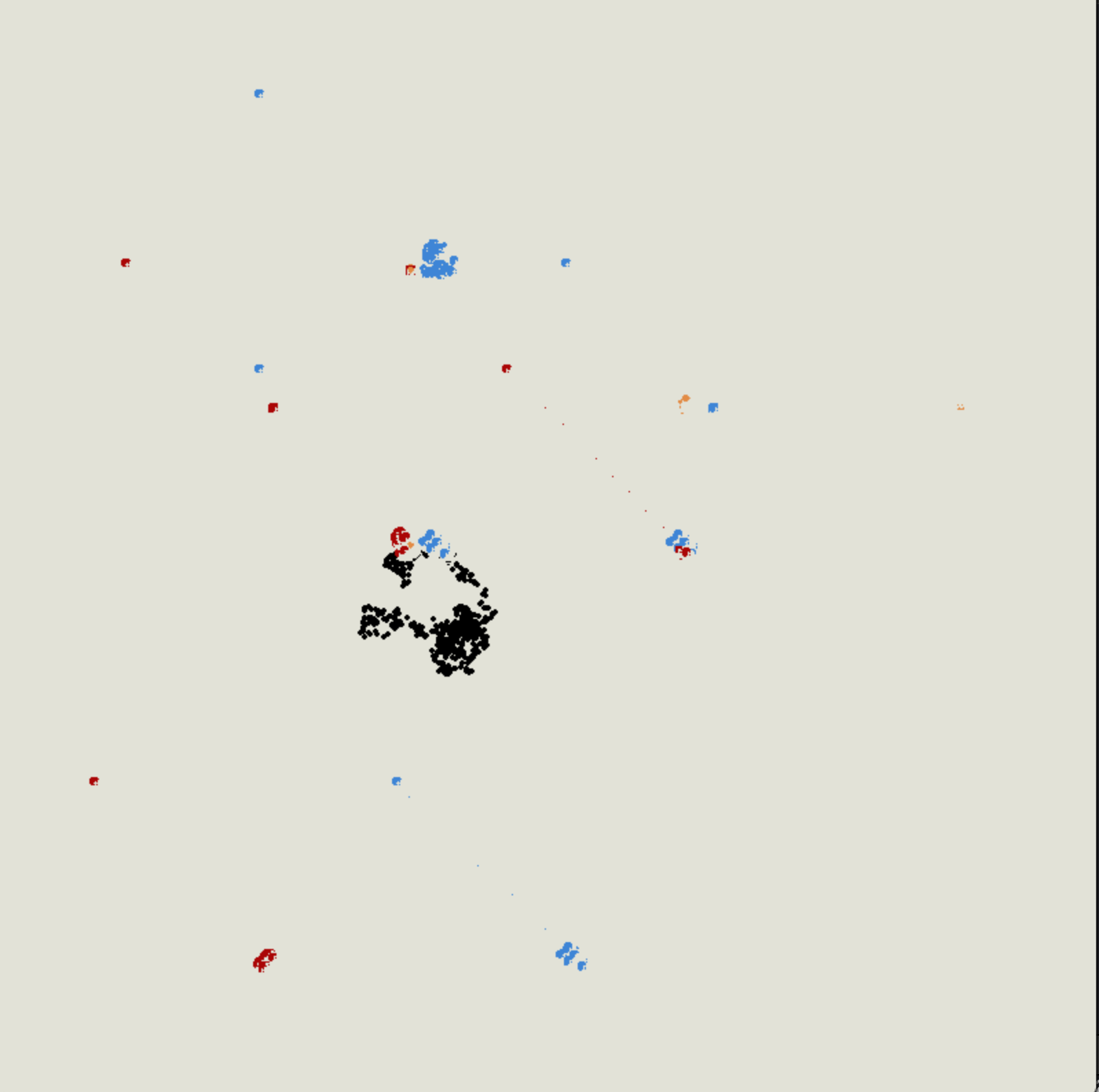

The visuals consist of several Game of Life systems: minuscule cells that reproduce and die, checking their neighbours and flipping colour according to a set of rules. Most of the technical work lay in experimenting with different cells, grids and FBOs to find out what kept the program running smoothly and what slowed it down. For voice recognition I used the voice controller add-on we worked on in class, which is based on MFCCs (mel-frequency cepstral coefficients). I trained it to recognise certain words such as 'art', 'aesthetics', 'visuals' and 'dull', each assigned to its own class. The first class recorded (class 0) contained only background noise. I had to retrain the classifier at the exhibition because everything sounded different and the noise levels kept fluctuating. Using conditional statements, I then generated more cells whenever a word's class was activated; when someone said 'dull' or 'boring', the program generated cells in the same colour as the background, giving the illusion of an 'eraser'.
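
The mapping from recognised words to new cells might look roughly like the C++ sketch below. It assumes the classifier reports an integer class id; the ids, colours, spawn counts and the names `onClassDetected`, `spawnCells` and `ColourGrid` are all hypothetical. Class 0 (background noise) does nothing, each word class scatters new cells in its own colour, and the 'dull' and 'boring' classes scatter cells in the background colour to act as an eraser.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

// Hypothetical class ids: 0 is the background-noise class.
enum WordClass { kBackground = 0, kArt = 1, kAesthetics = 2, kVisuals = 3, kDull = 4, kBoring = 5 };

struct Rgb { std::uint8_t r, g, b; };
bool operator==(const Rgb& a, const Rgb& b) { return a.r == b.r && a.g == b.g && a.b == b.b; }

const Rgb kBackgroundColour{10, 10, 30};  // assumed background colour

// A grid where each cell has a live flag and a colour.
struct ColourGrid {
    int w, h;
    std::vector<bool> alive;
    std::vector<Rgb> colour;
    ColourGrid(int width, int height)
        : w(width), h(height),
          alive(static_cast<std::size_t>(width * height), false),
          colour(static_cast<std::size_t>(width * height), kBackgroundColour) {
        assert(width > 0 && height > 0);
    }
};

// Bring `count` cells to life at random positions, all in colour `c`.
void spawnCells(ColourGrid& g, int count, Rgb c, std::mt19937& rng) {
    std::uniform_int_distribution<int> pick(0, g.w * g.h - 1);
    for (int i = 0; i < count; ++i) {
        auto k = static_cast<std::size_t>(pick(rng));
        g.alive[k] = true;
        g.colour[k] = c;
    }
}

// Called whenever the classifier reports a class.
void onClassDetected(int cls, ColourGrid& g, std::mt19937& rng) {
    switch (cls) {
        case kBackground:
            break;  // background noise only: leave the visuals alone
        case kArt:        spawnCells(g, 20, Rgb{230, 80, 120}, rng); break;
        case kAesthetics: spawnCells(g, 20, Rgb{90, 200, 220}, rng); break;
        case kVisuals:    spawnCells(g, 20, Rgb{250, 210, 90}, rng); break;
        case kDull:
        case kBoring:
            // "Eraser": new cells take the background colour, so they paint
            // over whatever was drawn at those positions before.
            spawnCells(g, 40, kBackgroundColour, rng);
            break;
        default:
            break;  // unknown class id: ignore
    }
}
```

One design choice here: the eraser cells are ordinary live cells that happen to share the background colour, so in a full system they would keep evolving under the Game of Life rules like any other cells, rather than simply deleting pixels.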

Future development

I would like to extend this project to more words, perhaps by connecting it to an external API. The visuals could be presented in various ways, for example very small on the floor or as a large mural on the wall, as a way of rethinking how the screen itself is presented. I would also like to develop the code further and make the interactions between cells more complex and interdependent. It would be interesting to add other inputs from the environment that change the visuals more slowly over time.

Self evaluation

I enjoyed working on the visuals and exploring the Game of Life. I feel that my interests led me towards autonomous behaviour and object-oriented programming as ways to explore abstract concepts. I am satisfied with the visuals, but I would definitely like to work more on the presentation of the work and the screen itself.

Taking part in the pop-up was a challenge, as I got to test reactions live, and people interacted with the piece very differently from what I had in mind. Firstly, it was tricky to get the classifier to work in a noisy environment, and it often misclassified what it heard. Secondly, people at the exhibition expected a more direct interaction: they kept talking into the microphone until they saw some drastic change. Over time, as the cells accumulated, it became difficult to see where new ones were being generated; however, this was part of my concept, as I wanted a more subtle and indirect interaction. I learnt that it is always worth testing an installation in real time, in a space with noise and people, especially when it relies on a microphone.

I also had issues with the iMac: the screen kept switching to screensaver mode even though that setting was turned off, and system updates were being installed, interrupting the visuals. This made me think about how much computing power and electricity is needed to keep such exhibits running, especially an accumulative piece, compared with, for example, a painting. The ethics and cost of electronic artworks came into my awareness. I wish to continue exploring indirect interactions, object-oriented programming and the role of the audience.

References

Game of Life: https://bitstorm.org/gameoflife/

Sandro Botticelli, National Gallery: https://www.nationalgallery.org.uk/artists/sandro-botticelli

Meandering River (onformative), CreativeApplications.Net: https://www.creativeapplications.net/news/meandering-rivers-onformative/

ofBook, openFrameworks: https://openframeworks.cc/ofBook/chapters/foreword.html

Workshops in Creative Coding, Term 2: Voice Controller, Game of Life

Special thanks to Harry Morley and Keita Ikeda