Sonic Stroll

Three-dimensional interactive score for electronic instruments.

Produced by: Raphael Theolade

Introduction

This project is an interactive score for electronic instruments that associates the tactility of a mundane material, sand, with audio-visual feedback. The modifiable relief of the sandpit, any objects dropped into it and the user's hands form a palette of physical possibilities for creating an architectural soundscape.

Concept and background research

There is a musicality lying in the topography of every landscape, which can be revealed through poetic dwelling. The immensity and emptiness of the desert, for instance, have always been a great source of creative inspiration. The materiality of sand evokes a distinct metaphysical experience of time and space: both are fluid, ever-changing and unlimited. Moreover, in some places in the world, sand dunes even sing.

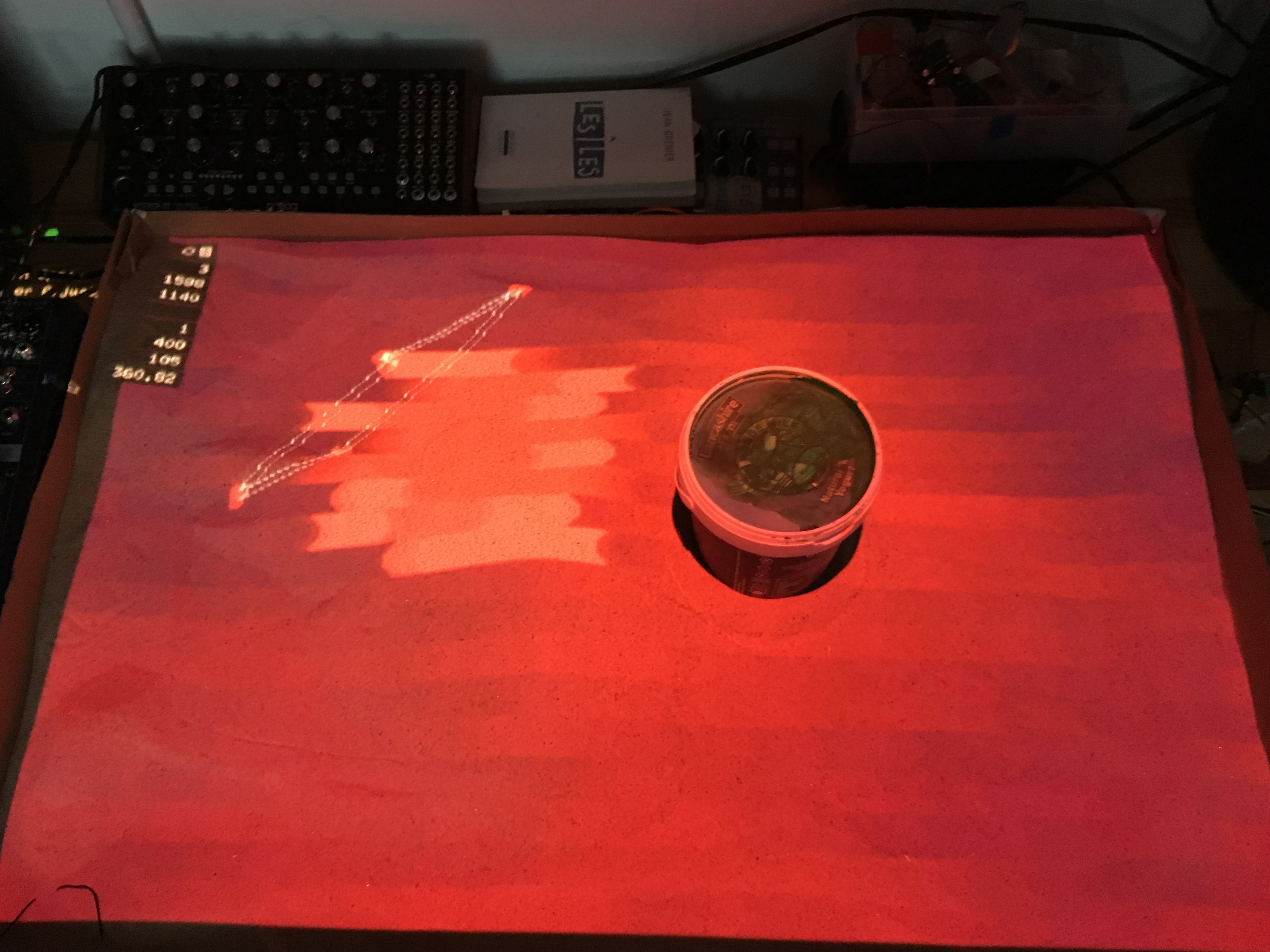

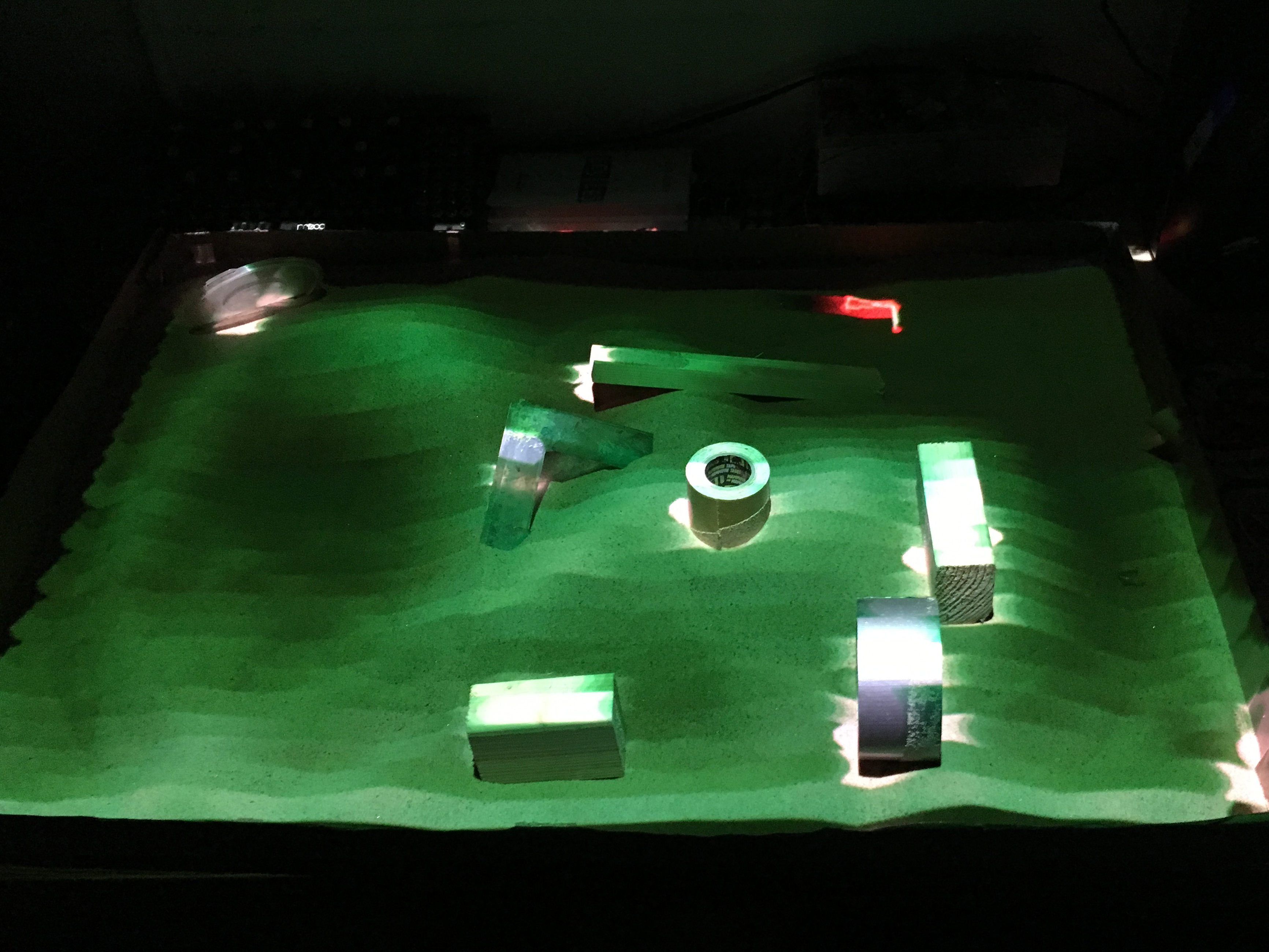

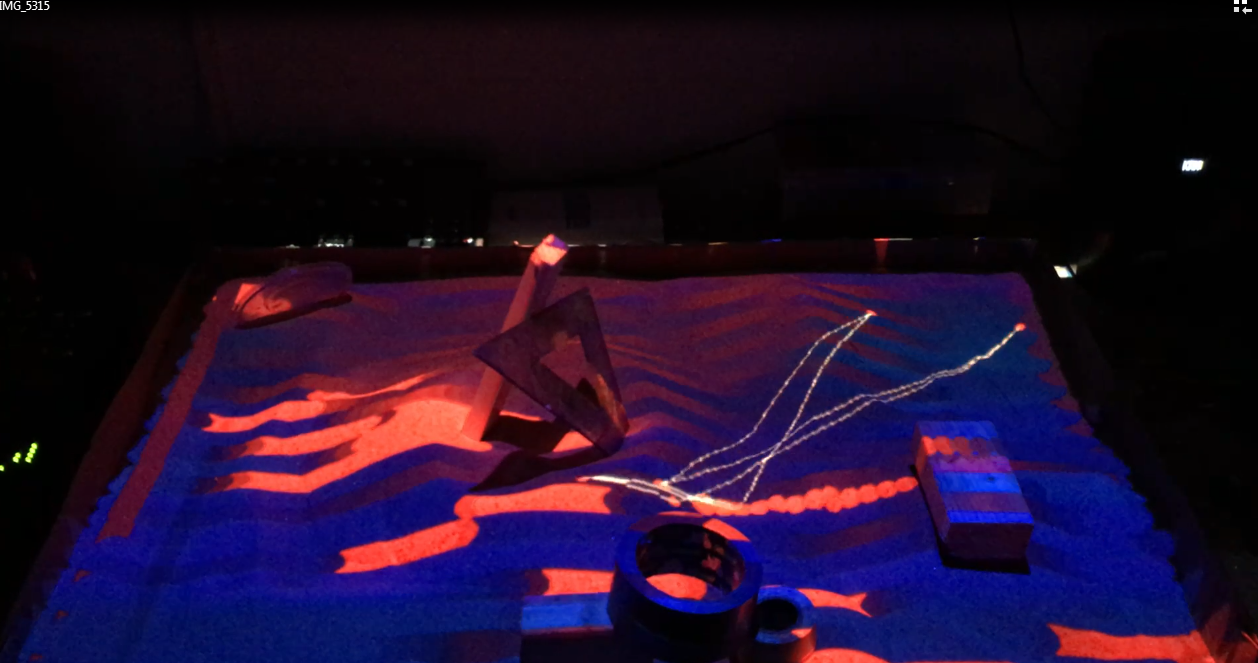

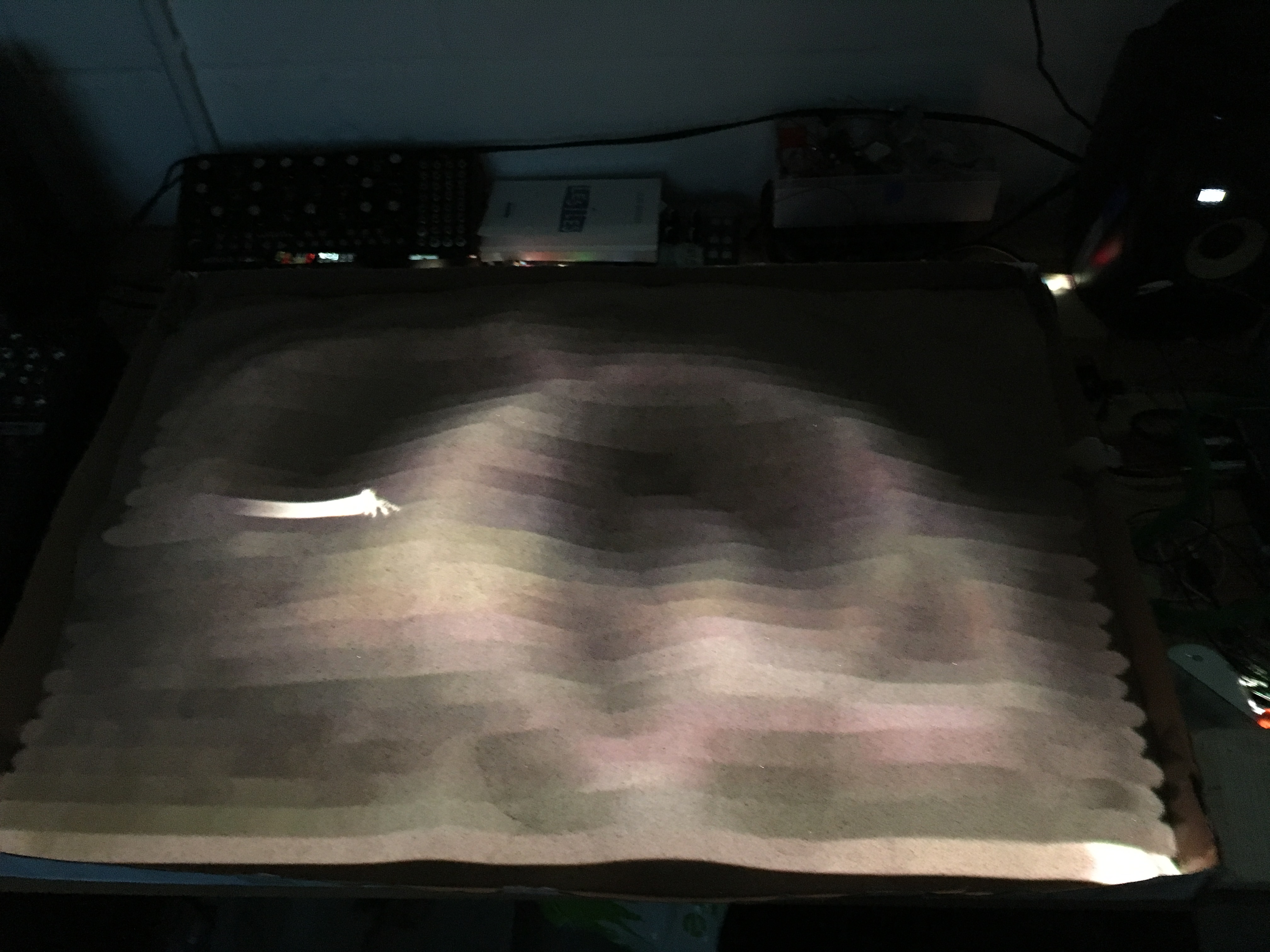

For this installation, I propose a shallow sandpit as a model of a sand landscape. The presence of a wanderer is represented by a light trace projected onto the surface. Depending on its position, this agent interprets the surrounding relief and reveals its hidden melody. The walker is followed by its area of perception, shown as a small network of particles, and the area it has already explored is marked by a projection.

The human visitor is invited to change the topography of the landscape by touching the sand or by placing wooden blocks (or any other object) on it. Acting as an architect, the visitor leaves traces that interfere with the initial musicality interpreted by the walker; the altered melody will be heard by the next visitor once the walker passes again.

Technical

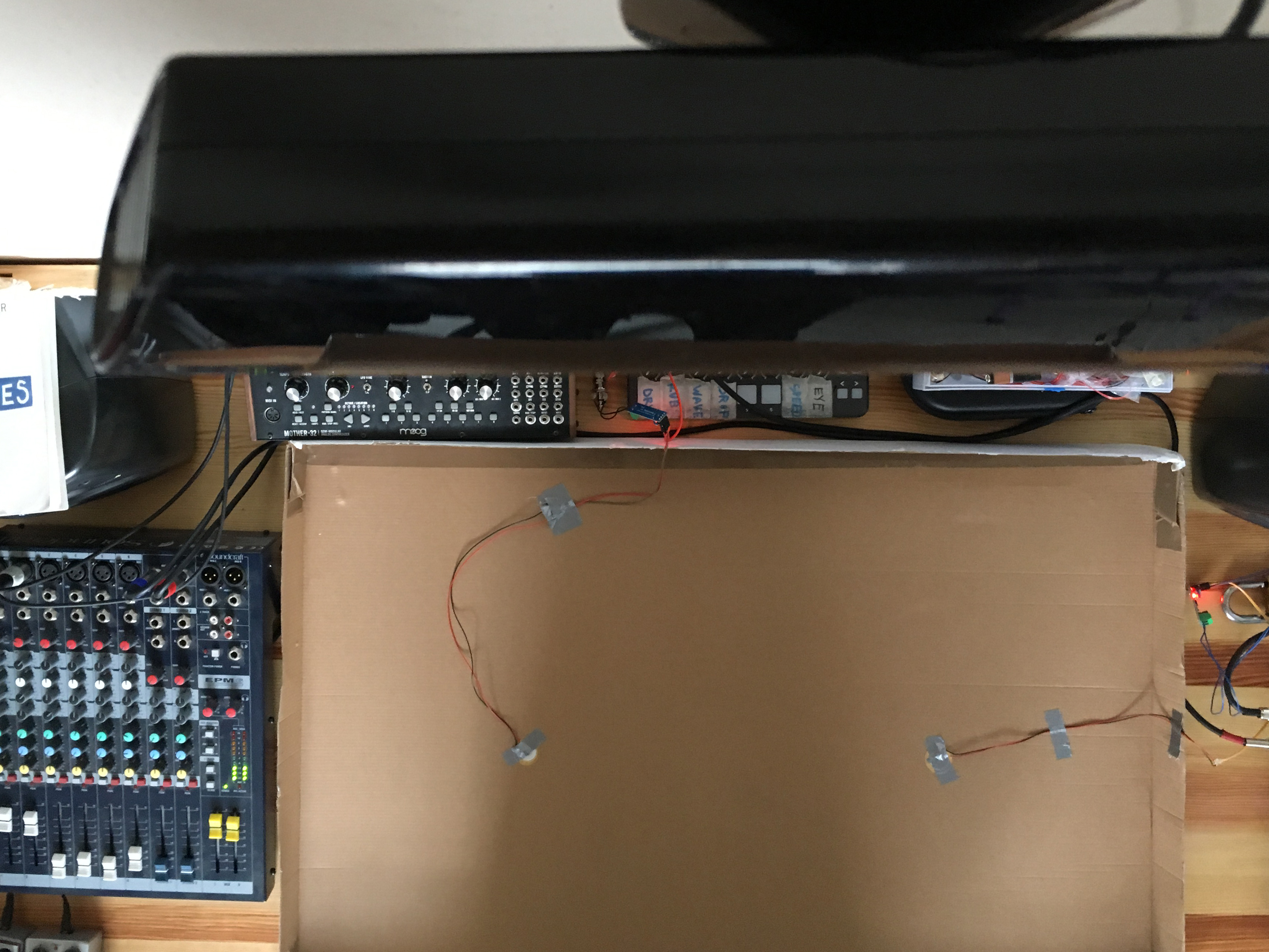

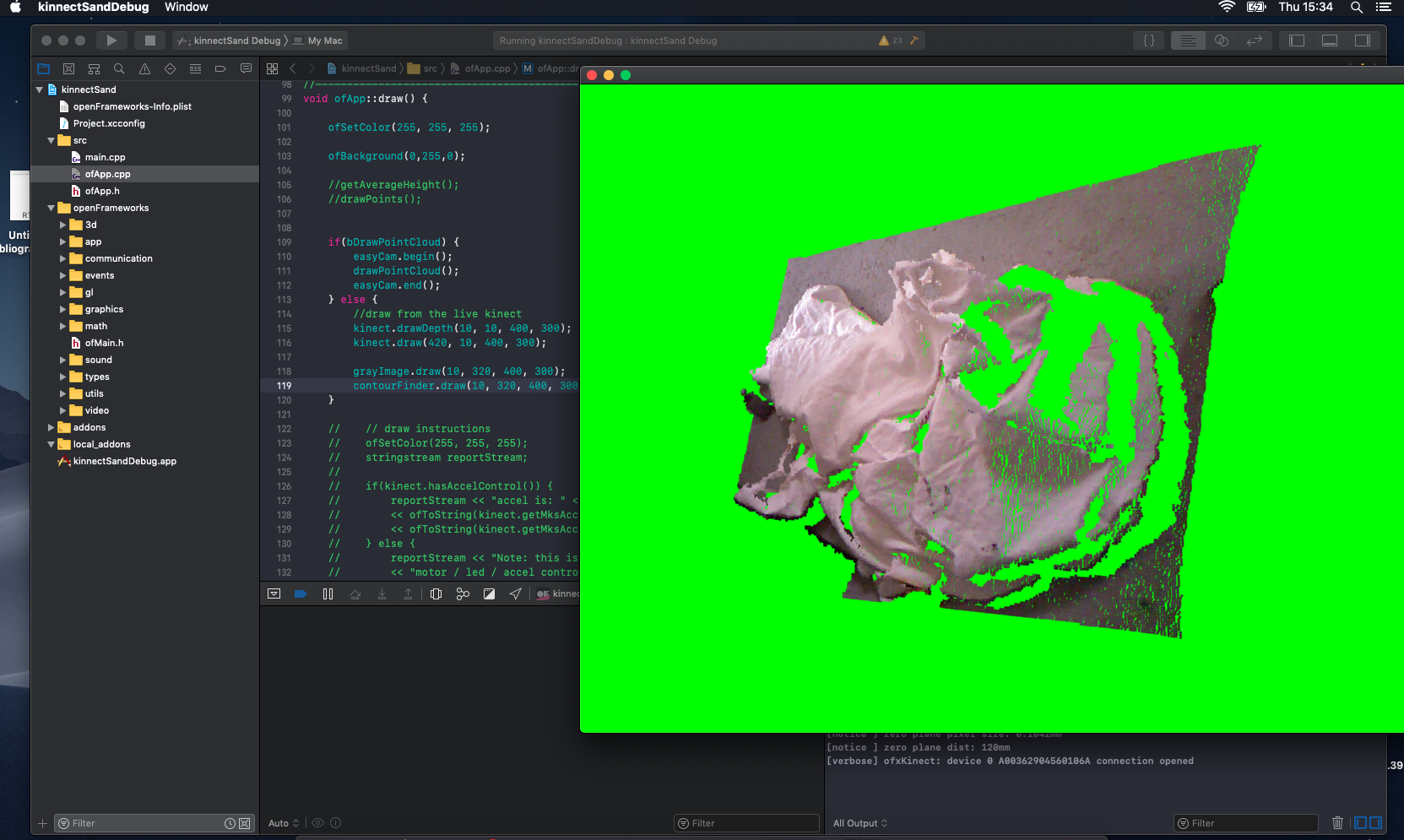

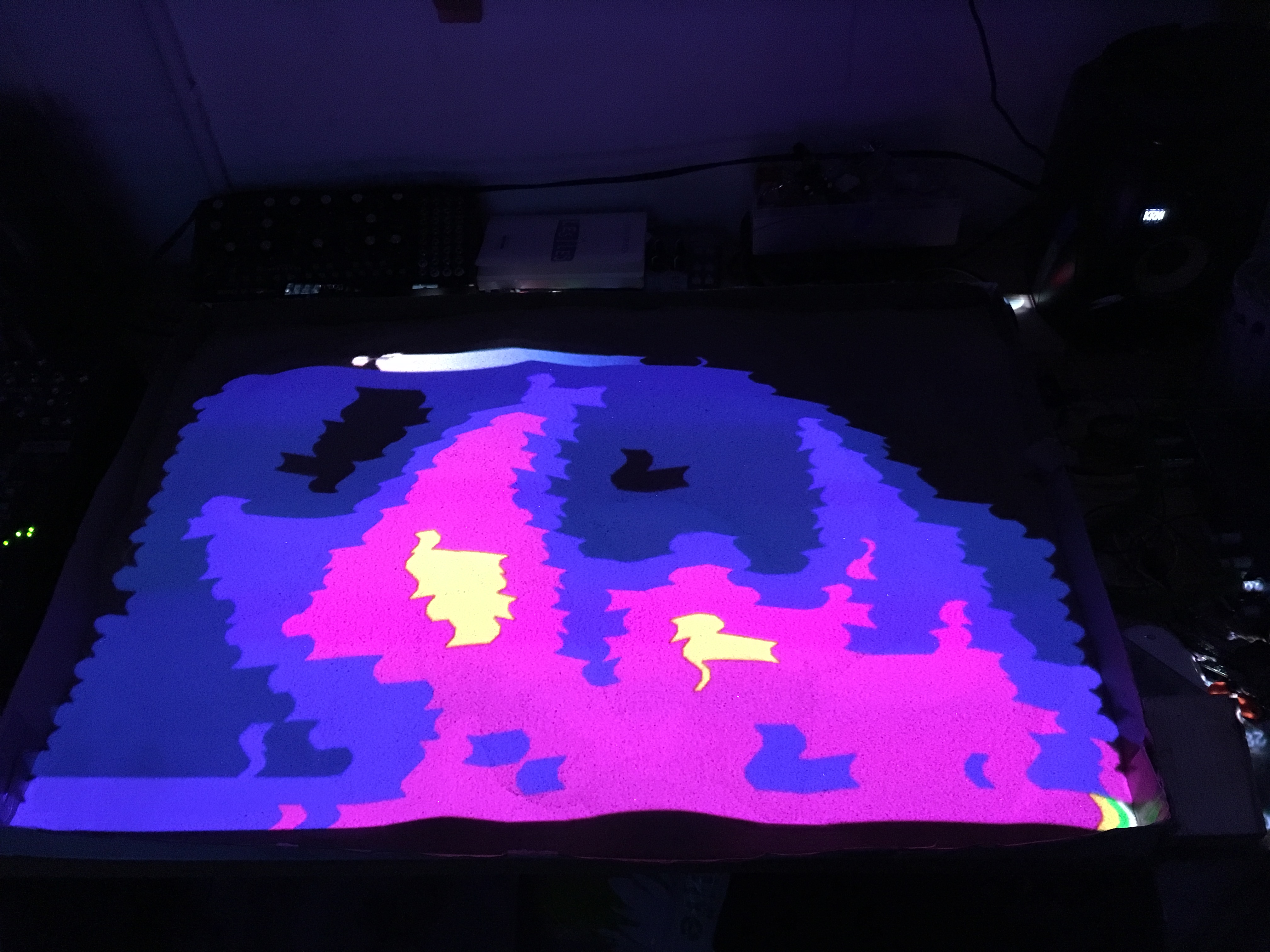

This rather simple installation is based on a depth camera (a Kinect) that detects the relief of the surface. The animated trace and the particles pick up the depth values recorded by the camera and send corresponding messages to Max/MSP via the OSC (Open Sound Control) protocol. Max/MSP passes these messages to a selection of virtual synths (the semi-modular Aalto by Madrona Labs). According to the same information, a projector displays the result of a linear interpolation (lerp) between two chosen colours.

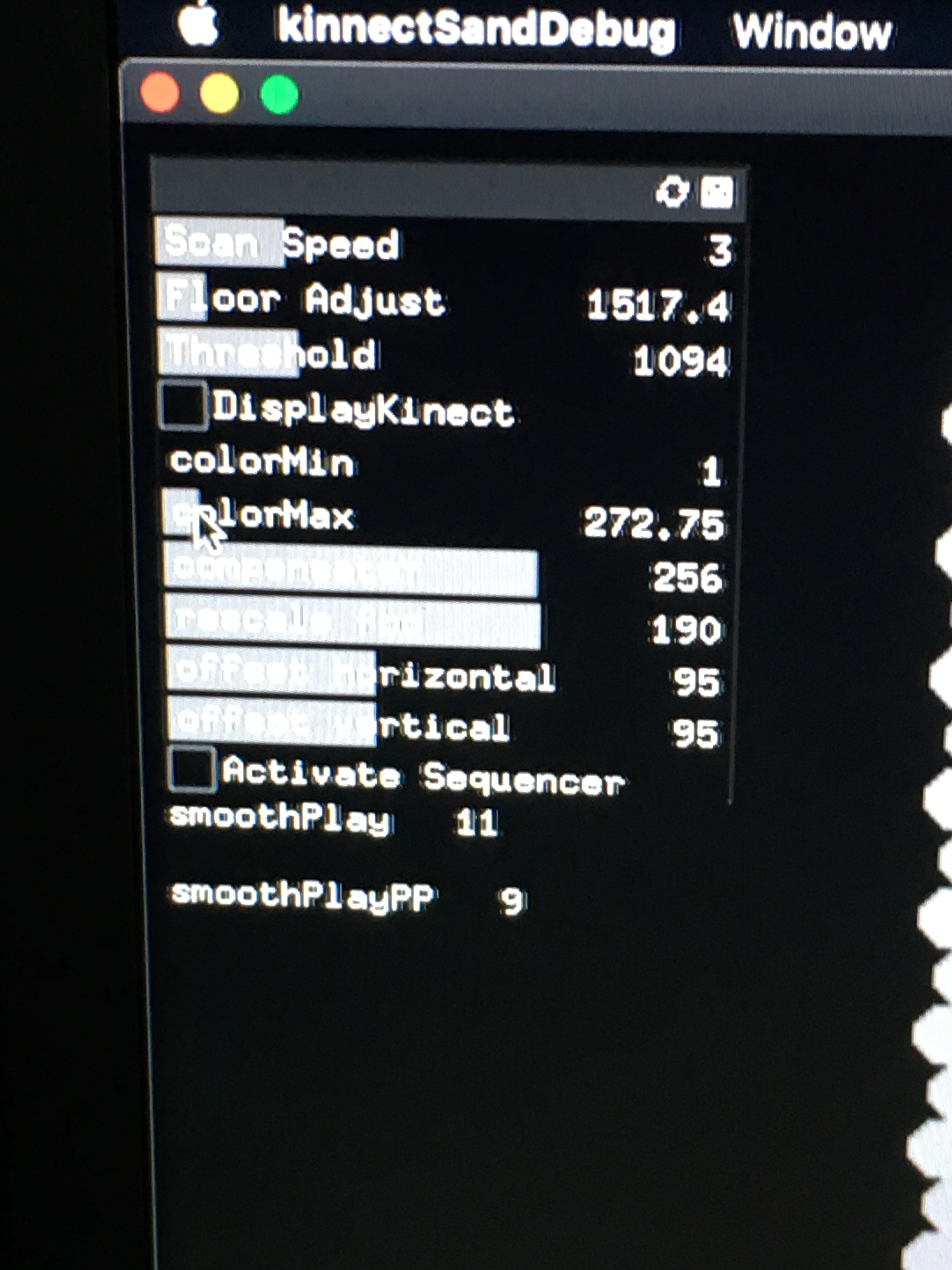

I implemented two versions of the walker: one scans the surface linearly, and the other is based on a random walk algorithm. The mode can easily be switched in a GUI within the software, along with a selection of variables used to set up the installation: the distance from the camera to the surface, the range of depth variation (min/max), the speed of the trace, and a helpful debug mode that displays vivid colours to indicate the different depth levels.

Future Development

I think it would be great to extend the range of sound variations to add more richness to the audio piece. Rather than using synths, it would be interesting to record the sound of the sand into a buffer and modulate it each time a user interacts with the piece.

I would also like to refine the projected pattern with more subtle detail and find a way to update the projection interactively.

Most importantly, the walker's behaviour could be far more complex: it could have its own form of intelligence and react differently each time to the objects added to the sand.

Self evaluation

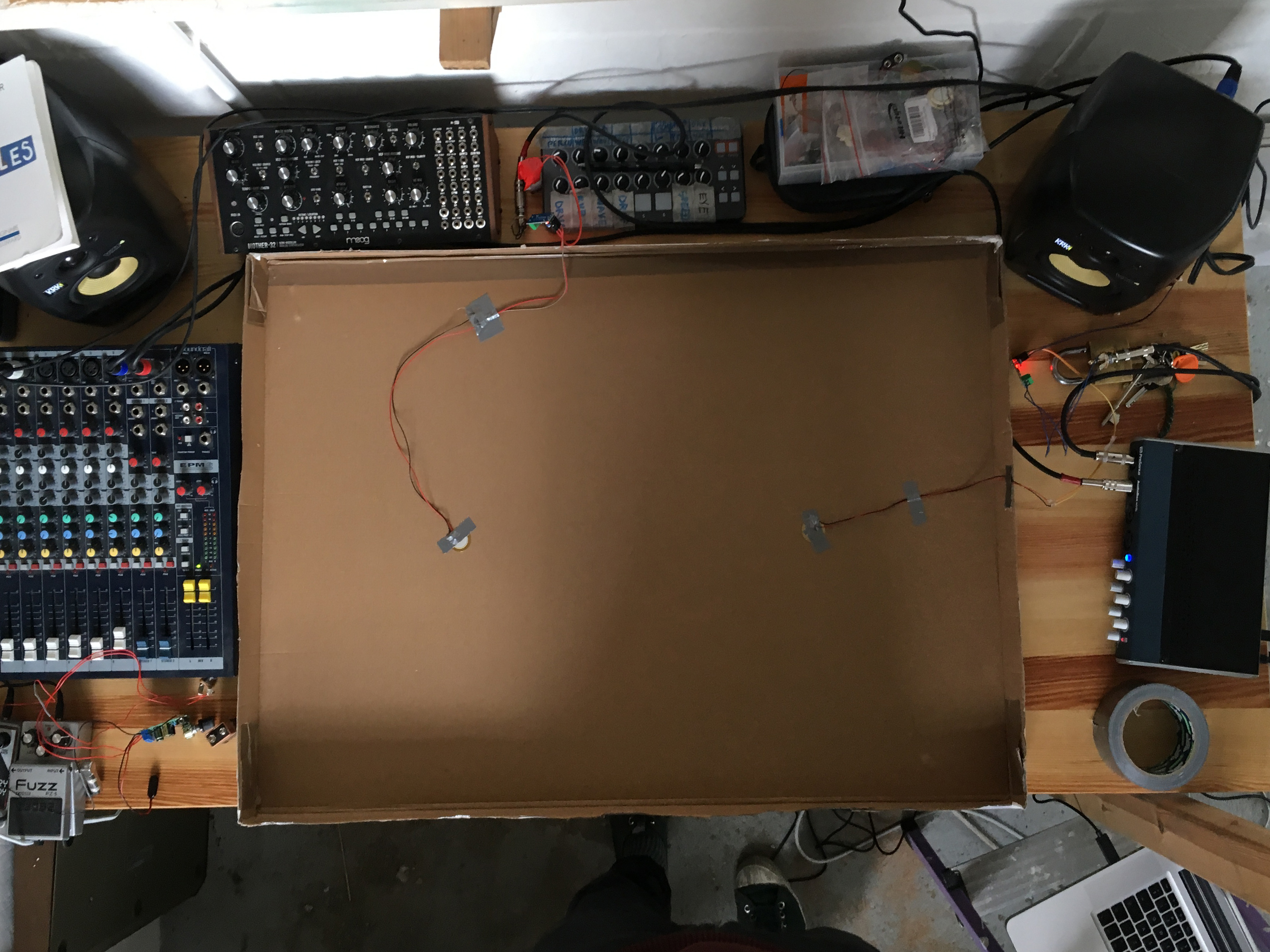

The creative process leading to this result was quite hectic. The upside is that I had the chance to experiment with different possibilities, and I learned a lot. At first, I wanted to build an instrument based on the sound the sand produces when moved by a user. I tried adding six contact microphones to the bottom of the sandpit, and I attempted to train a RapidLib machine learning regression model to modulate the sound depending on the position of the hands and the relief. It more or less worked, but it was too slow to train, even though in the end I computed only a low-resolution set of Kinect points. I also spent too much time writing an algorithm to record the training data in a JSON file (which worked) before realising that this had already been done and was simple. Then I tried to work with ofxCv to detect hand positions, but time was running out, so I gave up and focused on something efficient and showable.

Once I had the code sending OSC messages to Max/MSP according to the depth values, I realised that a visual clue was missing to show the source of the sound variation. I liked the idea of the walker, but as the information it sent was quite simple, I decided to add a particle system to create more types of sound and more visual complexity. One problem, though: the projection is only visible when the installation is in the dark, and in the dark the volumes of the sand can no longer be seen. That is why I decided to implement graphics that show the volume. I also wrote a function to update the projection whenever something changed, but it slowed the program down too much and I had to get rid of it. In the end, the hardest part was fine-tuning all the parameters to obtain a consistent result regardless of the walker's position. I had to write some code to compensate for the orientation of the Kinect (which was not directly above the sandpit) so that every point of a flat surface returned the same depth. Finding the right depth range to get nice shades of colour and nice sounds also took a long time. I really wish I had had more time to code the sound instead of using Max/MSP.

Nevertheless, I am satisfied with the final result. I think it is quite engaging and poetic. I feel that I learned a lot and that I created the kind of installation I had always wished to be able to make.

References

- 2008-Kenneth-White-Geopoetics.pdf. http://www.alastairmcintosh.com/general/resources/2008-Kenneth-White-Geopoetics.pdf. Accessed 14 May 2019.

- C++ Language - C++ Tutorials. http://www.cplusplus.com/doc/tutorial/. Accessed 14 May 2019.

- Madrona Labs. Aalto. https://madronalabs.com/products/aalto. Accessed 14 May 2019.

- DevilMayWeep. Singing Sand Dunes (1 Hour). YouTube, https://www.youtube.com/watch?v=uwx9zKahKSU. Accessed 14 May 2019.

- openFrameworks Is a Community-Developed Cross-Platform Toolkit for Creative Coding in C++: openframeworks/openFrameworks. 2009. openFrameworks, 2019. GitHub, https://github.com/openframeworks/openFrameworks.

- OpenKinect. https://openkinect.org/wiki/Main_Page. Accessed 14 May 2019.

- SandScape. 2003. http://tangible.media.mit.edu/project/sandscape.

- vst~ Reference. https://docs.cycling74.com/max5/refpages/msp-ref/vst~.html. Accessed 14 May 2019.

- Watson, Theodore. Legacy openFrameworks Wrapper for the Xbox Kinect (OF Pre-0.8.0+ Only): ofxKinect Is Now Included and Is Being Maintained in OF Releases: ofTheo/ofxKinect. 2010. 2019. GitHub, https://github.com/ofTheo/ofxKinect.