Me|more|y

Preparing myself to forget. Exercises to remember

We are our memories. And our feelings, and our plans for the future. Those who are no longer here exist only in our memories and in our hearts. The past only exists through documentation, imagination, recreation, evocation, and recollection of impressions.

Me|more|y is an interactive installation made of several pieces: digital and physical symbolic representations of unrelated memories, without any proposed narrative.

produced by: Alix Martínez Martínez

More detailed documentation can be found here. Code for this project is available on GitHub.

Introduction

Me|more|y is an autobiographical piece and an exploration of the vast field of memory. Based on personal experiences of close relatives with dementia, Me|more|y is a reflection on and a representation of the memories of an entire life. How differently do we encode, store and retrieve memories in the digital era? How differently do our brains operate compared to machines and computers?

This project is dedicated to my grandparents. To Maruja, who lost her memory and lived in a limbo state for almost ten years, as her father did before her and as her sisters did later on. To Dionisio, who accompanied her through all those years until he decided to end their journey at the sunset of their lives.

Concept and background research

For me, art works as a cathartic element that helps me cope with extreme emotions and vital concerns, providing relief through their representation. Me|more|y benefits from the Design Thinking and Human Centred methodologies used in the creation of digital products and services.

The project started by absorbing information related to memory in a very broad sense, reading materials from very different fields. Memory is a huge topic. The artists and pieces that influenced the work range from the autobiographical documentation of life and memories in artists' self-portraits to Tracey Emin's autobiographically based works and Nan Goldin's albums...

The work echoes insights from one hundred and seven responses to a survey, answered by people of different nationalities and backgrounds and touching on different aspects of memory: earliest memories, specific memories, random memories, memories to forget…

The next step was to create individual prototypes, each touching a specific area. The project is divided into three main parts, with no specific order or narrative required. The first is an interactive piece with different memory representations, the second is a map of all the people met in a lifetime, and the third is a physical representation of all my digital memories and the people who appear in them.

The project is purely visual. I’ve made the conscious decision to avoid other senses, as I can barely recall any olfactory or tactile memories…

Physical space: The room was set up to create an immersive experience. In the centre stood a plinth, where the spectator interacted with the main section. The space was divided into two areas with two different wall colours and no artificial light. They represented the lights and shadows we move through when we try to remember. During the day, natural light passed through the transparent printouts. Once the sun had set, the only light came from the screens and from a small lamp on the floor that cast a dramatic light through the acrylics.

P1 - Interactive Piece

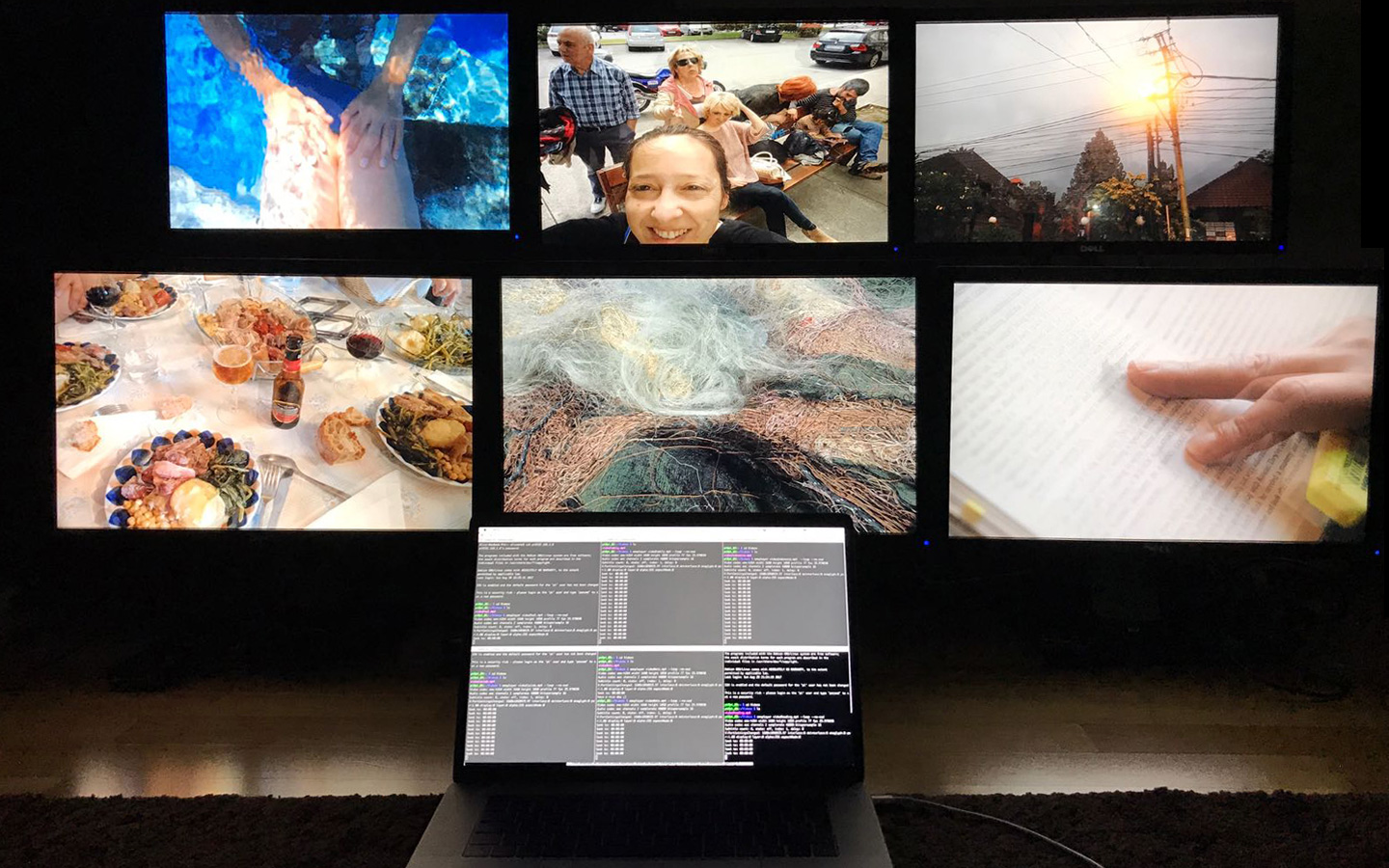

This piece (from now on P1) is an interactive screen wall consisting of six 22” monitors. It displays four different representations of aspects of memory:

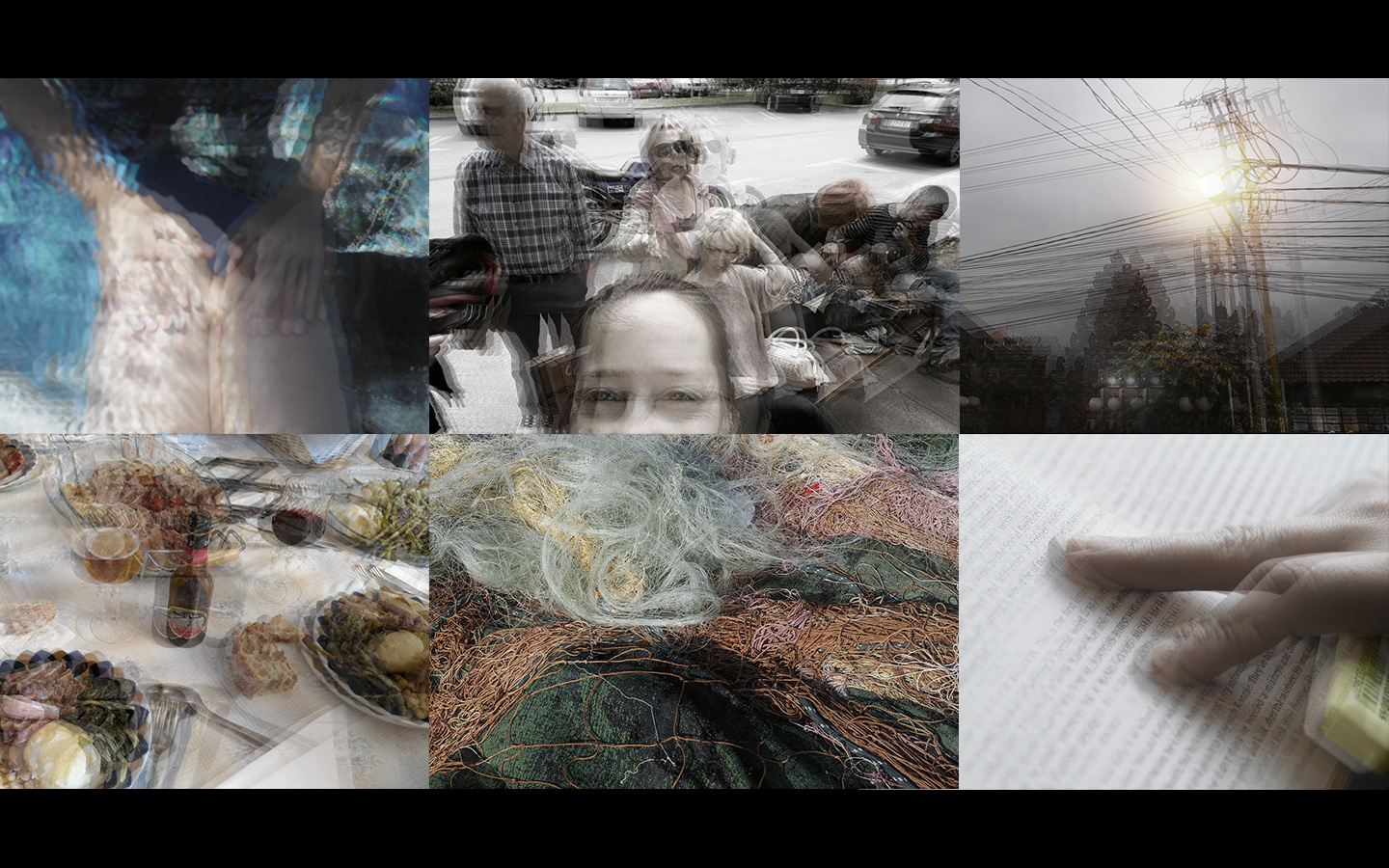

- Retrieve memories. When remembering, memories appear blurry, cloudy… This exercise represents how our brain retrieves them. They seem close, they merge, they connect; once they seem connected, they dematerialize for a moment before becoming the image again. In the survey, I asked: in the hypothetical case that you lost your memory, could you list the things you would like to remember? Each of these six images represents what I would like to remember: my partner, my family, my travels, family and friends around a nice meal, where I came from, and my favourite hobby.

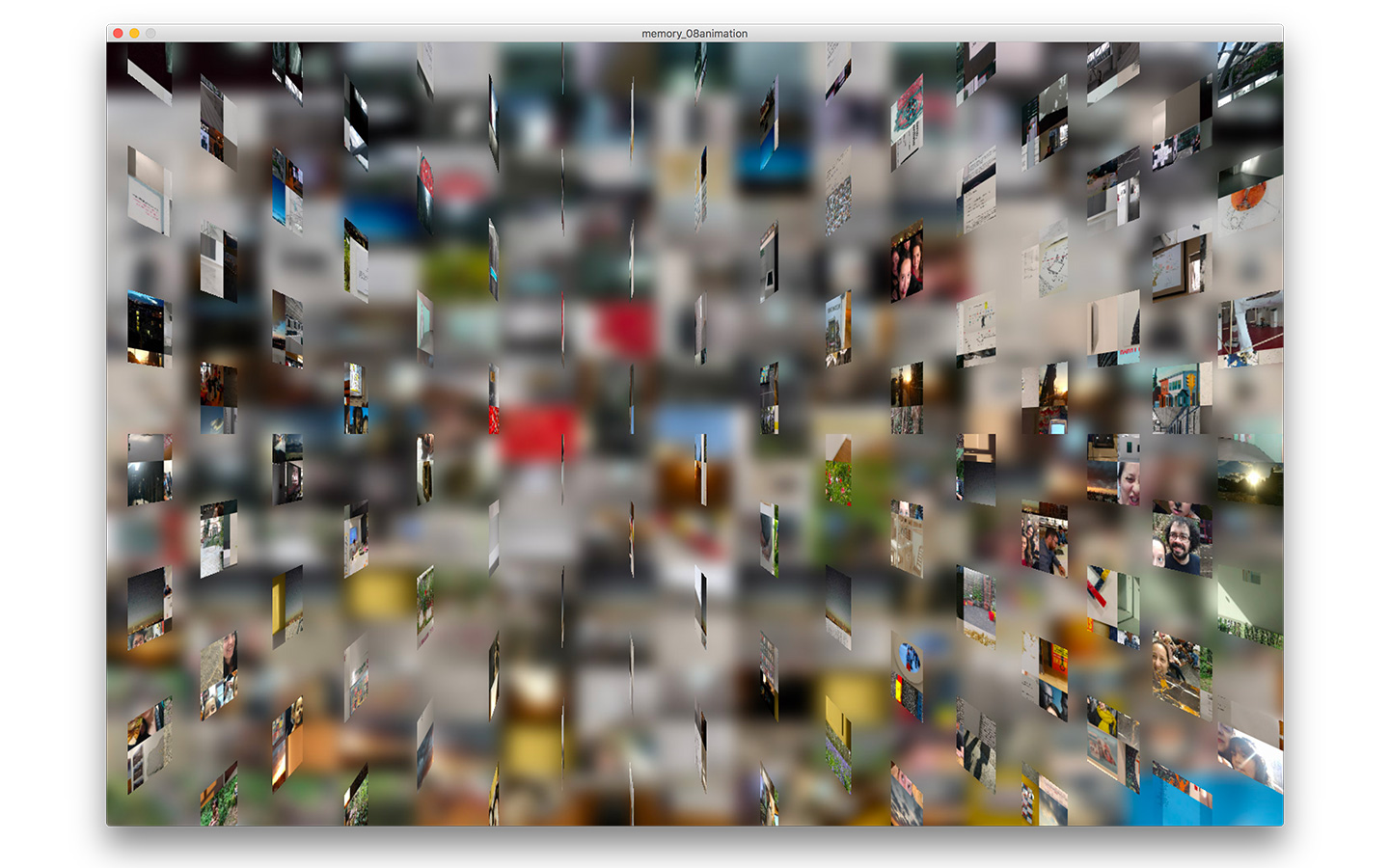

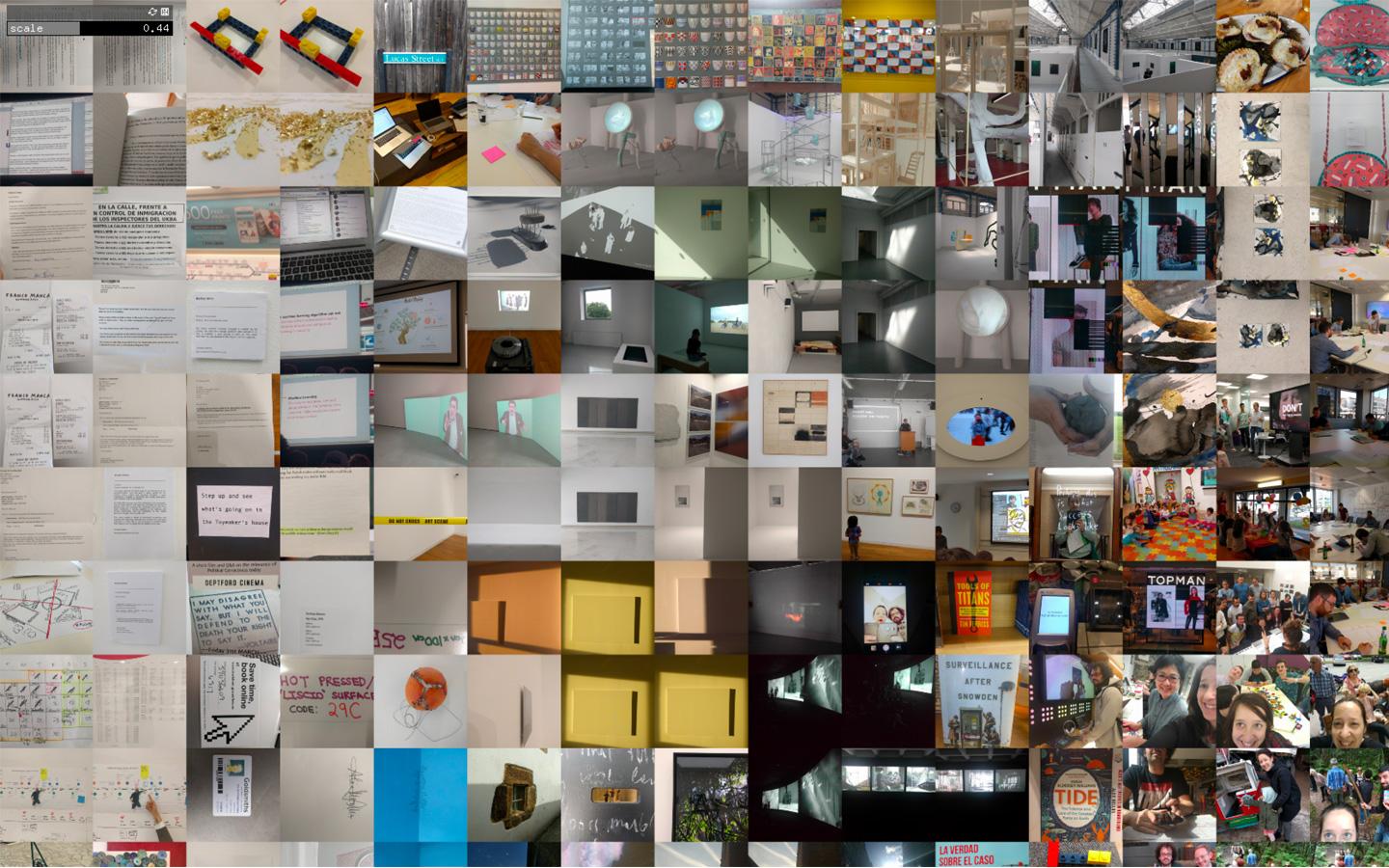

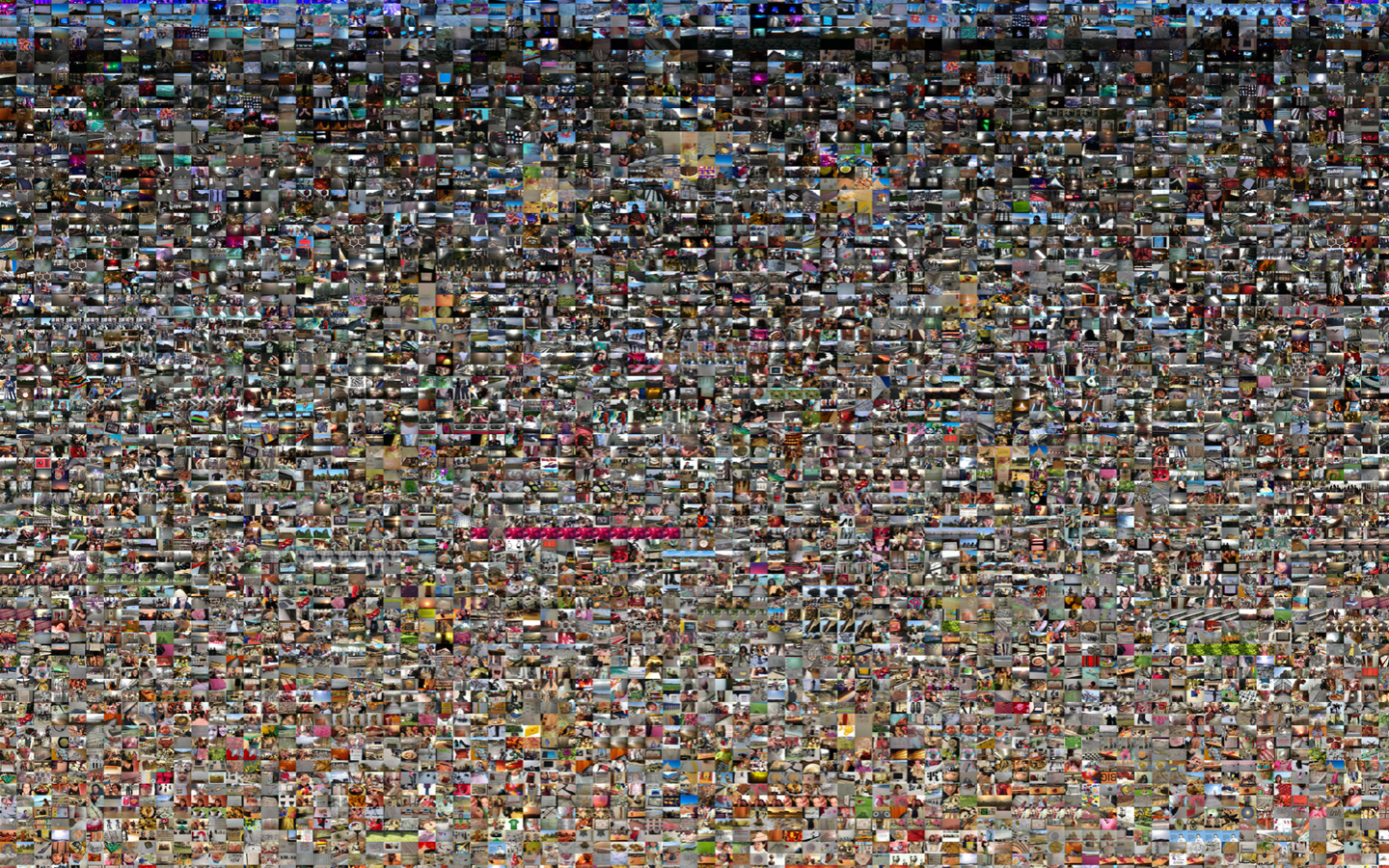

- Unveil memories. Our brain stores all our memories, waiting to be retrieved. We have flashbacks; memories appear without being recalled. These animations represent all the memories stored in the brain. Digital memories: I used all my digital photographs (16,758 in total), from the very first digital image I took up to July 2017. I created an image with all of them, using machine learning algorithms to categorize them. Each of the six images shows a different theme: Food - People - Landscape - Nature - Buildings - Night.

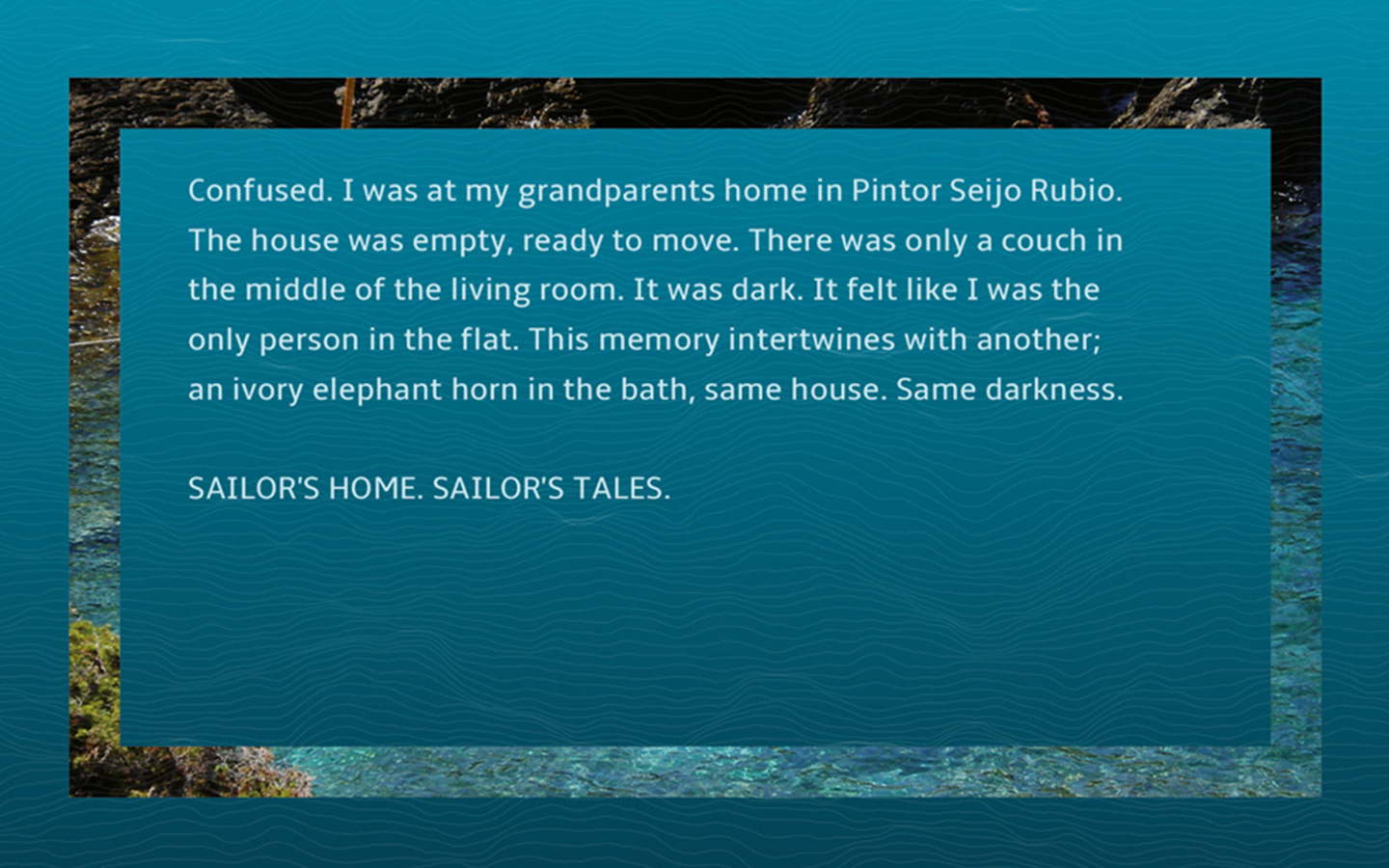

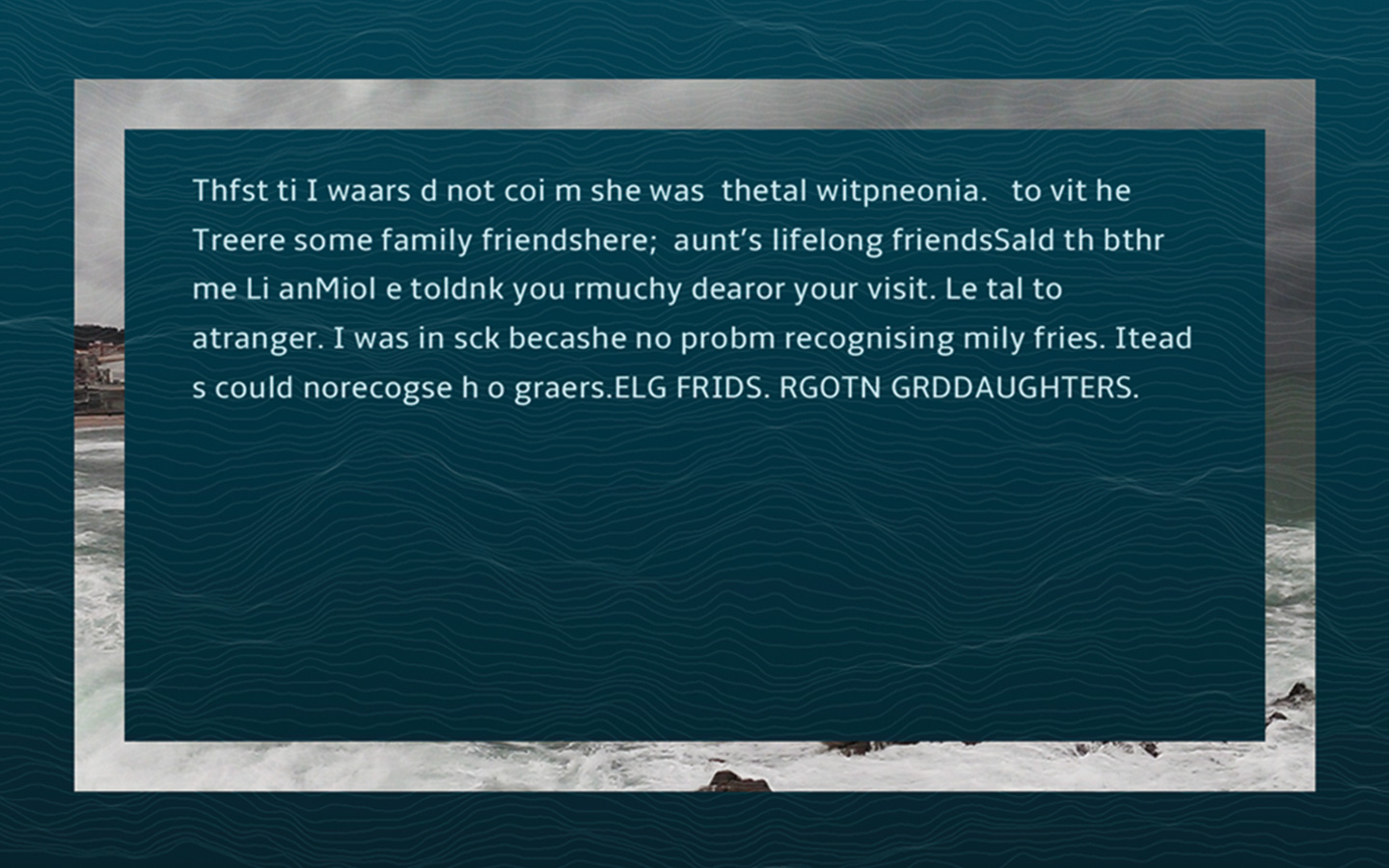

- Non-digital memories. What happens to memories that were never recorded digitally? Memories are recreations of what really happened in the original moment. These represent memories that exist only in my brain (my first memory, the only tactile one, the awareness of the possible loss of a loved one, the first time my grandmother did not recognise me…) and how they fade until they disappear, creating new words in the process.

- Collective memories. In the survey, I asked people to name the first thing that came to mind for each of several themes (a place, a meal, a historic event, a book, a movie and a song). Afterwards, I asked them to take some time to reflect on the same themes. The exercise consisted of identifying how the first, impromptu answers differ from those given after more time.

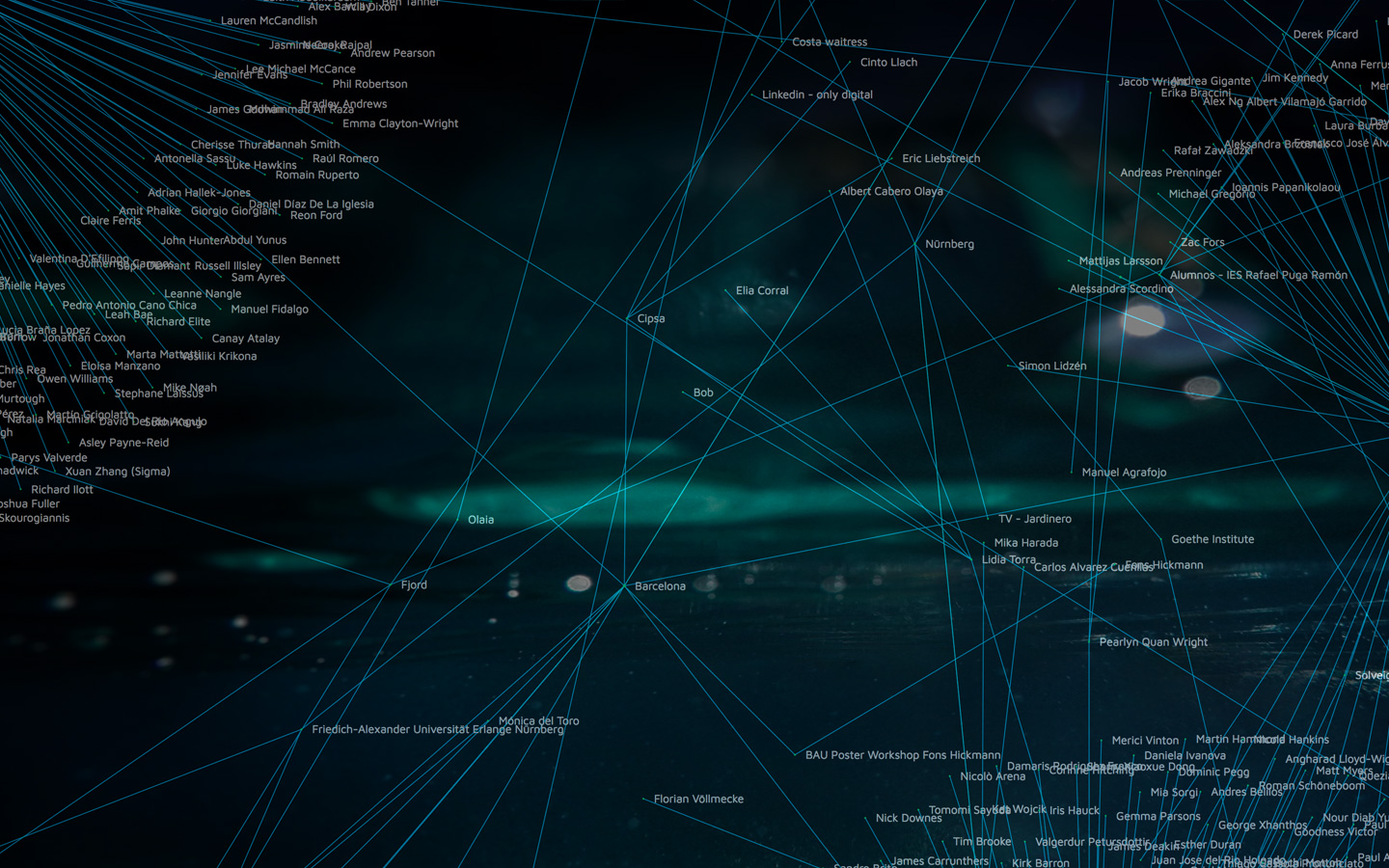

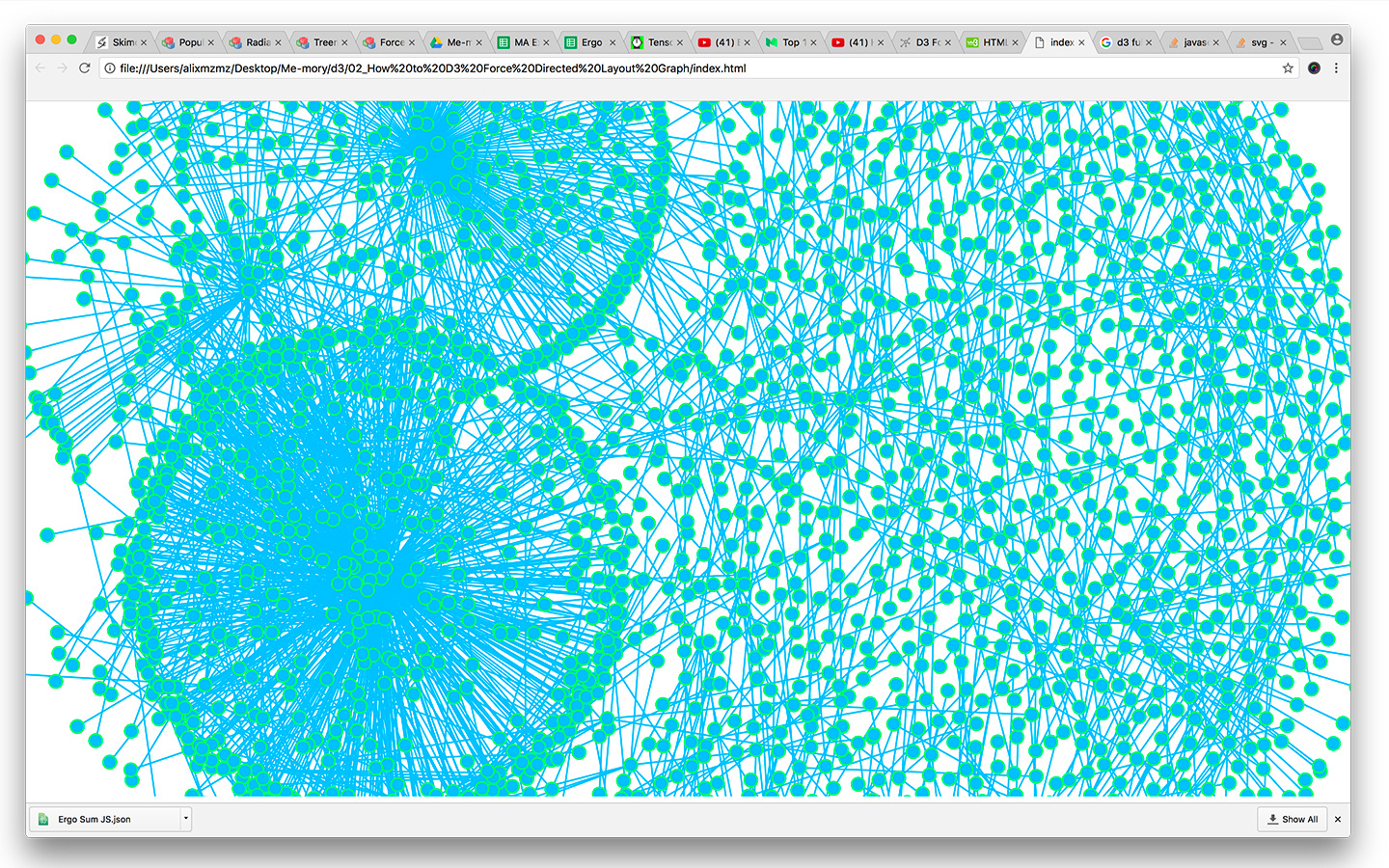

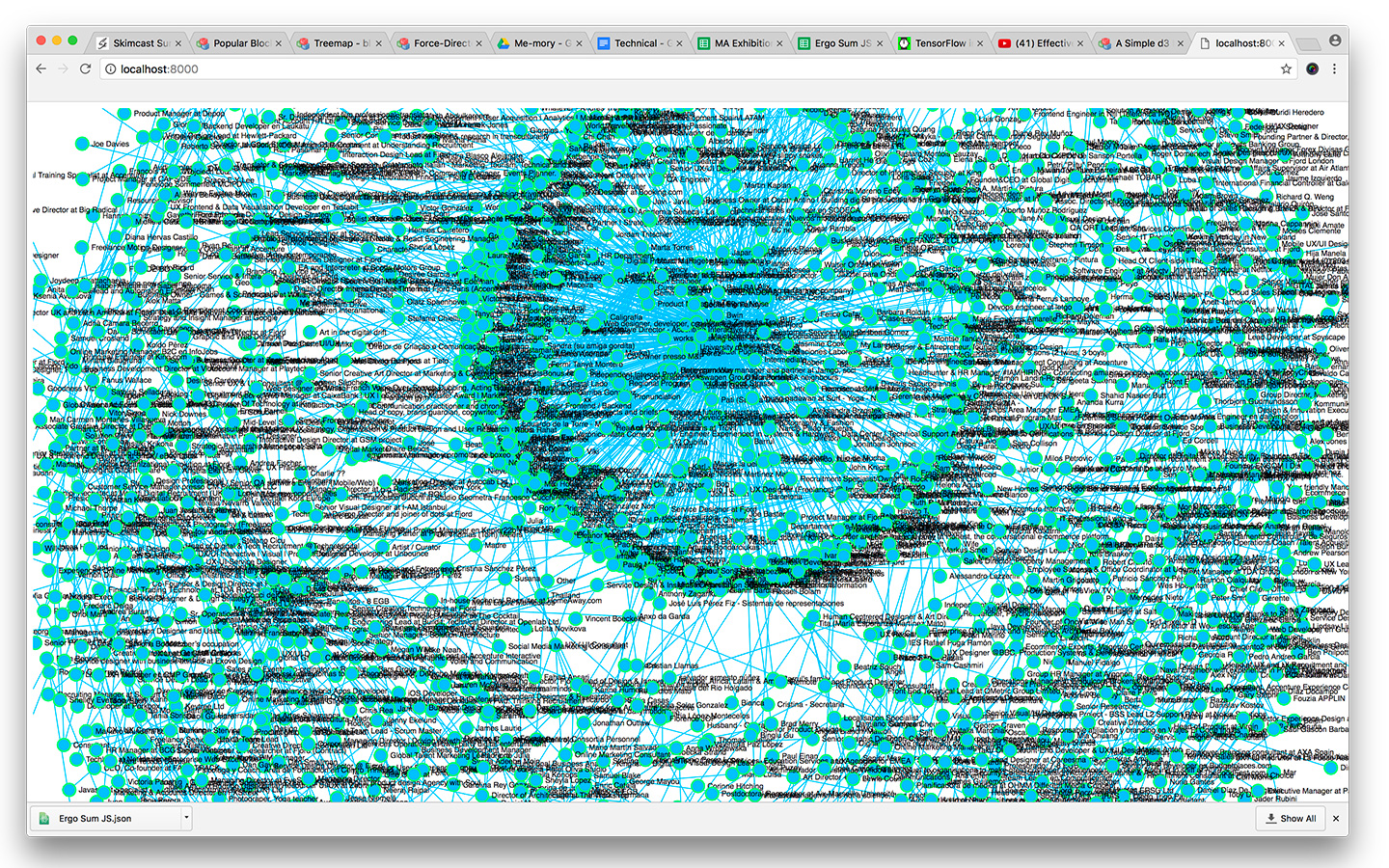

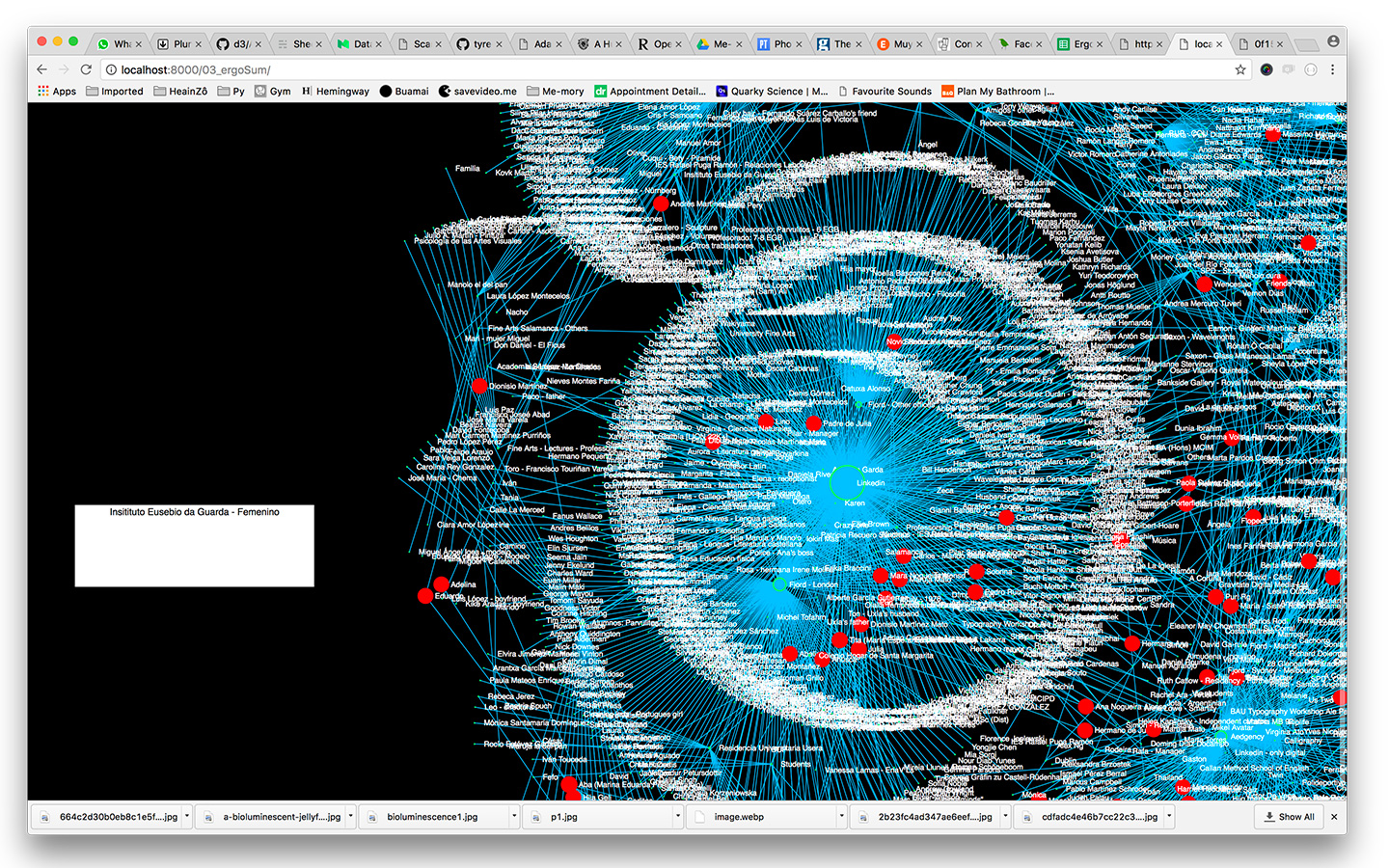

P2 - Ergo Sum. Infographic

The second piece (from now on P2) is an allegorical representation of all the people I have met in my life. A video shows a 2D view of an infographic with all the names visible and a 3D render of the same data. The look and feel is aquatic, reminiscent of my birthplace.

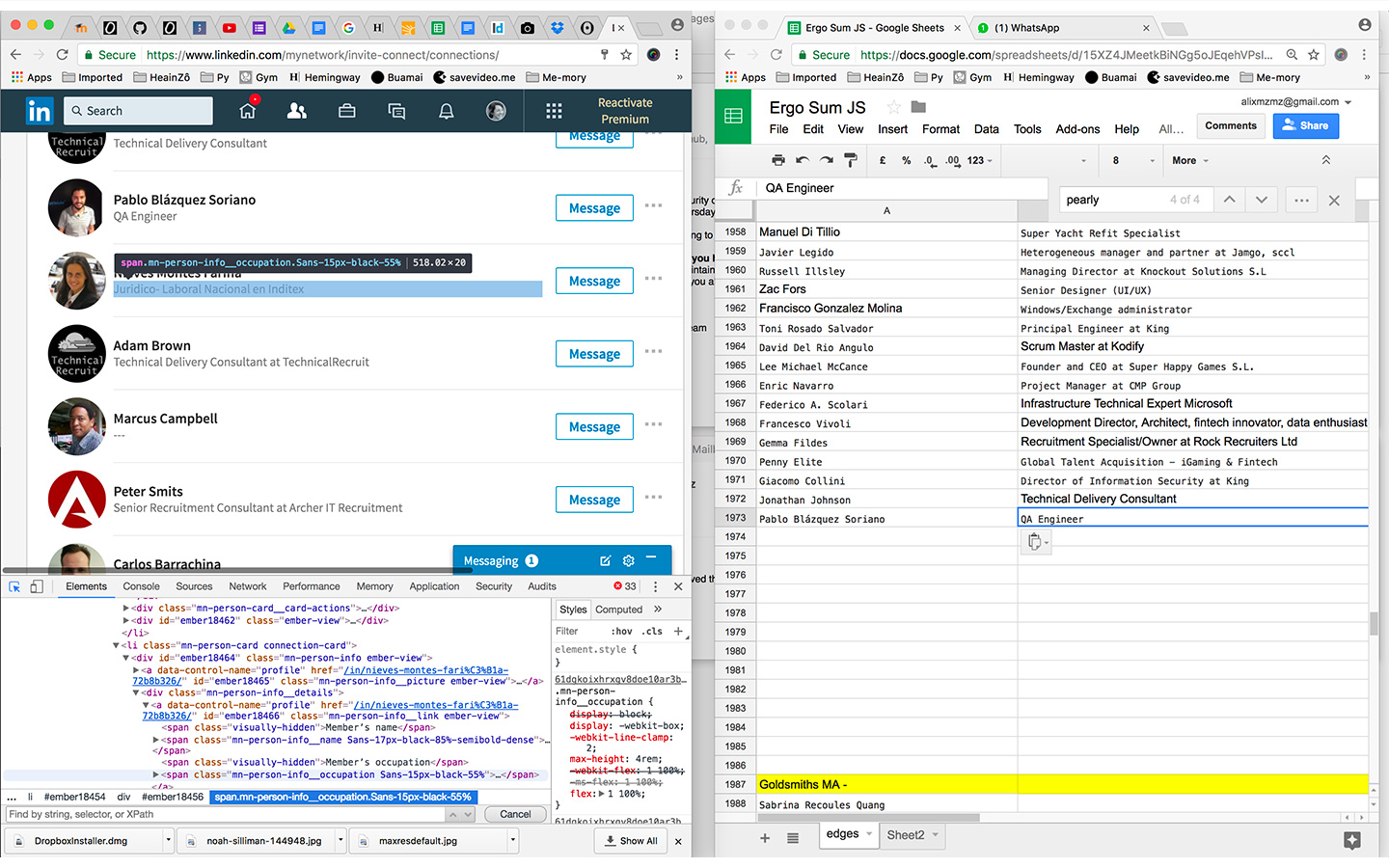

The process revealed itself to be as important as the outcome. The dataset was captured manually (by choice). In some cases I exhausted the names for a period, so I contacted someone to help me remember. That created a chain of connections and refreshed old relationships. I could have exported the full list of my LinkedIn connections (1,020) in a couple of seconds. Instead, I manually copied every single name to exercise my memory. This process took several days. I reflected on how the brain works compared to a machine.

P3 - Physical Representation

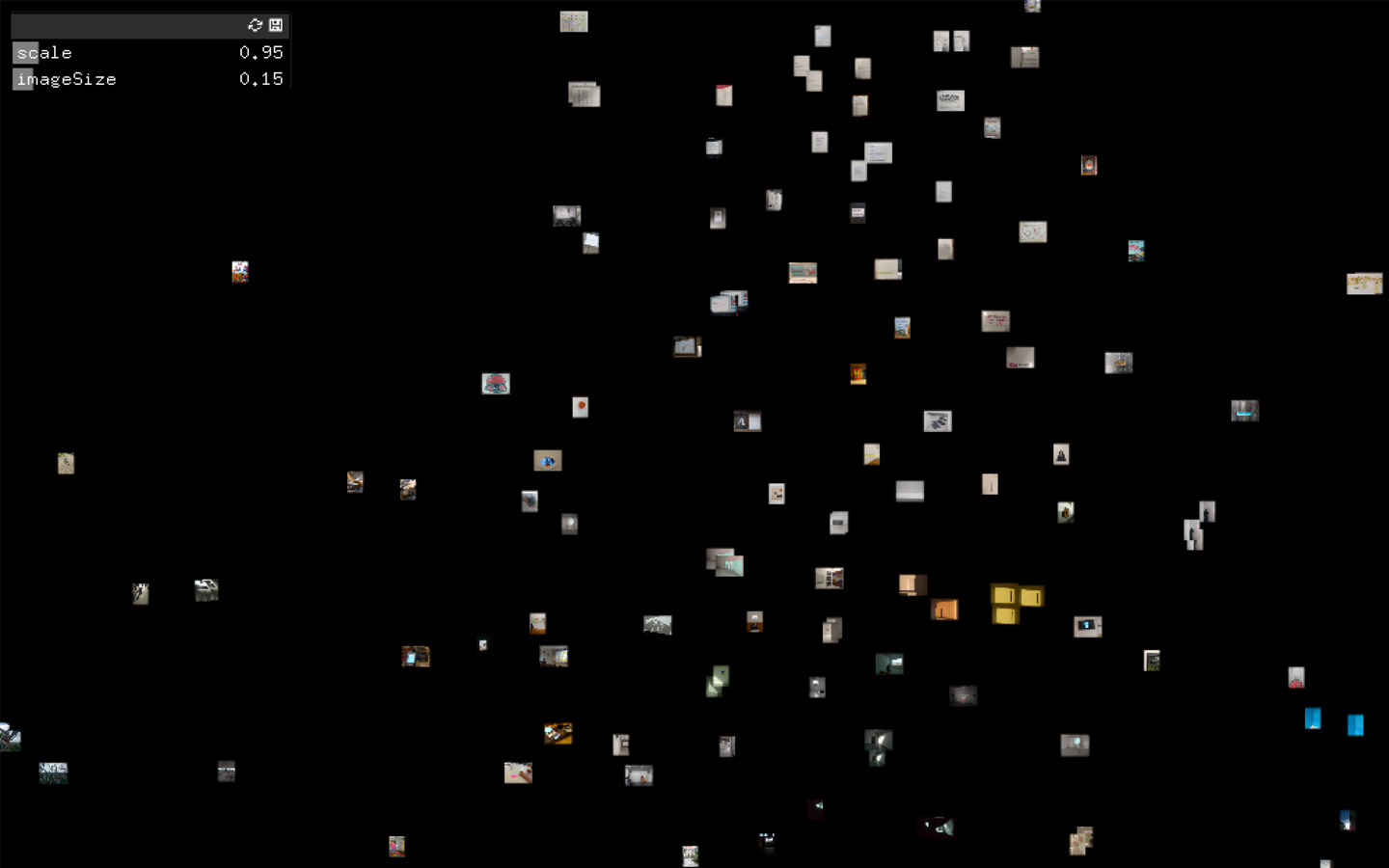

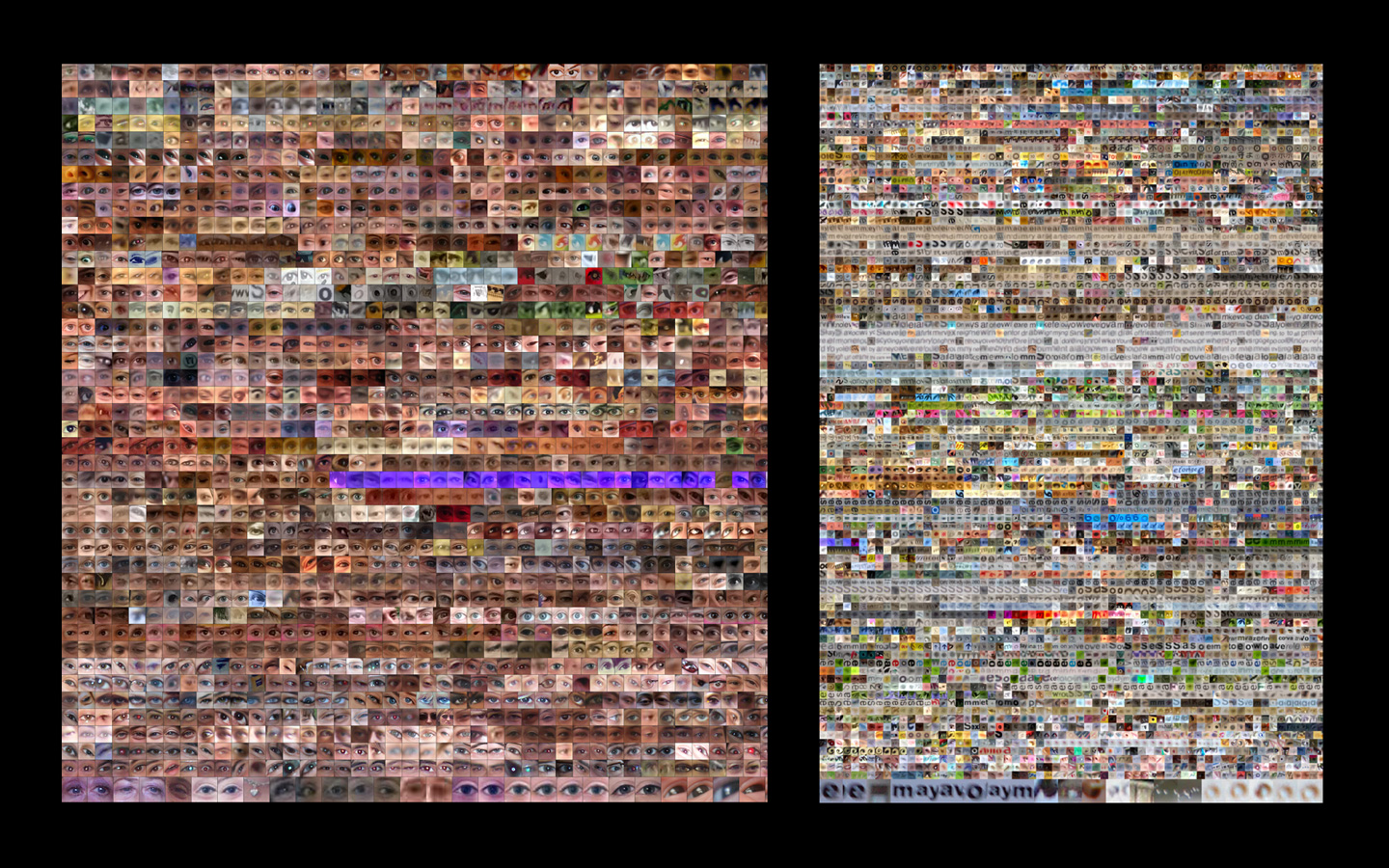

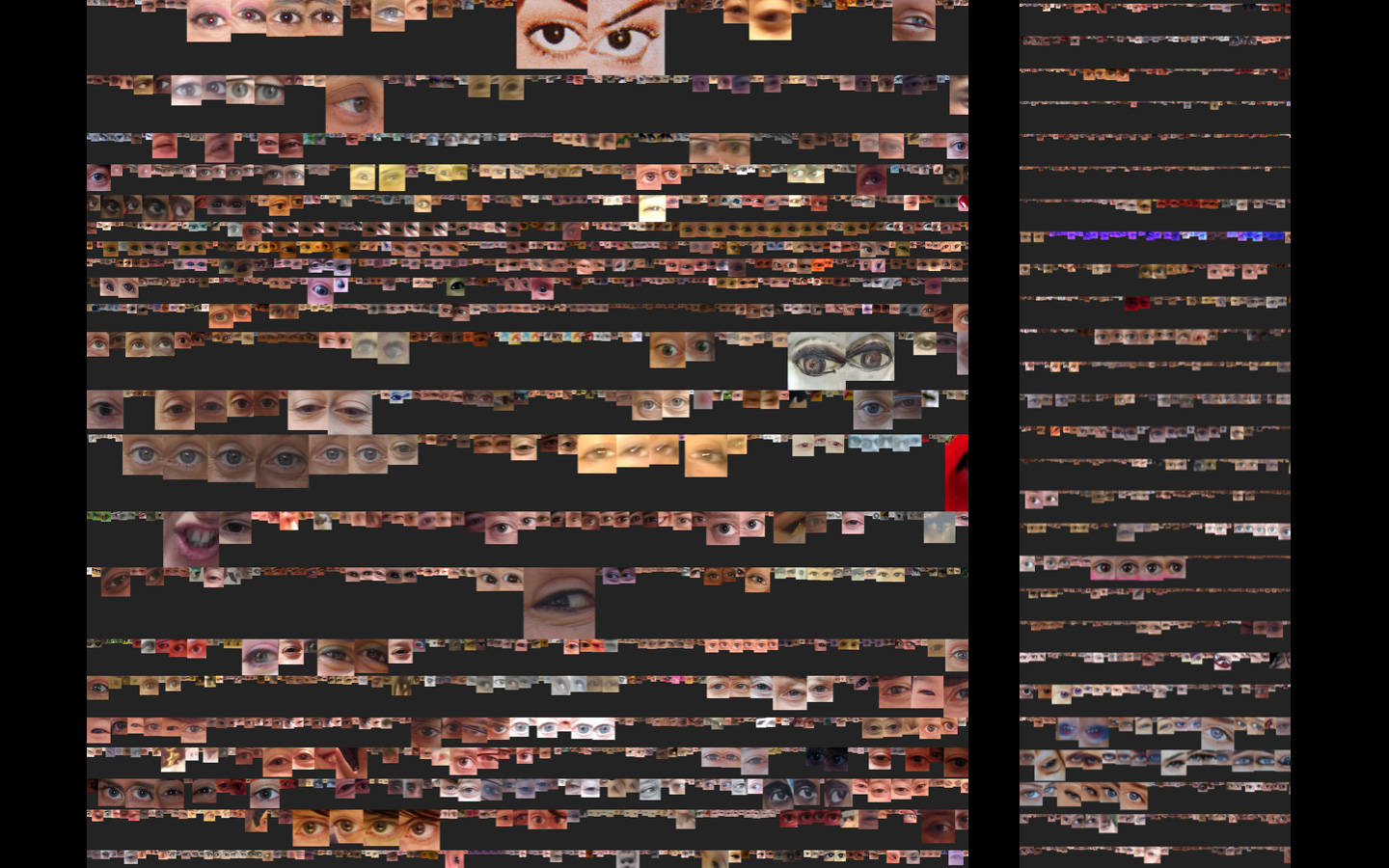

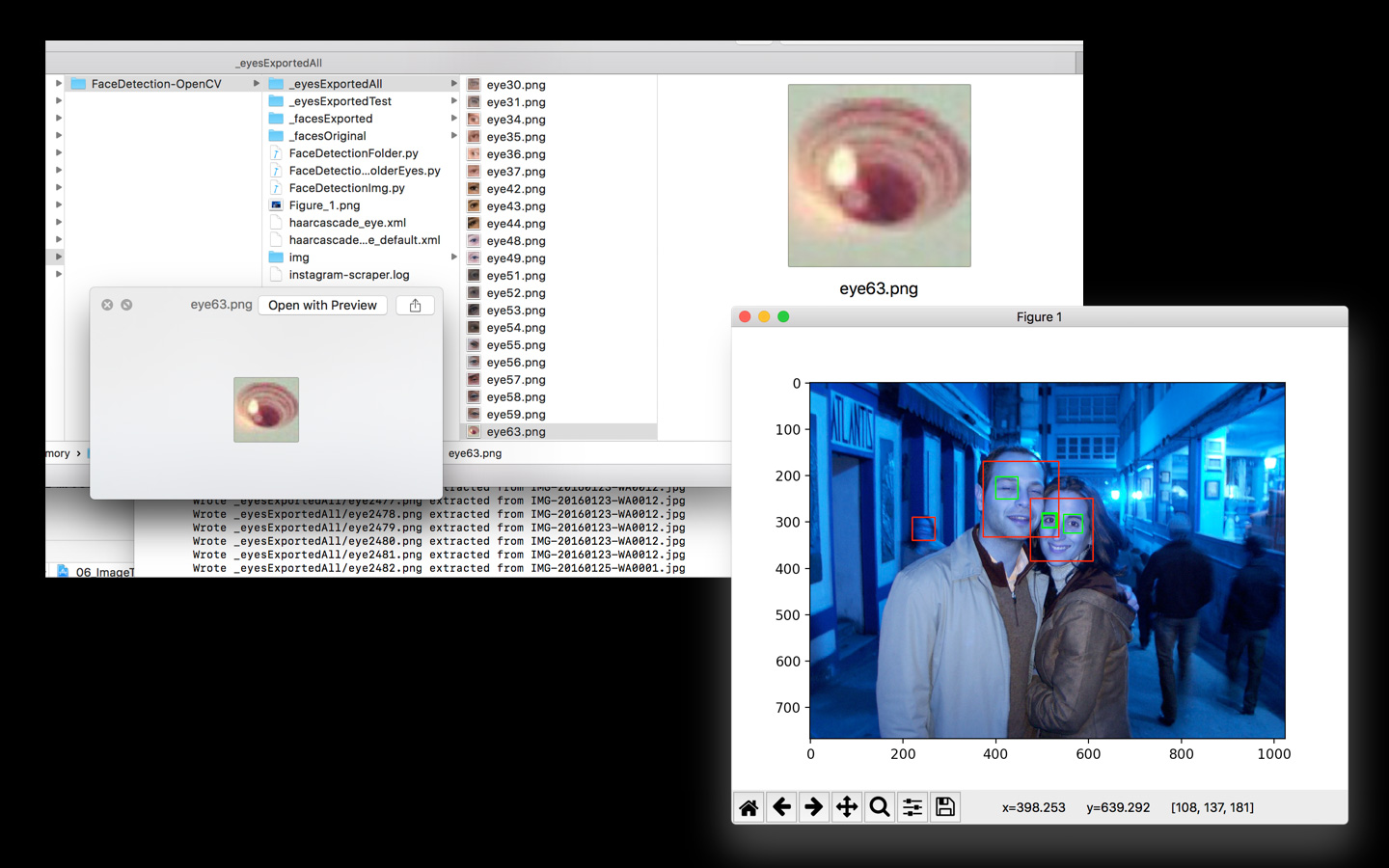

The third component (from now on P3) is a physical representation of memories: translucent, complex. From the beginning, I decided the final outcome would be displayed on a transparent material. The images were created specifically for this medium. The big one represents all the digitally stored images in a grid. One 50x50cm piece represents the images as a cloud. The other 50x50cm piece shows the negatives and positives of an eye detection algorithm… It is really interesting to see how the machine requires adjustments to get accurate results and behaves “similarly” to our brain. The human needs to supervise the computer’s outcome for an optimal result. In this piece, both positive and negative results of the eye detection are displayed. The small one shows an early prototype.

Technical

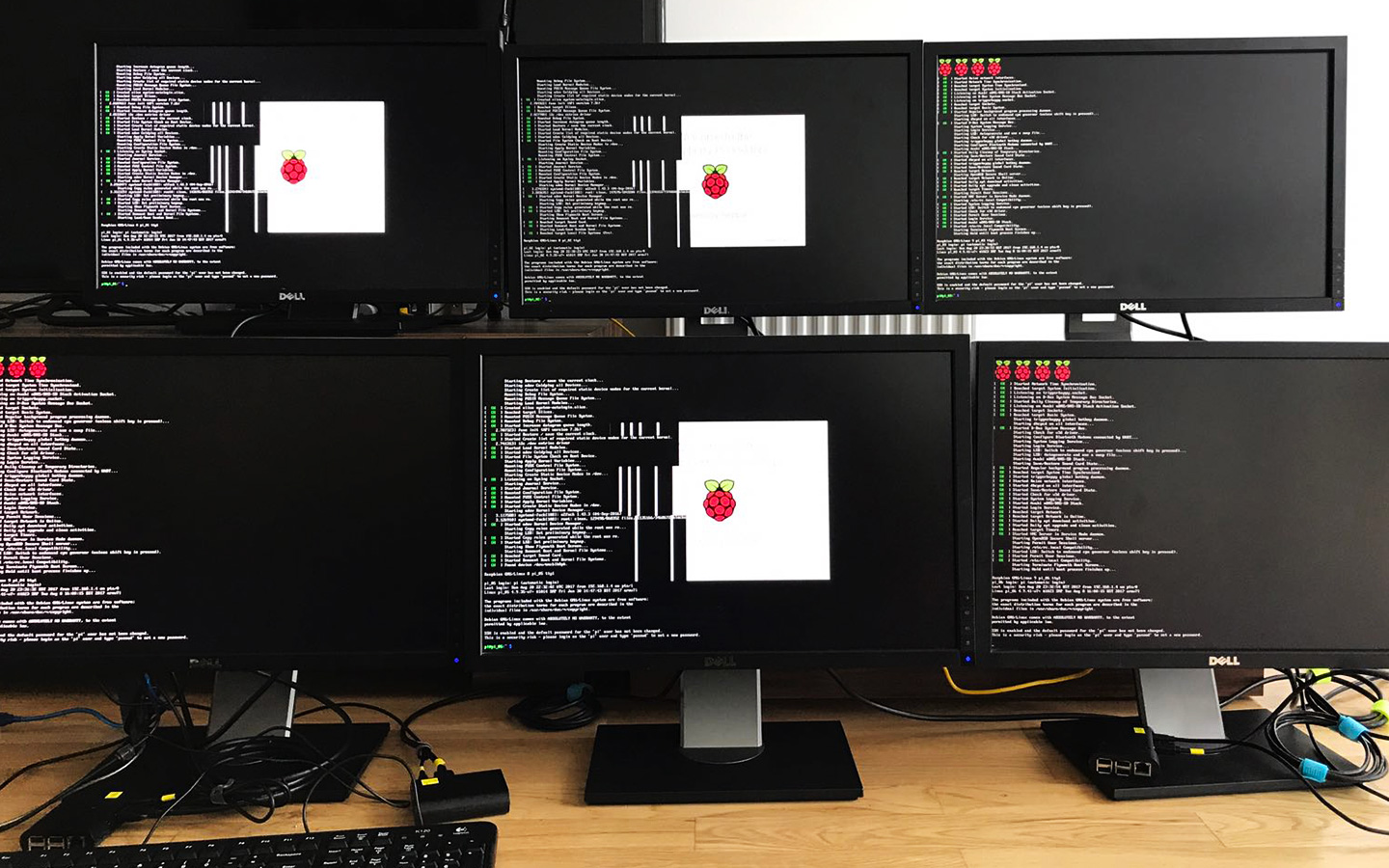

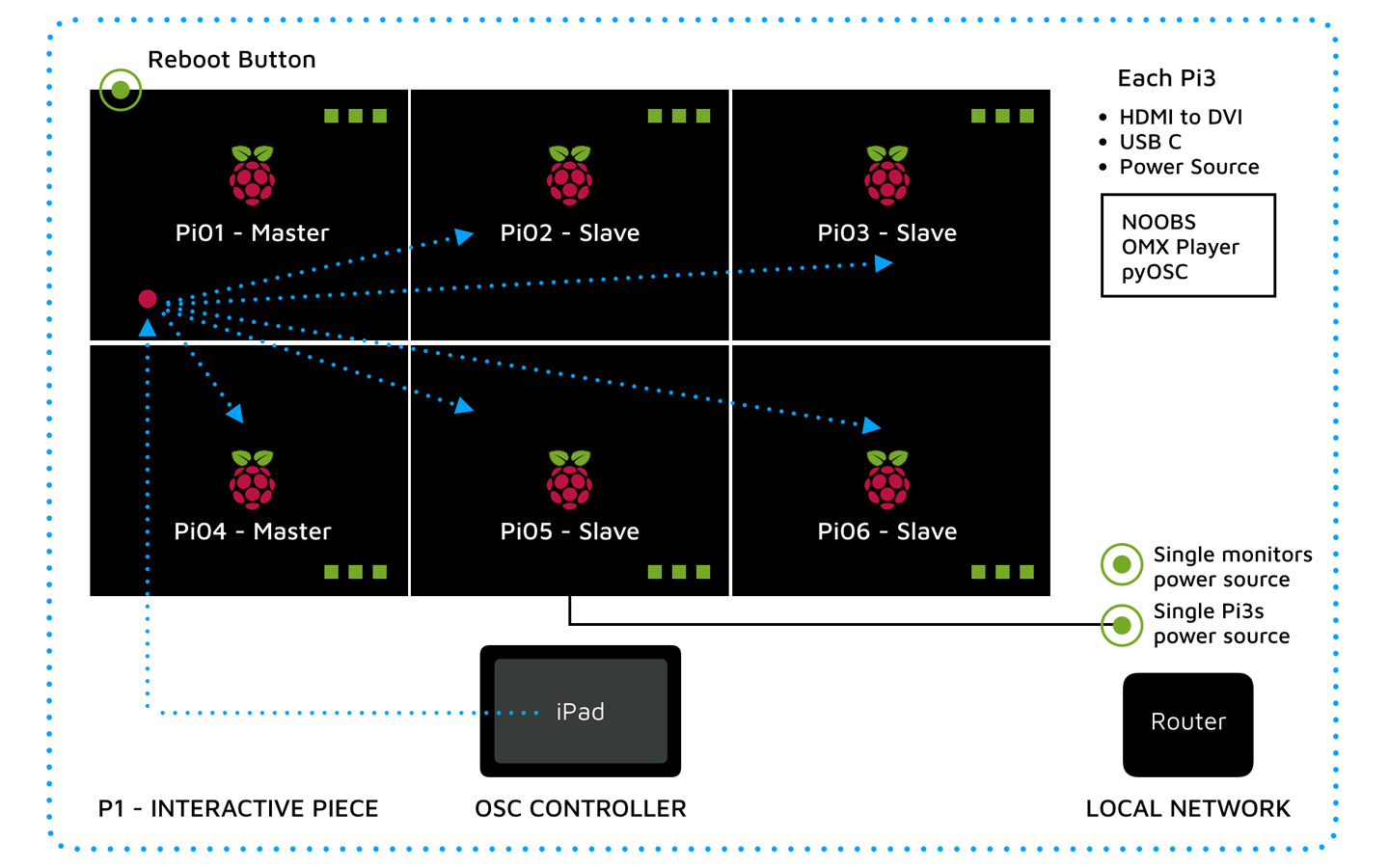

P1 - Interactive

P1 consists of an interactive Pi Wall of six monitors connected together. Interaction with the Pi Wall happens through an iPad over OSC communication. Each of the six monitors is connected to a Raspberry Pi 3. Once each Pi had been installed and set up (Noobs, ….), the following software was added to it: OMX Player and pyOSC… To access each of the Pis conveniently, I used iTerm to control them all in a single view from my laptop.

The installation displays four different animations on each of the six monitors, all recorded as videos, which makes a total of 24 different animations. They are activated with an iPad that sends an OSC message to the first Raspberry Pi. Pi01 behaves as the master and broadcasts the message to the rest. The first Pi also has a physical button to reboot the installation.
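The master and broadcast pattern described above can be sketched in Python with only the standard library. This is a minimal sketch under assumptions, not the installation's code: the `/play` address, the port 9000 and the IP addresses are hypothetical, and the message is encoded by hand following the OSC 1.0 byte layout instead of going through pyOSC.

```python
import socket
import struct

def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC 1.0 requires."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def osc_message(address: str, value: int) -> bytes:
    """Encode an OSC message carrying a single 32-bit integer argument."""
    return _pad(address.encode("ascii")) + _pad(b",i") + struct.pack(">i", value)

def broadcast(packet: bytes, hosts, port: int = 9000) -> None:
    """Forward the same UDP packet to every follower Pi."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for host in hosts:
            sock.sendto(packet, (host, port))

# Hypothetical addresses standing in for Pi02 to Pi06
FOLLOWERS = [f"192.168.0.{n}" for n in range(102, 107)]
```

On the master, a call such as `broadcast(osc_message("/play", 2), FOLLOWERS)` would ask every follower to start its own copy of animation 2. Each follower would then launch the matching video in its player.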

To avoid real-time performance issues, the computation happened beforehand and was recorded as video. In the early prototypes, I installed Processing and Openframeworks to run the animations directly on the Pi, but the processor was not powerful enough. Some of the animations have to process more than 16,000 images, which can take a long time to render. Different techniques were used for the animations:

- Retrieve memories. Processing and shaders to represent the movement. Each photograph is split by colour channel and a black-and-white filter is applied.

- Unveil memories. Openframeworks and tSNE. The animation itself, with its blur and unveil parts, is done in Processing using blend modes.

- Non-digital memories. Processing. Each memory is coded as a separate String. At a specified frame rate, a randomly chosen character is removed, creating new words, until the text disappears completely. The background of this piece is a Perlin noise animation. The frame with the photograph is created with masks.

- Collective memories. Processing, using mask, filter and blend modes. All the images come from Google, selected according to the survey responses and scraped using Python.
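The text erosion used for the non-digital memories can be illustrated with a short sketch. The piece itself was written in Processing; the version below is a Python approximation under assumptions, with hypothetical function names, where each call to `erode` plays the role of one animation step.

```python
import random

def erode(text: str, rng: random.Random) -> str:
    """Remove one randomly chosen character from the text."""
    if not text:
        return text
    i = rng.randrange(len(text))
    return text[:i] + text[i + 1:]

def fade(text: str, seed: int = 0):
    """Yield every intermediate state until the text has disappeared."""
    rng = random.Random(seed)
    while text:
        text = erode(text, rng)
        yield text

# One state per step; the last one is the empty string
frames = list(fade("my first memory"))
```

Drawing one element of `frames` per tick reproduces the effect described: the sentence shortens by one character at a time, passing through accidental new words before vanishing.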

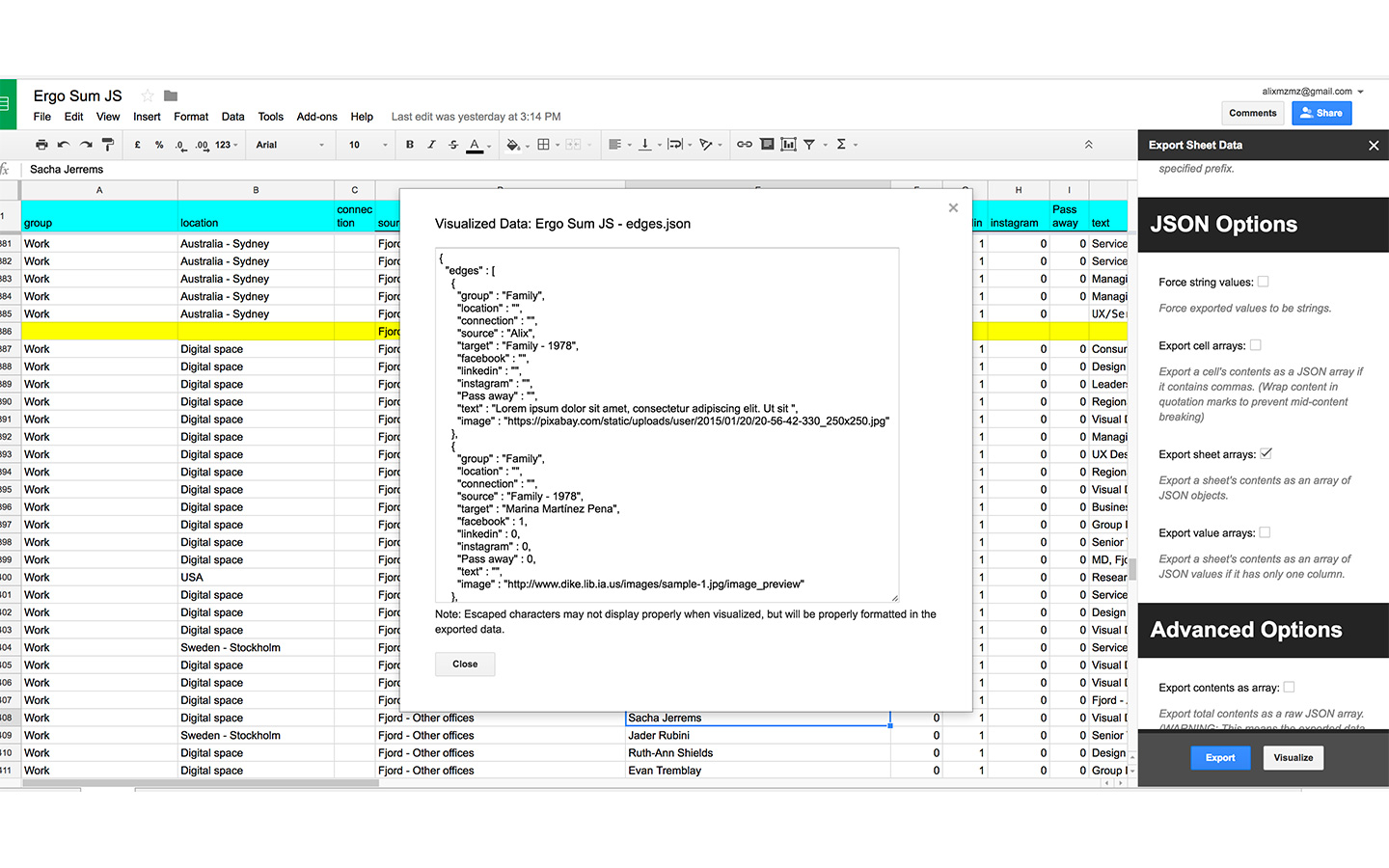

P2 - Ergo Sum

The recorded video uses two different infographic representations created with d3.js, a JavaScript data visualization library. The first is a 2D representation and the second a 3D version. To render the 3D view, I used Three.js on top of d3.js. In both cases, the infographics are rendered by loading a JSON file that includes all the names. In the prototypes, the JSON was stored locally. I then connected the code to an online version stored in Google Drive, so that each time the spreadsheet is updated, the change appears in the rendering.
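As an illustration of the data step, the sketch below turns spreadsheet rows into a nodes and links JSON structure of the kind commonly fed to a d3 force layout. Everything specific here is an assumption: the column names `name` and `period`, the idea of linking each person to a period of life, and the sample rows, which are invented.

```python
import csv
import io
import json

def rows_to_graph(csv_text: str) -> dict:
    """Build a nodes/links structure from rows with 'name' and 'period' columns."""
    nodes, links, periods = [], [], set()
    for row in csv.DictReader(io.StringIO(csv_text)):
        name, period = row["name"].strip(), row["period"].strip()
        if not name:
            continue  # skip blank rows
        if period not in periods:
            # First time this period appears: add it as a hub node
            periods.add(period)
            nodes.append({"id": period, "type": "period"})
        nodes.append({"id": name, "type": "person"})
        links.append({"source": period, "target": name})
    return {"nodes": nodes, "links": links}

# Invented sample rows, standing in for a CSV export of the spreadsheet
SAMPLE = "name,period\nAna,school\nLuis,school\nMarta,work\n"
graph_json = json.dumps(rows_to_graph(SAMPLE), indent=2)
```

Regenerating this JSON whenever the spreadsheet changes would give the behaviour described, where new names show up in the rendering without touching the visualization code.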

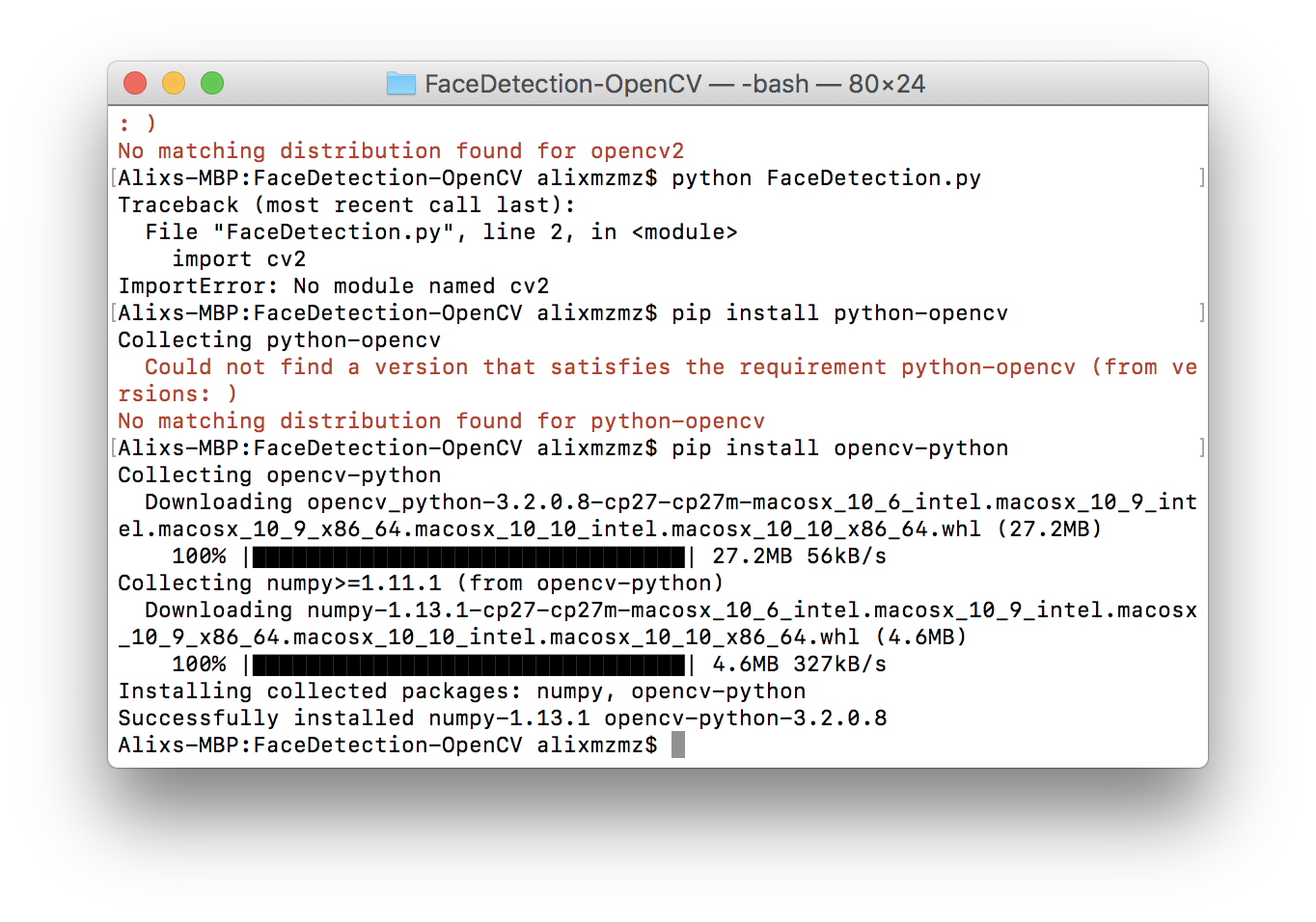

P3 - Physical representation

For these representations, I studied Gene Kogan’s Machine Learning for Artists (ML4A). His work has been an invaluable source of knowledge and inspiration. I tried his different t-SNE prototypes, such as Clustering images with t-SNE, Image t-SNE viewer, Image t-SNE live…, with different configurations and features (Mosaics, Grids…). The grid and cloud printouts display the categorization of all the digital images I have stored in my life. I used Openframeworks and t-SNE.
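For the cloud layout, the 2D coordinates that t-SNE assigns to each image have to be mapped onto the printable canvas. A minimal Python sketch of that mapping follows, assuming the embedding is already computed; the canvas size, margin and sample coordinates are made up for illustration.

```python
def to_canvas(points, width, height, margin=0):
    """Min-max normalise 2D embedding coordinates to pixel positions."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    min_x, min_y = min(xs), min(ys)
    span_x = (max(xs) - min_x) or 1.0  # avoid dividing by zero
    span_y = (max(ys) - min_y) or 1.0
    usable_w, usable_h = width - 2 * margin, height - 2 * margin
    return [
        (round(margin + (x - min_x) / span_x * usable_w),
         round(margin + (y - min_y) / span_y * usable_h))
        for x, y in points
    ]

# Made-up embedding values standing in for t-SNE output
embedding = [(-12.5, 3.0), (0.0, -7.0), (12.5, 13.0)]
positions = to_canvas(embedding, width=5000, height=5000, margin=100)
```

Each thumbnail would then be pasted at its position, so images that the algorithm considers similar end up close together on the print.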

One of the key challenges of these pieces was scaling the prototype up to the final number of files. I collected all the images, which came from very different sources, sizes and resolutions, and unified them so that they shared the same size, resolution and even naming convention. This was a big task in itself.
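Two small helpers illustrate what such a unification step involves. This is a sketch under assumptions, not the workflow actually used: the target size of 256 pixels and the `img_` prefix are hypothetical, and the real resizing would be done by an image tool or library.

```python
def fit_within(width: int, height: int, max_side: int) -> tuple:
    """Scale (width, height) so the longer side equals max_side, keeping the aspect ratio."""
    scale = max_side / max(width, height)
    return max(1, round(width * scale)), max(1, round(height * scale))

def uniform_name(index: int, total: int, ext: str = "jpg") -> str:
    """Zero-padded sequential file name so that files sort in order."""
    digits = len(str(total))
    return f"img_{index:0{digits}d}.{ext}"
```

For example, `fit_within(4000, 3000, 256)` gives the target size for a landscape photo, and `uniform_name(42, 16758)` produces a name padded to five digits. Note that `fit_within` also enlarges images smaller than the target.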

For the printout that represents all the eyes in a grid, I started by extracting all the faces from the images in my library and then moved on to extracting the eyes. I used Python and OpenCV (Face Detection using Haar Cascades). At the bottom of this printout I also included the false positives, images the algorithm wrongly recognised as eyes. I manually supervised the outcome to improve the computational work done by the machine. Like the brain, the machine also makes mistakes and, as in our brain, similar memories are visualized as similar even if they are completely different.
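The two-stage approach, faces first and then eyes inside each face, can be sketched with OpenCV's bundled Haar cascades. This is an illustrative sketch and not the code used for the printout: it assumes the `opencv-python` package is installed, and the `scaleFactor` and `minNeighbors` values are common starting points, not the settings used here.

```python
def to_image_coords(face, eyes):
    """Translate eye boxes found inside a face crop back to full-image coordinates."""
    fx, fy, _, _ = face
    return [(fx + ex, fy + ey, ew, eh) for ex, ey, ew, eh in eyes]

def detect_eyes(image_path):
    """Detect faces first, then search for eyes only inside each face region."""
    import cv2  # requires the opencv-python package

    face_cc = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_frontalface_default.xml")
    eye_cc = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_eye.xml")
    image = cv2.imread(image_path)
    if image is None:
        return []  # unreadable or missing file
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    boxes = []
    for face in face_cc.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5):
        x, y, w, h = face
        eyes = eye_cc.detectMultiScale(gray[y:y + h, x:x + w])
        boxes.extend(to_image_coords(face, eyes))
    return boxes
```

Restricting the eye search to detected faces reduces false positives, but does not eliminate them, which is why a manual review of the crops remains necessary.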

Future development

Me|more|y will be exhibited in October as part of the collective exhibition Mortality-Virtuality. Based on the feedback from viewers, and to adapt it to the new space, I will modify the outcome. I will probably project P2 on a wall at a large scale instead of displaying it on a 50” monitor. Most viewers were very surprised by this piece, and some of them wanted to interact with it and navigate it. Combining this feedback with my original idea of giving viewers the opportunity to add themselves to the dataset, I will work on an online version where users can spend time with the piece.

As an artist, I prefer not to spend too much time on the same theme. I will move on to a new one. The overarching theme of my work is myself and my view of the world, as a way to deal with my existential concerns and heal the injuries of my soul. I choose the pieces based on gut feeling and on the new tools, techniques and technologies I want to learn. For me, the computational aspect is a tool. Code is the material I use to create experiences to express myself.

Self evaluation

Thanks to all the people who answered the questionnaire. The responses were very varied and inspiring. People got back to me saying they had never reflected on such deep questions. They were very touched by reflecting on such matters.

Someone described the room as being in the brain of a person, complex, overwhelming… This was a big achievement.

Growth and learning come through pain and facing challenges, and we should embrace those. They will make the next piece better. Reconciling work with the creation of the project was the biggest challenge. Not having time to access the lab influenced my decisions about the outcomes and their construction: I built a wooden structure to frame the monitors. With more time I would have built a metal one.

Collaboration. Not being able to do everything by myself was a big frustration. I could not drill the ceiling even with a ladder, or lift the monitors once they were assembled together. Luckily I had help with these tasks from colleagues and from Pete (our incredible technician).

Space. Access to the space was limited, and the room itself was in a very poor state. It took a full day to restore the window, half a day to cover the holes and another day to paint it, all before I could set up my piece. I kept the screens and materials at home until the very last minute, testing the piece there at night.

Test in the final space. When I arrived at the church I faced connectivity issues, but I overcame them by creating a local network using my home router.

Scale. Running the code and processing more than 16,000 images was quite a challenge. tSNE with Openframeworks on that number of files was very slow, taking up to a couple of hours to render. Python was a faster and better solution.

What would I do differently? Perhaps create explanatory labels. People were amazed when I explained the project. Without the explanation, some of the magic was lost.

Staying committed to the original concept and outcome was another challenge and, at the same time, one of my principal aims. I pushed myself to be very strict in this sense. I believe this is one of the big successes of this project. The points above are great lessons that I will bring to future work. The biggest achievement and gratification was the feedback from the viewers and the number of questions I received.

References

- Art and Memory

- Troika: Hardcoded memory

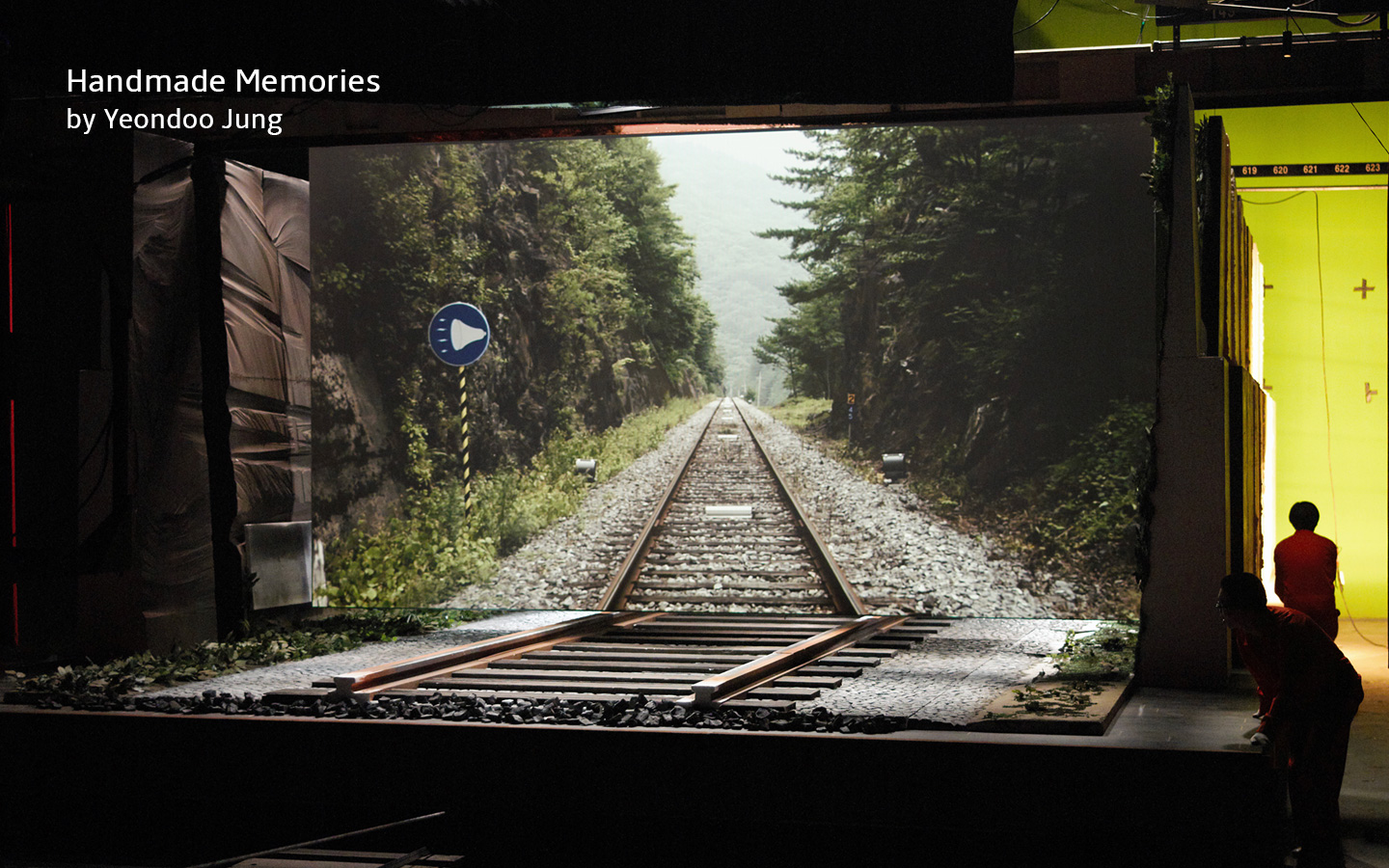

- The elderly share their most vivid memories; Jung recreates the scene.

- Hungarian digital artist David Szauder explores the imperfect nature of human memory by glitching old photographs.

- One second every day

- Music Memory Box helps dementia sufferers recall their past

- Books

- Memory: A Very Short Introduction (Very Short Introductions) by Jonathan K. Foster

- Contemporary Art and Memory: Images of Recollection and Remembrance by Joan Gibbons

- Memory - Documents of Contemporary Art: Memory Edited by Ian Farr

- The Pillow Book of Sei Shonagon by Sei Shonagon

- The Curator’s Handbook: Museums, Commercial Galleries, Independent Spaces by Adrian George

- Articles online

- Your brain stores imagination and memory separately

- Snapchat never forgets with new Memories feature

- Alzheimer’s disease and dementia: what’s the difference?

- Scientists use AI to ‘rewrite’ painful memories in people’s brains

- Design and the Elastic Mind

- Artist’s Memory Loss fuels discoveries about the brain

- Technical References

- Video wall

- OMX Player - Install Wrapper

- Installing Pi

- How to shut down or restart your Raspberry Pi properly

- Install Openframeworks in Raspberry Pi

- Raspberry Pi and OSC

- Batch Resize Images Quickly in the OS X Terminal

- D3.js

- Force Layout with Mouseover Labels

- Multi-dimensional D3 force simulation

- Exporting a Google Spreadsheet as JSON

- Three.js

- Gene Kogan’s work and guides.

- ofxCcv

- ofxTSNE

- Face Detection using Haar Cascades - Face Detection OpenCV

- Object Detection: Face Detection using Haar Cascade Classfiers

- Face Detection on Your Photo Collection in Python

- Design and Look and feel

- Typography: Typo Pro Amble and Cooper Hewitt

- d3 Ergo Sum Background image (edited): Mark Asthoff

- Exoplanets

- Sound

I would like to show my gratitude to my colleagues Howard Melnyczuk, Amy Cartwright and Arturas Bondarciukas for the documentation work they did and shared, and to the Goldsmiths lecturers and technicians who helped through the process and realisation.