Jittery

Jittery is an interactive sculpture that uses the movements and sounds of servo motors to capture the visceral repercussions of the anxious Asian body. I use my own history of anxiety around Asian racial politics in the United States as a vector for a larger narrative on the human condition, on being hidden and being seen, and, on a more intimate level, on the anxiety of navigating the world as an Asian immigrant.

produced by: Timothy Lee

Introduction

"Jittery" is an interactive sculpture that explores my anxiety around Asian racial politics in the United States, particularly the societal expectation that the Asian American community be demure and/or non-confrontational. The work responds to the proximity of the viewer by emitting hostile, jittery sounds and movements, as if to confront the violation of its space. My interest in using the movement and sound of servo motors to portray this anxiety and shift in behavior stems from working with servo motors in the Physical Computing courses at Goldsmiths. I was inspired by kinetic works by artists such as Daniel Rozin, whose animated sculptures combine many individual motions into a collective movement, and I wanted the same kind of animation in my own work. In playing around with the circuitry and corresponding behaviors of servos, I loved the visceral sounds they created and the often jittery effect they could display - the title of the work is derived from this behavior. The activation and deactivation of the painting based on the viewer's engagement with it mirrors my anxiety, which comes and goes, particularly in relation to society's longstanding assumption that the Asian community is invisible.

Concept and background research

Beyond the personal references of this work, its origin lies in an interest in creating a likeness of oneself computationally, robotically, or algorithmically. Since Matthew Turk and Alex Pentland developed the first near-real-time facial tracking software at the Massachusetts Institute of Technology in 1991 (Turk & Pentland, 1991), there has been an obsession with the ability to track and record our faces and tie them to our identity; indeed, the association between our face and our identity has been solidified by social constructs, cultural influences, and the institutions we live in (Cole, 2012).

I am interested in the relationship between a person’s face and their sense of self, and I resonate with works by artists and activists who contemplate not only the social but also the political implications of tying our faces to our identities. Artist and specialty mask maker Kamenya Omote produces three-dimensional masks of strangers’ faces to sell (Hyperallergic, 2020). These realistic masks are made by printing onto a three-dimensional form created from the model’s face. Omote’s “That Face” series tunes into society’s preoccupation with our likeness, and creates masks in an era where you can, through plastic surgery, literally buy and sell a face. Industrial designer Jing-Cai Liu developed a wearable projector that projects different faces onto the wearer’s own face as a response to commercial mass surveillance. The project explores themes of a dystopian future, provoking debate about the “emerging future” and proposing ways to maintain the privacy of our identities (Liu, 2017). By superimposing another person’s face on top of the wearer’s own, Liu claims, the wearer’s identity is guarded and they are protected from “privacy violations” (Liu, 2017).

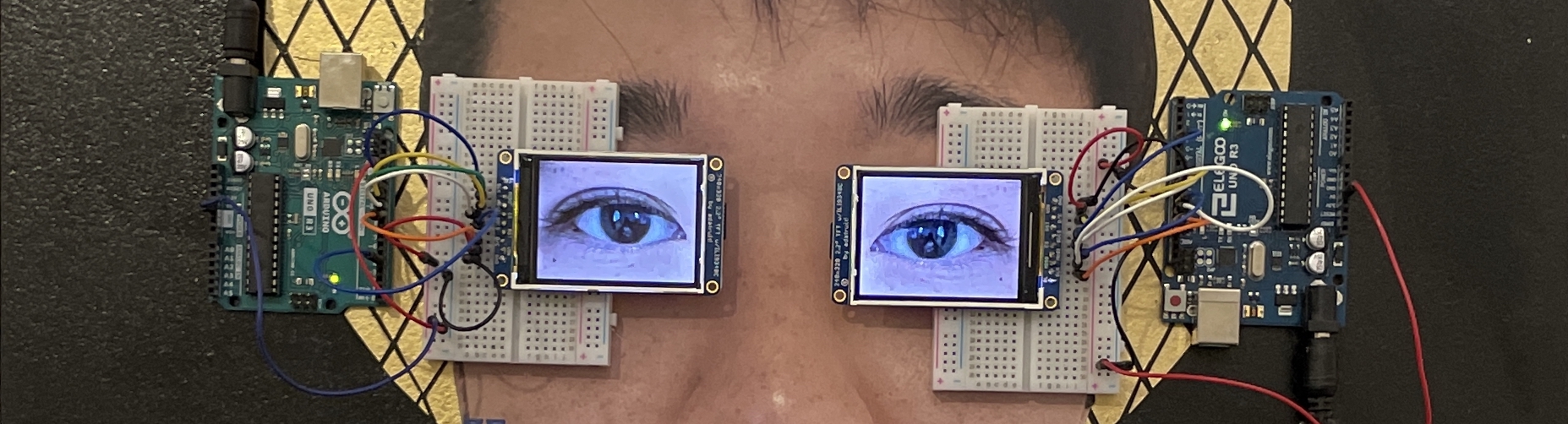

I chose to create this self-portrait computationally because I find it interesting to explore the capabilities, and limitations, of physical computing as a method of expressing abstract human experiences. The inspiration to convey my anxiety through computation came from listening to the frantic rotations of servo motors – they sounded like a chaotic nest of chirping birds, and the sound encapsulated the sensation of the jitters I experience during a panic attack. This human-machine interaction became a point of interest for me, and I chose to replicate human conditions through physical computing. In particular, I fixated on the digital display of the eye – often described in cliché as the window to the soul, but here reproduced as a .bmp file. The eyes and the mouth are also signifiers of identity; as an Asian American, I paid particular attention to these facial cues because of society’s preoccupation with the Asian silence and the Asian eye. The screens suggest windows to somewhere – but what is shown is a never-changing captured moment of an anxious gaze. Ultimately, the concept of my project materialized into a hybrid painting-sculpture because there are parallels to be drawn between the scrutiny paintings undergo from our gaze and the gaze with which the Asian American community is often looked at.

Technical

In developing the technical side of the artwork, there were three milestones that needed to be achieved: to display an image of my eyes on the two LCD screens, to program the servos to move in a waveform, and to create interactivity in the piece whereby it responds to the distance of the audience.

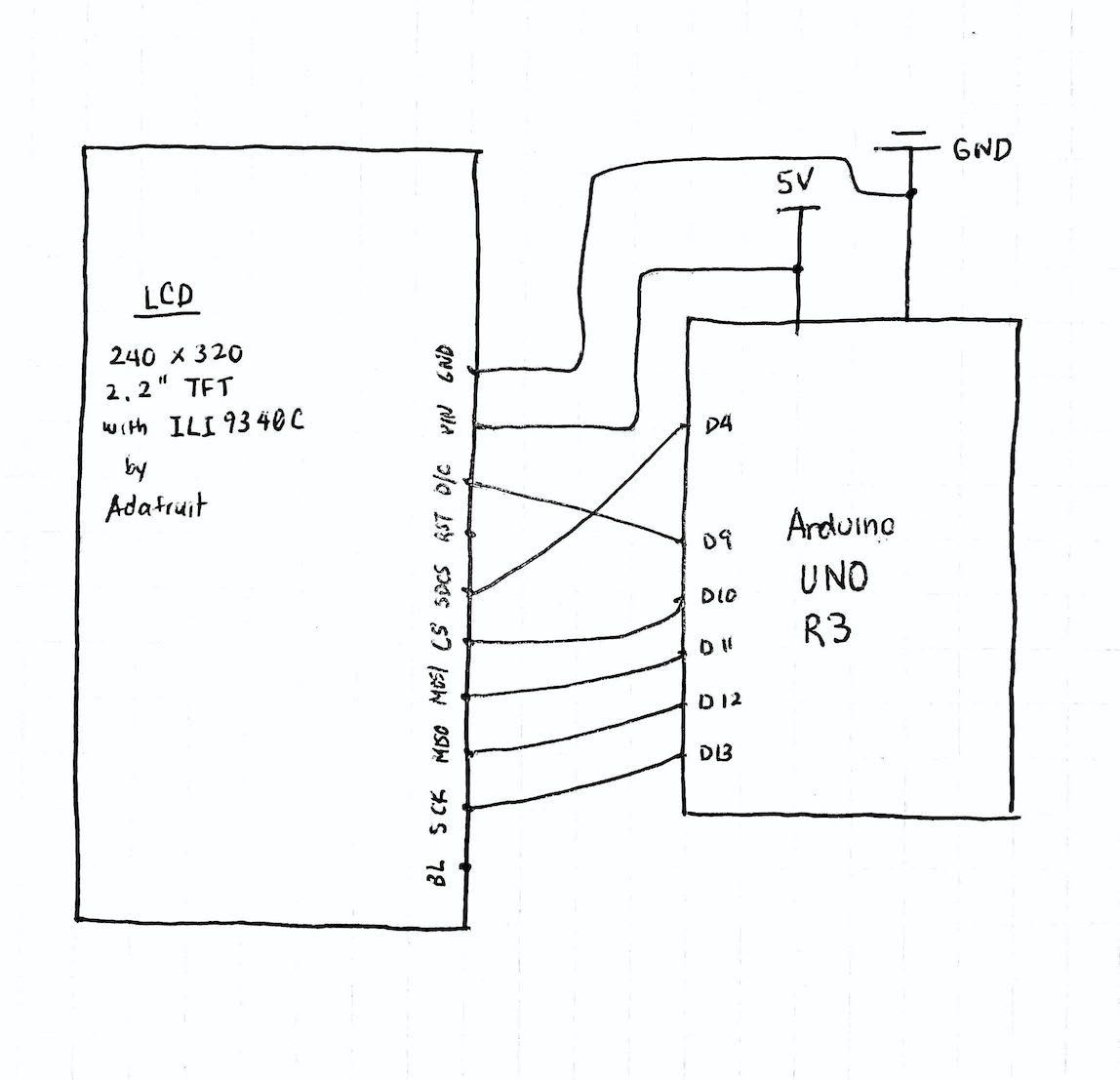

Displaying the image on the LCDs was fairly straightforward - I used a circuit reference from Adafruit's resource site to wire my Arduino UNO R3 to a 240 x 320 2.2" TFT w/ ILI9340C LCD shield. Each shield, one for each of the two eyes, was attached to a separate Arduino and wired identically. I then converted a photograph of my eye into a .bmp file and displayed it on the LCD by loading it from the micro SD card on which it was stored. In adjusting the code, I opted to load the image in setup() rather than loop() because of how the LCD redraws: whenever the image was reloaded during an iteration of loop(), there was a flash of a blank white screen that disrupted the eeriness of a constant gaze. Likewise, because the image is drawn from the top down, it was awkward to switch between images of open and closed eyes to simulate blinking. In the end, I opted for a static image that suggests a continuous, unbroken stare from the painting to the audience. Loading the image only once in setup() also sidestepped the technical challenge of communicating between two separate Arduinos. It would have been possible to initiate communication by attaching a sensor to one Arduino that the other could respond to, but in the end, this computation would have had no effect on the end result of the work.

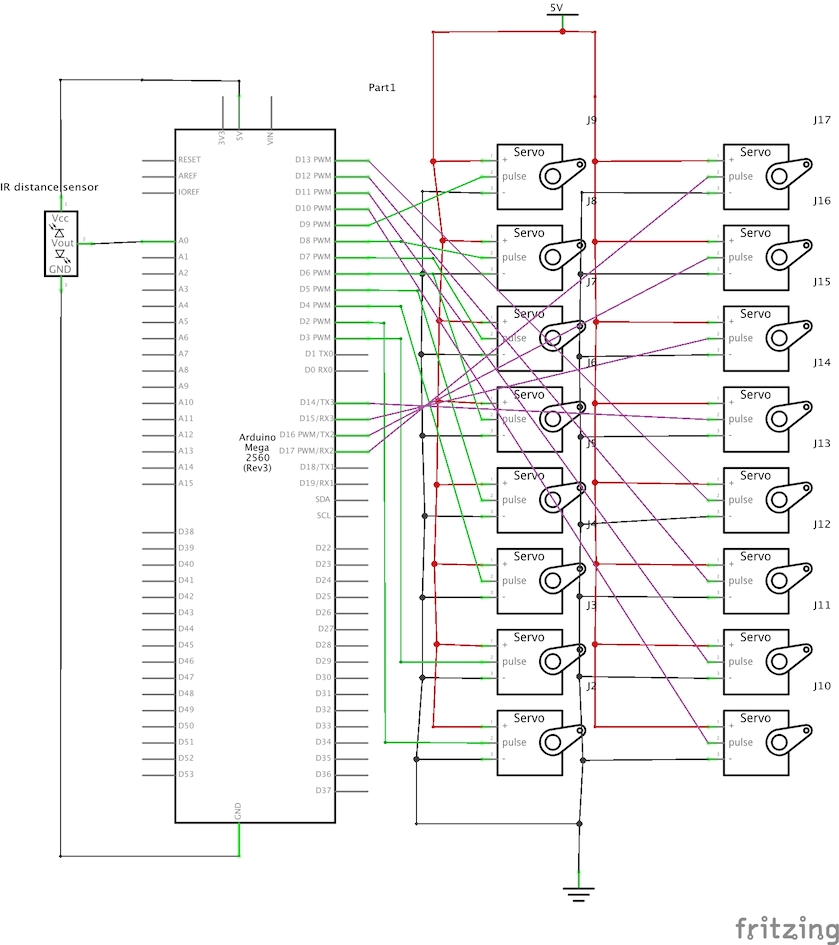

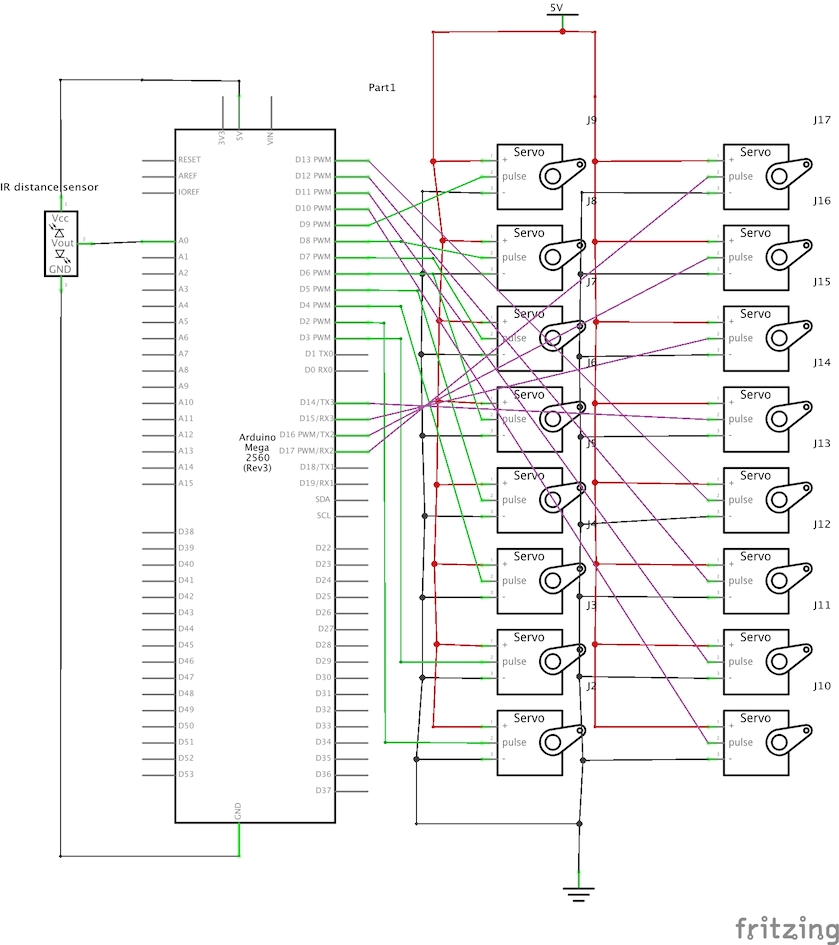

For the servo movements and the interactive component of my work, I used an Arduino Mega to connect 16 9g micro servos as well as an IR distance sensor. Because of the collective current that the servos draw, I chose to wire the servos to an external DC power supply (5V, 20A) and powered the Arduino Mega separately. Because I had originally built and wired my piece in New York, I had to adjust the wiring when presenting in London. In doing so, I had to work through the distinction between ground and earth: grounding provides a return path for the current, whereas earthing discharges electricity into the earth. As a result, I connected a new wire from my external DC power supply to a GND pin on the Arduino Mega. Because the servos and the Arduino are powered separately, they need this common ground so that the signal coming out of the Arduino has a shared reference and the circuit is connected properly.

In programming the behavior of the servos, I wanted them to rotate in a waveform across the horizontal plane. I positioned the servos in columns, and programmed all the servos within each column to rotate 45 degrees, one column after another. The end result is an illusion of a wave - the servos rotate in a smooth movement from the left side of my face to the right side. The interactivity of the piece comes from the communication between the servo motors and the IR proximity sensor. The sensor is connected to the A0 analog input on the Arduino Mega, and measures the distance in cm between itself and an object in front of it with a fair degree of accuracy and consistency. I created a conditional statement whereby if the viewer is less than 90cm from the IR sensor, each servo rotates to a random angle between 0 and 90 degrees; otherwise, the servos continue rotating across the columns in a wave. I decided on 90cm because that is, for most people, the distance within which personal space begins to feel invaded. I wanted the servos to respond violently and in a confrontational manner when the artwork's personal space was violated.
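The two servo behaviors described above can be sketched as plain C++ functions that compute a target angle for each servo. This is a minimal sketch under stated assumptions, not the code running in the piece: the 4 x 4 grid, the resting angle of 0 degrees, the choice to raise one column at a time, and every function and constant name are hypothetical. Only the 16 servos, the 45-degree wave rotation, the 0-90 degree random range, and the 90cm threshold come from the description. On the Arduino, the distance would come from the IR sensor and the resulting angles would be written out to the servos.

```cpp
#include <array>
#include <cstddef>
#include <random>

// Assumed layout: 16 servos as 4 columns of 4. The text gives only the
// total count, so the grid shape is hypothetical.
constexpr std::size_t kColumns = 4;
constexpr std::size_t kRows = 4;
constexpr std::size_t kServos = kColumns * kRows;

constexpr int kNearThresholdCm = 90;  // from the text
constexpr int kWaveAngleDeg = 45;     // from the text
constexpr int kMaxRandomDeg = 90;     // from the text
constexpr int kRestAngleDeg = 0;      // assumed resting position

using Angles = std::array<int, kServos>;

// Calm state: the column selected by `step` is rotated to the wave angle
// and every other column rests, so advancing `step` moves the raised
// column from left to right.
Angles waveFrame(std::size_t step) {
    Angles angles{};
    const std::size_t active = step % kColumns;
    for (std::size_t col = 0; col < kColumns; ++col) {
        for (std::size_t row = 0; row < kRows; ++row) {
            angles[col * kRows + row] =
                (col == active) ? kWaveAngleDeg : kRestAngleDeg;
        }
    }
    return angles;
}

// Hostile state: every servo gets an independent random angle in
// the inclusive range [0, kMaxRandomDeg].
template <typename Rng>
Angles jitterFrame(Rng& rng) {
    std::uniform_int_distribution<int> dist(0, kMaxRandomDeg);
    Angles angles{};
    for (int& angle : angles) {
        angle = dist(rng);
    }
    return angles;
}

// The conditional from the text: closer than 90cm means jitter,
// otherwise the wave continues.
template <typename Rng>
Angles nextFrame(int distanceCm, std::size_t step, Rng& rng) {
    return (distanceCm < kNearThresholdCm) ? jitterFrame(rng)
                                           : waveFrame(step);
}
```

Calling nextFrame once per loop iteration with an increasing step counter would produce the wave while the viewer is far away and switch to random angles as soon as the measured distance drops below 90cm. Keeping the decision separate from the hardware calls makes the behavior easy to check without the servos attached.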

Bringing it all together, the wiring of the artwork was challenging because there were a lot of wires to keep track of and connect. I tidied my wires as best I could and joined them to other relevant lines using wire nuts, to ensure everything was clean and insulated. The wiring around the LCD displays was delicate; shaking the work would often cause a screen to go blank, at which point the Arduino would need to be reset to resolve the issue.

Future development

In developing this work further, I would love to create this piece as a series, with servos that act independently within each work but also in sync with the rest of the works. Multiple identical iterations of this piece, differing only in the portrait shown, would highlight concerns about the Asian American community as a whole and remove the spotlight from individual experiences. In depicting my own face in Jittery, I wanted my personal experiences to begin a conversation about anxiety and identity, and to become vectors for social narratives. Ultimately, I want my works to generate a larger, more universal conversation about societal expectations of communities and the anxiety that stems from one's resistance to conform.

Self evaluation

Ultimately, this project allowed me to explore physical computing as a way to convey abstract human conditions such as anxiety and its emotional mannerisms. Reflecting on my research and the process, I wish I could have created more works in the series to present in the final exhibition. I believe the impact of the interaction between my work and the audience would have been amplified by the number of works present - a group confrontation rather than an individual one. Unfortunately, I was limited by what I could physically bring with me to London because I had spent the past year studying completely remotely in New York. In addition, I would smooth the switch between the waveform "normal" behavior of the servos and the random "hostile" one, so that there is a more gradual transition between the two states. This could emerge through having more and more servos act randomly as the audience member gets closer to the work. That said, I was pleasantly surprised by the sounds that the servo motors made and how accurately they captured the two emotional states of the piece.
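The gradual transition suggested above could be sketched as a function that maps the viewer's distance to the number of servos behaving randomly. This is only one possible approach and has not been built: the linear ramp, the 30cm distance at which every servo jitters, and all names are assumptions for illustration. The 16 servos and the 90cm threshold are taken from the existing piece.

```cpp
#include <algorithm>

constexpr int kServoCount = 16;  // from the text
constexpr int kFarCm = 90;       // existing threshold from the text
constexpr int kNearCm = 30;      // hypothetical: all servos jitter here

// Returns how many servos should act randomly for a given distance.
// At or beyond kFarCm none do; at or inside kNearCm all do; in between
// the count rises linearly (rounded down) as the viewer approaches.
int jitteringServoCount(int distanceCm) {
    const int clamped = std::max(kNearCm, std::min(distanceCm, kFarCm));
    return kServoCount * (kFarCm - clamped) / (kFarCm - kNearCm);
}
```

With these assumed values, a viewer at 60cm would set half of the servos jittering while the rest continue the wave, so the hostile state builds up instead of switching on at once.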

References

Bibliography:

Cole, S. A. (2012). The face of biometrics. Technology and Culture, 53(1), 200-203. Retrieved from https://gold.idm.oclc.org/login?url=https://www-proquest-com.gold.idm.oclc.org/scholarly-journals/face-biometrics/docview/929039973/se-2?accountid=11149

Hyperallergic. (2020). Japanese Shop Sells Hyperrealistic 3-D printed face masks. Retrieved from https://hyperallergic.com/610180/japanese-shop-sells-hyperrealistic-3d-printed-face-masks/

Liu, J.-C. (2017). Wearable Face Projector. Retrieved from http://jingcailiu.com/wearable-face-projector/

Simon, B. (2004). Identity in Modern Society: A Social Psychological Perspective. Oxford: Blackwell.

Turk, M. & Pentland, A. (1991). Eigenfaces for Recognition. Journal of Cognitive Neuroscience, 3(1), 71-86. Retrieved from https://doi.org/10.1162/jocn.1991.3.1.71

Code/Circuit References:

https://www.toppr.com/guides/physics/difference-between/earthing-and-grounding/

https://learn.adafruit.com/2-2-tft-display/adafruit-gfx-library

https://github.com/adafruit/Adafruit_ImageReader

https://github.com/adafruit/Adafruit_ILI9341

https://learn.adafruit.com/adafruit-mini-tft-0-dot-96-inch-180x60-breakout/drawing-bitmaps

https://www.avrfreaks.net/sites/default/files/forum_attachments/exmaplesketch.txt

Physical Computing I & II modules taught by Jesse Wolpert

Special Thanks to Robert Hall for his guidance