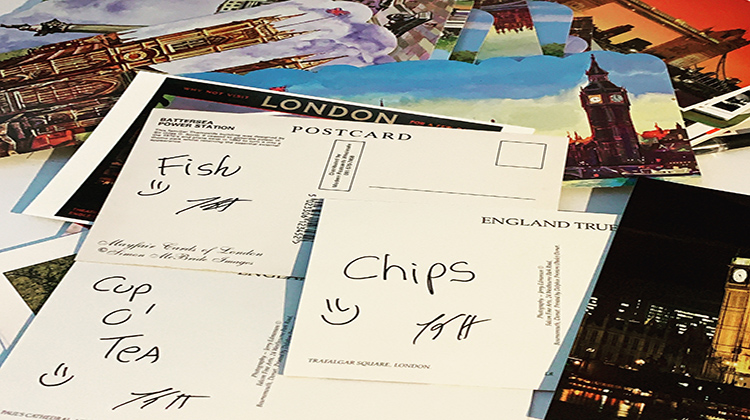

Fish, Chips, Cup O'Tea

"Yes, London. You know: fish, chips, cup 'o tea, bad food, worse weather, Mary fucking Poppins... LONDON." (Snatch, Guy Ritchie, 2000).

produced by: Kris Cirkuit

Introduction

"We all have a story to tell. Do we tell the truth? To whom do we tell what? Which version of events is relayed to the listener? Is all narration reliable, or are we all unreliable narrators?

Background and concept

I aimed to create a performance in which the audience helps create an interactive narrative that is unlikely ever to be repeated. I wanted to offer a unique insight into my journey of living in London, and then leaving it after 25 years. Each member of the audience is given a postcard of London. On the back of each postcard is one of three words: "Fish", "Chips", or "Cup O'Tea". The postcards are not distributed equally: "Cup O'Tea" is far more common than "Fish", and "Chips" is moderately likely. The postcards I draw determine how my performance unfolds, so the piece is generated both by human interaction and by computational processes in Max.
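To make the uneven distribution concrete, here is a minimal Python sketch of drawing from such a deck. The counts are hypothetical: the blog only says that "Cup O'Tea" is far more common than "Fish" and that "Chips" is moderately likely. This illustrates the idea and is not the Max patch used in the performance.

```python
import random
from collections import Counter

# HYPOTHETICAL counts: the blog only says "Cup O'Tea" is far more common
# than "Fish" and that "Chips" is moderately likely.
DECK_COUNTS = {"Cup O'Tea": 30, "Chips": 15, "Fish": 5}


def build_deck(counts):
    """Expand a {label: count} mapping into a flat list of postcards."""
    return [label for label, n in counts.items() for _ in range(n)]


def draw_postcards(counts, n_draws, seed=None):
    """Shuffle the postcards and draw n_draws of them without replacement."""
    deck = build_deck(counts)
    if not 0 <= n_draws <= len(deck):
        raise ValueError("n_draws must be between 0 and the deck size")
    rng = random.Random(seed)
    rng.shuffle(deck)
    return deck[:n_draws]


if __name__ == "__main__":
    draws = draw_postcards(DECK_COUNTS, n_draws=6, seed=1)
    print(draws)
    print(Counter(draws))
```

With these assumed counts, the first card drawn is "Cup O'Tea" with probability 30/50 = 0.6 and "Fish" with probability 5/50 = 0.1. Drawing without replacement mirrors physical postcards: once a card has been drawn, it cannot come up again.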

Some of the tales I tell will be true; some will be lies. Most people will not know which is which. I will also lie about whether the postcards matter: in one scene, it makes no difference which postcard I draw, because the result is always the same.

"Fish, Chips, C'Tea" comes with a trigger warning regarding noise, flashing images, potentially upsetting images, and strong language. This is not a work for the faint-hearted to experience. It is, however, necessary- this is what the work needs to fulfil my conceptual ambition.

The artists Ryoji Ikeda and Ryan Jordan were major influences on my final piece. I love the work of both, and what I find most inspiring about it is its boldness. They produce brave work that can be very intense but benefits from that intensity. Both artists can be very loud, and again I love the near-impoliteness of that. Art, in my view, is often perceived as needing to be quiet, like the hush of a white cube gallery. I chose to disrupt that quiet narrative. Below are links to some of my favourite works by Ryoji Ikeda and Ryan Jordan.

http://www.youtube.com/watch?v=cT6llBCtY3Y&ab_channel=FACTmagazine

http://www.youtube.com/watch?v=rLQfsniCA8I&ab_channel=HorseyofGormenghast

I wrote most of the music in the piece. I generated some of the sonic material in Max and wrote other tracks in Ableton. I deliberately wrote some of the music in Ableton, rather than using generative processes, to create a specific atmosphere. Using Ableton, I produced high-quality tracks that complemented the piece, and I plan to release these songs on my label.

I did not write all of the music, but I received written permission to use all of the copyrighted material. The songs, and the attitude behind them, were a significant influence on my final work.

In one scene, I talk and shout about my life. I did not write these lyrics: they are from the song "Frustration" by The Restarts. I messaged Kieran from The Restarts to ask for permission to use the lyrics, and he agreed in writing.

In another scene, I talk about regeneration: the destruction of communities to make way for luxury flats and chain stores, and the massive social inequality present in London. As I was filming this scene, the song "Sick of Capitalism" by Chris Liberator and Stirling Moss ran through my head. I wrote to Chris to ask for permission to use a sample, and he granted it in writing.

Links to both of the works mentioned above are located in this blog's "References" section.

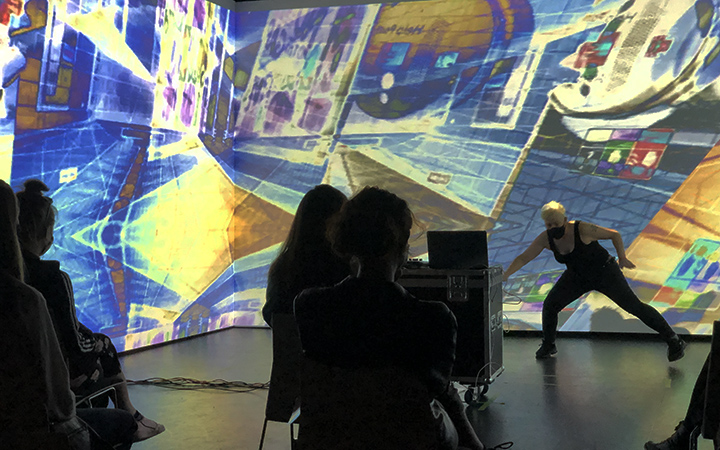

More conceptually, the work plays with the notion that the audience is also a performer. The audience co-creates the story with me, so each performance is a unique journey that we take together. For a short time, it builds a small community in the space. I love the mystery of the performance: even I do not know precisely what will happen!

As the performance is never quite the same, I have added a gallery of additional photos at the end of the blog.

Technical

The Code

The code is written in Max. I split the work into several modules for clarity and ease of use, so that each part can easily be altered for further development or another performance. To use the available computing power effectively, I also split the processing: the video runs in Gen and is therefore processed predominantly on the GPU. I had never worked with Gen before, so learning this part of Max and learning to write shaders took considerable effort and research.
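Gen video code (for example in jit.gl.pix) is written in Max's own Gen environment, not in Python. The following is therefore only an illustration of the per-pixel arithmetic that a saturation and contrast shader typically performs. The Rec. 709 luma weights and the exact formulas are assumptions, not taken from the patch used in the performance.

```python
# Rec. 709 luma weights (an assumption; other weightings also work).
LUMA_WEIGHTS = (0.2126, 0.7152, 0.0722)


def clamp01(x):
    """Clamp a value to the 0..1 range, like GLSL clamp(x, 0.0, 1.0)."""
    return min(1.0, max(0.0, x))


def adjust_pixel(rgb, saturation=1.0, contrast=1.0):
    """Apply saturation, then contrast, to one RGB pixel with channels in 0..1.

    saturation: 0 gives greyscale, 1 leaves colour unchanged, >1 boosts it.
    contrast:   0 gives flat mid-grey, 1 leaves it unchanged, >1 increases it.
    """
    luma = sum(w * c for w, c in zip(LUMA_WEIGHTS, rgb))
    # Saturation: move each channel away from (or towards) the grey level.
    saturated = [luma + (c - luma) * saturation for c in rgb]
    # Contrast: scale each channel's distance from mid-grey (0.5).
    contrasted = [(c - 0.5) * contrast + 0.5 for c in saturated]
    return tuple(clamp01(c) for c in contrasted)
```

A shader runs this same arithmetic in parallel for every pixel of every frame. That parallelism is why moving the video work to the GPU leaves the CPU free for audio.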

The audio processing runs on the CPU. Here I chose to work with aspects of Max I was already familiar with, while still expanding my knowledge. I used FFT processing of my voice to control the video, amplitude measurement to drive saturation, contrast and video speed, and controlled random triggers to alter the video, thereby linking the sound to the visuals.
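The amplitude-to-video link can be sketched as follows. The sketch measures the RMS level of each audio block, maps it linearly onto video parameters, and gates random triggers so they cannot fire too often. The parameter ranges, the thresholds and the `ControlledTrigger` class are hypothetical, and the FFT path is not shown. The clamped mapping plays the role that Max's [scale] followed by [clip] might play in a patch.

```python
import math
import random


def rms(block):
    """Root-mean-square level of one block of samples in the range -1..1."""
    if not block:
        return 0.0
    return math.sqrt(sum(s * s for s in block) / len(block))


def scale_clamped(x, in_lo, in_hi, out_lo, out_hi):
    """Map x linearly from [in_lo, in_hi] to [out_lo, out_hi], clamping the result."""
    t = (x - in_lo) / (in_hi - in_lo)
    t = min(1.0, max(0.0, t))
    return out_lo + t * (out_hi - out_lo)


def amplitude_to_video_params(block):
    """HYPOTHETICAL ranges: louder voice -> more saturation, contrast and speed."""
    level = rms(block)
    return {
        "saturation": scale_clamped(level, 0.0, 0.5, 1.0, 2.0),
        "contrast": scale_clamped(level, 0.0, 0.5, 1.0, 1.5),
        "speed": scale_clamped(level, 0.0, 0.5, 0.5, 2.0),
    }


class ControlledTrigger:
    """Random trigger that stays silent for min_gap blocks after each firing."""

    def __init__(self, probability, min_gap, seed=None):
        self.probability = probability
        self.min_gap = min_gap
        self.rng = random.Random(seed)
        self.since_last = min_gap  # eligible to fire on the very first block

    def step(self):
        """Call once per audio block; returns True when the video should change."""
        self.since_last += 1
        if self.since_last > self.min_gap and self.rng.random() < self.probability:
            self.since_last = 0
            return True
        return False
```

The minimum gap keeps the randomness "controlled": even with a high firing probability, the video cannot cut on every block.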

Splitting the computational load let me run the whole performance on my laptop with enough CPU headroom left to drive a multichannel soundcard. I also custom-mapped my Akai APC 40 to trigger events and control the sound during the performance.

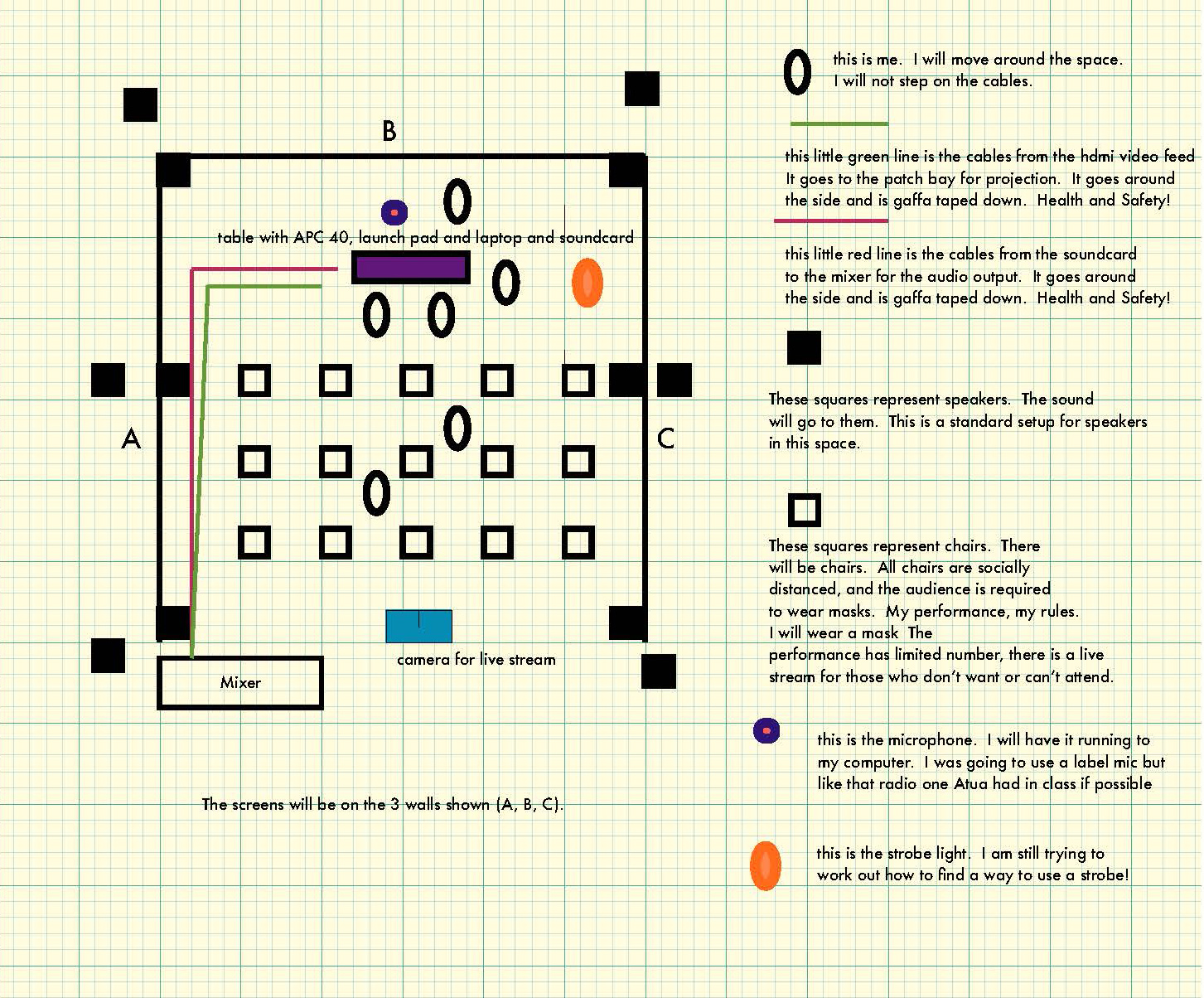

The Room

G05 is a great space, but making the best use of it required considerable work, patience, determination and study. Below is the tech rider from which I planned my use of the space.

I sent multiple channels from my soundcard to a patch bay at the side of the room, and from there into the main sound mixer. Because the patch bay was off to the side, this setup kept cable runs short and avoided running cables through the audience.

The room's digital mixer has an uncommon internal routing system. It is very logical, but I needed to learn that logic! Once I got the hang of it, it was straightforward to choose which speakers to send my sound to, and to adjust the volume and equalisation for each section of the performance.

The video setup uses a local network and two Syphon streams. To run the video, I created two separate Syphon servers in Max. I then used NDISyphon to send the video over the network to the main Mac beside the sound desk at the back of the room. This Mac ran MadMapper, which I used to create a range of video outputs.

I controlled the MadMapper scenes from my APC 40, which was on stage. I achieved this by mapping the APC 40 to send messages via OSC to control the scene changes.
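OSC messages have a simple binary format (OSC 1.0): a null-padded address string, a type-tag string, and then big-endian arguments. The sketch below encodes one such message and sends it over UDP using only the Python standard library. The address `/hypothetical/scene`, the note-to-scene table and any host or port are placeholders. MadMapper's actual OSC addresses should be taken from its own documentation.

```python
import socket
import struct

# HYPOTHETICAL values: check MadMapper's own OSC settings for the real
# address, host and port.
SCENE_ADDRESS = "/hypothetical/scene"
NOTE_TO_SCENE = {53: 1, 54: 2, 55: 3}  # APC 40 MIDI note -> scene number


def osc_string(text):
    """Encode an OSC string: null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("utf-8") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *args):
    """Build an OSC 1.0 message with int32, float32 or string arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian 32-bit int
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian 32-bit float
        elif isinstance(arg, str):
            tags += "s"
            payload += osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return osc_string(address) + osc_string(tags) + payload


def scene_message(note):
    """Translate an APC 40 note number into a scene-change OSC message."""
    return osc_message(SCENE_ADDRESS, NOTE_TO_SCENE[note])


def send_osc(packet, host, port):
    """Send one encoded OSC packet as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
```

Usage would look like `send_osc(scene_message(53), "192.168.1.20", 8010)`, where the IP address and port are again placeholders for the MadMapper machine.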

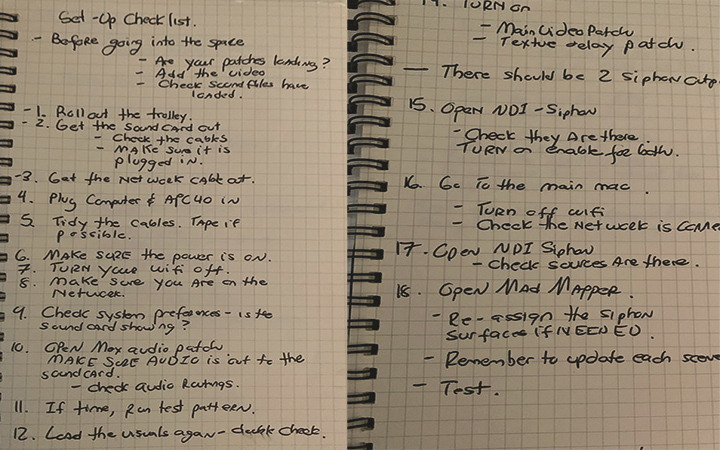

The setup procedure was complex and required everything to connect smoothly. In the end, I chose not to livestream, as the setup was already very intense. The photo below shows one page of my pre-performance checklist.

Future Development

I was unable to use a microphone successfully in G05. Because of the surround speaker system, I encountered unpleasant feedback despite my best efforts. I went as far as muting the rear speakers, so that I was not standing in front of any live speaker, and placing a lapel mic under my face mask. Even so, the feedback was horrible. In future iterations of the piece, I would like to use a microphone, both for clarity and for looping and controlled-feedback experiments.

The story continues and changes form. I want to perform the piece again and develop new scenarios and scenes. Overall, the work was well received, and I think it could be performed in a variety of settings, including theatres and festivals.

Self Evaluation

I did well in this performance. I achieved what I set out to do, and I learned a lot through developing and staging the piece. There is still room for improvement. Some of the code is very simple, and I could go deeper without adding unnecessary complexity. I could have used buffers to store live microphone input and avoid the feedback issue, but I decided against it; using buffers might have improved the performance. I also used the live sequencers in Max to organise video files by triggering MIDI notes. I am glad I experimented with this approach, but ultimately it was not the best way to manage the code.
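One way to read the buffer idea is to record the voice into a fixed-length buffer and play the recording back later, instead of sending the live microphone straight to the speakers. The circular buffer below is a minimal Python sketch of that data structure. It is not Max's buffer~ object, and the loop-playback strategy is an assumption.

```python
class RingBuffer:
    """Fixed-capacity circular buffer of audio samples (oldest overwritten)."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.data = [0.0] * capacity
        self.capacity = capacity
        self.write_pos = 0  # index where the next sample will be written
        self.count = 0      # number of valid samples stored so far

    def write(self, samples):
        """Record samples, overwriting the oldest ones once the buffer is full."""
        for s in samples:
            self.data[self.write_pos] = s
            self.write_pos = (self.write_pos + 1) % self.capacity
            self.count = min(self.count + 1, self.capacity)

    def snapshot(self):
        """Return the stored samples in recording order, oldest first."""
        start = (self.write_pos - self.count) % self.capacity
        return [self.data[(start + i) % self.capacity] for i in range(self.count)]

    def loop(self, n):
        """Return n samples for playback, cycling through the stored recording."""
        recording = self.snapshot()
        if not recording:
            return [0.0] * n
        return [recording[i % len(recording)] for i in range(n)]
```

Because playback reads from the stored recording rather than the live input, the speakers never feed the microphone signal straight back into itself.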

References

The Restarts. 2004. System Error. UK. https://www.youtube.com/watch?v=gLvoyaecL9s&ab_channel=TheRestartsChannel

Liberator, Chris and Moss, Stirling. 2012. Sick Of Capitalism Want My Money Back! Stay Up Forever Protest 03. UK. https://www.youtube.com/watch?v=H78mxTWIJF0&ab_channel=GERMSGEMS

Costa, M and Field, 2021. A Performance In The Age Of Precarity. Bloomsbury Press, UK.

Winkler, T. 1998. Composing Interactive Music: Techniques and Ideas Using Max. MIT Press, USA.

Taylor, Gregory. 2018. Step By Step: Adventures in Sequencing With Max/MSP. Cycling '74, USA.

Jitter Tutorial 41: Shaders (In the Max documentation).

Jitter Tutorial 42: Slabs and Data Processing (In the Max documentation).

Jitter Tutorial 43: A Slab Of Your Own (In the Max documentation).

Programming for People. 2016. Max MSP 7 Tutorial: Psychedelic Jit GL Gen Video Manipulator. 10 January. https://www.youtube.com/watch?v=2i2E-t73jww&ab_channel=ProgrammingforPeople