Noisy Body

Concept

Noisy Body is an audio-reactive piece that uses the Kinect sensor to generate a 3D pointcloud, which is then made to respond to sound and music. The title reflects the two kinds of noise that drive the body's movement and behaviour: first, the body is audio-reactive, responding to noise (sound); second, its animations and behaviours are driven by Perlin noise. The piece aims to be sensorially immersive, with movement, sound and space playing off each other to distort the subject's figure into abstract images and forms.

The piece aims to put the viewer into focus, with every visual element derived from the subject's body and surroundings. As sound and noise distort the image, the figure constantly shifts from familiar to unrecognisable and abstract.

Using the Kinect's depth-sensing capabilities, the piece creates a kind of out-of-body experience for the viewer, with the perspective rotating and zooming.

The following video is taken directly from the Processing sketch:

Technical Details

Noisy Body is built on Daniel Shiffman's 3D pointcloud example. The 3D pointcloud base provides the perspective panning and zooming shown in the piece. Modifications were made mainly to add audio-reactivity to the individual points of the pointcloud.
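A pointcloud example of this kind projects each depth pixel into 3D space before drawing it. The sketch below shows the general pinhole back-projection idea in plain Java (Processing sketches are Java underneath). It assumes depth is already in metres. The intrinsics `FX`, `FY`, `CX`, `CY` and the `skip` stride are hypothetical placeholders, not calibrated Kinect values and not the example's exact code.

```java
import java.util.ArrayList;
import java.util.List;

/** Minimal pinhole back-projection from a depth image to a list of 3D points. */
class PointCloudSketch {
    // Hypothetical intrinsics for a 640x480 depth camera: placeholders, not calibrated values.
    static final double FX = 594.0, FY = 591.0, CX = 320.0, CY = 240.0;

    /** Converts pixel (u, v) with depth z (metres) into camera-space (x, y, z). */
    static double[] depthToWorld(int u, int v, double z) {
        double x = (u - CX) * z / FX;
        double y = (v - CY) * z / FY;
        return new double[] {x, y, z};
    }

    /** Samples every `skip`-th pixel (hypothetical stride) and ignores invalid depths (<= 0). */
    static List<double[]> buildCloud(double[] depthMetres, int width, int height, int skip) {
        List<double[]> cloud = new ArrayList<>();
        for (int v = 0; v < height; v += skip) {
            for (int u = 0; u < width; u += skip) {
                double z = depthMetres[u + v * width]; // row-major 1D index into the depth image
                if (z > 0) {
                    cloud.add(depthToWorld(u, v, z));
                }
            }
        }
        return cloud;
    }

    public static void main(String[] args) {
        int w = 640, h = 480;
        double[] depth = new double[w * h];
        java.util.Arrays.fill(depth, 2.0); // a flat wall 2 m from the sensor
        System.out.println("points: " + buildCloud(depth, w, h, 4).size());
    }
}
```

A larger `skip` draws fewer points, which keeps the frame rate up at the cost of detail.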

For sound analysis, the Minim library was used for its FFT (Fast Fourier Transform) capabilities. An FFT decomposes a raw sound wave into its frequency components, essentially splitting the signal into bands ordered by pitch and measuring the amplitude of each. Minim supports different audio inputs (e.g. microphone or computer audio). For ease of recording and for fidelity, my piece used the internal computer audio, but for installations and more real-world interactivity, microphone input can be substituted in seamlessly.
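To show what a single "band" value means, the sketch below computes a magnitude spectrum with a naive discrete Fourier transform. This is a deliberately slow, swapped-in equivalent of what an FFT computes, not Minim's implementation. Minim exposes per-band amplitudes through its `FFT` class (e.g. `getBand()`), possibly with different scaling. The buffer size, sample rate and test tone here are illustrative assumptions.

```java
/** Naive DFT magnitude spectrum: O(N^2), for illustration only (an FFT gives the same values faster). */
class SpectrumSketch {
    static double[] magnitudes(double[] samples) {
        int n = samples.length;
        double[] mags = new double[n / 2 + 1]; // bins 0..N/2 are the unique ones for real input
        for (int k = 0; k < mags.length; k++) {
            double re = 0, im = 0;
            for (int t = 0; t < n; t++) {
                double angle = 2 * Math.PI * k * t / n;
                re += samples[t] * Math.cos(angle);
                im -= samples[t] * Math.sin(angle);
            }
            mags[k] = Math.hypot(re, im);
        }
        return mags;
    }

    /** Centre frequency (Hz) of bin k for a buffer of n samples recorded at sampleRate Hz. */
    static double binFrequency(int k, int n, double sampleRate) {
        return k * sampleRate / n;
    }

    /** Index of the loudest bin. */
    static int peakBin(double[] mags) {
        int best = 0;
        for (int k = 1; k < mags.length; k++) {
            if (mags[k] > mags[best]) best = k;
        }
        return best;
    }

    public static void main(String[] args) {
        int n = 1024;
        double sampleRate = 44100;
        double[] buf = new double[n];
        for (int t = 0; t < n; t++) {
            buf[t] = Math.sin(2 * Math.PI * 32 * t / n); // exactly 32 cycles per buffer
        }
        int k = peakBin(magnitudes(buf));
        System.out.printf("peak bin %d, ~%.1f Hz%n", k, binFrequency(k, n, sampleRate));
    }
}
```

Low bins correspond to bass and high bins to treble, which is why a subset of bins can be chosen to drive the visuals.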

To create the audio-reactive body-morphing effect, the (x, y) coordinates of the pointcloud points (drawn as lines) are converted into a 1D index. Applying the modulus operator to this index lets the programme cycle through a selected range of FFT frequency bands, so that the size of each point is modulated by one of those bands. In addition, each point is rotated by Perlin noise sampled at its (x, y) position, giving a more fluid and organic animation and form.
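The index-and-modulus mapping can be sketched as follows. Every name is hypothetical: `offset` and `range` select the band window, and `base` and `gain` set the line length. Processing's built-in `noise()` is Perlin-based. To keep the block self-contained, a simple value-noise function stands in for it here. Value noise is a different (blockier) technique than Perlin noise. The `time` term is also an assumption, added so the rotation animates from frame to frame.

```java
/** Sketch: map pointcloud pixels to FFT bands via a 1D index and the modulus operator. */
class BandMappingSketch {
    /** Row-major 1D index for pixel (x, y) in an image `width` pixels wide. */
    static int toIndex(int x, int y, int width) {
        return x + y * width;
    }

    /** Cycles through bands [offset, offset + range) as the index increases. */
    static int bandFor(int index, int offset, int range) {
        return offset + index % range;
    }

    /** Line length: a base length plus the chosen band's amplitude scaled by gain. */
    static double lineLength(double[] bands, int index, int offset, int range, double base, double gain) {
        return base + bands[bandFor(index, offset, range)] * gain;
    }

    // --- Value-noise stand-in (NOT Processing's Perlin-based noise()) ---

    /** Pseudo-random value in [0, 1] for an integer lattice point (int overflow wraps, by design). */
    static double hash(int x, int y) {
        int h = x * 374761393 + y * 668265263;
        h = (h ^ (h >>> 13)) * 1274126177;
        h ^= h >>> 16;
        return (h & 0x7fffffff) / (double) Integer.MAX_VALUE;
    }

    /** Smoothstep fade so interpolation has no visible kinks at lattice points. */
    static double fade(double t) {
        return t * t * (3 - 2 * t);
    }

    /** Bilinearly interpolated lattice values with a smoothstep fade; result in [0, 1]. */
    static double valueNoise(double x, double y) {
        int x0 = (int) Math.floor(x), y0 = (int) Math.floor(y);
        double tx = fade(x - x0), ty = fade(y - y0);
        double a = hash(x0, y0), b = hash(x0 + 1, y0);
        double c = hash(x0, y0 + 1), d = hash(x0 + 1, y0 + 1);
        double top = a + (b - a) * tx, bottom = c + (d - c) * tx;
        return top + (bottom - top) * ty;
    }

    /** Rotation angle in [0, 2*PI] from noise sampled at the point's scaled (x, y), shifted by time. */
    static double rotationFor(int x, int y, double time, double scale) {
        return valueNoise(x * scale + time, y * scale) * 2 * Math.PI;
    }

    public static void main(String[] args) {
        int width = 640;
        double[] bands = new double[64];
        for (int i = 0; i < bands.length; i++) bands[i] = 1.0 / (i + 1); // fake spectrum falling off with pitch
        for (int x = 0; x < 4; x++) {
            int idx = toIndex(x, 10, width);
            System.out.printf("point (%d,10): band %d, length %.3f, angle %.3f%n",
                    x, bandFor(idx, 4, 16), lineLength(bands, idx, 4, 16, 2.0, 20.0),
                    rotationFor(x, 10, 0.0, 0.01));
        }
    }
}
```

Because neighbouring pixels fall into different bands, adjacent lines pulse to different parts of the spectrum. That spread is what breaks the figure's outline apart. A small `scale` makes neighbouring points rotate together, which keeps the motion fluid.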

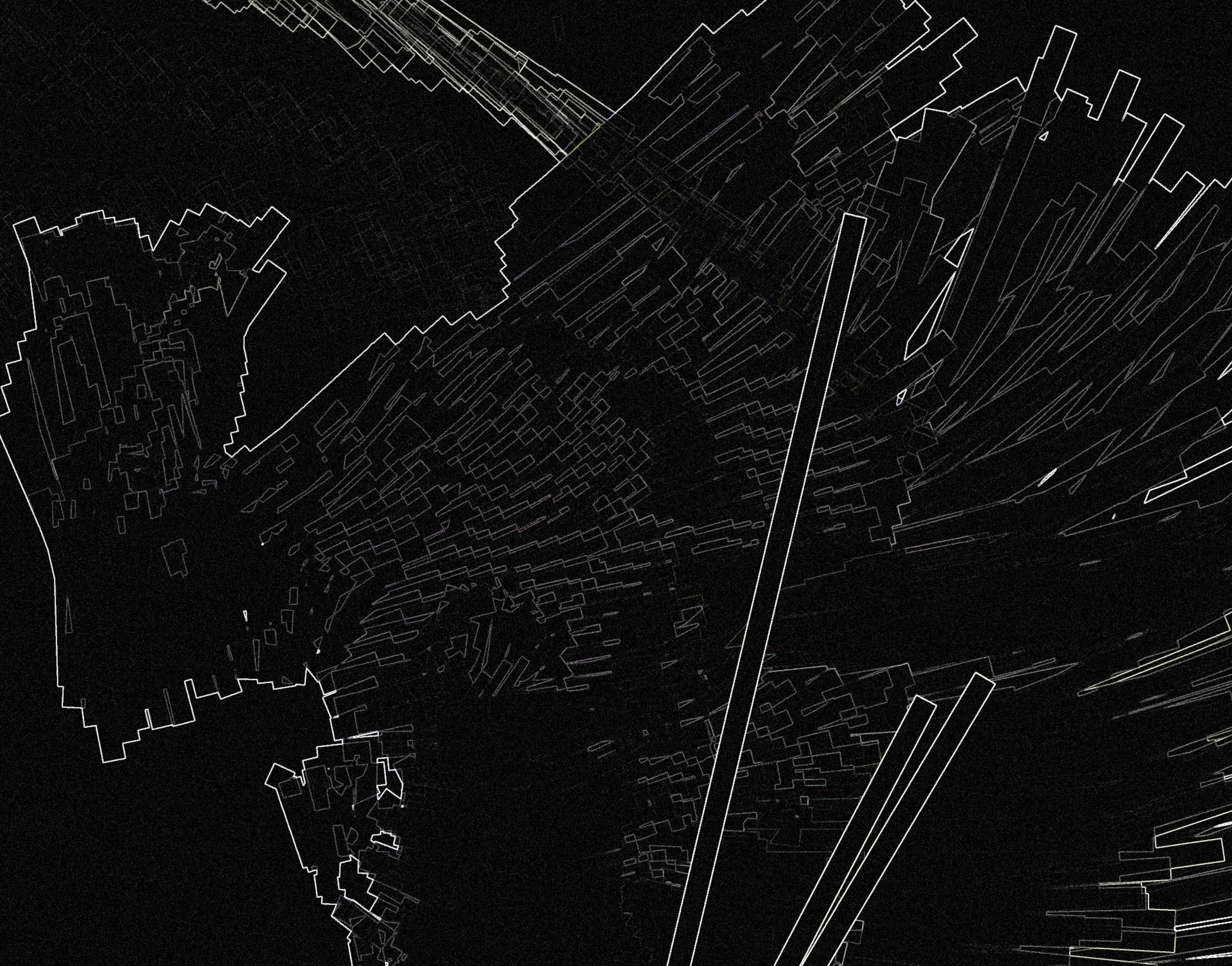

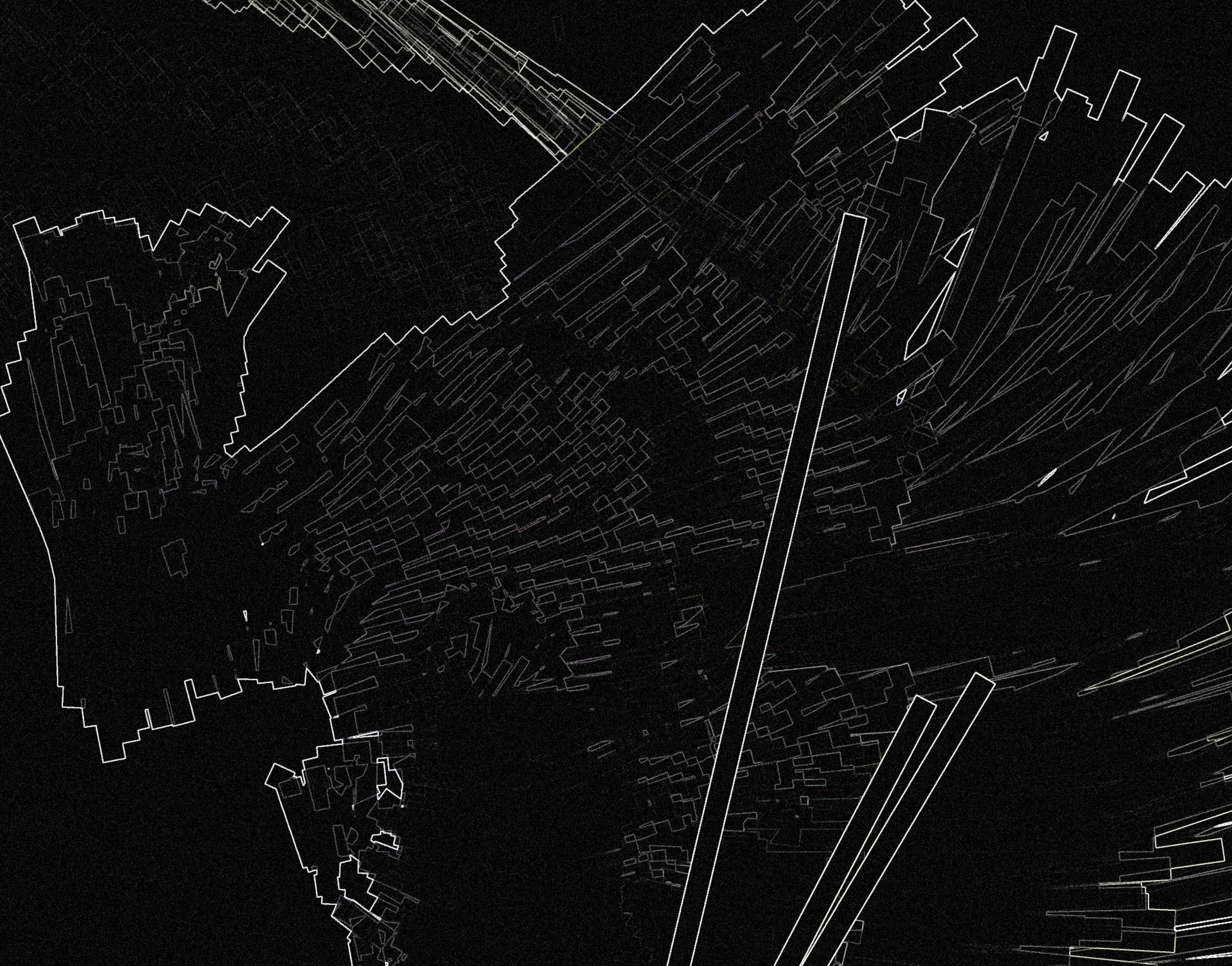

For the final gloss, the PostFX library was used to apply two effects: the Sobel effect, which creates outlines, and the noise effect, which applies grain to the whole image.
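The Sobel effect outlines shapes by measuring how sharply brightness changes around each pixel. Below is a CPU sketch of the standard Sobel operator on a grayscale array, offered only to illustrate the technique. PostFX applies its effects as GPU shaders, and this is not its code.

```java
/** CPU sketch of the Sobel edge operator on a grayscale image (values 0..1). */
class SobelSketch {
    static final int[][] KX = {{-1, 0, 1}, {-2, 0, 2}, {-1, 0, 1}}; // horizontal gradient kernel
    static final int[][] KY = {{-1, -2, -1}, {0, 0, 0}, {1, 2, 1}}; // vertical gradient kernel

    /** Returns the gradient magnitude per pixel; border pixels are left at 0. */
    static double[][] sobel(double[][] img) {
        int h = img.length, w = img[0].length;
        double[][] out = new double[h][w];
        for (int y = 1; y < h - 1; y++) {
            for (int x = 1; x < w - 1; x++) {
                double gx = 0, gy = 0;
                for (int j = -1; j <= 1; j++) {
                    for (int i = -1; i <= 1; i++) {
                        double p = img[y + j][x + i];
                        gx += KX[j + 1][i + 1] * p;
                        gy += KY[j + 1][i + 1] * p;
                    }
                }
                out[y][x] = Math.hypot(gx, gy);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // 5x5 image: left two columns black, the rest white, i.e. one vertical edge.
        double[][] img = new double[5][5];
        for (double[] row : img) {
            for (int x = 2; x < 5; x++) row[x] = 1.0;
        }
        for (double[] row : sobel(img)) System.out.println(java.util.Arrays.toString(row));
    }
}
```

Flat regions produce zero and only the boundary lights up. Applied to the pointcloud render, this reduces the figure to outlines.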

More than a standalone Processing sketch, this piece is intended as a flexible live-performance tool for sound artists, VJs, or dance and theatre productions. The sketch includes adjustable variables (gain, smoothing and object scaling) that let the behaviour of the piece be tweaked to suit the ‘feel’ of the accompanying music and produce different visual effects and compositions. These controls set the level of image distortion, allowing the subject's figure to shift between familiar and abstract.
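Here is one way gain, smoothing and object scaling could act on the band values. It assumes exponential smoothing, which is a common choice for audio visualisers but is not confirmed by the text as the sketch's method. The field names and update rule are hypothetical.

```java
/** Sketch of live-tweakable gain, smoothing and scale applied to FFT band values. */
class ControlsSketch {
    double gain = 1.0;      // multiplies raw band amplitudes
    double smoothing = 0.8; // 0 = instant response; values near 1 = slow, sluggish response
    double scale = 1.0;     // overall size multiplier for drawn points
    final double[] smoothed;

    ControlsSketch(int bandCount) {
        smoothed = new double[bandCount];
    }

    /** Exponential moving average per band: new = s * old + (1 - s) * gain * raw. */
    void update(double[] rawBands) {
        for (int i = 0; i < smoothed.length; i++) {
            smoothed[i] = smoothing * smoothed[i] + (1 - smoothing) * gain * rawBands[i];
        }
    }

    /** Drawn size of a point driven by band i. */
    double pointSize(int i, double baseSize) {
        return scale * (baseSize + smoothed[i]);
    }

    public static void main(String[] args) {
        ControlsSketch c = new ControlsSketch(1);
        c.gain = 2.0;
        c.smoothing = 0.5;
        double[] pulse = {1.0}; // a constant input level of 1.0
        for (int frame = 0; frame < 3; frame++) {
            c.update(pulse);
            System.out.println("frame " + frame + ": " + c.smoothed[0]);
        }
    }
}
```

With `gain = 2.0` and `smoothing = 0.5`, a constant input climbs 1.0, 1.5, 1.75 toward 2.0 rather than jumping there. Raising `smoothing` gives slower, more fluid motion, while lowering it makes the body twitch on every transient.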

Furthermore, the final output of the piece is broadcast via Syphon, so other live visual software (e.g. VDMX, Resolume) can access the image and apply further effects live and on a whim. This adds further lifespan and variation to the piece. The relatively minimal visual style and colour scheme lend themselves well to further live modification and to use as a layer alongside other footage.

The following video demonstrates live modification in VDMX, made possible by broadcasting the raw Processing sketch via Syphon:

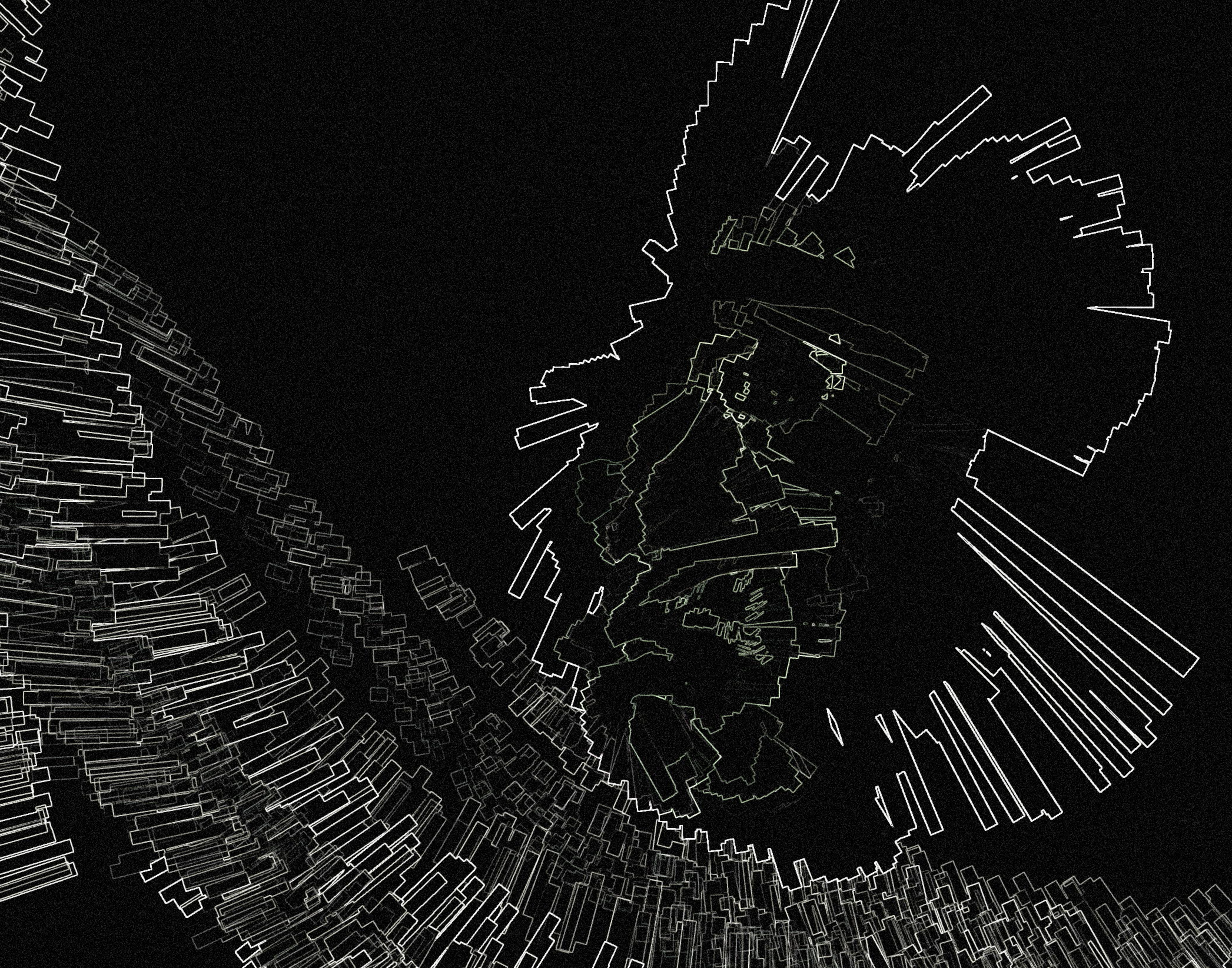

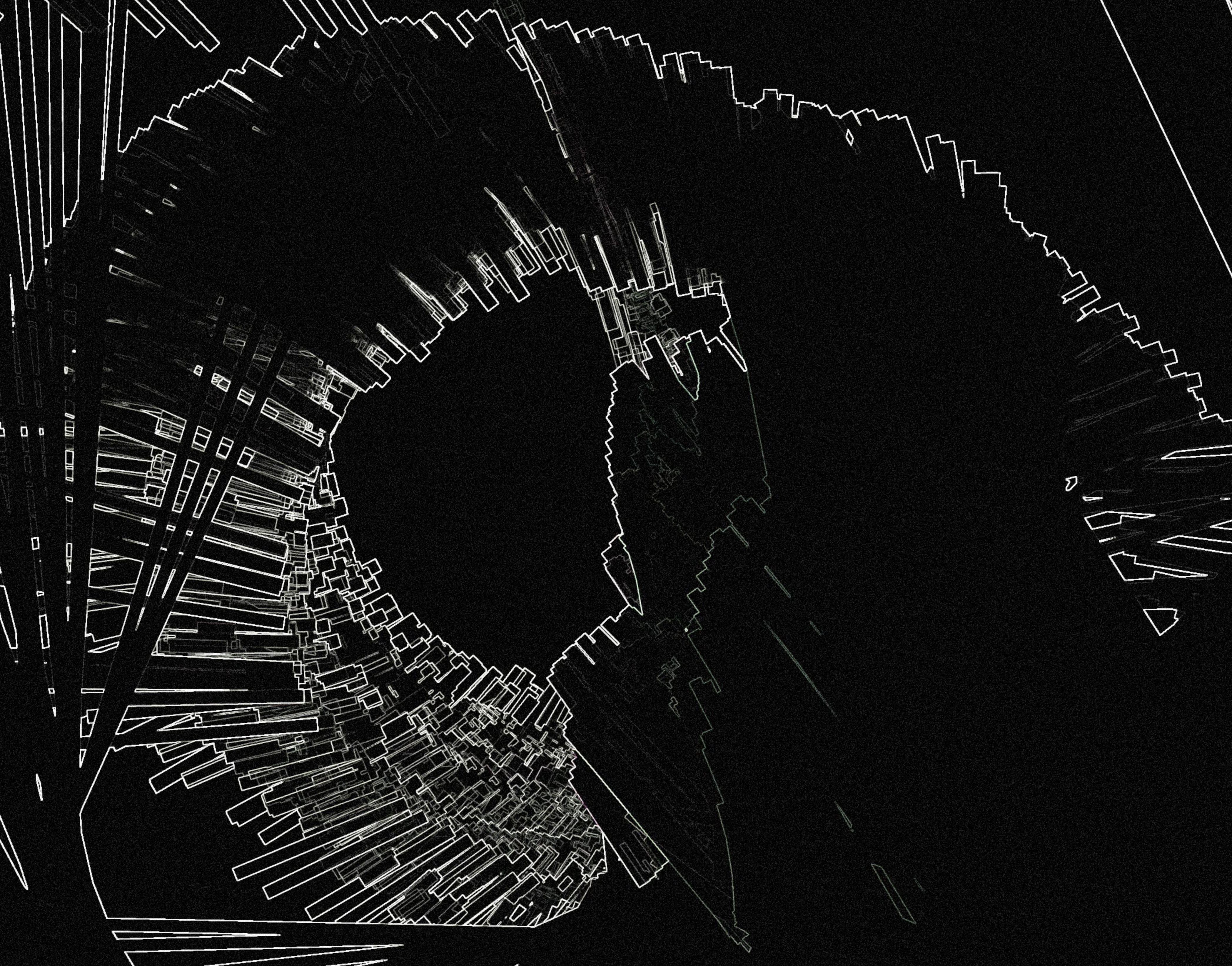

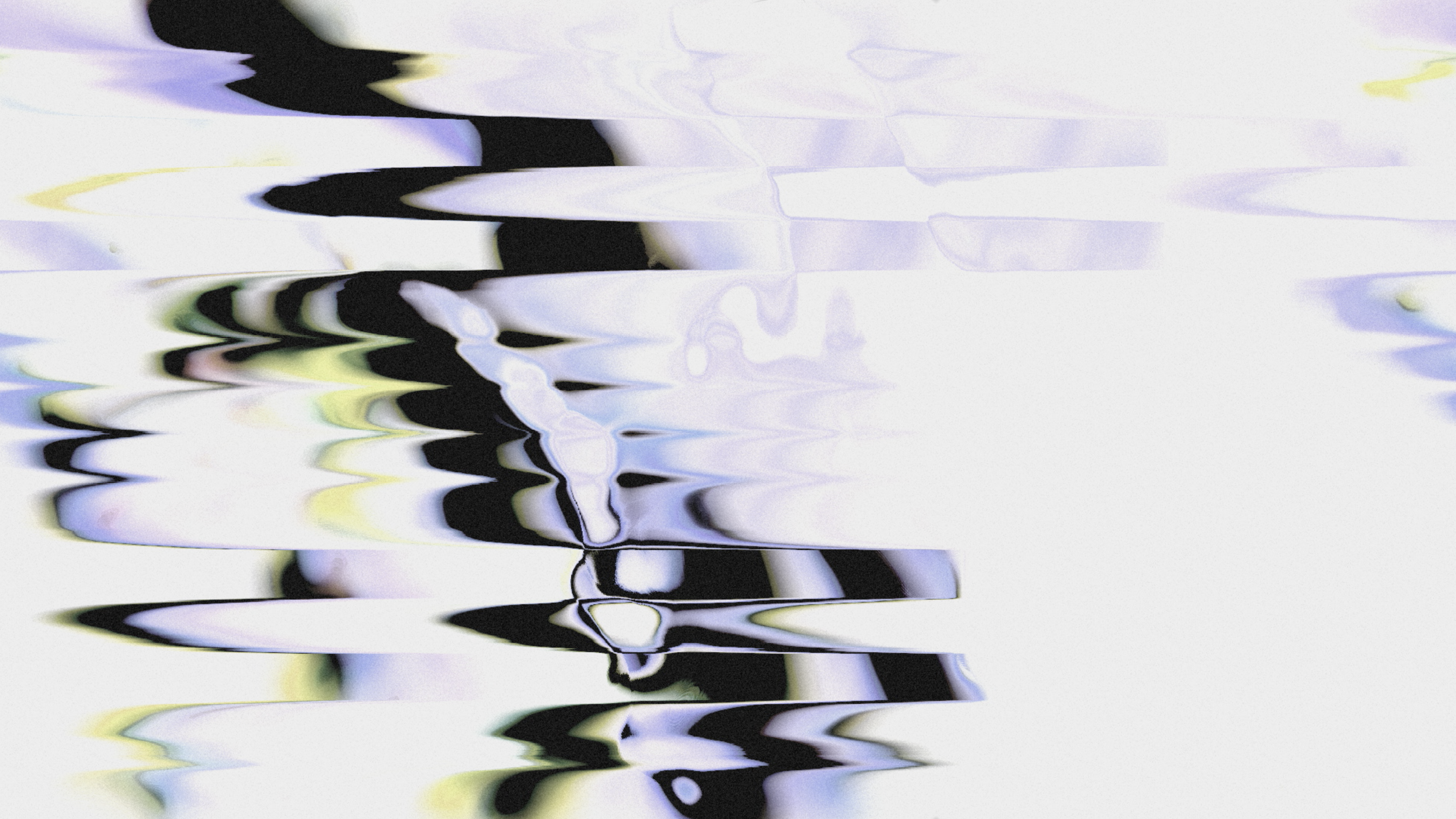

Stills from Processing Sketch:

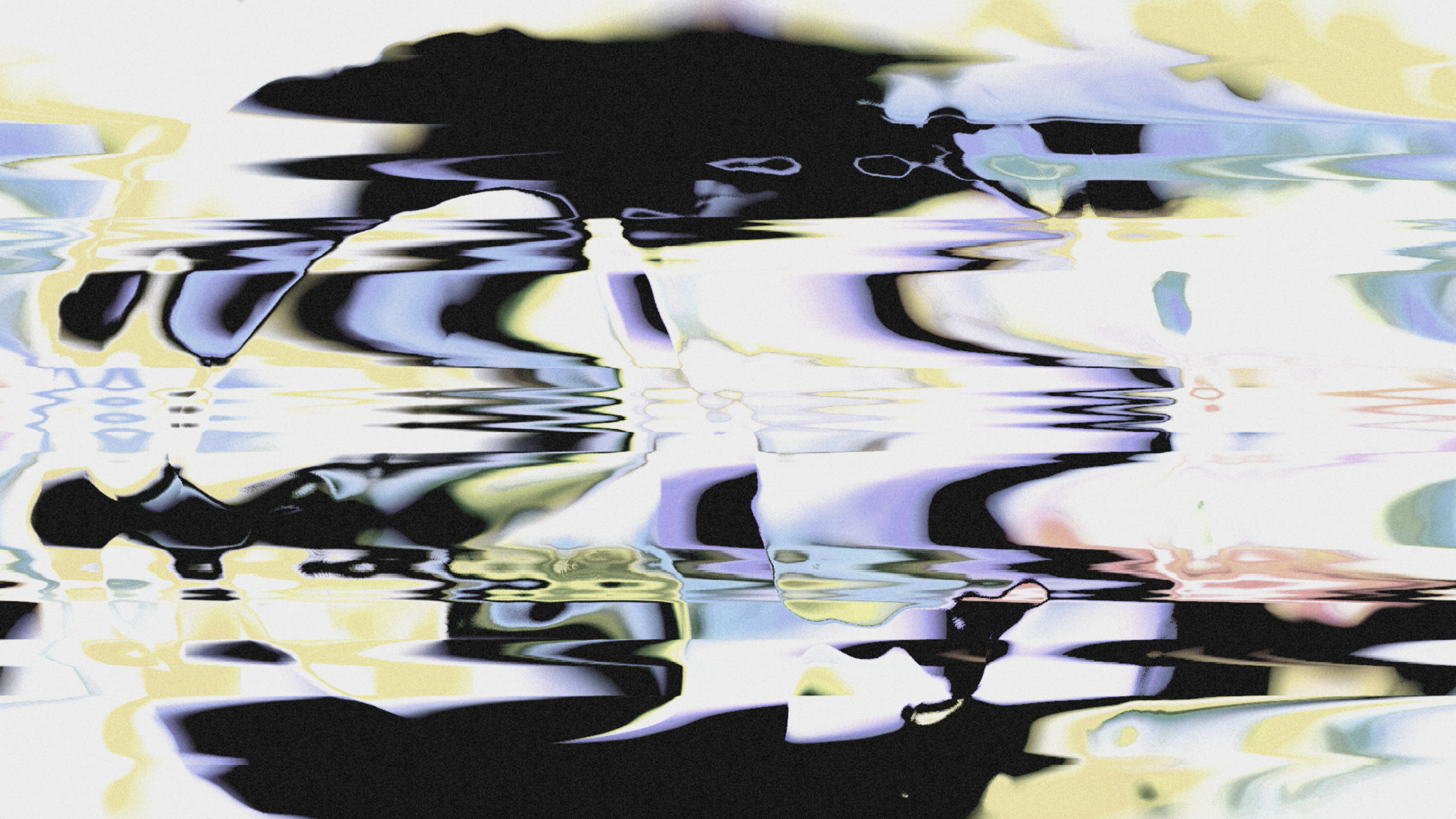

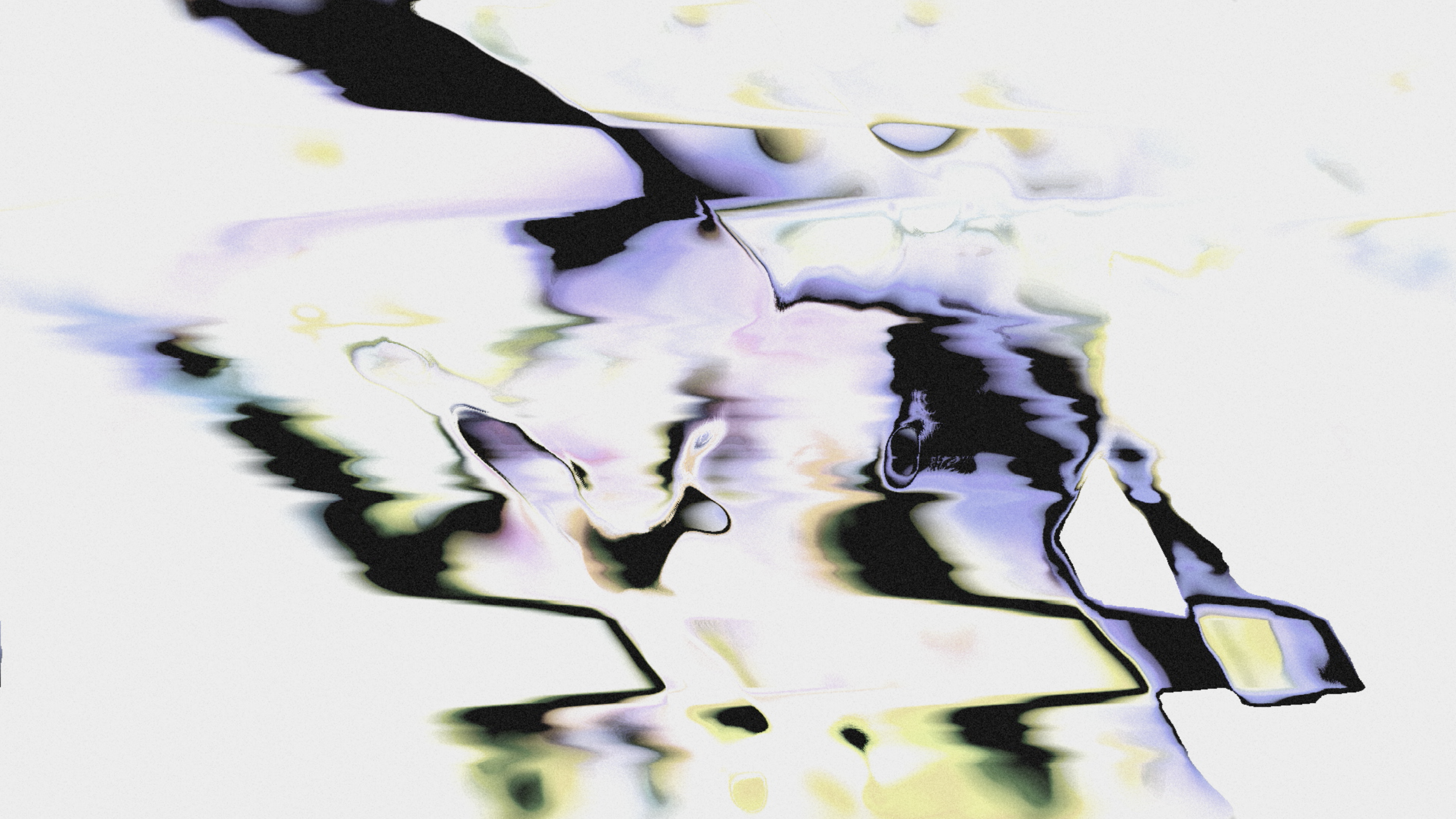

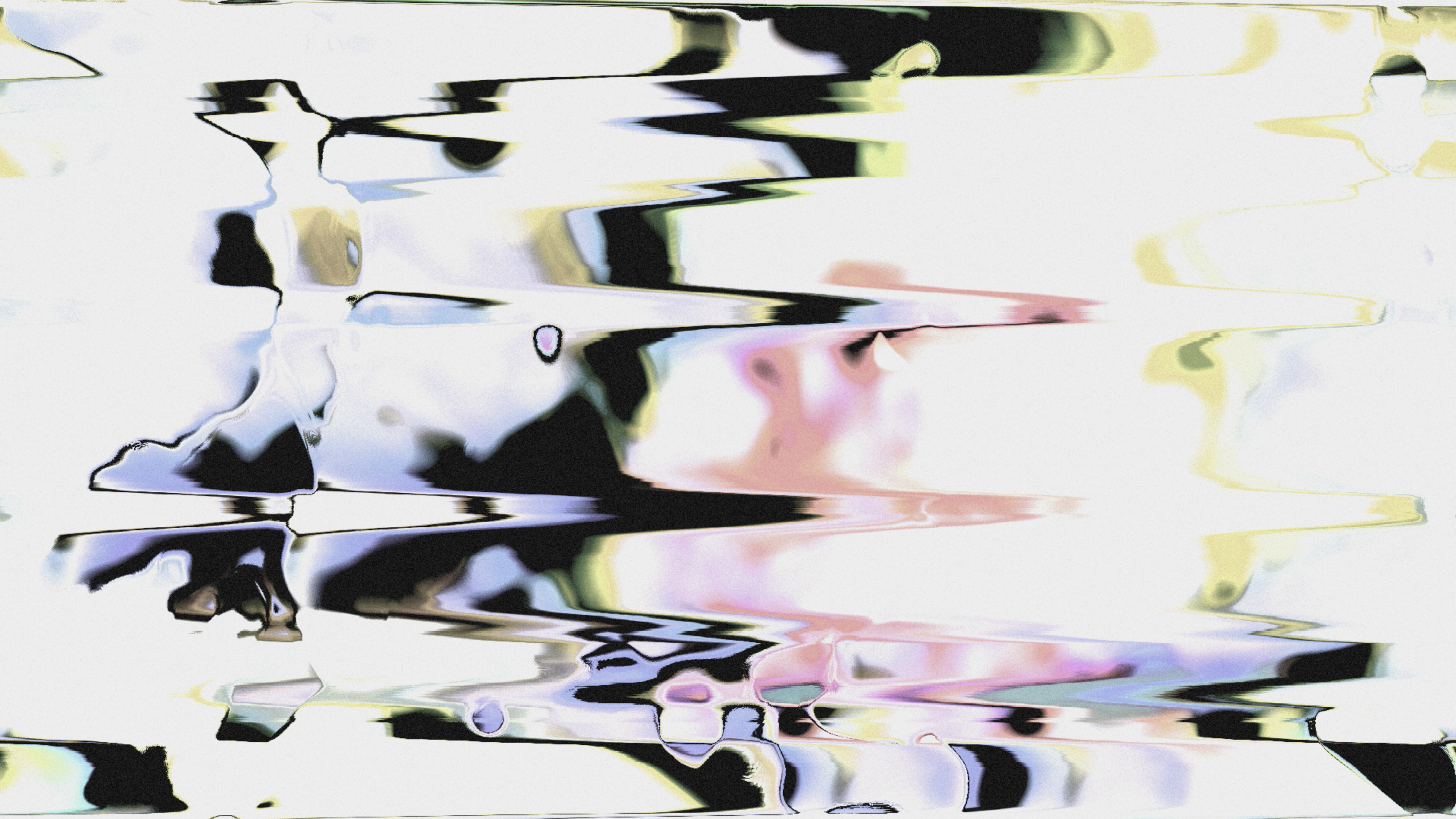

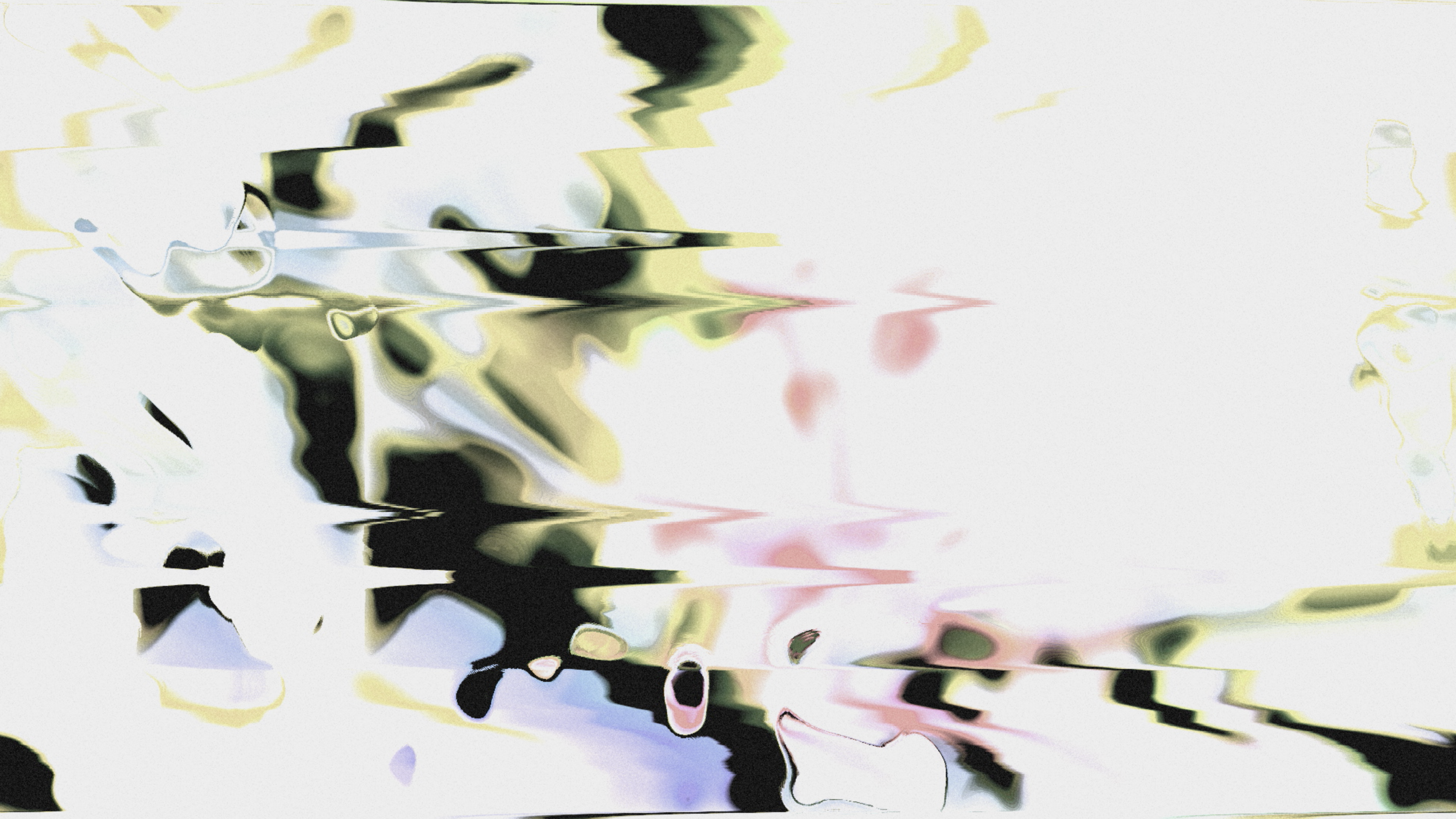

Stills Modified Live in VDMX:

Final Image:

References:

Code Built on Daniel Shiffman's Kinect PointCloud Example:

https://shiffman.net/p5/kinect/