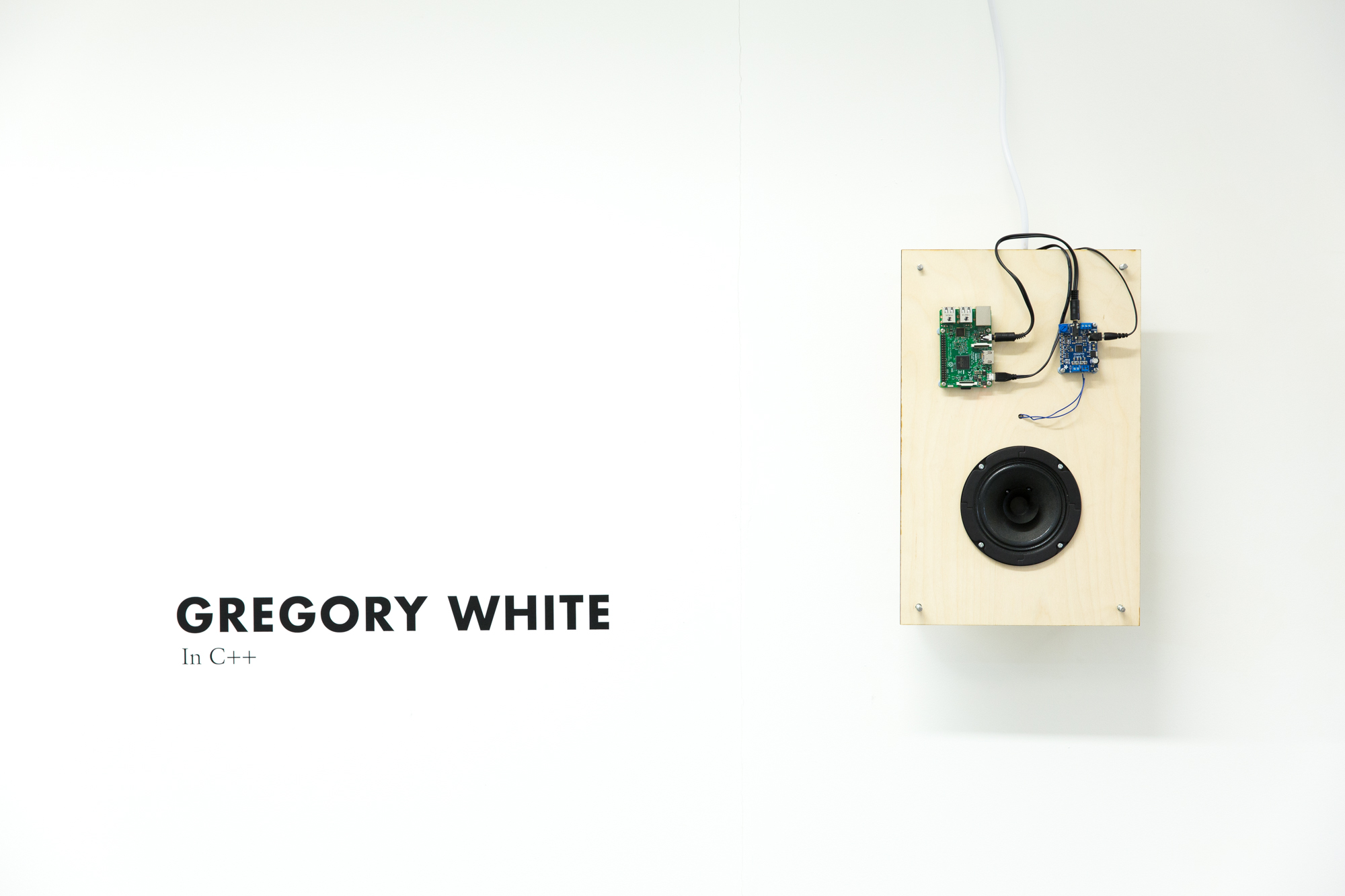

In C++

It all started with a pun. I had recently started to learn to code in the C++ programming language, and my mind drifted back to the previous year when I had been performing a piece of music from the sixties called In C. I thought it could be funny to make a version of In C using C++ — and then I began to think about it more seriously. What benefits could arise from developing In C as software? How could it be used to reframe the composer’s original work? What emerged was In C++: a software interpretation of Terry Riley's landmark minimalist piece In C (1964), for installation and performance.

produced by: Gregory White

press: [Goldsmiths] [Rhinegold]

Background

Before I answer these questions, I must provide some background on the music itself. In C (1964) is a landmark piece of early minimalist music composed by Terry Riley in, as the name suggests, the key of C. It differs from what might be considered a ‘normal’ piece of music in numerous ways. First of all, it was written for an indefinite number of performers: “Any number of any kind of instruments can play” [Footnote 1]. Performers are given a page of 53 melodic ‘fragments’, rather than traditional linear sheet music, along with performance directions that dictate how the piece should be played. Each performer plays through the same 53 bars in sequence, but exactly when they progress to the next bar is up to them. Listening is therefore of the utmost importance: performers must stay within 2 or 3 bars of each other in order to maintain Riley’s intended harmonic relationships and prevent the piece from descending into dissonant chaos (as attractive as that may sound to some). Similarly, the piece has no fixed duration; Riley only suggests that typical performances might last between 45 minutes and an hour and a half. With these three variables (ensemble size, instrumentation, and duration), each performance of In C has the potential to vary drastically.

Aims

The initial aim of this project was to create a software interpretation of In C in the C++ programming language that could be presented both in the gallery (as an installation) and on the stage (as a performance), so that Riley’s original work could be explored in new contexts. This brings me back to the questions I posed at the top of this page:

What benefits could arise from developing In C as software?

Creating a software version of In C would allow me to explore a much wider palette of sounds and textures by utilising the small collection of software synthesisers and sample libraries I have been building up over the years, allowing me to hear the piece performed with sounds that did not exist, nor perhaps could have even been conceived, when Riley initially wrote it. Sonic flexibility is inherent in the piece itself given the performance directions (“Any number of any kind of instruments can play.” [Footnote 1]), so in my implementation I wanted to create software that would separate the decision-making process of what notes and rhythms to play from the instrument, allowing the user to effectively ‘plug’ the outcome of those decisions into whatever and however many sound sources they pleased. For this reason In C++ uses the MIDI protocol, a very common way of sending note information that is compatible with both software and hardware instruments. MIDI can also be used to control other non-musical hardware such as lighting rigs, which could be used to create synchronous visual displays — but such possibilities have been left unexplored at this stage.
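To illustrate the separation between decision and sound source, here is a minimal sketch of what a performer's decision looks like once it becomes MIDI. It is not the project's code (In C++ sends its MIDI through the ofxMIDI addon); it only builds the raw bytes of the standard Note On and Note Off channel messages. The NoteDecision struct, the channelFor() helper and the one-performer-per-channel mapping are assumptions made for this example.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// A sound-agnostic decision made by one virtual performer (hypothetical type).
struct NoteDecision {
    int performer; // 0-based performer index, mapped to a MIDI channel
    int pitch;     // MIDI note number, 0-127 (60 = middle C)
    int velocity;  // 0-127: how hard the note is struck
};

// A raw three-byte MIDI channel message.
using MidiMessage = std::array<std::uint8_t, 3>;

// Assumed mapping: one performer per channel. MIDI has only 16 channels
// per port, so in this sketch performer indices simply wrap around.
std::uint8_t channelFor(int performer) {
    return static_cast<std::uint8_t>(performer % 16);
}

// Note On: status byte 0x90 | channel, then 7-bit pitch and velocity.
MidiMessage toNoteOn(const NoteDecision& d) {
    assert(d.performer >= 0);
    assert(d.pitch >= 0 && d.pitch <= 127);
    assert(d.velocity >= 0 && d.velocity <= 127);
    return {static_cast<std::uint8_t>(0x90 | channelFor(d.performer)),
            static_cast<std::uint8_t>(d.pitch),
            static_cast<std::uint8_t>(d.velocity)};
}

// Note Off: status byte 0x80 | channel, same pitch, release velocity 0.
MidiMessage toNoteOff(const NoteDecision& d) {
    assert(d.performer >= 0);
    assert(d.pitch >= 0 && d.pitch <= 127);
    return {static_cast<std::uint8_t>(0x80 | channelFor(d.performer)),
            static_cast<std::uint8_t>(d.pitch), 0};
}
```

Whatever receives these bytes, whether a software synthesiser, a hardware instrument or a drum sampler, decides what they sound like; the decision code never needs to know.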

Once I had a solid build of the software, I began to experiment with sounds that were quite far removed from the typical performances by traditional ensembles that I had seen or heard. One such example was the use of drones: sending MIDI notes to synthesisers that focused on duration and texture rather than rhythm. Here the harmonic content of the piece was brought to the forefront, as each instrument created thick chords. Another interesting experiment was using MIDI to trigger atonal percussion, creating the opposite effect: harmony was stripped away to leave the pulsating, syncopated rhythms, drawing attention to how each virtual performer’s decisions about the velocity of each note (how hard or soft it is struck) affect the groove. These are just two examples of how MIDI enables the user to totally reshape Riley’s original material into new and unexpected forms.

How could it be used to reframe the composer’s original work?

Shortly after I started to learn how to program, it occurred to me how closely algorithms and ‘if/then’ computational logic were linked to my decision-making processes as an improvisational musician, particularly during performances of In C. I thought it would be interesting to attempt to model some of these thought processes in code: “repeat this bar until x time has passed, then progress”, “if everyone else is progressing faster than me, then increase the speed of my progression”. In its current state, In C++ draws attention to this computational logic by exposing the electronics, wires, and code that enable it to function.
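The two quoted thought processes translate almost word for word into if/then code. The sketch below is only an illustration of that idea, not code from In C++: the decide() function, its parameters, the reading of “progressing faster than me” as “every other performer is on a later bar”, and the 1.5x speed-up are all assumptions.

```cpp
#include <cassert>
#include <vector>

// Hypothetical outcome of one decision step for a virtual performer.
struct Decision {
    bool moveOn;        // true: progress to the next bar
    float progressRate; // how quickly this performer tends to move on
};

// The two quoted rules written as if/then logic. Names, units and the
// 1.5x speed-up are illustrative assumptions, not values from In C++.
Decision decide(double secondsOnThisBar, double minSecondsPerBar,
                int myBar, const std::vector<int>& otherBars,
                float progressRate) {
    assert(minSecondsPerBar >= 0.0 && progressRate > 0.0f);

    // "repeat this bar until x time has passed, then progress"
    bool moveOn = secondsOnThisBar >= minSecondsPerBar;

    // "if everyone else is progressing faster than me, then increase the
    // speed of my progression" (read here as: every other performer is
    // already on a later bar than me)
    bool everyoneAhead = !otherBars.empty();
    for (int bar : otherBars) {
        if (bar <= myBar) {
            everyoneAhead = false;
            break;
        }
    }
    if (everyoneAhead) {
        progressRate *= 1.5f;
    }

    return {moveOn, progressRate};
}
```

Each rule stays a separate, readable condition, which mirrors how an improvising musician might state them to themselves.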

Process

In C++ has taken various forms over the past year and a half, culminating in the current installation for Metasis 2016. The project was initially submitted as an openFrameworks app for Rebecca Fiebrink’s Workshops in Creative Coding module, during my second term at Goldsmiths [Footnote 2]. Throughout this time I came up with the initial idea, developed the concept, and built the majority of the software as it stands today. However, at this stage it was not physical at all — it existed only as an app that could be run in conjunction with synthesisers to play the piece.

Since the initial submission I have developed In C++ in several key ways, beginning with code correction. In the first, software-only iteration of In C++, I had attempted to create a system whereby each virtual performer was aware of their own position in the piece and the position of every other performer. If they progressed through the 53 musical fragments too slowly, they would be forced ahead to catch up; likewise, if they began to rush ahead, their parameters would be adjusted so that it took longer for them to progress. This way, a balanced performance could occur and the harmonic relationships of the music could be kept intact. However, this system did not work. I was not aware of this at the time because I always set the duration of the piece to be quite short, as I was mostly experimenting with different sounds. Only when I tested the software over longer durations, above about 20 minutes, did I realise that the system was broken. A key part of this project since its initial submission has therefore been fixing this aspect, so that the piece functions as intended. More details on this can be found in the Technical section below.

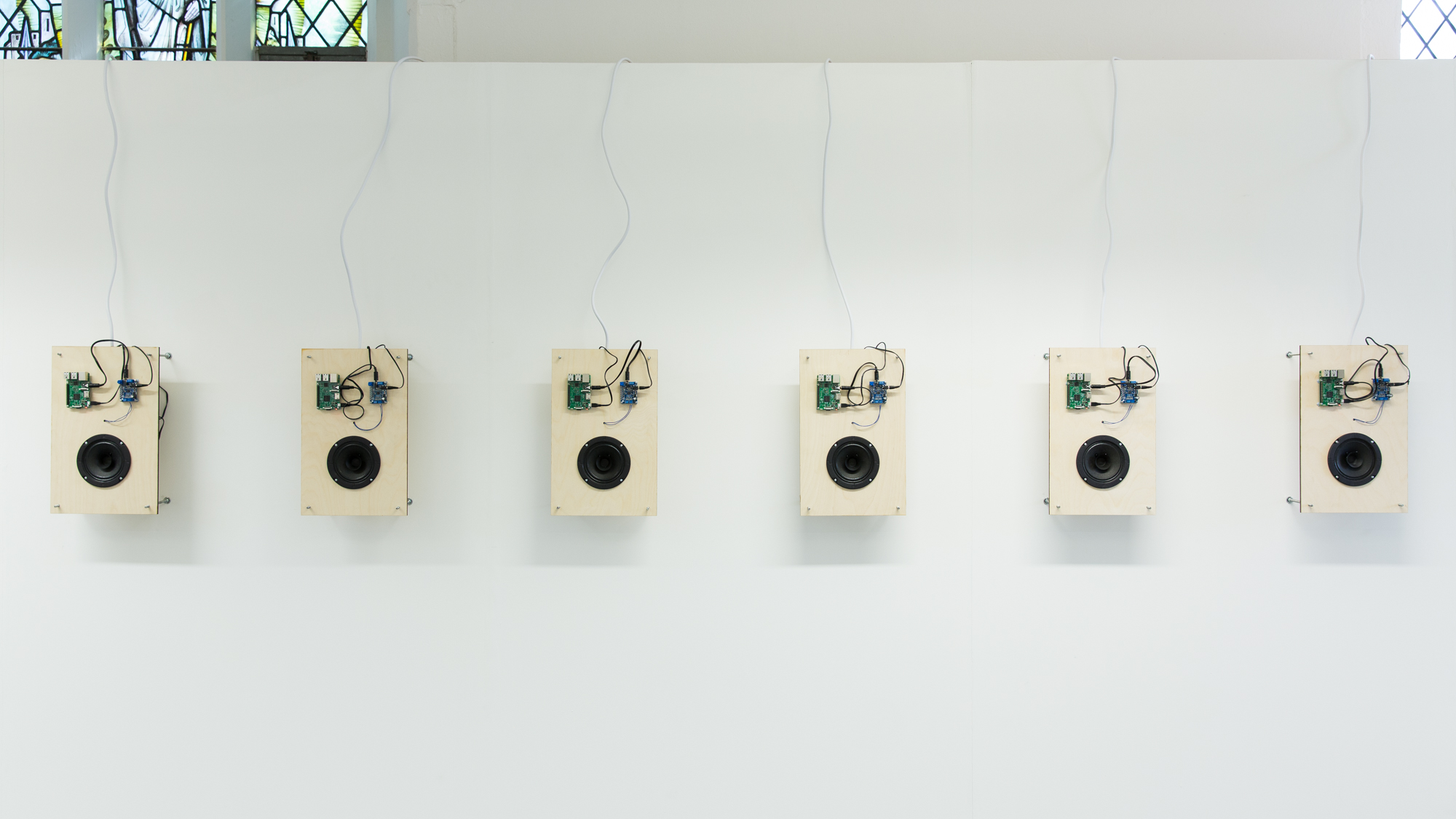

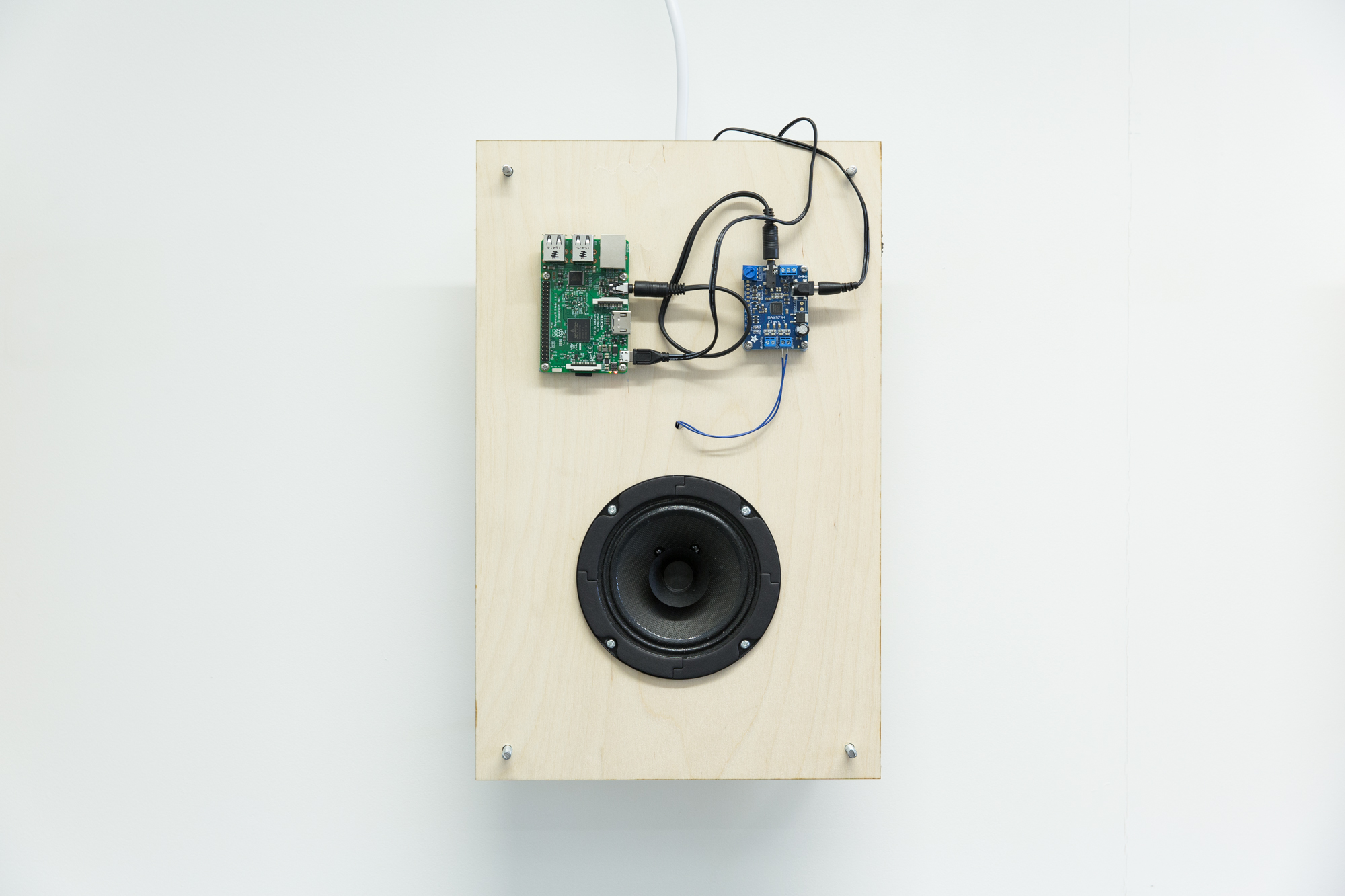

For the Metasis 2016 exhibition, most of my work has been concerned with the presentational aspects of In C++ as an installation piece, and with its physical construction. I have refined the initial presentation seen at the 2015 Except/0n exhibition into a more modular system, where each ‘performer’ is its own distinct object: a speaker cone driven by a small amplifier circuit, with audio coming from a Raspberry Pi, all mounted on a wooden panel. In theory, many more performers can be assembled and presented than with my previous method, as I am no longer limited by the number of outputs on my audio interface, as I was when using audio monitors. As cost and time allow, the piece can be scaled up or down to fit the space in which it is shown. This new style of presentation also emphasises the idea of each performer being autonomous, with the code driving its decisions directly on display and linked much more clearly to the relevant speaker. The exposed wiring, influenced by artists such as Lesley Flanigan and Tristan Perich, again serves to highlight the passage of signal from component to component, drawing attention to the computational and electronic aspects of the decision-making process. Many of the challenges in this project emerged at this stage: finding the correct way of cutting holes for the speakers; mounting all of the parts on the wooden panels; fixing the panels to the wall; sourcing all of the components needed to build and power the system; and so on. I have learned a great deal about fabricating physical objects and presenting them as an installation, and I am indebted to Konstantin Leonenko for his detailed assistance and unending patience.

Technical

In C++ is written in the C++ programming language, using openFrameworks. It uses MIDI to send note information to a digital audio workstation (DAW) such as Logic or Pro Tools, for use with software synthesisers or external hardware. The In C++ software does not create sound itself; it determines which notes should be played, and when, for every virtual performer. MIDI functionality is made possible by the ofxMIDI addon [Footnote 3], and I also make extensive use of Mick Grierson’s ofxMaxim addon [Footnote 4] throughout, adapting some code from various Maximilian examples (as commented in the code).

First of all, the number of virtual performers, represented by ‘Performer’ objects, must be defined in ofApp.h. This determines how many MIDI channels are created. Other important variables, such as ‘tempo’ (the speed at which the performers play) and ‘factor’ (which determines how quickly each performer moves on to the next bar), are set in ofApp::setup(). The loadBars() method is also called here, which loads the pitch and rhythm data from multidimensional vectors held in the Performer class. I have transcribed all 53 bars of In C, translating the pitch information into MIDI values and expressing the rhythm information as floats. When the software runs, a phasor ramps from 1 to x, where x is the number of beats in the current bar. A note on the first beat of the bar has the float value 1; a note on the second beat has the value 2; an off-beat between them would be 1.5, and so on. The value of the phasor (stored in the variable ‘currentTime’) is continuously checked against the float value of the next note in the bar. Once currentTime has increased beyond this value, the note is played, and the check moves on to the following note.
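The rhythm representation and the currentTime check can be sketched as follows. This is a minimal illustration, not the project's Performer class: the Bar struct, the BarPlayer class and the example pitches are hypothetical, and where the real software advances the phasor over time, here the caller simply passes its current value in.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// One bar of a transcription: MIDI pitches plus note onsets measured in
// beats, where 1.0 is the first beat, 2.0 the second and 1.5 the off-beat.
struct Bar {
    std::vector<int> pitches;
    std::vector<float> onsets; // ascending
};

// Hypothetical per-bar scheduler: fed the current phasor value, it returns
// every note whose onset the phasor has moved beyond since the last call.
class BarPlayer {
public:
    explicit BarPlayer(Bar bar) : bar_(std::move(bar)) {
        assert(bar_.pitches.size() == bar_.onsets.size());
    }

    std::vector<int> update(float currentTime) {
        std::vector<int> due;
        while (next_ < bar_.onsets.size() && currentTime > bar_.onsets[next_]) {
            due.push_back(bar_.pitches[next_]);
            ++next_;
        }
        return due;
    }

    bool finished() const { return next_ >= bar_.onsets.size(); }
    void restart() { next_ = 0; } // used when the performer loops the bar

private:
    Bar bar_;
    std::size_t next_ = 0; // index of the next note to check
};
```

For example, with onsets {1.0, 1.5, 2.0}, a phasor reading of 1.25 releases the first note and a reading of 2.5 releases the remaining two. The strict “greater than” comparison follows the text: a note sounds once currentTime has increased beyond its onset.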

Once the complete bar has been played, the performer returns to the beginning of the bar, and the ‘progress’ variable increases by the factor set in ofApp::setup(). When progress rises above the value of ‘threshold’, the performer moves on to play the next bar. As each performer is an instance of the Performer class, they all behave in the same way. The challenge then becomes keeping all of the performers aligned, preventing some from progressing too slowly and others too quickly. For this reason, in ofApp::audioRequested() we iterate through the array of performers to see which bar each is currently playing. This value (‘currentBar’) is then compared against the currentBar of every other performer. If the difference is greater than three bars, the dragging performer progresses to the next bar once they finish their current bar.
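A minimal sketch of the progress/threshold rule and the three-bar check, assuming a simple PerformerState struct in place of the real Performer class. In the original the comparison runs inside ofApp::audioRequested(); here it is a free function. Resetting progress to zero on each new bar, and holding a performer on the last bar rather than ending the piece, are assumptions of this sketch.

```cpp
#include <cassert>
#include <vector>

constexpr int kNumBars = 53; // fragments in In C
constexpr int kMaxLag = 3;   // bars a performer may fall behind

// Hypothetical per-performer state; names are illustrative.
struct PerformerState {
    int currentBar = 0;        // 0-based index of the fragment being played
    float progress = 0.0f;     // grows by 'factor' each time the bar completes
    bool forceAdvance = false; // set when this performer is dragging
};

// Compare every performer's currentBar with every other performer's;
// anyone more than kMaxLag bars behind is flagged to move on.
void flagDraggingPerformers(std::vector<PerformerState>& performers) {
    for (auto& p : performers) {
        for (const auto& other : performers) {
            if (other.currentBar - p.currentBar > kMaxLag) {
                p.forceAdvance = true;
                break;
            }
        }
    }
}

// Called when a performer reaches the end of its bar: accumulate progress,
// and move on once it passes the threshold or the performer was flagged.
void onBarFinished(PerformerState& p, float factor, float threshold) {
    assert(p.currentBar >= 0 && p.currentBar < kNumBars);
    p.progress += factor;
    if (p.progress > threshold || p.forceAdvance) {
        if (p.currentBar < kNumBars - 1) {
            ++p.currentBar;
        }
        p.progress = 0.0f; // assumption: progress restarts for each new bar
        p.forceAdvance = false;
    }
}
```

Flagging the performer rather than moving them immediately keeps the behaviour described above: a dragging performer finishes their current bar before catching up, so no fragment is cut short.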

The audio is complemented by a very simple visual component, in which constantly updating information about the notes, and how they are decided upon, is displayed for every performer. The code for this was adapted from Dan Wilcox's ofxMIDI 'midiOutputExample' [Footnote 5].

When deciding to make a version for an ensemble of Raspberry Pis, I had initially wanted to run this code on every Pi, with the Pis connected over Wi-Fi. However, I came across a major problem: the sample libraries and synthesisers I had been carefully curating would not run on Linux. I was therefore faced with several options: build my own rudimentary sample player; attempt to synthesise the sounds with code; or make a recording of the software in action and write a small program to play the files across each Pi synchronously. Given the sound quality I wanted to achieve and the timescale I was facing, I decided upon the latter. I therefore made a screen recording of a full run-through of the piece, and from it created a video for each performer combining that performer’s data with their instrument’s audio track. Each performer listens to a ‘conductor’ computer, in this case an iMac displaying the musical score, which sends out an OSC message to trigger the videos. Once all of the videos have finished, the system triggers them again.
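The conductor’s trigger is an ordinary OSC message. An OSC library such as openFrameworks’ ofxOsc addon would normally build it; the sketch below only shows what travels over the network, following the OSC 1.0 encoding rules (NUL-terminated strings padded to a multiple of four bytes, big-endian int32 arguments). The /play address and the performance-number argument are hypothetical, since the text does not say which message is sent.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Append an OSC-string: the characters, a terminating NUL, then NUL padding
// to the next multiple of 4 bytes. Assumes 'out' is already 4-byte aligned.
void appendOscString(std::vector<std::uint8_t>& out, const std::string& s) {
    out.insert(out.end(), s.begin(), s.end());
    out.push_back(0);
    while (out.size() % 4 != 0) {
        out.push_back(0);
    }
}

// Append a 32-bit integer argument in big-endian byte order.
void appendInt32(std::vector<std::uint8_t>& out, std::int32_t value) {
    std::uint32_t u = static_cast<std::uint32_t>(value);
    for (int shift = 24; shift >= 0; shift -= 8) {
        out.push_back(static_cast<std::uint8_t>((u >> shift) & 0xFF));
    }
}

// Build a complete OSC message with a single int32 argument,
// e.g. makeOscMessage("/play", 1) as a hypothetical "start performance 1".
std::vector<std::uint8_t> makeOscMessage(const std::string& address,
                                         std::int32_t arg) {
    assert(!address.empty() && address[0] == '/');
    std::vector<std::uint8_t> msg;
    appendOscString(msg, address); // address pattern
    appendOscString(msg, ",i");    // type tag string: one int32
    appendInt32(msg, arg);         // the argument itself
    return msg;
}
```

Each Pi would receive this packet (typically over UDP) and start its video when the address matches; a message of a few bytes is easy to broadcast to every performer at once.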

The end result for the observer is the same: they are seeing a computer system make decisions about how to play Terry Riley’s In C. What is now lacking is the real-time nature of the decisions, and the variation that comes with each performance. I believe that in the setting in which In C++ will imminently be shown, this is not an issue. Observers are unlikely to stay long enough to hear an entire performance (for this exhibition each performance will last about 24 minutes), let alone become so familiar with it that they can recognise it as the same one they just heard. The communication of both my ideas and Riley’s is still intact, though admittedly it is somewhat undermined once you learn that the piece is not happening in real time. I believe that for now this solution provides a stable, good-sounding end product, but I would definitely want to continue working towards my initial idea of fully autonomous, real-time performers during future development. I would tackle this by looking further into sample players that are compatible with the Raspberry Pi, and whether I can load my collection of samples into them. If this proves unfruitful, I would like to attempt to build my own sample player with several velocity layers, allowing for a spectrum of soft, medium, and loud sounds for each note. Other aspects I would like to explore in future development are lighting rigs driven by the same MIDI data that determines the notes played, creating a tightly linked audiovisual experience, and the automatic switching of sound palettes after each performance when installed in a gallery: it might first run using one set of instruments, then move on to drones, then a percussion version, before returning to the original instrumentation.
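At its core, the velocity-layered sample player proposed above is a lookup from MIDI velocity to a recording. A minimal sketch, assuming each note has a list of layers sorted by an inclusive upper velocity bound; the VelocityLayer struct, the pickSample() function, the file names and the three thresholds are placeholders, not the author’s sample library.

```cpp
#include <cassert>
#include <string>
#include <vector>

// One dynamic layer of a sampled note (hypothetical type).
struct VelocityLayer {
    int maxVelocity;    // inclusive upper bound, 1-127
    std::string sample; // recording used for this dynamic
};

// Return the sample whose layer covers 'velocity'. Layers must be sorted by
// maxVelocity, and the last layer must reach 127. Velocity 0 is excluded
// because a Note On with velocity 0 conventionally means Note Off.
const std::string& pickSample(const std::vector<VelocityLayer>& layers,
                              int velocity) {
    assert(!layers.empty() && layers.back().maxVelocity >= 127);
    assert(velocity >= 1 && velocity <= 127);
    for (const auto& layer : layers) {
        if (velocity <= layer.maxVelocity) {
            return layer.sample;
        }
    }
    return layers.back().sample; // only reached if the preconditions are broken
}
```

A player would call pickSample() on each Note On, so the soft, medium and loud recordings would follow the same velocity decisions the virtual performers already make.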

Source code:

Latest code: http://gitlab.doc.gold.ac.uk/gwhit011/InCPlusPlus

Previous code: https://github.com/gregwht/InCplusplus

References:

[1] Riley, Terry. In C Performance Directions. 1964.

[2] My previous submission of In C++ for Rebecca Fiebrink's Workshops in Creative Coding module can be viewed on my GitHub.

[3] ofxMIDI addon, available at https://github.com/danomatika/ofxMidi

[4] ofxMaxim addon, available at https://github.com/micknoise/Maximilian. Some code, particularly the ofApp::audioRequested() and ofxMaxiSettings::setup() methods, has been adapted from the Maximilian examples.

[5] Dan Wilcox's ofxMIDI midiOutputExample, available at https://github.com/danomatika/ofxMidi/blob/master/midiOutputExample/src/ofApp.cpp