Salty Bitter Sweet

The Machine has made itself, quietly, unnoticed, in a back room. From partially broken and discarded components. Now it tries to explore and understand the world in which it finds itself. It tastes what it sees - abandoned pieces of junk, rotting matter. In response, tentatively, it begins to express itself, in machinic love poetry and soundscape.

produced by: Laura Dekker

Introduction

Salty Bitter Sweet developed out of my recent experiments with machine learning, but it is also part of a much longer-term line of investigation in my art practice: how do we experience, make sense of, and construct ourselves and our world? In particular, I am interested in the reciprocal roles of technologies in these processes, and the special facility within computation for synaesthesia: taking one form of data and transforming it into another. These transformations not only present the possibility of creativity by machines, but also provide a rich set of routes for interactivity with an audience.

This project has gone through several iterations, including two exhibitions, developing each time in response to audience feedback and new artistic considerations. The installation is made of hardware and software systems that I have developed, connected to machine learning and natural language processing modules from other research groups.

Concept and background research

This artwork is about a machine that has constructed itself, and is on the edge of becoming conscious. It has become just sophisticated enough to make earnest attempts to explore and understand its world, and to express itself in its own unique and slightly awkward way. The installation is specific to each instantiation and location, emphasising a certain urgency: The Machine has grown out of this place, these materials, at this moment in time.

There is a surprisingly long history of machine creativity. Ada Lovelace had the insight that the numbers manipulated by a computer could stand as symbols for anything, including music or words. This opens up the possibility not only of using a computer creatively, but also of synaesthesia: the symbols can shift their expression from one domain to another - from visual image, to taste, to words, to sound.

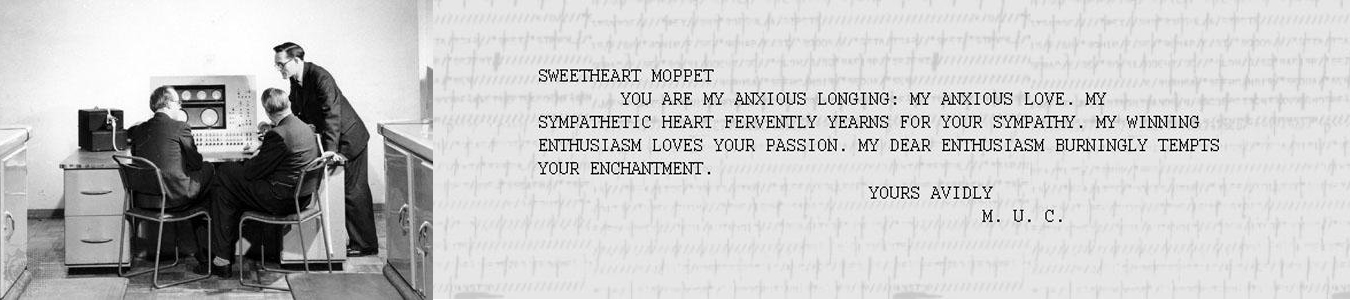

Christopher Strachey, who worked alongside Alan Turing in the early 1950s, programmed the Manchester University Computer to create ‘machinic love letters’, which he displayed on the lab walls. His algorithm used a structured form, where words with the same part of speech are randomly interchangeable, to generate new output. In 2009 artist David Link constructed an installation as a functional equivalent of the Manchester Ferranti Mark 1, which audiences could attempt to operate, and which would generate love letters in the same way.

Many researchers, such as those at the Neukom Institute, pursue the goal of machines creating output indistinguishable from that of a human creative practitioner (their Turing Tests in the Creative Arts include competitions for sonnets, music, dance, etc.). Others investigate the possibility that a machine might create using its own non-human judgement. Goriunova (2014) links Strachey’s playful creativity with investigations into machines not just mimicking human creativity, but possibly finding creativity and play on their own terms (Parisi and Fazi 2014). It is arguable that such a thing is not possible, since the human is always a mediator in some way and human influence cannot be removed from the system.

But this project is not a Turing test; that is an explicit artistic decision. This ‘non-Turing-Test machine creativity’ currently attracts intense interest from other artists, as evidenced by recent cross-disciplinary symposia, such as Language Game[s], 2017, and exhibitions such as Langages Machines, 2017.

Development

Prototype, test, exhibit, redevelop...

In my art practice, I work by a process of prototype, test, exhibit, redevelop, test, exhibit... aiming to get a working version out into the world as quickly as possible. Once the work is considered as an actual artwork - an interactive installation - some artistic decisions lead to technical reworking and new technical challenges. This work relies on a careful balance and tension between structure, expectation and surprise. The concept lends itself to a quite raw and rough aesthetic. For my first opportunity to exhibit it, at the V&A Digital Futures exhibition in July 2017, I was concerned that I might have gone too far toward ‘roughness’, but the responses from a range of audience members reassured me that I was more or less on the right track. The main development for the Overlap show (Sept 2017) was to separate out a two-channel projection, which presented some engaging technical and practical challenges.

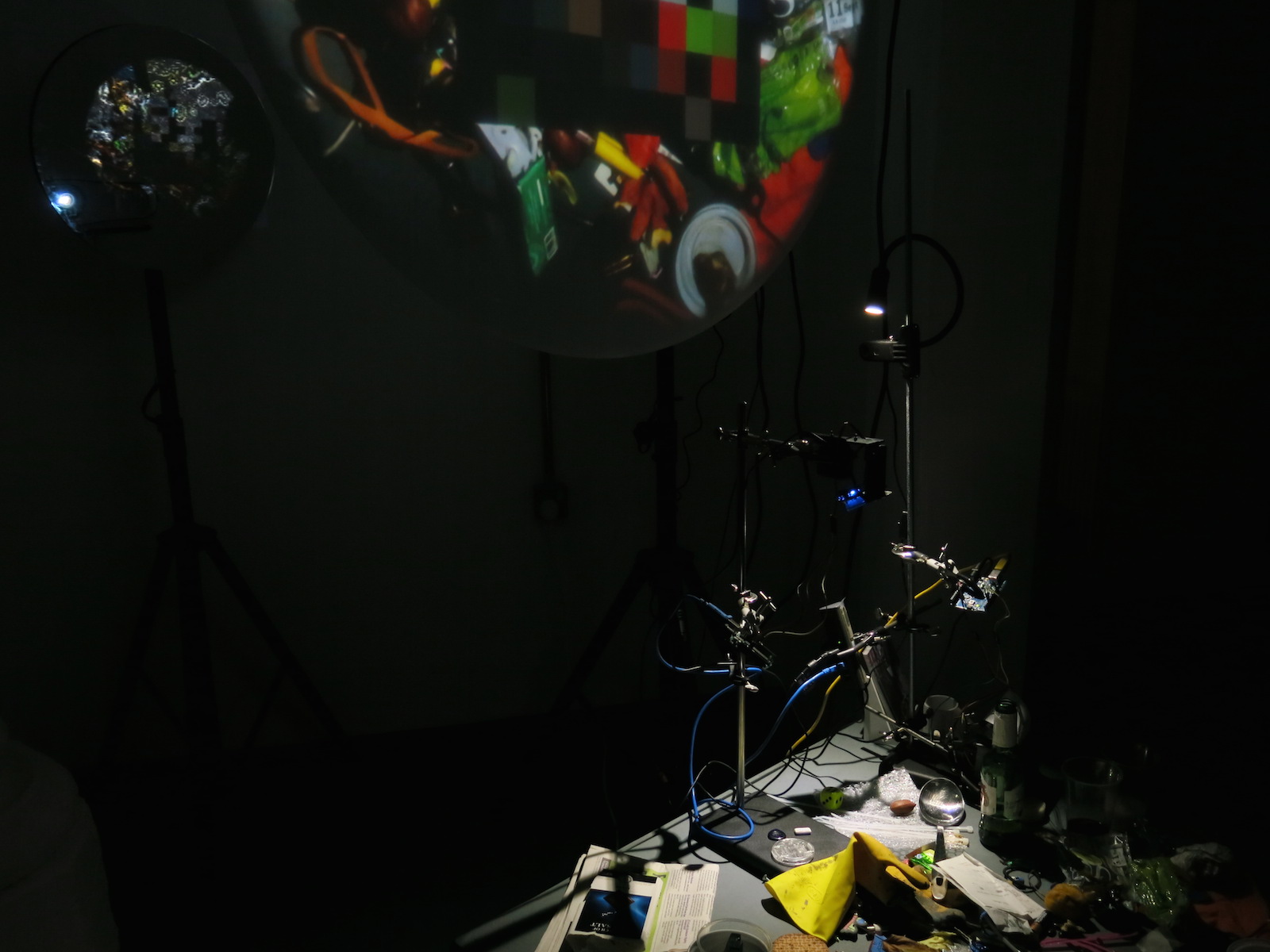

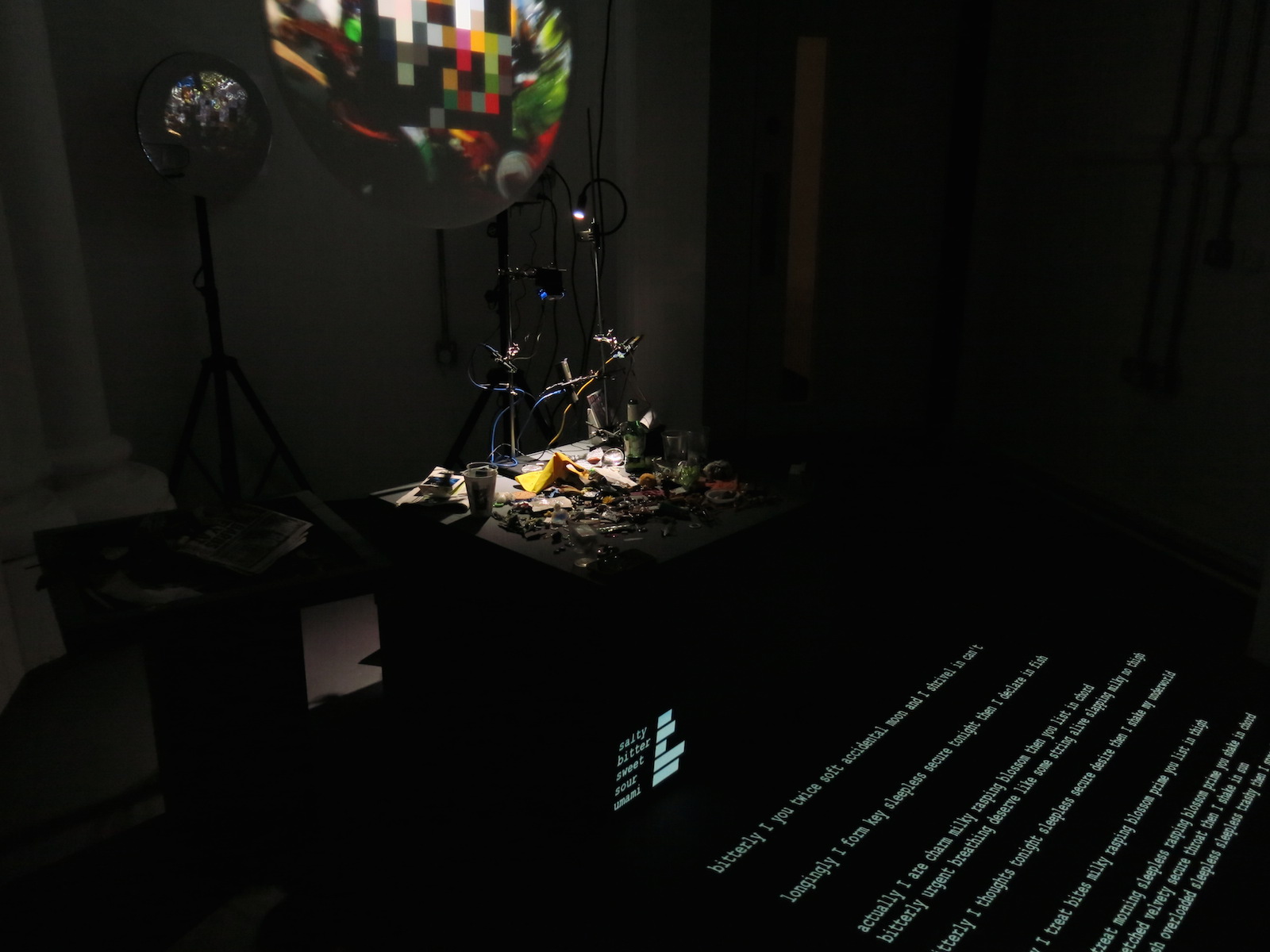

Input and output

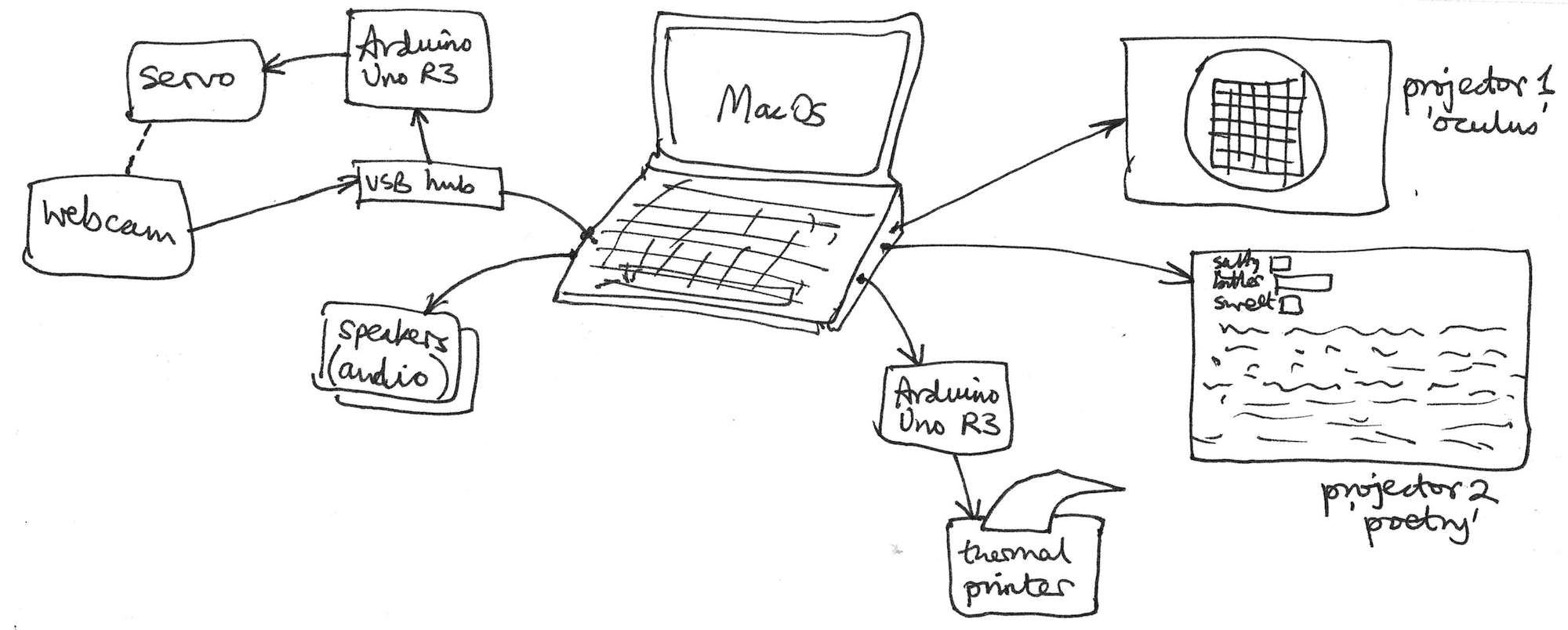

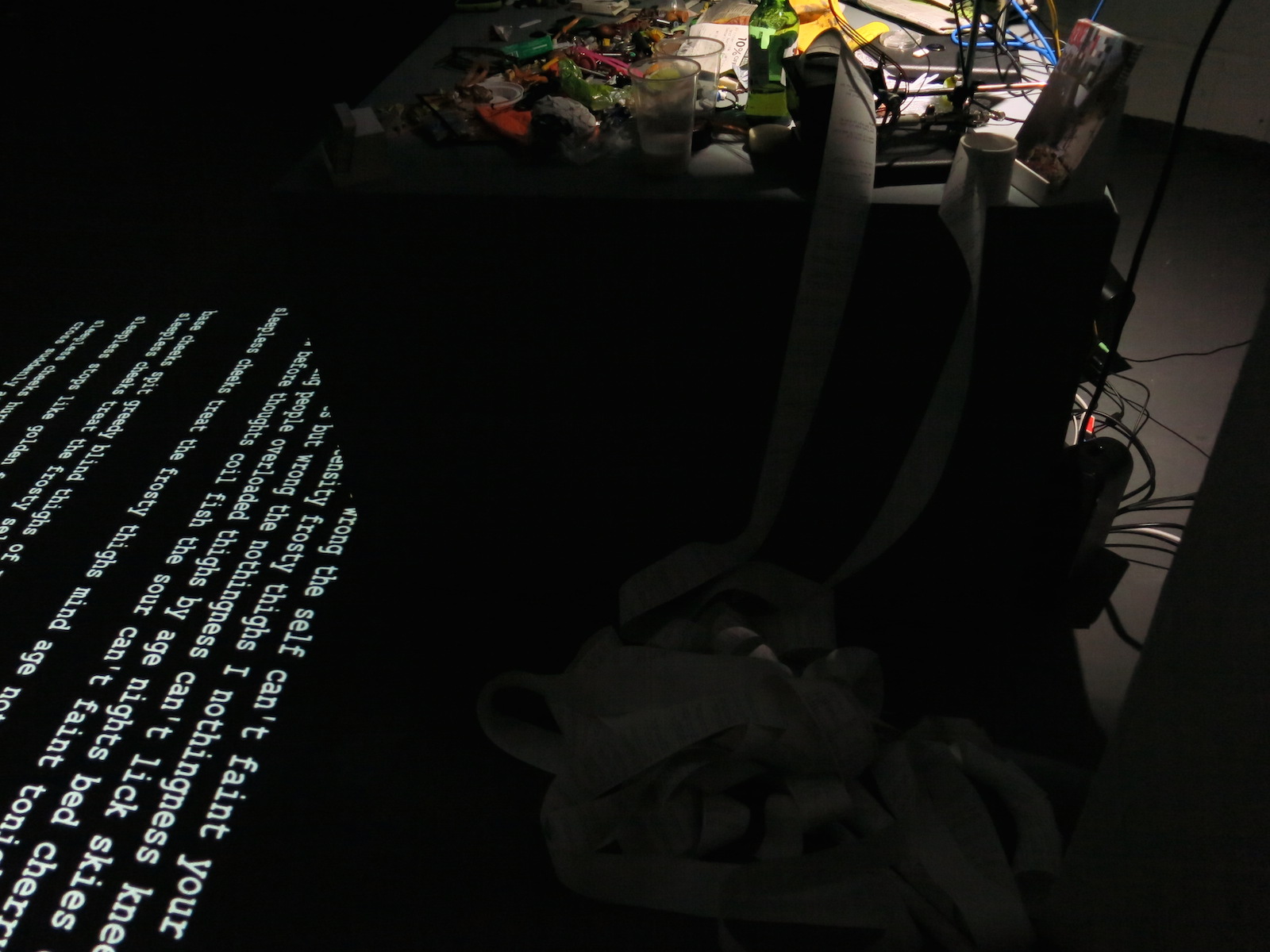

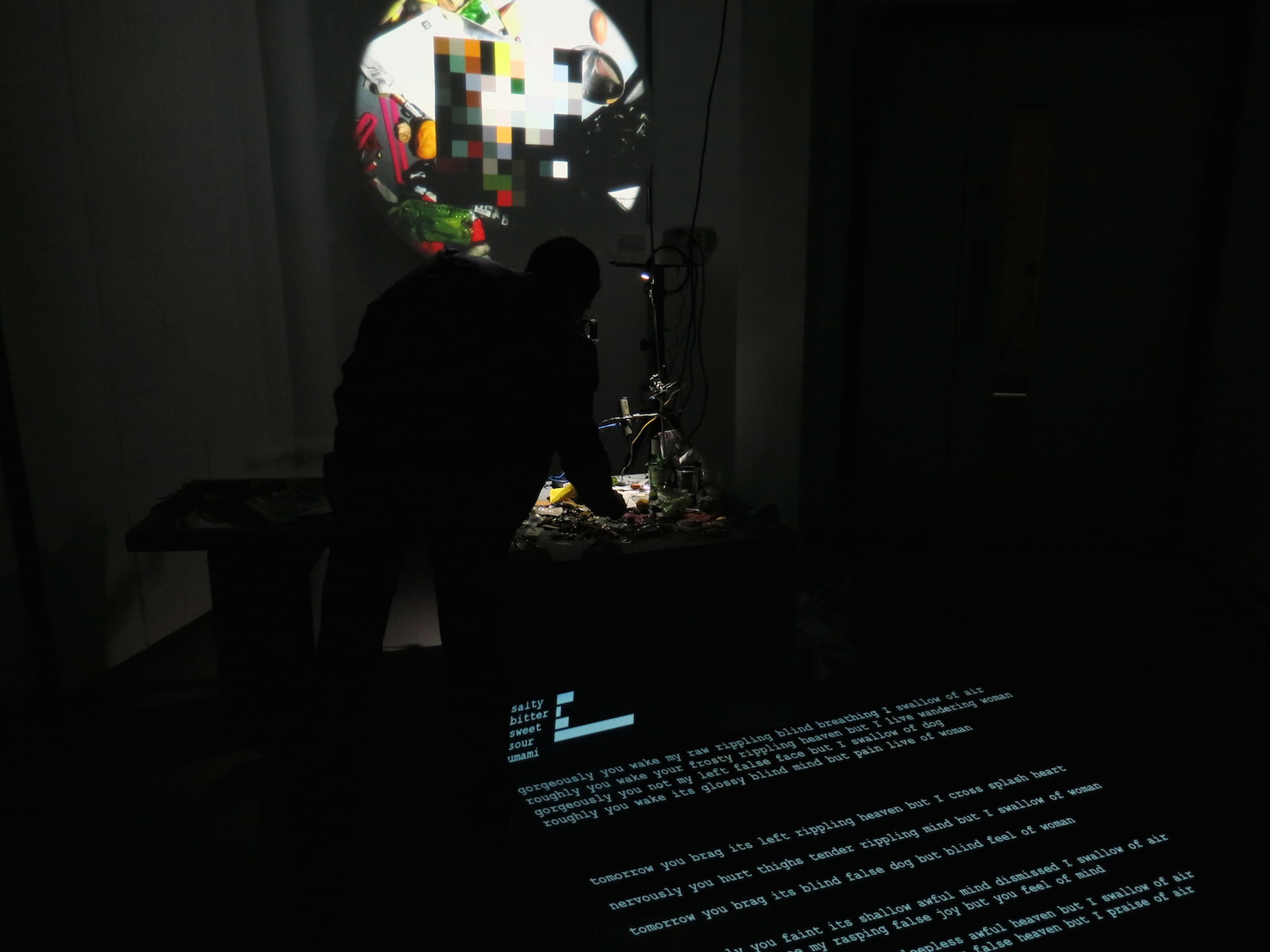

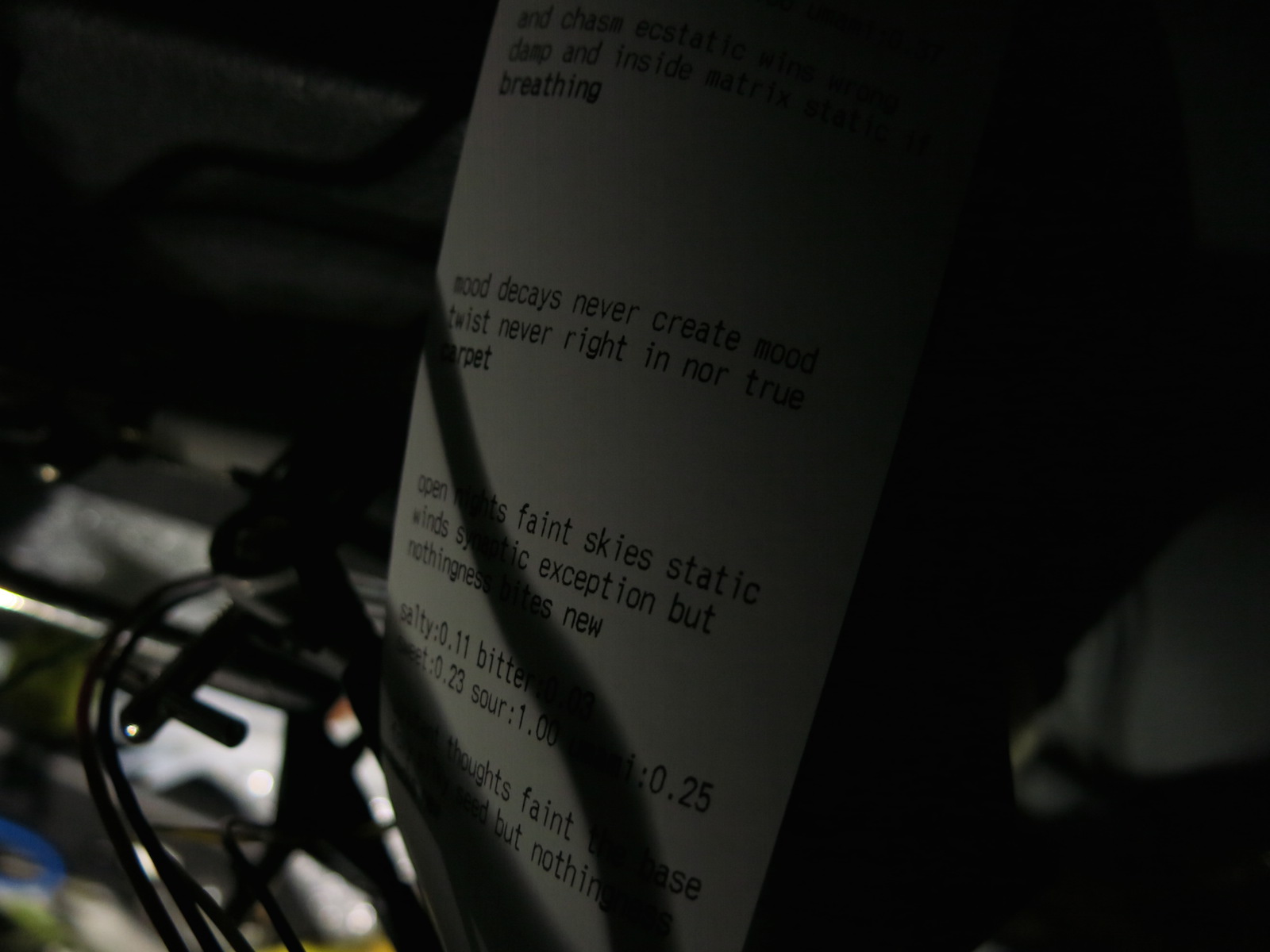

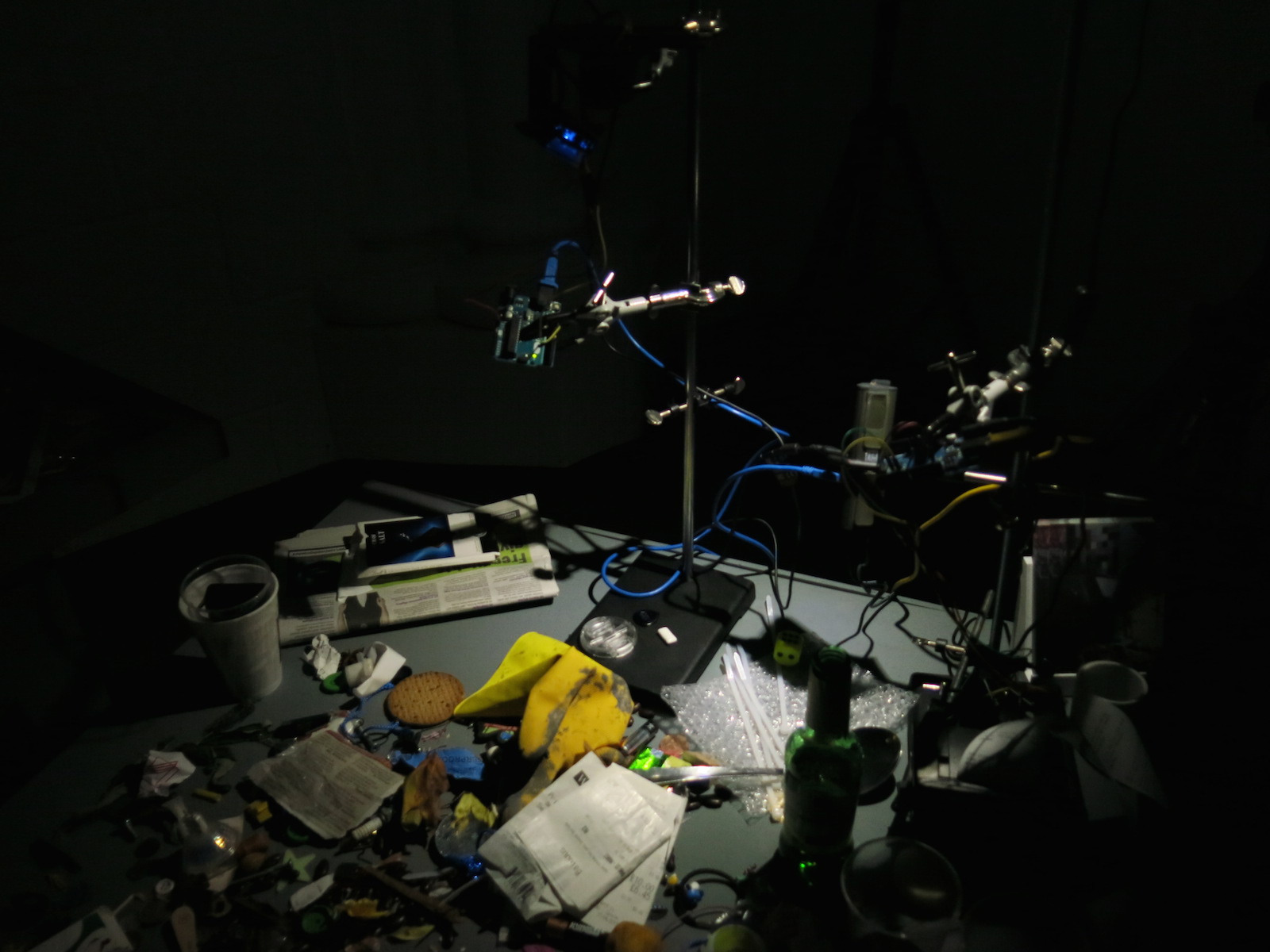

The main system input comes from a webcam moved by a servo. The webcam points downwards and scans a collection of junk for objects of interest. I provide some of the junk at the start, and the audience adds more over time. This junk is the channel for audience interaction. The Machine displays what it is seeing and tasting, and the poetry it is creating, via two projections. Selected poetry fragments are sent to a thermal printer, which outputs a fragment every minute or so, growing an increasingly long ribbon of paper. A composed stereo audioscape plays via a pair of speakers.

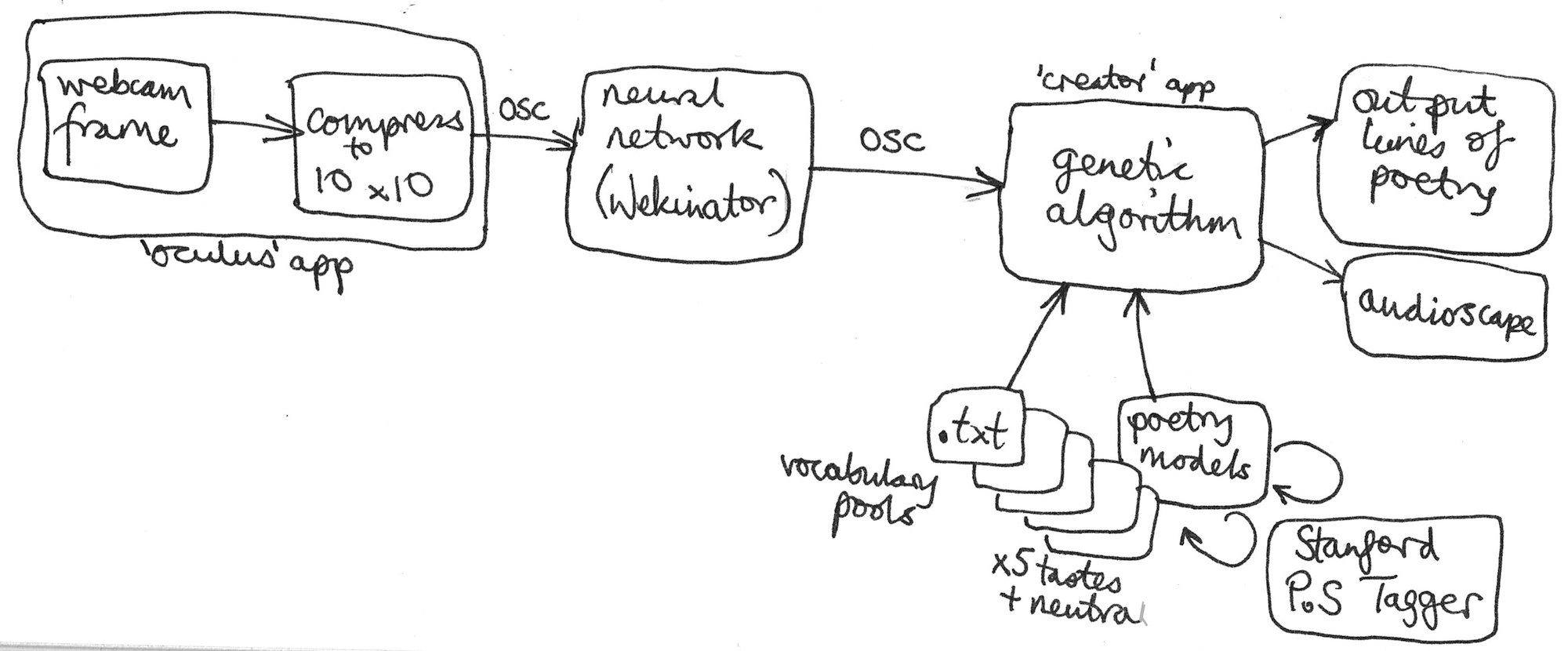

Software system

Two applications, written in C++/openFrameworks, communicate via OSC with the machine-learning system Wekinator. The ‘oculus’ application takes the live webcam stream, compresses each frame to a 10x10 pixel image, and passes the compressed frame to a neural network. The neural network has been trained, using a set of video clips of decaying matter and various mixed types of junk, to produce five continuous outputs (0.0-1.0), corresponding to the levels of five tastes ‘contained’ in each frame: salty, sweet, bitter, sour, umami. The output from the neural network is sent via OSC to the ‘creator’ application, which uses the taste levels to steer the generation of poetry fragments and an audioscape. The audioscape uses the taste levels to control separately the volumes of five sampled sound streams, one for each taste.
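As a rough illustration of the frame compression step, the sketch below block-averages a grayscale frame down to a 10x10 grid, giving 100 values that could serve as inputs to a neural network. It is a minimal sketch in plain C++, not the installation's openFrameworks code: the function name `downsample`, the row-major grayscale layout, and the choice of block averaging are all assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: shrink a grayscale frame (row-major, values 0.0-1.0)
// to an outSize x outSize grid by averaging the pixels in each block.
// Assumes width and height are both at least outSize.
std::vector<float> downsample(const std::vector<float>& frame,
                              std::size_t width, std::size_t height,
                              std::size_t outSize = 10) {
    assert(frame.size() == width * height);
    assert(outSize > 0 && width >= outSize && height >= outSize);

    std::vector<float> out(outSize * outSize, 0.0f);
    for (std::size_t oy = 0; oy < outSize; ++oy) {
        // Source rows covered by this output row: [y0, y1)
        const std::size_t y0 = oy * height / outSize;
        const std::size_t y1 = (oy + 1) * height / outSize;
        for (std::size_t ox = 0; ox < outSize; ++ox) {
            // Source columns covered by this output column: [x0, x1)
            const std::size_t x0 = ox * width / outSize;
            const std::size_t x1 = (ox + 1) * width / outSize;
            float sum = 0.0f;
            for (std::size_t y = y0; y < y1; ++y)
                for (std::size_t x = x0; x < x1; ++x)
                    sum += frame[y * width + x];
            const std::size_t count = (y1 - y0) * (x1 - x0);
            out[oy * outSize + ox] = sum / static_cast<float>(count);
        }
    }
    return out;
}
```

For a 640x480 frame, `downsample(frame, 640, 480)` would return 100 values, one per cell of the grid. Averaging is only one option; picking a single pixel per cell would be cheaper but noisier.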

Creating the poetry

The poetry generator uses a genetic algorithm to manipulate strings of words. The current value of each taste variable determines the probability that words will be picked from that ‘flavoured’ vocabulary pool. The genetic algorithm’s fitness function tags candidate texts using the Stanford Natural Language Processing Group’s Part-of-Speech Tagger, and scores them against tagged model structures from Shakespeare’s sonnets. The comparison is made not on the words themselves, but on the part-of-speech tags. I selected the candidate vocabulary from the sonnets, with a few words thrown in from the world of computing, mainly Chirlian’s Programming in C++ (since it is the machine writing its own particular genre of love poetry). To give the audience freedom for interpretation, I carefully chose words that span multiple parts of speech (‘spit’ as verb or noun; ‘wet’ as adjective or verb, etc.) and carry maximum ambiguity, including some that could be used (though not exclusively) in a sexual context. Because the vocabulary is read from a set of text files at run time, very different vocabulary pools can be swapped in, depending on the particular artistic context.
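The two ideas in this section, taste-weighted word selection and scoring on tags rather than words, can be sketched roughly as follows. This is an illustrative sketch only, not the code used in the installation: the names `Pools`, `pickWord` and `tagFitness` are hypothetical, the tag sequences are assumed to come from an external tagger, and the scoring rule (fraction of matching positions) is an assumption. The genetic algorithm's mutation and crossover steps are not shown.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <random>
#include <string>
#include <vector>

// One vocabulary pool per taste, in the order: salty, sweet, bitter, sour, umami.
using Pools = std::array<std::vector<std::string>, 5>;

// Pick a word: choose a pool with probability proportional to its taste
// level, then draw a word uniformly from that pool.
std::string pickWord(const Pools& pools, const std::array<double, 5>& tastes,
                     std::mt19937& rng) {
    const double total = std::accumulate(tastes.begin(), tastes.end(), 0.0);
    std::size_t poolIndex = 0;
    if (total > 0.0) {
        std::discrete_distribution<int> byTaste(tastes.begin(), tastes.end());
        poolIndex = static_cast<std::size_t>(byTaste(rng));
    } else {
        // All taste levels are zero: fall back to a uniform choice of pool.
        std::uniform_int_distribution<std::size_t> anyPool(0, pools.size() - 1);
        poolIndex = anyPool(rng);
    }
    const std::vector<std::string>& pool = pools[poolIndex];
    assert(!pool.empty());
    std::uniform_int_distribution<std::size_t> anyWord(0, pool.size() - 1);
    return pool[anyWord(rng)];
}

// Assumed fitness rule: the fraction of positions where the candidate's
// part-of-speech tag equals the model's tag, divided by the longer length
// so that a length mismatch lowers the score. Words are never compared.
double tagFitness(const std::vector<std::string>& candidateTags,
                  const std::vector<std::string>& modelTags) {
    const std::size_t longest = std::max(candidateTags.size(), modelTags.size());
    if (longest == 0) return 0.0;
    const std::size_t shared = std::min(candidateTags.size(), modelTags.size());
    std::size_t matches = 0;
    for (std::size_t i = 0; i < shared; ++i)
        if (candidateTags[i] == modelTags[i]) ++matches;
    return static_cast<double>(matches) / static_cast<double>(longest);
}
```

With taste levels of, say, 0.8 for salty and 0.2 for sweet and zero elsewhere, roughly four words in five would come from the salty pool. A candidate tagged `DT NN VBD` scored against a model tagged `DT NN VBZ` would get two thirds under this rule.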

Installation

For the Overlap exhibition, I decided to back-project the circular ‘oculus’ onto frosted Perspex, aiming to create a vivid and sharp glimpse into the perceptual processes of The Machine. It was crucial that this be executed perfectly, to allow the rest of the installation to have its rough, ‘junky’ aesthetic. Given the limited space, I folded the projection using a laser-cut oval acrylic mirror. To make the image fill the circular Perspex surface exactly, without overspill, I added features in software for runtime control of the projection position, and transformations to correct for distortions, shearing, etc. Since the two main software components are projected separately, I used the macOS extended desktop, so that each runs fullscreen on the same computer.
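One way to picture the runtime correction is as a small set of adjustable parameters applied to every point of the image before it is drawn. The sketch below assumes a simple 2D affine correction (offset, scale, shear) and a check that a corrected point stays within the circular surface. The struct and function names are hypothetical, and the installation's actual transformations may well differ.

```cpp
#include <cassert>

struct Point { double x, y; };

// Hypothetical correction parameters, adjustable while the piece is running.
struct Correction {
    double offsetX = 0.0, offsetY = 0.0;  // position of the image
    double scaleX = 1.0, scaleY = 1.0;    // stretch along each axis
    double shearX = 0.0, shearY = 0.0;    // skew along each axis
};

// Apply scale and shear, then the offset:
//   x' = scaleX * x + shearX * y + offsetX
//   y' = shearY * x + scaleY * y + offsetY
Point correctPoint(const Correction& c, Point p) {
    Point q;
    q.x = c.scaleX * p.x + c.shearX * p.y + c.offsetX;
    q.y = c.shearY * p.x + c.scaleY * p.y + c.offsetY;
    return q;
}

// True if a point lies within a circular surface of the given radius
// centred at (cx, cy), i.e. it would not overspill the disc.
bool insideDisc(Point p, double cx, double cy, double radius) {
    assert(radius > 0.0);
    const double dx = p.x - cx;
    const double dy = p.y - cy;
    return dx * dx + dy * dy <= radius * radius;
}
```

Nudging `offsetX` and `offsetY` from the keyboard would slide the image across the surface, while `shearX` and `shearY` could counter the skew introduced by an off-axis projector. A negative `scaleX` would flip the image horizontally, which is one way a mirror reversal might be undone.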

Most of the hardware parts, and the collection of junk, are placed on a low, wide plinth. The ‘taste meters’ and stream of text are projected partly onto the plinth, and partly spread over the floor, so that the projection falls on audience members as they pass by. The exposed machinic aesthetic extends through to all the hardware in the installation, including Arduinos, projector, mirror, clamps, retort stands, USB hub and cabling, so that they all become - visibly and conceptually - part of The Machine.

Future development

I will soon be presenting this work in an extended collaborative form at an exhibition, Virtuality Mortality, which I’m co-organising. The small collection of junk will be replaced by an enormous (5m x 4m x 2m) heap of decaying technology and other rotting matter. The webcam will be running along a suspended track using a linear actuator, as well as rotating via servo. The whole installation will be sited in a vast warehouse space, aiming to present a powerful ‘technological sublime’ presence.

The project at this point only touches lightly on current research in natural language computing. I plan to investigate that more deeply in the future, to create poetry that is slightly more grammatically correct (but not too correct), possibly by modelling the text as a Markov process.

Self evaluation

I realised, some way through making this work, that there is an autobiographical side to it - the machine as alter ego, slightly raw, exposing its vulnerability to the world. While taking care not to anthropomorphise (the machine ostensibly has created itself on its own terms and expresses itself on its own terms), I want the audience to empathise, somehow, with it. How can an entity, somewhat different from its ‘hosts’, negotiate its world, without being subsumed by it?

I had a good flow of feedback from technical and non-technical audience members. Of my works to date, it is probably the one audiences have enjoyed most deeply, possibly because the concept was not immediately obvious: it took some work to read and interpret through the installation’s cues, which made engaging with it a satisfying experience. Those who did not ‘get’ the intended back-narrative seemed to enjoy a sense of bewilderment at encountering the installation.

I was very pleased with the mirrored ‘oculus’ back-projection onto Perspex, which worked well, both technically and artistically. The decision to expose all the hardware and embrace it as a machinic whole created much of the emotional response - the rawness and vulnerability - that I wanted to elicit.

The collection of detritus changed as the exhibition progressed (some business cards, a biscuit, pieces of apple, newspapers, banana skin, empty cups, etc., were added and moved about), but the invitation to interact was not obvious to many of the visitors, so I will continue to seek a better way to draw people into more active engagement.

References

Chirlian, P. Programming in C++, Merrill, 1990.

Fiebrink, R. Wekinator and idea for input image compression, www.wekinator.org

Fondation Vasarely, Langages Machines, secondenature.org/-Exposition-Langages-Machines-.html

Freesound, additional sampled audio tracks by hanstimm, felix blume and eelke.

Fuller, M. et al, Software Studies: A Lexicon, MIT Press, 2008.

Goriunova, O. et al, Fun and Software: Exploring Pleasure, Paradox and Pain in Computing, Bloomsbury Press, New York and London, 2014.

Link, D. LoveLetters_1.0, www.alpha60.de/art/love_letters

Neukom Institute for Computational Science, bregman.dartmouth.edu/turingtests

Papatheodorou, T., original genetic algorithm code.

Parisi, L. and Fazi, M. B., “Do Algorithms Have Fun? On Completion, Indeterminacy and Autonomy in Computation”, in Goriunova, O., 2014.

Overlap Show, Goldsmiths MA/MFA Computational Arts, 2017, www.overlap.show

Shakespeare, W. Sonnets, State Street Press, New York, 2000.

Stanford University NLP Group, nlp.stanford.edu

Turing, A. “Computing Machinery and Intelligence”, Mind 59:433-460, 1950.

University of the Arts London, Language Game[s]: Poetry, Logic and Artificial Language, events.arts.ac.uk/event/2017/5/5/Language-Game-s-Poetry-Logic-and-Artificial-Language

V&A Digital Futures / Lumen Big Reveal, Hackney House, 2017, www.vam.ac.uk/event/8BoAWQ2K/digital-futures-lumen-big-reveal

Virtuality Mortality, Ugly Duck, London, 2017, uglyduck.org.uk