BOTHER

Direction, original concept, choreography, creative coding and video editing by: Clemence Debaig

BOTHER [/ˈbɒðə/] (verb) - Take the trouble to do something / Feel concern about or interest in / Cause trouble or annoyance by interrupting or otherwise inconveniencing someone.

BOTHER is an interactive and durational dance piece inviting the audience to influence, positively or negatively, the fate of the dancer in front of them.

It explores the notions of control, care and digital harassment, questioning how we behave differently when interacting with each other through the proxy of technology. It also intends to portray the impact of that alien agency, which can induce pleasure, joy, violence or pain.

BOTHER is set up as a social experiment, exploring how the audience will react when given the power to control the dancer. Will they behave in an empathetic way, allowing the dancer to be free and happy? Or will they act in a more sadistic way, generating violent reactions? Will they care more about the person in front of them or the entertainment they get from the performance?

Performers:

Clemence Debaig, Marialivia Bernardi, Ashley Kim Wakefield, Kristia Morabito, Oliver Charles, Marcello Licitra, Tracy Garcia, Konstantina Katsikari, Ana Vallejo Buitrago, Chloe Bellou, Pedro De Marchi

Technical details

Kinect

Arduino

openFrameworks

Using ofxCv, ofxOpenCv, ofxKinect and Keita Ikeda’s “cutout” class to align the projector and the Kinect

Concept and influences

The structure behind the concept

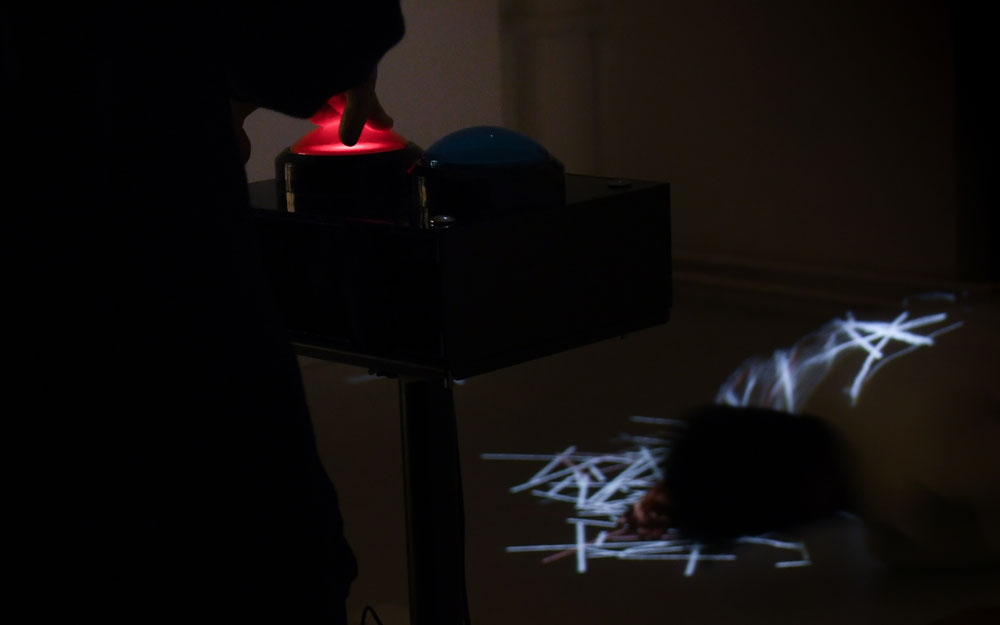

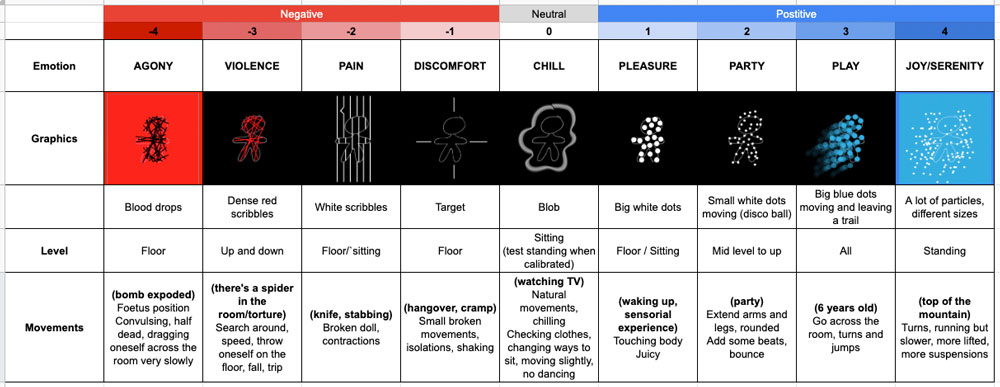

BOTHER offers the audience the ability to “control” the dancer in front of them. They have 2 pushbuttons available, one blue and one red. When either is pressed, the graphics projected onto the dancer change to express the selected level of emotion, and the dancer’s movements change accordingly. From the audience’s point of view, this gives a strong feeling of control.

Behind the scenes, BOTHER is made of 9 levels, 4 “positive”, 4 “negative” and one “neutral”. The pushbuttons act as + and - buttons, moving a value along the scale, increasing or decreasing the intensity towards the 2 extremes.

(-4) Agony

(-3) Violence

(-2) Pain

(-1) Discomfort

(0) Neutral

(1) Pleasure

(2) Inner joy

(3) Playfulness

(4) Serenity
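A minimal sketch of that scale in C++ (the language openFrameworks uses), assuming the level is a plain integer clamped to the range -4 to +4. The `EmotionScale` class and its method names are hypothetical, not taken from the project's code, and which button colour maps to `increase()` is also an assumption.

```cpp
#include <array>
#include <string>

// Hypothetical model of the 9-level scale: -4 (Agony) to +4 (Serenity).
// Each button press moves the value one step; presses past an extreme are ignored.
class EmotionScale {
public:
    void increase() { if (level_ < kMax) ++level_; }  // one button
    void decrease() { if (level_ > kMin) --level_; }  // the other button

    int level() const { return level_; }

    // Name of the current level, using the labels from the list above.
    std::string name() const {
        static const std::array<std::string, 9> kNames = {{
            "Agony", "Violence", "Pain", "Discomfort", "Neutral",
            "Pleasure", "Inner joy", "Playfulness", "Serenity"}};
        return kNames[level_ - kMin];  // shift -4..4 to an index 0..8
    }

private:
    static constexpr int kMin = -4;
    static constexpr int kMax = 4;
    int level_ = 0;  // assumed to start at Neutral
};
```

Clamping rather than wrapping means that pressing the same button repeatedly keeps the dancer at the extreme, which matches the idea of moving the value "towards the 2 extremes".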

Research and influences

There were 3 components that interested me at the beginning of the project: exploring the notion of control, including some form of audience participation, and playing with body projections to communicate different emotional states.

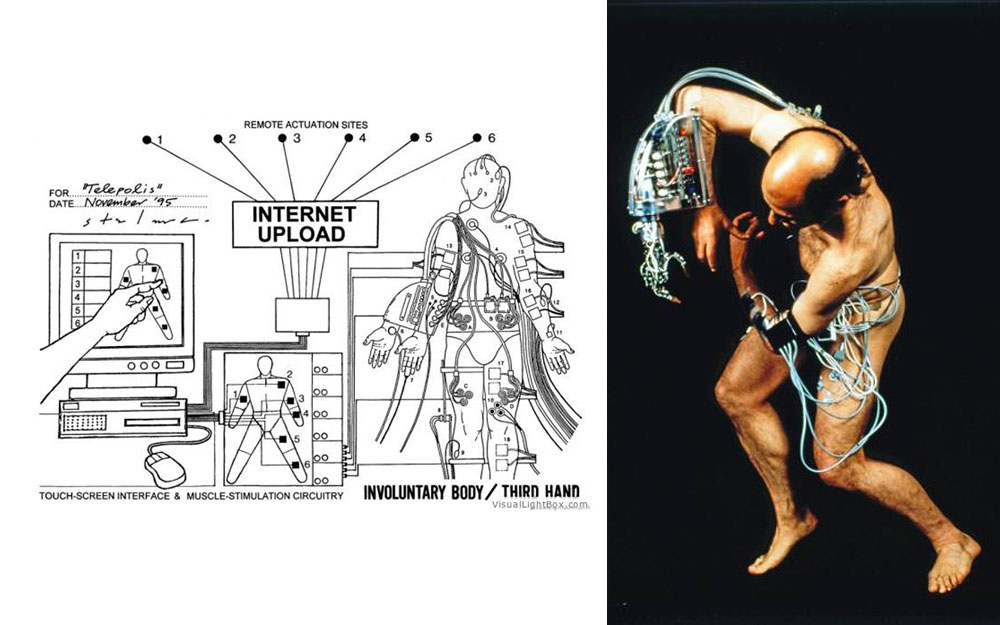

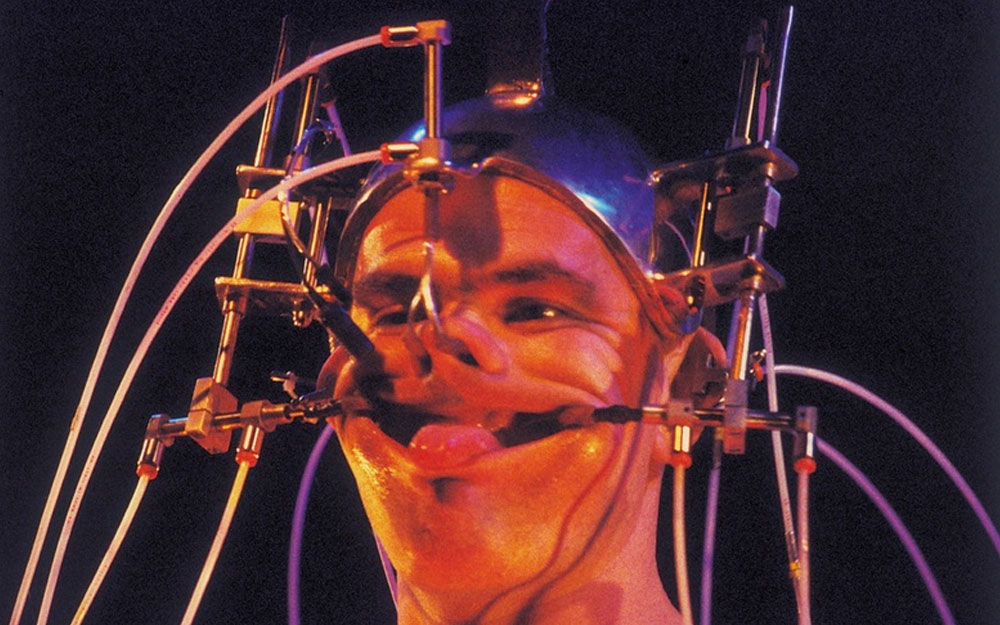

As part of my initial research, I looked into artists such as Stelarc with “Ping Body”, Marcel.li Antunez Roca with “Epizoo” and Marina Abramovic with “Rhythm 0”, all of whom explored what happens when control is given to the audience, and elements of alien agency in a performative context.

From a visual and dance point of view, I also looked into companies like Chunky Move and Company DCA (Philippe Decouflé), which have explored how computer vision and body projection can enhance the dancer’s performance.

Technical challenges

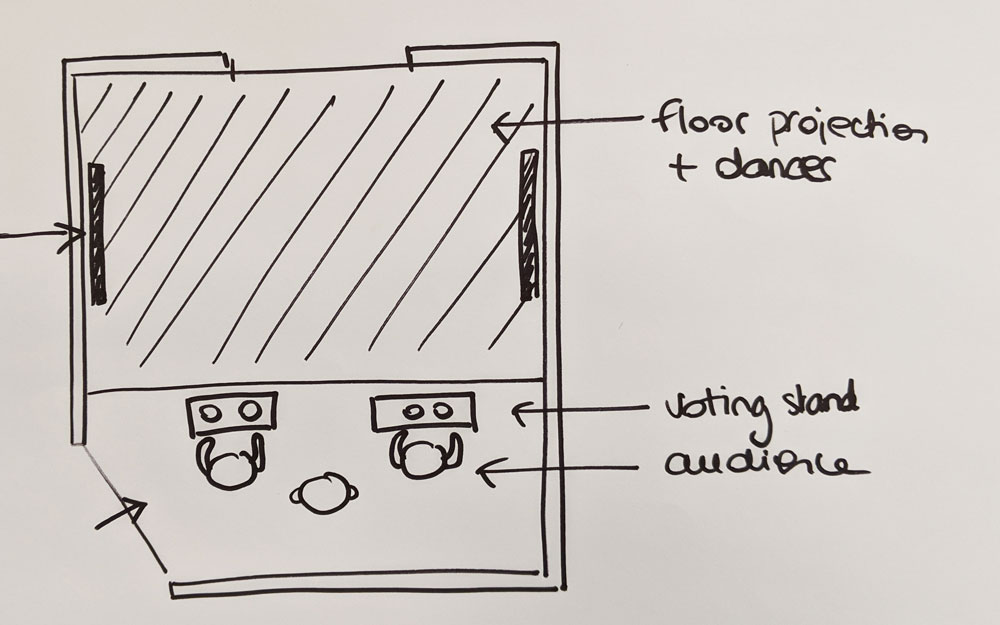

Room layout

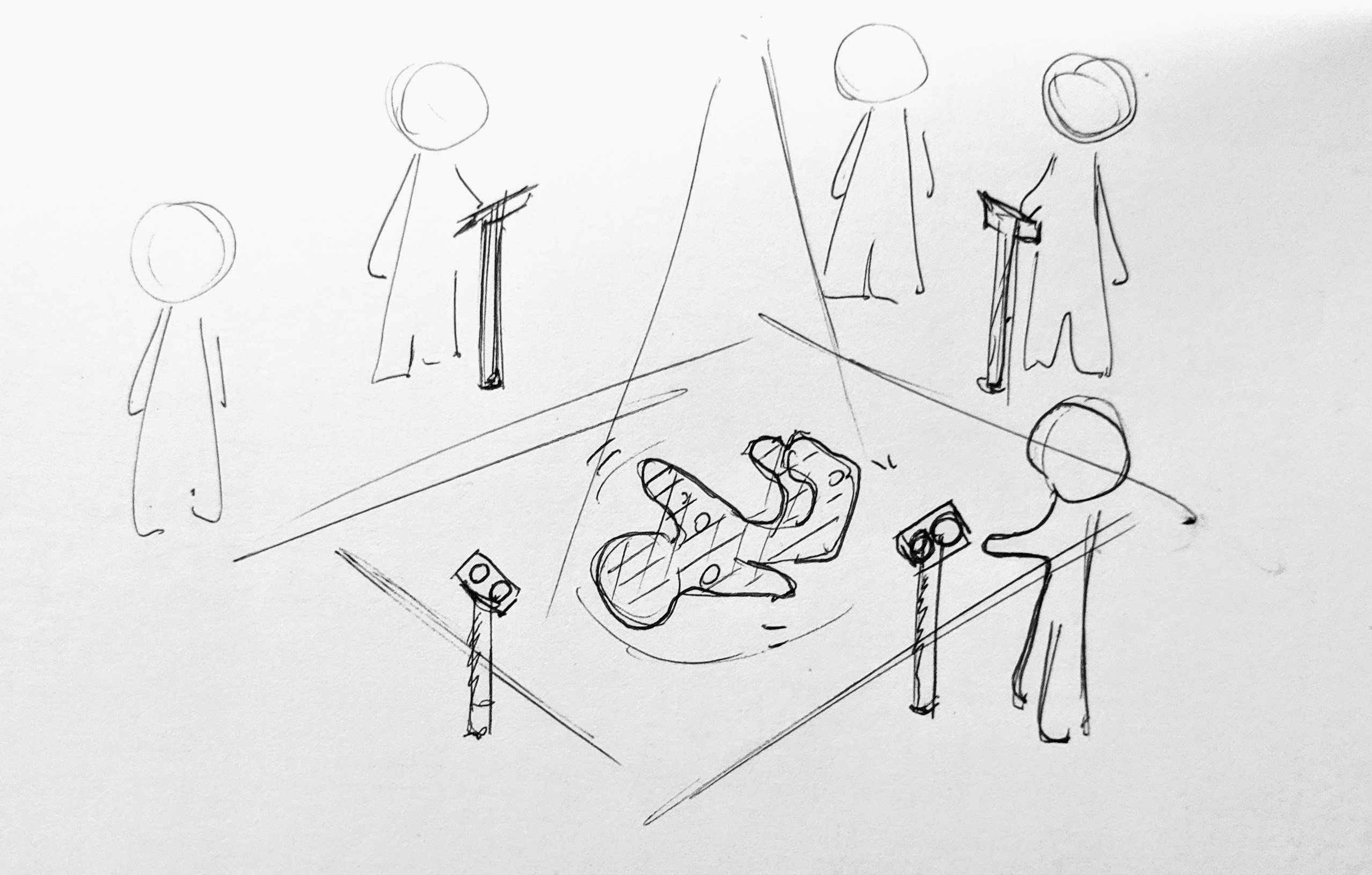

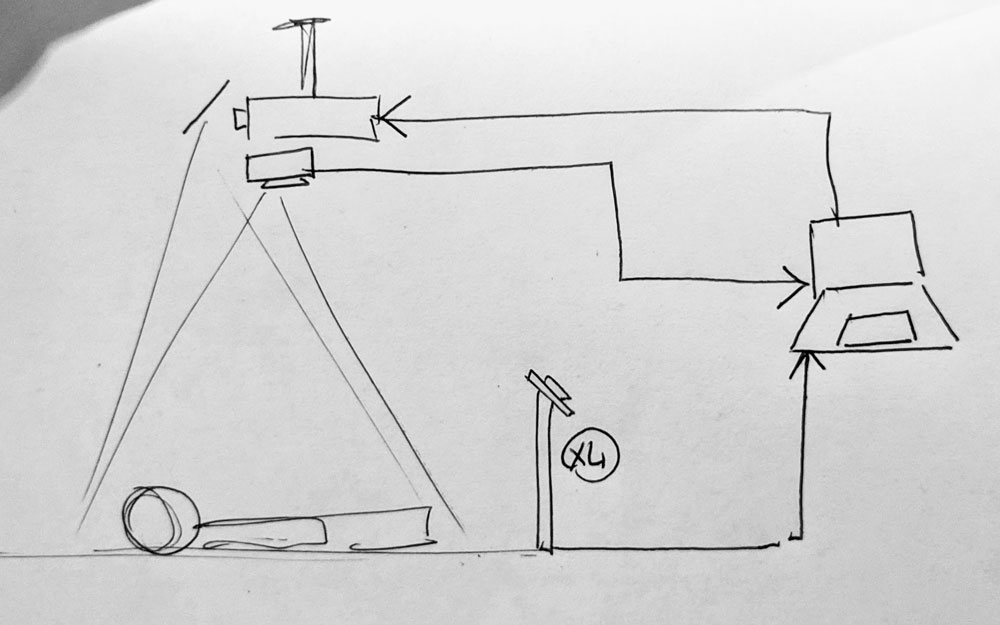

The first technical challenge was to work around the limits of the physical space and define the right setup for the room. The ceiling isn't very high, and I wanted a floor projection large enough to give the dancer space to move around.

I also wanted the projection to come slightly from the front, so that if a movement brought the dancer up to standing, the graphics would still land on the body and not only on his/her head.

I started by doing a few tests from the floor, with everything upside down: placing the equipment on the floor and pointing it at the ceiling. This showed me how big the projected image could be, as well as the limits of the Kinect.

This helped me define the best position for the projector and the Kinect before drilling into the ceiling.
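Why the ceiling height matters can be put into numbers. For a fixed-lens projector, the image width is roughly the throw distance divided by the throw ratio; when a mirror folds the beam, the distance that counts is the full optical path (projector to mirror, then mirror to floor). The sketch below only does that arithmetic. The function name and the example values (a 3 m path, a 1.5 throw ratio, a 16:9 image) are hypothetical, not measurements from the room.

```cpp
// Approximate size of a projected image for a fixed-lens projector.
// throwRatio = throw distance / image width (a figure from the projector's spec sheet).
struct ProjectionSize {
    double width;   // metres
    double height;  // metres
};

// pathLength: total optical path in metres (projector -> mirror -> floor).
// aspect: image width / height, e.g. 16.0 / 9.0.
ProjectionSize projectedSize(double pathLength, double throwRatio, double aspect) {
    const double width = pathLength / throwRatio;
    return ProjectionSize{width, width / aspect};
}
```

With those example values, `projectedSize(3.0, 1.5, 16.0 / 9.0)` gives an image of about 2 m by 1.13 m, and every extra half metre of path adds roughly a third of a metre of width.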

Projector, mirror and Kinect

Most projectors can’t be positioned vertically, so I had to use a mirror to redirect the image to the floor. I decided to hold the mirror with a tablet mount, itself fixed onto the projector. This turned out to be very handy: the mount offered 360° rotation, which made it much easier during the setup to adjust the mirror and fit the exact projection area I wanted.

The next technical challenge was to align the projector and the Kinect so that the graphics would line up with the dancer’s body. I was lucky enough to start from Keita Ikeda’s “cutout” class, which offers an image interpolation algorithm. After a few adjustments, I found it a much faster way to set up the different elements on-site than any other Kinect addon I had found online, with the flexibility to readjust on the fly if needed. Keita's code lets you see what the camera sees and "crop" the image to the desired area by moving its four corners. I just had to put the projector into grid mode and then crop the Kinect image so that it only showed the projected surface. (#genius)
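The four-corner crop can be illustrated with a small mapping function. This is not Keita Ikeda's code: it is a minimal sketch, assuming the four corners are stored in a fixed order and that bilinear interpolation between them is accurate enough for a roughly flat projection area. A full perspective warp (for example OpenCV's `cv::getPerspectiveTransform` and `cv::warpPerspective`, which ofxCv builds on) would be more exact when the quad is strongly skewed. `Point2`, `Quad` and `projectorToKinect` are hypothetical names.

```cpp
#include <array>

struct Point2 {
    double x;
    double y;
};

// Four corners picked by hand in the Kinect image, assumed to be stored as:
// top-left, top-right, bottom-right, bottom-left.
using Quad = std::array<Point2, 4>;

// Maps normalised projector coordinates (u, v in [0, 1]) to a position in the
// Kinect image by bilinear interpolation between the four corners.
Point2 projectorToKinect(const Quad& q, double u, double v) {
    // Interpolate along the top edge and along the bottom edge...
    const Point2 top{q[0].x + (q[1].x - q[0].x) * u, q[0].y + (q[1].y - q[0].y) * u};
    const Point2 bottom{q[3].x + (q[2].x - q[3].x) * u, q[3].y + (q[2].y - q[3].y) * u};
    // ...then between those two points.
    return Point2{top.x + (bottom.x - top.x) * v, top.y + (bottom.y - top.y) * v};
}
```

To build the cropped image, one would call this for each output pixel (u = x / width, v = y / height) and sample the Kinect image at the returned position.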

Building the user interface

The main interface presented to the audience was a box with 2 pushbuttons, one blue and one red. I wanted the design of the buttons itself to invite the audience to interact, and I eventually found these brilliant giant dome buttons with an integrated LED. Both are connected to an Arduino Nano and housed in a black laser-cut perspex box.

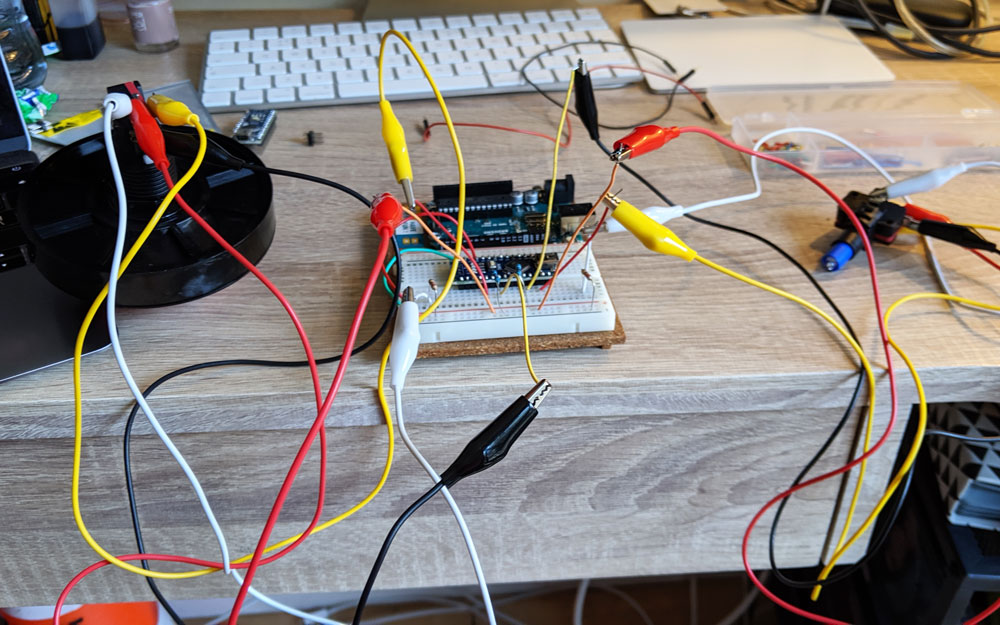

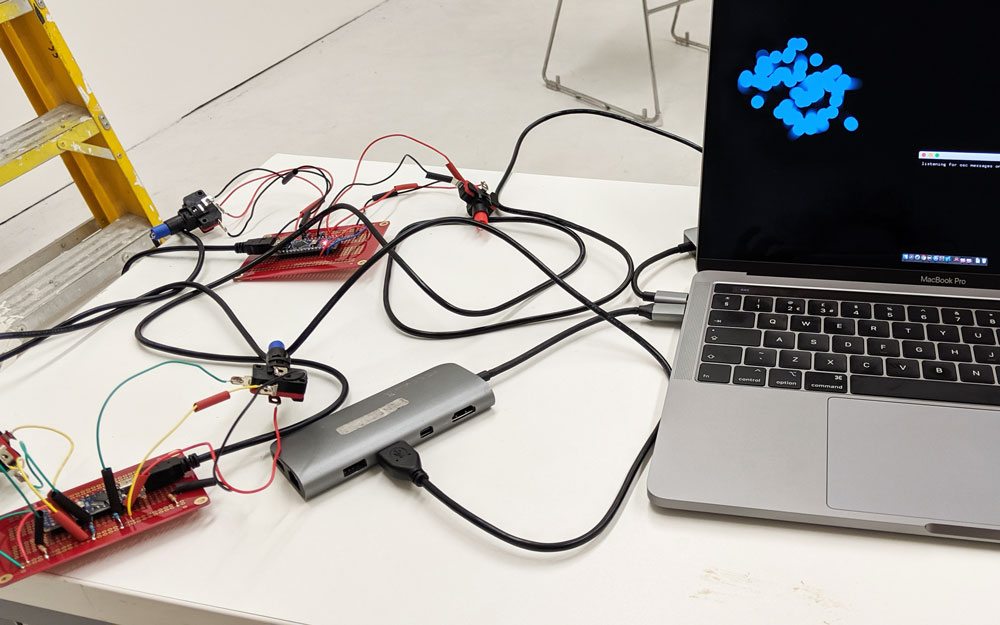

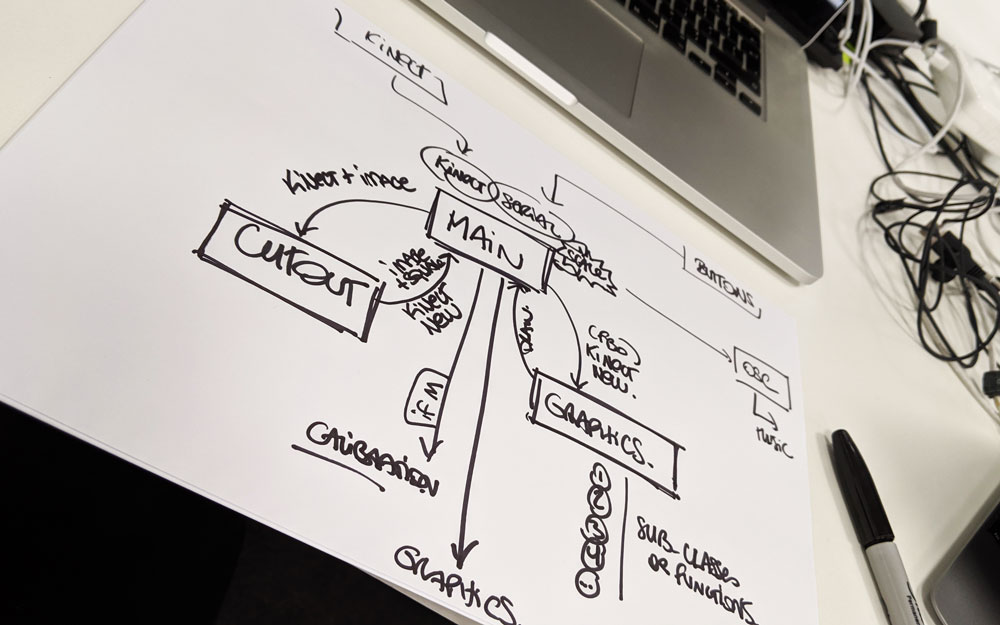

Interfacing Arduino and OpenFrameworks

The last technical challenge was to interface the Arduino Nanos connected to the pushbuttons with openFrameworks. The pushbuttons only increase and decrease the scale value used in openFrameworks, but using an Arduino offered more flexibility to adjust the behaviour of the LEDs to give feedback to the user. In the final version of the project, I simply use serial communication to pass information from the Arduino to openFrameworks.
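A minimal sketch of the openFrameworks side, assuming a one-byte protocol in which the Arduino writes `'+'` for one button and `'-'` for the other. The protocol and the `applySerialBytes` function are hypothetical. In an openFrameworks app the bytes would typically come from `ofSerial` (polling `available()` and `readByte()` in `update()`); here they are passed in as a string so the logic can be tested on its own.

```cpp
#include <string>

// Hypothetical one-byte protocol: '+' raises the level, '-' lowers it.
// Any other byte (newlines, noise on the line) is ignored.
// The result stays within the 9-level scale, -4 to +4.
int applySerialBytes(int level, const std::string& bytes) {
    for (char c : bytes) {
        if (c == '+' && level < 4) {
            ++level;
        } else if (c == '-' && level > -4) {
            --level;
        }
    }
    return level;
}
```

One reason to keep the Arduino side to "one press, one byte" is that it can handle the LED feedback locally, while openFrameworks remains the single owner of the scale value.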

Small experiments and iterative process

I knew I would have to learn a lot of new skills throughout the process (e.g. how to use Arduino, or how to align the projector and the Kinect), so I started the project by breaking it down into small independent experiments.

I started with the following separate experiments:

1- Visual experiments (what graphics could I use)

2- Kinect-projector alignment (using only the grayscale image from the Kinect)

3- Arduino with 2 push buttons (on the breadboard)

4- Arduino to openFrameworks

Then I continued by assembling some things together:

5- Kinect-projector alignment with the scale: building the class structure for the 9 levels of my scale (with fairly empty visuals for now), keeping simple keyboard interactions to go up and down the scale

6- Work on the visuals for each level one by one, in parallel with working with the dancers (see details below)

7- Build and solder the final Arduino Nanos with the big pushbuttons

And finally:

8- Connect the Arduinos and openFrameworks, and polish some of the transitions
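Step 5 mentions a class structure for the 9 levels driven by the keyboard. One possible shape for it, sketched in plain C++ so it compiles without openFrameworks: a `Level` base class that each level's visuals would subclass, and a `LevelManager` that switches between them. All names are hypothetical and the `'u'`/`'d'` keys are placeholders; `keyPressed(int key)` mirrors the signature of openFrameworks' `ofBaseApp::keyPressed`, so the manager could be called from there.

```cpp
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Base class for one level of the scale. A real level would subclass this
// and draw its own graphics in draw(); here draw() is left empty.
class Level {
public:
    explicit Level(std::string name) : name_(std::move(name)) {}
    virtual ~Level() = default;
    virtual void enter() {}       // reset animations when the level becomes active
    virtual void draw() const {}  // per-level visuals would go here
    const std::string& name() const { return name_; }

private:
    std::string name_;
};

// Owns the 9 levels and tracks which one is active.
class LevelManager {
public:
    LevelManager() {
        const char* names[] = {"Agony", "Violence", "Pain", "Discomfort", "Neutral",
                               "Pleasure", "Inner joy", "Playfulness", "Serenity"};
        for (const char* n : names) {
            levels_.push_back(std::make_unique<Level>(n));
        }
    }

    // Keyboard stand-in for the two buttons ('u' = up, 'd' = down).
    void keyPressed(int key) {
        if (key == 'u') step(+1);
        else if (key == 'd') step(-1);
    }

    const Level& current() const { return *levels_[index_]; }
    int value() const { return index_ - 4; }  // -4 .. +4
    void draw() const { current().draw(); }

private:
    void step(int delta) {
        const int next = index_ + delta;
        if (next < 0 || next >= static_cast<int>(levels_.size())) return;  // stay at the extremes
        index_ = next;
        levels_[index_]->enter();
    }

    std::vector<std::unique_ptr<Level>> levels_;
    int index_ = 4;  // start at Neutral
};
```

With this split, replacing the keyboard with the Arduino later only means routing the serial input to the same up/down logic.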

Iterative process between dance and tech

During the summer, I organised 4 different workshops and rehearsals with the dancers. But, due to everyone’s availability, I had to split those sessions into smaller groups, so in the end I ran a total of 10 sessions from the end of July to the beginning of September. From a purely performative point of view, it would have been easier to have everyone at the same time: less rehearsal time, and more time for me to code. But from a creative process point of view, it gave me the opportunity to iterate between sessions, and it became a great asset to the project.

Embodying the 9 levels of emotions

Behind the scenes of BOTHER are 9 levels of emotions, from joy to pain. The first part of our process was to define clear movement textures for each of the levels. We used improvisation techniques ranging from physical theatre to contemporary dance, and we worked on being able to switch instantly from one level to another.

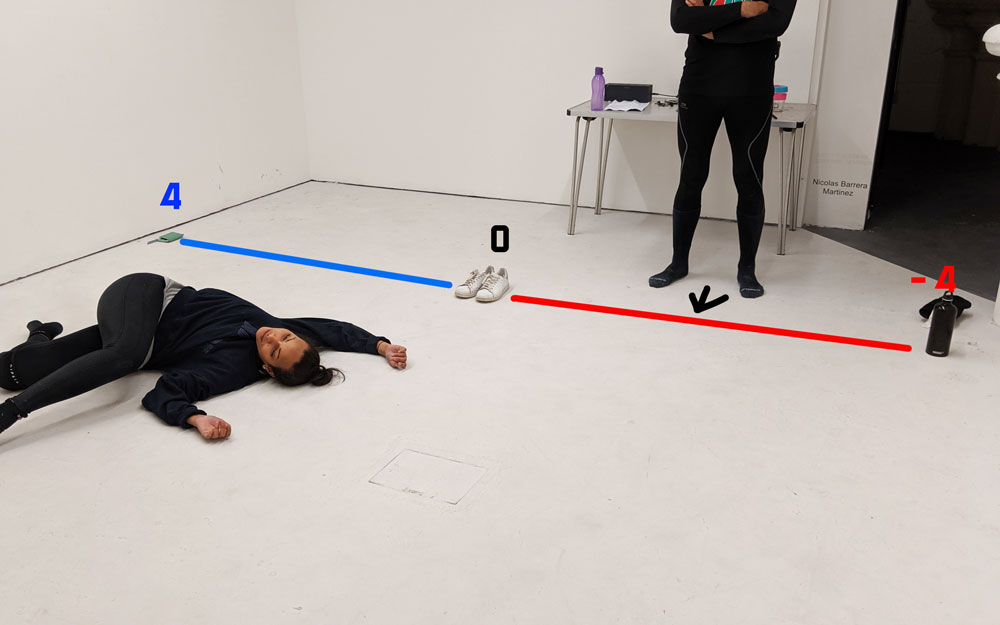

In the first workshops, we had no tech available yet, so we used a “visual” scale on the floor. The centre of the room was the neutral position and the walls were the 2 extreme levels. The people playing the audience stood at different positions along that virtual line to indicate to the dancer which level to embody.

Graphics and movement, iterations

My initial idea was to keep the dancers on the floor to really play with the projection onto the bodies. But we quickly realised that the dancers did not have enough range of movement to embody the 9 levels while staying on the floor. So I reconsidered the visuals for some of the levels to allow them to stand or move around the space, shifting the focus of the projection onto the floor itself and less onto the bodies.

Each level was defined iteratively in this way: exploring different movement textures, testing them with the projection, then adjusting the textures and the graphics.

In parallel, I also had to account for errors in the tracking and the projection alignment. The floor in the room is not level, so I had to set the Kinect's depth threshold a bit closer than I would have liked in order to avoid capturing the floor in some places. As a result, when the dancer is lying completely flat, the arms and legs disappear. To compensate for those missing parts of the body, and to hide potential misalignment with the projector at the edges of the projection, I made some of the graphics wider than the tracked silhouette and added some movement so that the eye has no time to catch any errors. It gives the illusion of perfect alignment.
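The two workarounds described above, a conservative depth threshold and graphics drawn wider than the silhouette, can be sketched on a small depth grid. This is a minimal illustration, not the project's code: it assumes depth values in millimetres measured from the ceiling-mounted sensor (larger means closer to the floor), treats 0 as "no reading", and uses a brute-force square dilation where a real app would more likely use an OpenCV call such as `cv::dilate`. The function names are hypothetical.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

using Depth = std::vector<std::vector<int>>;            // millimetres, 0 = no reading
using Mask = std::vector<std::vector<std::uint8_t>>;    // 1 = part of the silhouette

// Keep pixels nearer to the sensor than maxDepth. Setting maxDepth a little
// above the floor removes an uneven floor, but also removes limbs lying flat on it.
Mask thresholdDepth(const Depth& depth, int maxDepth) {
    Mask mask;
    for (const auto& row : depth) {
        std::vector<std::uint8_t> out(row.size(), 0);
        for (std::size_t x = 0; x < row.size(); ++x) {
            out[x] = (row[x] > 0 && row[x] < maxDepth) ? 1 : 0;
        }
        mask.push_back(out);
    }
    return mask;
}

// Grow the silhouette by `radius` pixels in every direction (square kernel),
// so graphics drawn from it spill past the tracked outline.
Mask dilate(const Mask& in, int radius) {
    const int h = static_cast<int>(in.size());
    const int w = h > 0 ? static_cast<int>(in[0].size()) : 0;
    Mask out(h, std::vector<std::uint8_t>(w, 0));
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            if (!in[y][x]) continue;
            for (int dy = -radius; dy <= radius; ++dy) {
                for (int dx = -radius; dx <= radius; ++dx) {
                    const int ny = y + dy;
                    const int nx = x + dx;
                    if (ny >= 0 && ny < h && nx >= 0 && nx < w) out[ny][nx] = 1;
                }
            }
        }
    }
    return out;
}
```

The dilation radius plays the role of the "wider than the silhouette" margin: the larger it is, the more alignment error the graphics can absorb, at the cost of a less precise outline.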

Framework

When we got to a stage where the textures were defined and the graphics in place, we worked with the following spreadsheet to define all the elements for each texture: name of the emotion, visuals, range of motion, levels, type of movements. A very new approach for the dancers, working with a structure like this, and using spreadsheets in the studio!

Next steps

Data analysis

Part of the project was to observe the audience’s behaviour when offered this level of control. What decisions would they make? Would the audience feel more empathetic or sadistic? What happens when someone is alone in the room with the dancer? What social dynamics happen when there is a group?

Behind the scenes, all user interactions were recorded: which button was pressed, how long visitors stayed in a given “mode”, and where they left the dancer. This will allow me to do a thorough analysis and hopefully identify some interesting patterns.
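As an illustration of the kind of analysis such a log enables, here is a minimal sketch that turns a list of level changes into the time spent at each level and the level the dancer was left at. The `LogEntry` format (a timestamp in seconds plus the new level) is an assumption, not the project's actual log format, and the session is assumed to start at Neutral at time 0.

```cpp
#include <array>
#include <vector>

// Hypothetical log format: one entry per level change.
struct LogEntry {
    double time;  // seconds since the session started
    int level;    // new level, -4 .. 4
};

// Total seconds spent at each level, indexed 0..8 for levels -4..+4.
// Assumes the session starts at level 0 at time 0 and ends at endTime.
std::array<double, 9> timePerLevel(const std::vector<LogEntry>& log, double endTime) {
    std::array<double, 9> totals{};  // zero-initialised
    double t = 0.0;
    int level = 0;
    for (const LogEntry& e : log) {
        totals[level + 4] += e.time - t;  // close the interval at the previous level
        t = e.time;
        level = e.level;
    }
    totals[level + 4] += endTime - t;
    return totals;
}

// Level the dancer was left at when the session ended.
int finalLevel(const std::vector<LogEntry>& log) {
    return log.empty() ? 0 : log.back().level;
}
```

Summing the first four totals against the last four would give a rough "negative versus positive" split for a session.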

In parallel, many visitors contacted me after the exhibition to share their experience, because they had been very surprised by their own behaviour and felt compelled to talk about it.

From the point of view of the performers, there are also a lot of interesting stories to explore. How we sometimes felt objectified. How we detached ourselves from the situation and coped with this alien agency. How it felt like playing a game. Or how, on several occasions, some behaviours felt very abusive.

Therefore, I intend to combine the analysis of the collected data with stories gathered from the audience and the performers. This will take the form of a series of blog posts or possibly a paper.

Setting BOTHER in the public space

The visitors coming to an exhibition like the Degree Show form a very specific type of audience. They are prepared to see an exhibition, they don’t come by accident, and they are open to experiencing different things through art pieces and to being challenged.

I would be interested to see what happens when we take the experiment into public space. I am picturing a dancer in white in the middle of a pedestrian area and those 2 buttons available. What would happen then?

Thanks (many)

Many thanks to the tech team for their help during the setup, especially Pete, Tom and Joe.

Many many thanks to Keita Ikeda for sharing his "cutout" code and simplifying this project a lot by providing more than a starting point for solving the alignment between the Kinect and the projector.

And a huge thanks to all the dancers for being part of this process, for sometimes being more excited than me and for performing this extremely physically demanding piece for 4 days, for up to 1 hour each day. #love #letsdoitagain