Data Moshpit

Dance to glitch yourself out!

produced by: Claire Kwong

Inspiration

I’ve always wanted to try datamoshing. As a VJ, I perform live visuals by glitching video. I thought openFrameworks was the perfect medium for datamoshing, because it lets me manipulate each pixel programmatically.

I read tutorials on how to datamosh with conventional methods, using software like Avidemux. I was inspired by Denial of Service’s music video for “Contour & Shape,” which captured video using a Kinect and then manipulated it in Processing.

I was also inspired by the artist Camille Utterback, particularly her Liquid Time and Entangled installations. I like how viewers physically change the video with their body movements, and how the video distorts so that it looks liquid. I’ve been interested in gestural control, and wanted to use it as the input for this project.

Intention

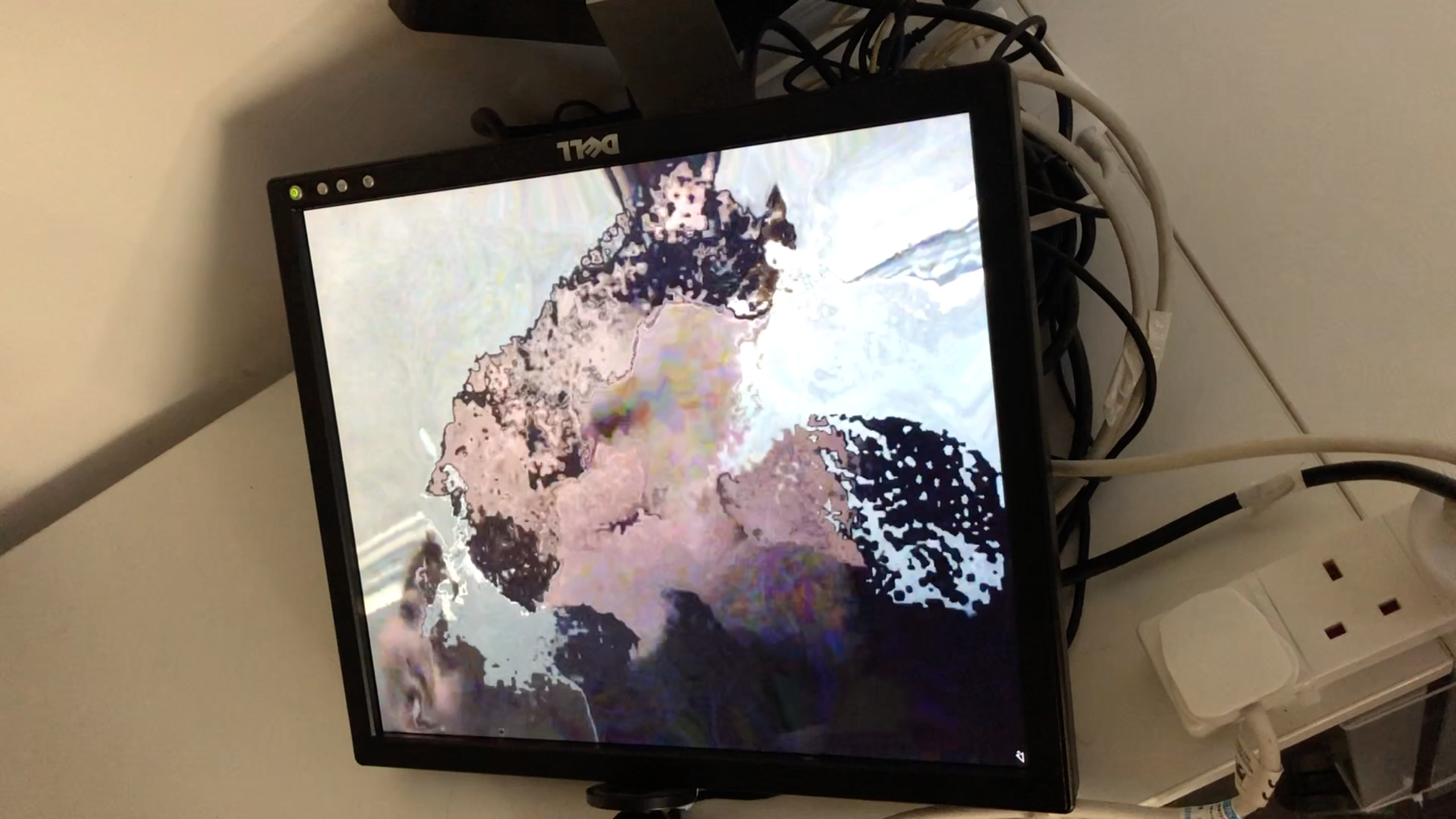

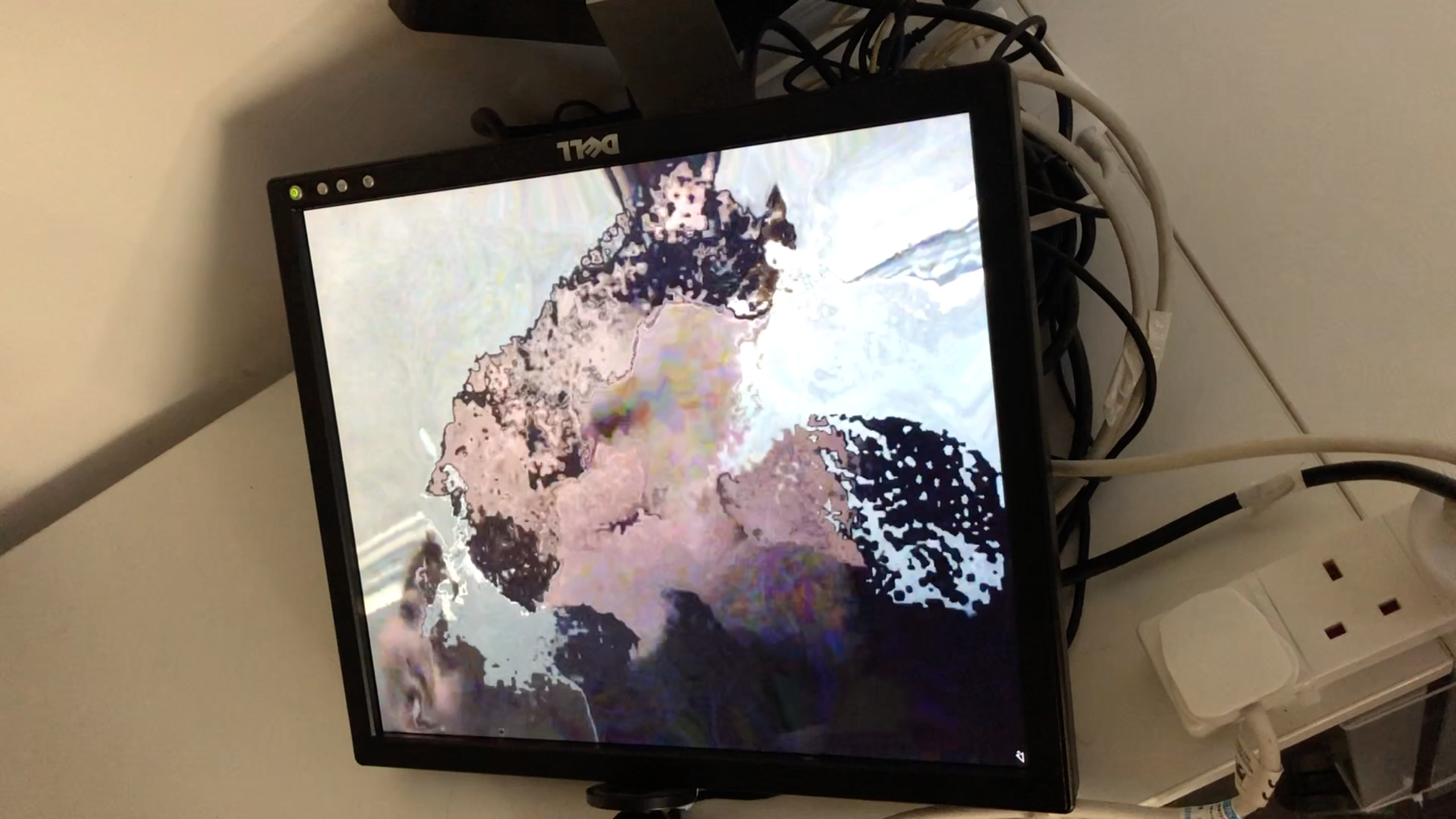

I wanted to make the act of glitching physical. I wanted viewers to physically push pixels around, like a Zen garden. Finally, I wanted to create the sense that the video was liquid and the pixels were melting. I aimed to replicate digital glitches – how pixels can leave trails on the screen when they move.

I set up the physical installation so that viewers looked down onto the horizontal screen. This was intended to give the sense of a reflective liquid surface sloshing around beneath the screen. I also wanted to force viewers to see themselves in an unnatural position. The knocked-down screen evoked broken electronics.

For the installation, I wanted viewers to distort the image of their bodies with their own movements. I found that this interaction gave immediate feedback and inspired viewers to move in unnatural ways. However, I’d also like to use this software to glitch out other videos for VJing.

Technical

I started by combining the in-class code examples opticalFlow, which calculates optical flow between two consecutive video frames, and opticalFlow_morphing, which uses optical flow between two images to morph another image. I used the calculated flow between two consecutive video frames to morph the current frame of the video.
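The in-class examples are not reproduced here, so the following is only a minimal sketch of what morphing a frame by a flow field can look like. It assumes a grayscale image stored row-major and a dense flow field with one displacement per pixel. The names `FlowField` and `warpByFlow` are hypothetical, and nearest-pixel sampling stands in for whatever interpolation the original code uses.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical dense flow field: one (dx, dy) displacement per pixel, row-major.
struct FlowField {
    int width;
    int height;
    std::vector<float> dx;
    std::vector<float> dy;
};

// Backward warp: each output pixel samples the source at its own position
// minus the flow, rounded to the nearest pixel and clamped to the image.
std::vector<std::uint8_t> warpByFlow(const std::vector<std::uint8_t>& src,
                                     const FlowField& flow) {
    assert(src.size() == static_cast<std::size_t>(flow.width) * flow.height);
    assert(flow.dx.size() == src.size() && flow.dy.size() == src.size());

    std::vector<std::uint8_t> dst(src.size());
    for (int y = 0; y < flow.height; ++y) {
        for (int x = 0; x < flow.width; ++x) {
            const std::size_t i = static_cast<std::size_t>(y) * flow.width + x;
            int sx = static_cast<int>(std::lround(x - flow.dx[i]));
            int sy = static_cast<int>(std::lround(y - flow.dy[i]));
            sx = std::max(0, std::min(flow.width - 1, sx));
            sy = std::max(0, std::min(flow.height - 1, sy));
            dst[i] = src[static_cast<std::size_t>(sy) * flow.width + sx];
        }
    }
    return dst;
}
```

With a flow of one pixel to the right everywhere, the image content shifts right by one pixel and the left edge is repeated, which is the kind of smearing that reads as a pixel trail.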

I created buffers (deques) of optical flow images because I wanted to make the movement effects last longer. Each time the video updates with a new frame, I calculate the sum and the average of all the flow values in the buffer. I use the sum for the updateMorph function, and the average to calculate the total flow value, which indicates how much a viewer is moving. I use this value to control other parameters, so the video glitches more when the viewer moves more.
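A minimal sketch of the buffer arithmetic, under stated assumptions: each flow image is reduced to two flat arrays of per-pixel displacements, and the total flow value is taken to be the summed magnitude of the per-pixel average. That last definition is a guess at one reasonable reading, and the names `FlowField`, `sumFlow` and `totalFlow` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <deque>
#include <vector>

// Hypothetical flow image: per-pixel x and y displacements.
struct FlowField {
    std::vector<float> dx;
    std::vector<float> dy;
};

// Per-pixel sum of every flow field currently in the buffer.
FlowField sumFlow(const std::deque<FlowField>& buffer, std::size_t pixelCount) {
    FlowField sum{std::vector<float>(pixelCount, 0.0f),
                  std::vector<float>(pixelCount, 0.0f)};
    for (const FlowField& f : buffer) {
        assert(f.dx.size() == pixelCount && f.dy.size() == pixelCount);
        for (std::size_t i = 0; i < pixelCount; ++i) {
            sum.dx[i] += f.dx[i];
            sum.dy[i] += f.dy[i];
        }
    }
    return sum;
}

// Assumed definition of "total flow": divide the summed field by the number
// of buffered frames, then add up the magnitude of each averaged vector.
float totalFlow(const FlowField& sum, std::size_t bufferSize) {
    if (bufferSize == 0) return 0.0f;
    const float n = static_cast<float>(bufferSize);
    float total = 0.0f;
    for (std::size_t i = 0; i < sum.dx.size(); ++i) {
        const float ax = sum.dx[i] / n;
        const float ay = sum.dy[i] / n;
        total += std::sqrt(ax * ax + ay * ay);
    }
    return total;
}
```

Keeping the sum and the average separate matches the split described above: the summed field exaggerates the displacement for the morph, while the averaged value stays comparable as the buffer grows or shrinks.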

When I calculate optical flow for a frame, I only push the flow image onto the buffer if the total flow value exceeds a constant threshold, which indicates that there is movement. This allows the glitched image to stay in place until a viewer disturbs it with more movement. I also pop the first image off the buffer if its size has exceeded the buffer's maximum size. This maximum size increases when there is more movement, so the glitch effects last longer.
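The gating logic can be sketched roughly as follows. The threshold, the base buffer length and the growth rate are all hypothetical parameters, the linear growth rule is an assumption, and the sketch only pops after a push, which is one possible reading of the description.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

using FlowImage = std::vector<float>;  // stand-in for one frame's flow data

struct FlowBuffer {
    std::deque<FlowImage> frames;
    float motionThreshold;    // hypothetical: total flow above this counts as movement
    std::size_t baseMaxSize;  // hypothetical: buffer length at the threshold
    float framesPerFlowUnit;  // hypothetical: extra frames allowed per unit of flow

    // Assumed rule: the allowed length grows linearly with movement.
    std::size_t maxSizeFor(float totalFlow) const {
        const float extra =
            std::max(0.0f, totalFlow - motionThreshold) * framesPerFlowUnit;
        return baseMaxSize + static_cast<std::size_t>(extra);
    }

    // Returns true if the flow image was stored.
    bool update(const FlowImage& flow, float totalFlow) {
        if (totalFlow <= motionThreshold) {
            return false;  // no movement: leave the buffer (and the glitch) untouched
        }
        frames.push_back(flow);
        if (frames.size() > maxSizeFor(totalFlow)) {
            frames.pop_front();  // drop the oldest flow image
        }
        return true;
    }
};
```

Because nothing is pushed or popped while the viewer is still, the accumulated flow stays frozen, and a burst of movement raises the cap so that older flow images survive for more frames.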

I experimented with the numerical parameters of calcOpticalFlowFarneback. I found that only winSize (window size) had a visible effect. I vary winSize with the total flow value, so that the effect becomes more liquid and glitchy the more the viewer moves. I liked the pointillism effect that I got with smaller values of winSize.
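One way to tie the window size to movement is a clamped linear map, similar in spirit to openFrameworks' `ofMap` with clamping enabled. This is a sketch only: the function name and the ranges in the usage note are hypothetical, and mapping more movement to a larger window is an assumption based on the remark that smaller windows look pointillist.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Map totalFlow from [flowMin, flowMax] onto [winMin, winMax], clamping
// values outside the input range, and round to the nearest integer.
int winSizeForFlow(float totalFlow, float flowMin, float flowMax,
                   int winMin, int winMax) {
    assert(flowMax > flowMin);
    float t = (totalFlow - flowMin) / (flowMax - flowMin);
    t = std::min(1.0f, std::max(0.0f, t));
    return static_cast<int>(std::lround(winMin + t * (winMax - winMin)));
}
```

For example, `winSizeForFlow(50.0f, 0.0f, 100.0f, 3, 33)` lands halfway through the range at 18. Swapping `winMin` and `winMax` reverses the direction if the opposite relationship looks better.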

I tried using a Kinect as input, and I tied the amount of glitch to the image depth. But I found that depth correlated directly with the flow value, because the number of moving pixels decreases the farther back the viewer is, so depth added little new information. I also wanted to use the camera because it’s more portable – I can use my laptop camera if needed. The viewer is looking at their camera image, so it makes sense to restrict the input to what is visible.

References

Denial of Service. “Contour & Shape.” https://vimeo.com/118130413

Optical flow, in Workshops in Creative Coding 2, Week 14, https://learn.gold.ac.uk/mod/resource/view.php?id=600281

Optical flow morphing, in Workshops in Creative Coding 2, Week 14, https://learn.gold.ac.uk/mod/resource/view.php?id=600281

Tucker, Phil. “Datamoshing.” http://datamoshing.com

Utterback, Camille. “Liquid Time Series.” http://camilleutterback.com/projects/liquid-time-series/

Utterback, Camille. “Entangled.” http://camilleutterback.com/projects/entangled/