Cyber-Selves Searching for Love

Cyber-Selves Searching for Love is a program that opens a discussion about the research I conducted over the past year. Its text ellipses feature the conversations I received from some of my willing participants, as well as some of my own experiences on online dating apps. The piece is meant to supplement my research through what I argue is the most intimate form of our “multiple-selves”: the self we are when using online dating apps.

Produced By: Julianne Rahimi

Portfolio: https://juliannerahimi.com

MFA Blog: https://juliannerahimi.com/computational-arts-mfa-blog/

Introduction

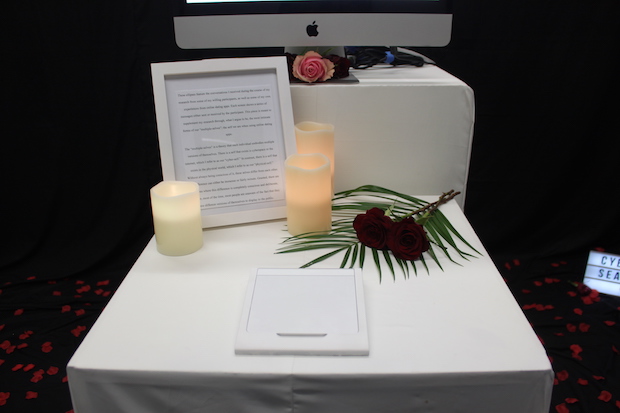

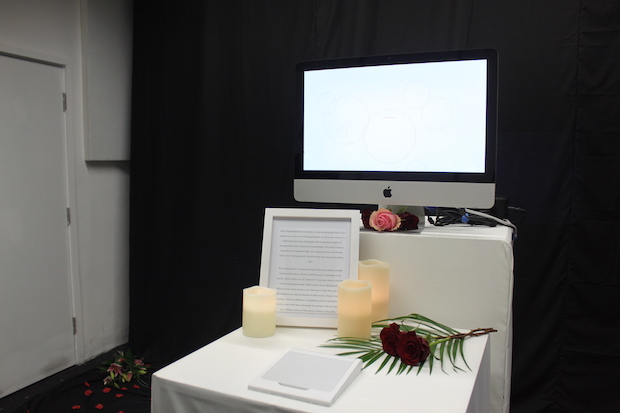

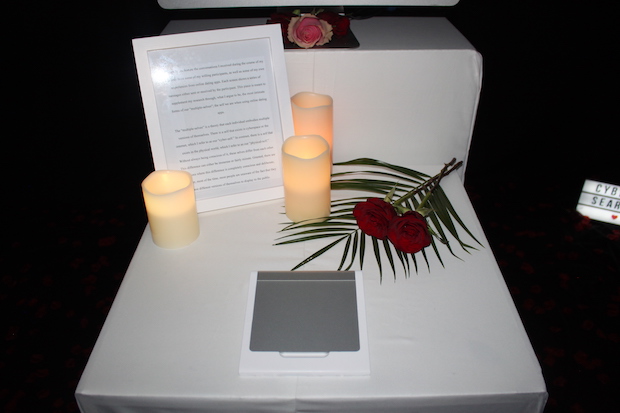

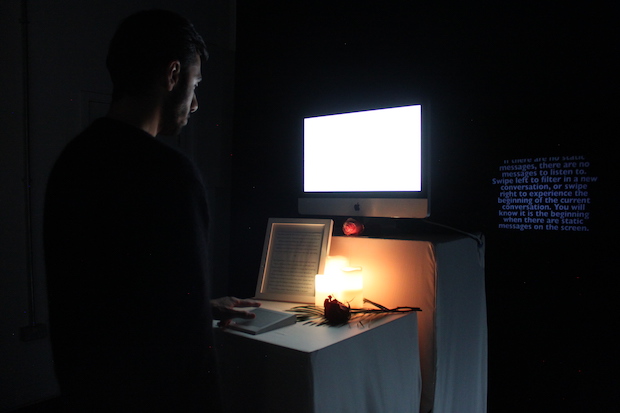

Cyber-Selves Searching for Love is a visual installation that invites users into an immersive environment where they feel as if they are on a date with a computer. It is the middle ground where the online world meets the “physical world,” the world we inhabit in our everyday lives. It was created as an extension of the “multiple-selves” theory, exemplifying one aspect of the multiple-selves in the hope of starting a conversation about the different versions of ourselves that live within us.

The “multiple-selves” is a theory that each individual embodies multiple versions of themselves. There is a self that exists in cyberspace, or on the internet, which I refer to as our “cyber-self.” In contrast, there is a self that exists in the physical world, which I refer to as our “physical-self.” These selves differ from each other, often without our being conscious of it, and the difference can be either immense or fairly minute. Granted, in some situations the difference is entirely conscious and deliberate; most of the time, however, people are unaware that they have different versions of themselves to display to the public.

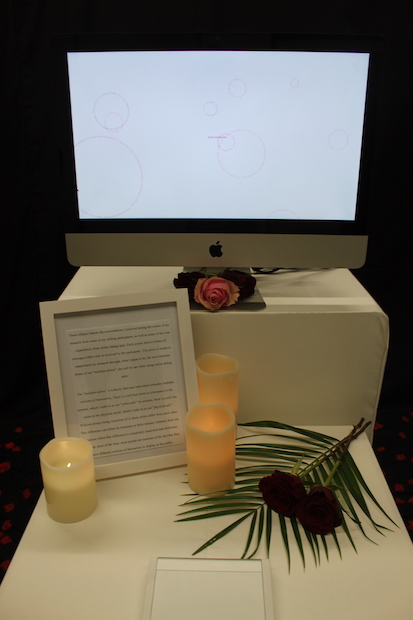

Cyber-Selves Searching for Love sheds light on the most intimate version of our cyber-self through visualizations and audio. Each screen shows a series of messages either sent or received by a participant, and a pair of messages remains static at a random location on the screen. Users can double-tap the trackpad to enable the program’s audio and hear the messages read aloud. If they do not care to read or hear more of the conversation, they can swipe left to bring in a new set of messages from a different conversation between a different pair of online dating app users. If they want to know more about the conversation on the screen, they can swipe right, and a new set of static messages is placed at a random location on the screen.
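
The interaction model above can be summarized as a small state machine. The sketch below is a minimal illustration in plain JavaScript, not the code from the jrahimi/textEllipse repository. It assumes that each conversation is an array of message strings shown two at a time, that swiping left moves to the next conversation in order, and that swiping right past the last pair wraps back to the first. `createViewer` and its methods are hypothetical names, and the random source is passed in so the behavior can be tested.

```javascript
// Hypothetical viewer state for the swipe / double-tap interaction.
// conversations: array of conversations, each an array of message strings.
// random: function returning a number in [0, 1), injectable for testing.
function createViewer(conversations, canvasW, canvasH, random = Math.random) {
  const state = { conv: 0, msg: 0, x: 0, y: 0 };

  // Pick a new random on-canvas position for the static pair.
  function randomPosition() {
    state.x = Math.floor(random() * canvasW);
    state.y = Math.floor(random() * canvasH);
  }

  // The two messages currently held static (one if a conversation has an odd count).
  function currentPair() {
    return conversations[state.conv].slice(state.msg, state.msg + 2);
  }

  randomPosition();

  return {
    state,
    currentPair,
    // Swipe right: next pair from the same conversation at a new random spot.
    // Assumption: wraps to the first pair after the last one.
    swipeRight() {
      const msgs = conversations[state.conv];
      state.msg = state.msg + 2 < msgs.length ? state.msg + 2 : 0;
      randomPosition();
    },
    // Swipe left: move on to a different conversation, starting at its first pair.
    swipeLeft() {
      state.conv = (state.conv + 1) % conversations.length;
      state.msg = 0;
      randomPosition();
    },
    // Double tap: the text to hand to a speech synthesizer.
    doubleTap() {
      return currentPair().join(" ");
    },
  };
}
```

In a p5.js sketch, `draw()` would then render `currentPair()` at `state.x`, `state.y`.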

Although I am exemplifying only one aspect of our multiple-selves, it is the version of the self that helped me understand the multiple-selves and how our cyber-self differs from our physical-self. While it is fundamental to my research and my dissertation, the piece aims to create a conceptually driven, immersive environment that lets users reflect on the multiple-selves and on the fact that they are reading the most intimate version of someone else’s cyber-self.

Concept and Background Research

Cyber-Selves Searching for Love was created from the research I conducted for my dissertation on the multiple-selves. In my dissertation I differentiate between the cyber-self and the physical-self. This piece is the hybrid between the two selves: the place where the online world and the physical world meet.

To understand the multiple-selves, I studied various theorists, including Wendy Chun, Walter Benjamin, Jeremy Bentham, and Michel Foucault. I also used statistical data to support my claims about these different selves and about how we present ourselves differently online than in real life. In addition, I focused heavily on anonymity and its effects on who we are online, because I noticed that my participants were embarrassed or reluctant to send me conversations from their mobile dating app experiences. Once they sent them, they were no longer anonymous and would face the scrutiny of someone in the physical world knowing what they had said online, so many of the conversations I received came with a plethora of excuses. Had they not shared their conversations, the only people who could potentially scrutinize their physical-self for their cyber-self’s decisions would be the people they engaged with online, and an easy way to avoid that is to never meet these people. My participants, however, already knew me, so the risk was unavoidable.

More information about the research I conducted and my process can be found on my website, juliannerahimi.com, under the “Computational Arts MFA Blog” tab.

Wendy Chun’s Control and Freedom: Power and Paranoia in the Age of Fiber Optics was one of the main inspirations for my work. Because I felt that the person we are on online dating platforms is the most intimate version of our cyber-self, Tinder, Bumble, and Grindr also became major inspirations. I wanted to emulate the interactions on these apps, which I did through gestures.

In today’s society, we have become accustomed to certain familiar gestures, such as swiping, a motion popularized by apps like Tinder. We have also become accustomed to double-tapping our screens to show that we “like” something, a habit that comes from Instagram’s double-tap gesture for liking a photo. These gestures inspired the interactions users have with the piece.

Technical

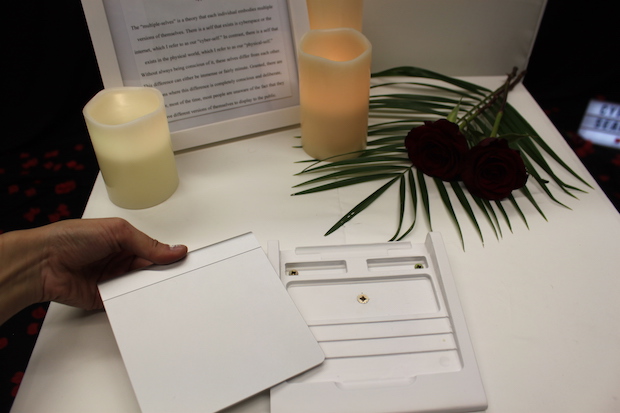

Cyber-Selves Searching for Love is written in p5.js and uses the p5.speech, hammer.js, and p5.dom libraries, as well as BetterTouchTool, a third-party application used to enable some of the gestural functions. Physically, the installation consisted of an iMac, an ExquizOn LED projector, two Akram speakers, and an Apple Magic Trackpad. The iMac ran the program in Google Chrome, and the trackpad detected the gestures used to interact with the text ellipses in the program. The projector showed an instruction video, and the speakers played the audio when it was triggered on the trackpad.

With the trackpad detecting gestures, users could swipe right to continue through a conversation, swipe left to bring in a whole new conversation, or double-tap to hear the messages read aloud. While the double tap was handled by hammer.js, I used BetterTouchTool to convert swipes into arrow keystrokes. I wanted to emulate the motions used on mobile dating platforms, but hammer.js did not detect swipes on the trackpad the way it would on a tablet or touchscreen device. To work around this, I mapped swipes to keystrokes in BetterTouchTool and set the mapping to work only in Google Chrome.
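
The keystroke workaround can be sketched as follows. This is a hedged illustration, not the project’s code: it assumes BetterTouchTool sends the left arrow key for a left swipe and the right arrow key for a right swipe (the actual mapping is not stated above), and `handleArrowKey` and `setupDoubleTap` are hypothetical names. The double-tap recognizer uses hammer.js’s `Hammer.Manager` with a `Hammer.Tap` recognizer configured for two taps, and it only registers when hammer.js is loaded in the page.

```javascript
// Key codes for the arrow keys; p5.js exposes the same values as
// LEFT_ARROW (37) and RIGHT_ARROW (39).
const LEFT_KEY = 37;
const RIGHT_KEY = 39;

// Route the arrow keystrokes produced by BetterTouchTool to swipe handlers.
// Assumption: left swipe -> left arrow, right swipe -> right arrow.
// `actions` is any object with swipeLeft() and swipeRight() methods.
function handleArrowKey(keyCode, actions) {
  if (keyCode === LEFT_KEY) {
    actions.swipeLeft();
    return "swipeLeft";
  }
  if (keyCode === RIGHT_KEY) {
    actions.swipeRight();
    return "swipeRight";
  }
  return null; // any other key is ignored
}

// Browser-only: register a double-tap recognizer with hammer.js.
// Returns null when hammer.js is not loaded (for example, under Node).
function setupDoubleTap(element, onDoubleTap) {
  if (typeof Hammer === "undefined") return null;
  const manager = new Hammer.Manager(element);
  manager.add(new Hammer.Tap({ event: "doubletap", taps: 2 }));
  manager.on("doubletap", onDoubleTap);
  return manager;
}

// Possible wiring inside a p5.js sketch (not run here; `viewer` and `voice`
// are hypothetical objects, voice = new p5.Speech() from p5.speech):
//   function keyPressed() { handleArrowKey(keyCode, viewer); }
//   setupDoubleTap(document.querySelector("canvas"), () => voice.speak(viewer.doubleTap()));
```

Keeping the key handling separate from the gesture source means the same handlers would still work if swipes later came from hammer.js on a touchscreen instead of from BetterTouchTool.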

Future development

To develop the project further, I would like to include more conversations in an interactive way. Because the piece runs in a web browser, it could easily be hosted online, and I would then add a feature that lets users submit their own conversations to the program in real time, for example through a website that hosts both the program and a submission page. This would make the piece more interactive and engaging for users. It would also let me continue my research in the way I originally intended: through anonymity. Because I would not require users to submit any names, no one would know who they were. This aspect of my research is crucial to my theory of the multiple-selves.
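
A minimal sketch of how a submission handler could enforce that anonymity, assuming a hypothetical JSON format in which each submission is `{ messages: [{ from, text }] }`. `anonymizeSubmission` is an illustrative name, not part of the existing project. It keeps only the direction and text of each message and drops every other field a client sends, such as a name or username. It does not remove names typed inside the message text itself, which would need separate handling.

```javascript
// Hypothetical submission shape: { messages: [{ from: "sent" | "received", text: string }] }.
// Only the direction and text of each message are kept; any other field
// (name, email, app username, ...) is discarded before the data is stored.
function anonymizeSubmission(raw) {
  if (!raw || !Array.isArray(raw.messages)) {
    throw new Error("submission must contain a messages array");
  }
  const messages = raw.messages
    // Skip entries without usable text.
    .filter((m) => m && typeof m.text === "string" && m.text.trim() !== "")
    // Rebuild each message from scratch so no extra properties survive.
    .map((m) => ({
      from: m.from === "sent" ? "sent" : "received",
      text: m.text.trim(),
    }));
  if (messages.length === 0) {
    throw new Error("submission contains no messages");
  }
  return { messages };
}
```

For example, `anonymizeSubmission({ name: "Alex", messages: [{ from: "sent", text: "hi" }] })` returns `{ messages: [{ from: "sent", text: "hi" }] }`, with no `name` field.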

Self Evaluation

Overall, I am very pleased with the outcome of my project. Although it was far more challenging than I expected, I feel I was able to overcome the obstacles I faced and create a robust, functioning program that remained aesthetically pleasing. However, given the opportunity to improve the piece, I would change three things: the gestural interactions, the static positioning of the ellipses, and the audio functions.

In terms of the gestural interactions, I would have liked gestures to be detected on a tablet or smartphone. The trackpad did its job of emulating the gestures used on mobile dating platforms, but I would have preferred to replicate them directly. A tablet or smartphone would feel more like a mobile dating app and would let users swipe with one finger instead of two.

Currently, the ellipses appear at a random location each time they become static. Given the chance, I would place these messages in the same position each time they become static. I did not achieve this in time for the show because each ellipse was a different size; randomizing their positions kept one ellipse from completely covering another. To achieve fixed placement, I would need to position the static messages from the center of each ellipse rather than from the top-left corner of the graphic, and then add conditionals so that the ellipses neither overlap each other nor move off-screen. I am not entirely sure where I would have placed them, but that is an aesthetic decision that can be worked out through trial and error.
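
The fix described above, positioning from the center and then adding conditionals against overlap and off-screen placement, could look roughly like the sketch below. It is a hypothetical set of helpers, not the project’s code. Each message is described by its center `(cx, cy)` and size `(w, h)`; overlap is tested conservatively with each ellipse’s bounding box rather than exact ellipse geometry; and `preferred` is an assumed list of fixed candidate centers tried in order, so a message lands in the same place whenever that slot is free.

```javascript
// Clamp a center point so an ellipse of size w x h stays fully on the canvas.
function clampCenter(cx, cy, w, h, canvasW, canvasH) {
  const x = Math.min(Math.max(cx, w / 2), canvasW - w / 2);
  const y = Math.min(Math.max(cy, h / 2), canvasH - h / 2);
  return { cx: x, cy: y };
}

// Conservative overlap test on the two ellipses' bounding boxes.
function boxesOverlap(a, b) {
  return Math.abs(a.cx - b.cx) * 2 < a.w + b.w &&
         Math.abs(a.cy - b.cy) * 2 < a.h + b.h;
}

// Convert a center to the top-left corner that image(g, x, y) expects
// under p5.js's default imageMode(CORNER).
function topLeft(p) {
  return { x: p.cx - p.w / 2, y: p.cy - p.h / 2 };
}

// Try the fixed preferred centers in order and return the first one that,
// after clamping on-screen, does not overlap any message already placed.
function placeMessage(w, h, preferred, placed, canvasW, canvasH) {
  for (const slot of preferred) {
    const c = clampCenter(slot.cx, slot.cy, w, h, canvasW, canvasH);
    const candidate = { cx: c.cx, cy: c.cy, w, h };
    if (!placed.some((p) => boxesOverlap(candidate, p))) {
      return candidate;
    }
  }
  return null; // no free slot: the caller could clear older messages first
}
```

Alternatively, calling p5.js’s `imageMode(CENTER)` makes `image()` interpret its coordinates as the center, which would remove the need for `topLeft` if each ellipse is drawn as an image or graphics buffer.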

Lastly, I would improve the audio functions. I was tempted to implement idle audio so that users would enter a room already filled with sound, and I considered having this idle audio read out my dissertation. When the program detected a gesture, it would pause the idle speech and resume it only after a set period with no gestures. However, I am still not certain I would add this feature: on one hand, that much audio could be overwhelming; on the other, it would give much more insight into the multiple-selves and the research I had been conducting.
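
The idle-audio behavior described above (pause on any gesture, resume after a stretch with no gestures) can be expressed as a small controller. This is a hypothetical sketch: `createIdleAudio`, `idleMs`, `pauseIdle`, and `resumeIdle` are illustrative names, the callbacks are supplied by the caller, and the clock is injectable so the logic can be tested. In a browser, the callbacks might wrap the Web Speech API’s `speechSynthesis.pause()` and `speechSynthesis.resume()`.

```javascript
// Idle narration plays by default, pauses on any gesture, and resumes once
// no gesture has been seen for `idleMs` milliseconds.
// pauseIdle / resumeIdle: caller-supplied callbacks (hypothetical).
// now: clock function returning milliseconds, injectable for testing.
function createIdleAudio({ idleMs, pauseIdle, resumeIdle, now = Date.now }) {
  let lastGesture = -Infinity;
  let idlePlaying = true;

  return {
    // Call on every swipe or double tap.
    onGesture() {
      lastGesture = now();
      if (idlePlaying) {
        idlePlaying = false;
        pauseIdle();
      }
    },
    // Call regularly, for example once per frame from p5.js's draw().
    tick() {
      if (!idlePlaying && now() - lastGesture >= idleMs) {
        idlePlaying = true;
        resumeIdle();
      }
    },
    isIdlePlaying: () => idlePlaying,
  };
}
```

Each new gesture resets the timer, so the idle speech only returns once the room has been quiet for the full interval.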

Aside from these three tweaks, I felt the installation was very successful and truly transported users to a world where they could imagine being the daters on the screen. I was also pleased to have overcome the technical challenges I faced. I learned a great deal while creating this program, as the algorithm was quite complicated and I had never created graphics for an array before.

Source Code:

Code: https://github.com/jrahimi/textEllipse

References:

Instruction Video: https://vimeo.com/232703695

Prototype Video: https://vimeo.com/208703282

Text in the picture frame on the plinth: These ellipses feature the conversations I received during the course of my research from some of my willing participants, as well as some of my own experiences from online dating apps. Each screen shows a series of messages either sent or received by the participant. This piece is meant to supplement my research through, what I argue to be, the most intimate form of our “multiple-selves”: the self we are when using online dating apps.

The “multiple-selves” is a theory that each individual embodies multiple versions of themselves. There is a self that exists in cyberspace or the internet, which I refer to as our “cyber-self.” In contrast, there is a self that exists in the physical world, which I refer to as our “physical-self.” Without always being conscious of it, these selves differ from each other. This difference can either be immense or fairly minute. Granted, there are situations where this difference is completely conscious and deliberate, however, most of the time, most people are unaware of the fact that they have different versions of themselves to display to the public.

Libraries: https://github.com/hammerjs/touchemulator, https://hammerjs.github.io/dist/hammer.js, http://hammerjs.github.io, http://ability.nyu.edu/p5.js-speech/

BetterTouchTool: https://www.boastr.net

https://processing.org/tutorials/text/ (Parts of my code have been modified from the code on this page)

https://github.com/processing/p5.js/wiki/Processing-transition

Main Literature:

- Chun, Wendy Hui Kyong. Control and Freedom: Power and Paranoia in the Age of Fiber Optics. MIT Press, 2006.

- Benjamin, Walter. "The Work of Art in the Age of Mechanical Reproduction." Illuminations, edited by Hannah Arendt, translated by Harry Zohn, New York: Schocken Books, 2007, pp. 217-251, masculinization.files.wordpress.com/2015/05/Benjamin-walter-e28093-illuminations.pdf.

- Foucault, Michel. Discipline and Punish: The Birth of the Prison. Translated by Alan Sheridan, Second Vintage Books Edition, Vintage Books, 1995, monoskop.org/File:Foucault_Michel_Discipline_and_Punish_The_Birth_of_the_Prison_1977_1955.pdf.

- Finkel, Eli J., et al. "Online Dating: A Critical Analysis From the Perspective of Psychological Science." Psychological Science in the Public Interest, vol. 13, no. 1, 7 Mar. 2012, pp. 3-66. SAGE Premier Collection. doi:10.1177/1529100612436522.

Links to Pages I Looked at While Creating Cyber-Selves Searching for Love:

Processing Strings and Drawing Text: https://processing.org/tutorials/text/

Daniel Shiffman "Programming from A to Z": http://shiffman.net/a2z/intro/

OSC Simple Guide: https://www.jroehm.com/2015/10/a-simple-guide-to-use-osc-in-the-browser/

http://codeforartists.com

hammerjs/hammer.js: https://github.com/hammerjs/hammer.js/tree/master/tests/manual, https://github.com/hammerjs/hammer.js/wiki

hammerjs/touchemulator: https://github.com/hammerjs/touchemulator

thebird/Swipe: https://github.com/thebird/Swipe

colinbdclark/osc.js: https://github.com/colinbdclark/osc.js/

genekogan/p5js-osc: https://github.com/genekogan/p5js-osc

Move.js: https://visionmedia.github.io/move.js/

Example of Hammer.js swipe: http://alpha.editor.p5js.org/yining/sketches/HyoEADcgx (but this one uses document.body which means swiping is enabled anywhere on the screen)

Example of Hammer.js swipe: http://jsfiddle.net/6jxbv/119/ (only moves box)

Example of Hammer.js gestures: https://codepen.io/runspired/full/ZQBGWd

Example of Hammer.js swipe velocity: http://alpha.editor.p5js.org/projects/rkOj4bueg

Example of Hammer.js swipe image: http://alpha.editor.p5js.org/yining/sketches/H1qyGcYex

Example of Hammer.js gesture detection: http://alpha.editor.p5js.org/projects/HyEDRsPel, https://codepen.io/jtangelder/pen/lgELw

Example of Hammer.js swipe single box: http://jsfiddle.net/6jxbv/119/

Example of Hammer.js swipe one in a line: http://www.webdevbreak.com/episodes/touch-gestures-hammerjs/demo, http://www.webdevbreak.com/episodes/touch-gestures-hammerjs, http://www.webdevbreak.com/episodes/touch-gestures-hammerjs-2

Example of Hammer.js swipe and drag: http://jsfiddle.net/gilbertolenzi/uZjCB/208/

Example of Hammer.js touch: https://codepen.io/berkin/pen/HKgnF

Example of Hammer.js select and move: http://cdn.rawgit.com/hammerjs/touchemulator/master/tests/manual/hammer.html

Example of p5.js ("Soft Body") node: https://p5js.org/examples/simulate-soft-body.html

Example of writing text on canvas: http://jsfiddle.net/amaan/WxmQR/1/

Example of createImage(): https://p5js.org/examples/image-create-image.html

Example of createGraphics(): https://p5js.org/examples/structure-create-graphics.html

Example of drawTarget(): https://p5js.org/examples/structure-functions.html

Example of p5.js Objects: https://p5js.org/examples/objects-objects.html

Example of p5.js Interactivity: https://p5js.org/examples/hello-p5-interactivity-1.html

p5.js List of Examples: https://p5js.org/examples/

Example of Array of Objects: https://p5js.org/examples/objects-array-of-objects.html, https://p5js.org/examples/arrays-array-objects.html

Example of Array Number Count: http://jsfiddle.net/AbpBu/, https://jsfiddle.net/h1d69exj/2/

p5.js Reference Page: https://p5js.org/reference/

p5.js Overview: https://github.com/processing/p5.js/wiki/p5.js-overview

Beyond the Canvas (pointers, createCanvas, createElement, etc.): https://github.com/processing/p5.js/wiki/Beyond-the-canvas

JavaScript Basics: https://github.com/processing/p5.js/wiki/JavaScript-basics#adding-properties

Processing to p5.js: https://github.com/processing/p5.js/wiki/Processing-transition

Hammer.js Reference Pages: http://hammerjs.github.io/api/, https://github.com/hammerjs/hammer.js/issues/241, http://hammerjs.github.io/tips/, https://github.com/hammerjs/hammer.js/wiki/Getting-Started, https://github.com/hammerjs/hammer.js/tree/master/, https://github.com/hammerjs/hammer.js/wiki/Tips-&-Tricks#horizontal-swipe-and-drag

Intro to DOM manipulation and events: https://github.com/processing/p5.js/wiki/Intro-to-DOM-manipulation-and-events

p5.dom: https://p5js.org/reference/#/libraries/p5.dom

Touch Events: https://www.w3.org/TR/touch-events/

HTML5 Canvas: https://www.w3schools.com/html/html5_canvas.asp

HTMLElements: https://www.w3schools.com/html/html_elements.asp, https://developer.mozilla.org/en/docs/Web/API/HTMLElement

HTML Event Attributes: https://www.w3schools.com/tags/ref_eventattributes.asp

HTML Global Attributes: https://www.w3schools.com/tags/ref_standardattributes.asp

HTML Class Attribute: https://www.w3schools.com/html/html_classes.asp

HTML id Attribute: https://www.w3schools.com/tags/att_global_id.asp

HTML DOM Style Object: https://www.w3schools.com/jsref/dom_obj_style.asp

Style position Property: https://www.w3schools.com/jsref/prop_style_position.asp

document.getElementById(): https://developer.mozilla.org/en-US/docs/Web/API/Document/getElementById

document.querySelectorAll(): https://developer.mozilla.org/en-US/docs/Web/API/Document/querySelectorAll

document.getElementsByClassName(): https://developer.mozilla.org/en/docs/Web/API/Document/getElementsByClassName

KeyboardEvent keyCode Property: https://www.w3schools.com/jsref/event_key_keycode.asp

Hammer.Press(options): http://hammerjs.github.io/recognizer-press/

HTML DOM Events: https://www.w3schools.com/jsref/dom_obj_event.asp

Making and Moving Selectable Shapes on an HTML5 Canvas: A Simple Example: https://simonsarris.com/canvas-moving-selectable-shapes/

touchStarted(): https://p5js.org/reference/#/p5/touchStarted

select(): https://p5js.org/reference/#/p5/select

push(): https://p5js.org/reference/#/p5/push

p5.Element: https://p5js.org/reference/#/p5.Element

Instance Container (document.body): https://p5js.org/examples/instance-mode-instance-container.html

createGraphics(): https://p5js.org/reference/#/p5/createGraphics

Positioning your canvas: https://github.com/processing/p5.js/wiki/Positioning-your-canvas

JavaScript HTML DOM Elements: https://www.w3schools.com/js/js_htmldom_elements.asp

event.target: https://api.jquery.com/event.target/

this.: https://github.com/processing/p5.js/wiki/JavaScript-basics#adding-properties

p5.js changing data types: https://forum.processing.org/two/discussion/18799/p5-js-changing-data-types-from-float-into-int

int(): https://p5js.org/reference/#/p5/int

Math.floor(): https://developer.mozilla.org/en/docs/Web/JavaScript/Reference/Global_Objects/Math/floor

Logical Operators: https://p5js.org/examples/control-logical-operators.html

Operator Precedence: https://p5js.org/examples/math-operator-precedence.html

JavaScript Arrays: https://www.w3schools.com/js/js_arrays.asp

input(): https://p5js.org/reference/#/p5.Element/input

JavaScript Number toString() Method: https://www.w3schools.com/jsref/jsref_tostring_number.asp

parseInt(): https://www.w3schools.com/jsref/jsref_parseint.asp

Array.prototype.map(): https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/Array/map?redirectlocale=en-US&redirectslug=JavaScript/Reference/Global_Objects/Array/map

Array.from(): https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/Array/from

map(): https://www.w3schools.com/jsref/jsref_map.asp

Array.prototype.some(): https://developer.mozilla.org/en/docs/Web/JavaScript/Reference/Global_Objects/Array/some

Object.getPrototypeOf(): https://github.com/hammerjs/hammer.js/issues/414

How TO - JavaScript HTML Animations: https://www.w3schools.com/howto/howto_js_animate.asp

Object.keys(): https://developer.mozilla.org/en/docs/Web/JavaScript/Reference/Global_Objects/Object/keys

foreach: https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/Array/forEach

Hammer Positions: https://github.com/hammerjs/hammer.js/issues/577

Hammer.Manager: https://hammerjs.github.io/jsdoc/Manager.html

Hammer.Swipe(options): http://hammerjs.github.io/recognizer-swipe/

Touch-Action: https://developer.mozilla.org/en-US/docs/Web/CSS/touch-action, http://hammerjs.github.io/touch-action/

Touch Emulator: http://hammerjs.github.io/touch-emulator/

Hammer.Tap(options): http://hammerjs.github.io/recognizer-tap/

Emulate touch on desktop: http://hammerjs.github.io/touch-emulator/

Recognizer: https://hammerjs.github.io/jsdoc/Recognizer.html

How to use Hammer to swipe: https://stackoverflow.com/questions/16873981/how-to-use-hammer-to-swipe

Hammer.js get DOM object where event was attached: https://stackoverflow.com/questions/29984702/hammer-js-get-dom-object-where-event-was-attached

How to return X and Y coordinates when using jquery.hammer.js: https://stackoverflow.com/questions/17391478/how-to-return-x-and-y-coordinates-when-using-jquery-hammer-js

e.center.x & y same as element .left/.top in Hammer.js 2.0?: https://stackoverflow.com/questions/17391478/how-to-return-x-and-y-coordinates-when-using-jquery-hammer-js

Is there a deltaX/deltaY of last event fire?: https://github.com/hammerjs/hammer.js/issues/414

Selecting select option triggers swipeleft event with mouse: https://github.com/hammerjs/hammer.js/issues/1091

Delegating Hammer.js events with jQuery: https://stackoverflow.com/questions/10191612/delegating-hammer-js-events-with-jquery

Why is Hammer executed when 'touchstart' was stopped: https://github.com/hammerjs/hammer.js/issues/318

Does hammer.js handle touchmove?: https://stackoverflow.com/questions/30218216/does-hammer-js-handle-touchmove

What's the best way to generate image from text using JavaScript and HTML5 APIs?: https://hashnode.com/post/whats-the-best-way-to-generate-image-from-text-using-javascript-and-html5-apis-cik6k8rbj01izxy53619llzzp

How can I generate an image based on text and CSS?: https://stackoverflow.com/questions/17618574/how-can-i-generate-an-image-based-on-text-and-css

Equivalent of pTouchX/Y for objects in 'touches[]': https://github.com/processing/p5.js/issues/1478

Array of Images p5.js: https://stackoverflow.com/questions/40652443/array-of-images-p5-js

What's the best way to convert a number to a string in JavaScript?: https://stackoverflow.com/questions/5765398/whats-the-best-way-to-convert-a-number-to-a-string-in-javascript

JavaScript get number from String: https://stackoverflow.com/questions/10003683/javascript-get-number-from-string

Regex using JavaScript to return just numbers: https://stackoverflow.com/questions/1183903/regex-using-javascript-to-return-just-numbers

How to convert a string of numbers to an array of numbers?: https://stackoverflow.com/questions/15677869/how-to-convert-a-string-of-numbers-to-an-array-of-numbers

How do I convert a string into an integer in JavaScript?: https://stackoverflow.com/questions/1133770/how-do-i-convert-a-string-into-an-integer-in-javascript

How to find the array index with a value?: https://stackoverflow.com/questions/7346827/how-to-find-the-array-index-with-a-value

Objects move position automatically when trying to select all on mousedown (Fabric.js): https://stackoverflow.com/questions/40041803/objects-move-position-automatically-when-trying-to-select-all-on-mousedown-fabr

How to select and move an object to mouse position?: https://forum.unity3d.com/threads/how-to-select-and-move-an-object-to-mouse-position-javascript.134116/

JavaScript iterate object: https://stackoverflow.com/questions/14379274/javascript-iterate-object