Simulacra

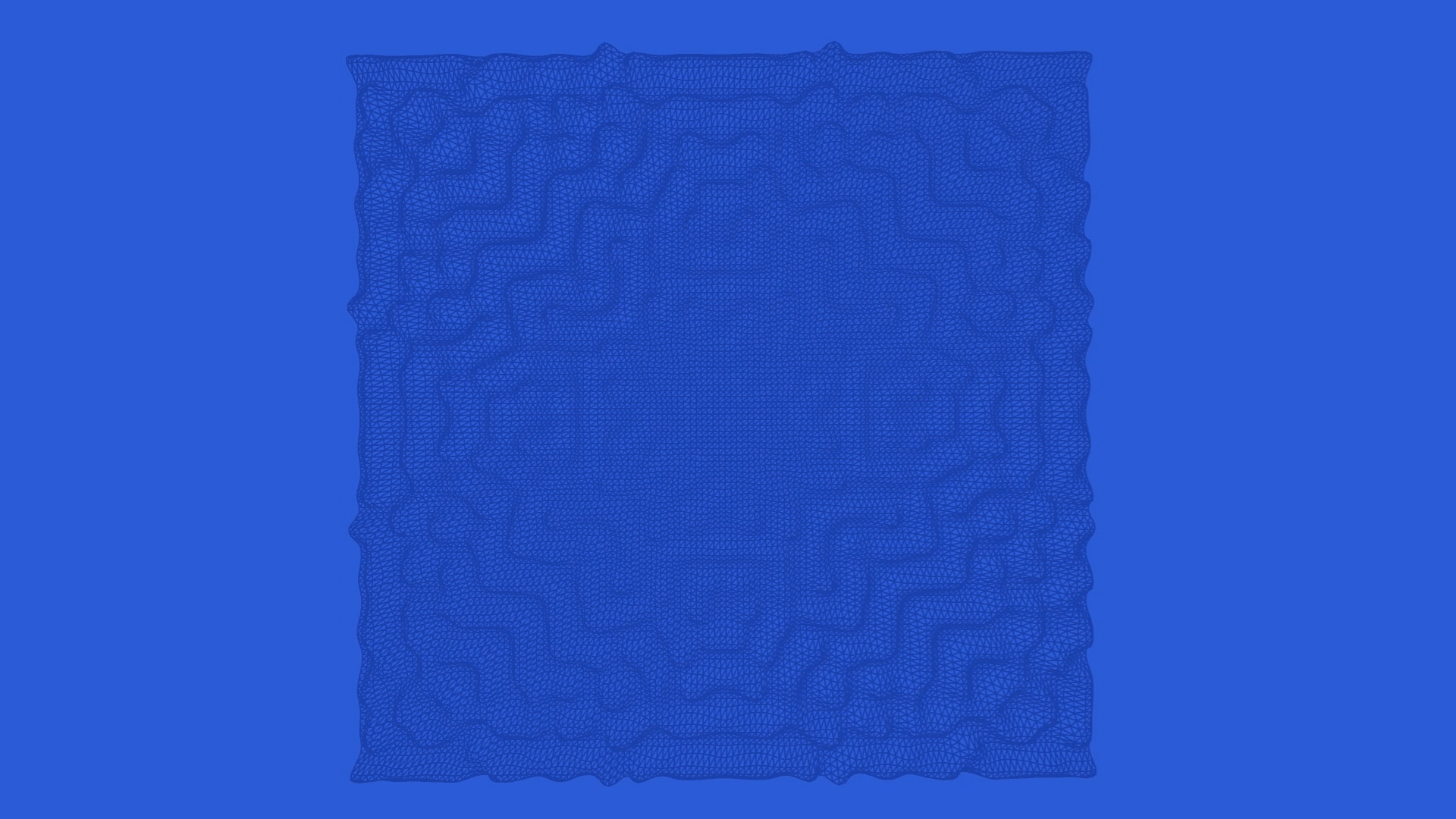

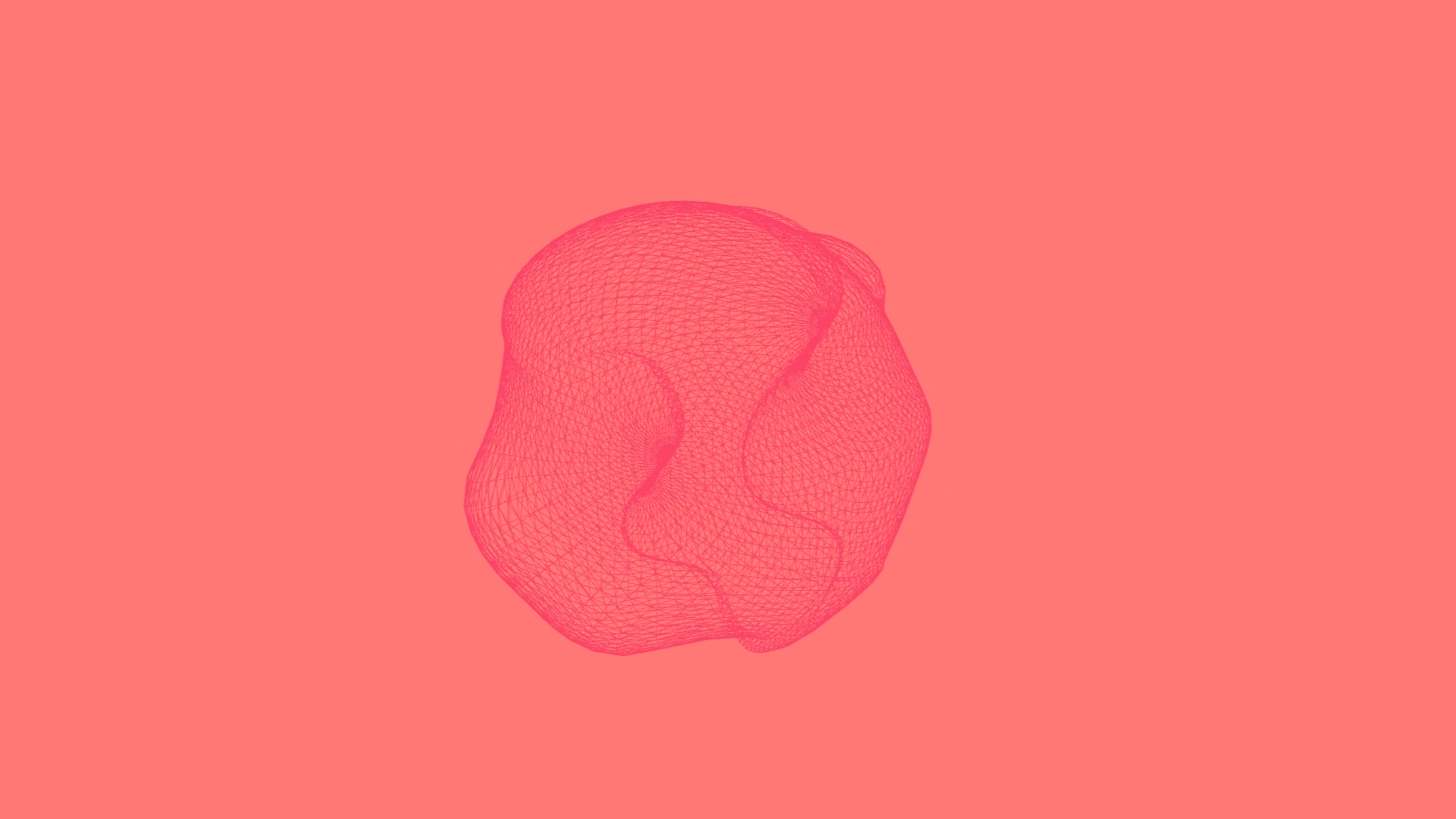

Soft and bulbous, the procedurally generated digital artefacts of Simulacra aestheticise the body through fleshy materials and intestinal textures. By exploring the human connection with the soft and somatic, Simulacra embraces the grotesque, as once-rigid non-human forms bulge and undulate in seamless, satisfyingly endless loops.

produced by: Joseph Rodrigues Marsh

Introduction

Simulacra is a series of digital artefacts which explore the uncanny through both mesmeric and grotesque infinite loops. Custom software uses a series of geometric algorithms to generate forms, which are then procedurally animated and rendered using the Blender Cycles engine.

Concept and background research

In Simulacra, the intention was to create a series of intimate, bodily artefacts which resonate viscerally with the viewer, exploring how digital media can be felt and experienced beyond mere passive observation. The artefacts would serve as a medium to explore how a viewer's perceived affordances can inform their understanding of things [1], with each thing existing as a simulation of our own understanding.

“To see is to always see from somewhere, is it not?”

Maurice Merleau-Ponty, Phenomenology of Perception [2]

The title of this piece was inspired by the writings of Baudrillard, in which he describes the simulacrum as the simulation of a simulation: something more real than the reality it was intended to represent, the point at which reality begins merely to imitate the model [3].

In Simulacra, the intention was not to recreate existing forms through complex simulations of real-world phenomena, but to mimic life in a way which is unreal but not surreal, blurring the line between nature and artifice. Each artefact exists in a digital space free from real-world restrictions, yet exhibits behaviours reminiscent of the natural world. Simulacra explores whether a digital object can be sensed in a metaphysical way: felt, tasted or smelt.

“It matters not whether an artwork is real or fake, what matters is whether it is a good fake, or a bad fake”

Orson Welles, F for Fake [4]

Through the traditionally non-human practice of computation, the idea of creating life, or the illusion of something which exhibits life-like behaviour, became a strong focus. Building a framework in which forms emerge through a bottom-up computational process, exhibiting behaviours that are reminiscent of life but are in fact produced by noise, was in part an attempt to remove the artist's possible biases and so avoid influencing the viewer's interpretation.

The artefacts were composed and rendered in the open-source modelling platform Blender, a simulated space where nothing is real and everything, from the creation of geometry to the ray tracing of light, is a simulation. Yet the output of that fabrication can become something perceivably real to the viewer [5].

The artefacts loop seamlessly in order to draw the viewer into a deeply mesmeric and meditative experience, enveloping the participant in a hyper-reality filled with glutinous soft bodies. They explore the boundaries between what is perceived to be real and what is synthesised, through playful renderings of things exhibiting uncanny behaviours.

Technical

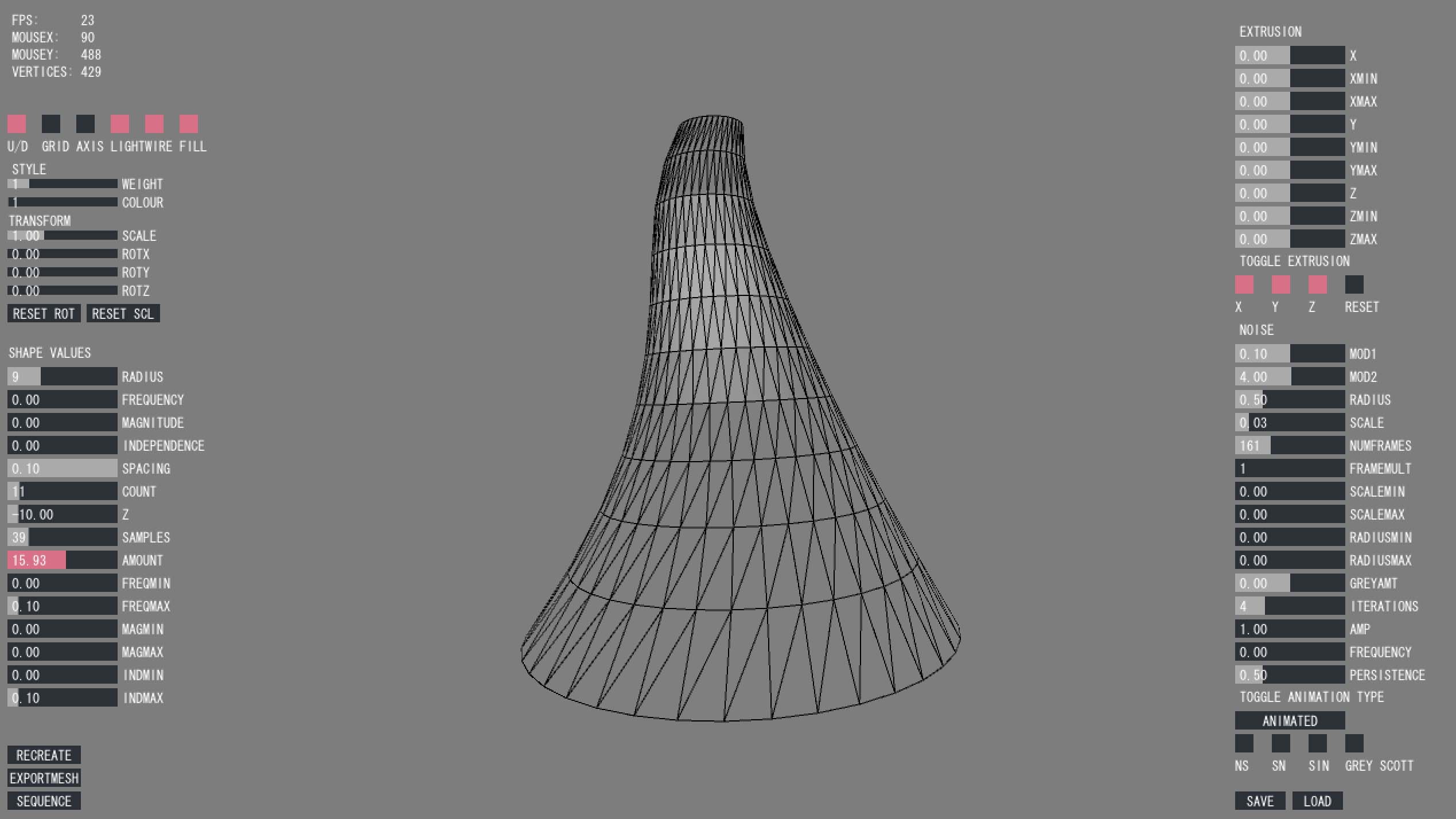

The project was a continuation of my final project for the Programming for Artists II module, titled Simulacra Studies [6]. A boilerplate provided by Lior Ben-Gai allowed the basic creation of geometric forms within Processing, with the aim of 3D-printing an artefact. This initial framework was extended to animate geometric forms playfully and procedurally, with various noise algorithms driving the movement of each vertex. A combination of randomness and control allowed for the creation of intimate and fleshy lifeforms.

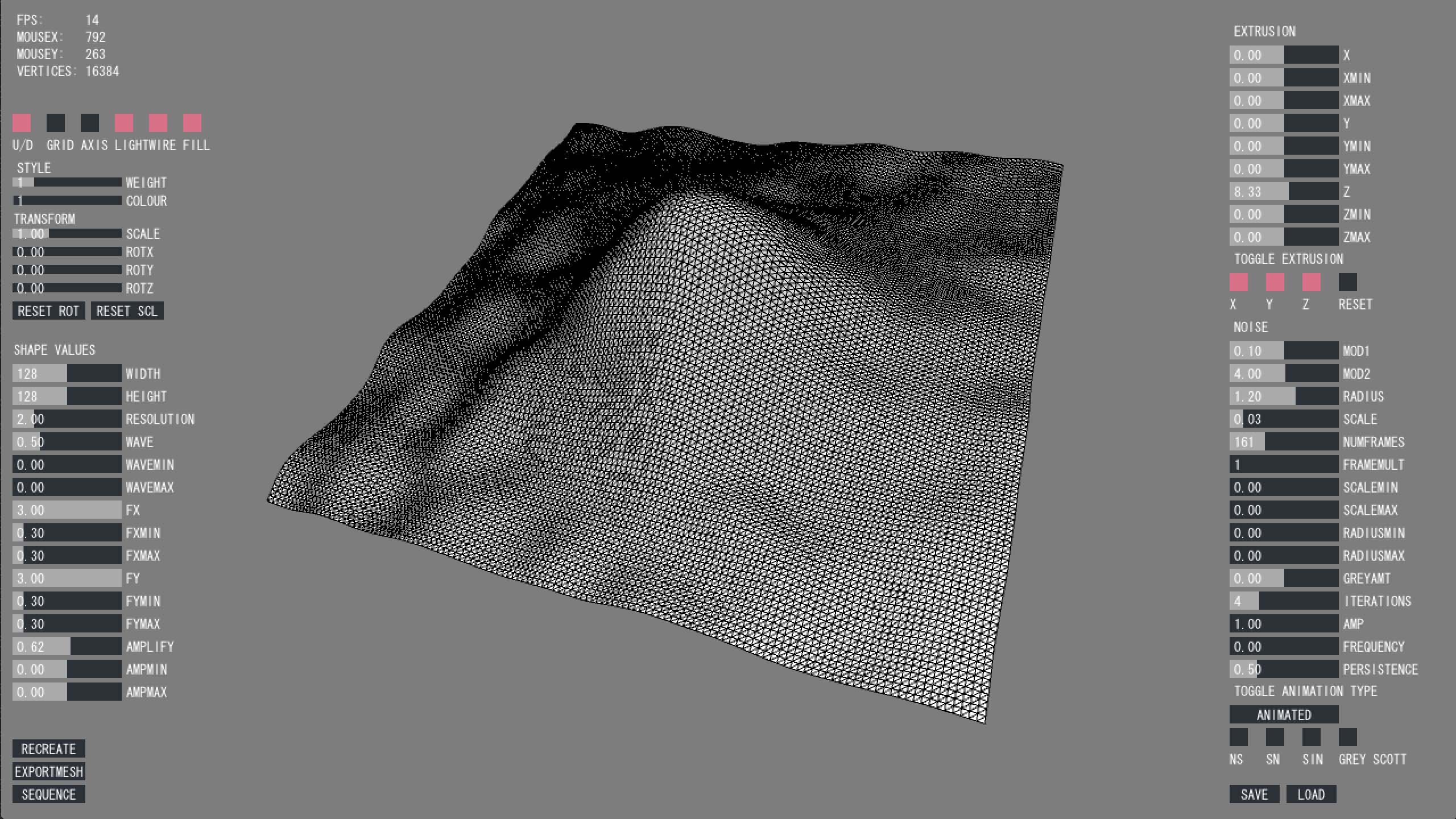

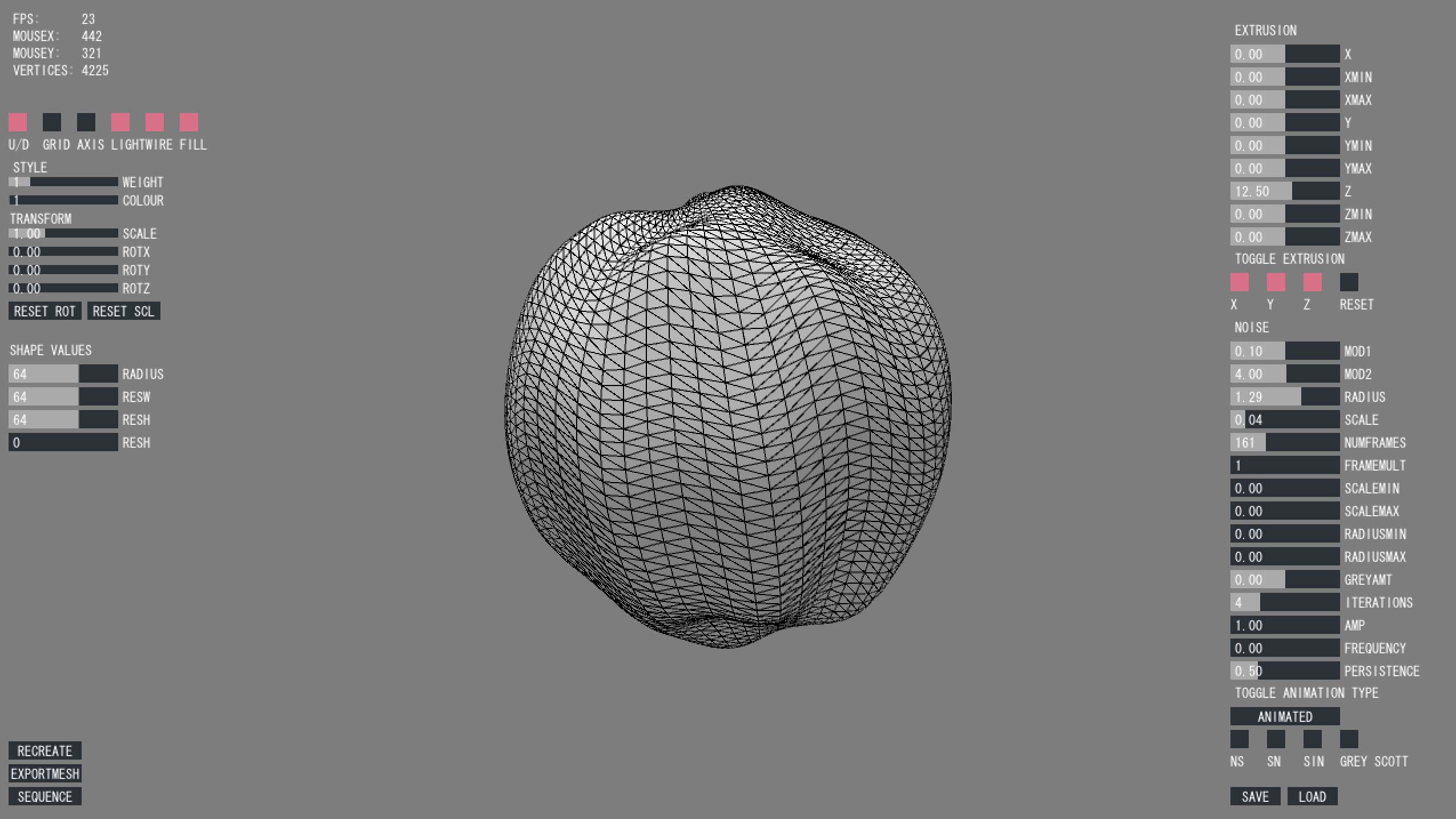

The software was written in Processing using an object-oriented approach. Each form is defined in its own class, which inherits from a base ‘Artefact’ class. Geometric algorithms determine the initial shape, from a simple plane to more complex geometries such as the hyperbolic paraboloid. Each vertex is of a custom type that takes a vector's initial position, creates a modulation vector according to the selected noise algorithm and adds the two together, storing each modulated vector in an array. The noise algorithms are powered by a Java implementation of the Simplex Noise algorithm. The noise is sampled from a four-dimensional noise field, taking values along a circle drawn in the third and fourth dimensions; because the circle returns to its starting point, the animation loops seamlessly. The looping noise algorithm was adapted from work by Etienne Jacob [7], and Golan Levin has made a clear visual explanation of the concept [8].
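The looping idea can be sketched in Java, the language underlying Processing. This is a minimal illustration under stated assumptions rather than the project's code: to keep it short it swaps in a simple hash-based 4D value noise for Simplex Noise, and `LoopingNoise`, `loopNoise`, `displace` and the loop radius of 1.5 are hypothetical. The third and fourth noise coordinates are taken from a circle, (r cos θ, r sin θ), with θ advancing by 2π over the length of the loop, so the final frame lands back on the first frame's coordinates. `displace` mirrors the custom vertex type described above: initial position plus a modulation vector.

```java
import java.util.Random;

// Hypothetical sketch: 4D value noise (a stand-in for Simplex Noise) sampled
// along a circle in the third and fourth dimensions to produce a seamless loop.
class LoopingNoise {
    private static final double LOOP_RADIUS = 1.5;   // assumed radius of the noise circle
    private final int[] perm = new int[512];          // shuffled lattice hash table
    private final double[] values = new double[256];  // random value per hash slot

    LoopingNoise(long seed) {
        Random rng = new Random(seed);
        int[] p = new int[256];
        for (int i = 0; i < 256; i++) {
            p[i] = i;
            values[i] = rng.nextDouble() * 2 - 1;     // value in [-1, 1)
        }
        for (int i = 255; i > 0; i--) {               // Fisher-Yates shuffle
            int j = rng.nextInt(i + 1);
            int t = p[i]; p[i] = p[j]; p[j] = t;
        }
        for (int i = 0; i < 512; i++) perm[i] = p[i & 255];
    }

    // Hash a 4D integer lattice point to a pseudo-random value.
    private double lattice(int x, int y, int z, int w) {
        int h = perm[perm[perm[perm[x & 255] + (y & 255)] + (z & 255)] + (w & 255)];
        return values[h];
    }

    private static double fade(double t) { return t * t * (3 - 2 * t); } // smoothstep

    // Smoothly interpolated 4D value noise in [-1, 1].
    double noise(double x, double y, double z, double w) {
        int xi = (int) Math.floor(x), yi = (int) Math.floor(y);
        int zi = (int) Math.floor(z), wi = (int) Math.floor(w);
        double fx = fade(x - xi), fy = fade(y - yi), fz = fade(z - zi), fw = fade(w - wi);
        double sum = 0;
        for (int c = 0; c < 16; c++) {                // the 16 corners of a 4D cell
            int dx = c & 1, dy = (c >> 1) & 1, dz = (c >> 2) & 1, dw = (c >> 3) & 1;
            double weight = (dx == 1 ? fx : 1 - fx) * (dy == 1 ? fy : 1 - fy)
                          * (dz == 1 ? fz : 1 - fz) * (dw == 1 ? fw : 1 - fw);
            sum += weight * lattice(xi + dx, yi + dy, zi + dz, wi + dw);
        }
        return sum;
    }

    // Noise for point (x, y) at a given frame: (z, w) trace a circle of the
    // given radius, so frame == totalFrames lands back on frame 0.
    double loopNoise(double x, double y, int frame, int totalFrames, double radius) {
        double theta = 2 * Math.PI * frame / totalFrames;
        return noise(x, y, radius * Math.cos(theta), radius * Math.sin(theta));
    }

    // Modulated vertex = initial position + a looping 3D modulation vector.
    double[] displace(double[] p, int frame, int totalFrames, double amplitude) {
        double[] m = new double[3];
        for (int axis = 0; axis < 3; axis++) {
            // Offset the sample point per axis so each component gets different noise.
            m[axis] = amplitude * loopNoise(p[0] + 17.0 * axis, p[1] + 31.0 * axis,
                                            frame, totalFrames, LOOP_RADIUS);
        }
        return new double[] { p[0] + m[0], p[1] + m[1], p[2] + m[2] };
    }
}
```

A larger radius travels further through the noise field over one loop, giving busier motion; a smaller radius gives gentler undulation.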

To present the animations in a way that matched the aesthetic vision of the piece, a switch from real-time to frame-based animation was essential, so that each frame could be rendered in three dimensions. A custom function built on the Nervous System OBJExport library [9] exported the current state of the vertex array for each frame. A key aspect of using custom software was that animations could be created through intuitive and playful interaction. The software makes extensive use of GUI slider controls from the controlP5 library [10], allowing fine control of a shape's properties and the playful creation of animated sequences by modulating noise algorithms in real time.
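A per-frame export of this kind can be sketched in plain Java. This is a hypothetical stand-in, not the OBJExport library's API: the `FrameExporter` class, the `frame_0001.obj` naming scheme and the choice to write triangles as OBJ `f` lines are assumptions for illustration. It writes one Wavefront OBJ file per frame, with one `v` line per vertex and one `f` line per triangle.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Locale;

// Hypothetical per-frame exporter using plain Java I/O; it stands in for the
// OBJExport-based function described in the text.
class FrameExporter {
    // Build Wavefront OBJ text: one "v" line per vertex, one "f" line per
    // triangle. OBJ face indices are 1-based, hence the + 1.
    static String toObj(double[][] vertices, int[][] triangles) {
        StringBuilder sb = new StringBuilder();
        for (double[] v : vertices) {
            sb.append(String.format(Locale.ROOT, "v %.6f %.6f %.6f\n", v[0], v[1], v[2]));
        }
        for (int[] t : triangles) {
            sb.append(String.format(Locale.ROOT, "f %d %d %d\n", t[0] + 1, t[1] + 1, t[2] + 1));
        }
        return sb.toString();
    }

    // Zero-padded names such as "frame_0007.obj" sort in frame order.
    static String fileName(String prefix, int frame) {
        return String.format(Locale.ROOT, "%s_%04d.obj", prefix, frame);
    }

    // Write the current state of the vertex array as one OBJ file for this frame.
    static Path exportFrame(Path dir, String prefix, int frame,
                            double[][] vertices, int[][] triangles) throws IOException {
        Path file = dir.resolve(fileName(prefix, frame));
        Files.write(file, toObj(vertices, triangles).getBytes(StandardCharsets.UTF_8));
        return file;
    }
}
```

Calling `exportFrame` once per animation step produces a numbered sequence of OBJ files; zero-padding the frame number keeps the files in order when they are sorted by name.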

These sequences were imported into Blender using the Stop-motion-obj script [11], which loads one OBJ file per frame in sequence. Artefacts were generally composed with an isometric camera and a soft top light, creating an illusion of inspection, of looking down upon something. Because an isometric projection has no perspective foreshortening, distant objects appear at the same scale as closer ones, reinforcing a feeling of the uncanny. Forms were exported from Processing either as solid meshes, with the points joined using a triangle-strip or a triangulation method, or as a raw array of points, and each case called for a different way of visualising the OBJ sequence in Blender. For solid meshes, Principled shader materials with subsurface scattering were used extensively to give the illusion of depth and fleshiness, with light appearing to scatter beneath the object's surface. For raw arrays of points, either a particle system of spheres or metaballs was used to visualise the forms.
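The difference between the two meshing methods can be sketched on a regular grid of points, here sampled from a hyperbolic paraboloid, z = x² − y². This is a hypothetical Java illustration rather than the project's code; `GridMesher` and its methods are invented names. `gridTriangles` splits every grid cell into two triangles with the same winding, giving a list of independent faces, while `stripOrder` gives the vertex order of a triangle strip along one row of cells, in which every run of three consecutive indices forms a triangle.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of two ways to mesh a rows x cols grid of points.
class GridMesher {
    // Sample a hyperbolic paraboloid z = x^2 - y^2 over [-1, 1] x [-1, 1].
    static double[][] paraboloid(int rows, int cols) {
        double[][] pts = new double[rows * cols][];
        for (int r = 0; r < rows; r++) {
            for (int c = 0; c < cols; c++) {
                double x = -1 + 2.0 * c / (cols - 1);
                double y = -1 + 2.0 * r / (rows - 1);
                pts[r * cols + c] = new double[] { x, y, x * x - y * y };
            }
        }
        return pts;
    }

    // Triangulation: each grid cell becomes two independent triangles
    // (0-based indices, same winding for both).
    static List<int[]> gridTriangles(int rows, int cols) {
        List<int[]> tris = new ArrayList<>();
        for (int r = 0; r < rows - 1; r++) {
            for (int c = 0; c < cols - 1; c++) {
                int a = r * cols + c, b = a + 1, d = a + cols, e = d + 1;
                tris.add(new int[] { a, d, b });
                tris.add(new int[] { b, d, e });
            }
        }
        return tris;
    }

    // Triangle strip for row r: indices alternate between rows r and r + 1,
    // and every consecutive triple of indices forms a triangle.
    static int[] stripOrder(int r, int cols) {
        int[] order = new int[2 * cols];
        for (int c = 0; c < cols; c++) {
            order[2 * c] = r * cols + c;
            order[2 * c + 1] = (r + 1) * cols + c;
        }
        return order;
    }
}
```

The strip reuses each vertex across neighbouring triangles, which is compact for drawing, whereas the independent triangle list maps directly onto OBJ face records.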

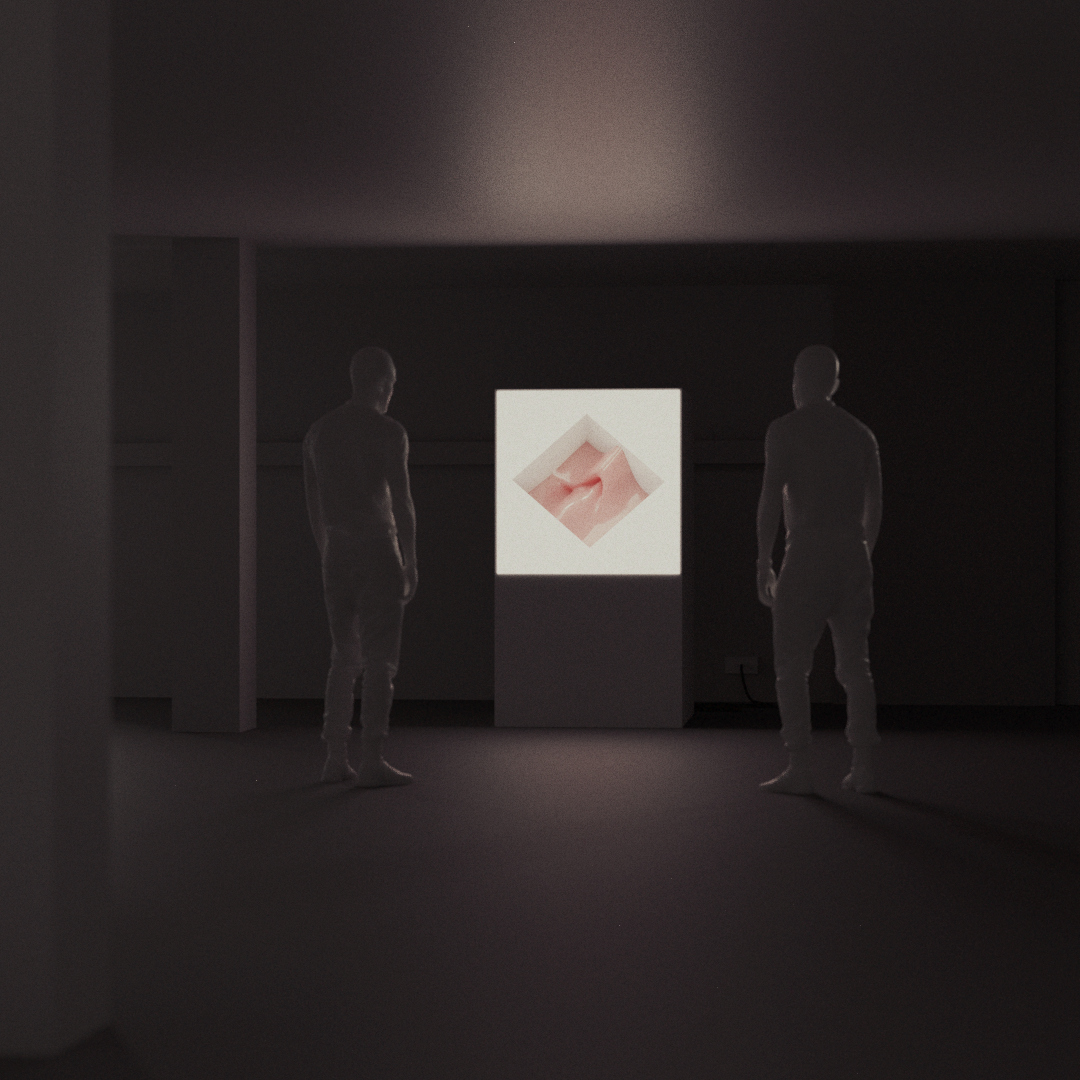

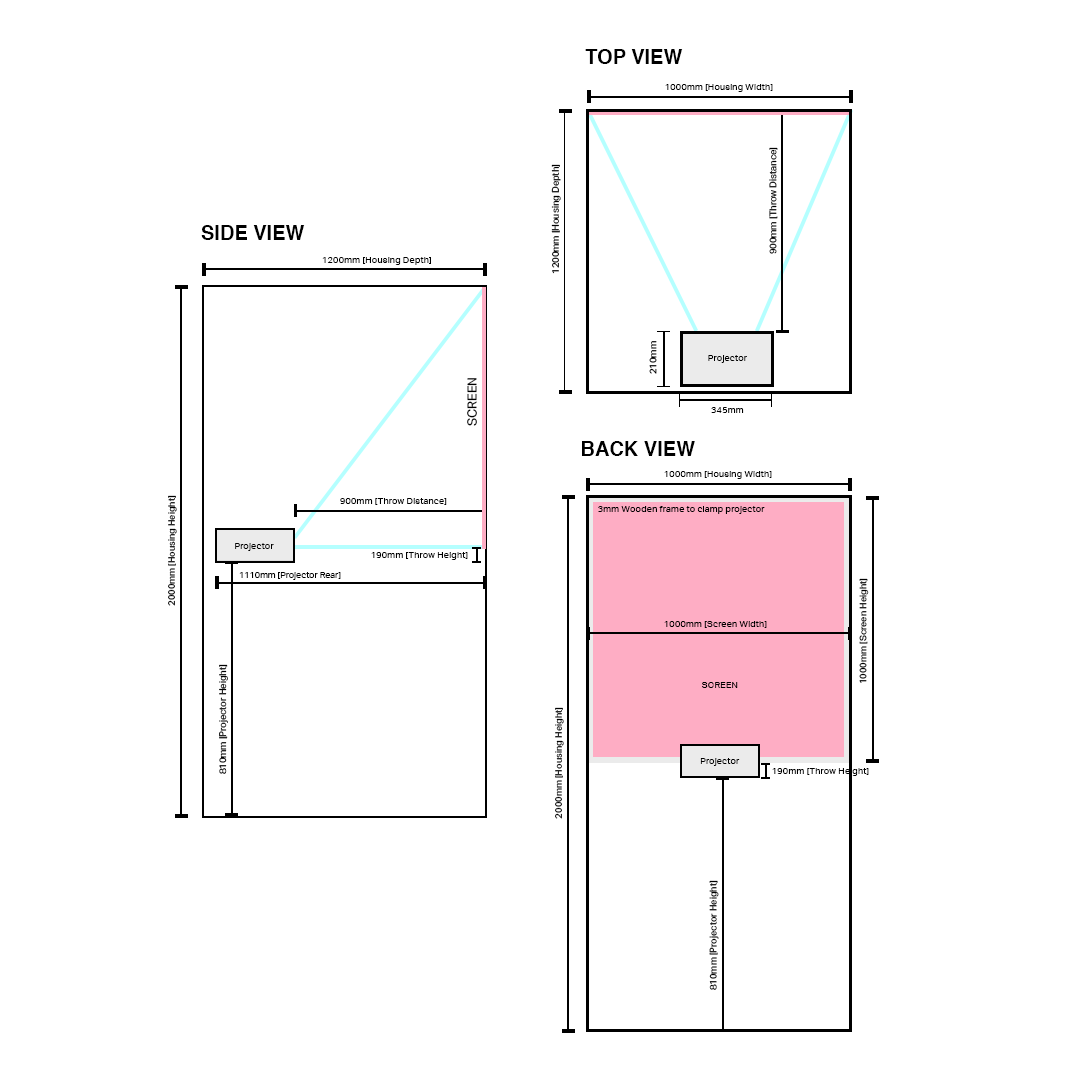

To exhibit the piece, it was important to consider how the artefacts could exist within their own space, separate from that of the main exhibition, as well as the restrictions of the space assigned within the church. After some development, an imposing white obelisk, reflecting the original geometric forms of each artefact before they had been modulated or obscured, proved an appropriate medium. The scale of the obelisk was intended to create an immersive viewing experience, with the artefacts dominating the space by filling a participant's field of view. A back-projection system was developed in which images are projected onto the obelisk's front face, a 1 × 1 metre back-projection screen; because the projector sits behind the screen, viewers can approach and inspect the artefacts closely without casting shadows across the image and breaking immersion. The unit was built to be modular for easy transport and installation, with seven panels simply bolted together.

The artefacts would be exhibited in a busy space, with viewers moving from exhibit to exhibit, which was at odds with the visceral and intimate viewing experience intended. To counter this, the film was made to loop so that it could be joined at any point. A loose narrative order meant the viewing experience held the same meaning regardless of when viewing began or when the gaze was broken. To display the artefacts autonomously, a simple openFrameworks app was written to loop the final film seamlessly on a Raspberry Pi via the ofxOMXPlayer addon [12], which allows openFrameworks to use the Raspberry Pi's GPU for smoother playback.

Future development

Developing custom animation software and a scalable workflow opens up many possibilities for further work. The next stage would be to move beyond animating with computational noise towards data-driven animation, incorporating many forms of information and representing their sources in similarly abstract and aesthetic ways. Another step would be a more emergent, bottom-up approach built on more computationally interesting and complex algorithms, drawing in particular on Andy Lomas' fascinating morphogenesis work Cellular Forms [13], in which forms exhibit truly life-like behaviours without being based on any specific organism.

Another interesting pathway would be to allow participants to interact with the forms and influence their structure, exploring how interaction could affect a viewer's understanding. It would be interesting to play with expectations of how an artefact might react through responsive, intuitive and meaningful interaction. A realistic way of achieving this would be to port the software to Unity or to GLSL vertex shaders, alongside exploring human-computer interaction technologies.

Throughout the development process, much consideration was given to how audio might influence the viewing experience, and whether adding sound effects would make the subtleties real and give them real-world meaning. Experimentation showed, however, that sound made the artefacts too real, grounding them in reality as mere representations of the given sound and leading the viewer towards recognising sounds rather than allowing their affordances to give life and meaning to the artefacts. Coupling the artefacts with synthesised sound, in a similar way to Crumpling Sound Synthesis [14], would nevertheless be an interesting direction of development. Introducing a sound-synthesis element to the software, in which each artefact generates its own sound over time, would free the sound design process from real-world sounds and their implied connotations.

Render times were a constant stumbling block throughout the process: some loops ran to 400 frames at three minutes per frame, and even then the result was only somewhat acceptable in terms of render noise. In future, using Gaussian Material Synthesis [15], which was an original intention of this project, would be an exciting prospect.
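For a sense of scale, a 400-frame loop at three minutes per frame, assuming every frame took the full three minutes and frames were rendered one after another on a single machine, works out as:

$$400 \times 3\ \text{min} = 1200\ \text{min} = 20\ \text{h}$$

That is close to a full day of rendering for a single pass of one loop.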

For future exhibitions, it would be worth exploring how the piece could be made more imposing, more intimate and more immersive. Introducing a stronger, clearer narrative element would allow each artefact to grow and develop over time, creating affect in the viewer.

Self-evaluation

My intention with this piece was to create a series of artefacts which gave the impression of life: isolated elements of a living being, with physical properties, existing in their own space, intended to create affect and to encourage viewers to question their own affordances. Judging by the differing interpretations viewers offered, from meditative and tranquil to grotesque and visceral, the project succeeded in creating something individually interpretable, with artefacts abstract enough to allow different meanings to form for each viewer.

Exhibiting the piece showed, however, that the overwhelming impression of the artefacts was one of fleshiness; while this was my intention, it left little room for the viewer to find meaning beyond the human body. The predominantly pink colour palette was an aesthetic choice, but on reflection, broadening the palette while retaining the apparent depth of the subsurface scattering materials would allow for further abstraction, leaving the artefacts open to wider interpretation.

In terms of technical evaluation, my original aim was not to create a publicly released version of the software. It was a means to playfully create generative looping animations without the use of proprietary software. If the software were ever released publicly, a large amount of work would need to go into user experience, UI design and making the software more intuitive for new users.

The development process was plagued by frame-rate issues, mainly because complex three-dimensional calculations ran solely on the CPU. These limits left almost no choice other than to present the final iteration of the concept as rendered artefacts, isolated from the software itself. Identifying and understanding computationally expensive processes is something I aim to improve upon in future in order to create intuitive, interactive experiences.

I began this course wanting to explore different ways in which a viewer can experience moving image, as a means of advancing my understanding as a filmmaker, and to learn programming from a conceptual and artistic standpoint rather than a purely technical one. In that respect, I feel I have achieved what I set out to do with this work and my studies as a whole. There is, however, always more to learn, and I am excited about the opportunities I now have to develop my ideas further.

References

Simulacra code and instructions (GitHub).

[1] Norman, D. (1988). The Design of Everyday Things. MIT Press.

[2] Merleau-Ponty, M. (1962). Phenomenology of Perception. Routledge.

[3] Baudrillard, J. (1981). Simulacra and Simulation. University of Michigan Press.

[4] Welles, O. (1973). F for Fake. Speciality Films.

[5] Wolz et al. (2014). What's Wrong with an Art Fake? Cognitive and Emotional Variables Influenced by Authenticity Status of Artworks. MIT Press.

[6] Rodrigues Marsh, J. Simulacra Studies. Programming for Artists II, Goldsmiths.

[7] Jacob, E. 4D Looping simplex noise algorithm.

[8] Levin, G. Looping noise explanation GIF.

[9] Zoellner, M. Nervous System OBJExport library for Processing.

[10] Schlegel, A. controlP5 Library for Processing.

[11] Justin. Stop Motion OBJ Blender addon.

[12] Van Cleave, J. ofxOMXPlayer.

[13] Lomas, A. (2014). Cellular Forms. AISB50.

[14] Cirio et al. (2016). Crumpling Sound Synthesis. ACM Transactions on Graphics.

[15] Zsolnai-Fehér et al. (2018). Gaussian Material Synthesis. SIGGRAPH 2018.