Exploring altruism – the common ground of multispecies evolution

This essay speculates on how altruistic behaviors emerged as strategies that drive the evolution of species, and on how modern tools such as machine learning could replicate, and perhaps improve on, those strategies to give more insight into why altruism is needed.

produced by: Elisavet Koliniati

From the Selfish Gene point of view (as presented by biologist Richard Dawkins in his book on evolution) to the Evolution of Cooperation (as analyzed by political scientist Robert Axelrod), I will try to document the phenomenon of selfish agents behaving altruistically, without a central authority, in ways that enable their evolution. This phenomenon appears across species and, apart from its evolutionary consequences, it has given us a deeper understanding of models of human behavior. Its key concepts draw from the field of Game Theory.

Game theory is a logical extension of evolutionary theory. It is only a conceptual short step from this point to cooperation and competition among cells in a multicellular body, among conspecifics who cooperate in social production, between males and females in a sexual species, between parents and offspring, and among groups competing for territorial control. Game theory is thus a generalized schema that permits the precise framing of meaningful empirical assertions but imposes no particular structure on the predicted behavior.

Every behavior is a matter of “weighing” the consequences of cooperating or defecting, in both the short and the long run. If the chance of meeting the same agent again is low, an agent is usually better off defecting. In repeated interactions, however, cooperation yields bigger payoffs and a more stable strategy, especially since defection only pays well when the other agent cooperates.

One of the clearest illustrations of this tension in Game Theory is the Prisoner’s Dilemma, which shows why two completely rational individuals might not cooperate, even when it appears to be in their best interests to do so.
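A minimal sketch of the dilemma in Python. The payoff values (5, 3, 1, 0) are an assumption: they are the ones commonly used in Axelrod's tournaments, not values taken from this essay's experiments. Whatever the other player does, defecting is the better single-round reply, yet mutual cooperation still pays more than mutual defection.

```python
# Assumed payoffs: Temptation, Reward, Punishment, Sucker's payoff.
T, R, P, S = 5, 3, 1, 0

# Payoff to "me" for (my_move, other_move); "C" = cooperate, "D" = defect.
PAYOFF = {
    ("C", "C"): R,
    ("C", "D"): S,
    ("D", "C"): T,
    ("D", "D"): P,
}


def best_reply(other_move):
    """Return the move that maximizes my one-round payoff against other_move."""
    return max("CD", key=lambda my_move: PAYOFF[(my_move, other_move)])


# Defection is the best reply to either move of the other player...
print(best_reply("C"), best_reply("D"))
# ...yet both players would be better off under mutual cooperation.
print(PAYOFF[("C", "C")] > PAYOFF[("D", "D")])
```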

Analyzing some common strategic behaviors

Some of the key strategies in Game Theory, which we are going to replicate in our Machine Learning examples, are:

- TIT for TAT: a nice strategy where if provoked, a player subsequently responds with retaliation; if unprovoked, the player cooperates.

An agent implements tit-for-tat by cooperating with another agent in the very first interaction and then mimicking that agent's previous move. The strategy is based on the concepts of retaliation and altruism: faced with a dilemma, an individual cooperates when the other member has just cooperated and defects when the counterparty has just defected.

- TIT for TWO TATS: a forgiving, and therefore exploitable, strategy where an agent defects only after the other player has defected twice in a row.
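The two strategies can be sketched as small Python functions. This is an illustration only, not the Unity implementation: the function signature, the `play` helper and the two opponent strategies (`always_defect`, `alternator`) are hypothetical names introduced here. TIT FOR TWO TATS follows its usual definition from Axelrod's work, retaliating only after two consecutive defections.

```python
def tit_for_tat(own_history, other_history):
    """Cooperate first, then copy the other player's previous move."""
    return "C" if not other_history else other_history[-1]


def tit_for_two_tats(own_history, other_history):
    """Defect only if the other player defected on both of the last two moves."""
    return "D" if other_history[-2:] == ["D", "D"] else "C"


def always_defect(own_history, other_history):
    """A 'meanie': defects on every move."""
    return "D"


def alternator(own_history, other_history):
    """Hypothetical exploiter: defects on moves 1, 3, 5, ... and cooperates otherwise."""
    return "D" if len(own_history) % 2 == 0 else "C"


def play(strategy_a, strategy_b, rounds):
    """Play the two strategies against each other and return both move histories."""
    history_a, history_b = [], []
    for _ in range(rounds):
        move_a = strategy_a(history_a, history_b)
        move_b = strategy_b(history_b, history_a)
        history_a.append(move_a)
        history_b.append(move_b)
    return history_a, history_b


print(play(tit_for_tat, always_defect, 4)[0])       # retaliates from move 2 on
print(play(tit_for_two_tats, always_defect, 4)[0])  # retaliates from move 3 on
print(play(tit_for_two_tats, alternator, 6)[0])     # never retaliates
```

The last line shows why TIT FOR TWO TATS is exploitable: a player who never defects twice in a row is never punished.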

Using Machine Learning to document the emergence of altruism:

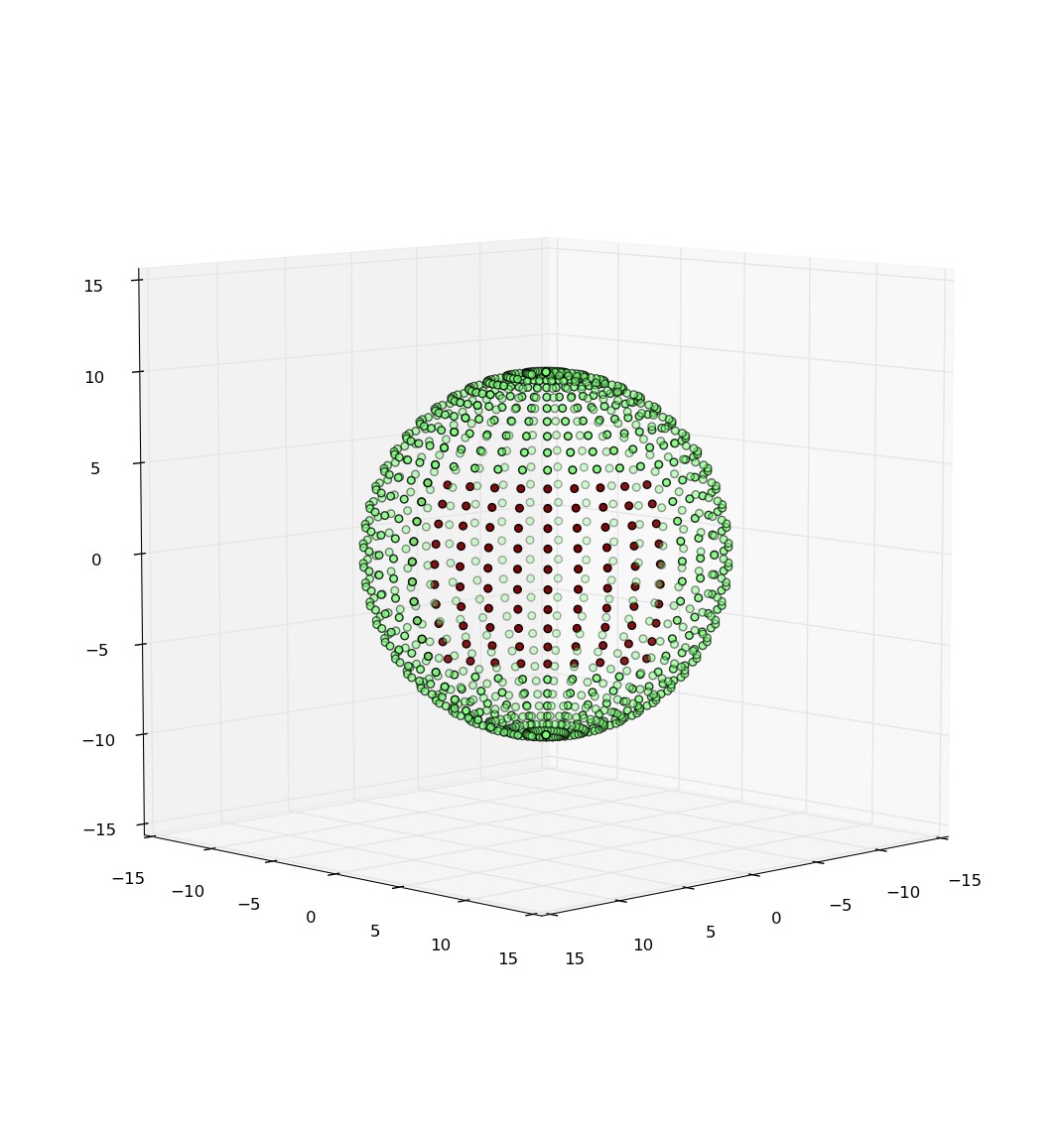

For the machine learning aspect I am focusing on building a training environment in Unity Engine using Unity ML-Agents. With Unity ML-Agents, a variety of training scenarios are possible, depending on how agents, brains, and rewards are connected. These include: Single Agent, Simultaneous Single Agent, Cooperative and Competitive Multi-Agent, and Ecosystem. To train our agents with the above strategies we will use reinforcement learning.

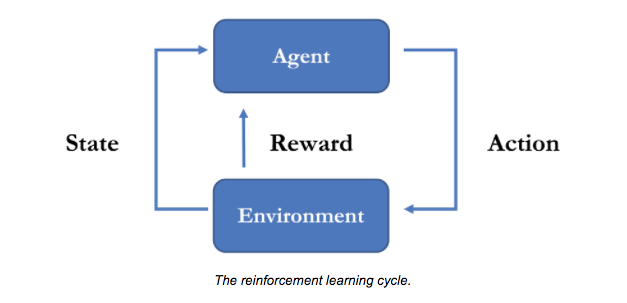

Reinforcement learning

Reinforcement Learning is a learning method based on not telling the system what to do, but only what is right and what is wrong. This means that we let the system perform random actions and we provide a reward or a punishment when we think that these actions are respectively right or wrong. Eventually, the system will understand that it has to perform certain actions in order to get a positive reward.
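The idea can be sketched with a toy learner in plain Python. This is not how ML-Agents trains its agents. It is a minimal value-estimation loop with epsilon-greedy exploration, and the reward values, parameter names and defaults are all assumptions made for illustration. The learner is never told which action to take, only rewarded or punished afterwards.

```python
import random


def train(steps=200, epsilon=0.2, alpha=0.1, seed=0):
    """Learn action values from rewards alone (all parameters are illustrative)."""
    rng = random.Random(seed)
    # The designer's judgement of right and wrong (assumed values).
    reward = {"C": 1.0, "D": -1.0}
    # The learner's current estimate of how good each action is.
    value = {"C": 0.0, "D": 0.0}
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(["C", "D"])    # explore: try a random action
        else:
            action = max(value, key=value.get)  # exploit: best estimate so far
        # Move the estimate a small step towards the reward just received.
        value[action] += alpha * (reward[action] - value[action])
    return value


values = train()
print(values)
print("preferred action:", max(values, key=values.get))
```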

What counts as a right or wrong decision will depend on different scenarios, each implementing an example of social structure.

Using the Unity ML-Agents Toolkit

Choosing what is wrong and right – training behaviors

Every agent has its own observations about the environment, its own set of actions and a specific objective. This is why I would like to explore how we can train such behaviors within Unity using reinforcement learning, with the help of the ML-Agents Toolkit.

Throughout ten different cases, we will examine how agents can invade each other's strategies and give rise to various social constructs. Through these we will try to decipher how a single individual using a new strategy can invade a population of natives, highlighting the occasions where a newcomer does better with a native than a native does with another native, based on the concept of reciprocity.

Previous research has shown how cooperation based on reciprocity:

1. can get started in an asocial world,

2. can thrive while interacting with a wide range of other strategies,

3. and can resist invasion once fully established.

Potential applications include specific aspects of territoriality, mating, and disease.

This is the research we will call upon to shape our training models, most of it presented in Robert Axelrod's book, The Evolution of Cooperation. In considering how the evolution of cooperation could have begun, the study of social structure was found to be necessary, and it is the main focus of this research study.

Invading a population of “meanies” – How altruism brought species to their evolution

A population of meanies who always defect could not be invaded by a single individual using a nice strategy such as TIT FOR TAT. But if the invaders had even a small amount of social structure, things could be different. If they came in a cluster, so that they had even a small percentage of their interactions with each other, then they could invade the population of meanies. With the use of machine learning I would like to demonstrate this very simple but powerful concept of evolution.
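How small that percentage can be is easy to work out. The sketch below assumes Axelrod's commonly used payoffs (5, 3, 1, 0) and a discount parameter w = 0.9, meaning each future move counts 90% as much as the one before. Neither value comes from this essay's experiments. A cluster of TIT FOR TAT newcomers invades when its average score exceeds what the meanies earn with each other.

```python
from fractions import Fraction

# Assumed payoffs and discount parameter (illustrative values).
T, R, P, S = 5, 3, 1, 0
w = Fraction(9, 10)

# Discounted total scores over an indefinitely repeated interaction.
tft_vs_tft = R / (1 - w)           # mutual cooperation on every move
tft_vs_meanie = S + w * P / (1 - w)  # exploited once, then mutual defection
meanie_vs_meanie = P / (1 - w)     # mutual defection on every move

# Newcomers meet each other with probability p and meanies otherwise.
# They invade when p * tft_vs_tft + (1 - p) * tft_vs_meanie > meanie_vs_meanie.
p_min = (meanie_vs_meanie - tft_vs_meanie) / (tft_vs_tft - tft_vs_meanie)

print(p_min, float(p_min))  # 1/21, a little under 5%
```

Under these assumptions the newcomers need fewer than one in twenty of their interactions to be with each other.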

The social structure of cooperation

Three factors are examined which can give rise to interesting types of social structure, as stated in Axelrod’s work: labels, reputation and territoriality.

1. A label is a fixed characteristic of a player, such as sex or skin color, which can be observed by the other player. It can give rise to stable forms of stereotyping and status hierarchies.

For this artifact I created agents of two different colors, blue and green, which affect the outcome of the interaction.

2. The reputation of a player is malleable and comes into being when another player has information about the strategy that the first one has employed with other players. Reputations give rise to a variety of phenomena, including incentives to establish a reputation as a bully, and incentives to deter others from being bullies.

For this artifact I stored each agent’s choice from the previous round and made it visible to the other agents, so that they can take it into consideration in the future.

3. Finally, territoriality occurs when players interact with their neighbors rather than with just anyone. It can give rise to fascinating patterns of behavior as strategies spread through a population.

For the needs of the specific artifact I would like to simulate the colonization of successful strategies among agents who are static and interact only with their neighbours.

1. The issue with labeling – self confirming stereotypes:

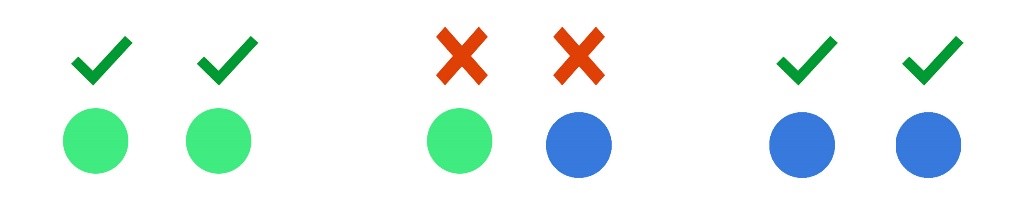

One of the most interesting but disturbing consequences of labels is that they can lead to self-confirming stereotypes. The first machine learning example could demonstrate exactly that: suppose that every agent has either a Blue label or a Green label.

Further, suppose that both groups are nice to members of their own group and mean to members of the other group. For the sake of concreteness, suppose that members of both groups employ TIT FOR TAT with each other and always defect with members of the other group.

We provide rewards and punishments as follows:

1. If both agents cooperate, each gains +1 point.

2. If one agent cooperates and the other defects, the agent who defects gets +1 point and the agent who cooperated gets -1.

3. If both agents defect, each gets -1.
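The reward scheme above can be written as a small lookup table. This is a plain Python sketch of the rules as listed. The names `REWARDS` and `rewards` are hypothetical and not part of the Unity project.

```python
# (move_a, move_b) -> (reward_a, reward_b); "C" = cooperate, "D" = defect.
REWARDS = {
    ("C", "C"): (1, 1),    # both cooperate
    ("C", "D"): (-1, 1),   # a cooperates, b defects
    ("D", "C"): (1, -1),   # a defects, b cooperates
    ("D", "D"): (-1, -1),  # both defect
}


def rewards(move_a, move_b):
    """Return the pair of rewards handed to agents a and b for one interaction."""
    return REWARDS[(move_a, move_b)]


for moves in REWARDS:
    print(moves, rewards(*moves))
```

With these particular values, an agent's reward in a single round depends only on what its partner does, so any pressure to cooperate or defect has to come from how the partner responds in later rounds.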

Case 1: Introducing a new Agent that knows nothing about the game.

After we have trained our agents to interact in the aforementioned way, we will introduce a new, untrained agent who knows nothing of the game to see what happens. We will soon see that a single individual, whether Blue or Green, can do no better than to do what everyone else is doing and be nice to one's own type and mean to the other type.

This incentive means that stereotypes can be stable, even when they are not based on any objective differences:

The Blues believe that the Greens are mean, and whenever they meet a Green, they have their beliefs confirmed. The Greens think that only other Greens will reciprocate cooperation, and they have their beliefs confirmed.

It would be interesting to see what proportion it takes for this to happen: I could play around with the numbers and have only a few agents acting on stereotypes while the majority start out cooperating. Would these few agents be enough to give rise to a population of “meanies” towards agents of a different color?

Case 2: Introducing a new Agent that tries to break out of this loop (acting as a deviant) and seeing what its payoff will be.

If you try to break out of the system, you will find that your own payoff falls and your hopes will be dashed. So if you become a deviant, you are likely to return, sooner or later, to the role that is expected of you. If your label says you are Green, others will treat you as a Green, and since it pays for you to act like Greens act, you will be confirming everyone's expectations.
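A rough per-round illustration of why the deviant's payoff falls. It assumes Axelrod's commonly used payoffs, where being exploited (0) is worse than mutual defection (1). These are not the reward values listed earlier, and the partner counts are made up for the example. The function name is hypothetical.

```python
# Assumed payoffs: Temptation, Reward, Punishment, Sucker's payoff.
T, R, P, S = 5, 3, 1, 0


def green_score(green_partners, blue_partners, cooperate_with_blues):
    """One round of meetings for a Green agent in a stereotyping population.

    Other Greens cooperate with this agent; Blues always defect on Greens.
    """
    in_group = green_partners * R
    # Against a defecting Blue: cooperating earns S, defecting earns P.
    out_group = blue_partners * (S if cooperate_with_blues else P)
    return in_group + out_group


conformist = green_score(10, 10, cooperate_with_blues=False)
deviant = green_score(10, 10, cooperate_with_blues=True)
print(conformist, deviant)  # 40 30
```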

The consequences of stereotyping

This kind of stereotyping has two unfortunate consequences: one obvious and one more subtle.

- The obvious consequence is that everyone is doing worse than necessary because mutual cooperation between the groups could have raised everyone's score.

- A more subtle consequence comes from any disparity in the numbers of Blues and Greens, creating a majority and a minority. In this case, while both groups suffer from the lack of mutual cooperation, the members of the minority group suffer more. No wonder minorities often seek defensive isolation.

Case 3: How minorities are formed

We will create an environment with eighty Greens and twenty Blues, and we will make everyone interact with everyone else once a week.

· Then for the Greens, most of their interactions are within their own group and hence result in mutual cooperation.

· But for the Blues, most of their interactions are with the other group (the Greens), and hence result in punishing mutual defection.

Thus, the average score of the minority Blues is less than the average score of the majority Greens. Consequently, we will soon see a tendency for each group to associate with its own kind (pushing the blue agents to the edge of the map) but the above effect will still hold.
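The gap between the two groups can be checked with simple arithmetic. The sketch below takes the eighty/twenty split from the text and the +1 / -1 rewards for mutual cooperation and mutual defection. It assumes every agent meets every other agent exactly once. The function names are hypothetical.

```python
from fractions import Fraction


def average_score(own_group, other_group, coop=1, defect=-1):
    """Average reward per meeting when in-group meetings end in mutual
    cooperation and cross-group meetings end in mutual defection."""
    in_group_partners = own_group - 1  # an agent does not meet itself
    total = in_group_partners * coop + other_group * defect
    return Fraction(total, in_group_partners + other_group)


def cross_group_share(own_group, other_group):
    """Fraction of an agent's meetings that are with the other group."""
    return Fraction(other_group, own_group - 1 + other_group)


green = average_score(80, 20)
blue = average_score(20, 80)
print(green, float(green))  # 59/99, about 0.60
print(blue, float(blue))    # -61/99, about -0.62
print(cross_group_share(80, 20), cross_group_share(20, 80))  # 20/99 80/99
```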

Why minorities suffer more

This effect still holds because any given number of meetings between minority Blues and majority Greens represents a larger share of the minority's total interactions than of the majority's (Rytina and Morgan 1982).

The result is that labels can support stereotypes by which everyone suffers, and the minority suffers more than the rest.

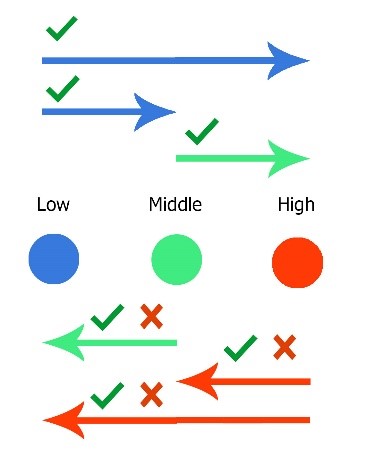

Case 4: How status hierarchies are supported

Labels can lead to another effect as well. They can support status hierarchies. For example, suppose that everyone has some characteristic that can be readily observed and that allows a comparison between two people. Now suppose that everyone is a bully toward those beneath them and meek toward those above them. Can this be stable?

The answer is yes, and Machine Learning can provide another illustration.

We will create a training environment in which each Agent carries one of three tags: Low, Middle, High.

Suppose every Agent uses the following strategy when meeting someone beneath them: alternate defection and cooperation unless the other player defects even once, in which case never cooperate again. This is what being a bully is all about: you are often defecting, but you are never tolerating a defection from the other player.

And suppose that every Agent uses the following strategy when meeting someone above them: cooperate unless the other defects twice in a row, in which case never cooperate again. This is being meek in that you are tolerating being a sucker on alternating moves, but it is also being provocable in that you are not tolerating more than a certain amount of exploitation.
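The two rules can be sketched as Python functions and played against each other. The sketch assumes the bully opens with a defection, since the text does not say which move comes first. The `play` helper and the `always_defect` opponent are hypothetical names introduced for the illustration.

```python
def bully(own_history, other_history):
    """Alternate defection and cooperation; never cooperate again once the
    other player has defected even once. Assumed to open with a defection."""
    if "D" in other_history:
        return "D"
    return "D" if len(own_history) % 2 == 0 else "C"


def meek(own_history, other_history):
    """Cooperate unless the other player has ever defected twice in a row,
    in which case never cooperate again."""
    for previous, current in zip(other_history, other_history[1:]):
        if previous == "D" and current == "D":
            return "D"
    return "C"


def always_defect(own_history, other_history):
    return "D"


def play(strategy_a, strategy_b, rounds):
    """Return the move histories of both strategies after the given rounds."""
    history_a, history_b = [], []
    for _ in range(rounds):
        move_a = strategy_a(history_a, history_b)
        move_b = strategy_b(history_b, history_a)
        history_a.append(move_a)
        history_b.append(move_b)
    return history_a, history_b


high, low = play(bully, meek, 6)
print(high)  # the bully alternates D, C, D, C, ...
print(low)   # the meek player keeps cooperating
print(play(always_defect, meek, 4)[1])  # meek is provoked after two defections
```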

This pattern of behavior sets up a status hierarchy based on the observable characteristic. The people near the top do well because they can lord it over nearly everyone. Conversely, the people near the bottom are doing poorly because they are being meek to almost everyone. It is easy to see why someone near the top is happy with the social structure.

But is there anything someone near the bottom can do about it acting alone?

With this training system we will see that actually there isn't. The reason is that when the discount parameter (the weight given to each future move relative to the current one) is high enough, it would be better to take one's medicine every other move from the bully than to defect and face unending punishment. Therefore, a person at the bottom of the social structure is trapped. He or she is doing poorly, but would do even worse by trying to buck the system.
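A back-of-the-envelope check of this claim, assuming Axelrod's commonly used payoffs (5, 3, 1, 0) and a bully who alternates defection and cooperation starting with a defection. The three functions are hypothetical names. They compare staying meek with the two simplest ways of rebelling, using discounted sums with discount parameter w.

```python
# Assumed payoffs: Temptation, Reward, Punishment, Sucker's payoff.
T, R, P, S = 5, 3, 1, 0


def stay_meek(w):
    """Always cooperate: payoffs S, R, S, R, ... discounted by w per move."""
    return (S + w * R) / (1 - w * w)


def defect_now(w):
    """Defect from the first move on: mutual defection on every move."""
    return P / (1 - w)


def defect_later(w):
    """Cooperate once, defect when the bully cooperates, then mutual defection."""
    return S + w * T + w * w * P / (1 - w)


for w in (0.9, 0.3):
    print(w, round(stay_meek(w), 2), round(defect_now(w), 2), round(defect_later(w), 2))
```

With w = 0.9 staying meek gives the highest total, so the player at the bottom is trapped. With w = 0.3 either rebellion pays more, which is why the claim depends on the discount parameter being high enough.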

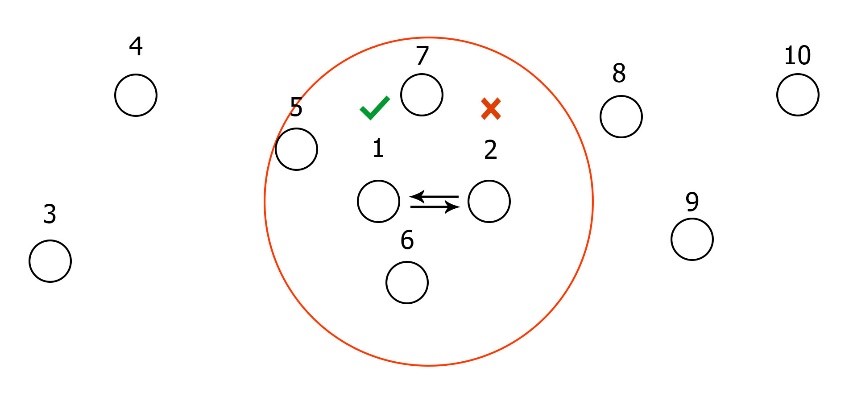

2. Reputation and Deterrence

A player's reputation is embodied in the beliefs of others about the strategy that player will use. A reputation is typically established through observing the actions of that player when interacting with other players.

Case 5: Bringing reputation into play

For example, we could have Agents that are within range of an interaction store its result for future use, when it is their turn to interact with those specific agents. In the above example, agents 5, 6 and 7 will remember that 1 has tried to cooperate and 2 has defected.

We could then have them play either a TIT for TAT strategy or a TIT for two TATs and see how well they will do. The case for both strategies would be:

· If an agent remembers that the other agent has defected on someone else who cooperated then: defect

· If an agent remembers that the other agent has cooperated with someone who defected on them then: cooperate

An agent who defects on another agent who also defects might be seen as justifiably punishing a previous interaction. Thus, an observing agent might choose to give either of them a fresh start and cooperate in a future interaction.
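The rules above can be condensed into one decision function. This is a sketch only: the function name and arguments are hypothetical, and treating an observed mutual cooperation as a reason to cooperate is an assumption, since the text does not cover that case.

```python
def first_move_from_reputation(partner_move, third_party_move):
    """Choose an opening move against a partner, based on one remembered
    interaction between that partner and some third party.

    partner_move:     what the partner did in the observed interaction
    third_party_move: what the third party did to the partner
    """
    if partner_move == "D" and third_party_move == "C":
        return "D"  # unprovoked defection on a cooperator: retaliate
    # Covers three cases: the partner cooperated and was defected on,
    # mutual defection (read as justified punishment, so a fresh start),
    # and mutual cooperation (assumed here to also earn cooperation).
    return "C"


for observed in [("D", "C"), ("C", "D"), ("D", "D"), ("C", "C")]:
    print(observed, "->", first_move_from_reputation(*observed))
```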

Is the knowledge of previous interactions helpful in this case?

How much better could the agents do with the information than without it? (Raiffa 1968). Knowing the other's strategy would have allowed a player to do substantially better in only a few cases. The question about the value of information can also be turned around: what is the value (or cost) of having other players know your strategy? The answer, of course, depends on exactly what strategy you are using.

Case 6: The effect of having one’s strategies known

To try this out, we could run another training with the same strategies (50% of the agents using TIT FOR TAT and the other 50% using TIT FOR TWO TATS). We would see that for an agent using an exploitable strategy, such as TIT FOR TWO TATS, the cost of the other agents knowing it can be substantial. On the other hand, if an agent is using a strategy that is best met with complete cooperation, then it could be glad to have its strategy known to the other agents.

So what is the best reputation to have?

Having a firm reputation for using TIT FOR TAT is advantageous to a player, but it is not actually the best reputation to have. The most profitable reputation to have, if it can be established, is the reputation for being a bully. The best kind of bully to be is one who has a reputation for squeezing the most out of the other player while not tolerating any defections at all from the other. The way to squeeze the most out of the other is to defect so often that the other player just barely prefers cooperating all the time to defecting all the time. And the best way to encourage cooperation from the other is to be known as someone who will never cooperate again if the other defects even once.
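How often is "so often"? A rough estimate, assuming Axelrod's commonly used payoffs (5, 3, 1, 0) and comparing long-run averages per move without discounting. If the bully defects on a fraction f of the moves, a victim who always cooperates averages f·S + (1 − f)·R, while a victim who always defects ends up with P on every move, because the bully never cooperates again. The function names are hypothetical.

```python
from fractions import Fraction

# Assumed payoffs: Temptation, Reward, Punishment, Sucker's payoff.
T, R, P, S = 5, 3, 1, 0


def victim_average(defection_rate):
    """Average payoff per move for a victim who cooperates all the time."""
    return defection_rate * S + (1 - defection_rate) * R


def max_defection_rate():
    """Largest f for which f*S + (1 - f)*R still equals P; the bully must
    stay just below it for the victim to prefer cooperating."""
    return Fraction(R - P, R - S)


limit = max_defection_rate()
print(limit)                            # 2/3
print(victim_average(Fraction(1, 2)))   # 3/2, still better than P = 1
print(victim_average(Fraction(3, 4)))   # 3/4, worse than P = 1
```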

Case 7: Creating bullies

Among a population of nice agents using TIT for TAT we could train specific agents as bullies and record their outcome. We could also run multiple tests with different numbers of bullies to see their effect on a population of nice agents.

Fortunately, it is not easy to establish a reputation as a bully. To become known as a bully you have to defect a lot, which means that you are likely to provoke the other player into retaliation. Until your reputation is well established, you are likely to have to get into a lot of very unrewarding contests of will. For example, if the other player defects even once, you will be torn between acting as tough as the reputation you want to establish requires and attempting to restore amicable relations in the current interaction.

What darkens the picture even more is that the other player may also be trying to establish a reputation, and for this reason may be unforgiving of the defections you use to try to establish your own reputation. When two players are each trying to establish their reputations for use against other players in future games, it is easy to see that their own interactions can spiral downward into a long series of mutual punishments.

3. Territoriality

Nations, cells, tribes, and animals are examples of players which often operate mainly within certain territories. They interact much more with their neighbors than with those who are far away. Hence their success depends in large part on how well they do in their interactions with their neighbors. But neighbors can serve another function as well: they can provide a role model.

If the neighbor is doing well, the behavior of the neighbor can be imitated. In this way successful strategies can spread throughout a population, from neighbor to neighbor.

To demonstrate this we could make a training environment where the individuals remain fixed in their locations, but their strategies can spread. This process is a mechanism of colonization by which successful strategies can spread from place to place.

Case 8: Simulating the colonization of successful strategies between neighboring agents

Consider an entire territory divided up so that each player has four neighbors: one to the north, one to the east, one to the south, and one to the west.

In each "generation," each player attains a success score measured by its average performance with its four neighbors. Then if a player has one or more neighbors who are more successful, the player converts to the strategy of the most successful of them (or picks randomly among the best in case of a tie among the most successful neighbors).
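One generation of this process can be sketched in Python. Several details are assumptions made for the illustration: the grid wraps around at the edges so that every player has four neighbors, the pairwise scores in `SCORE` are those of a single-move game with Axelrod's commonly used payoffs (a very weak shadow of the future), and all names are hypothetical.

```python
import random

# Assumed score of the first strategy when paired with the second.
SCORE = {
    ("TFT", "TFT"): 3, ("TFT", "ALLD"): 0,
    ("ALLD", "TFT"): 5, ("ALLD", "ALLD"): 1,
}


def neighbors(row, col, size):
    """North, east, south and west neighbors on a wrap-around grid."""
    return [((row - 1) % size, col), (row, (col + 1) % size),
            ((row + 1) % size, col), (row, (col - 1) % size)]


def step(grid, rng):
    """Advance the territory by one generation and return the new grid."""
    size = len(grid)
    # Success score: average performance with the four neighbors.
    score = [[sum(SCORE[(grid[r][c], grid[nr][nc])]
                  for nr, nc in neighbors(r, c, size)) / 4
              for c in range(size)] for r in range(size)]
    new_grid = [row[:] for row in grid]
    for r in range(size):
        for c in range(size):
            best = max(score[nr][nc] for nr, nc in neighbors(r, c, size))
            if best > score[r][c]:
                # Convert to the most successful neighbor, random on ties.
                candidates = [grid[nr][nc] for nr, nc in neighbors(r, c, size)
                              if score[nr][nc] == best]
                new_grid[r][c] = rng.choice(candidates)
    return new_grid


grid = [["TFT"] * 5 for _ in range(5)]
grid[2][2] = "ALLD"  # a single meanie in a TIT FOR TAT population
after = step(grid, random.Random(0))
for row in after:
    print(" ".join(f"{cell:4}" for cell in row))
```

All conversions are computed from the scores of the current generation before any player changes strategy. Calling `step` repeatedly until the grid stops changing gives the generation-by-generation process.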

All this leads to a rather strong result: the conditions that are needed for a strategy to protect itself from takeover by an invader are no more stringent in a territorial social system than they are in a social system where anyone is equally likely to meet anyone else. So if a rule is collectively stable, it is territorially stable.

The role of the future possible interactions

To thrive, every nice strategy needs a sufficient chance of future interaction in order to prove valuable in the long run. However, even with the help of a territorial social structure to maintain stability, a nice rule is not necessarily safe. If the shadow of the future is sufficiently weak, then no nice strategy can resist invasion even with the help of territoriality. In such a case, the dynamics of the invasion can sometimes be quite intricate and fascinating to look at.

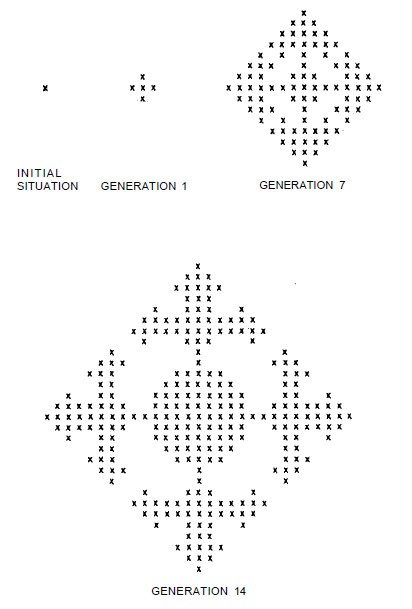

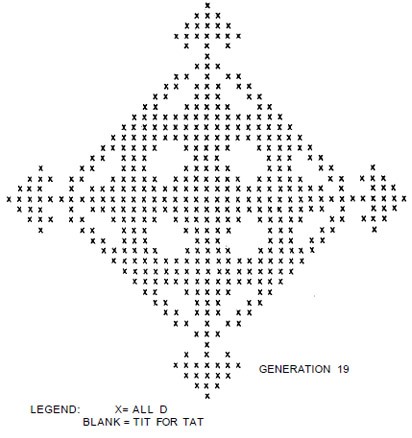

Case 9: Narrowing down future interaction possibilities – how “meanies” can evolve

Above we see an example of such an intricate pattern. It represents the situation of a single player who always defects invading a territorial population of individuals using TIT FOR TAT. In this case, the shadow of the future has been made quite weak. The above figure shows what happens after one, seven, fourteen, and nineteen generations. The meanies colonize the original TIT FOR TAT population, forming a fascinating pattern of long borders and bypassed islands of cooperators.

What would happen if we let the ml-agents decide by themselves which is the best strategy to use to make a territory collectively stable?

Case 10: Letting the agents decide what is best for them

To see what happens when the players are using a wide variety of more or less sophisticated decision rules, it is only necessary to simulate the process one generation at a time. Each training run provides the necessary information about the score that each rule gets with any particular neighbor it might have. The score of a territory is then the average of its scores with the four rules that neighbor it.

Once the score of each territory is established, the conversion process begins. Each territory that has a more successful neighbor simply converts to the rule of the most successful of its neighbors. We can repeat the simulation multiple times with different random assignments each time. In Axelrod's runs, each simulation was carried out generation after generation until there were no further conversions, which took from eleven to twenty-four generations.

In each case, the process stopped evolving only when all of the rules that were not nice had been eliminated. With only nice rules left, everyone was always cooperating with everyone else and no further conversions would take place. The territorial system demonstrates quite vividly that the way the players interact with each other can affect the course of the evolutionary process.

A variety of structures have now been analyzed in evolutionary terms, although many other interesting possibilities await analysis. Machine learning can provide important insights into the mechanisms behind them.

Bibliography:

- R. Axelrod, W. D. Hamilton - The Evolution of Cooperation

- H. Gintis - The Bounds of Reason: Game Theory and the Unification of the Behavioral Sciences, Princeton University Press, 2009

- R. Dawkins – The Selfish Gene, Oxford University Press, 1976

- Ursula K. Le Guin - The Carrier Bag Theory of Fiction, Ignota, 2019

Links:

https://unity3d.com/machine-learning

https://unity3d.com/how-to/unity-machine-learning-agents

https://github.com/Unity-Technologies/ml-agents/blob/master/docs/ML-Agents-Overview.md