Nexus

Nexus aims to explore the link between ecologies, networks and emergence, as portrayed through computation. It is an examination of the Biophilia Hypothesis, which suggests that humans possess an innate tendency to connect with nature and with other forms of life.

produced by: Danny Keig

Introduction

Three individual environments live and grow, each with unique rules for its inhabitants. When certain conditions are met, an environment may establish a connection with another, altering the fundamental workings of the destination system. The three systems are codependent and, together, self-sufficient; however, a viewer can seed the processes and communicate with the systems by bringing a hand close to the light interface.

Using physical geometry, these environments and their communications are projection-mapped onto the surfaces, bringing them into the real world for us to observe up close. Communications between the systems, and between the user and the systems, sprout up as if finding a path along stepping stones.

Concept and background research

This piece was to be a celebration of the simple pleasure we derive from watching living things. I wanted to investigate whether Biophilia may apply to technologies which embody behavioural aspects of the natural world.

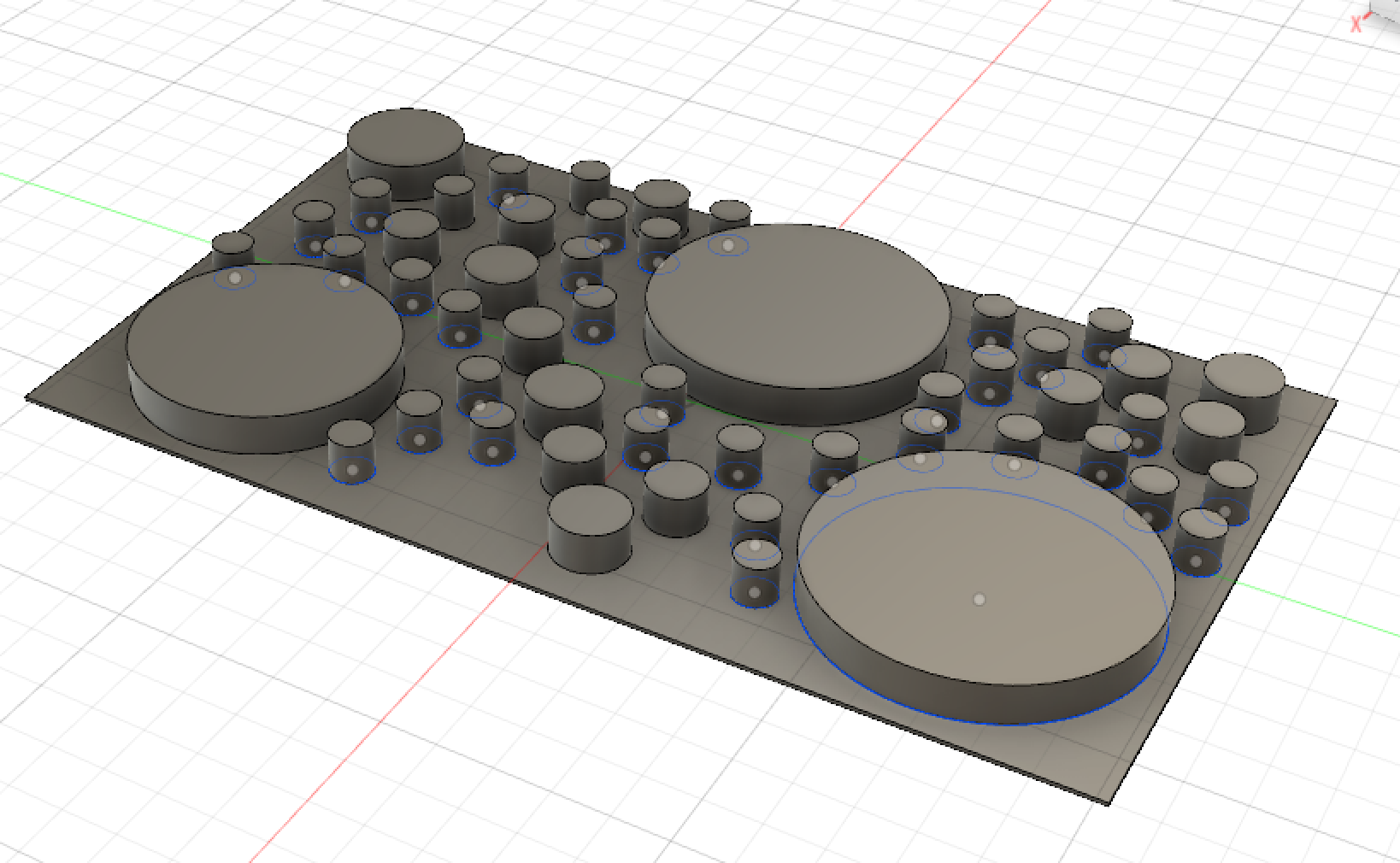

The initial concept was to create three unique digital ecologies that are interconnected and to generate a cyclic flow of communications which keep the whole system moving. I was originally interested in making a triptych of suspended panels onto which I would project the three environments. However, once I realised that I needed to make the relationship between these environments much more obvious to the audience, the layout evolved into a tabletop plan where communications could be seen from above as they traversed pieces of physical geometry.

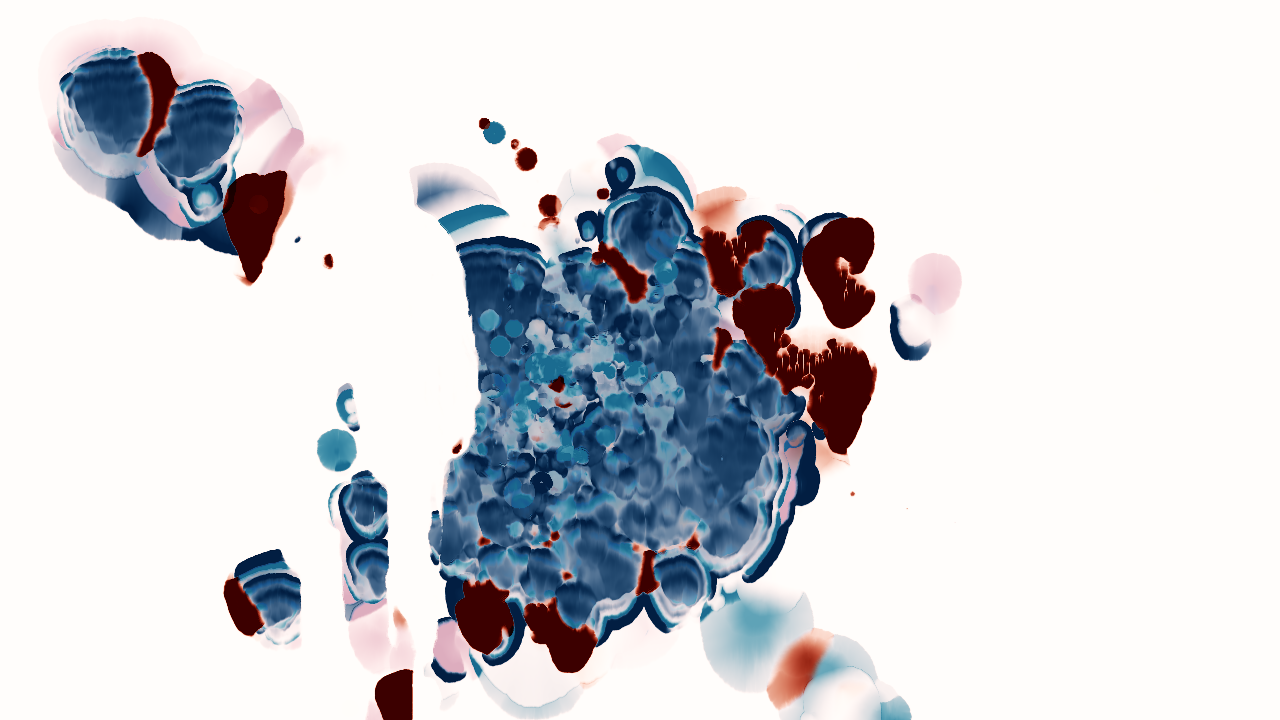

Despite the changes in physical form, my visual inspiration remained the same. I was greatly inspired by the aesthetics of Kenichi Yoneda, whose work resembles both digital watercolour blending and cellular growth.

Kenichi Yoneda (Kynd)

The work of teamLab was inspiring because it is incredibly responsive to the audience and really feels alive. I wanted to create a work where the viewer could be transfixed by all of the interactions without needing to understand any of the logic behind them, and this is something that teamLab’s work captures well.

teamLab

Jared Tarbell and Casey Reas create emergent generative work based on simple rules and processes. I found these pieces really inspiring, both aesthetically and technically.

Jared Tarbell

Casey Reas

The emergent robotics artist Norman White creates physical computing pieces which are entirely different from what I intended to create, but I loved how he captured the behaviour of natural systems through technology.

Norman White

Technical

The openFrameworks code consists of many particle systems of different kinds. I created a unique particle system for each of the three environments, and another variation to use for all of the stepping stones. With so many particle system instances, I became concerned about my frame rate and expected it to drop as I developed the piece further. I looked into Kyle McDonald’s binned particle system, which divides the canvas into a grid of bins so that each particle only compares itself with particles in nearby bins rather than with every other particle. This code was difficult to wrap my head around and required a few modifications for my project, but it really helped in avoiding CPU bottlenecks and allowed the project to run smoothly in the final exhibition.
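To illustrate why binning helps, here is a much-simplified sketch of the idea, assuming a rectangular canvas divided into square bins. The names Particle, BinnedGrid and neighbours() are hypothetical; this is not Kyle McDonald’s code, only a minimal instance of the same spatial-binning technique.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical minimal particle: position only.
struct Particle {
    float x, y;
};

// A uniform grid of square bins; each bin stores the indices of the particles
// whose positions fall inside it. Simplified sketch, not the original add-on.
class BinnedGrid {
public:
    BinnedGrid(float width, float height, float binSize)
        : binSize_(binSize),
          cols_(static_cast<int>(std::ceil(width / binSize))),
          rows_(static_cast<int>(std::ceil(height / binSize))),
          bins_(cols_ * rows_) {
        assert(binSize > 0.0f);
    }

    // Re-sort every particle into its bin; call once per frame after moving particles.
    void rebuild(const std::vector<Particle>& particles) {
        for (auto& bin : bins_) bin.clear();
        for (int i = 0; i < static_cast<int>(particles.size()); ++i) {
            bins_[binIndex(colOf(particles[i].x), rowOf(particles[i].y))].push_back(i);
        }
    }

    // Indices of particles within `radius` of (x, y). Only the bins overlapping
    // the query circle's bounding box are examined, not the whole population.
    std::vector<int> neighbours(const std::vector<Particle>& particles,
                                float x, float y, float radius) const {
        std::vector<int> result;
        const int c0 = colOf(x - radius), c1 = colOf(x + radius);
        const int r0 = rowOf(y - radius), r1 = rowOf(y + radius);
        for (int r = r0; r <= r1; ++r) {
            for (int c = c0; c <= c1; ++c) {
                for (int i : bins_[binIndex(c, r)]) {
                    const float dx = particles[i].x - x;
                    const float dy = particles[i].y - y;
                    if (dx * dx + dy * dy <= radius * radius) result.push_back(i);
                }
            }
        }
        return result;
    }

private:
    // Positions outside the canvas are clamped into the edge bins.
    int colOf(float x) const {
        return std::clamp(static_cast<int>(std::floor(x / binSize_)), 0, cols_ - 1);
    }
    int rowOf(float y) const {
        return std::clamp(static_cast<int>(std::floor(y / binSize_)), 0, rows_ - 1);
    }
    int binIndex(int col, int row) const { return row * cols_ + col; }

    float binSize_;
    int cols_, rows_;
    std::vector<std::vector<int>> bins_;
};
```

Rebuilding the bins costs one pass over the particles per frame, after which each neighbour query only touches the few bins around a particle, instead of comparing every particle with every other one.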

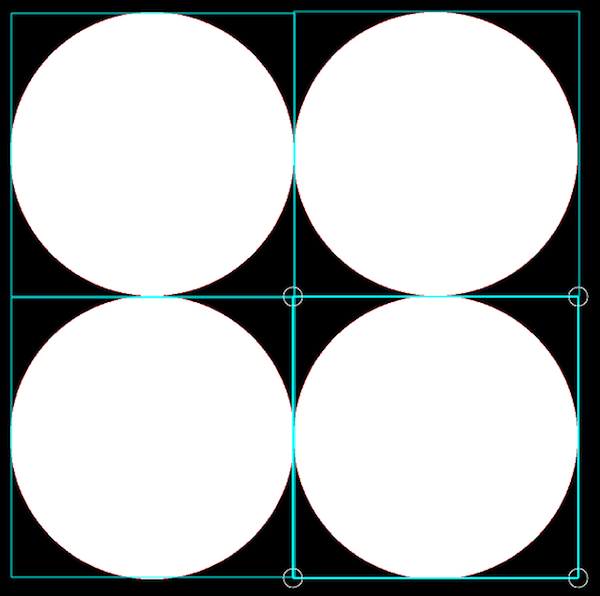

Since the piece evolved into a projection mapping project, I decided to use the openFrameworks add-on ofxPiMapper: I was familiar with it, it let me keep everything contained in openFrameworks, and it allowed me to use circular surfaces. ofxPiMapper was extremely flexible, but working with so many FBO sources presented a few challenges. I decided early on that I wanted each of the physical circles to contain a unique instance of a particle system so as to give it the most unpredictable and natural behaviour. This decision required many FBO sources, one for each circle. I managed to reduce this number, and somewhat simplify the file management in Xcode, by fitting four particle systems into one FBO source. Each of the main systems had its own FBO source, and the stepping stones were handled by a number of separate FBO sources, each containing four unique systems.
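Fitting four systems into one FBO source is essentially a layout problem. A minimal sketch, assuming the FBO is split into a 2 by 2 grid with one circular system per quadrant; Rect, Circle, quadrantFor() and circleFor() are hypothetical helpers, not part of ofxPiMapper.

```cpp
#include <cassert>

// Hypothetical rectangle in FBO pixel coordinates.
struct Rect {
    float x, y, w, h;
};

// Hypothetical circle: centre and radius in FBO pixel coordinates.
struct Circle {
    float cx, cy, r;
};

// Sub-rectangle of an fboWidth x fboHeight FBO assigned to `slot` (0-3),
// ordered left-to-right, top-to-bottom.
Rect quadrantFor(int slot, float fboWidth, float fboHeight) {
    assert(slot >= 0 && slot < 4);
    const float w = fboWidth / 2.0f;
    const float h = fboHeight / 2.0f;
    return Rect{(slot % 2) * w, (slot / 2) * h, w, h};
}

// Largest circle that fits inside the slot's quadrant; a particle system drawn
// here would be sampled by one circular mapping surface.
Circle circleFor(int slot, float fboWidth, float fboHeight) {
    const Rect q = quadrantFor(slot, fboWidth, fboHeight);
    const float r = (q.w < q.h ? q.w : q.h) / 2.0f;
    return Circle{q.x + q.w / 2.0f, q.y + q.h / 2.0f, r};
}
```

In openFrameworks, each system could then be drawn inside the FBO source’s draw routine between ofPushMatrix(), ofTranslate(q.x, q.y) and ofPopMatrix(), with each circular surface mapped to its own quadrant of the texture.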

One FBO source handling four circles to project four unique particle systems.

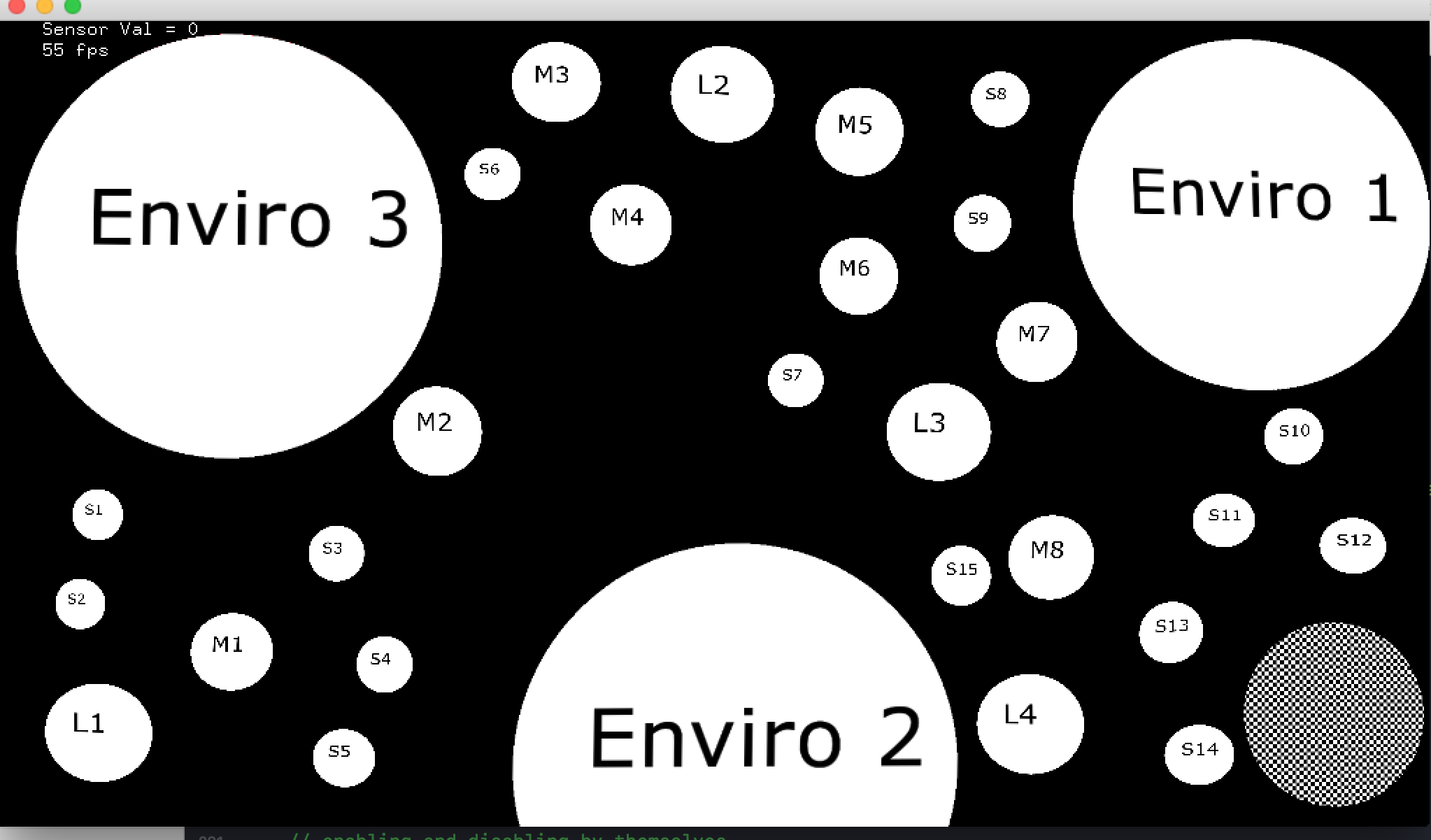

Using ofxPiMapper, the obvious choice for creating the stepping stone sequences would have been the scene manager with a JSON file. However, I decided against this, as it seemed that it would not be flexible or dynamic enough for my idea. I wanted all of the systems to communicate dynamically with each other and with the interactive interface, and I feared that with the scene manager, multiple communication paths would not be able to begin and end concurrently. The scene manager would effectively take over all of the surfaces and play a preprogrammed sequence directing every surface at once. Instead, I kept all of the sequencing within openFrameworks. I designed a system that listens for a communication trigger from one of the systems and then starts a random preprogrammed sequence towards another system. This meant that all environments could start and end communication sequences at the same time, and the interaction could interrupt these to start a signal amidst the currently active sequences. This approach required many separate timers to keep track of different sequences, so I created a timer class from which I could make instances belonging to each environment as well as to the sensor.
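The timer-per-environment idea might look something like the sketch below, assuming the current time is passed in as milliseconds (in openFrameworks, ofGetElapsedTimeMillis() provides this). Timer and SequencePlayer are hypothetical names for illustration, not the classes from the project.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical timer: the caller supplies the current time, which keeps it testable.
class Timer {
public:
    void start(uint64_t nowMs, uint64_t durationMs) {
        startMs_ = nowMs;
        durationMs_ = durationMs;
        running_ = true;
    }
    bool running() const { return running_; }
    // Returns true once when the duration has elapsed, then stops.
    bool fired(uint64_t nowMs) {
        if (running_ && nowMs - startMs_ >= durationMs_) {
            running_ = false;
            return true;
        }
        return false;
    }
private:
    uint64_t startMs_ = 0, durationMs_ = 0;
    bool running_ = false;
};

// Hypothetical player: one instance per environment (and one for the sensor),
// so several communication paths can run at once. Each step lights one stone.
class SequencePlayer {
public:
    explicit SequencePlayer(uint64_t stepMs) : stepMs_(stepMs) {}

    // Calling start() while a path is active restarts it (an interruption).
    void start(const std::vector<int>& stones, uint64_t nowMs) {
        stones_ = stones;
        step_ = 0;
        if (!stones_.empty()) timer_.start(nowMs, stepMs_);
    }

    bool active() const { return timer_.running(); }
    // ID of the stone currently lit, or -1 when no path is playing.
    int currentStone() const { return active() ? stones_[step_] : -1; }

    // Advance to the next stone whenever this player's own timer fires.
    void update(uint64_t nowMs) {
        if (timer_.fired(nowMs) && ++step_ < stones_.size()) {
            timer_.start(nowMs, stepMs_);
        }
    }
private:
    uint64_t stepMs_;
    std::vector<int> stones_;
    std::size_t step_ = 0;
    Timer timer_;
};
```

Because each player owns its own timer, one environment’s path can begin or end without affecting the others, which is the behaviour the scene manager approach seemed unable to provide.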

ofxPiMapper projection mapping layout with all of the surfaces labelled

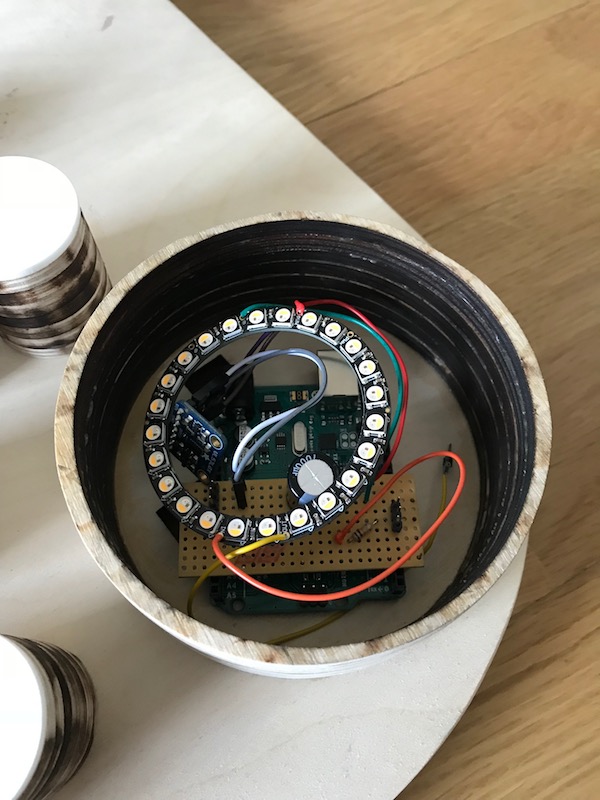

The Arduino hardware and code were kept very simple, consisting of one proximity sensor to detect the hand and one NeoPixel ring that would glow to attract the user. The challenge with this combination was multitasking the Arduino and avoiding ‘delay’ in my code, so that the sensor could take measurements while a NeoPixel sequence was active. Additionally, I needed to bit-shift the value from the proximity sensor in order to send it to openFrameworks as a four-digit number. Both of these problems were solved with the help of very useful tutorials, which are listed in the references below.
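Both techniques can be sketched in plain C++. This assumes an analog sensor read with analogRead() (values 0 to 1023) and a two-byte high/low split sent with Serial.write(); the actual encoding used in the project may differ. encodeReading(), decodeReading() and RingAnimator are hypothetical names, and the non-blocking pattern follows the general millis()-based approach of the Adafruit multitasking guide rather than its exact code.

```cpp
#include <cassert>
#include <cstdint>

// Sender side (Arduino): split a reading of up to 16 bits into two bytes,
// because Serial.write() sends a single byte at a time.
void encodeReading(uint16_t value, uint8_t out[2]) {
    out[0] = static_cast<uint8_t>(value >> 8);    // high byte
    out[1] = static_cast<uint8_t>(value & 0xFF);  // low byte
}

// Receiver side (openFrameworks): shift the high byte back up and OR in the low byte.
uint16_t decodeReading(const uint8_t in[2]) {
    return static_cast<uint16_t>((in[0] << 8) | in[1]);
}

// Non-blocking animation: instead of calling delay(), loop() checks whether
// enough time has passed and only then advances the NeoPixel pattern, leaving
// the rest of loop() free to read the sensor. `nowMs` would come from millis().
class RingAnimator {
public:
    explicit RingAnimator(uint32_t intervalMs) : intervalMs_(intervalMs) {}

    // Returns true when the pattern advanced and the ring should be redrawn.
    // Unsigned subtraction keeps this correct when millis() rolls over.
    bool update(uint32_t nowMs) {
        if (nowMs - lastUpdateMs_ < intervalMs_) return false;
        lastUpdateMs_ = nowMs;
        ++step_;
        return true;
    }
    uint8_t step() const { return step_; }

private:
    uint32_t intervalMs_;
    uint32_t lastUpdateMs_ = 0;
    uint8_t step_ = 0;
};
```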

Arduino along with proximity sensor and neopixel ring hidden in its housing

On a purely organisational level, the number of particle systems and FBO sources I was adding meant that I needed a solution for all the code I had repeated many times throughout the project. This required learning a great deal about inheritance and polymorphism in C++. I was able to reduce what would have been a lot of repeated and confusing code to code built on base classes and derived classes. This also allowed me to fix bugs much more efficiently, as I only needed to fix them in one place rather than in every copy of the repeated code.
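A minimal sketch of that structure, assuming shared behaviour lives in an abstract base class and each environment overrides only its own rules. ParticleSystem, CurrentSystem, StoneSystem and applyRules() are hypothetical names, not the project’s classes.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <vector>

// Hypothetical base class: storage and the update loop are written once here,
// so a bug fixed in update() is fixed for every system.
class ParticleSystem {
public:
    virtual ~ParticleSystem() = default;
    virtual std::string name() const = 0;

    void addParticle(float px, float py) { particles_.push_back({px, py, 0.0f, 0.0f}); }
    float xOf(std::size_t i) const { return particles_[i].x; }

    void update(float dt) {
        for (Particle& p : particles_) {
            applyRules(p, dt);  // environment-specific behaviour
            p.x += p.vx * dt;   // shared integration step
            p.y += p.vy * dt;
        }
    }

protected:
    struct Particle { float x, y, vx, vy; };
    // Each derived environment supplies the rules for its inhabitants.
    virtual void applyRules(Particle& p, float dt) = 0;

private:
    std::vector<Particle> particles_;
};

// Hypothetical environment whose particles accelerate steadily to the right.
class CurrentSystem : public ParticleSystem {
public:
    std::string name() const override { return "current"; }
protected:
    void applyRules(Particle& p, float dt) override { p.vx += 2.0f * dt; }
};

// Hypothetical stepping-stone system whose particles are held in place.
class StoneSystem : public ParticleSystem {
public:
    std::string name() const override { return "stone"; }
protected:
    void applyRules(Particle& p, float) override { p.vx = 0.0f; p.vy = 0.0f; }
};
```

All systems can then be stored in one std::vector<std::unique_ptr<ParticleSystem>> and updated in a single loop, regardless of which environment each one represents.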

Although I initially wanted to explore shaders in this project, I decided instead to use openFrameworks add-ons that could recreate some of those qualities. I used two add-ons, ofxBlur and ofxFboBlur, which worked really well together to give the animations a sense of depth. One of these blurs made the colours more vibrant and bright, and when layered with the other add-on, it created a really pleasing trail effect. I also linked the proximity sensor to the depth of this blur to create a visual connection between the position of the hand and the system that the user was sending a signal to. This value had to be smoothed to remove unnatural jumps, so that the blur looked responsive yet organic.
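Smoothing a jumpy sensor value can be sketched with a one-pole (exponential) smoother. This is one common form and not necessarily the class from the referenced tutorial; Smoother and blurFromReading() are hypothetical names, and the 0 to 1023 input range is an assumption.

```cpp
#include <cassert>

// Hypothetical one-pole smoother: each call moves the output a fixed fraction
// of the way towards the latest raw value, so sudden jumps in the reading
// become gradual changes in blur depth.
class Smoother {
public:
    // `amount` in (0, 1]: smaller values respond more slowly and more smoothly.
    explicit Smoother(float amount, float initial = 0.0f)
        : amount_(amount), value_(initial) {
        assert(amount > 0.0f && amount <= 1.0f);
    }
    float process(float target) {
        value_ += (target - value_) * amount_;
        return value_;
    }
    float value() const { return value_; }
private:
    float amount_;
    float value_;
};

// Hypothetical linear mapping from a clamped sensor reading to a blur amount.
float blurFromReading(float reading, float maxReading, float maxBlur) {
    if (reading < 0.0f) reading = 0.0f;
    if (reading > maxReading) reading = maxReading;
    return reading / maxReading * maxBlur;
}
```

In openFrameworks, ofMap() could replace the mapping helper; calling process() once per frame gives a response that still follows the hand but never jumps abruptly.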

The fabrication of the physical piece was time-consuming, but mostly a smooth process. I laser cut many wooden discs of different sizes and stacked them to gain height. The top layer was made of white acrylic in order to catch the light from the projector. My original design for the light interface had to be reworked so that the holes formed a hand, making the interaction requirements more obvious to the audience.

Early Fusion 360 sketch of the fabrication plan

Near-complete fabrication (with initial light interface design)

Self evaluation

Overall, I am very pleased with the outcome of the project. I think I achieved what I set out to do. Using projection mapping together with the physical fabrication was a really important part of the piece and gave the projections a certain life which would have been lost on a screen. It really felt as though the surfaces contained the lifeforms rather than merely displaying them.

I feel that the interaction was not as satisfying for the audience as it could have been. While the addition of the hand shape over the light interface made the interactive element more obvious, many people’s intuition still told them that all of the surfaces should be interactive. Seeing viewers wave their hands over the projection surfaces, expecting to directly affect the particles, was an instant signal to me that the interaction had partially failed. I realised that I could have been far more successful in this if I had based the project around a Kinect depth camera from the beginning, detecting hands over the projection surfaces. The work was built around the concept of a self-sufficient system, where the viewer may (or may not) seed the process from a point outside, both physically and technically separated from all of the environments. I resisted a more direct interactive approach, and in this sense the conceptual layer of the work somewhat restricted the technical layer and, consequently, the quality of the interaction.

The logic of the program could also have been developed much further. Having all of the environments so self-contained within their circles made the program behave far more predictably than I had intended. One way to change this would have been to allow the particles from each system to travel outside their home system, along the stepping stones, and invade another system. This would have been more difficult to program, but it would have resulted in a more interesting living system with the potential to evolve rather than stagnate. This addition could also have made the communication paths more obvious to the audience, as at times these were difficult to follow in the final presentation of the work.

Future development

There are many potential directions for developing the piece further, many of which are based on my reflections over the course of the exhibition. The project would really benefit from advances in the logic and behaviour of the systems. I think the piece could be vastly more interesting if it used genetic algorithms and had the potential to evolve over time, with the systems intermingling and coexisting in close quarters. I feel that this could create a more captivating ‘living system’.

The code could benefit from further refactoring using polymorphism and inheritance. I intend to rewrite the code that handles the communication sequences of the stepping stones. I will attempt to reduce all of the sequence code to one function that runs a for loop over any sequence, together with a 2D array that holds all of the possible preprogrammed sequences. Such changes should make the code much more readable for others, as well as making the project much easier to develop further in all other areas.
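The planned refactor might look like the sketch below, assuming each row of a fixed-size 2D array lists stepping-stone IDs and -1 pads shorter rows. The table contents, kSequences, pathFor() and randomSequence() are hypothetical placeholders, not the project’s real sequences.

```cpp
#include <cassert>
#include <random>
#include <vector>

// Hypothetical table of preprogrammed paths between environments;
// each row lists stepping-stone IDs in order, and -1 pads shorter rows.
constexpr int kMaxSteps = 5;
constexpr int kNumSequences = 3;
constexpr int kSequences[kNumSequences][kMaxSteps] = {
    {0, 1, 2, 3, -1},
    {4, 5, 6, -1, -1},
    {7, 8, 9, 10, 11},
};

// One function replaces many hand-written sequence functions: a single for
// loop walks any row of the table until it reaches the padding value.
std::vector<int> pathFor(int sequence) {
    assert(sequence >= 0 && sequence < kNumSequences);
    std::vector<int> path;
    for (int i = 0; i < kMaxSteps && kSequences[sequence][i] != -1; ++i) {
        path.push_back(kSequences[sequence][i]);
    }
    return path;
}

// Pick one preprogrammed path at random, uniformly.
int randomSequence(std::mt19937& rng) {
    std::uniform_int_distribution<int> dist(0, kNumSequences - 1);
    return dist(rng);
}
```

Adding a new path then means adding a row to the table rather than writing another function.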

Reworking the interaction around a Kinect could make it much more intuitive and satisfying for the user, while keeping most of the other technical aspects, such as the projection mapping, intact. There is even the possibility of making the work on a larger scale, floor-based instead of table-based, where the audience moves amongst the environments, perhaps walking through them, with the Kinect linking their positions to the behaviour of nearby particles.

I would really like to develop the visual side using shaders, creating more of a blended growth image rather than rendering individual particles. In particular, I would like to research the possibility of keeping the logic I have developed and sending the particles’ coordinates to the GPU, using GLSL to replace or enhance the visuals I developed for the exhibition.
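A first step towards this could be packing the CPU-side particle positions into one contiguous buffer for upload. A minimal sketch, assuming positions in canvas pixels normalised to 0 to 1; Particle and packPositions() are hypothetical names, and the upload itself (for example via an ofVbo or a float ofTexture) is left out.

```cpp
#include <cassert>
#include <vector>

// Hypothetical particle type holding a position in canvas pixels.
struct Particle {
    float x, y;
};

// Pack positions into one interleaved float buffer (x0, y0, x1, y1, ...),
// normalised by the canvas size, ready to be uploaded so a GLSL shader can
// draw from the particles' coordinates.
std::vector<float> packPositions(const std::vector<Particle>& particles,
                                 float canvasWidth, float canvasHeight) {
    assert(canvasWidth > 0.0f && canvasHeight > 0.0f);
    std::vector<float> buffer;
    buffer.reserve(particles.size() * 2);
    for (const Particle& p : particles) {
        buffer.push_back(p.x / canvasWidth);
        buffer.push_back(p.y / canvasHeight);
    }
    return buffer;
}
```

Keeping the simulation on the CPU and only the rendering on the GPU would preserve the existing behavioural logic while allowing blended, shader-based visuals.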

References

openFrameworks source code

https://github.com/dannyZyg/MA-CompArts-Final-Project

Arduino source code

https://github.com/dannyZyg/MA-CompArts-Final-Project-Arduino-Code

ofxPiMapper add-on - Krisjanis Rijnieks

https://ofxpimapper.com/

Binned particle system - Kyle McDonald

https://github.com/kylemcdonald/openFrameworksDemos/tree/master/BinnedParticleSystem/src

openFrameworks forum discussion of binned system

https://forum.openframeworks.cc/t/quadtree-particle-system/2860

Tutorial video for creating a parameter smoother class - openFrameworks Audio Programming Tutorials

https://www.youtube.com/watch?v=BdJRSqgEqPQ

Programming Interactivity - Joshua Noble

http://shop.oreilly.com/product/9780596154158.do

Multi-tasking the Arduino - Part 3 - Unleashing the power of the NeoPixel! - Bill Earl

https://learn.adafruit.com/multi-tasking-the-arduino-part-3/overview

Nature, Technology and Art: The Emergence of a New Relationship? - Ursula Huws

https://muse.jhu.edu/article/608229/pdf

Ethics, Ecology, and the Future: Art and Design Face the Anthropocene - Kayla Anderson

https://muse.jhu.edu/article/587785/pdf

Emergence: The Connected Lives of Ants, Brains, Cities, and Software - Steven Johnson

https://www.penguin.co.uk/books/25647/emergence/9780140287752/