Movement at the Intersection of Georgetown Road and Southgate Drive, Tilton, Illinois

This project explores movement across a specific intersection in a small town in Illinois, collecting elements captured by a live webcam feed over the course of several days and displaying them in an interactive application.

produced by: Jonas Grünwald

Concept and background research

The initial idea for collecting movement on traffic intersections was based around my London accommodation overlooking a busy intersection, and my interest in capturing and sorting the elements that move across it on a daily basis.

Another aspect is the idea of the window overlooking and framing this space, from the view of my apartment, and the way the vehicles and people moving through this frame are visible for a small moment and then lost to the city again, a moment that I was looking to preserve and further explore.

Since I was unexpectedly unable to return to London, where I had planned to set up a camera pointing out of my window at the intersection, I instead explored a variation of the project. It replaces the framing of the window with the similar idea of a live traffic webcam feed, which also offers a window of sorts onto an intersection in Tilton, Illinois, USA, a place I have never visited.

The "Village of Tilton - Traffic Camera" webcam feed appealed to me for two reasons: the diverse range and quantity of entities moving across it every day, which I thought would be rewarding to capture, and its extremely forgettable small-town USA entropy aesthetic. Over the course of the project I collected some secondary research about the town, mainly from internet sources, and used some of it in the project video.

Initially, my aim was to create printed matter using the collected elements in an algorithmic layout, and I still plan to do this at a later point. However, as per the requirements of the assignment, I created an interactive element-browser application that allows exploring the collected elements via head motion controls.

The idea here is to create a reference back to the original method of observation, looking at movement through the frame of a window, in this case the window of the computer screen, through the specific use of hands-free head motion controls.

Note: For the purposes of the assignment, the project is very loosely based on the 1954 Alfred Hitchcock thriller “Rear Window”, in which a photographer, confined to his apartment due to a broken leg, observes his neighbours through his window.

Technical

The project is implemented in two separate OpenFrameworks applications.

FeedObserver

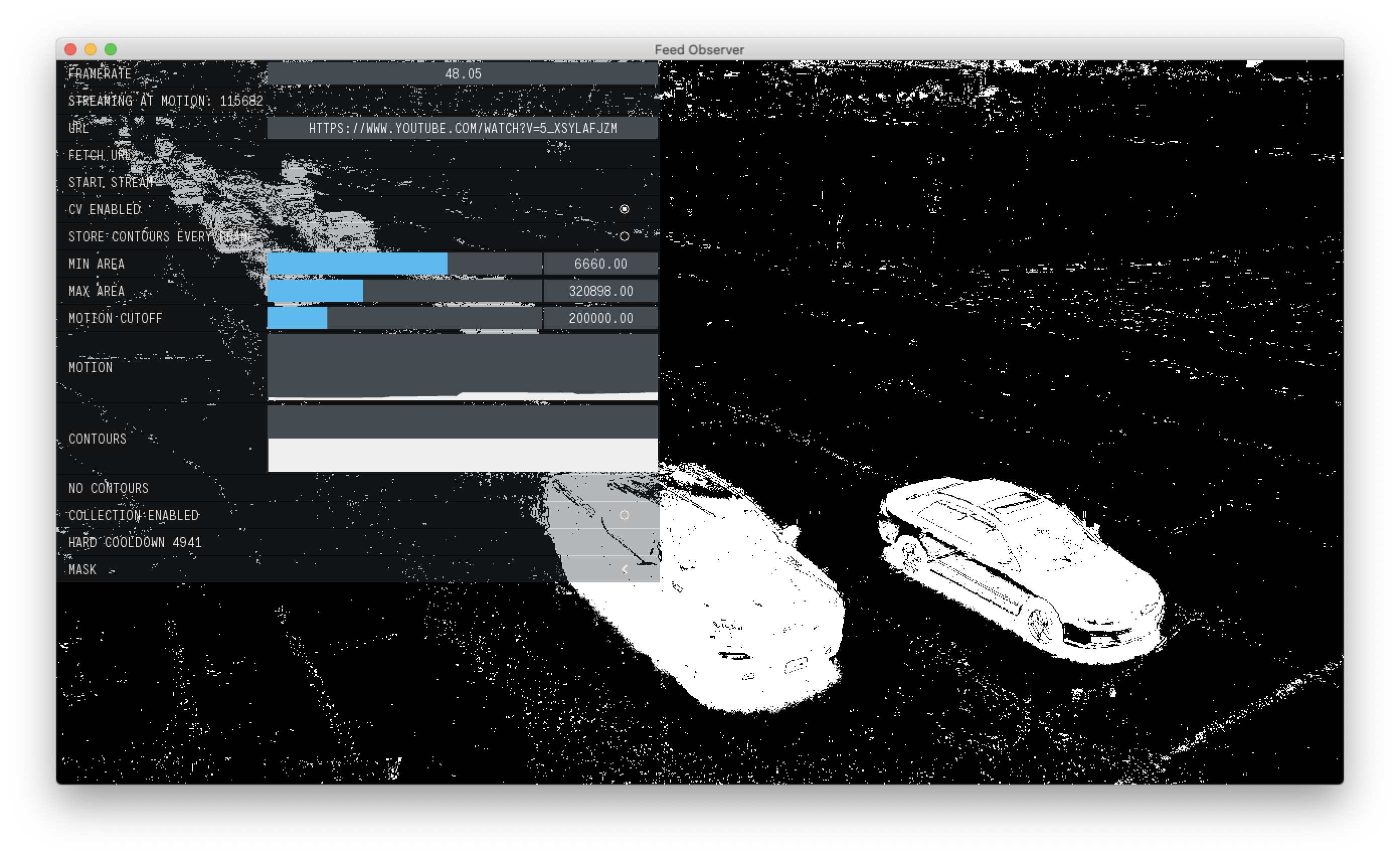

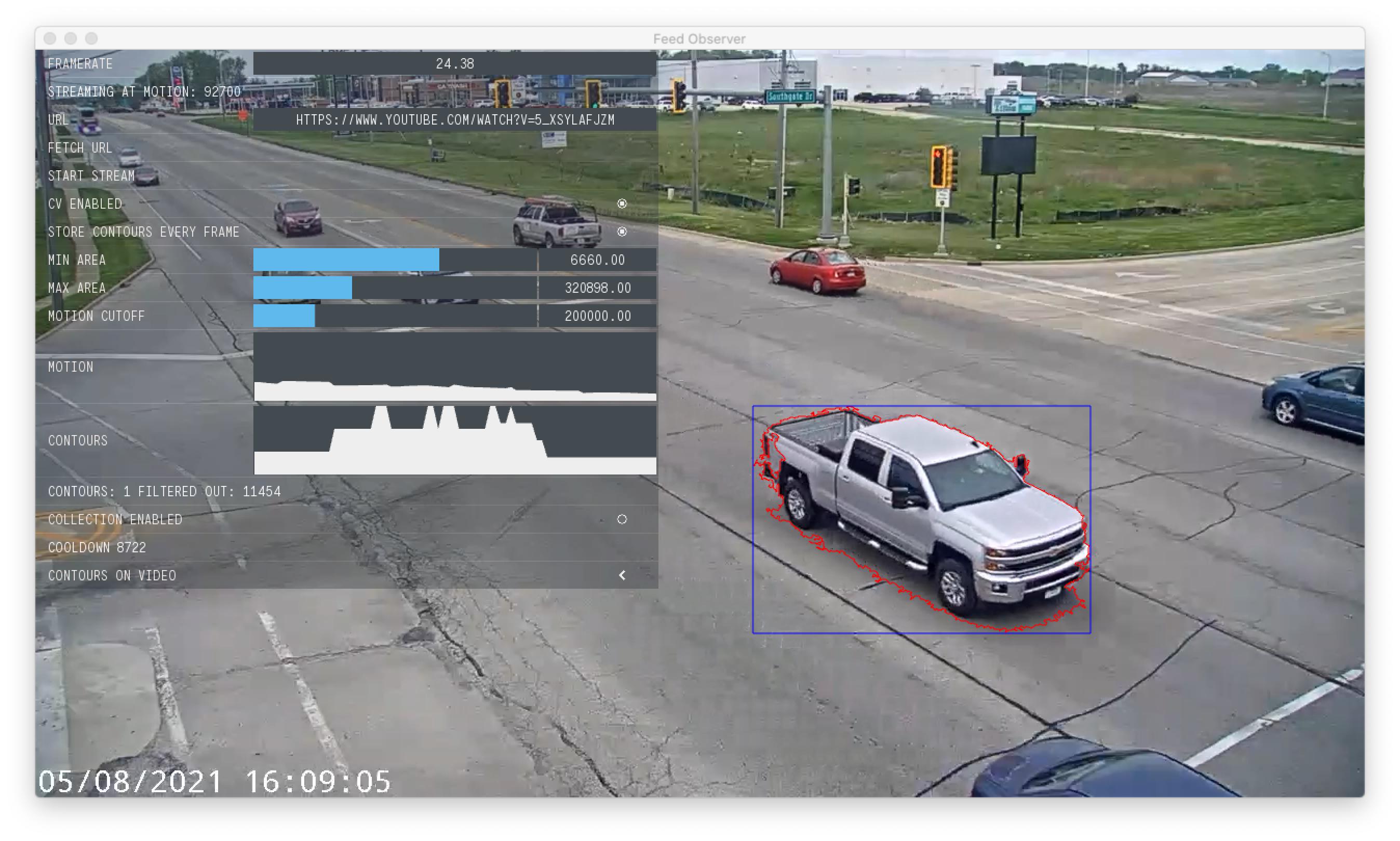

The FeedObserver app is responsible for collecting movement from a webcam feed. From a regular YouTube URL, the application derives an HTTP Live Streaming (HLS) URL. A livestream feed is established from this URL and fed into a series of computer vision methods in order to isolate moving elements.

The KNN background subtraction method provided by OpenCV is used to create a foreground mask, which highlights moving elements in front of the background with white pixels.

An OpenCV contour detection algorithm is then applied to this mask to generate contour vertices for the moving elements. These vertices are converted to ofPolyline objects, and a bounding box is computed for each detected element, creating the pool of exportable elements.

At this stage already, contours are filtered based on size parameters that can be adjusted in real time using the GUI.
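As a rough illustration of this filtering step, the sketch below keeps only bounding boxes whose area falls inside an adjustable range. It assumes that "size" means bounding-box area; the `Box` and `SizeFilter` types and the default thresholds are hypothetical and are not taken from the FeedObserver source.

```cpp
#include <vector>

// Hypothetical stand-in for a detected element's bounding box, in pixels.
struct Box {
    float x, y, w, h;
    float area() const { return w * h; }
};

// Hypothetical filter parameters; in the app these would be bound to GUI sliders.
struct SizeFilter {
    float minArea = 400.0f;    // assumed value: drop tiny noise blobs
    float maxArea = 40000.0f;  // assumed value: drop blobs covering much of the frame
};

// Keep only the boxes whose area lies within [minArea, maxArea].
std::vector<Box> filterBySize(const std::vector<Box>& boxes, const SizeFilter& f) {
    std::vector<Box> kept;
    for (const Box& b : boxes) {
        const float a = b.area();
        if (a >= f.minArea && a <= f.maxArea) kept.push_back(b);
    }
    return kept;
}
```

Because the filter reads its thresholds on every call, changing the slider values takes effect on the next frame without restarting the detection.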

When an element is to be exported, an alpha mask is generated from the element's ofPolyline. Using this mask, a transparent PNG cutout of the element is created, cropped to the element's bounding box, and stored to disk. This is the base loop of the element collection process.
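The cutout step can be sketched without any framework code. The example below is a simplified stand-in, not the app's implementation: it uses a plain RGBA buffer instead of openFrameworks image types, a hand-written even-odd point-in-polygon test instead of a rendered ofPolyline mask, and it stops before PNG encoding. The `Image`, `Point`, `insidePolygon` and `cutout` names are all hypothetical.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

struct Point { float x, y; };

// Minimal RGBA image, 4 bytes per pixel, row-major (hypothetical helper type).
struct Image {
    int width = 0, height = 0;
    std::vector<std::uint8_t> rgba;
    Image(int w, int h)
        : width(w), height(h), rgba(static_cast<std::size_t>(w) * h * 4, 0) {}
};

// Even-odd rule: cast a ray from (px, py) towards +x and toggle on each edge crossing.
bool insidePolygon(const std::vector<Point>& poly, float px, float py) {
    if (poly.size() < 3) return false;
    bool inside = false;
    for (std::size_t i = 0, j = poly.size() - 1; i < poly.size(); j = i++) {
        const Point& a = poly[i];
        const Point& b = poly[j];
        const bool crosses = (a.y > py) != (b.y > py);
        if (crosses && px < (b.x - a.x) * (py - a.y) / (b.y - a.y) + a.x) {
            inside = !inside;
        }
    }
    return inside;
}

// Copy the w x h region at (x0, y0) out of `frame`. Pixels outside the contour
// keep their colour but get alpha 0, so they are transparent in the cutout.
Image cutout(const Image& frame, const std::vector<Point>& contour,
             int x0, int y0, int w, int h) {
    Image out(w, h);
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            const int sx = x0 + x, sy = y0 + y;
            if (sx < 0 || sy < 0 || sx >= frame.width || sy >= frame.height) continue;
            const std::size_t src = (static_cast<std::size_t>(sy) * frame.width + sx) * 4;
            const std::size_t dst = (static_cast<std::size_t>(y) * w + x) * 4;
            for (int c = 0; c < 3; ++c) out.rgba[dst + c] = frame.rgba[src + c];
            // Test the pixel centre against the contour.
            out.rgba[dst + 3] = insidePolygon(contour, sx + 0.5f, sy + 0.5f) ? 255 : 0;
        }
    }
    return out;
}
```

Cropping to the bounding box before masking keeps each stored file small, since only the pixels around the element are ever written.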

A cooldown system ensures that the collected elements are varied and of high quality. This particular webcam feed occasionally adjusts to changing lighting conditions, which causes the background subtraction algorithm to register change across the entire picture until it has adapted. The cooldown is therefore triggered whenever a large amount of change takes place in the picture, halting collection until the background subtractor has learned the new background.

The cooldown is also triggered to stop collection while the feed lags, which it does from time to time. Additionally, the cooldown is triggered each time an element is collected, in order to avoid collecting too many duplicates of the same element and to space out collection over a longer timeframe.
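The three triggers described above can be combined into a single gate. The following is a minimal sketch under stated assumptions: the amount of change is measured as the fraction of white pixels in the foreground mask, lag is detected as a gap between frame timestamps, and all thresholds and durations in `CollectorSettings` are invented for illustration. None of the names come from the FeedObserver source.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Fraction of foreground (non-zero) pixels in a single-channel mask.
double foregroundFraction(const std::vector<std::uint8_t>& mask) {
    if (mask.empty()) return 0.0;
    std::size_t white = 0;
    for (std::uint8_t v : mask) {
        if (v != 0) ++white;
    }
    return static_cast<double>(white) / static_cast<double>(mask.size());
}

class Cooldown {
public:
    // Start or extend the cooldown; a longer pending cooldown is never shortened.
    void trigger(double now, double seconds) {
        const double until = now + seconds;
        if (until > readyAt_) readyAt_ = until;
    }
    bool active(double now) const { return now < readyAt_; }

private:
    double readyAt_ = 0.0;
};

// Hypothetical thresholds and durations (seconds); real values would be tuned per feed.
struct CollectorSettings {
    double maxChangeFraction = 0.4;  // above this, assume a lighting adjustment
    double maxFrameGap = 2.0;        // above this, assume the feed is lagging
    double changeCooldown = 10.0;
    double lagCooldown = 5.0;
    double exportCooldown = 3.0;
};

// Returns true when an element may be exported for the current frame.
bool mayExport(Cooldown& cd, const CollectorSettings& s,
               double now, double lastFrameTime, double changeFraction) {
    if (changeFraction > s.maxChangeFraction) cd.trigger(now, s.changeCooldown);
    if (now - lastFrameTime > s.maxFrameGap) cd.trigger(now, s.lagCooldown);
    if (cd.active(now)) return false;
    cd.trigger(now, s.exportCooldown);  // space out consecutive exports
    return true;
}
```

Routing every trigger through one timer keeps the collection loop simple: it only has to ask whether exporting is allowed right now, whatever the reason for the pause.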

To facilitate the collection, the FeedObserver app is set up to run unattended on a Linux Mint machine. I wrote a systemd service to manage the application, and the service is started and stopped by two cron jobs, so that the program runs only during daytime hours in Illinois.

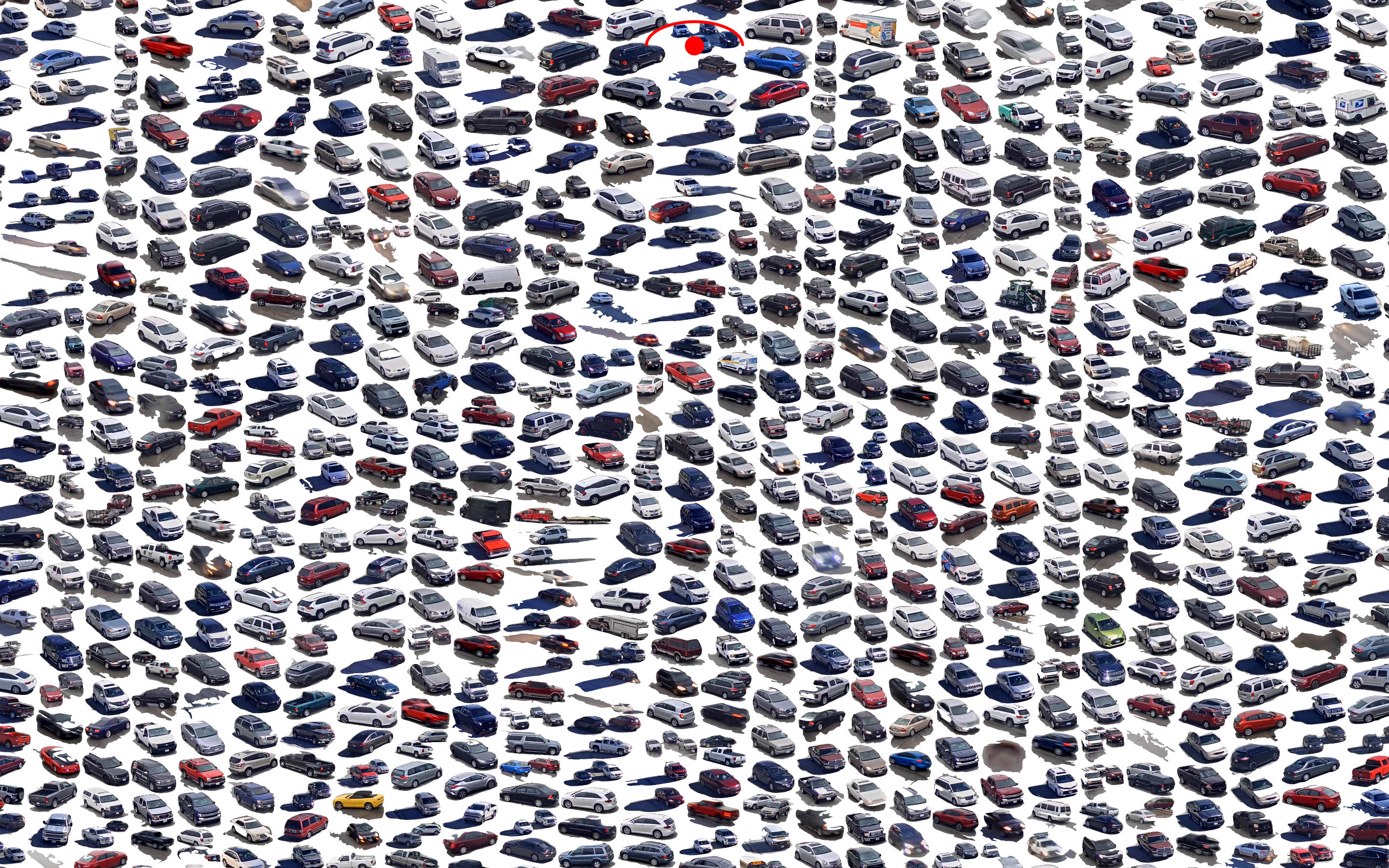

After several days of running, about 10,000 elements had been collected.

ElementBrowser

The main challenge with the ElementBrowser application was the layout system. Since the elements all vary in size, a simple fixed grid layout would not have achieved the visual I had in mind. Instead, I approached the layout as a 2D bin packing problem, a well-known problem in computer science that is NP-hard: no efficient exact algorithm is known, but several approximation heuristics exist, with varying efficiency.

Over the course of exploring this problem, I initially created an add-on to wrap a shelf packing algorithm developed by Mapbox for use in OpenFrameworks, but then ended up using a different solution instead as I preferred the resulting layout.
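For readers unfamiliar with shelf packing, the idea can be sketched in a few lines. This is a naive illustrative version, not the Mapbox implementation and not the Maximal Rectangles approach used in the final application; the `ShelfPacker` and `Placed` names are hypothetical.

```cpp
#include <algorithm>
#include <vector>

// Position and size of a placed rectangle, in pixels.
struct Placed { int x, y, w, h; };

// Naive shelf packer: fill a row ("shelf") from left to right and open a new
// shelf below once the next rectangle no longer fits the bin width.
class ShelfPacker {
public:
    explicit ShelfPacker(int binWidth) : binWidth_(binWidth) {}

    // Place a w x h rectangle. Returns false if it can never fit the bin width.
    bool insert(int w, int h, Placed& out) {
        if (w <= 0 || h <= 0 || w > binWidth_) return false;
        if (cursorX_ + w > binWidth_) {
            // The current shelf is full: move down by its height and start over.
            cursorY_ += shelfHeight_;
            cursorX_ = 0;
            shelfHeight_ = 0;
        }
        out = {cursorX_, cursorY_, w, h};
        cursorX_ += w;
        shelfHeight_ = std::max(shelfHeight_, h);
        return true;
    }

    // Total height used so far, including the shelf currently being filled.
    int usedHeight() const { return cursorY_ + shelfHeight_; }

private:
    int binWidth_;
    int cursorX_ = 0, cursorY_ = 0, shelfHeight_ = 0;
};
```

A shelf layout wastes the space above every rectangle that is shorter than the tallest one on its shelf. Sorting the input by height before inserting usually reduces that waste, but the rows remain visible in the result.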

The implementation I ended up using is an adaptation of the 'Maximal Rectangles' algorithm, described by Jukka Jylänki in their paper "A Thousand Ways to Pack the Bin".

In order to allow navigation with head movements, I used the ofxFaceTracker2 add-on, which provides a pose estimation of the head position, from which I extracted the rotation component in order to move the viewport.
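One possible way to turn a head rotation into viewport movement is sketched below. It assumes the rotation is available as yaw and pitch angles in degrees and that it steers the pan velocity rather than the position directly; the write-up does not say which mapping the application uses. The dead zone, the speed constant and every name in the block are hypothetical, and no ofxFaceTracker2 calls are shown.

```cpp
#include <cmath>

struct Vec2 { float x, y; };

// Hypothetical tuning values.
struct HeadPanSettings {
    float deadZoneDeg = 3.0f;   // ignore small rotations so the view can rest
    float speedPerDeg = 20.0f;  // pan speed in pixels per second, per degree of rotation
};

// Subtract the dead zone from the angle's magnitude, keeping its sign.
float applyDeadZone(float angleDeg, float deadZoneDeg) {
    const float mag = std::fabs(angleDeg) - deadZoneDeg;
    if (mag <= 0.0f) return 0.0f;
    return angleDeg > 0.0f ? mag : -mag;
}

// Advance the viewport offset by one frame of duration dt (seconds).
// yawDeg turns the head left/right, pitchDeg tilts it up/down.
Vec2 panViewport(Vec2 offset, float yawDeg, float pitchDeg, float dt,
                 const HeadPanSettings& s) {
    offset.x += applyDeadZone(yawDeg, s.deadZoneDeg) * s.speedPerDeg * dt;
    offset.y += applyDeadZone(pitchDeg, s.deadZoneDeg) * s.speedPerDeg * dt;
    return offset;
}
```

Treating the rotation as a velocity lets a small head turn scroll through an arbitrarily large layout, whereas mapping it directly to a position would limit the reachable area to the comfortable range of neck movement.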

Future development

As mentioned, the aim is still to produce large scale prints of the elements.

Additionally, I would like to explore more windows to collect elements from. One idea is to contrast the current collection with a more organic source, such as the movements of insects or animals.

Another point of interest is the actual motion path of each element. Preserving it would require frame-to-frame tracking of elements, which is not currently implemented but which I would like to work on further at some point.

Self evaluation

Despite not being able to implement the project the way I had originally intended, I am very happy with the way it turned out. I was surprised by how organic and pleasant the elements look in the calculated layout grid, and I'm excited to work on this project further.

I am aware that I did not stick very closely to the brief, but I have been wanting to do this project for a while and I'm glad I went with it.

References

Method to retrieve HLS streaming URL from YouTube link using youtube-dl by OpenFrameworks forum user eelke

Bin packing code by GitHub user falki147

based on "A Thousand Ways to Pack the Bin - A Practical Approach to Two-Dimensional Rectangle Bin Packing" by Jukka Jylänki