Headscape

Headscape is an immersive sound installation that uses spatial sonification, presented binaurally over wireless headphones, to help the listener probe both the ‘physical’ and the ‘digital’ space. Physically, the position and orientation of the listener’s body are captured by a Kinect and synchronised with a digital 3D sonic environment made in Unity3D. In this way the listener is ‘inside’ the soundscape instead of listening to it, which aims to create a strong feeling of immersion. The soundscape follows the term ‘transphonia’, defined by Heikki Uimonen as the “mechanical, electroacoustical or digital recording, reproduction and relocating of sounds”.

produced by: Taoran XU

(For binaural listening, please wear headphones while watching the video.)

Concept and background research

Headscape follows on from my individual study of binaural acoustic perception, which I did for the Computational Arts-Based Research module in term 2. In that research I studied how headphones and sound can manipulate perception and the acoustic images in listeners' heads, based on one case: a soundwalk piece by Janet Cardiff.

According to Pierre Couprie, a key aspect of soundscape studies is the sensitisation of citizens to their acoustic surroundings and the educational imperative of assisting in the development of the individual’s listening skills. Soundwalks are one aspect of this: periods of time when one listens with greater attention than usual to one’s sonic environment (i.e. soundscape). Soundwalkers may even let their ears determine the route of the walk, and a soundwalker may at the same time be recording material for further use in Soundscape Composition.

Compared with 3D cinema, a soundwalk piece is a 3D sonic narrative that can only be perceived through headphones. Like a cinema audience, the listener can only experience the piece from a fixed point of view; that is, every listener experiences exactly the same thing. In my piece I instead create a sonic environment that the listener can explore, so every listener comes away with his or her own soundwalk. The listener becomes the soundwalker. The name of the piece, "Headscape", combines 'head' and 'soundscape': it is a soundscape that exists in your head while you wear the headphones, and when you take them off you are back in reality.

How willing are listeners to stay in such a room and explore? What do their acoustic images look like: do they differ a lot, or are they more or less the same? Will they become aware that we are living in a world that has been ‘carpentered’ by technology?

Technical

The piece can be divided into three parts: skeleton tracking, 3D soundscape design and visuals.

• Skeleton Tracking

Skeleton tracking is done in Processing with the third-party library SimpleOpenNI, which gives me the 3D positions and orientations of the skeleton's joints. In Headscape I only use the position of the head and the orientation of the body. Once I have them, I can define a virtual 3D space in Unity: via OSC, I synchronise the position and rotation of Unity's main camera in real time with the position and orientation captured by the Kinect and computed by SimpleOpenNI. In this way, people's movement in the physical space drives the movement of the main camera in the virtual space I built in Unity. Listeners no longer just listen to a soundscape piece; they are immersed in it, and their physical position and orientation shape the final audio they hear through the headphones. The only tricky problem I encountered in this part was decomposing the rotation matrix into rotations around the X, Y and Z axes: Unity expresses rotations as X, Y and Z angles in degrees, whereas SimpleOpenNI only gives me a rotation matrix, stored as a 4×4 array. After some searching online, I managed to decompose the matrix as follows:

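```processing
// atan2() returns radians; degrees() converts to the unit Unity's rotations use
float rotationX = degrees(atan2( matrix[1][2], matrix[2][2]));
float rotationY = degrees(atan2(-matrix[0][2], sqrt(matrix[1][2] * matrix[1][2] + matrix[2][2] * matrix[2][2])));
```

I gave up on the rotation around the Z axis because it was not stable enough.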
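To check that the X and Y formulas above fit together, and to show what the discarded Z angle would look like, here is a small sketch in plain Java (the language Processing is built on). It assumes the matrix holds R = Rz·Ry·Rx stored transposed (row-vector layout), which is the layout under which the formulas above are correct; that convention and the helper names `transposedRotation` and `toEulerDegrees` are hypothetical, not taken from SimpleOpenNI. The sketch builds a matrix from known angles and recovers them.

```java
public class EulerFromMatrix {
    /**
     * Hypothetical test helper: builds R = Rz(z) * Ry(y) * Rx(x) (angles in radians)
     * and returns its transpose, the layout the decomposition below assumes.
     */
    static double[][] transposedRotation(double x, double y, double z) {
        double cx = Math.cos(x), sx = Math.sin(x);
        double cy = Math.cos(y), sy = Math.sin(y);
        double cz = Math.cos(z), sz = Math.sin(z);
        double[][] r = {
            {cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx},
            {sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx},
            {-sy,     cy * sx,                cy * cx}
        };
        double[][] m = new double[3][3];
        for (int i = 0; i < 3; i++) {
            for (int j = 0; j < 3; j++) {
                m[i][j] = r[j][i]; // transpose
            }
        }
        return m;
    }

    /** Returns {rotX, rotY, rotZ} in degrees; valid while |rotY| < 90. */
    static double[] toEulerDegrees(double[][] m) {
        double rotX = Math.atan2(m[1][2], m[2][2]);
        double rotY = Math.atan2(-m[0][2], Math.hypot(m[1][2], m[2][2]));
        double rotZ = Math.atan2(m[0][1], m[0][0]); // the angle the piece discards
        return new double[] {Math.toDegrees(rotX), Math.toDegrees(rotY), Math.toDegrees(rotZ)};
    }

    public static void main(String[] args) {
        double[][] m = transposedRotation(Math.toRadians(20), Math.toRadians(-35), Math.toRadians(60));
        double[] angles = toEulerDegrees(m);
        System.out.println(angles[0] + " " + angles[1] + " " + angles[2]);
    }
}
```

The decomposition degrades as rotY approaches ±90°, where cos(rotY) ≈ 0 (gimbal lock). With Z dropped, as in the piece, the assumed composition reduces to Ry·Rx, which matches the order Unity documents for Euler angles (Z, then X, then Y), provided both sides use the same axis and handedness conventions; with a non-zero Z the two orders differ.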

• 3D Soundscape design

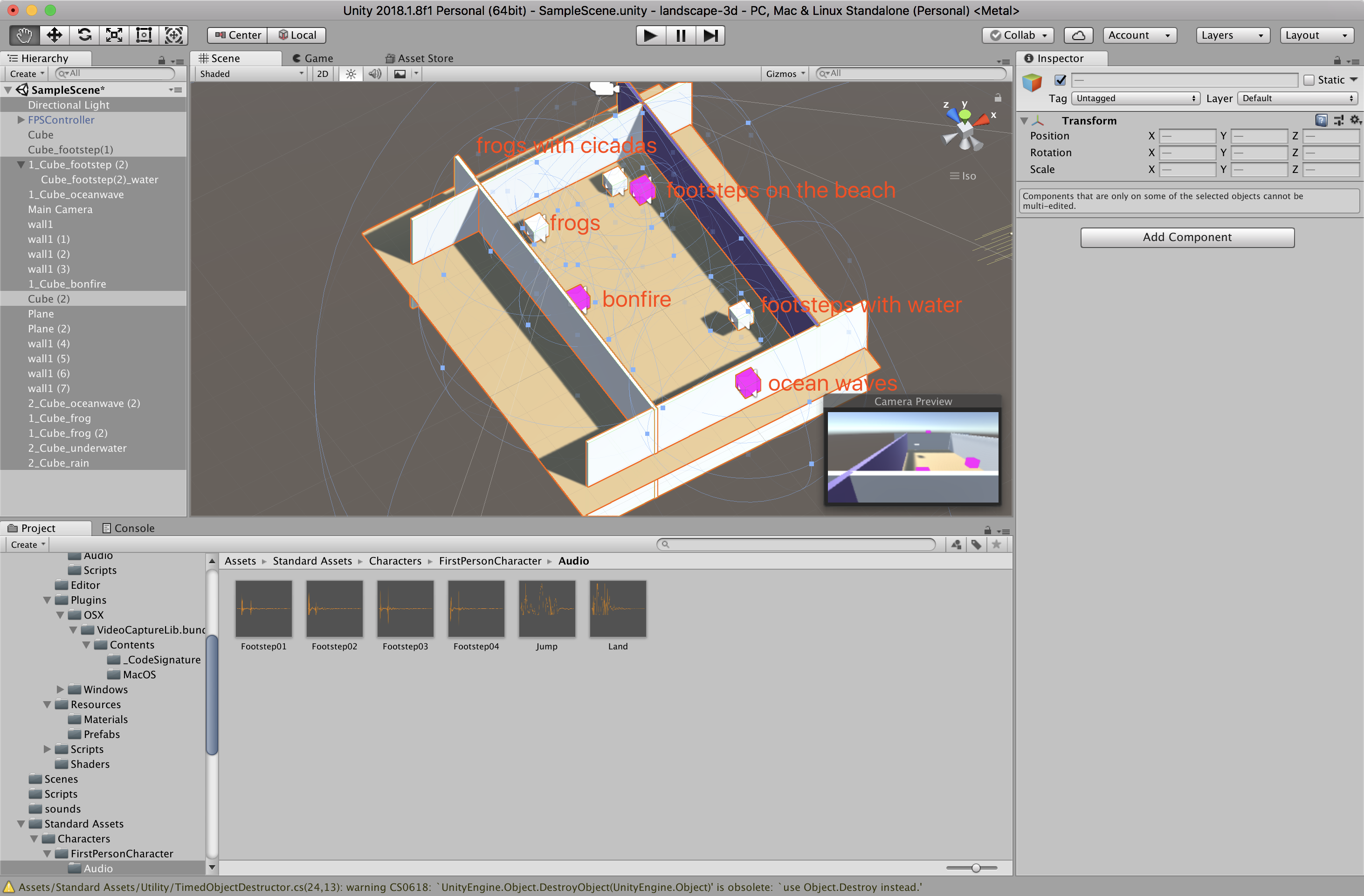

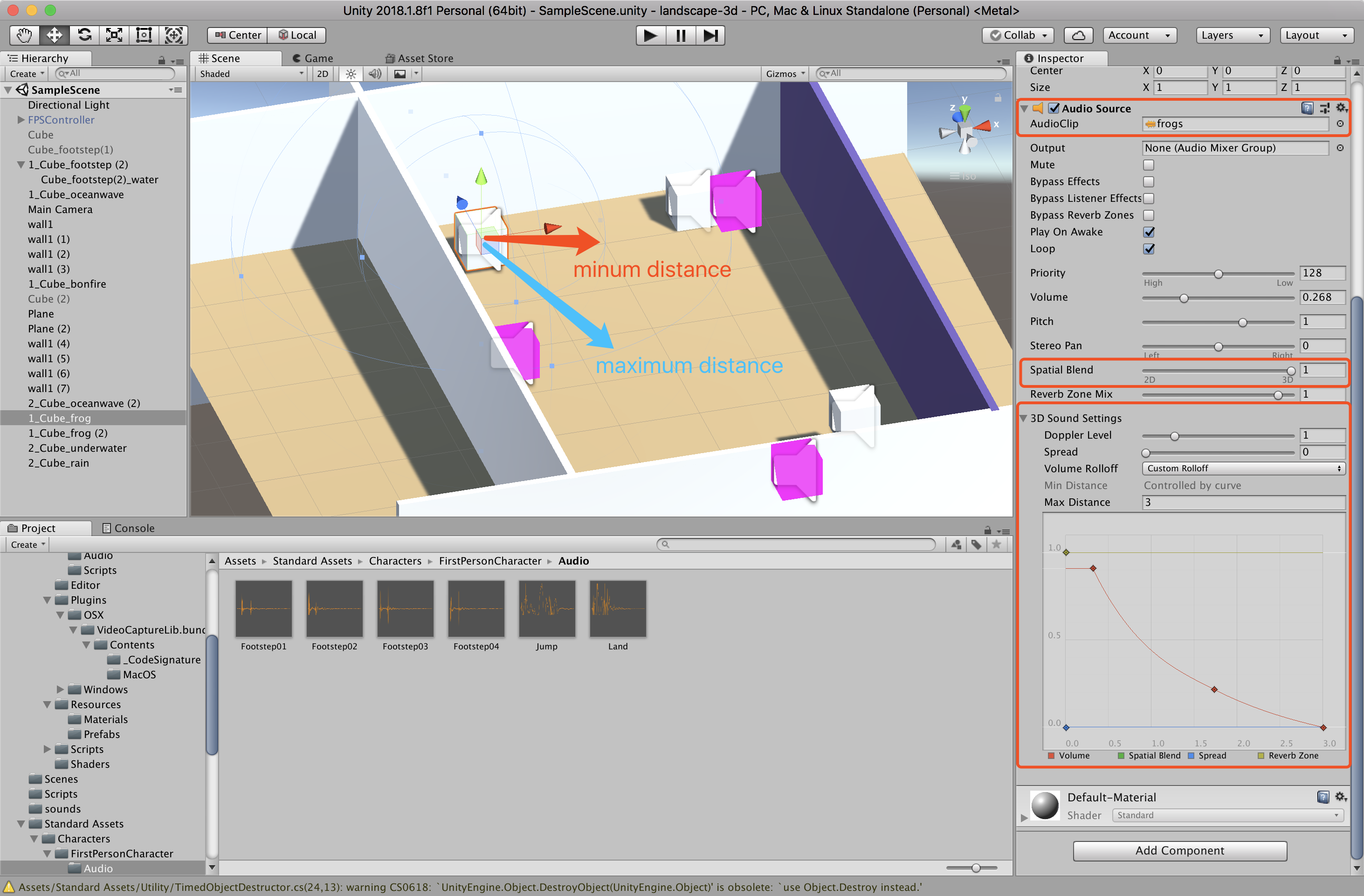

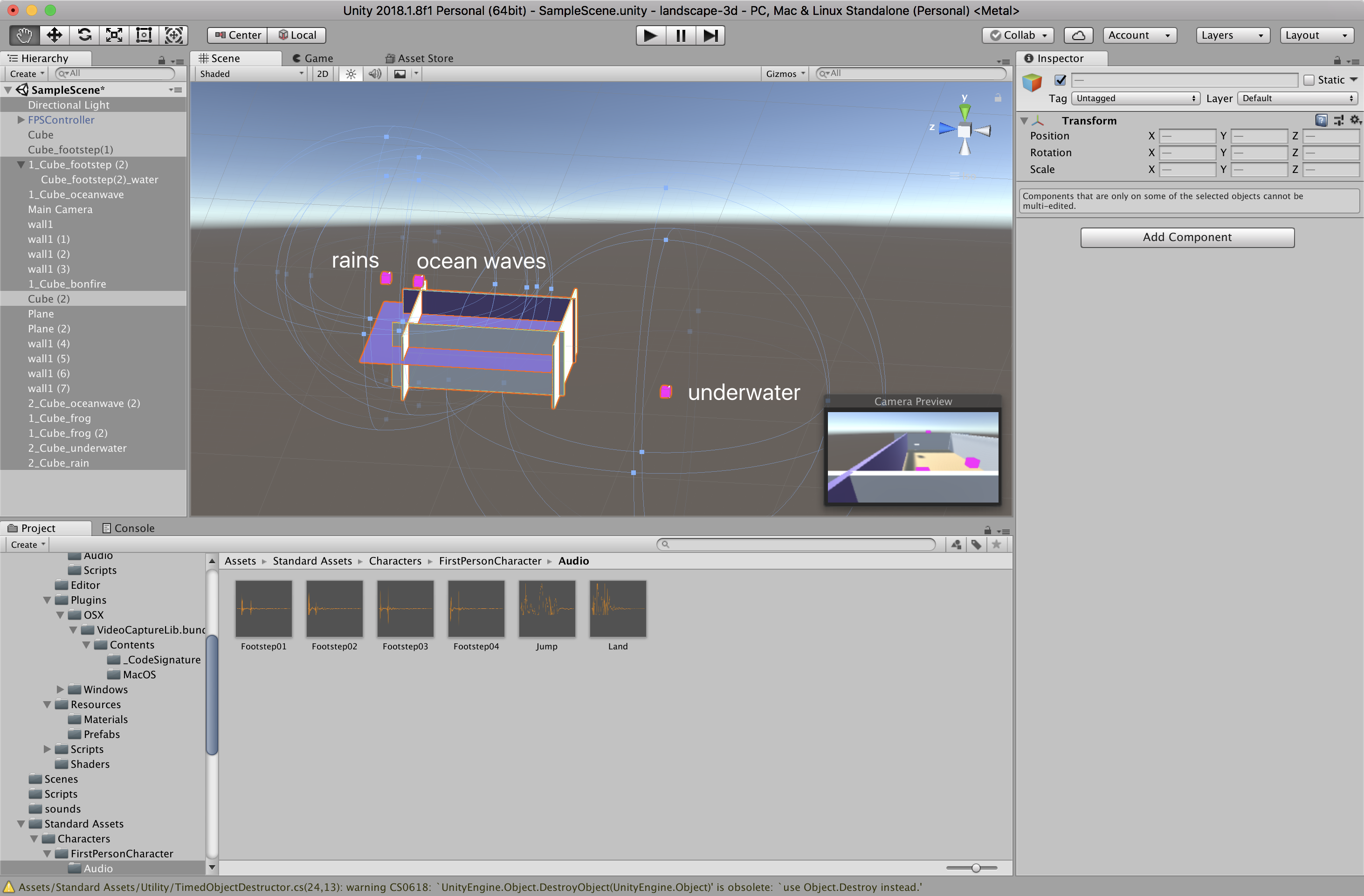

The soundscape is composed in Unity3D because it has built-in HRTF (head-related transfer function) support, which gives me a creative tool for expressing my ideas. Moreover, Unity is a free, cross-platform game engine, so I can export my piece to both PCs and Macs. In Unity a scene consists of game objects, and every game object can carry an audio source with its own 3D audio settings, which makes it easy to build the sonic environment I want. First, I place the game objects in the right positions in the space. Then I attach to each of them a sound from my recording libraries. Finally, I adjust the 3D audio settings to make the soundscape more realistic and immersive.
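Unity does this spatial arithmetic internally, but it may help to see what the listener's tracked position and facing feed into. The sketch below, in plain Java, computes a sound source's distance, its azimuth relative to where the listener is facing, and a simple inverse-distance gain. The floor-plane coordinates, the clockwise-from-+z yaw convention and the `minDistance / distance` rolloff are assumptions for illustration, a generic model rather than Unity's exact implementation; the HRTF filtering itself is not modelled.

```java
public class ListenerRelative {
    /** Distance, relative azimuth and gain of one source as heard by the listener. */
    static final class Relative {
        final double distance;
        final double azimuthDeg; // negative = left, positive = right, 0 = straight ahead
        final double gain;       // 1.0 inside minDistance, then minDistance / distance
        Relative(double distance, double azimuthDeg, double gain) {
            this.distance = distance;
            this.azimuthDeg = azimuthDeg;
            this.gain = gain;
        }
    }

    /**
     * Positions are on the floor plane in metres (x to the right, z forward);
     * yawDeg is the listener's facing, measured clockwise from +z as seen from above.
     */
    static Relative locate(double listenerX, double listenerZ, double yawDeg,
                           double sourceX, double sourceZ, double minDistance) {
        double dx = sourceX - listenerX;
        double dz = sourceZ - listenerZ;
        double distance = Math.hypot(dx, dz);
        double bearingDeg = Math.toDegrees(Math.atan2(dx, dz)); // clockwise from +z
        double azimuth = bearingDeg - yawDeg;
        azimuth = ((azimuth + 180.0) % 360.0 + 360.0) % 360.0 - 180.0; // wrap to [-180, 180)
        double gain = distance <= minDistance ? 1.0 : minDistance / distance;
        return new Relative(distance, azimuth, gain);
    }

    public static void main(String[] args) {
        // Listener at the origin facing +x (yaw 90); the source sits at (0, 2).
        Relative r = locate(0.0, 0.0, 90.0, 0.0, 2.0, 1.0);
        System.out.println(r.distance + " " + r.azimuthDeg + " " + r.gain);
    }
}
```

In the example, turning to face +x puts the source at (0, 2) at an azimuth of -90°, directly on the listener's left, with a gain of 0.5: the kind of change a listener hears simply by turning their body in the installation.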

Mick Grierson proposes a theory of sonic immersion that divides sounds into three categories:

- Voice: fully attracts your attention.

- Symbolic event: attracts your attention only when it happens, like your phone ringing.

- Ambient sound: does not attract your attention, but creates immersion.

In my piece I use only the latter two, because I want my listener to be like a god, observing the environment on his own without interacting with it. I use two footstep recordings to indicate the landscape. At first the listener hears someone walking on sand, which indicates that he is on a beach. Gradually, following the footsteps, the listener hears ocean waves and someone walking in the water, which indicates that he is near the sea. After that, if the listener is curious enough to follow the footsteps and walk a little further forward, he is teleported into scene two, which is under the water. The footsteps act like acoustic shadows of the landscape, indicating the geographical characteristics of the environment. I also use a bonfire recording to indicate that it is evening.
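The move from the beach into scene two can be thought of as a trigger zone on the listener's tracked position. The sketch below, in plain Java, switches scenes once the head passes a forward threshold. The class name, the 1.5 m threshold and the one-way switch are all hypothetical, since the write-up does not say how the teleport is implemented or whether the listener can return.

```java
public class SceneTrigger {
    enum Scene { BEACH, UNDERWATER }

    private Scene current = Scene.BEACH;
    // Hypothetical: forward distance (metres) past which the listener "enters" the water.
    private final double triggerZ;

    SceneTrigger(double triggerZ) {
        this.triggerZ = triggerZ;
    }

    /** Call once per tracking frame with the listener's forward head position. */
    Scene update(double headZ) {
        if (current == Scene.BEACH && headZ > triggerZ) {
            current = Scene.UNDERWATER; // assumed one-way: stepping back does not return to the beach
        }
        return current;
    }

    public static void main(String[] args) {
        SceneTrigger trigger = new SceneTrigger(1.5);
        double[] walk = {0.2, 0.8, 1.4, 1.6, 1.0};
        for (double z : walk) {
            System.out.println(z + " -> " + trigger.update(z));
        }
    }
}
```

A one-way switch keeps the narrative linear; a two-way version would simply switch back when `headZ` drops below the threshold, ideally with a small margin so that tracking noise does not make the scene flicker.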

• Visuals

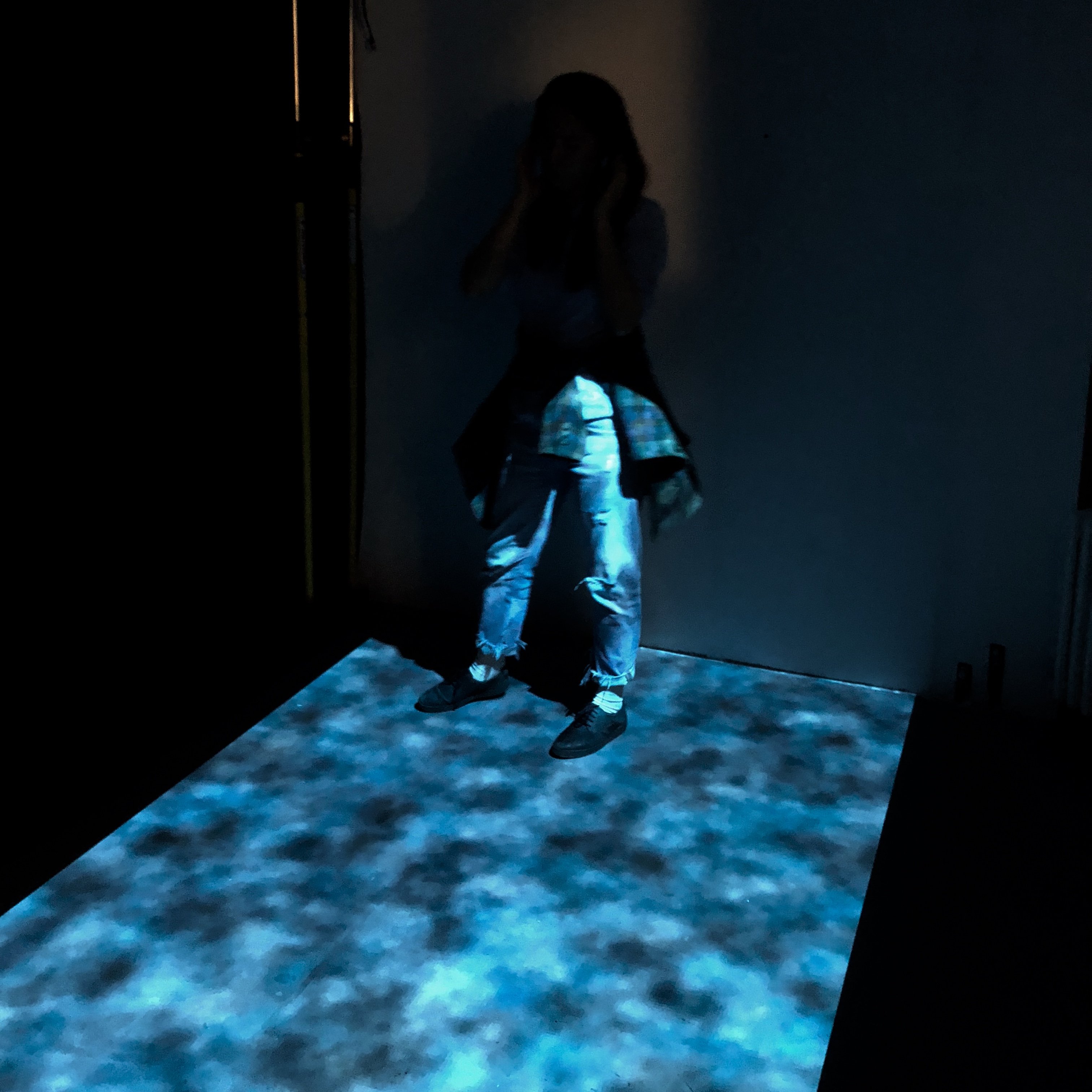

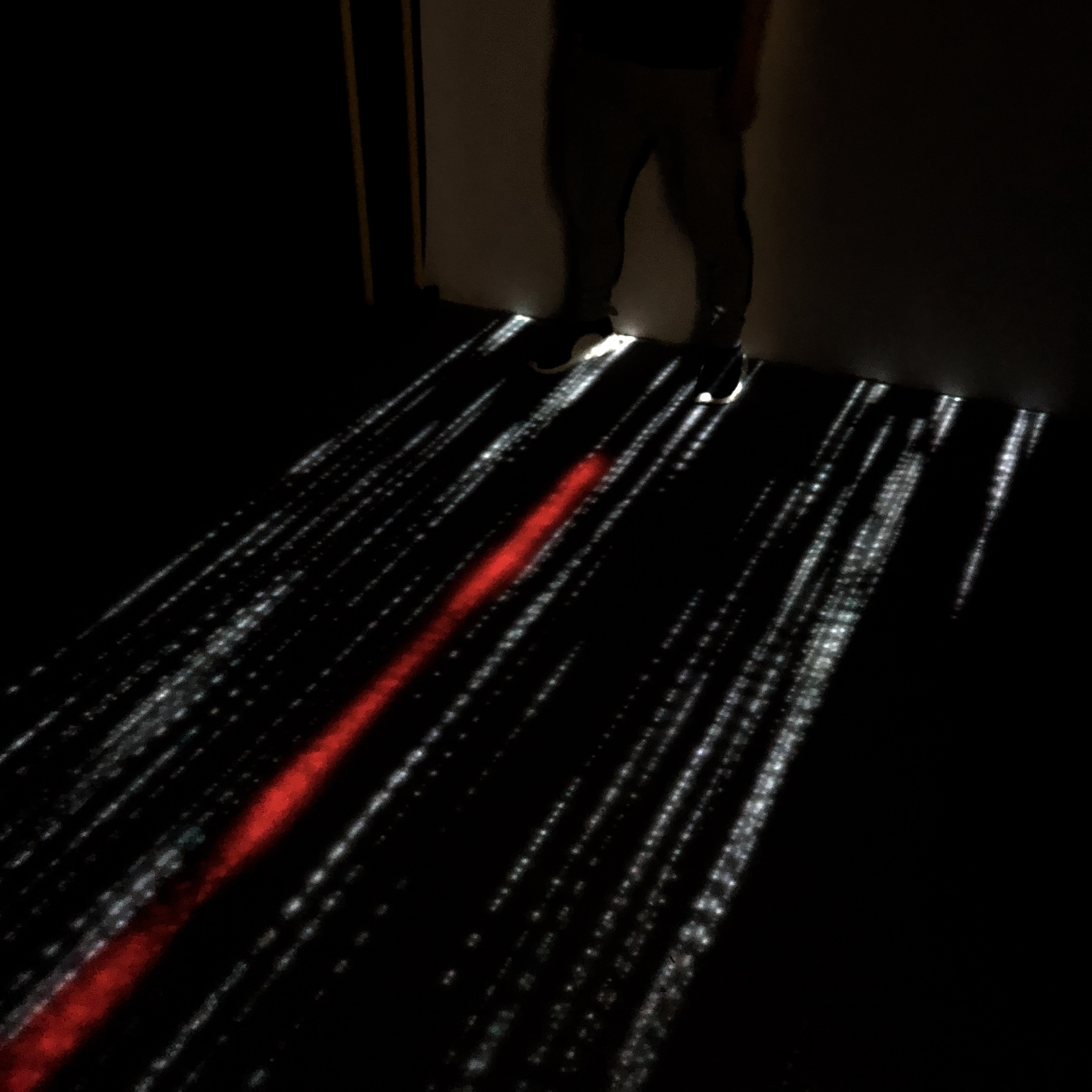

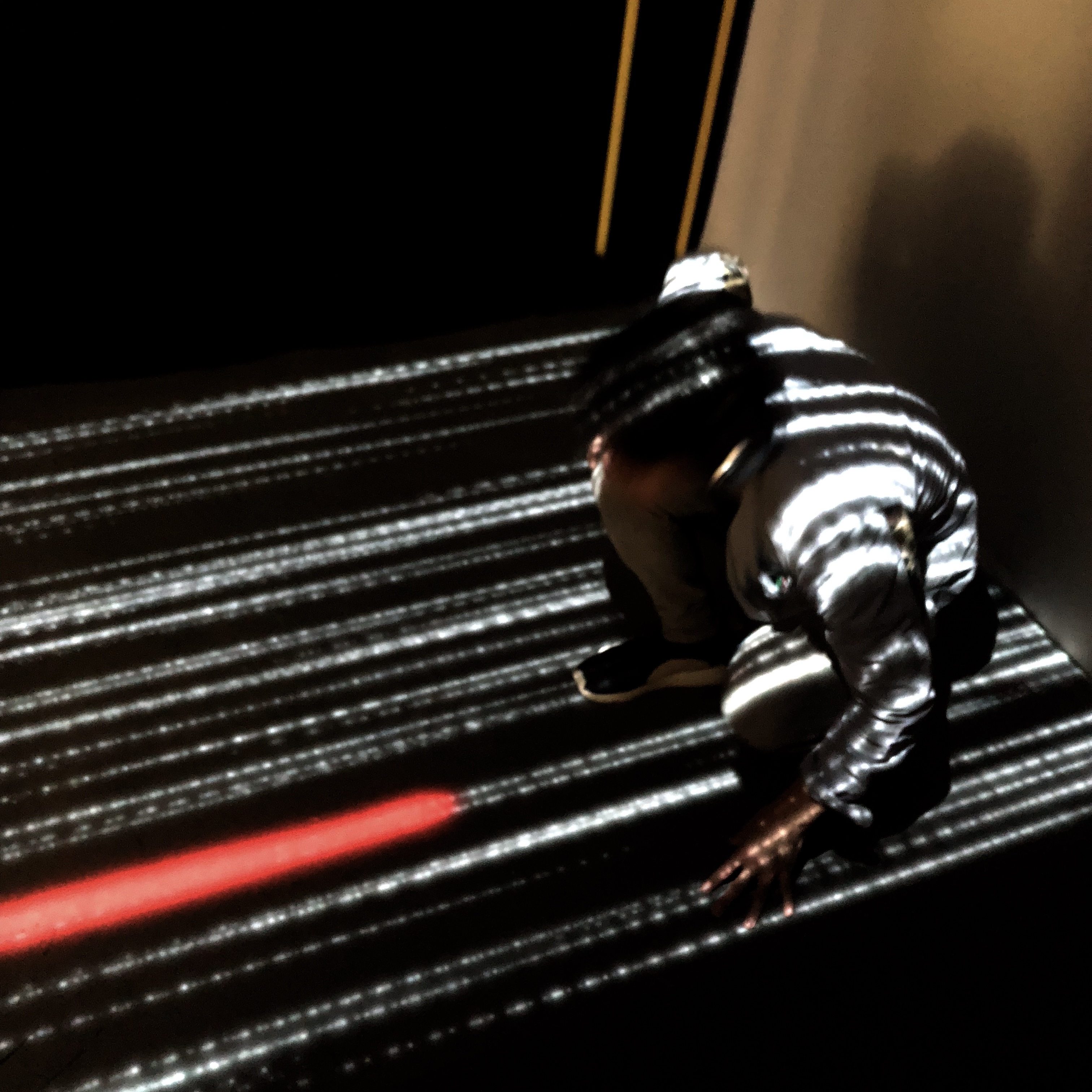

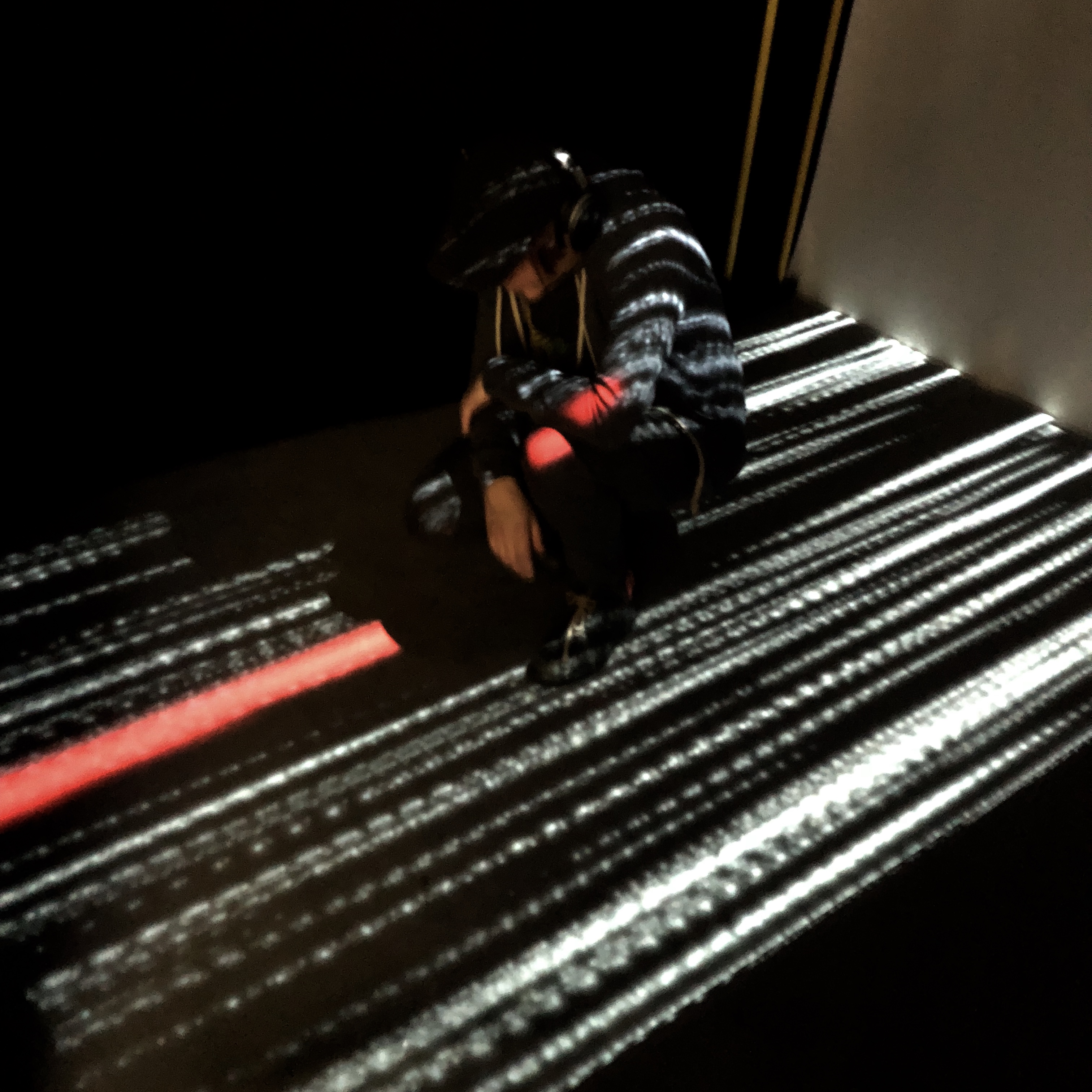

At first I did not want any visuals for my piece, since it is a work of sonic immersion: visuals tend to overpower hearing and make listening less immersive. However, I later realised that without visuals it would be hard to document the piece, and that I did need visuals to mark the interaction zone for my audience, because the space they enter is limited. So I created two abstract patterns, one for each scene. The visuals are also done in Processing.

In scene one there are two particle systems, one white and one red. When the Kinect captures a person, a red particle beam appears in front of him, guiding the listener to walk forward. The white particles are like stars: I want to mimic the mood of that environment, a starry night on which you are walking along the beach. In scene two I use noise pixels to mimic the feeling of being inside and outside the ocean.
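For the red beam to appear in front of a tracked person, the person's floor position has to be mapped into projector pixels. Below is a minimal sketch in plain Java, assuming the tracked position has already been converted to metres measured from one corner of the roughly 2.5 m × 1.4 m projected area, and assuming a hypothetical 1280 × 720 projector image. The calibration, resolution, yaw convention and `ahead` offset are illustrative, not the values used in the piece.

```java
public class FloorMapping {
    // Projected floor area, roughly 2.5 m x 1.4 m in this installation.
    static final double FLOOR_W = 2.5, FLOOR_D = 1.4;
    // Hypothetical projector resolution.
    static final int PX_W = 1280, PX_H = 720;

    /** Floor point (metres from the projection's corner) to projector pixel, clamped to the image. */
    static int[] toPixel(double x, double z) {
        int px = (int) Math.round(x / FLOOR_W * (PX_W - 1));
        int py = (int) Math.round(z / FLOOR_D * (PX_H - 1));
        return new int[] {clamp(px, 0, PX_W - 1), clamp(py, 0, PX_H - 1)};
    }

    /**
     * Pixel for a point `ahead` metres in front of a person standing at (x, z),
     * facing yawDeg (clockwise from +z as seen from above).
     */
    static int[] beamTarget(double x, double z, double yawDeg, double ahead) {
        double yaw = Math.toRadians(yawDeg);
        return toPixel(x + ahead * Math.sin(yaw), z + ahead * Math.cos(yaw));
    }

    static int clamp(int v, int lo, int hi) {
        return Math.max(lo, Math.min(hi, v));
    }

    public static void main(String[] args) {
        int[] p = beamTarget(1.0, 0.2, 0.0, 0.5);
        System.out.println(p[0] + ", " + p[1]);
    }
}
```

Clamping keeps the beam on the floor image when the person stands near an edge of the interaction zone and faces outwards.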

Self Evaluation

People with different backgrounds behaved differently towards this piece.

I was delighted to see people moving their bodies inside the space, feeling immersed. I heard people shout when they were suddenly inside the ocean, and I saw people swimming with their hands while they were in it. One woman said she felt as if she were on vacation, and that I should keep improving the piece because it has a future: people could stay at home and relax. People who were sensitive to sound stayed longer and moved their bodies much more slowly. They crouched down and moved their heads a little to feel the binaural listening and sense the soundscape.

However, some people who were less sensitive to sound stayed only a few minutes and moved quickly to see the visual patterns change. They were more attracted by the visual projection than by what was playing through the headphones.

In addition, the piece was very hard to document and record because of the tiny space I had. The setup was designed to track only one person with the Kinect, so I asked other visitors to wait outside, which left little room for me to make a recording.

Further Development

Change tracking technology

The Kinect has its own limitations in terms of tracking volume and noise, and SimpleOpenNI has limitations as well: it cannot determine orientation properly when a person stands side-on to the sensor, and it is quite CPU-intensive. On the opening night I encountered an awkward situation: after running for an hour, the programme stopped tracking people and my MacBook Pro became very hot, so I had to pause my programmes from time to time. Later in the exhibition I used two Macs to run the three programmes, one for the Kinect and the other for Unity and the visuals. This helped to some extent, but it cannot cope with a long-term exhibition. If I want to show the piece in a gallery, I will need a better solution.

Larger Scale

Because the ceiling is so low, my interaction zone can only be the same size as the projection on the floor. The ceiling is only about 2.2 m high and the projector itself is around 45 cm long, so the actual throw distance is around 1.8 m. With the best short-throw projector I could get from the school, the final projected area is around 2.5 m × 1.4 m, which limits my creative expression. In fact, the soundscape design only started after the space was defined and decorated, leaving me just 7 days to compose my soundscapes. With more space, I could create a more complex environment.
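These figures can be checked with the projector's throw ratio, i.e. throw distance divided by image width. As a worked example, assuming the projector points straight down and the 2.5 m side of the projection is the image width:

$$
d \approx 2.2\ \text{m} - 0.45\ \text{m} = 1.75\ \text{m} \approx 1.8\ \text{m},
\qquad
\text{throw ratio} = \frac{d}{w} \approx \frac{1.75\ \text{m}}{2.5\ \text{m}} = 0.70
$$

So the setup needs a throw ratio of about 0.7. At a fixed throw ratio the image width grows linearly with throw distance, so the same projector at a throw distance of about 3.5 m would cover a floor roughly 3.5 / 0.70 = 5.0 m wide, which is why a higher ceiling would directly allow a larger interaction zone.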

Complexity

Add interaction with the objects in the scene, for example objects that follow you or move away from you when you are in a certain area.

If you have any suggestions or questions about my piece, please feel free to contact me by email. 🙂

xutr12@gmail.com / xutr12@163.com

Reference