Satisfaction of Oscillation

produced by: Taoran Xu

Introduction

This is a gesture-based interactive audiovisual installation that lets recipients control the play heads and play speeds of both an image sequence and a sound file in real time through an iPhone app. It tries to break the boundary between the digital and the physical: recipients can feel the physicality of the ‘discarnate man’ on the screen, especially when shaking the phone produces a corresponding oscillation in the audiovisual playback.

Hardware: MacBook Pro / iPhone

Software: Gyrosc (iPhone app) / Wekinator / openFrameworks / Max/MSP; openFrameworks addons: ofxMaxim / ofxOsc

Motivation

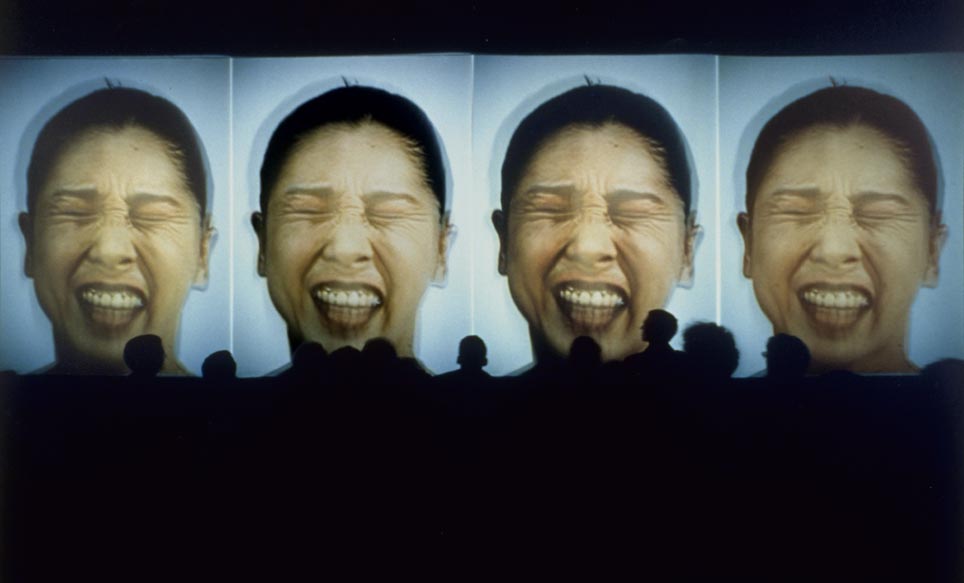

“MODELL 5, premiered in a first version 1994 at the ICC London has been described as one of the most beautiful experiments in bringing digital video to a theatrical setting. Using a technique derived from the principals of the sound design technique called granular synthesis but applied to the rather fat grains of single video frames (visual content and sound), GRANSYN manages to evoke from a few expressions on the face of the performer Akemi Takeya, a frenzied exploration of the alter ego within touching distance. “

MODELL 5 is one of the most unforgettable audiovisual artworks I have ever encountered, and it has had a strong impact on me. Once the artistic signal processing has been applied, the audiovisual clip enlarges my sensory experience because the information it provides is augmented.

Apart from that, the Chinese government has recently emphasised control of the Internet, especially of its content. Bilibili, one of the most creative video websites in China, is on the blacklist because of its Glitch channel. The authors of the videos in the Glitch channel mainly work with footage provided by social media, such as news reports, interviews, movies and music videos, also known as “raw video”. They use techniques such as cutting, pasting and montage to create new videos that manipulate the reality presented by the original footage. That is what the government cares about. The video clip I chose is from an interview with a government spokesman about Internet control in China. The translation of the quote in the clip, “中国在治网管网方面,也有自己的先进经验”, is:

“China also has its own advanced experience in managing the network management network. ” —— by Google

“China also has its own advanced experience in governing and managing Internet ” —— by me

So the idea arose to create a work that treats this control with irony, using approaches similar to those in MODELL 5.

Technical Workflow

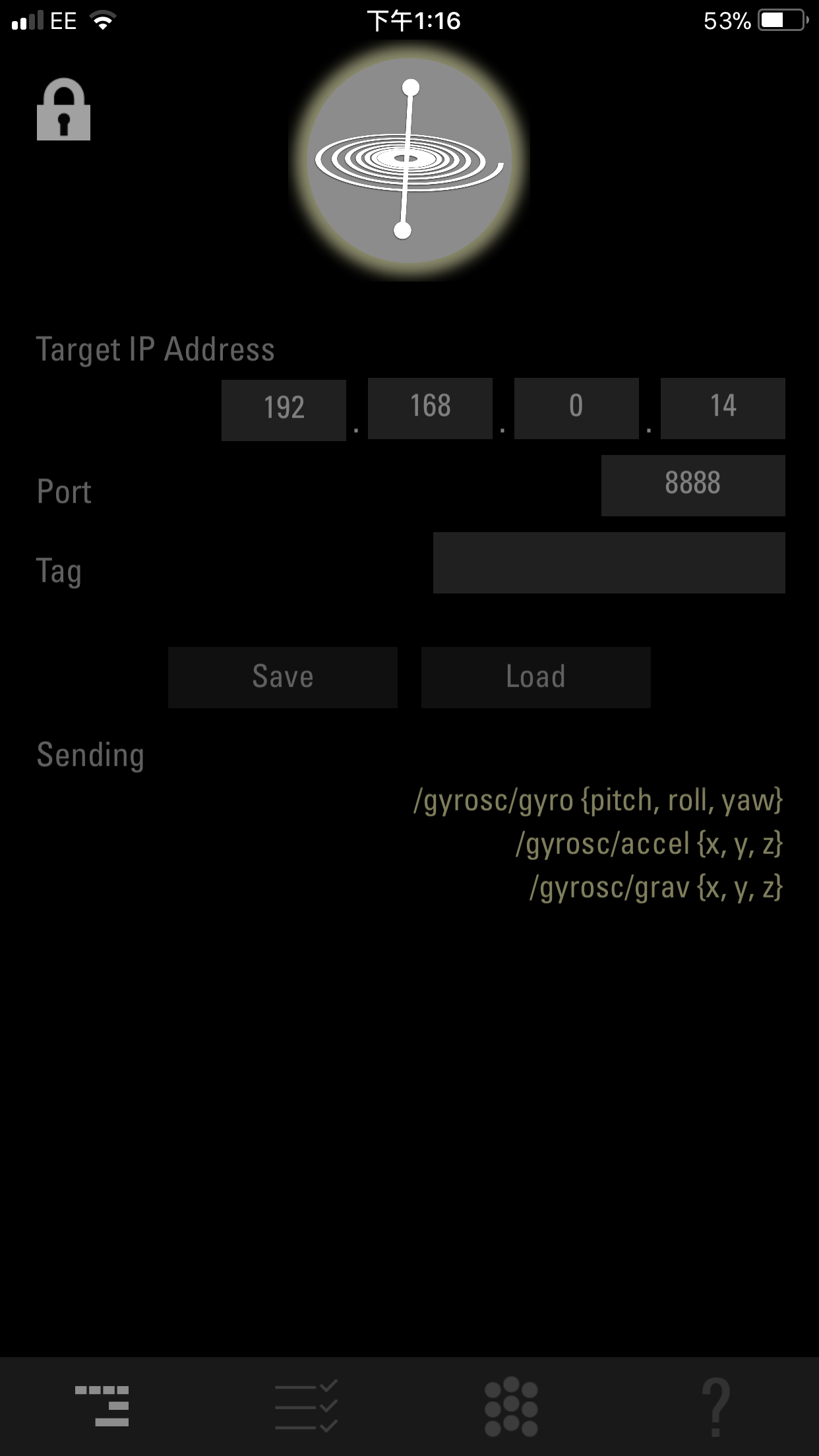

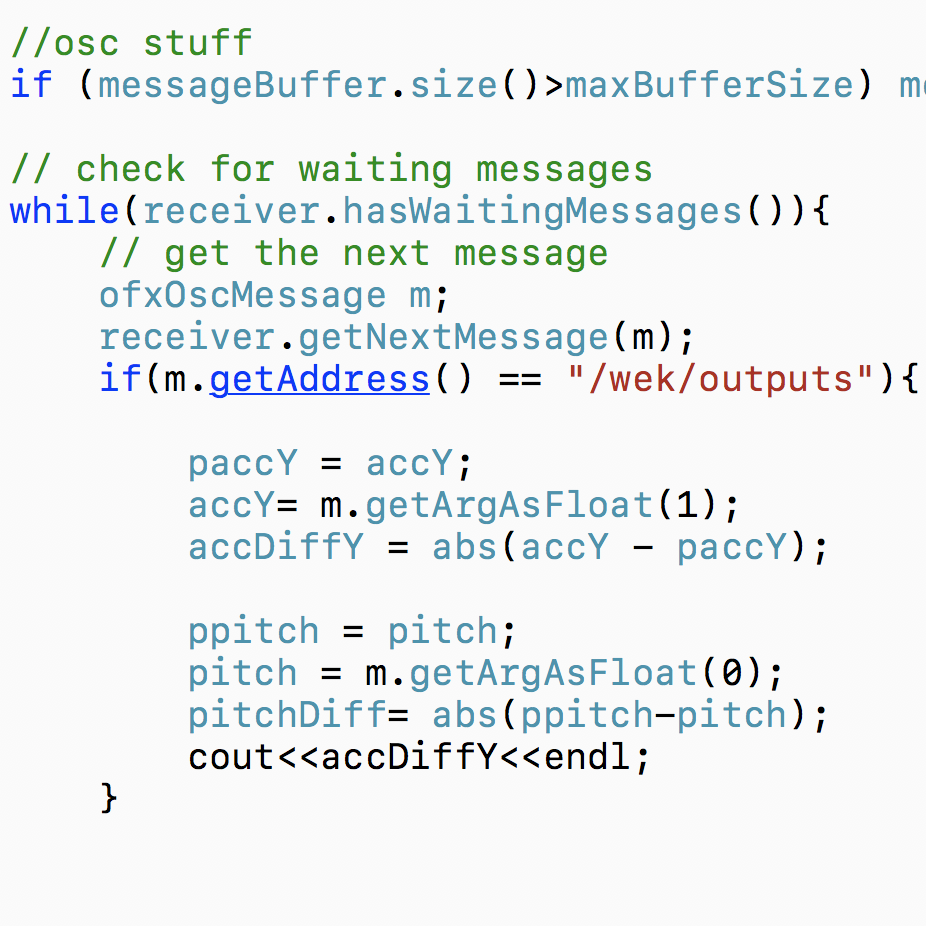

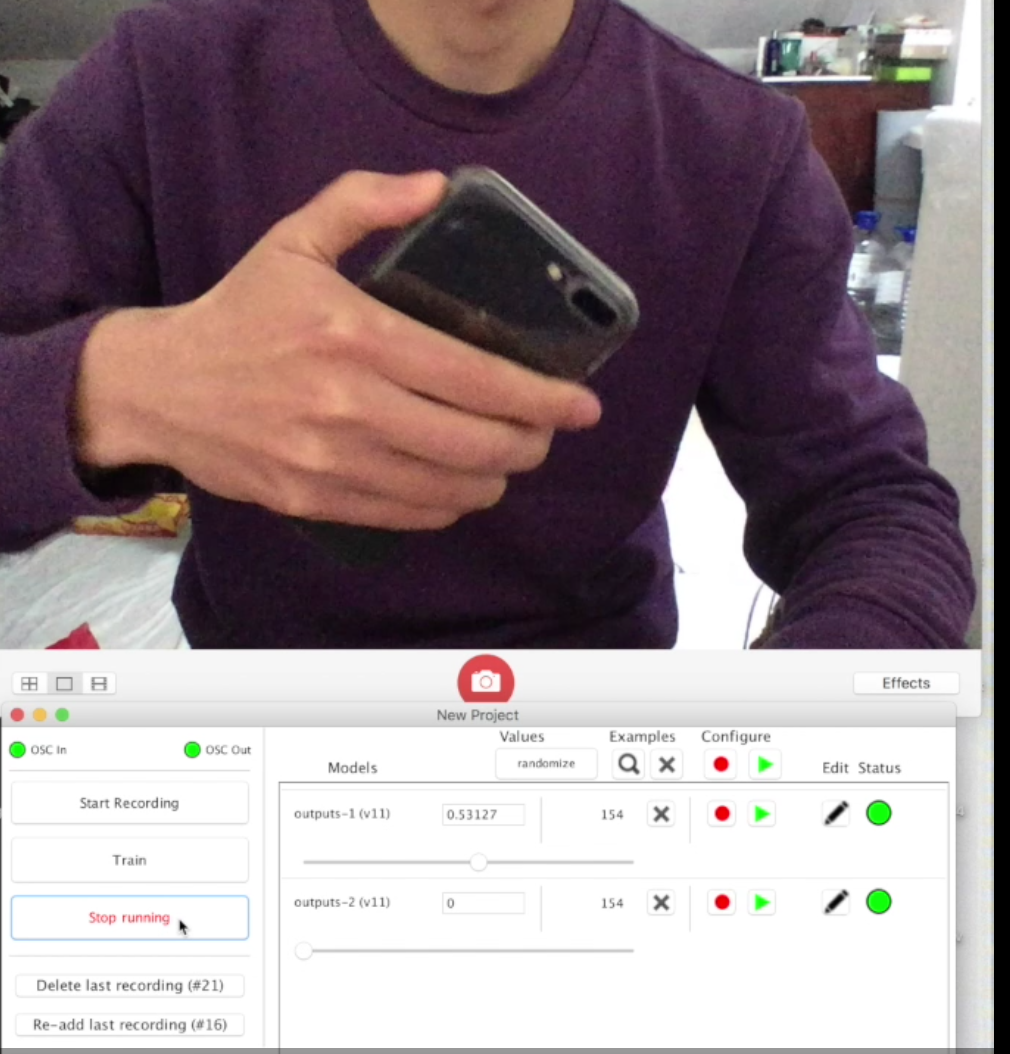

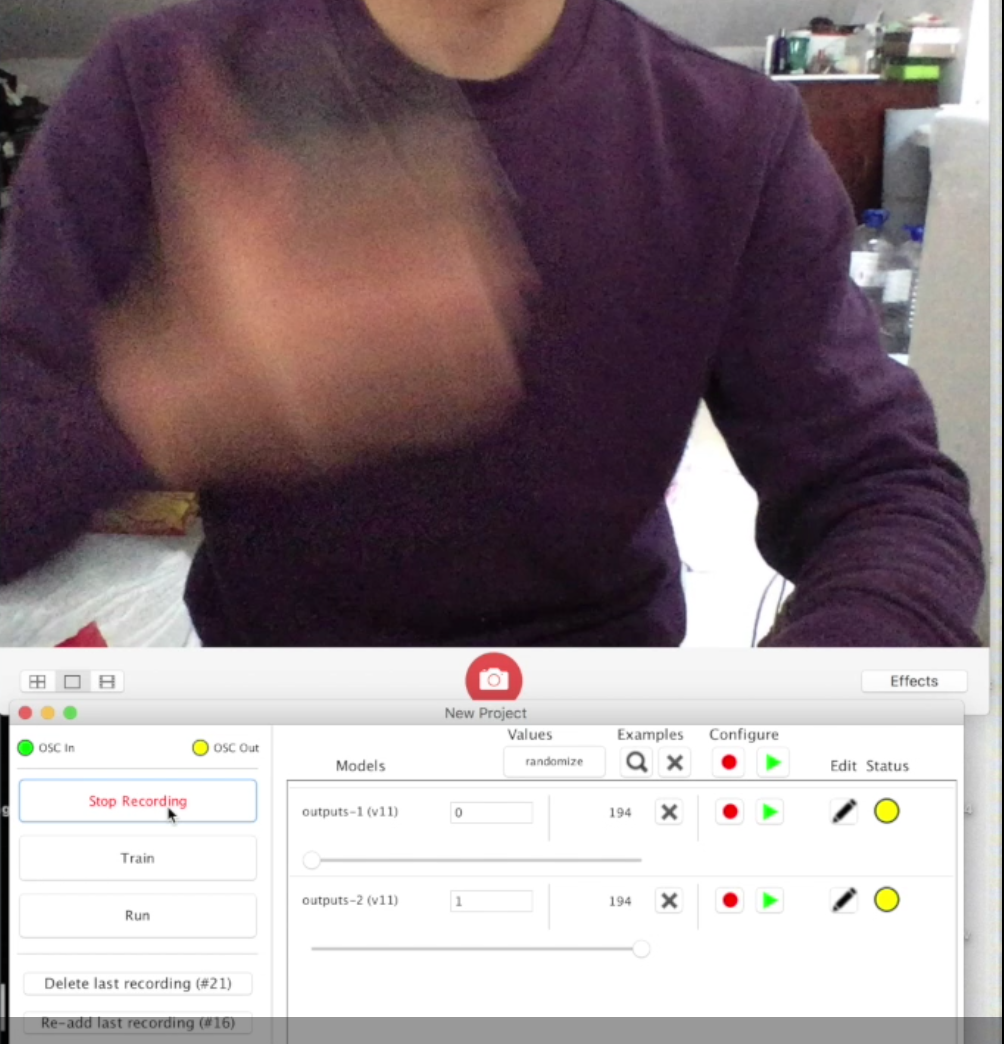

1. The interaction inputs are generated and sent by Gyrosc, an iPhone app that collects the phone's built-in gyroscope data and sends it to any application that supports the Open Sound Control (OSC) protocol. In this project I use two variables: a) the pitch value and b) the acceleration along the Y axis.

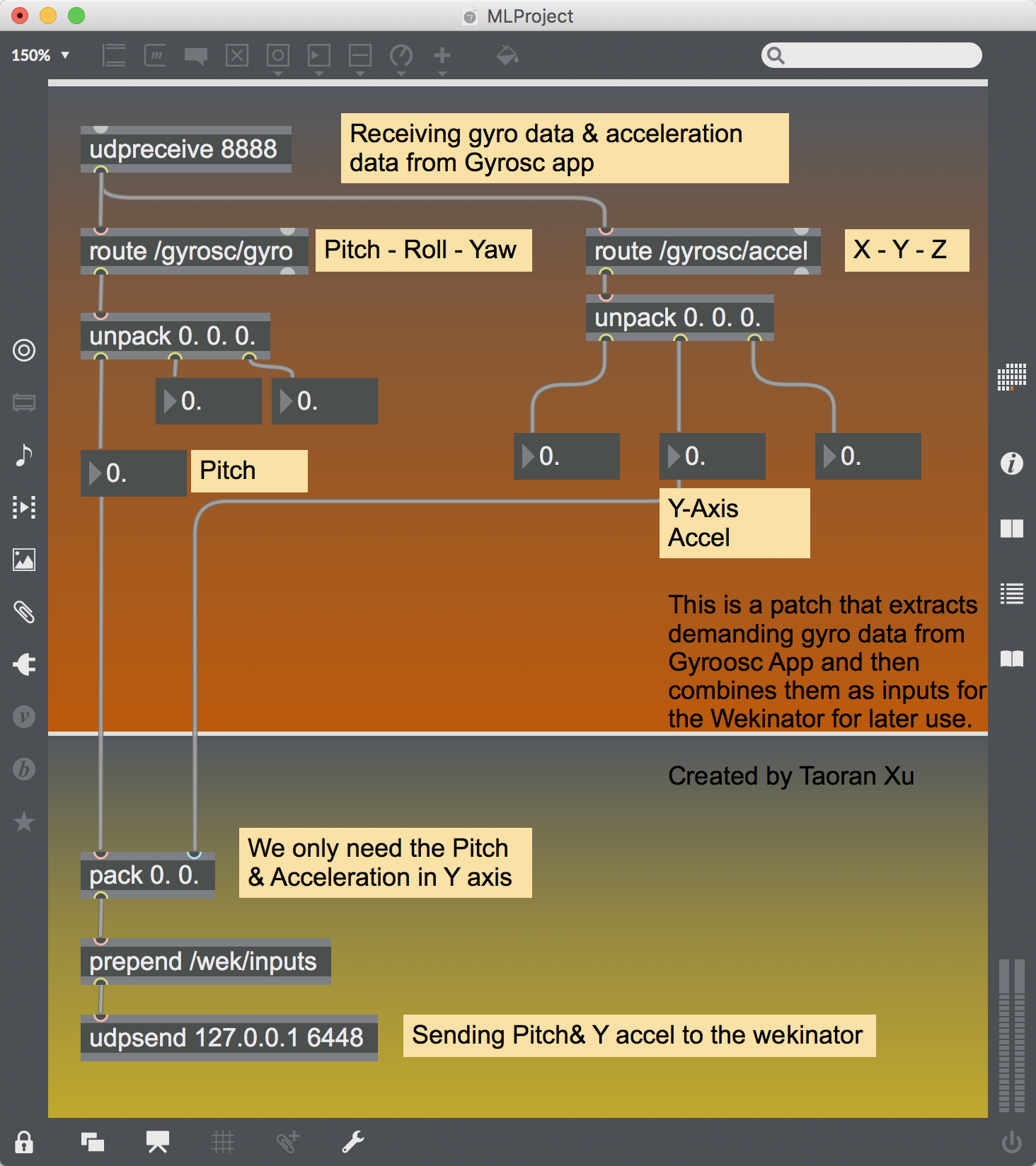

2. Gyrosc also sends data that I do not need. Moreover, the pitch value arrives on the branch "/gyrosc/gyro" while the acceleration arrives on "gyro/accel", and Wekinator can only receive data from one branch, so the values I need have to be extracted first. I created a Max patch that extracts the pitch and the Y-axis acceleration and combines them into a new OSC message. This new message is the input to Wekinator for later training.

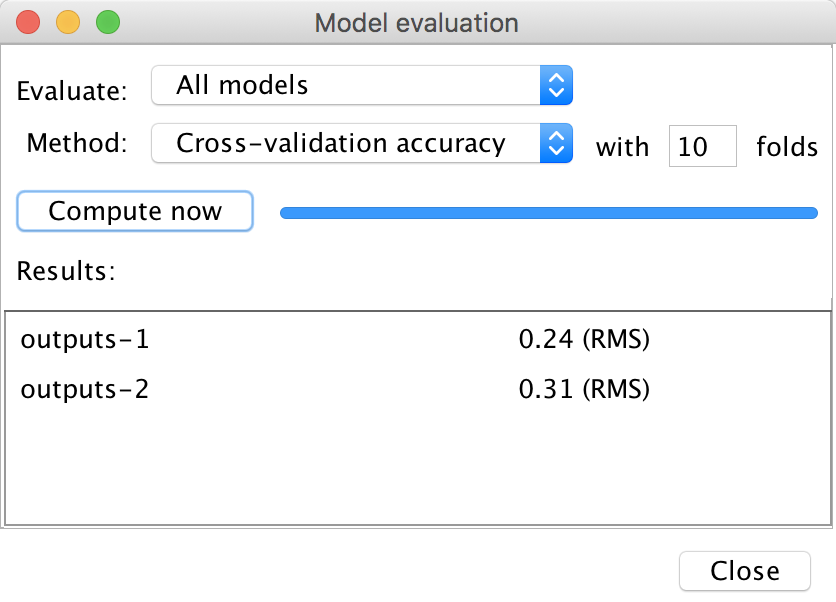

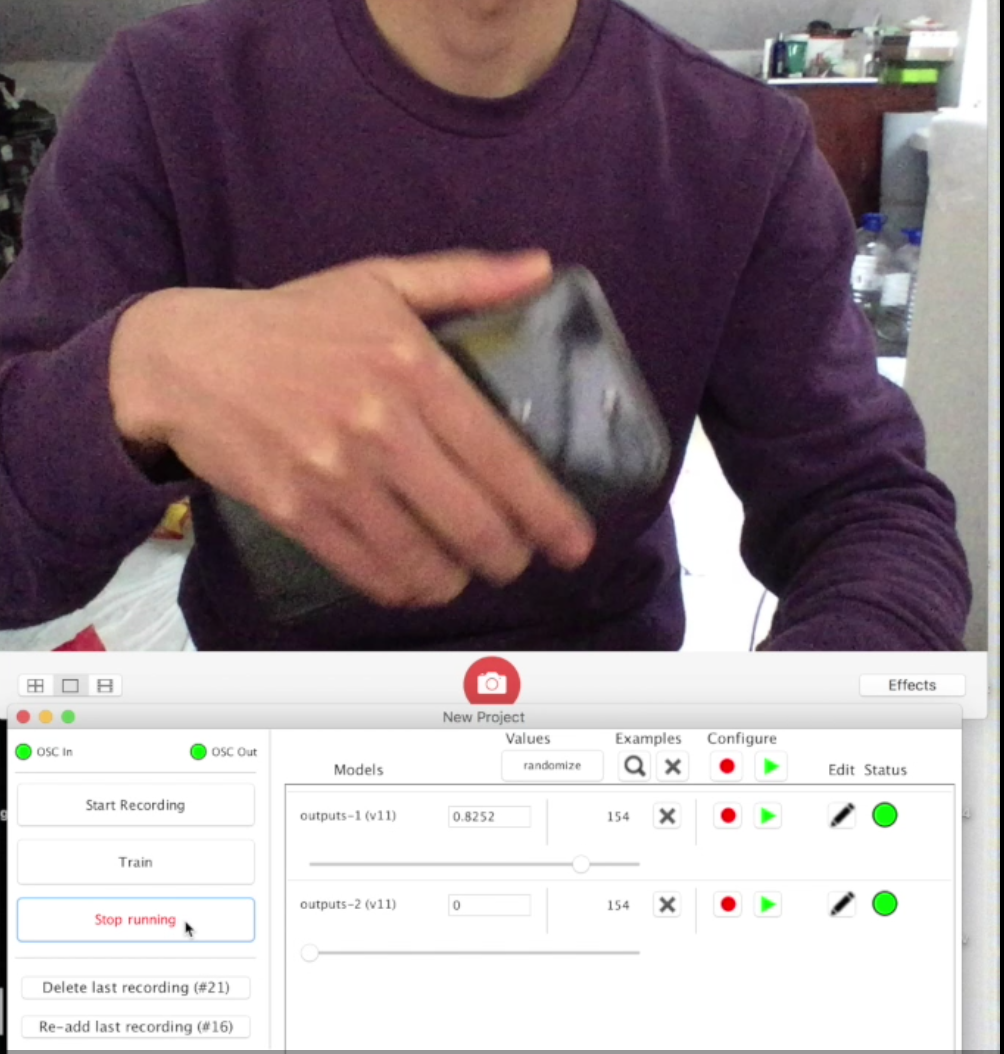

3. Wekinator receives the data, and I train the model until I am pleased with the results.

4. The main openFrameworks programme receives the OSC message from Wekinator and responds by triggering the interaction. I detach the audio from the original video and handle the visuals and the audio separately. The pitch value controls the play head of both the image sequence and the audio file, and the acceleration along the Y axis controls their play speed.

a) For visual part

1. I create an array of type ofImage to store every frame of the video clip for later processing.

2. Set the loop length to 10 frames. Create a variable "index" (the play head) and use it to set the frame index for each quadrant of the visuals: top left is index, top right is index + 20, bottom left is index + 40 and bottom right is index + 60.

b) For audio part

1. Create 4 copies of the audio file, as there are four copies of the visuals.

2. Set the same play head for each audio copy as for its corresponding visual. The left two copies go to the left output channel and the right two to the right output channel.

* The version I submitted on learn.gold skips the machine learning process and contains only step 1 and step 4.
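The quadrant offsets from step 4 can be sketched in plain C++ as follows. This is only an illustration, not the project's code: the function name `quadrantFrames`, the `loopStep` counter and the wrap-around at the end of the clip are assumptions. Only the loop length of 10 frames and the offsets 0, 20, 40 and 60 come from the description above.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical helper, not taken from the project source.
// playHead   : the "index" variable from step 4 (first frame of the loop)
// loopStep   : frames elapsed since playback started (assumed counter)
// frameCount : number of frames stored in the ofImage array
// Returns the frame to draw in each quadrant, in the order
// top left, top right, bottom left, bottom right.
std::array<std::size_t, 4> quadrantFrames(std::size_t playHead,
                                          std::size_t loopStep,
                                          std::size_t frameCount) {
    assert(frameCount > 0);
    const std::size_t kLoopLength = 10;              // from the text
    const std::size_t kOffsets[4] = {0, 20, 40, 60}; // from the text
    const std::size_t within = loopStep % kLoopLength;
    std::array<std::size_t, 4> frames{};
    for (std::size_t q = 0; q < 4; ++q) {
        // Wrapping at frameCount is an assumption to keep indices valid.
        frames[q] = (playHead + kOffsets[q] + within) % frameCount;
    }
    return frames;
}
```

The same four indices, converted to sample positions, could serve as the play heads of the four audio copies, so each quadrant stays aligned with its sound.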

Gesture Design

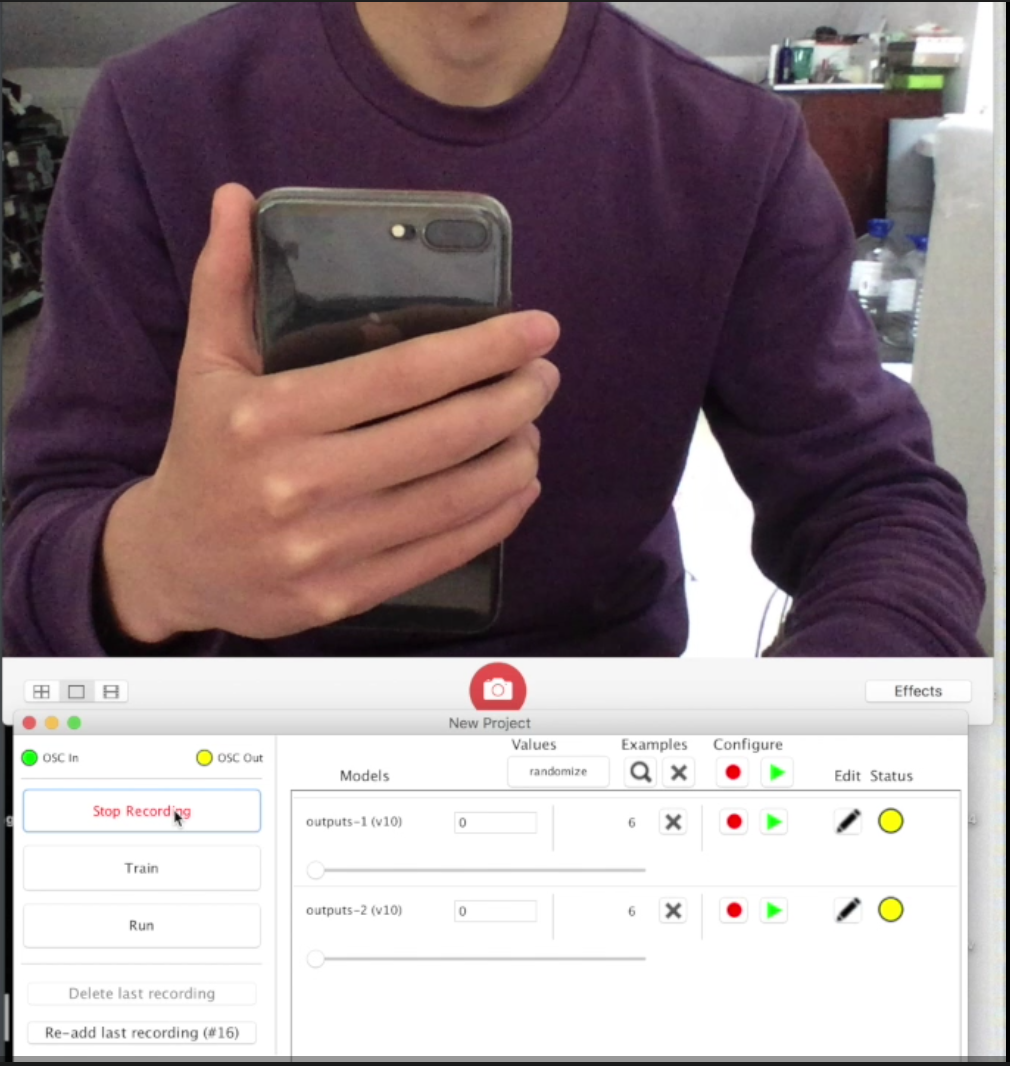

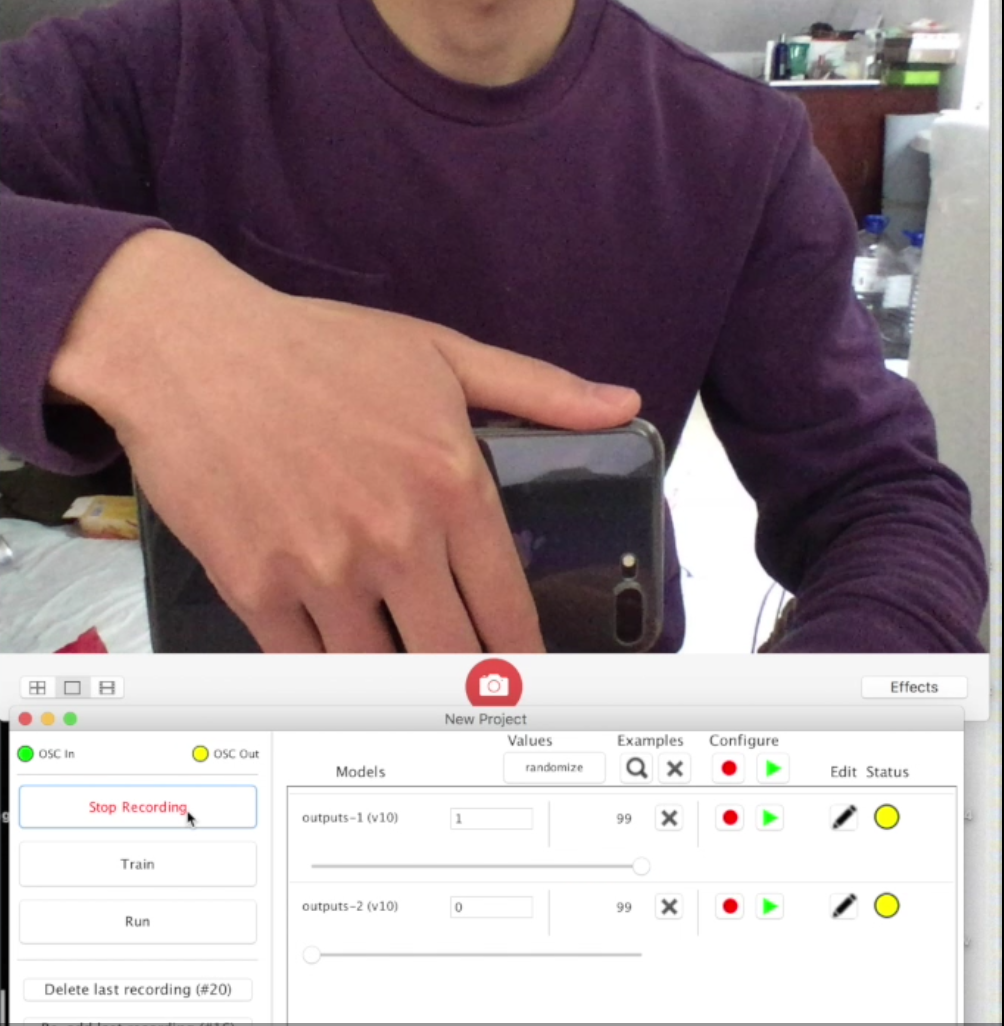

I create two gestures for interaction in this project.

Gesture #1:

Uses pitch data to control the play head position in the image sequence. Pitch, ranging from 0 to 90 degrees, is mapped to an output from 0 to 1.
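When the Wekinator stage is skipped, a direct linear mapping is one way to get from pitch to play head. The sketch below assumes the pitch arrives in degrees and that values outside 0 to 90 are clamped. Both function names are hypothetical, and the rounding to a frame index is an assumption as well.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>

// Map a pitch in degrees (assumed unit) from [0, 90] to [0, 1].
// Out-of-range input is clamped; that choice is an assumption.
float normalizePitch(float pitchDegrees) {
    const float clamped = std::min(std::max(pitchDegrees, 0.0f), 90.0f);
    return clamped / 90.0f;
}

// Turn the normalized value into a frame index (the play head).
std::size_t playHeadFromPitch(float pitchDegrees, std::size_t frameCount) {
    assert(frameCount > 0);
    const float scaled = normalizePitch(pitchDegrees) *
                         static_cast<float>(frameCount - 1);
    return static_cast<std::size_t>(scaled + 0.5f); // round to nearest
}
```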

Gesture #2:

Uses the difference in acceleration along the Y axis to set the play speed, creating a sense of unity between the iPhone oscillating physically and the audiovisual clip oscillating digitally.
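One possible reading of "difference in acceleration" is the change between two consecutive Y-axis samples. The sketch below follows that reading. The struct name, the `gain` factor and the `maxSpeed` limit are illustrative assumptions, not values from the project.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical mapper from Y-axis acceleration to play speed.
struct SpeedFromAccel {
    float gain = 4.0f;     // assumed scaling from delta to speed
    float maxSpeed = 8.0f; // assumed safety limit
    float previous = 0.0f;
    bool hasPrevious = false;

    // Returns a signed play speed; a negative value means reverse playback.
    float update(float accelY) {
        assert(maxSpeed > 0.0f);
        if (!hasPrevious) {   // no difference available on the first sample
            previous = accelY;
            hasPrevious = true;
            return 0.0f;
        }
        const float delta = accelY - previous;
        previous = accelY;
        return std::min(std::max(gain * delta, -maxSpeed), maxSpeed);
    }
};
```

Because the sign of the difference flips each time the phone changes direction, shaking the phone makes the speed swing between forward and reverse, which produces the oscillating playback described above.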

Conclusions

This interactive AV installation more or less achieves my original idea of audiovisual integration; however, there is room for improvement:

a) The image sequence does not loop seamlessly: there is a noticeable short pause before the next loop starts.

b) ofxMaxim makes it hard for me to play a specific chunk of audio at a specific speed. The mechanism of its play(float frequency, float startPosition, float endPosition) function differs from that of its play(float speed) function, and there is no function such as play(float speed, float startPosition, float endPosition). I dug into the source code and tried to create one, but failed.
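For reference, the missing behaviour can be sketched outside ofxMaxim as a small stand-alone region player. This is not ofxMaxim code and does not use its API: the class `RegionPlayer`, its `play(speed, start, end)` signature and the use of sample indices for the region bounds are all assumptions. It advances a phase inside the region by `speed` samples per call and reads with linear interpolation.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical looped-region player; names are illustrative only.
class RegionPlayer {
public:
    explicit RegionPlayer(std::vector<float> samples)
        : samples_(std::move(samples)) {}

    // speed: samples advanced per call (1.0 = original rate, < 0 = reverse)
    // start, end: region as sample indices, end exclusive
    float play(double speed, std::size_t start, std::size_t end) {
        assert(start < end && end <= samples_.size());
        const double length = static_cast<double>(end - start);
        phase_ = wrap(phase_, length); // the region may have changed
        const double pos = static_cast<double>(start) + phase_;
        const std::size_t i0 = static_cast<std::size_t>(pos);
        const double frac = pos - static_cast<double>(i0);
        std::size_t i1 = i0 + 1;
        if (i1 >= end) i1 = start;     // interpolate across the loop point
        const double out = samples_[i0] * (1.0 - frac) + samples_[i1] * frac;
        phase_ = wrap(phase_ + speed, length);
        return static_cast<float>(out);
    }

private:
    // Keep x inside [0, length), also for negative speeds.
    static double wrap(double x, double length) {
        double w = std::fmod(x, length);
        if (w < 0.0) w += length;
        if (w >= length) w = 0.0;
        return w;
    }

    std::vector<float> samples_;
    double phase_ = 0.0; // offset of the play head inside the region
};
```

Calling `play` once per output sample from the audio callback would loop the chosen chunk at the chosen speed. Interpolating across the loop point only avoids a gap in the read position. It does not remove a click if the waveform itself is discontinuous there.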