Deep (Blue) Fake

Deep (Blue) Fake is an interactive artwork inspired by ‘Game Over: Kasparov and the Machine’ that explores the hidden human labour behind artificial intelligence.

Produced by: Sarah Cook

Introduction

Deep (Blue) Fake is inspired by the documentary ‘Game Over: Kasparov and the Machine’, which follows the 1997 chess match between Russian Grandmaster Garry Kasparov and IBM’s chess-playing computer ‘Deep Blue’. The match was deemed symbolic of the battle between man and machine, as Deep Blue’s triumph over one of humanity’s ‘great intellectual champions’ was seen as a sign that AI was catching up with human intelligence.

Stills from 'Game Over: Kasparov and the Machine'

Deep (Blue) Fake echoes aspects of the setup that Kasparov faced. It takes the form of a custom-made chessboard on which the participant is invited to play against a seemingly autonomous opponent, whose pieces move around the board independently.

In this instance the ‘opponent’ is actually multiple people working on Amazon’s ‘MTurk’ platform, ‘a crowdsourcing website for businesses to hire remotely located "crowdworkers" to perform discrete on-demand tasks that computers are currently unable to do’.

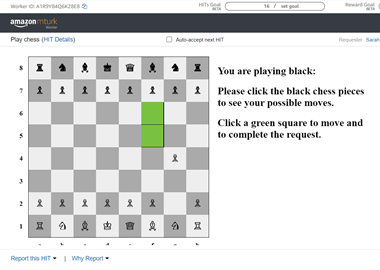

Image showing the interface sent to Amazon's MTurk. Remote workers interact with the chessboard by selecting the next move.

Concept and Background Research

MTurk is named after ‘The Mechanical Turk’, a chess-playing automaton constructed in the late 18th century that was later found to be an elaborate hoax controlled by a human hiding inside. The platform is frequently used to outsource menial tasks, such as dataset labelling, that are crucial to the development of machine learning systems, often for a very low wage.

I was interested in exploring the connection between The Mechanical Turk and human labour in the era of artificial intelligence, when many seemingly automated tasks involve the hidden labour of human workforces.

Drawing of The Mechanical Turk revealing the secret compartment where a human player would control the Turk’s moves

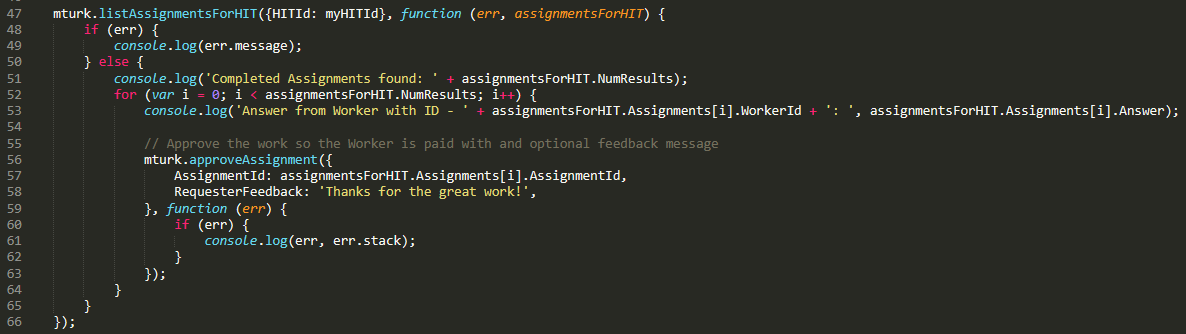

I wanted the piece to give the illusion of ‘magic’ often attributed to technology. I tried to achieve this by designing the system to be almost invisible, automating the call to MTurk and requiring no further input beyond the physical player moving a piece. One aspect of the MTurk API that interested me, and that I wanted to incorporate into the artwork, was the idea of humans as ‘functions’ that can be called through snippets of code.

Python code snippet creating MTurk task
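To illustrate the idea of a human being ‘called’ like a function, here is a minimal sketch of how a task could be created with the boto3 MTurk client. It is not the code used in the artwork: the title, reward, timings and board URL are hypothetical placeholders, and it assumes the chessboard interface is hosted as a web page and shown to the worker through an ExternalQuestion. The sandbox endpoint is used so that no real payment is made.

```python
from xml.sax.saxutils import escape

# MTurk requester sandbox, so experiments do not spend real money.
SANDBOX_ENDPOINT = "https://mturk-requester-sandbox.us-east-1.amazonaws.com"
EXTERNAL_QUESTION_XSD = (
    "http://mechanicalturk.amazonaws.com/"
    "AWSMechanicalTurkDataSchemas/2006-07-14/ExternalQuestion.xsd"
)


def build_question_xml(board_url, frame_height=600):
    """Wrap the (hypothetical) hosted board page in ExternalQuestion XML."""
    return (
        f'<ExternalQuestion xmlns="{EXTERNAL_QUESTION_XSD}">'
        f"<ExternalURL>{escape(board_url)}</ExternalURL>"
        f"<FrameHeight>{frame_height}</FrameHeight>"
        "</ExternalQuestion>"
    )


def build_hit_request(board_url):
    """Collect the create_hit arguments; all values are placeholders."""
    return {
        "Title": "Choose the next chess move",
        "Description": "Look at the board and select one legal move.",
        "Keywords": "chess, game",
        "Reward": "0.10",  # placeholder amount in USD, passed as a string
        "MaxAssignments": 1,  # one worker per move
        "LifetimeInSeconds": 600,
        "AssignmentDurationInSeconds": 300,
        "Question": build_question_xml(board_url),
    }


def ask_worker_for_move(board_url):
    """The 'function call': publish the task and return its HIT id."""
    import boto3  # third-party; needs AWS requester credentials

    client = boto3.client(
        "mturk", region_name="us-east-1", endpoint_url=SANDBOX_ENDPOINT
    )
    response = client.create_hit(**build_hit_request(board_url))
    return response["HIT"]["HITId"]
```

Calling `ask_worker_for_move("https://example.com/board")` would publish one task and return its identifier; the worker's answer then has to be fetched separately once the assignment is submitted.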

Technical

The system behind the artwork consists of openFrameworks, Amazon’s MTurk API, Python and Arduino. The openFrameworks addons used are ofxIO, ofxPoco, ofxOsc and ofxSerial. The flow is:

- openFrameworks generates the virtual board, recording all chess piece locations in a map.

- The human plays white and makes the first move, then records it in openFrameworks, which updates the map of chess piece locations and writes it to a JSON file.

- openFrameworks calls a Python script, which uses the updated JSON file to generate an MTurk HIT containing a digitised version of the current game.

- A remote MTurk worker accepts the HIT and chooses the next move of the game.

- The results are returned to the Python script, which sends the new coordinates to openFrameworks via OSC.

- openFrameworks updates the location of the chess piece and sends a message with the new coordinates to an Arduino via serial communication.

- The Arduino moves the MTurk worker’s chess piece using a custom-made XY table, an electromagnet, motors and drivers.

- Play returns to the human player.
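The step in which the Python script passes the worker's move back to openFrameworks can be sketched as follows. This is an illustration rather than the project's code: it encodes an OSC message by hand with the standard library so that it runs without extra packages, and the `/move` address, the port number and the zero-based (file, rank) coordinate scheme are all assumptions.

```python
import socket
import struct


def osc_string(text):
    """OSC strings are null-terminated and padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def square_to_coords(square):
    """Convert e.g. 'e7' to zero-based (file, rank) = (4, 6). Assumed scheme."""
    return ord(square[0]) - ord("a"), int(square[1]) - 1


def encode_move(address, from_square, to_square):
    """Build an OSC message carrying four int32 arguments."""
    values = square_to_coords(from_square) + square_to_coords(to_square)
    type_tags = "," + "i" * len(values)
    # OSC int32 arguments are big-endian.
    return osc_string(address) + osc_string(type_tags) + struct.pack(">4i", *values)


def send_move(from_square, to_square, host="127.0.0.1", port=12345):
    """Send the move over UDP; host, port and address are placeholders."""
    packet = encode_move("/move", from_square, to_square)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
```

On the openFrameworks side, an ofxOsc receiver listening on the same port would read the four integers and pass the target coordinates on to the Arduino. A library such as python-osc would do the same job with less code.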

Further development

The project challenged my knowledge of memory management and data structures as I needed to learn about pointers and converting data types to communicate over serial and OSC. It also improved my knowledge of interfacing between various systems and building infrastructures.

To develop the work further I would create a more seamless interaction by using computer vision to record the physical participant’s moves, so that they no longer need to type each move into the system. The trigger for the MTurk call could then be embedded within a chess clock button and sent by the Arduino.

I attempted some initial experiments using ofxCv’s Frame Differencing example. I found that lighting and shadows caused some issues when attempting to isolate which squares on the board had changed. The next step I would explore is using computer vision to isolate the chessboard, perhaps with edge detection to divide it into squares, and assigning each square its corresponding coordinate. This would enable me to identify which two squares had changed and determine the most likely move made by the participant.

Initial computer vision experiments using ofxCv’s Frame Differencing example
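The per-square idea described above could look something like the sketch below. It is a simplified illustration, not something that was built for the piece: it assumes the two frames are greyscale, already cropped and aligned to the board, given as nested lists of pixel values, and oriented with white at the bottom. It does not address the lighting and shadow problems, which would need a threshold or background model on top.

```python
FILES = "abcdefgh"


def square_name(row, col):
    """Row 0 is the top of the image, assumed to be rank 8."""
    return f"{FILES[col]}{8 - row}"


def changed_squares(before, after, top_n=2):
    """Return the names of the squares with the largest mean pixel change."""
    square_h = len(before) // 8
    square_w = len(before[0]) // 8
    scores = []
    for row in range(8):
        for col in range(8):
            total = 0
            # Sum the absolute difference over every pixel in this square.
            for y in range(row * square_h, (row + 1) * square_h):
                for x in range(col * square_w, (col + 1) * square_w):
                    total += abs(after[y][x] - before[y][x])
            mean_change = total / (square_h * square_w)
            scores.append((mean_change, square_name(row, col)))
    scores.sort(reverse=True)
    return sorted(name for _, name in scores[:top_n])
```

The two returned squares would then be checked against the current board map: the one that previously held a piece is the likely origin and the other the likely destination.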

References

The code behind this artwork was adapted from various sources.

openFrameworks:

https://github.com/JustinBird/ChessGame

Arduino and Hardware:

https://forum.arduino.cc/t/serial-input-basics-updated/382007

https://create.arduino.cc/projecthub/maguerero/automated-chess-board-50ca0f

Python:

https://github.com/aws-samples/mturk-code-samples

JavaScript:

The JavaScript was written by artist and collaborator Rod Dickinson, and makes use of the chess.js library: https://github.com/jhlywa/chess.js/blob/master/README.md