Porous Borders

This is a piece about memory, landscape and time which uses water as a medium to both generate and distort images projected onto a flowing surface.

produced by: r moores

Introduction

This work combines physical elements (water, cloth, metal, light) with computational representations of the natural world, datafied through machine learning and projected back onto those elements to form a responsive whole.

Concept and background research

The work, if it is about anything, concerns time, memory and landscape. It doesn’t aim to convey a particular message, either about computation or the contemporary world. My influences are for the most part from painting and film - the landscapes of Gerhard Richter and images from Andrei Tarkovsky's films, particularly the way he used water in his compositions. There is a very strong sense of time in both these artists' work and I hoped some of their mastery of it would come through in mine.

"I pursue no objectives, no system, no tendency [...]. I don't know what I want. I am inconsistent, non-committal, passive; I like the indefinite, the boundless; I like continual uncertainty" (G. Richter, Notes 1966, in: The Daily Practice of Painting, ed. Hans-Ulrich Obrist, transl. David Britt, London 1996)

I was interested in some kind of stochastic input, and also in the non-linear, adaptive behaviour of machine learning models as a way of breaking out of repetitious algorithmic patterns. Water was an appealing medium for this - unpredictable and already loaded with connotations of the passage of time. It also bends light: I wanted to place some sort of distorting filter between the viewer and the imagery, but a real one, not a video processing effect.

I am also interested in the affective, pre-cognitive levels of consciousness (what N. Katherine Hayles terms 'Unthought') and the realm of atmosphere or mood, where a precise meaning cannot be determined or captured in language, but can emanate through a space, perhaps solidifying into meaning retrospectively. Thought precedes language, but affect precedes thought.

From reading Patricia Clough on the 'datalogical turn' and Wendy Chun's 'Programmed Visions', I wondered if datafication could be seen as a kind of memory loss. The cGAN used in this piece to generate landscapes had been trained on 40 thousand images scraped from Flickr. Already haunted, these photographs are subject to further abstraction: their location in time and place, as well as that of the photographer, is lost, datafied into a field of potential pixel values in latent space.

The model is trained to produce highly realistic, fictitious landscape images from a ‘semantic map’, a colour-segmented image tagged for various features: grass, sky, clouds etc. In this case it is given bad data: the images generated by trying to track the water on the screen do not resemble a proper semantic map.
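To make the idea of a semantic map concrete, here is a minimal Python sketch of what such a map is as data: a grid in which every pixel holds the colour of a class label. It is not the tracking code used in the installation. The palette colours, the class names and the brightness thresholds are all hypothetical placeholders, and simple brightness bands stand in for whatever tracking the camera pipeline actually performed.

```python
# Hypothetical label palette: these colours and class names are placeholders,
# not the palette the SPADE model was actually trained with.
PALETTE = [
    (0, 0, 0),        # "unlabelled"
    (70, 130, 180),   # "sky"
    (255, 255, 255),  # "clouds"
    (34, 139, 34),    # "grass"
]


def brightness_to_label(value, n_labels=len(PALETTE)):
    """Map an 8-bit brightness value (0-255) to a label index in equal bands."""
    value = max(0, min(255, int(value)))
    return min(n_labels - 1, value * n_labels // 256)


def frame_to_semantic_map(gray_frame):
    """Turn a 2D list of brightness values into a 2D list of RGB label colours."""
    return [[PALETTE[brightness_to_label(v)] for v in row] for row in gray_frame]


if __name__ == "__main__":
    # One row of four pixels, from dark to bright.
    print(frame_to_semantic_map([[0, 64, 128, 255]]))
```

A camera frame of moving water pushed through a mapping like this produces label regions with no relation to any real scene layout, which is the sense in which the model is being fed "bad data".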

Technical

The installation ran on three computers: a Raspberry Pi to control the pump speed, a PC with a good graphics card to run the machine learning model live, and a Mac mini which dealt with camera input, projection and generating the semantic maps to send over to the model. All were networked over ethernet via a switch.

I wanted to try something other than OSC for messaging between openFrameworks on the Mac mini and the Pi, as I was less concerned with speed than with the reliability of the transmission, and all the OSC libraries I have seen outside of commercial products only implement OSC over UDP, despite the option of TCP in the specification. After looking into ZeroMQ, I came across a more recent project, nanomsg-next-generation (NNG), and decided to give it a try, using the Python library (pynng) on the Pi side and an openFrameworks addon, ofxNNG, on the Mac mini. I found that if I caught any disconnection errors on the Pi, the system was really robust - either side could disconnect for long periods of time (e.g. overnight) and would reconnect and start working immediately once the client or server reappeared.
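The pattern described above, catching errors on the Pi so the loop survives a missing peer, might look roughly like the following Python sketch using pynng. It is an illustration rather than the project's code: the Pair0 socket type, the address and port, the JSON message format with a `speed` field, and the names `parse_speed` and `run` are all assumptions made for the example.

```python
import json
import time


def parse_speed(payload: bytes) -> float:
    """Decode a message such as b'{"speed": 0.4}' and clamp it to 0.0-1.0."""
    speed = float(json.loads(payload.decode("utf-8"))["speed"])
    return max(0.0, min(1.0, speed))


def run(address="tcp://0.0.0.0:5555", on_speed=print):
    """Listen for speed messages and keep running through disconnections.

    The address and the message format are assumptions for this sketch.
    """
    import pynng  # third-party: pip install pynng

    with pynng.Pair0(listen=address, recv_timeout=1000) as sock:
        while True:
            try:
                on_speed(parse_speed(sock.recv()))
            except pynng.Timeout:
                continue  # nothing arrived within 1 s; the peer may be away
            except (ValueError, KeyError, TypeError):
                continue  # ignore a malformed message
            except pynng.NNGException as err:
                print("socket error:", err)
                time.sleep(1.0)  # back off briefly, then try again
```

Calling `run()` on the Pi would block and pass each received speed to `on_speed`. Because the loop treats a timeout as a normal event instead of a failure, the peer can vanish overnight and the Pi simply keeps waiting until messages resume.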

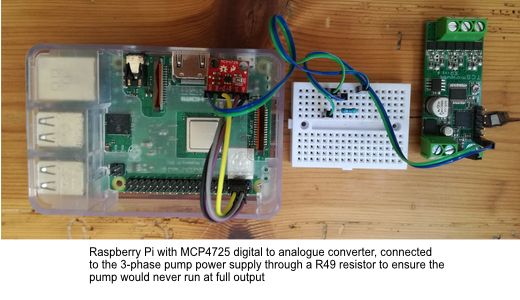

The water pump has a variable speed motor which could be controlled by a 0-5V analogue input. I had done some research into pumps and found that I would need one with a reasonable amount of lift to push the water up an 8mm pipe at the rate required. I had decided to use a Raspberry Pi rather than an Arduino-type board as I wanted to use a TCP/IP connection and have the option of remote login. As the Pi doesn’t come with a true analogue output and the pump would not accept a PWM signal for its speed reference, I used an MCP4725 digital-to-analogue converter chip to generate the control voltage, controlled via the I2C bus on the Pi. The GPIO pins were controlled using the ‘pigpio’ library, which comes with hooks for Python and C and has a really good software debounce function built in.
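As a rough illustration of driving the DAC, the sketch below scales a pump speed to the MCP4725's 12-bit range and packs it as a two-byte fast-mode write, then sends it with pigpio. The function names, the 0.0-1.0 speed scale, I2C bus 1 and the device address 0x60 are assumptions for the example (the address depends on the chip variant and how its A0 pin is wired), and the code assumes the DAC is powered so that full scale corresponds to the pump's maximum reference voltage.

```python
def speed_to_dac_code(speed: float) -> int:
    """Scale a pump speed in 0.0-1.0 to the DAC's 12-bit range (0-4095)."""
    speed = max(0.0, min(1.0, speed))
    return int(round(speed * 4095))


def fast_write_bytes(code: int) -> bytes:
    """Pack a 12-bit code as the two bytes of an MCP4725 fast-mode write.

    Byte 1: 0 0 PD1 PD0 D11..D8 (power-down bits 00 = normal operation)
    Byte 2: D7..D0
    """
    code &= 0x0FFF
    return bytes([(code >> 8) & 0x0F, code & 0xFF])


def set_pump_speed(pi, handle, speed: float) -> None:
    """Send one speed value to the DAC through an open pigpio I2C handle."""
    pi.i2c_write_device(handle, fast_write_bytes(speed_to_dac_code(speed)))


def main():
    import pigpio  # third-party; needs the pigpiod daemon running on the Pi

    pi = pigpio.pi()
    handle = pi.i2c_open(1, 0x60)  # bus 1, assumed device address 0x60
    try:
        set_pump_speed(pi, handle, 0.5)  # roughly half of full scale
    finally:
        pi.i2c_close(handle)
        pi.stop()
```

The DAC output is the supply voltage multiplied by code/4096, so the byte-packing functions can be checked on any machine and only `main()` needs the hardware.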

For the machine learning model I relied heavily on the Runway-hosted implementation of the SPADE semantic image synthesis model. On a Linux system, the model can be run ‘live’ on a local GPU - the setup is a little tricky, but once installed the model ran smoothly for 5 days on Manjaro Linux, inside a Docker container, with CUDA acceleration (using the Nvidia container runtime). It would return an image from a semantic map generated by the openFrameworks code in around half a second, with requests sent at two-second intervals.

The handling of the HTTP requests between openFrameworks and Runway proved quite difficult, and I ended up with a slightly hacky system which used the openFrameworks URL loader class for queries (the returned data then had to be decoded with an external library) and part of the ofxRunway addon, slightly altered to work with the SPADE model, to send new semantic maps to the PC.
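For comparison, the request and response cycle can be sketched in a few lines of Python using only the standard library. This is not the openFrameworks code from the installation. It assumes the model server accepts a JSON POST containing the semantic map as a base64 data URI and replies with the generated image in the same form; the URL, the `semantic_map` and `output` key names, and the function names are assumptions that would need checking against the running model.

```python
import base64
import json
import urllib.request


def png_to_data_uri(png_bytes: bytes) -> str:
    """Wrap raw PNG bytes in a base64 data URI."""
    return "data:image/png;base64," + base64.b64encode(png_bytes).decode("ascii")


def data_uri_to_bytes(uri: str) -> bytes:
    """Extract and decode the base64 payload of a data URI."""
    _header, _, encoded = uri.partition(",")
    return base64.b64decode(encoded)


def build_query(png_bytes: bytes, input_key: str = "semantic_map") -> bytes:
    """Build the JSON request body (the key name is an assumption)."""
    return json.dumps({input_key: png_to_data_uri(png_bytes)}).encode("utf-8")


def query_model(png_bytes, url="http://localhost:8000/query",
                output_key="output", timeout=5.0):
    """POST a semantic map and return the generated image as raw bytes.

    The URL and the output key are assumptions for this sketch.
    """
    request = urllib.request.Request(
        url,
        data=build_query(png_bytes),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        reply = json.loads(response.read().decode("utf-8"))
    return data_uri_to_bytes(reply[output_key])
```

The returned bytes are still an encoded image, so a decoding step (the role played by the external PNG library in the installation) is needed before the pixels can be drawn.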

Semantic map and image produced:

Future development

I would like to develop the work into a series of panels, in a darker, more meditative space with more control over lighting and the positions of the projector and camera. I would also like to work with original models, perhaps using TensorFlow directly or Keras, but in any case removing the dependency on an external application. I would be interested to see if the training data could be generated over a longer period of time from images or audio acquired by the work itself, in situ.

I would also like to develop the sound aspect of the work - it was a bit lost in a large noisy space, but the sound of the water falling had a strong effect which could perhaps be amplified or looped back into the assemblage in some way.

Self evaluation

In general, I think the work was successful and held its own as a coherent piece of art. I felt I had achieved a lot in a short space of time, but had only just begun to explore the possibilities of the work. The space was far too bright for the camera to track the water as planned, so the imagery was driven as much by ambient light as by the movement of the water. This was not what I had intended, but the piece did at least respond to its environment. I had little time to experiment with different tracking algorithms on the water - I could have made much more of angles and other features to create a much more obvious link between the water and the imagery being generated. Front projection would have been much better, and would have avoided the 'hotspot' from the lamp.

The imagery didn’t quite have the alien landscape quality I was hoping might result, but I think it worked as abstract imagery and did convey some organic quality and a notion of the flow of time.

I would like to reduce the number of addons, which were mainly for the HTTP communication to and from the model. I'm sure this could be done much more simply (and asynchronously) using built-in functions from openFrameworks.

I would stage this work again though - I think it has potential for further development.

References

N. Katherine Hayles (2017) 'Unthought: The Power of the Cognitive Nonconscious', University of Chicago Press.

Wendy Chun (2011) 'Programmed Visions: Software and Memory', MIT Press.

https://github.com/rychrd/waterScape.git # MA project home page

https://nvlabs.github.io/SPADE/ # the original NVIDIA project page for SPADE (semantic image synthesis with spatially-adaptive normalization)

https://github.com/agermanidis/SPADE # the Runway model

https://runwayml.com/ # Runway ML application site

https://libspng.org/ # PNG decoder, simpler than libpng

https://pypi.org/project/pynng/ # python bindings for nanomsg-next-generation

https://github.com/nariakiiwatani/ofxNNG # openframeworks addon version of NNG

http://ww1.microchip.com/downloads/en/DeviceDoc/22039d.pdf # MCP4725 specification

http://abyz.me.uk/rpi/pigpio/ # pigpio library

https://os.mbed.com/teams/The-Best/code/MCP4725/ # MCP4725 Mbed page