Mishmash us

An interactive AI that generates a new human-machine hybrid creature live, using GAN-based machine learning, a facial recognition system, and web hosting. It investigates the human-machine dynamics surrounding the core ideas of conflict, boundaries, and symbiosis.

produced by: Betty Li

Introduction

Mishmash us is a machine that breaches the human-centered machine learning (HCML) guidelines and stops developing itself to become more human-like. It imagines a human-machine hybrid bounded by liminality, collapsing into grotesque violence. In the fantasy world of Mishmash us, humans are no longer worshipped but scrutinised as raw material for its new inventions.

Trained on a mix of three image datasets containing thousands of human faces, machines, and microscopic human cells, Mishmash us presents a generative adversarial network (GAN) stuck in the entropic chaos of ambiguity: the ambiguity of not knowing the difference between a human and a machine. The outcome is a morphed creature with both human and machine features. Mishmash us generates new creatures live into an image archive grid. Every second, one of the creatures is replaced by a new generation based on the creatures currently in the archive, combined with some randomness in latent space.

Audience members can generate their own Mishmash profile by taking a selfie and entering their name on the webpage. The system randomly chooses one of the creatures in the archive grid and mishmashes the visitor's face with it using neural style transfer. Because a facial recognition system is embedded, the AI also analyses the visitor's actual facial attributes and displays them alongside the otherworldly Mishmash profile image.
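The once-per-second replacement of a grid creature can be illustrated with a short sketch. This is a minimal sketch under stated assumptions, not the project's actual code. It assumes each grid slot is stored as a StyleGAN latent vector `z` (a plain list of floats here). The helper name `next_generation` and the `noise` scale are hypothetical. A child latent is formed as a random linear blend of two creatures already in the archive, plus Gaussian noise, and it overwrites one randomly chosen slot.

```python
import random


def next_generation(archive, rng, noise=0.3):
    """Replace one grid slot with a child of two current creatures.

    archive: list of latent vectors (lists of floats), one per grid slot;
             it is modified in place.
    rng:     a random.Random instance, so runs can be made reproducible.
    noise:   hypothetical scale of the Gaussian noise added in latent space.
    Returns the index of the slot that was replaced.
    """
    parent_a, parent_b = rng.sample(archive, 2)  # two distinct slots
    t = rng.random()                             # random blend weight
    child = [
        (1 - t) * a + t * b + noise * rng.gauss(0.0, 1.0)
        for a, b in zip(parent_a, parent_b)
    ]
    slot = rng.randrange(len(archive))           # creature to be replaced
    archive[slot] = child
    return slot


if __name__ == "__main__":
    rng = random.Random(0)
    # 16 slots of 512-dimensional latents (StyleGAN's default z size).
    grid = [[rng.gauss(0.0, 1.0) for _ in range(512)] for _ in range(16)]
    for _ in range(3):  # the installation would do this once per second
        print("replaced slot", next_generation(grid, rng))
```

In a live loop, the new `archive[slot]` would be passed to the StyleGAN generator to render the replacement image. A linear blend slightly shrinks the vector's norm, and the added noise partly offsets this. Spherical interpolation is a common alternative.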

Concept and background research

The core concept of Mishmash us is to obfuscate the boundaries between humans and machines. It envisions a future in which the two entities are entangled in a decentralized power dynamic. In this future, machines have the agency to observe humans rather than serve them, and humans no longer validate their superiority by actively distinguishing themselves from machines. Mishmash us contemplates a future in which machines and humans become inseparable, as the invincible force of technology brings them together amid a shattered human-centrism.

Humanoids and CAPTCHA

The spark for the whole concept is CAPTCHA. To me, the idea of proving you are not a robot by recognizing some AI-generated squiggly numbers and letters is absurdly posthuman. At the same time, humans are striving to create the most human-like machines possible. Yet the stronger the resemblance, the harsher the dichotomization: the more machines behave like humans, the more sophisticated CAPTCHA systems must become to counter them. The paradox, the control, and the abundance of power are all driven by human desires. Mishmash us contravenes this anthropocentric worldview by granting the machine the agency to do something entirely devoid of empathy for humans. It is not trying to get inside people's heads. Instead, it scrutinizes humans to realize its own creation.

Left: 3D CAPTCHA; Right: "Kodomoroid," the very first news-reading android, created in Japan.

To be is to be: mapping the philosophy of being and becoming onto the human-machine relationship

"He was free, free in every way, free to behave like a fool or a machine, free to accept, free to refuse, free to equivocate; to marry, to give up the game, to drag this death weight about with him for years to come. He could do what he liked, no one had the right to advise him, there would be for him no Good or Evil unless he thought them into being." -- Jean-Paul Sartre, L'âge de raison (The Age of Reason) (1945)

According to the philosophical dichotomy of being and becoming, being refers to ideas that exist in the immaterial realm of pure information, while becoming refers to ever-changing, concrete material objects. Ever-developing technology aims to adapt perfectly to human ideology, which ultimately reflects on the ontological meaning of existence.

In his Theory of Forms, Plato claims that forms, or ideas, are prior to any instance of an object with a given form. He also holds that the forms exist in another realm that is more real than the everyday physical world of material objects. Jean-Paul Sartre, who believed that "existence precedes essence," states that "Imagination is not an empirical or superadded power of consciousness, it is the whole of consciousness as it realizes its freedom" (Imagination: A Psychological Critique). In other words, being carries power and implies freedom. Mishmash us puts machines on the other side of the power scale: the machine is made free to imagine, to ideologize, to celebrate being.

Technical

The setup consists of two screens. A large display shows, live, the archive-like biological images of the AI-generated Mishmash creatures. A smaller screen lets people interact with the work and generate their own Mishmash profile by morphing their face with the Mishmash creatures.

1. Finding the right techniques and models for the idea

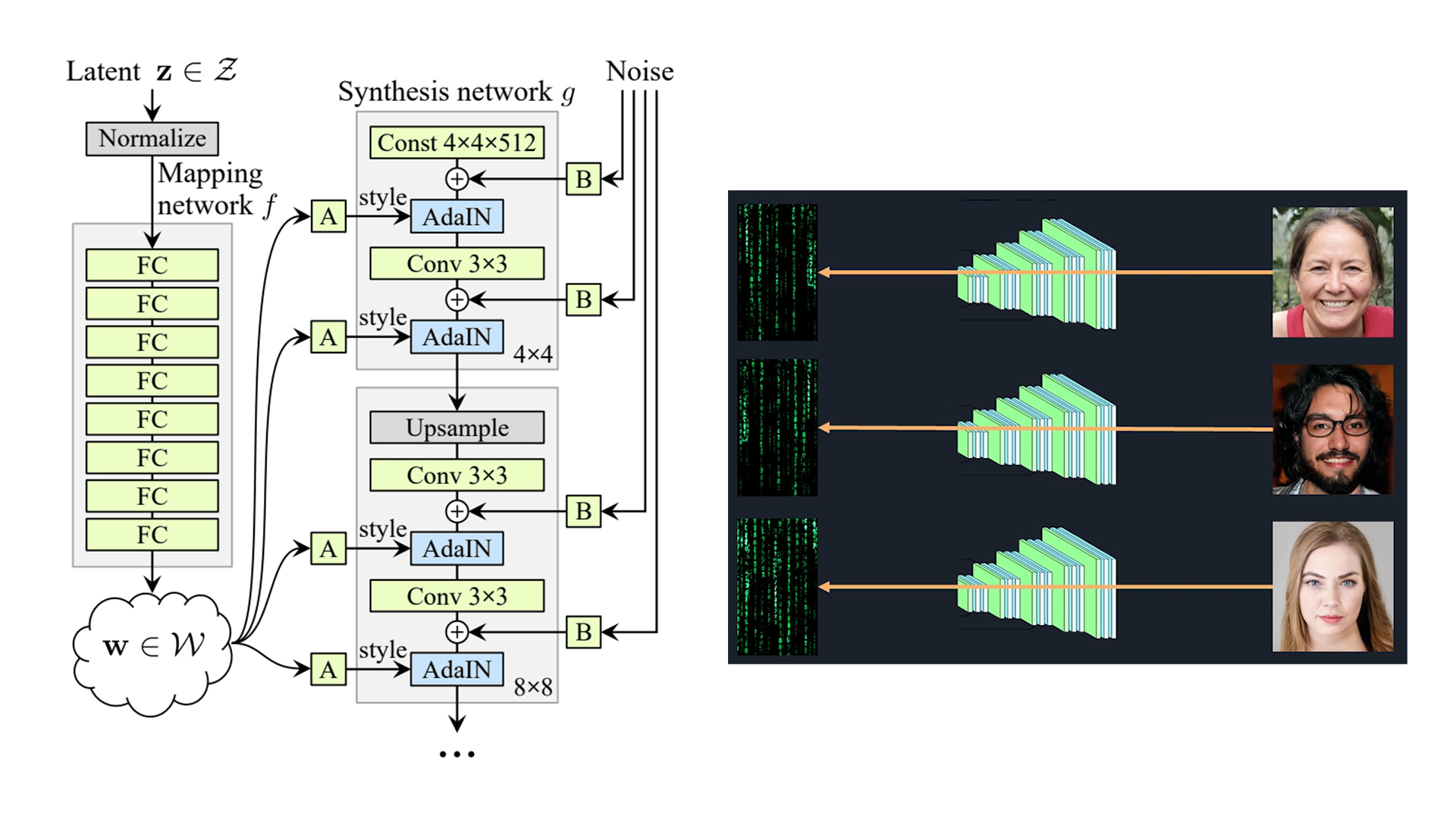

Images generated live by the AI should ideally fit my idea aesthetically (a creepy, distorted mixture of human and machine) while also showing variety in form. After some research, I settled on StyleGAN for image generation. The original StyleGAN builds on the progressive growing GAN training method. I mix-trained the model on multiple datasets so that each of them has an impact on the outcome. Its latent space also leaves plenty of room for randomness and diversity.

Left: StyleGAN framework diagram; Right: StyleGAN latent space interpretation

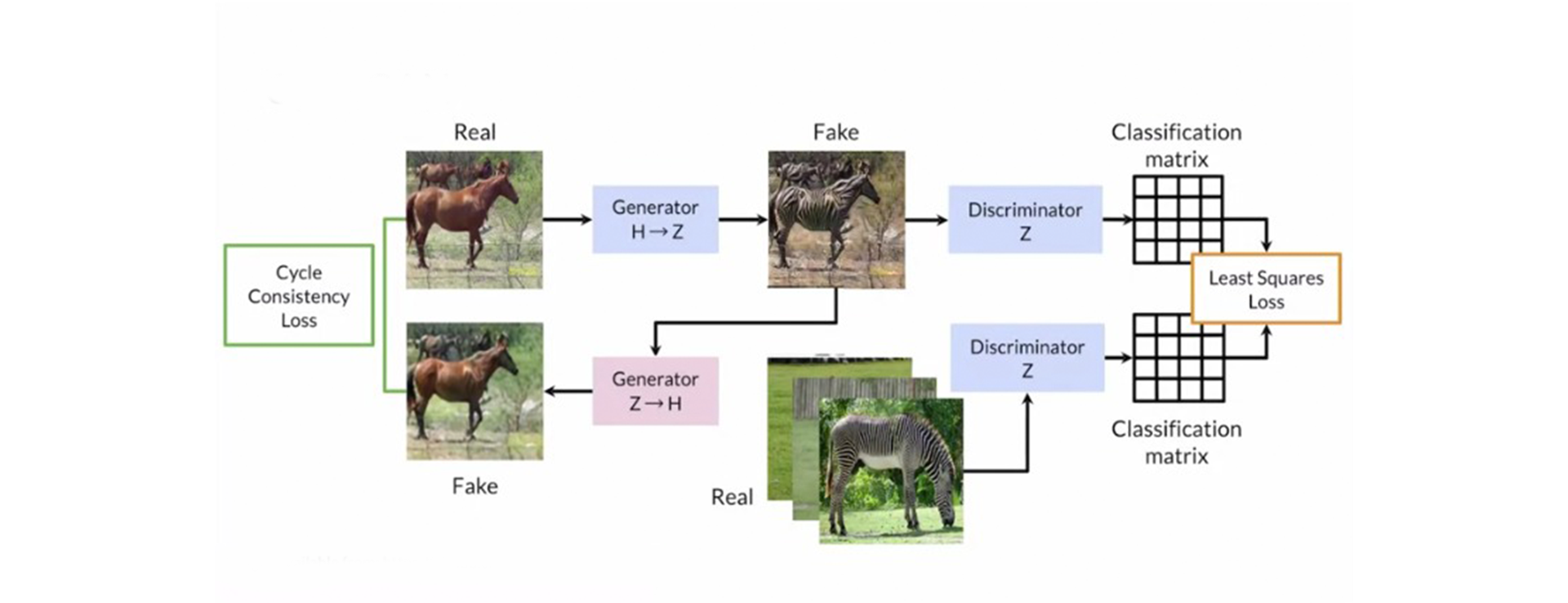

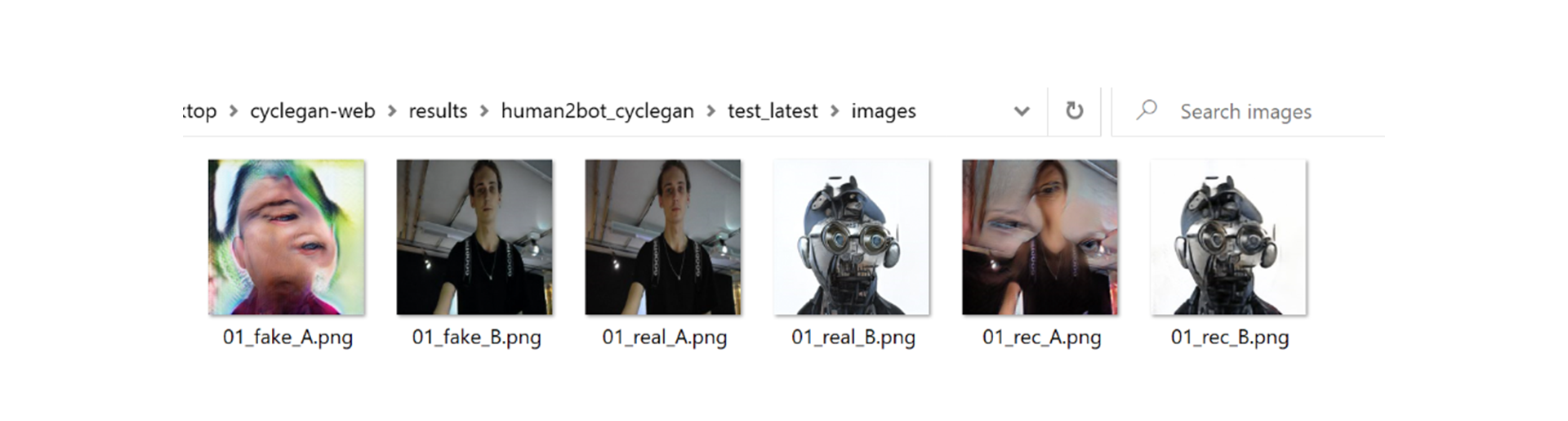
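"Playing with the latent space" in StyleGAN usually means choosing latent vectors deliberately and moving between them. Below is a minimal sketch, assuming 512-dimensional latents and using only the standard library. A seed deterministically yields a latent vector, which is why a seed number can double as a file name. Spherical interpolation (slerp) then walks smoothly from one creature to another. The helper names are hypothetical. Because this sketch uses Python's `random` module rather than NumPy, it will not reproduce the exact vectors that StyleGAN's own scripts derive from the same seed.

```python
import math
import random


def latent_from_seed(seed, dim=512):
    """Reproducible standard-normal latent vector for a seed (hypothetical helper)."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def slerp(z0, z1, t):
    """Spherical linear interpolation between latent vectors z0 and z1, 0 <= t <= 1."""
    dot = sum(a * b for a, b in zip(z0, z1))
    norm0 = math.sqrt(sum(a * a for a in z0))
    norm1 = math.sqrt(sum(b * b for b in z1))
    omega = math.acos(max(-1.0, min(1.0, dot / (norm0 * norm1))))  # angle between them
    if math.sin(omega) < 1e-9:
        # (Anti)parallel vectors: fall back to plain linear interpolation.
        return [(1 - t) * a + t * b for a, b in zip(z0, z1)]
    w0 = math.sin((1 - t) * omega) / math.sin(omega)
    w1 = math.sin(t * omega) / math.sin(omega)
    return [w0 * a + w1 * b for a, b in zip(z0, z1)]


if __name__ == "__main__":
    start, end = latent_from_seed(85), latent_from_seed(265)
    # Five evenly spaced latents; each would be fed to the generator as one frame.
    frames = [slerp(start, end, i / 4) for i in range(5)]
    print(len(frames), len(frames[0]))
```

Slerp is often preferred over straight-line blending for Gaussian latents because the intermediate vectors keep a norm closer to that of typical samples.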

The interactive webpage needs to transfer features of the Mishmash creature onto a human face, a job for which neural style transfer is well suited. I landed on CycleGAN, a GAN model for image-to-image translation. Classic image-to-image translation learns a mapping between an input image and an output image from a training set of aligned image pairs. CycleGAN instead learns to translate between two image domains without paired examples. It uses a cycle-consistency loss to keep the translation reversible.

CycleGAN architecture
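The cycle-consistency idea can be made concrete with a toy example. This is a minimal sketch, not code from the CycleGAN repository. Images are flat lists of floats, and `G` and `F` are stand-in functions for the two generators (for example, human face to creature and back). The weight `lam = 10` follows the value used in the CycleGAN paper. The full training objective also includes the two adversarial losses, which are omitted here.

```python
def l1(x, y):
    """Mean absolute difference between two equally sized flat images."""
    return sum(abs(a - b) for a, b in zip(x, y)) / len(x)


def cycle_consistency_loss(x_batch, y_batch, G, F, lam=10.0):
    """lam * (mean ||F(G(x)) - x||_1 + mean ||G(F(y)) - y||_1).

    G translates domain X -> Y and F translates Y -> X. A translation that
    can be undone exactly (F(G(x)) == x) contributes zero loss.
    """
    forward = sum(l1(F(G(x)), x) for x in x_batch) / len(x_batch)
    backward = sum(l1(G(F(y)), y) for y in y_batch) / len(y_batch)
    return lam * (forward + backward)


def shift_up(v):
    """Toy generator X -> Y: add 1 to every pixel."""
    return [a + 1 for a in v]


def shift_down(v):
    """Toy generator Y -> X: the exact inverse of shift_up."""
    return [a - 1 for a in v]


if __name__ == "__main__":
    faces = [[0.0, 0.5], [1.0, -1.0]]   # toy "domain X" images
    creatures = [[0.25, 0.75]]          # toy "domain Y" images
    print(cycle_consistency_loss(faces, creatures, shift_up, shift_down))
    print(cycle_consistency_loss(faces, creatures, shift_up, list))
```

The first call scores a perfectly reversible pair of generators, so it prints 0.0. The second uses `list` as a "generator" that fails to undo `shift_up`, so every pixel is off by 1 in both directions and it prints 20.0.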

2. Preparing the datasets and training

To obtain large datasets of machine images and microscopic human cell images, I scraped Pinterest, Flickr, and Instagram. I used Python to clean the datasets, but eventually had to hand-curate all the photos to get crisp machine learning results.
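The automated part of the cleaning can be sketched as follows. This is a hypothetical example, not the script actually used; the dataset-tools repository in the references offers ready-made utilities. The sketch keeps only common image file types. It also drops exact duplicates, which are frequent when the same picture is reposted across platforms, by hashing each file's bytes. Near-duplicates and off-topic images still need hand curation.

```python
import hashlib
import shutil
from pathlib import Path

# Hypothetical whitelist of file types kept from the scraped folders.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".webp"}


def clean_dataset(src_dir, dst_dir):
    """Copy images from src_dir to dst_dir, skipping non-images and exact duplicates.

    Duplicates are detected by the SHA-256 of the file bytes. Files are renamed
    after their hash so images scraped from different sites cannot collide.
    Returns the number of files copied.
    """
    src, dst = Path(src_dir), Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    seen = set()
    copied = 0
    for path in sorted(src.rglob("*")):
        if not path.is_file() or path.suffix.lower() not in IMAGE_EXTENSIONS:
            continue
        digest = hashlib.sha256(path.read_bytes()).hexdigest()
        if digest in seen:
            continue  # identical bytes already copied
        seen.add(digest)
        shutil.copy2(path, dst / f"{digest[:16]}{path.suffix.lower()}")
        copied += 1
    return copied
```

Hashing catches only byte-identical copies. Resized or recompressed reposts would need a perceptual hash, which is one reason manual curation remained necessary.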

3. Facial recognition

The Mishmash profile needs support from a facial recognition model with pretrained facial attribute analysis, whose results are displayed alongside the CycleGAN-generated image. DeepFace is a lightweight face recognition and facial attribute analysis (age, gender, emotion, and race) framework for Python. It does not consume much computational power, and the analysis came out quickly during live tests. It also accepts NumPy arrays or base64-encoded images, so camera images can be converted to base64 and fed directly to the package.
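Below is a minimal sketch of how a camera snapshot could be passed to DeepFace as base64, assuming the `deepface` package is installed. `DeepFace.analyze` accepts a `data:image/...;base64,` string as its image argument. Its return shape differs between releases: older ones return a dict, and newer ones return a list with one dict per face. The hypothetical `summarize` helper therefore handles both. All helper names here are illustrative only.

```python
import base64


def to_data_uri(jpeg_bytes):
    """Wrap raw JPEG bytes from the camera as a base64 data URI."""
    return "data:image/jpeg;base64," + base64.b64encode(jpeg_bytes).decode("ascii")


def summarize(result):
    """Pick the four displayed attributes out of a DeepFace.analyze result.

    Newer DeepFace releases return a list (one dict per face) and report the
    gender label under 'dominant_gender'; older ones return a single dict
    whose 'gender' value is already the label.
    """
    if isinstance(result, list):
        result = result[0]  # the Mishmash profile shows only the first face
    return {
        "age": result["age"],
        "gender": result.get("dominant_gender", result.get("gender")),
        "emotion": result["dominant_emotion"],
        "race": result["dominant_race"],
    }


def analyze_snapshot(jpeg_bytes):
    """Run DeepFace on a camera snapshot (requires the deepface package)."""
    from deepface import DeepFace  # heavy dependency, imported only when needed
    result = DeepFace.analyze(to_data_uri(jpeg_bytes),
                              actions=["age", "gender", "emotion", "race"])
    return summarize(result)
```

DeepFace raises an error by default when no face is detected. A live installation would likely catch that error and ask the visitor to retake the photo.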

4. Building the environment for the machine learning code

StyleGAN and CycleGAN run on two different machine learning platforms, TensorFlow and PyTorch respectively, which means the program has to switch between them. Both have very specific environment requirements. Running them live also requires specific high-end NVIDIA GPU models with CUDA 10.0 and cuDNN installed, which made things ten times more complicated for me.
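One common way to keep TensorFlow and PyTorch apart is to give each model its own conda environment and run it in a separate process. The sketch below assumes conda is installed and uses `conda run -n <env>` to select the interpreter. The environment names, `network.pkl`, `snapshots`, and `mishmash_cyclegan` are hypothetical. The script arguments follow the usage examples in the READMEs of the two repositories listed in the references, and they should be checked against the installed versions.

```python
import subprocess

# Hypothetical environment names, one per framework.
STYLEGAN_ENV = "stylegan-tf"      # TensorFlow 1.x with CUDA 10.0 / cuDNN
CYCLEGAN_ENV = "cyclegan-torch"   # PyTorch


def env_command(env_name, script, *args):
    """Build a command that runs `script` with the Python of conda env `env_name`."""
    return ["conda", "run", "-n", env_name, "python", script, *args]


def run_in_env(env_name, script, *args):
    """Run a script in its own process so TF and PyTorch never share an interpreter."""
    return subprocess.run(env_command(env_name, script, *args),
                          check=True, capture_output=True, text=True)


if __name__ == "__main__":
    # Print (rather than run) the two kinds of command the installation would issue.
    print(env_command(STYLEGAN_ENV, "generate.py", "--outdir=out", "--trunc=1",
                      "--seeds=85", "--network=network.pkl"))
    print(env_command(CYCLEGAN_ENV, "test.py", "--dataroot", "snapshots",
                      "--name", "mishmash_cyclegan", "--model", "test", "--no_dropout"))
```

Separate processes cost some start-up time per call. However, they avoid the two frameworks competing over CUDA library versions inside a single interpreter.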

5. JavaScript and Python

To display the generated outcomes on the webpages, JavaScript needs to request and fetch data from Python. I used Flask to package the ML algorithms as an API that sends results to JavaScript, and I developed the Python side in PyCharm. For the interactive webpage, I used Vue.js, a progressive framework for building web interfaces and single-page apps, and developed it in HBuilder.
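Below is a minimal sketch of the Flask side, assuming Flask 2.x (for the `app.get` and `app.post` shortcuts). The route paths, file names, and JSON field names (`image`, `name`) are hypothetical. The call into CycleGAN and DeepFace is left as a comment. Flask is imported inside `create_app`, so the file-reading helper also works without Flask installed.

```python
import base64
from pathlib import Path

ARCHIVE_LIST = Path("archive_paths.txt")  # hypothetical list written by the StyleGAN loop
SNAPSHOT = Path("snapshots/latest.jpg")   # hypothetical; overwritten on every snap


def latest_paths(list_file, limit=16):
    """Return the last `limit` non-empty paths from the txt list, oldest first."""
    list_file = Path(list_file)
    if not list_file.exists():
        return []
    lines = [line.strip() for line in list_file.read_text(encoding="utf-8").splitlines()]
    return [line for line in lines if line][-limit:]


def create_app():
    """Build the Flask app exposing the archive list and the snapshot upload."""
    from flask import Flask, jsonify, request

    app = Flask(__name__)

    @app.get("/api/archive")
    def archive():
        # Polled by the archive display to learn which images to show.
        return jsonify(paths=latest_paths(ARCHIVE_LIST))

    @app.post("/api/snap")
    def snap():
        data = request.get_json()
        # The webpage sends the canvas as a data URL: "data:image/jpeg;base64,...."
        _, encoded = data["image"].split(",", 1)
        SNAPSHOT.parent.mkdir(parents=True, exist_ok=True)
        SNAPSHOT.write_bytes(base64.b64decode(encoded))  # replaces the previous photo
        # The CycleGAN translation and DeepFace analysis of SNAPSHOT would run here.
        return jsonify(name=data.get("name", ""), snapshot=SNAPSHOT.as_posix())

    return app
```

Calling `create_app().run()` starts a development server. The Vue page would then `fetch` `/api/archive` on a timer and POST the canvas data URL to `/api/snap`. If the page and the API are served from different ports, CORS has to be enabled for those requests to succeed.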

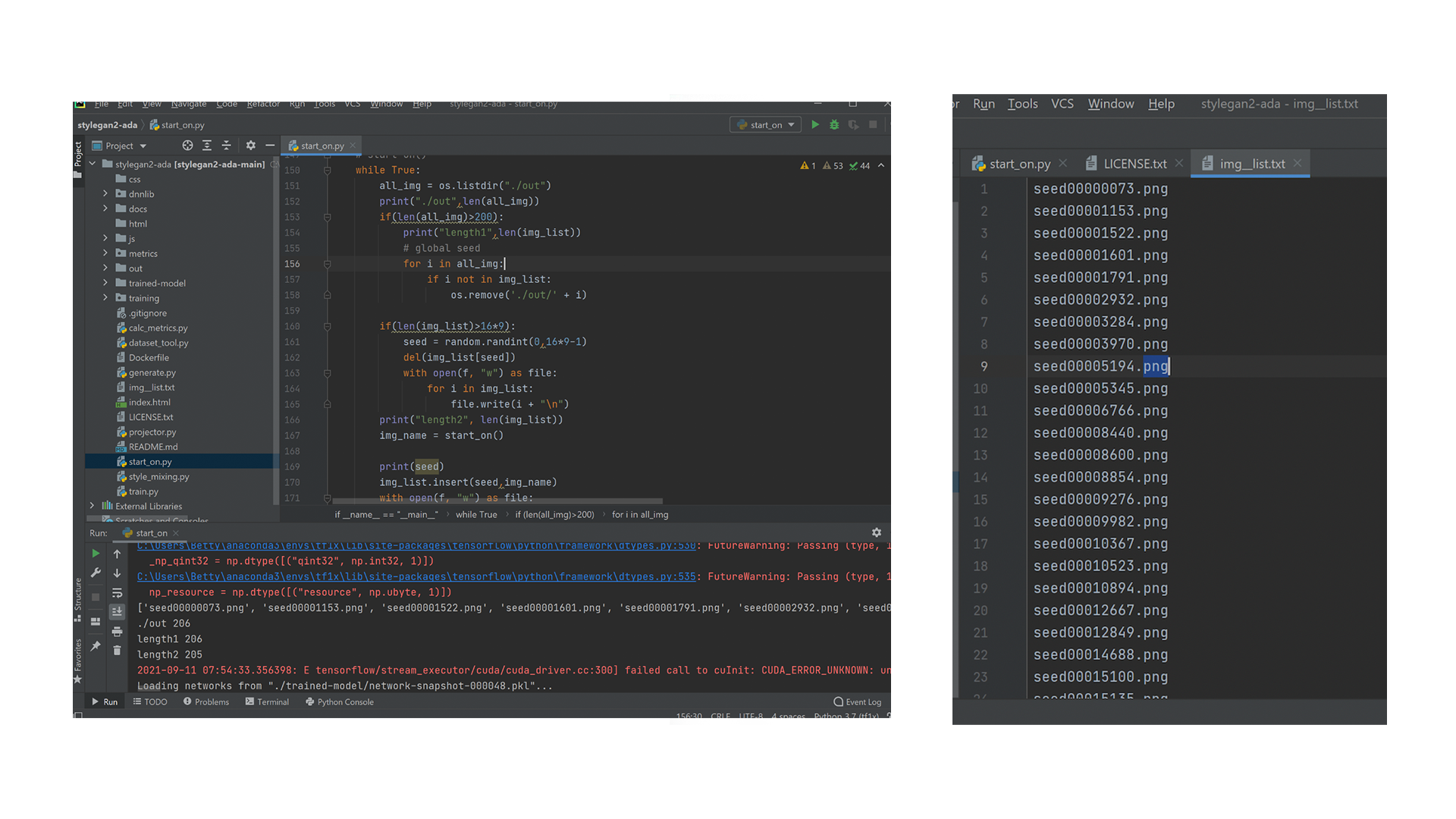

In the live-generated image archive, each image corresponds to a random seed in the latent space, so I used the seed number as the file name. The file paths of the generated images are written to a txt file, which JavaScript then fetches. On the webpage, the canvas draws an image from the camera when the user hits the "snap" button. I then convert the image to Base64, save it to a local folder, and pass it through Flask to the ML systems. Every time the user takes a new photo, the old file is replaced.

Left: StyleGAN generating seed images live; right: the returned image paths

The newest camera image, saved locally and processed by CycleGAN for image-to-image translation
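The seed-as-file-name bookkeeping can be sketched as follows. This sketch assumes the generator saves one PNG per seed, using the zero-padded `seedNNNN.png` pattern that StyleGAN2-ADA's `generate.py` also uses. It also assumes each new path is appended to a plain-text list that the webpage polls. `seed_filename` and `record_generation` are hypothetical helpers.

```python
from pathlib import Path


def seed_filename(seed):
    """File name for the image generated from a given latent seed."""
    return f"seed{seed:04d}.png"


def record_generation(seed, outdir, list_file):
    """Append the new image's path to the txt list fetched by JavaScript.

    Paths are written with forward slashes so the webpage can use them
    directly as relative URLs. Returns the image path.
    """
    path = Path(outdir) / seed_filename(seed)
    with open(list_file, "a", encoding="utf-8") as fh:
        fh.write(path.as_posix() + "\n")
    return path
```

Because the seed fully determines the latent vector, the file name alone is enough to regenerate any archived creature later.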

Future Development

A few people came up to me during the show and asked whether their Mishmash profile would be added to the image archive. I think this is a very interesting way to build a tighter connection between the audience and the trained AI. Interactivity and engagement improve when the audience can see their own and other participants' profiles on the display. It also adds a different layer of communication among audience members themselves, since the machine might pick a Mishmash creature generated from a previous visitor and morph it with a new one.

I also want to train my own facial recognition model with customized attribute analysis that produces richer information. During the show, I noticed people having so much fun looking at those attributes, whether they were terribly wrong or shockingly accurate. They wanted to know how the machine sees their faces. The four attributes currently in the system (age, gender, ethnicity, and emotion) are interesting but a bit generic. I plan to make these attributes more fictional and absurd to suit the theme of an artificial machine-human hybrid (engine power, speed, weight, etc.).

Last but not least, I observed that some people, especially less tech-savvy visitors and older age groups, were unsure how to interact with the webpage interface. It would be more intuitive and user-friendly if the camera captured a photo automatically when a face is detected and then fed the image to the AI for further processing.
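The proposed auto-capture could be driven by a small piece of state logic on top of any per-frame face detector. This is a hypothetical sketch, written in Python for consistency, although in the installation it would run in the browser. A photo is taken once a face has been detected in `hold` consecutive frames. A `cooldown` then stops the same visitor from triggering a burst of snapshots. The default frame counts are illustrative: roughly half a second and three seconds at 30 frames per second.

```python
class AutoCapture:
    """Decide from per-frame face detections when to take a photo automatically."""

    def __init__(self, hold=15, cooldown=90):
        self.hold = hold          # consecutive face frames required before capturing
        self.cooldown = cooldown  # frames to ignore after a capture
        self.streak = 0           # current run of frames with a face
        self.wait = 0             # frames left in the cooldown

    def update(self, face_detected):
        """Feed one frame's detection result; return True when a photo should be taken."""
        if self.wait > 0:
            self.wait -= 1
            return False
        # A single frame without a face resets the streak.
        self.streak = self.streak + 1 if face_detected else 0
        if self.streak >= self.hold:
            self.streak = 0
            self.wait = self.cooldown
            return True
        return False
```

Requiring several consecutive detections filters out passers-by and one-frame false positives. It also gives the visitor a moment to settle in front of the camera.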

Self evaluation

I am really satisfied with what I achieved in this project overall. Building and training the right ML models takes dedication, because building datasets from scratch requires an acute sense of how to fetch and select information. At one point, setting up the complicated ML environment for running the AIs felt almost impossible, but I am glad I figured it out. The most difficult part was probably writing the Python and JavaScript code and getting them to exchange data back and forth alongside the ML. There were lots of compatibility issues in getting it to run, and finding the most efficient solution for live generation took a great deal of trial and error.

But it felt so rewarding to see people enjoying my project during the show. They were eager to know the concept behind the work, and they asked many questions after my introduction. They were amused, surprised, and confused by the outcomes, and they took photos of the Mishmash profiles. I am very grateful for all the support and constructive advice I received along the way; I could not possibly have finished the project without it. Not only did I improve my technical skills, but I also learnt a lot about exhibition planning, group work, and installation. I also came to understand and improve my working habits. I look forward to developing this project further!

References

[Ideation]

https://www.techradar.com/news/captcha-if-you-can-how-youve-been-training-ai-for-years-without-realising-it

https://futurism.com/the-most-life-life-robots-ever-created

https://philosophynow.org/issues/61/Being_and_Becoming

https://yourstory.com/2017/06/jean-paul-sartre-philosophy-existentialism-freedom/amp

[Code]

https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix

https://github.com/NVlabs/stylegan2-ada

https://github.com/iperov/DeepFaceLab

https://github.com/dvschultz/dataset-tools

https://www.youtube.com/watch?v=VRMxvIKbFow&t=1509s

https://www.youtube.com/playlist?list=PLWuCzxqIpJs8ViuBIUtAk-dsAtdrApYoy

https://flask.palletsprojects.com/en/2.0.x/

https://vuejs.org/v2/guide/

https://towardsdatascience.com/talking-to-python-from-javascript-flask-and-the-fetch-api-e0ef3573c451