Face-off: The Challenges of Facial Recognition Technology in an era of Cosmetic Surgical Modification

produced by: Timothy Hyunsoo Lee

Introduction

In the last couple of decades, there has been a boom in cosmetic alterations to the face: the total number of plastic surgeries increased by over 160% from 1997 to 2008 (Singh et al., 2010). In 2012, more than 14.6 million plastic surgery procedures were performed in the United States, of which almost ten million were exclusively facial treatments (Nappi et al., 2016). The popularity of cosmetic plastic surgery in recent decades stems from many factors, including the availability and advancement of surgical technologies, the speed with which these procedures are performed, and their increasing affordability to the general public (Singh et al., 2010).

A significant implication of the rise of facial modification is the challenge it poses to emerging facial recognition technology. Singh et al. (2010) showed that the facial recognition models of the time were unable to handle plastic surgeries classified as global, as these alter the nodal points of the face needed to match an identity before and after an operation. Algorithms have been developed for specific regions of the face, but the computational time required to match a full face renders them impractical for real-time use. Some of the more promising research takes advantage of the fact that a few features of the face remain unaltered during facial plastic surgery and are not easily doctored (Dadure et al., 2018). Sabharwal and Gupta (2019) showed that combining regional cues based on the local textures of the face with the more robust Principal Component Analysis (PCA) yielded promising recognition for both local and global surgical treatments; however, the rate of positive matching was still only 87%. As such, much advancement is still needed before facial recognition technology can accurately and consistently identify faces that have undergone facial plastic surgery.
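To make the idea of combining regional cues more concrete, the Python sketch below fuses per-region similarity scores into a single match score. It is only an illustration under stated assumptions: the region names, the toy feature vectors, the weights, and the use of cosine similarity are all hypothetical and are not taken from Sabharwal and Gupta's method.

```python
from math import sqrt


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an empty descriptor as "no evidence of a match"
    return dot / (norm_a * norm_b)


def fused_score(before, after, weights):
    """Weighted average of the per-region similarity scores."""
    total_weight = sum(weights[region] for region in before)
    weighted_sum = sum(
        weights[region] * cosine_similarity(before[region], after[region])
        for region in before
    )
    return weighted_sum / total_weight


# Hypothetical toy descriptors; a real system would derive these from
# texture features of each facial region.
before = {"eyes": [1.0, 0.0], "nose": [1.0, 0.0], "mouth": [0.0, 1.0]}
after = {"eyes": [1.0, 0.0], "nose": [0.0, 1.0], "mouth": [0.0, 1.0]}  # nose altered

equal_weights = {"eyes": 1.0, "nose": 1.0, "mouth": 1.0}
nose_discounted = {"eyes": 1.0, "nose": 0.5, "mouth": 1.0}  # assumed weighting

print(fused_score(before, after, equal_weights))
print(fused_score(before, after, nose_discounted))
```

In this toy case, giving less weight to the region most likely to have been operated on raises the fused score, which is the intuition behind relying on features that surgery tends to leave untouched.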

For an in-depth introduction to facial recognition technology (FRT), the challenges cosmetic surgery poses to FRT, and how artists are integrating these concerns into their practices, we invite you to read our longform text here.

Background research

The implications of improving facial recognition technology and image recognition algorithms in light of expanding plastic surgery go beyond worries about sinister and fraudulent activities: the association between our face and our identity has been solidified by social constructs, cultural influences, and the institutions we live in (Cole, 2012). An investigation of faces invariably leads to the concept of self; researchers and theorists have long argued over the importance of the face to self-identity, and the extent to which the face of an individual is a public or private phenomenon, situation-specific or context-independent (Spencer-Oatey, 2007). Simon (2004) identifies a number of functions of identity: it helps to provide people with a sense of belonging and uniqueness; it helps people locate themselves in their social worlds; and its many facets help provide people with positive self-evaluations. But where does the face tie into our identity? Many distinguish identity from the face as individual versus relational; that is, identity is contained within an individual, whereas the face is a relational phenomenon (Spencer-Oatey, 2007). As Goffman (1967) argues, “the term face may be defined as the positive social value a person effectively claims for himself by the line others assume he has taken during a particular contact.” As a result, while face and identity are similar in that both contribute to the “self-image” and have multiple attributes, the face is associated only with positively evaluated attributes that the individual wants others to acknowledge (and conversely, with negatively evaluated attributes that the individual does not want others to ascribe to them) (Spencer-Oatey, 2007).

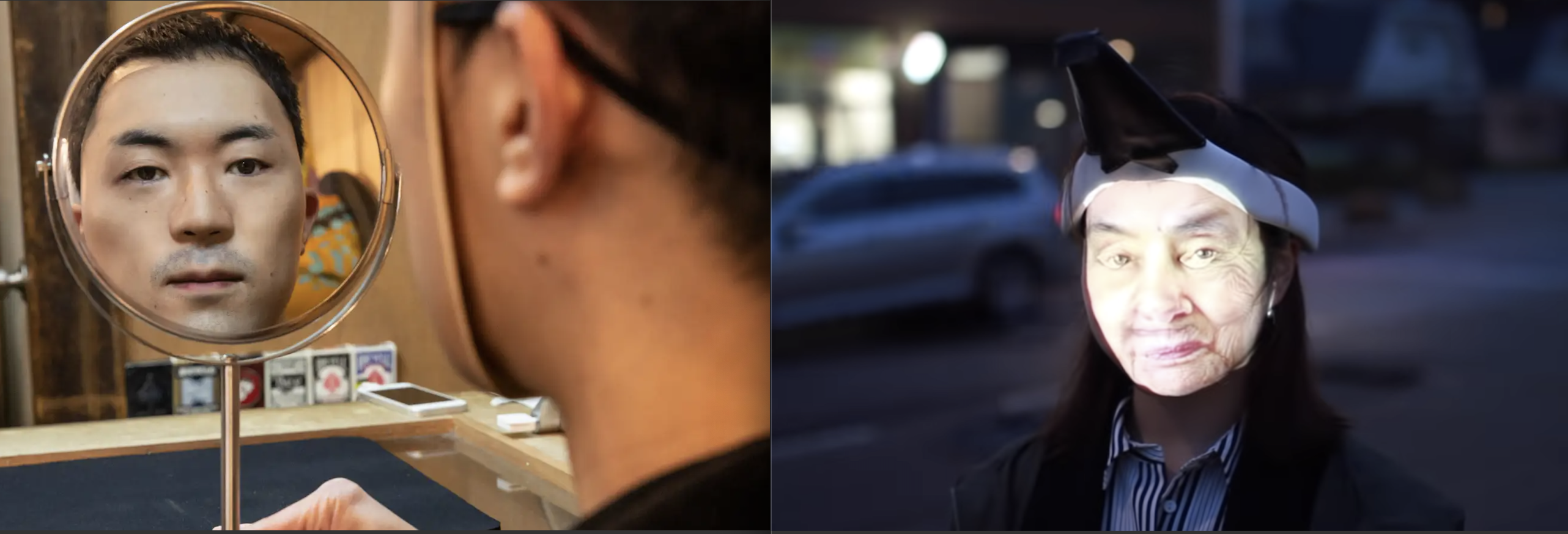

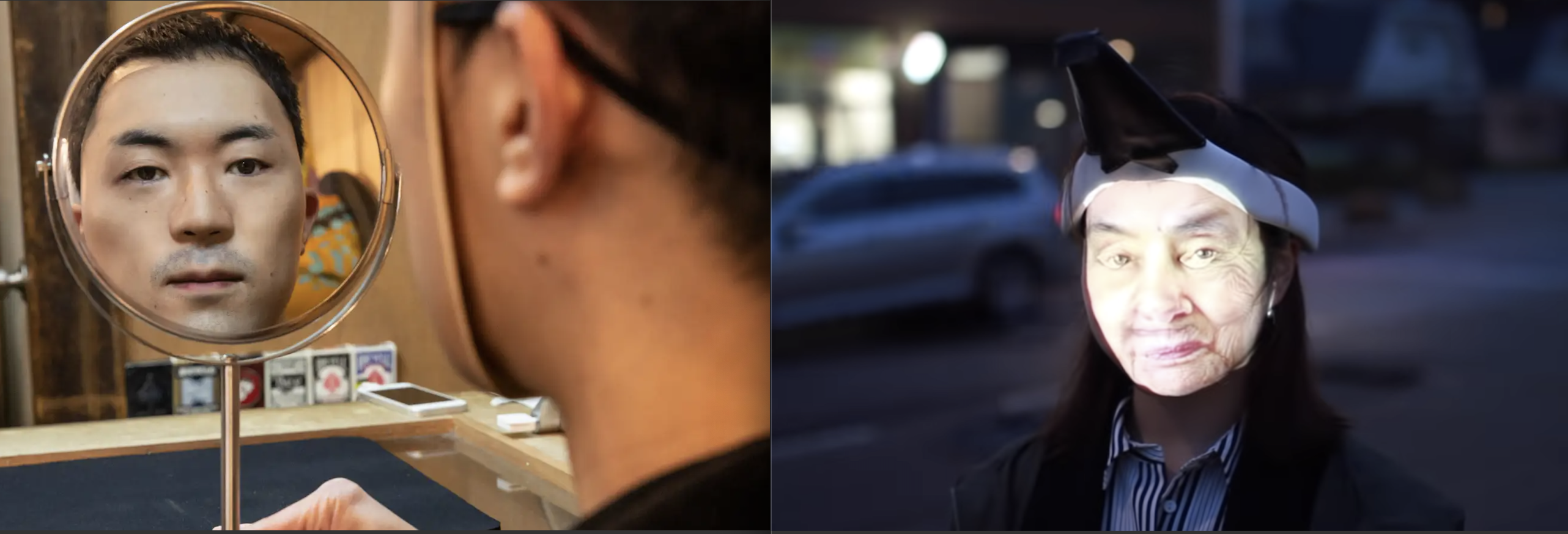

(left): Kamenya Omote's “That Face” series; (right): Jing-Cai Liu's wearable facial projection

The relationship between a person’s face and their sense of self has long been explored by artists and activists, who contemplate not only the social but also the political implications of tying our faces to our identities. Artist and specialty mask maker Kamenya Omote produces three-dimensional face masks of strangers’ faces to sell (Hyperallergic, 2020). These realistic masks are created by printing onto a three-dimensional form modelled on the sitter’s face. Omote’s “That Face” series taps into society’s preoccupation with our likeness, and creates masks in an era where you can, through plastic surgery, literally buy and sell a face. He sees the long-term implications of developing technologies that enable us to create an identical replica of someone’s face: in an interview with Hyperallergic (2020), he said that “if face replication becomes common, it would be interesting to be able to save a face from your youth, for example, or be able to change your face at will. But I’m more interested in what happens to people’s bodies, because the face and the body are inseparable.” Likewise, industrial designer Jing-Cai Liu developed a wearable projector that projects different faces onto the wearer’s own face, as a response to the use of mass surveillance by the retail and advertising industries to target marketing at the customers they track. The project explores themes of a dystopian future, provoking debate about the “emerging future” and proposing ways to maintain the privacy of our identities (Liu, 2017). By superimposing another person’s face on top of the wearer’s own, Liu claims, the wearer’s identity is guarded and they are protected from “privacy violations” (Liu, 2017).

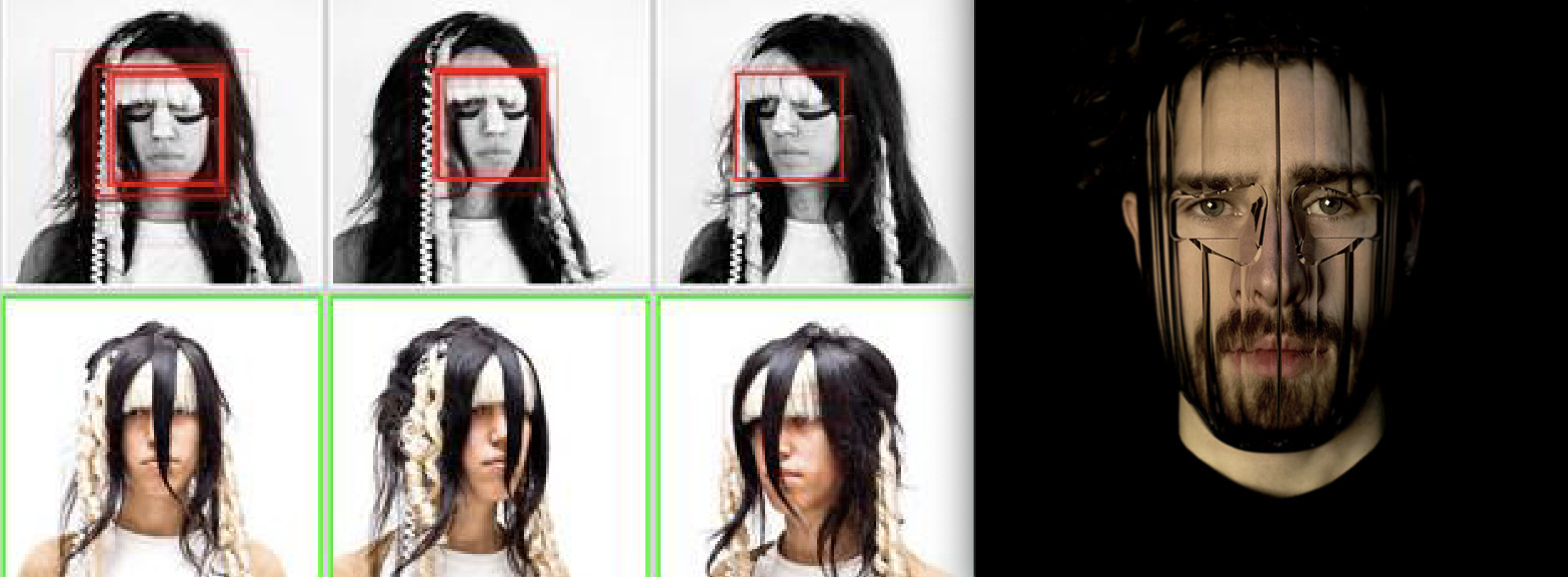

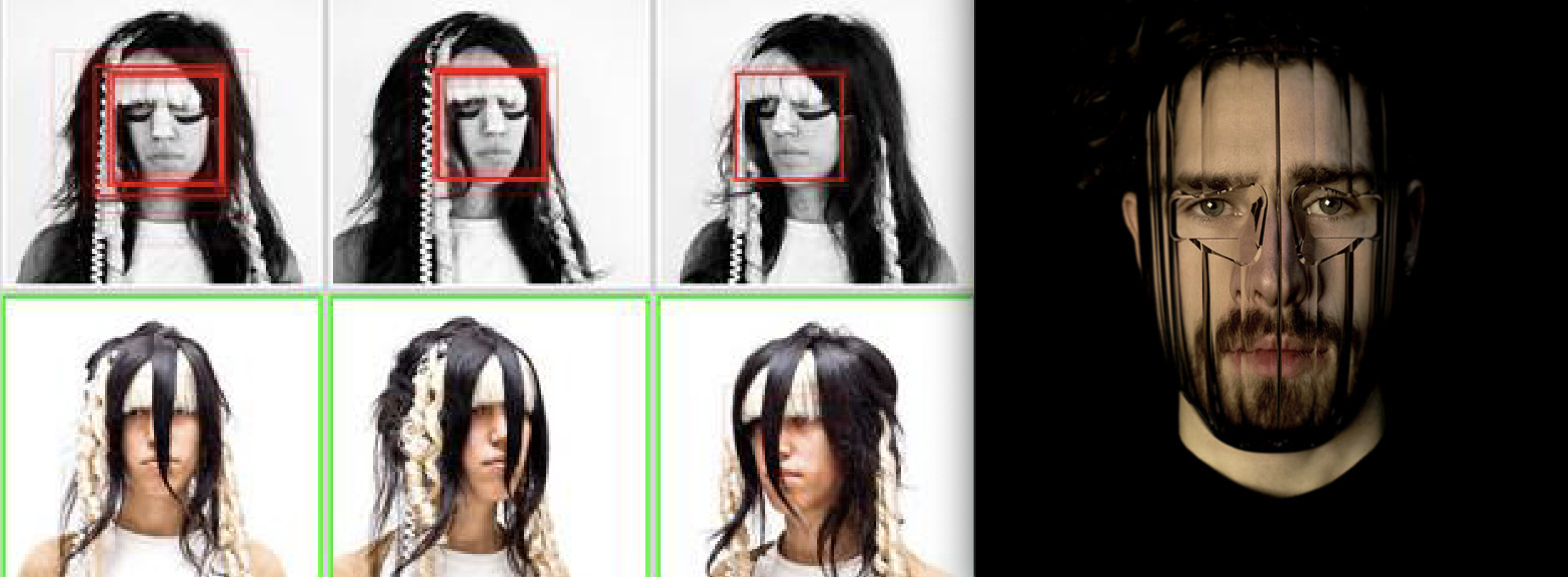

(left): Adam Harvey's CV Dazzle; (right): Jip van Leeuwenstein’s “Surveillance Exclusion”

Although Omote and Liu are concerned with hiding the actual physical characteristics of the face, Adam Harvey’s CV Dazzle looks to evade facial detection software by altering the very landmarks of the face that are often modified in plastic surgery (Harvey, 2010). Particularly in the United States, surveillance has increased over the past few decades, and the algorithms behind it are noted for their bias against Black and brown communities (Benjamin, 2019). As a result, many activists and artists have sought to use CV Dazzle as a form of protection against the surveillance state that they see their countries becoming. The inspiration behind CV Dazzle, or Computer Vision Dazzle, came from a type of WWI ship camouflage called Dazzle, which broke the visual continuity of a battleship with blocks of forms and patterns to conceal the ship’s orientation and size from enemies (Harvey, 2018). By drawing striking and bold patterns on the face, CV Dazzle aims to confuse facial recognition software by altering the expected dark and light areas of the face that most computer algorithms look for. Similarly, Dutch designer Jip van Leeuwenstein’s “Surveillance Exclusion” project is a series of fitted clear masks that are formed like a lens (van Leeuwenstein, 2016). These masks fracture the image of the face to prevent certain facial recognition software from detecting it; as van Leeuwenstein says, “mega databanks and high resolution cameras stock hundreds of exabytes a year. But who has access to this data? / By wearing this mask formed like a lens it is possible to become unrecognizable for facial recognition software.” However, he understands the significance of our face in forming an identity: the masks are transparent so that all the facial features, while distorted, remain visible to those around the wearer. Although, like CV Dazzle, this work renders the user more conspicuous, it maintains to a certain degree the integrity of the wearer’s face so that they will “not lose [their] identity and facial expressions” (van Leeuwenstein, 2016).
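The “expected dark and light areas” that CV Dazzle disrupts can be illustrated with a Haar-like feature of the kind used by Viola-Jones style detectors, which compare the brightness of neighbouring rectangles. The Python sketch below is a toy illustration only: the 4x4 patches, the pixel values, and the threshold are invented for the example, and whether any particular product works this way is an assumption.

```python
def region_sum(image, top, left, height, width):
    """Sum of pixel intensities inside a rectangle of a 2D list image."""
    return sum(
        image[row][col]
        for row in range(top, top + height)
        for col in range(left, left + width)
    )


def eye_band_feature(image, top, left, band_height, width):
    """Two-rectangle Haar-like feature: lower band minus upper band.

    A large positive value means the upper band (eyes) is darker than
    the lower band (cheeks), the pattern such a detector expects.
    """
    upper = region_sum(image, top, left, band_height, width)
    lower = region_sum(image, top + band_height, left, band_height, width)
    return lower - upper


# Toy 4x4 greyscale patches (0 = black, 255 = white); values are illustrative.
plain_face = [
    [50, 50, 50, 50],
    [50, 50, 50, 50],
    [200, 200, 200, 200],
    [200, 200, 200, 200],
]
dazzled_face = [
    [200, 50, 200, 50],
    [200, 50, 200, 50],
    [50, 200, 50, 200],
    [50, 200, 50, 200],
]

THRESHOLD = 600  # hypothetical cut-off for "looks like an eye band"

for name, patch in [("plain", plain_face), ("dazzled", dazzled_face)]:
    response = eye_band_feature(patch, 0, 0, 2, 4)
    print(name, response, response >= THRESHOLD)
```

Painting light patches over the eye band and dark patches over the cheeks leaves the overall amount of light and dark unchanged, yet the contrast the feature relies on disappears.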

Artefact

My artefact explores day-to-day, non-permanent methods of facial modification and how they affect our use of facial recognition software. My interest in this topic stemmed from my final project in Term I of Computational Arts-based research and theory, where I used makeup and markings on the face inspired by CV Dazzle to see how popular facial recognition software (such as Snapchat and Instagram) responded. As an extension of this line of work, I am interested in the recognition of the same face after it has changed, an interest prompted by having recently switched over to the newest model of the iPhone. One of the crowning features of the new phone is a privacy option called Face ID, which allows an individual to access their phone, transfer money, and otherwise pass security checks through facial recognition. In a society that is increasingly open to facial modification and gender fluidity, the reliance on the face as a biometric identifier becomes increasingly challenging, and I am interested in exploring the limitations of a piece of software, Face ID, that is currently used by millions of people around the world. I first noticed the limitations of the current software while wearing a mask: the phone could not recognize my face and remained locked. I tried other permutations of masking to see how the phone would react, with interesting results: the phone would not unlock if I closed my eyes, but would unlock while I was wearing sunglasses. According to the Apple website, Face ID uses a TrueDepth camera system to accurately map the geometry of a person’s face. The camera “captures accurate face data by projecting and analyzing over 30,000 invisible dots to create a depth map of your face, and also captures an infrared image of your face. A portion of the neural engine…transforms the depth map and infrared image into a mathematical representation and compares that representation to the enrolled facial data” (Apple, 2020).
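Apple does not publish how the “mathematical representation” is compared to the enrolled data, but the general idea of matching a new capture against a stored template can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the three-number vectors, the use of Euclidean distance, and the threshold value are hypothetical and are not Face ID's actual representation or matching rule.

```python
from math import dist  # Euclidean distance, available from Python 3.8


def is_match(enrolled, probe, max_distance):
    """Accept the probe if it lies close enough to the enrolled template."""
    return dist(enrolled, probe) <= max_distance


# Hypothetical face representations (real ones would be far larger).
enrolled = [0.20, 0.80, 0.50]
same_person = [0.25, 0.75, 0.50]   # small day-to-day variation
other_person = [0.90, 0.10, 0.40]  # a very different face

MAX_DISTANCE = 0.3  # assumed threshold, chosen only for this example

print(is_match(enrolled, same_person, MAX_DISTANCE))
print(is_match(enrolled, other_person, MAX_DISTANCE))
```

A threshold of this kind is what makes recognition a matter of degree: small changes stay inside the accepted distance, while larger changes to the face push the new capture outside it.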

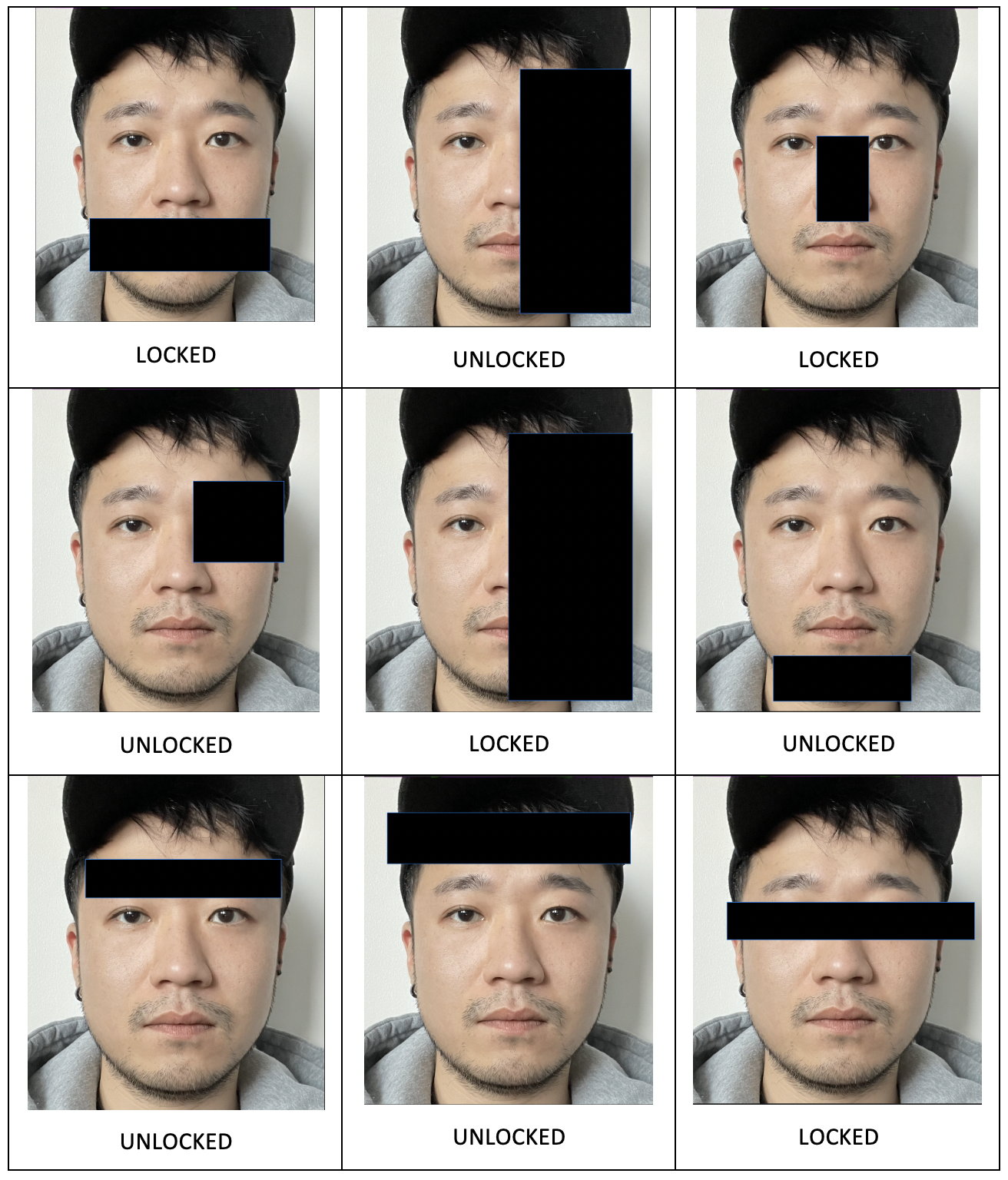

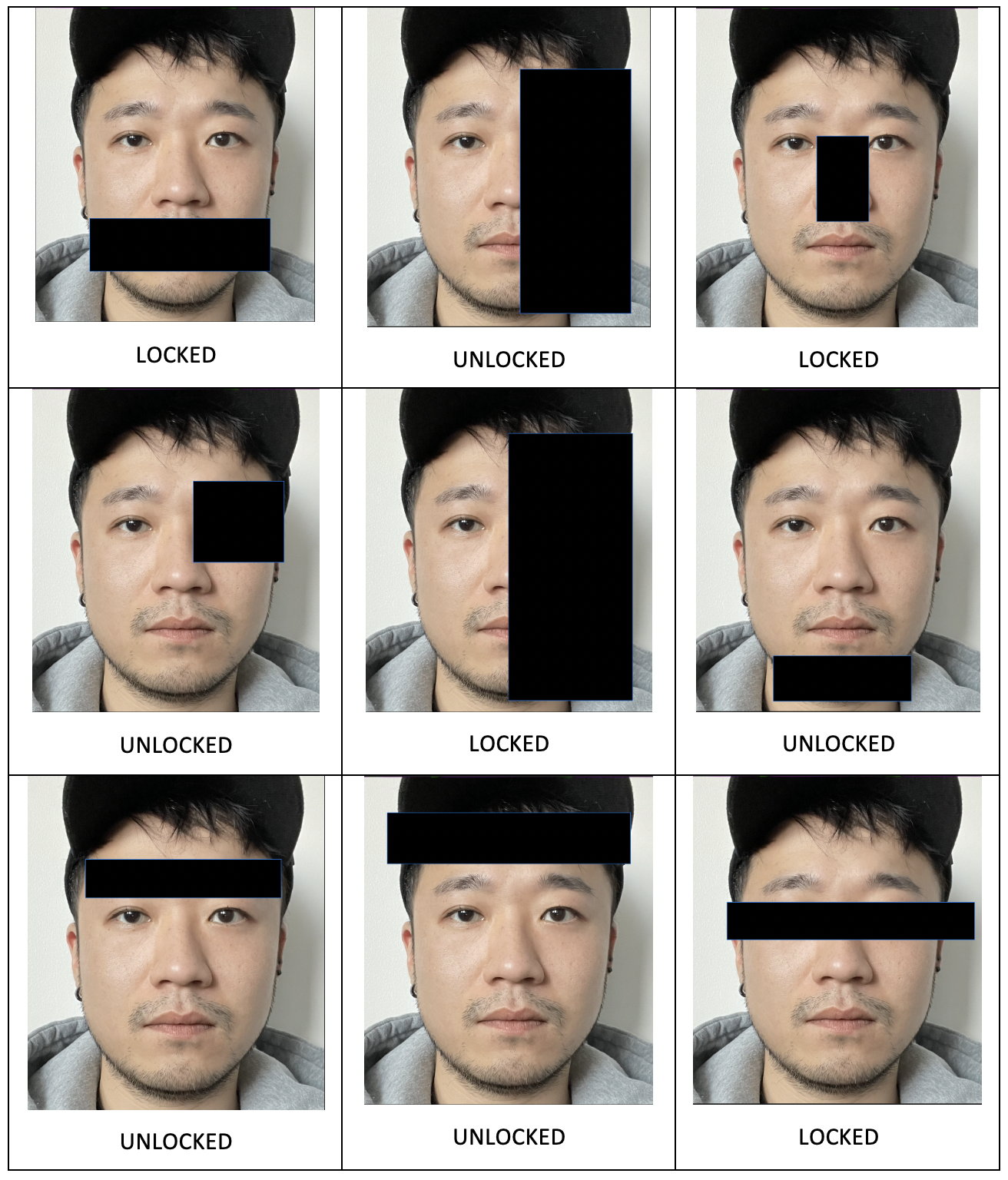

I wanted to test some other limitations of Face ID, so I used a black rectangle to mask parts of my face to see if my phone would still unlock. For consistency, I considered a feature necessary if the phone did not unlock after five attempts with that feature masked.

It appeared that more than 50% of the face needed to be visible for the phone to unlock, which may be because the algorithm tracks the 30,000 points projected onto the face and needs at least half of them for proper recognition. Certain facial features, such as the eyebrows, chin, and forehead, could be concealed without disrupting face detection. Some of the key landmarks of the face included the nose, the mouth, and the eyes.
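The five-attempt rule used in this test can be written down as a small Python helper. The sketch assumes each masked region has a log of unlock attempts recorded as True (unlocked) or False (stayed locked); the region names and logs below are placeholders for illustration and are not the recorded results of the experiment.

```python
MAX_ATTEMPTS = 5  # number of attempts used in the test described above


def is_necessary(unlock_results):
    """A masked feature counts as necessary if all five attempts failed."""
    considered = unlock_results[:MAX_ATTEMPTS]
    return len(considered) == MAX_ATTEMPTS and not any(considered)


# Placeholder logs: True means the phone unlocked on that attempt.
trial_log = {
    "region_a": [False, False, False, False, False],
    "region_b": [False, True],  # testing stopped once the phone unlocked
}

necessary = {region: is_necessary(results) for region, results in trial_log.items()}
print(necessary)
```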

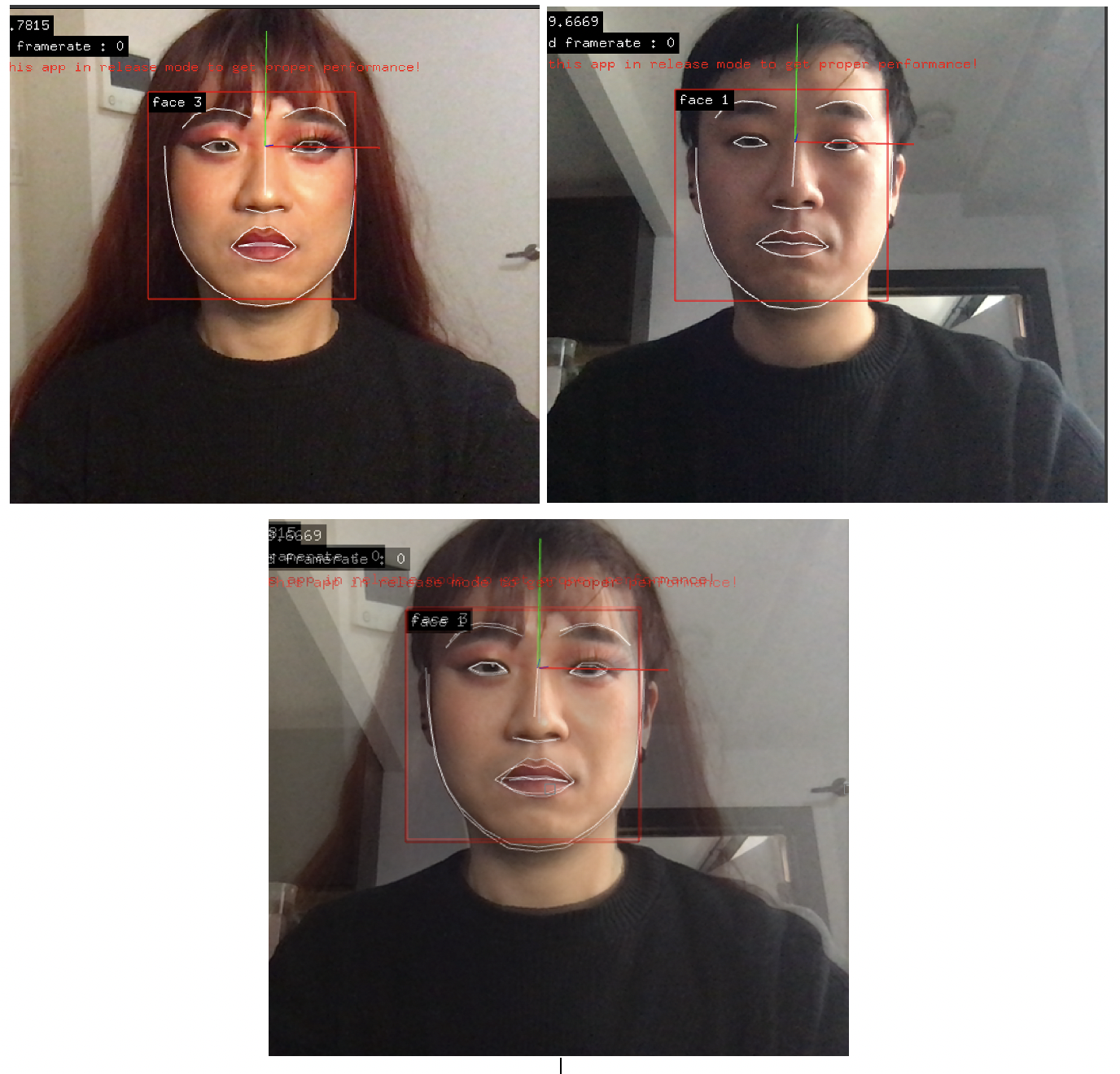

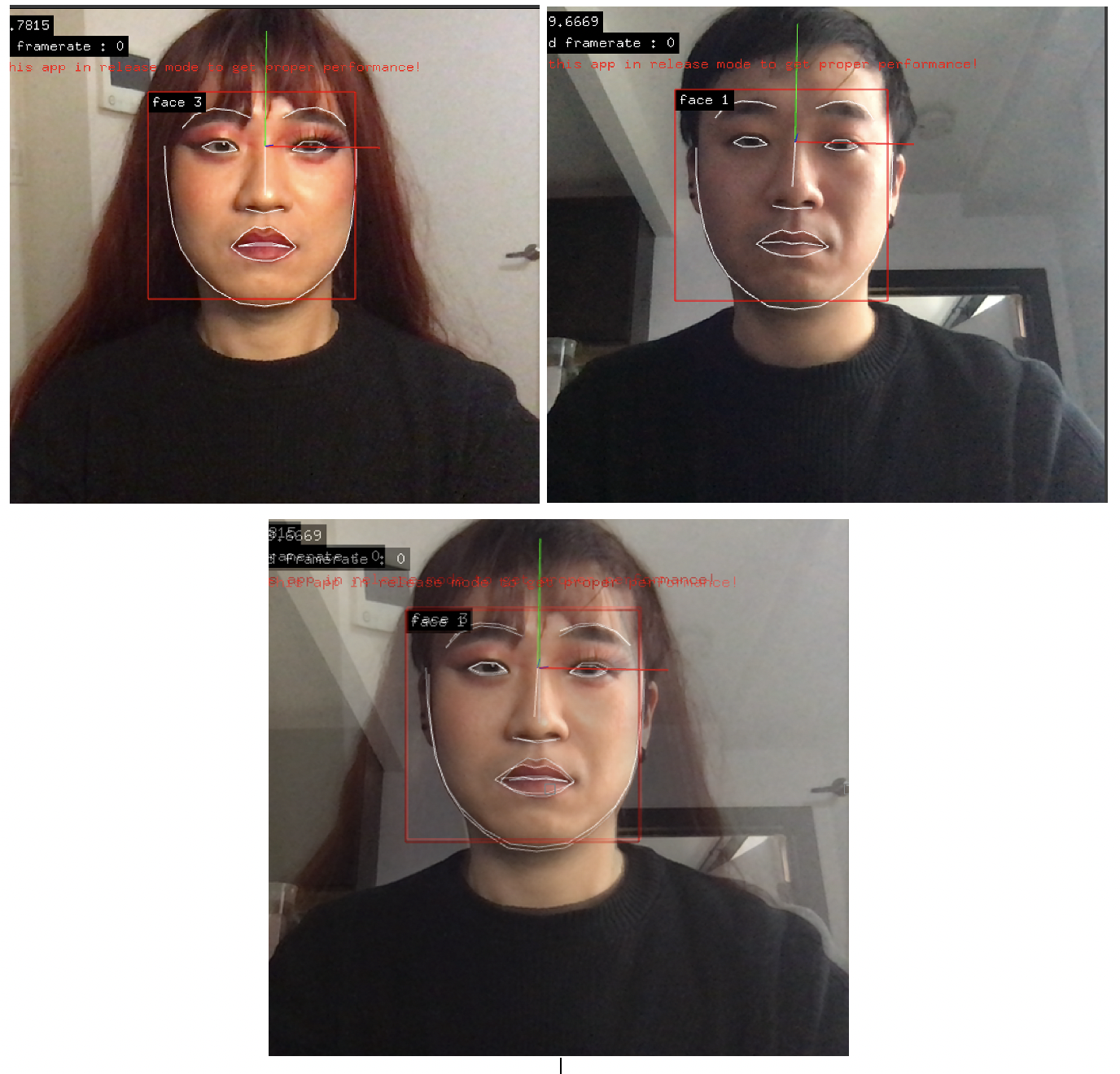

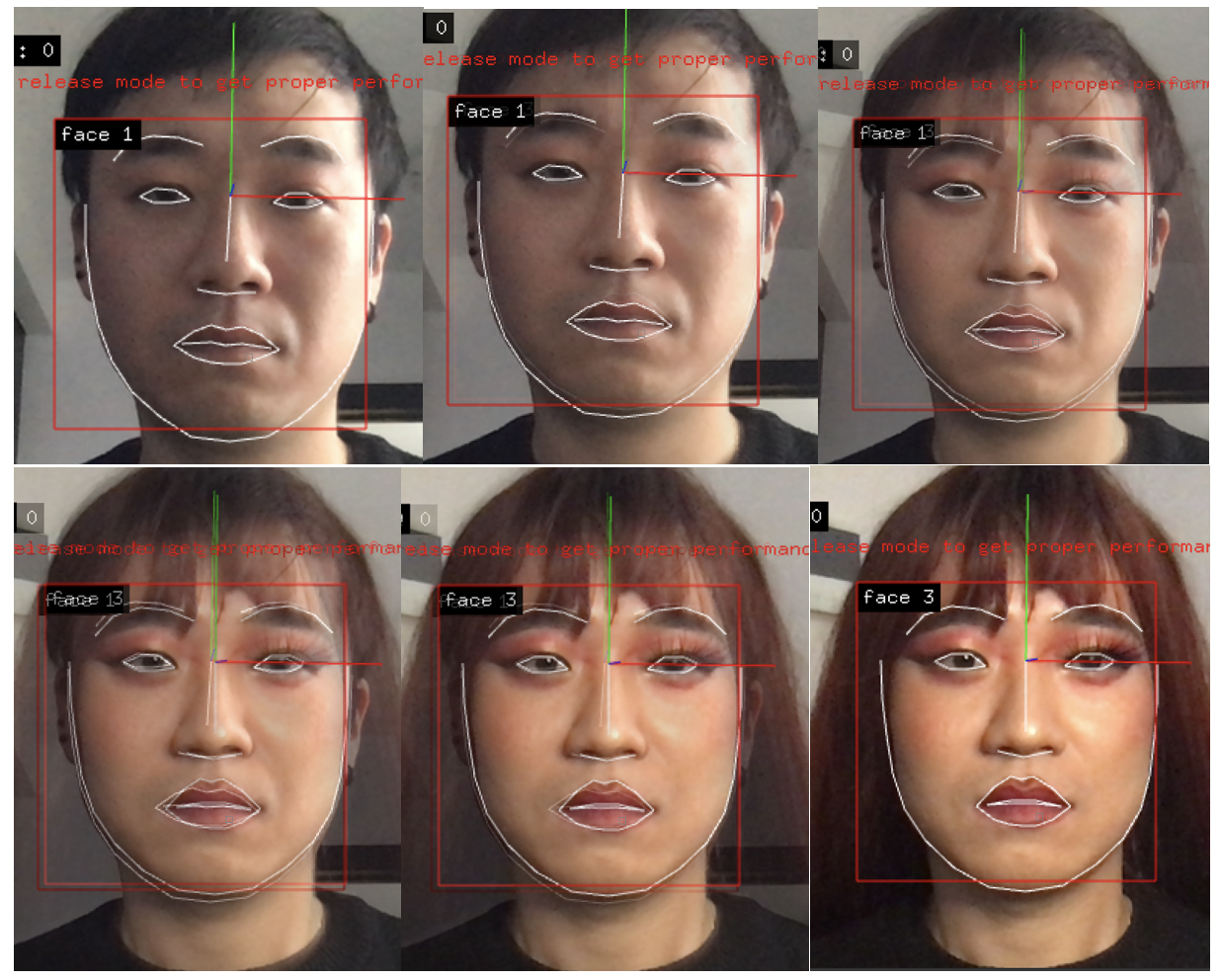

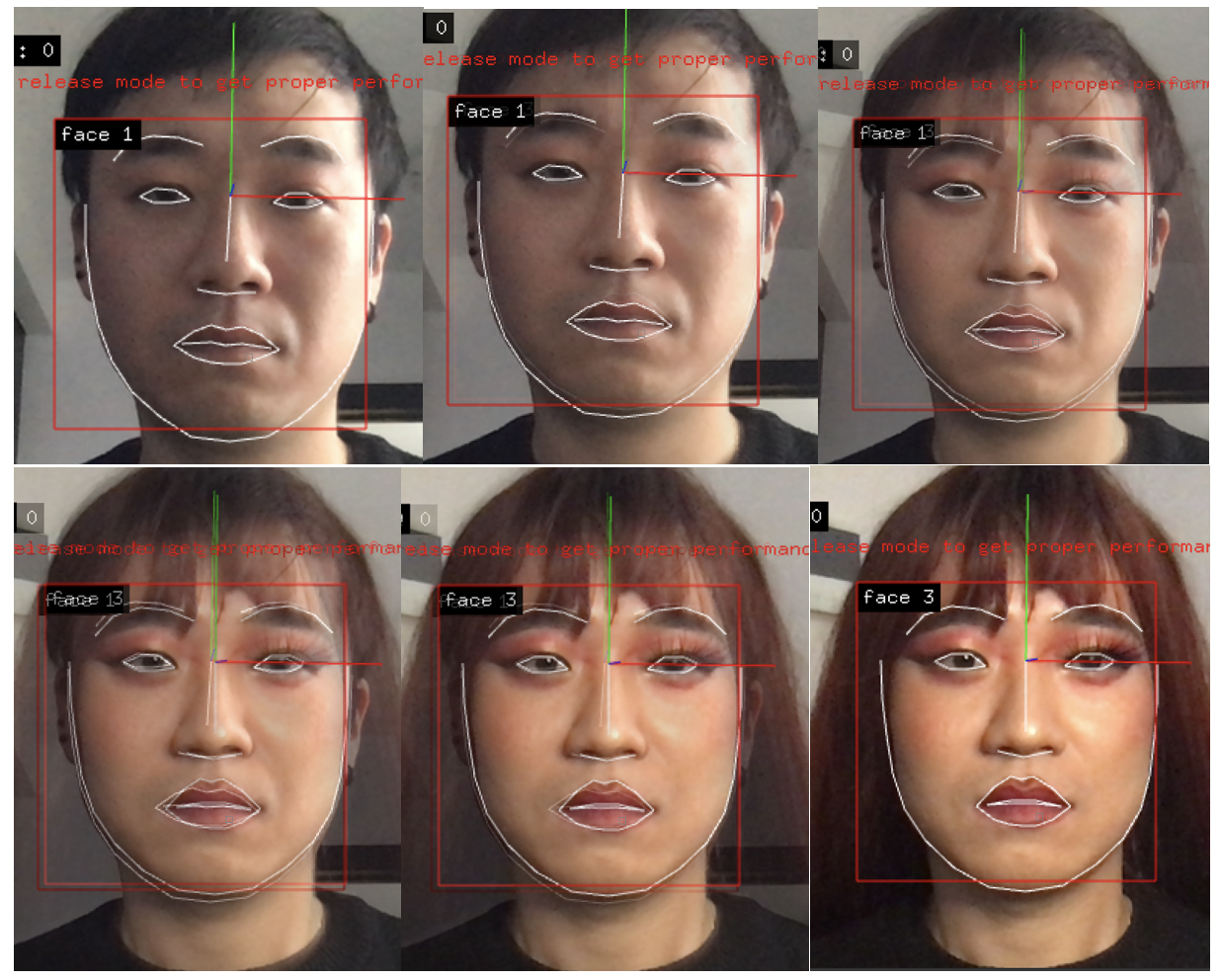

As an individual who often does drag, I wanted to see if the extreme contouring and reshaping of the face that happens in drag makeup affected the landmarks that Face ID uses to recognize a face as being the phone’s owner. Anecdotal evidence from the drag community, gathered via informal polling, suggested that queens who contour their face in exaggerated ways were often unable to use Face ID to unlock their phones, as opposed to queens who wore makeup very lightly and only to enhance their existing structure. In going into drag, I decided to exaggerate my features, paying particular attention to the reshaping of my lips, brows, and cheekbones. I then attempted to unlock my phone using Face ID, again making five attempts. I also used ofxFaceTracker2, an openFrameworks addon, to mark the major landmarks of the face: the brows, eyes, nose, lips, and the edges of the face. I used this program to capture the basic geometry of my face both normally and in drag, and superimposed the resulting images to show how drag makeup can change the perceived geometry of the face, and potentially obstruct the recognition of some of the 30,000 points Face ID uses for a positive match and unlocking.
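Superimposing two sets of landmarks can also be expressed numerically. The Python sketch below normalises each landmark set for position and size and then reports the average distance between corresponding points. It is a simplified illustration rather than the procedure used here: it assumes the two sets list the same landmarks in the same order, it does not correct for head rotation, and the coordinates are made-up placeholders, not output from ofxFaceTracker2.

```python
from math import hypot, sqrt


def normalize(points):
    """Centre the landmarks on their centroid and scale them to unit size."""
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    centred = [(x - cx, y - cy) for x, y in points]
    scale = sqrt(sum(x * x + y * y for x, y in centred) / n)
    if scale == 0:
        raise ValueError("all landmarks coincide")
    return [(x / scale, y / scale) for x, y in centred]


def mean_displacement(landmarks_a, landmarks_b):
    """Average distance between corresponding normalised landmarks."""
    if len(landmarks_a) != len(landmarks_b):
        raise ValueError("landmark sets must have the same length")
    a, b = normalize(landmarks_a), normalize(landmarks_b)
    return sum(hypot(xa - xb, ya - yb) for (xa, ya), (xb, yb) in zip(a, b)) / len(a)


# Placeholder landmark sets (pixel coordinates), for illustration only.
bare = [(0, 0), (4, 0), (4, 4), (0, 4)]
bare_closer = [(10, 10), (18, 10), (18, 18), (10, 18)]  # same shape, moved and enlarged
reshaped = [(0, 0), (4, 0), (5, 6), (0, 4)]             # one corner pulled outward

print(mean_displacement(bare, bare_closer))
print(mean_displacement(bare, reshaped))
```

Because position and size are removed first, holding the phone closer or off-centre does not register as a change, whereas a reshaped contour does.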

In drag, the ofxFaceTracker2 addon highlights some of the main structural changes produced by makeup, most notably the enlargement of the lips, raised cheekbones, lower eyebrows, and the sharpening of the contours of the eyes. Although hiding these regions individually had not affected Face ID’s detection of my face, and my phone still unlocked, collectively these changes seemed to render my face unrecognizable: my phone did not unlock after repeated attempts. It’s important to note that ofxFaceTracker2 does not detect the subtler shifts that often happen in drag makeup. Although the Apple website claims that Face ID can identify individuals who have undergone mild facial changes, such as shaving a beard or wearing makeup, in this instance it seemed unable to cope with drag and the subtle shifts in the facial landscape that occur, which corroborates the anecdotal evidence I received from other drag queens. This may have to do with the fact that although changing into drag may not always involve drastic changes to the important landmarks of the face (the nose, eyes, and mouth), it often changes other, more subtle aspects of the face geometry, such as the contours of the face, the shape of the nose, lips, and eyebrows, as well as the perceived size of the chin and/or forehead. Because Face ID requires a threshold match (a certain degree of similarity before asserting a positive or negative recognition), these subtle changes may have led to more than half of the 30,000 points Face ID projects onto the face being read as different from the original. What is interesting, though, is that towards the end of the session, after multiple failed attempts at unlocking my phone, I was able to unlock it successfully while in drag. The explanation for why recognition happened so much later lies in the neural engine Apple uses for facial recognition: after I typed in my passcode to unlock my phone because my face could not, the algorithms behind Face ID were able to make the association that I had changed in appearance, and the software “learned” to recognize my new features as part of my overall face (Chamary, 2017). However, drag often requires an individual to take on new looks and alter their appearance accordingly; as a result, it would be interesting to see the extent to which Face ID can recognize the multiple changes a drag queen undergoes in their appearance and, moreover, how this affects recognition of their original face.
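The “learning” behaviour described above can be sketched as a template store that adds a new capture after a failed face match is followed by a correct passcode. This Python sketch is a guess at the general mechanism, not Apple's implementation: the class name, the two-number vectors, the distance measure, and both thresholds (including the near-miss band) are assumptions made for illustration.

```python
from math import dist  # Euclidean distance, available from Python 3.8


class FaceTemplateStore:
    """Hypothetical store of enrolled face representations."""

    def __init__(self, enrolled, match_distance, near_miss_distance):
        self.templates = [list(enrolled)]
        self.match_distance = match_distance
        self.near_miss_distance = near_miss_distance

    def closest(self, probe):
        """Distance from the probe to the nearest stored template."""
        return min(dist(template, probe) for template in self.templates)

    def try_unlock(self, probe, passcode_ok=False):
        """Return 'face', 'passcode', or 'locked' for one unlock attempt."""
        distance = self.closest(probe)
        if distance <= self.match_distance:
            return "face"
        if passcode_ok:
            # Assumed rule: learn the new look only if it was a near miss.
            if distance <= self.near_miss_distance:
                self.templates.append(list(probe))
            return "passcode"
        return "locked"


store = FaceTemplateStore([0.0, 0.0], match_distance=0.3, near_miss_distance=1.0)
in_drag = [0.6, 0.0]  # placeholder representation of the altered face

first = store.try_unlock(in_drag)                     # face alone fails
second = store.try_unlock(in_drag, passcode_ok=True)  # passcode entered
third = store.try_unlock(in_drag)                     # face now accepted
print(first, second, third)
```

Under these assumptions, each new drag look that falls within the near-miss band would be added alongside the original template rather than replacing it, so the original face would continue to unlock the phone.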

Conclusion

We now have the ability to change our identity by altering our faces. In an era of surveillance states, where our faces are being turned into scannable barcodes, this is a way to evade current methods of tracking individuals’ activities, movements, and locations. But for many people, on a personal level, it’s a way to start over: to change aspects of themselves they find undesirable and recreate their ideal vision of themselves. In exploring the limitations of Face ID, it was interesting to see which aspects of our face algorithms (and those who employ them) consider important to our identities. Are those derivations scientific, or based on our understanding of, and biases about, how our face ties into our sense of who we are? How do we navigate, on an existential level, the standardization and reduction of one of our most personal and unique reflections of our identity into a number, a piece of data, something that does not reflect the complexity of being an individual? The idea of making oneself anew has interesting implications: it asserts the necessity of the face in our current society as one of the most important markers of someone’s identity. As a result, is plastic surgery a method of making oneself invisible or of developing a new identity entirely? In the case of cosmetic surgery, procedures that change the face allow the individual to remain invisible in society while disrupting facial detection, but at the cost of their former identity.

Citations

Adjabi, I., Ouahabi, A., Benzaoui, A., & Taleb-Ahmed, A. (2020). Past, Present and Future of Face Recognition: A Review. Electronics, 9(8), 1-52. Retrieved from https://www.mdpi.com/2079-9292/9/8/1188/htm

Ali, A. S. O., Sagayan, V., Malik, A., & Aziz, A. (2016). Proposed face recognition system after plastic surgery. IET Computer Vision, 10, 344-350. https://doi.org/10.1049/iet-cvi.2014.0263

Apple. (2020, February 26). About Face ID advanced technology. Apple Support. https://support.apple.com/en-us/HT208108

Benjamin, R. (2019). Race after Technology. Medford, MA: Polity Press.

Chamary, J. V. (2017, September 18). How Face ID works on iPhone X. Forbes. https://www.forbes.com/sites/jvchamary/2017/09/16/how-face-id-works-apple-iphone-x/?sh=5fb2fbca624d

Cole, S. A. (2012). The face of biometrics. Technology and Culture, 53(1), 200-203. Retrieved from https://gold.idm.oclc.org/login?url=https://www-proquest-com.gold.idm.oclc.org/scholarly-journals/face-biometrics/docview/929039973/se-2?accountid=11149

Dadure, P., Sikder, S., & Sambyo, K. (2018). Plastic surgery face recognition using LBP and PCA algorithm. 2018 2nd International Conference on Electronics, Materials Engineering & Nano-Technology (IEMENTech), Kolkata, India, 1-5. https://doi.org/10.1109/IEMENTECH.2018.8465379

Goffman, E. (1967). Interaction Ritual: Essays on Face-to-Face Behaviour. New York: Pantheon.

Hamann, K., & Smith, R. (2019). Facial recognition technology. Criminal Justice, 34(1), 9-13. Retrieved from https://gold.idm.oclc.org/login?url=https://www-proquest-com.gold.idm.oclc.org/trade-journals/facial-recognition-technology/docview/2246857277/se-2?accountid=11149

Harvey, A. (2010). CV Dazzle. https://cvdazzle.com/

Harvey, A. (2018). CV Dazzle: Camouflage from face-detection. https://ahprojects.com/cvdazzle/

Hyperallergic. (2020). Japanese Shop Sells Hyperrealistic 3-D printed face masks. https://hyperallergic.com/610180/japanese-shop-sells-hyperrealistic-3d-printed-face-masks/

Jones, W. D. (2009). Plastic surgery 1, face recognition 0. IEEE Spectrum, 46(9), 17. https://doi.org/10.1109/MSPEC.2009.5210052

Liu, J.-C. (2017). Wearable Face Projector. Retrieved from http://jingcailiu.com/wearable-face-projector/

Martinez A. M. (2017). Computational Models of Face Perception. Current directions in psychological science, 26(3), 263–269. Retrieved from https://doi.org/10.1177/0963721417698535

Nappi, M., Ricciardi, S. and Tistarelli, M. (2016). Deceiving faces: When plastic surgery challenges facial recognition. Image and Vision Computing. 54: 71-82. https://doi.org/10.1016/j.imavis.2016.08.012

Parks, C. & Monson. K. (2018). Recognizability of computer-generated facial approximations in an automated facial recognition context for potential use in unidentified persons data repositories: Optimally and operationally modeled conditions. Forensic Science International, 291, 272-278. Retrieved from https://doi.org/10.1016/j.forsciint.2018.07.024

Sabharwal, T., & Gupta, R. (2019). Human identification after plastic surgery using region based score level fusion of local facial features. Journal of Information Security and Applications, 48. https://doi.org/10.1016/j.jisa.2019.102373

Simon, B. (2004). Identity in Modern Society: A Social Psychological Perspective. Oxford: Blackwell.

Singh, R., Vatsa, M., Bhatt, H. S., Bharadwaj, S., Noore, A., & Nooreyezdan, S. S. (2010). Plastic surgery: A new dimension to face recognition. IEEE Transactions on Information Forensics and Security, 5(3), 441-448. https://doi.org/10.1109/TIFS.2010.2054083

South Korean Plastic Surgery Trips Headaches for Customs Officers. (2009). Shanghaiist. http://shanghaiist.com/2009/08/04/south_korean_plastic_surgery_trips.php

Spencer-Oatey, H. (2007). Theories of identity and the analysis of the face. Journal of Pragmatics, 39, 639-656.

Tsao, D. Y., & Livingstone, M. S. (2008). Mechanisms of face perception. Annual review of neuroscience, 31, 411–437. https://doi.org/10.1146/annurev.neuro.30.051606.094238

Turk, M. & Pentland, A. (1991). Eigenfaces for Recognition. Journal of Cognitive Neuroscience, 3(1), 71-86. Retrieved from https://doi.org/10.1162/jocn.1991.3.1.71

Van Leeuwenstein, J. (2016). Surveillance Exclusion. http://www.jipvanleeuwenstein.nl/