SHADER

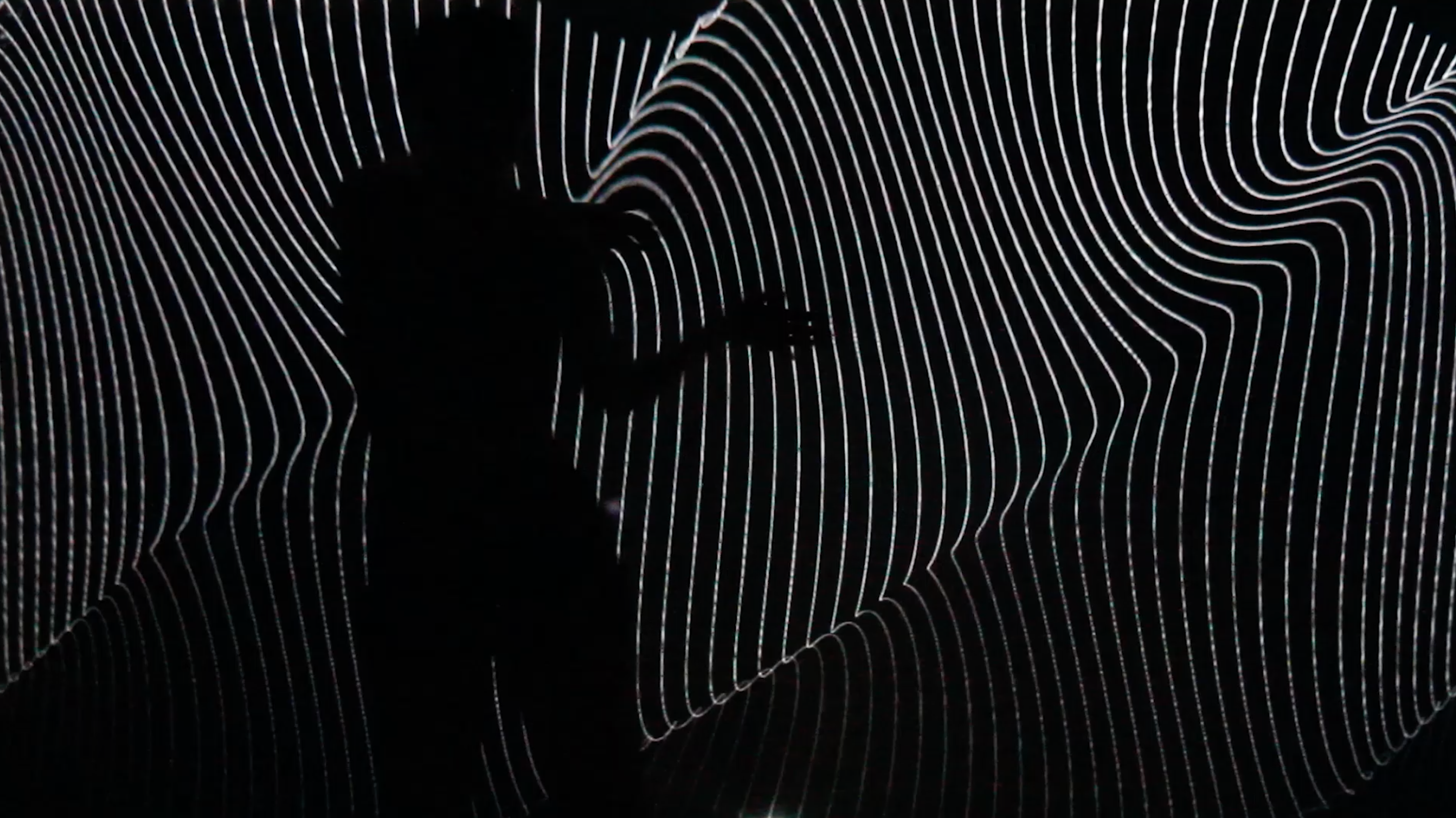

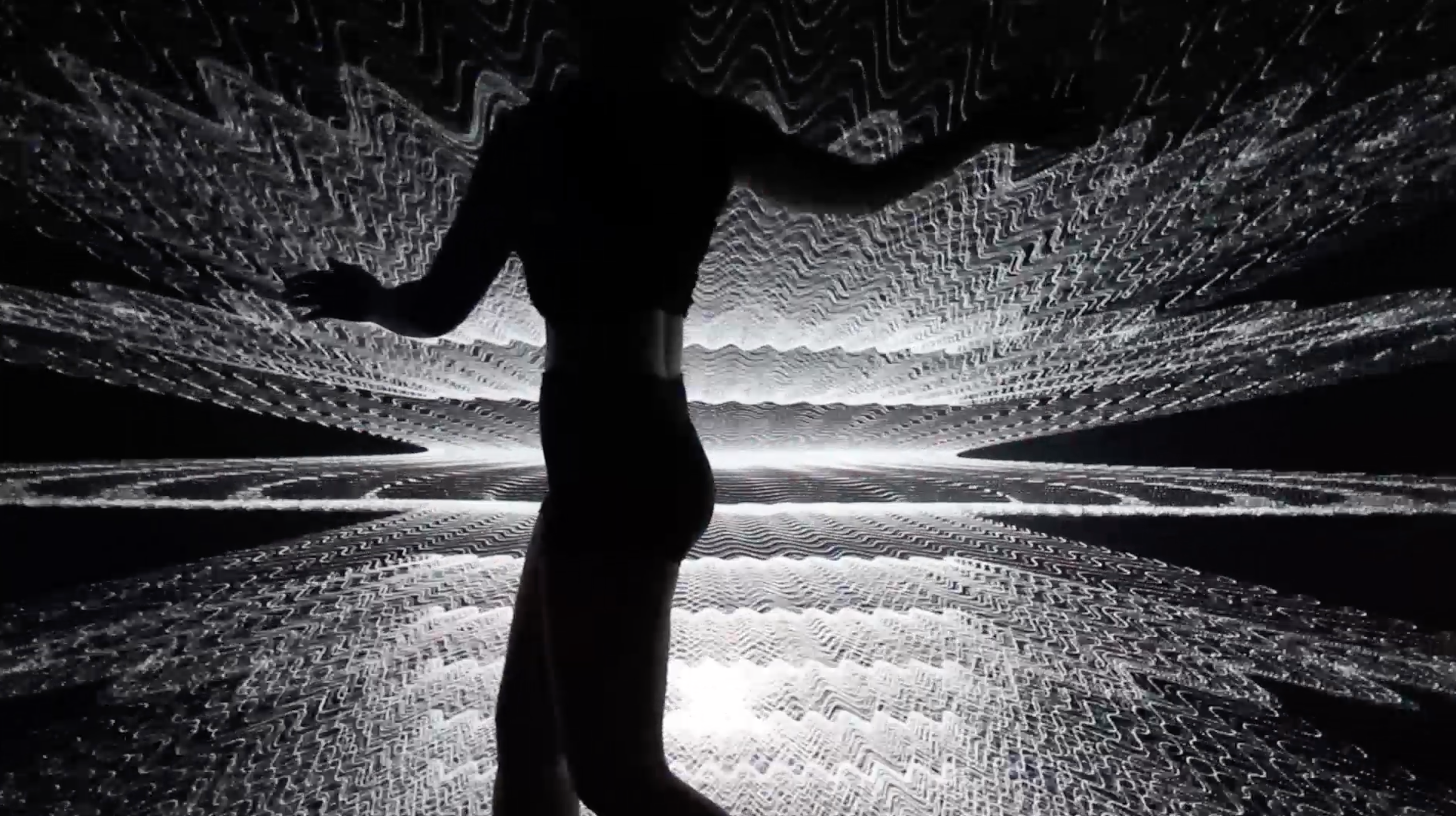

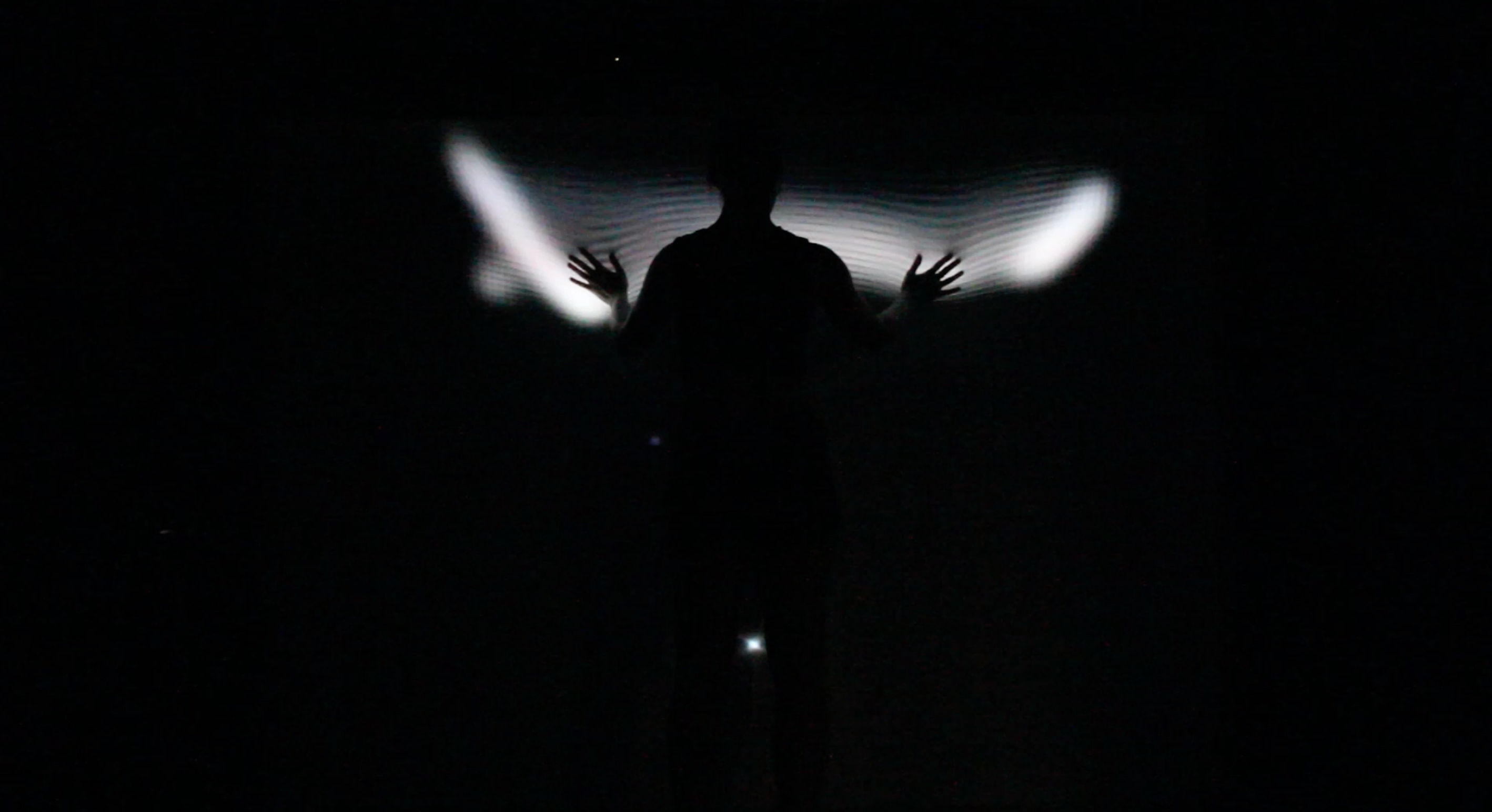

SHADER is a dialogue between shader and reality. The metamorphoses of touch heighten people's fragility about the boundary between the dematerialized shader and the actual screen. The screen can be regarded as a spectrum of the experiencer's volatile emotions, distorting limits and immersing the spectator in a brief detachment from reality. No experience goes uncounted; creating an immaterial boundary is the process by which people wander through their own hearts. It all starts when the screen seduces you into interacting with it.

Produced by: Friendred

In total, there are 8 different scenes, all expressing lexical distortions of shader and real life, blurring the boundary and submerging the audience in a ‘transition’ environment.

Thanks

Sound: KKU NV

Performer: Amy Louise Cartwright

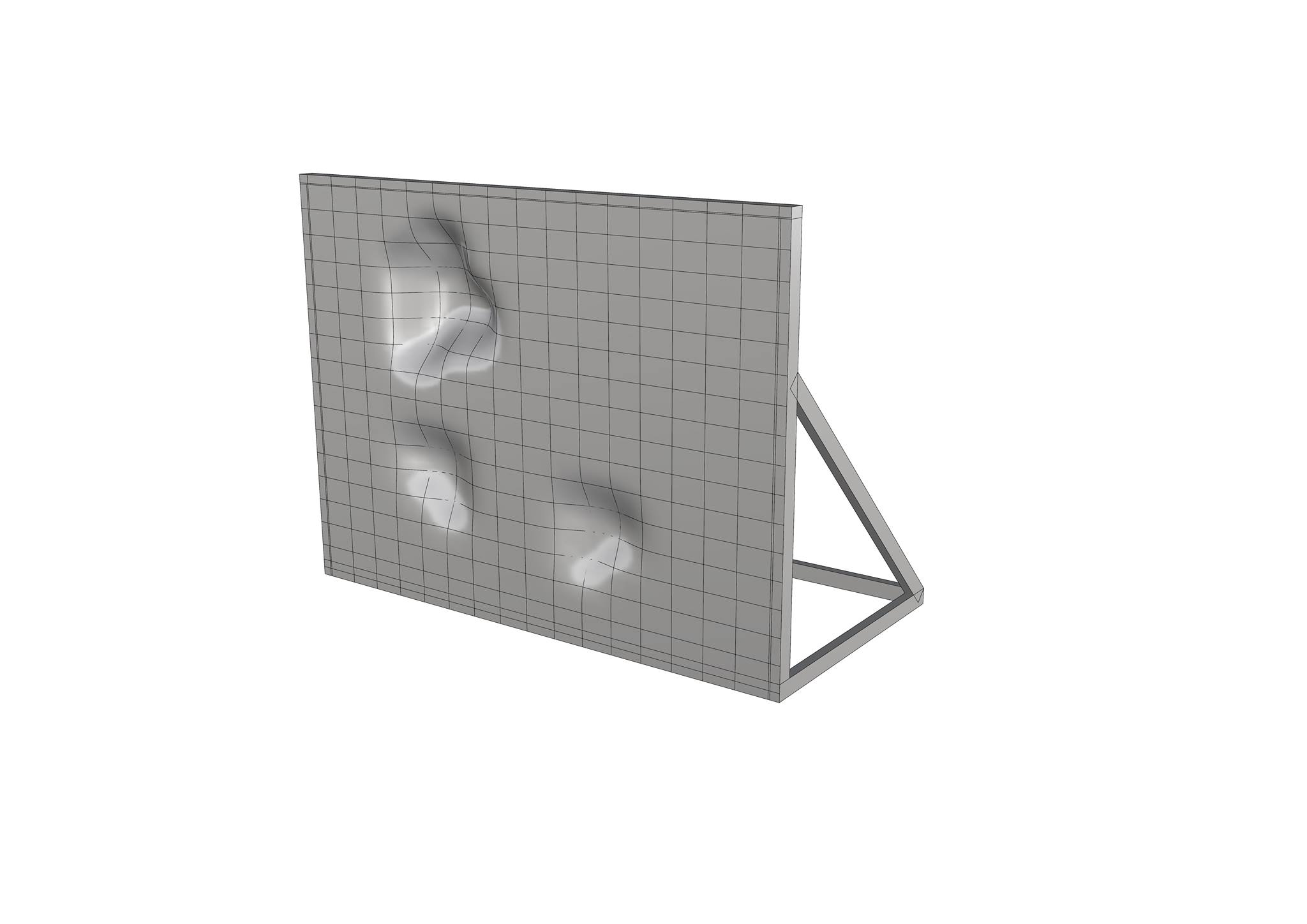

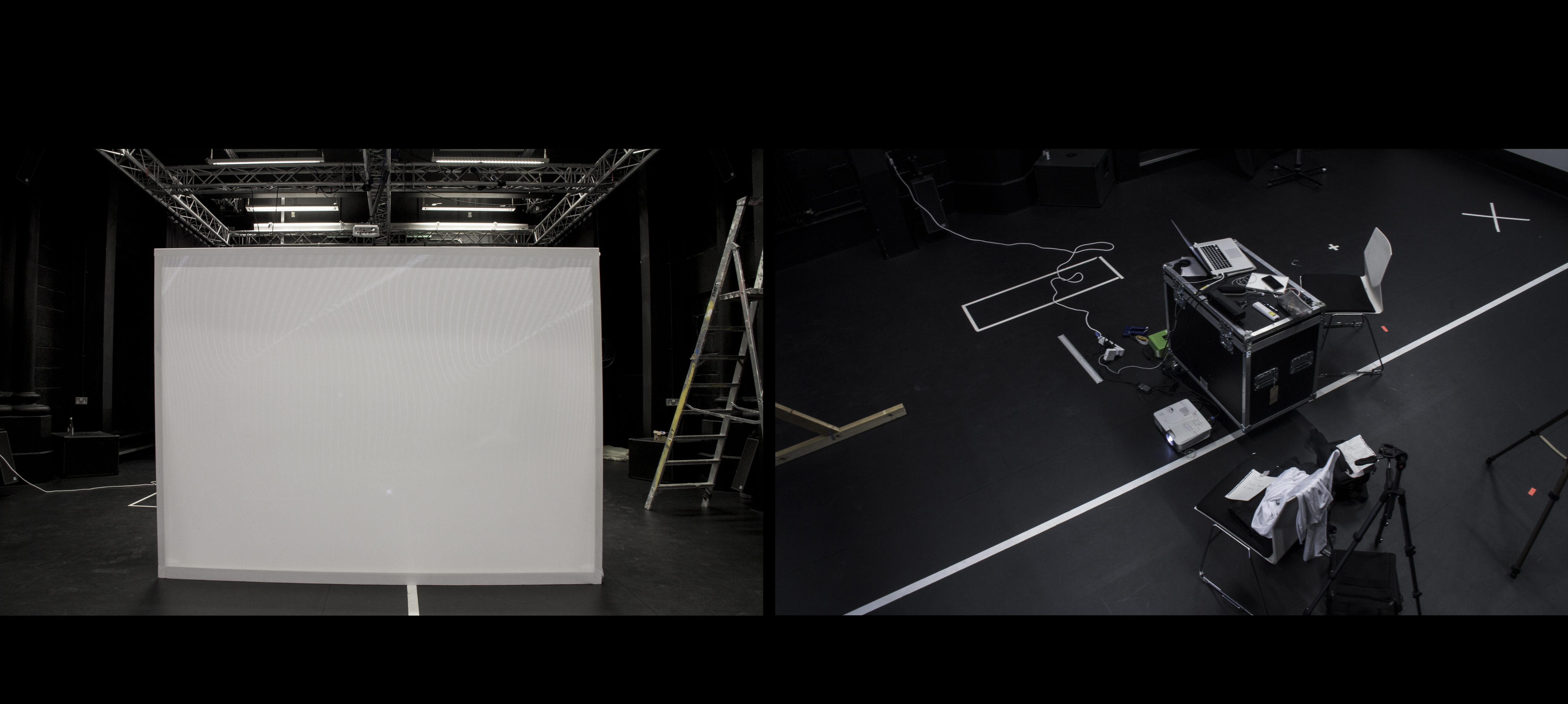

Wood Fabrication: Freddie Hong

Special Thanks For Helping: Jakob, Qiuhan Huang, Arturas

Thanks: Theo Papatheodorou

Addons & References

ofxFboBlur-master

ofxGui

ofxKinectV2

ofxOpenCv

ofxUI

ofxXmlSettings