Storm

"Storm," an interactive sound and light sculpture, was inspired by my childhood fascination with the series X-Men, its characters' resilience and heroism in the face of discrimination, and the strong female roles that resonated with me as a queer immigrant and child of the diaspora.

produced by: Timothy Lee

Introduction

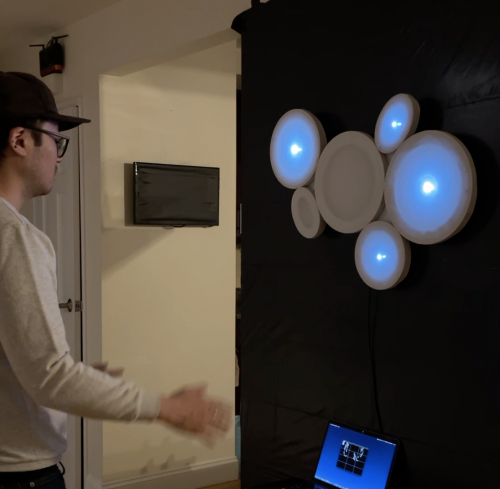

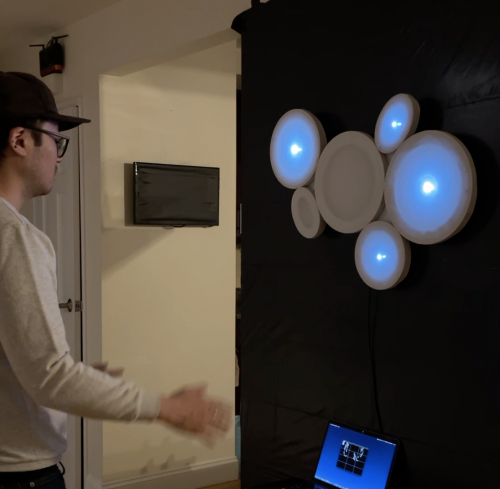

"Storm," an interactive sound and light sculpture, was inspired by my childhood and my fascination with the series X-Men. As a queer immigrant, and someone used to being "othered" by the greater community for multiple facets of my life, I understood the imagery and heroic courage of the X-Men in the face of discrimination and retaliation all too well. Among the characters, I resonated most strongly with the female leads, partly because I grew up among strong women in my family, but also because they appealed to parts of me that society has long told me were not for boys. As a result, I chose to create a work revolving around the powers of the character Storm, who can manipulate nature and is well known for summoning thunderstorms and lightning to defeat her enemies. For this installation, I used OpenCV and Arduino to create a cloud that responds to the gestures and movements of a participant, emitting light and the sounds of thunder with each flick.

Research and Technical Design

In creating this project, I knew I needed to produce two MVPs (minimum viable products) to prove that my vision was realizable: one to call functions using computer vision (OpenCV), and one to connect the Arduino with openFrameworks.

Calling functions in OpenCV

My first MVP involved using the computer vision example code we learned in class to call functions - it was the first step in establishing interaction. Instead of using addons such as contour finding or hand detection, I opted for something simpler: to use a threshold image and pixel processing to determine if a pixel of interest was black (no movement) or white (movement detected). I preferred this method because I wanted my interaction to be all-or-nothing, so the simple detection of movement by my program to call a function was all I needed.
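The all-or-nothing test described above can be sketched in plain C++, without any openFrameworks or OpenCV types. This is only an illustration under assumptions: the `GrayFrame` struct, the function names, and the idea of building the threshold image from the difference of two consecutive grayscale frames are hypothetical stand-ins, not the class example code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// A grayscale frame stored row by row, one byte per pixel (hypothetical type).
struct GrayFrame {
    int width;
    int height;
    std::vector<std::uint8_t> pixels;
};

// Build a black/white motion mask: a pixel becomes white (255) when its
// brightness changed by more than `threshold` between the two frames,
// and black (0) otherwise.
GrayFrame motionMask(const GrayFrame& previous, const GrayFrame& current, int threshold) {
    assert(previous.width == current.width && previous.height == current.height);
    assert(previous.pixels.size() == current.pixels.size());
    GrayFrame mask{current.width, current.height,
                   std::vector<std::uint8_t>(current.pixels.size(), 0)};
    for (std::size_t i = 0; i < current.pixels.size(); ++i) {
        const int diff = std::abs(int(current.pixels[i]) - int(previous.pixels[i]));
        mask.pixels[i] = diff > threshold ? 255 : 0;
    }
    return mask;
}

// All-or-nothing test: is the mask pixel at (x, y) white (movement detected)?
bool isWhite(const GrayFrame& mask, int x, int y) {
    assert(x >= 0 && x < mask.width && y >= 0 && y < mask.height);
    return mask.pixels[static_cast<std::size_t>(y) * mask.width + x] == 255;
}
```

A caller would keep the previous frame around, build the mask once per frame, and call a function whenever `isWhite` returns true for the pixel of interest.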

I decided to split my video grabber screen into quadrants and placed the pixel to be processed at the center of each quadrant. At first my program did not reliably register the pixel changing, until I realized that I needed to run the pixel processing on the threshold layer (diffFloat) of the video feed and not on the original video grab. I also created a small grid in the top left corner of my display window whose boxes would turn red every time the corresponding quadrant was "activated" by movement. To make the motion detection more reliable, I ultimately decided to process two more pixels per quadrant, each located 20 px from the central one. This ensured better recognition whenever my movement passed through a quadrant on the camera.
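The quadrant sampling can be sketched as follows, again in plain C++ rather than openFrameworks. The `Mask` struct and function names are hypothetical, and the text does not say in which direction the two extra pixels sit, so this sketch assumes they are 20 px to the left and right of the center.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Thresholded difference image: 255 = movement, 0 = no movement (hypothetical type).
struct Mask {
    int width;
    int height;
    std::vector<std::uint8_t> pixels;

    bool whiteAt(int x, int y) const {
        // Treat samples that fall outside the image as "no movement".
        if (x < 0 || x >= width || y < 0 || y >= height) return false;
        return pixels[static_cast<std::size_t>(y) * width + x] == 255;
    }
};

// Quadrants: 0 = top-left, 1 = top-right, 2 = bottom-left, 3 = bottom-right.
// A quadrant counts as active when its center pixel, or either of the two
// pixels `offset` px to the left and right of the center (an assumption),
// is white.
bool quadrantActive(const Mask& mask, int quadrant, int offset = 20) {
    assert(quadrant >= 0 && quadrant < 4);
    const int centerX = mask.width / 4 + (quadrant % 2) * (mask.width / 2);
    const int centerY = mask.height / 4 + (quadrant / 2) * (mask.height / 2);
    return mask.whiteAt(centerX, centerY) ||
           mask.whiteAt(centerX - offset, centerY) ||
           mask.whiteAt(centerX + offset, centerY);
}

// One flag per quadrant, e.g. to decide which on-screen grid boxes turn red.
std::array<bool, 4> activeQuadrants(const Mask& mask, int offset = 20) {
    std::array<bool, 4> active{};
    for (int q = 0; q < 4; ++q) active[q] = quadrantActive(mask, q, offset);
    return active;
}
```

Sampling three pixels instead of one widens the region that can catch a passing hand while keeping the check cheap, since only twelve pixels are read per frame.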

Arduino <--> openFrameworks

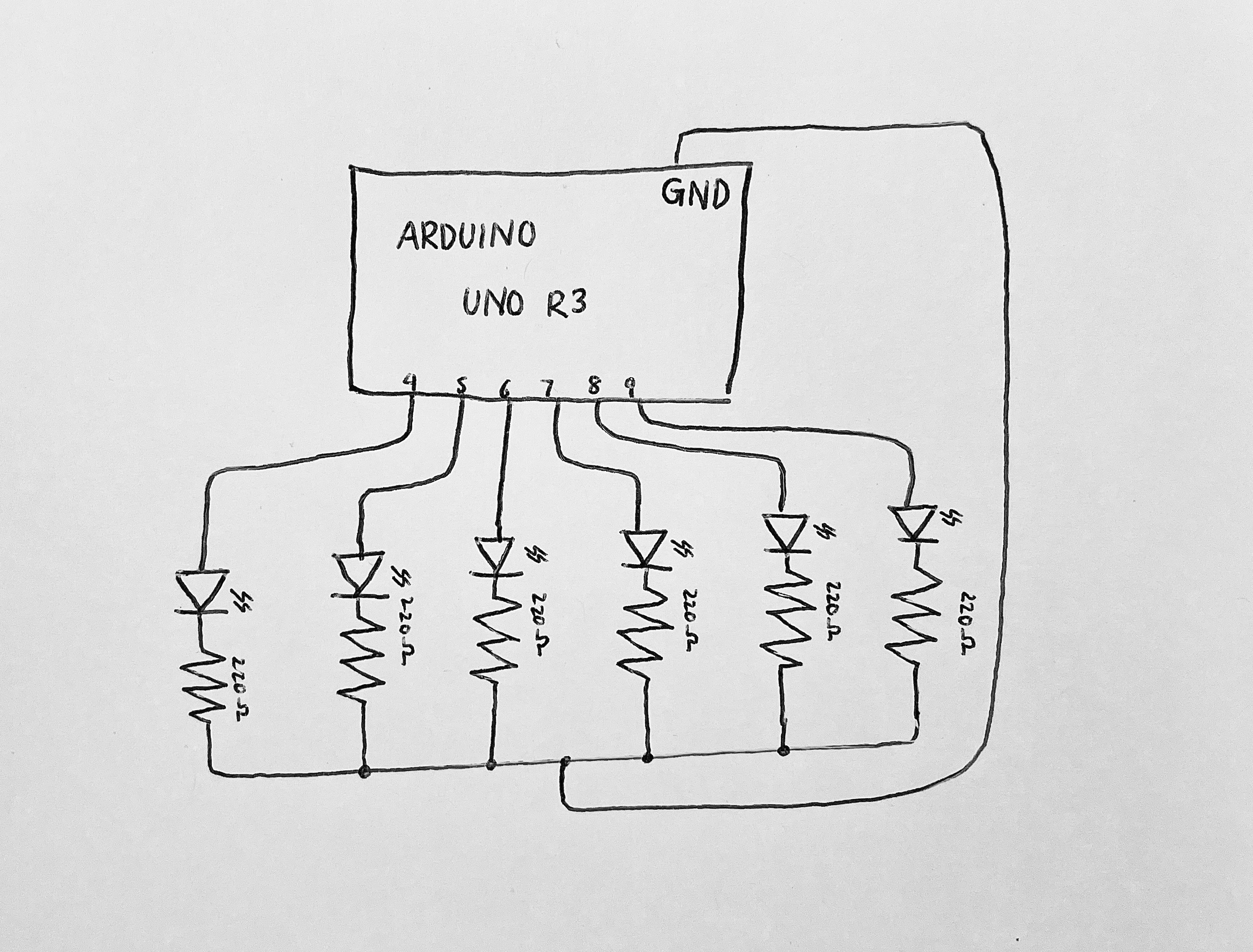

My next MVP required connecting Arduino with openFrameworks. For this, I uploaded the StandardFirmata sketch from the Arduino example library to my Arduino UNO R3 and wired the board as pictured. I then took the Firmata example code from openFrameworks and extracted the parts I needed, including setting up serial communication through the USB modem port at a fixed baud rate. Then I set the digital pins to their assigned numbers and incorporated them into my computer vision code, so that whenever a quadrant detected motion and flashed a red square, a signal would be sent to the corresponding digital pin on the Arduino to light up the LED.

While working on establishing the connection, I ran into a problem: my program would build successfully and launch, but the moment communication was established it would crash. After troubleshooting, I realized the problem was a timing issue: while openFrameworks was still connecting to the Arduino, the rest of the program was already running and therefore could not recognize the digital pins of the Arduino in my subsequent draw() section. To fix this, I added an if statement so that the rest of the program would not run until Arduino and openFrameworks had established a connection. This stopped the crashes.
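The connection guard can be illustrated with a small, hardware-free sketch. Everything here is an assumption made for illustration: the method names `update()`, `isArduinoReady()` and `sendDigital()` are modeled on openFrameworks' `ofArduino` class and should be checked against its documentation, `FakeBoard` is a hypothetical stand-in for a real board, and the pin numbers in the usage note are placeholders because the text does not state which pins were used.

```cpp
#include <array>
#include <cassert>
#include <utility>
#include <vector>

// Drives one LED per quadrant through any board object that offers
// update(), isArduinoReady() and sendDigital(pin, value).
template <typename Board>
class QuadrantLeds {
public:
    QuadrantLeds(Board& board, std::array<int, 4> pins) : board_(board), pins_(pins) {}

    // Call once per frame. Returns false, and touches no pins, until the
    // board reports that the connection is ready.
    bool show(const std::array<bool, 4>& active) {
        board_.update();                             // let the board handle incoming serial data
        if (!board_.isArduinoReady()) return false;  // guard: connection not established yet
        for (int q = 0; q < 4; ++q) {
            board_.sendDigital(pins_[q], active[q] ? 1 : 0);  // 1 = LED on, 0 = LED off
        }
        return true;
    }

private:
    Board& board_;
    std::array<int, 4> pins_;
};

// Hypothetical stand-in for a real board, so the guard can be tried without hardware.
struct FakeBoard {
    bool ready = false;
    std::vector<std::pair<int, int>> sent;  // (pin, value) pairs sent so far
    void update() {}
    bool isArduinoReady() const { return ready; }
    void sendDigital(int pin, int value) { sent.push_back({pin, value}); }
};
```

With a `FakeBoard board;` and placeholder pins such as `{{2, 3, 4, 5}}`, calling `show()` before `board.ready` is set sends nothing and returns false. Once the flag is set, each call sends one value per quadrant. In a real sketch the pins would presumably also need to be configured as outputs after the board becomes ready.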

Putting it all together

I wanted to incorporate sound into my project but have it be activated by motion - much in the same way my LED lights were wired. For this, I found free sound samples of a rainstorm as well as thunder and imported them into my code. I set the rainstorm as the background noise, having it play continuously on loop. For the thunder, I first set it as multiplay to enable it to be called multiple times in a row. I then included it under the code that activates the LED lights, so that it would simultaneously call for the sound to be played.

After setting up my Arduino board and connecting it to my laptop, I was able to establish serial connection between openFrameworks and Arduino, and I was glad to see that my movements triggered not only flashes of light but the sounds of thunder.

Future development

In developing this project further, I would be interested in making this sculpture larger, or in having multiple iterations of it fill up an entire room. I think the potential for this project to become a much larger and more experiential work is interesting, and I would even consider playing around with different colored LED lights, or programming my code to respond to a larger variety of motions. In a future iteration, I would also try to connect my Arduino with openFrameworks via a Wi-Fi module rather than a USB modem cable, since cables limit the ability of my sculptures to stand alone as works.

Self evaluation

I was pleasantly surprised with how the project turned out because I was able to combine my interest in physical computing and the Arduino with the relevant skills taught to us in openFrameworks. I was particularly glad that I was able to translate my exercises in computer vision into an interactive format, and seeing the LED light bulbs illuminate when my program detected movement within a certain space was very rewarding.

However, even with the extension I found myself running short of time, because the scope of this project went beyond the level of complexity I was comfortable with and versed in. As a result, I was only able to make a prototype of what I hope will become a much larger immersive installation. With more time, I would have invested in making the project more ambitious, such as a larger work or a more interactive component that responds to multiple gestures. My greatest weakness in this project is how difficult I find working with code, since my brain is not naturally wired to think in such algorithmic formats.

Overall, I'm proud of this installation and also proud of myself for applying the skills I've learned towards this project while still keeping it in line with my personal artistic practice.

References

Lewis Lepton's openFrameworks tutorial series: https://www.youtube.com/playlist?list=PL4neAtv21WOlqpDzGqbGM_WN2hc5ZaVv7

Examples used in Workshop for Creative Coding II (computer vision, pixel processing)

OpenFrameworks Firmata example code

I also want to thank my friend Matt Carney, who helped me work through and resolve the issues I was having with connecting Arduino with OpenCV.

Also thank you to my fellow CompArt classmates for their tips and advice in troubleshooting my code, and to my module tutor Theo!