SHDW

Audio-visual performance.

produced by: Chris Speed

Introduction

SHDW is an audio-visual exploration into the Jungian concept of the Shadow Self.

Concept and background research

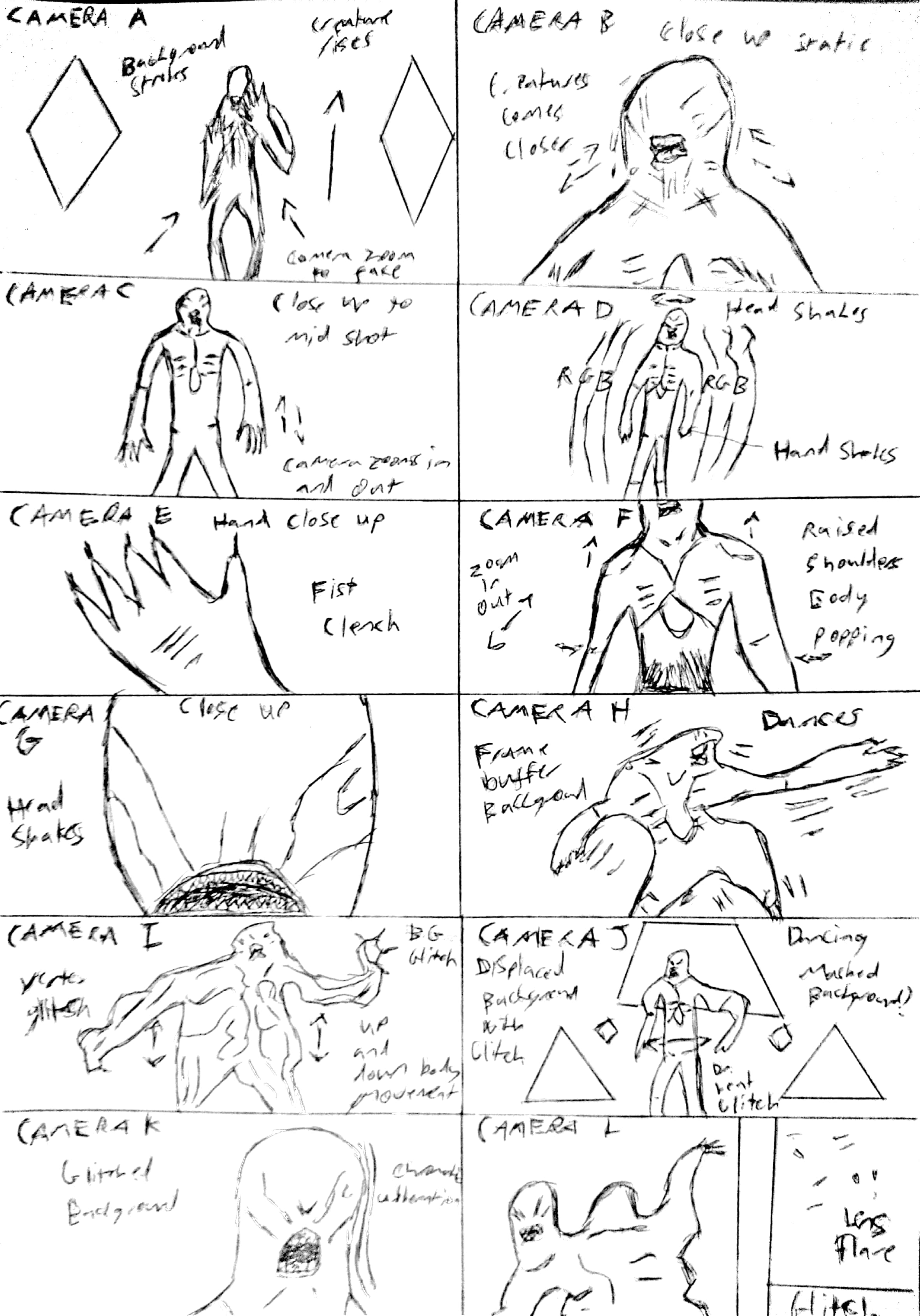

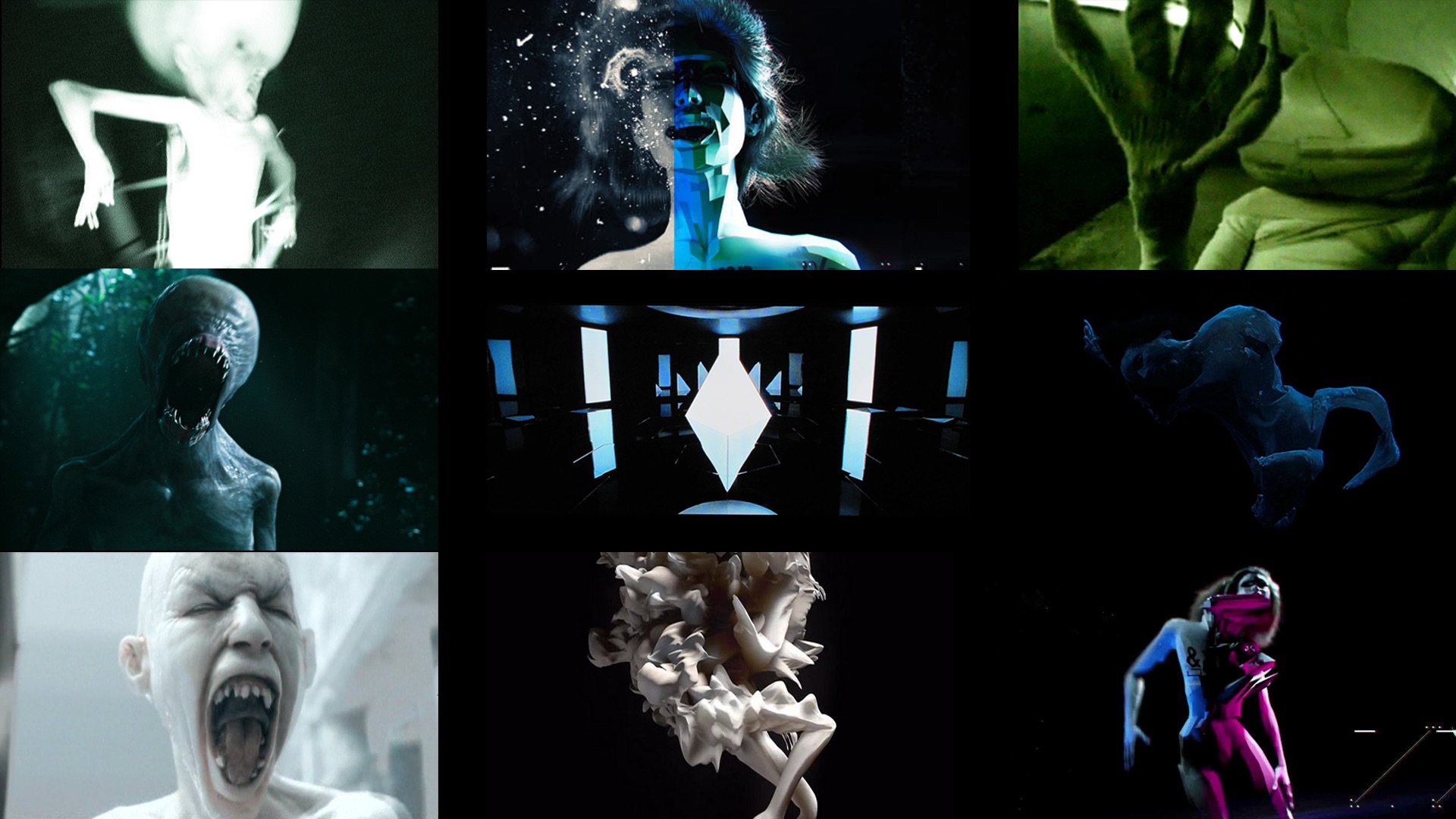

Sometimes, whilst daydreaming, I would see an eyeless demonic face flashing within white strobe light. Images from the subconscious fascinate me as an artist, as a lot of my best work tends to draw from these regions. To understand myself better, I began reading more into psychology and became particularly interested in the work of Carl Jung. I knew his notion of archetypes from studying Joseph Campbell’s monomyth back in filmmaking class; however, one archetypal figure struck me as particularly interesting this time around. The shadow is the personification of the human dark side that the conscious ego does not identify within itself. It is the source of many of our worst traits, but beyond its self-destructive tendencies, it also holds hidden potential as a source of creativity. I wanted to explore the artistic catharsis of integrating my shadow through a process Jung refers to as ‘individuation’. Given my background, I knew that this would come to life as an AV performance, so once I had my idea, I rolled with it and began to storyboard everything.

Glitch art has been a recurring theme in my work, so I revisited Rosa Menkman’s Glitch Studies Manifesto in preparation for this project. She inspired me to keep a childlike fascination while manipulating, bending and breaking code until it becomes something new. As a visual artist, I wear my influences on my sleeve, and I drew inspiration from Chris Cunningham, Jesse Kanda and the Tokyo collective BRDG. Atau also referred me to The Flicker by Tony Conrad, which influenced my decision to use strobes as a method to elicit altered states. Musically, I was greatly inspired by Arca, Ben Frost, Holly Herndon and Venetian Snares. Sonically, my approach is to emulate my favourite producers up to a certain point and then break away to cultivate my own sound.

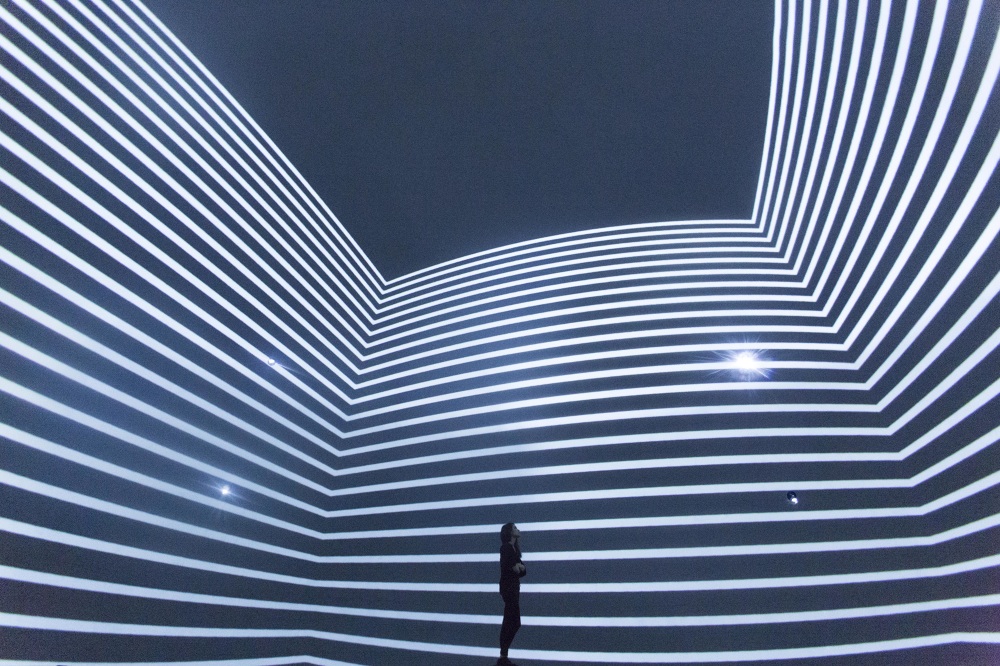

After forming the performance group, we decided pretty early on that we wanted to showcase our work within the immersive pipeline approach. When I spoke to Blanca Regina, she explained that the SIML setup was inspired by a mix of the ISM Hexadome, SAT Montreal and the Cinechamber.

Technical

With this project, I wanted to build upon previous work but also use it as an opportunity to learn many new computational techniques. Initially, I considered using virtual reality as a medium for performance, so I began to explore this field in depth and enrolled on the department’s Virtual Reality Specialization. Through the course, I built many mobile apps for the Google Cardboard and became comfortable with the game engine Unity 3D. Very quickly, I realised this software would be the best tool for the job. Interface-wise, Unity is very similar to Blender or Cinema 4D; however, its scripting abilities and its ease of use for creating real-time 3D applications instantly blew me away! That said, I have found that you constantly have to reverse engineer with Unity: it is mostly intended for game development, so you have to keep that in mind when using it as a tool for alternative purposes.

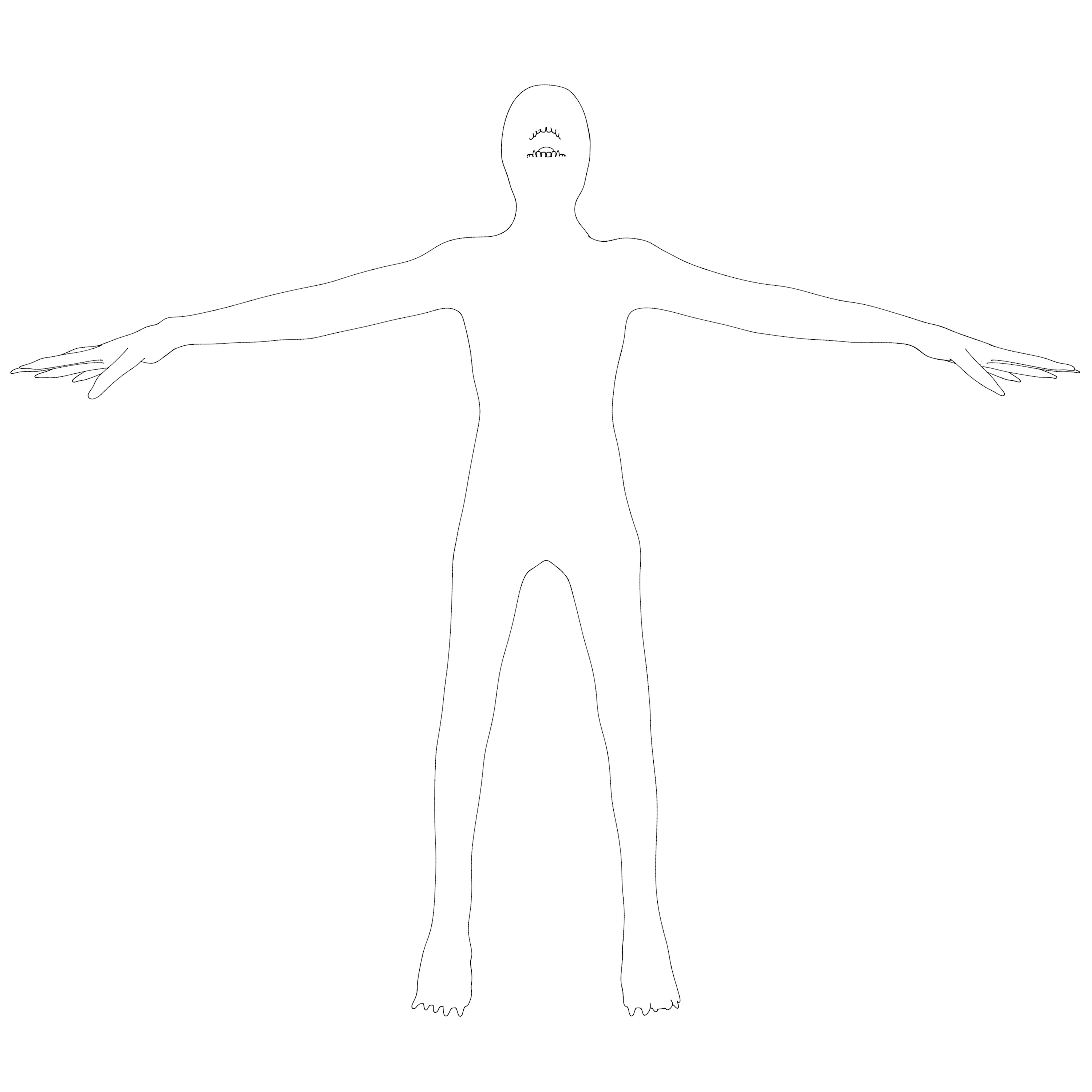

Before writing any code, I first had to find a suitable avatar to work with. Following Eevi’s feedback, I chose a mutant from the Unity Asset Store that looked less derivative of HR Giger’s designs. I then used a Blender plugin to simulate organic growth on the creature, displaced its mesh and added my own textures to the model. Finally, I brought it into Unity and began to write scripts so that I could control its behaviour at runtime. You mostly work with C# in Unity, which I found easy to get to grips with after learning C++ in openFrameworks, as the two have quite similar syntax. I wrote C# scripts to control various objects within the scene, such as strobe lights and the movement of 3D cameras.

Previously on the course, I had conducted some research into shaders in the hope that I could implement them in openFrameworks and utilise the GPU to create more complicated visual effects. Even with the assistance of The Book of Shaders, I still found them far too abstract to comprehend. So when I found out that Unity shaders are written in HLSL and Unity’s own language, “ShaderLab”, I prepared for the worst, but it was actually much less difficult than I had anticipated. Through some helpful chapters in the Unity documentation (and many failed attempts), I worked out how to write a vertex displacement shader. For the final prototype, I used a sine function and applied it to the y axis of my mutant model.
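To make the idea of sine-based vertex displacement concrete, here is a minimal sketch in Python rather than HLSL. It is not the shader used in SHDW. It assumes that each vertex's y coordinate is offset by a sine wave driven by its x position and the elapsed time, and the names `displace_vertices`, `amplitude`, `frequency` and `speed` are hypothetical.

```python
import math


def displace_vertices(vertices, time, amplitude=0.1, frequency=2.0, speed=1.0):
    """Return a copy of `vertices` with a sine offset added to each y value.

    `vertices` is a list of (x, y, z) tuples. The wave travels along x and
    is animated by `time`. All parameter names are illustrative assumptions.
    """
    displaced = []
    for x, y, z in vertices:
        # Phase depends on where the vertex sits along x and on the clock.
        offset = amplitude * math.sin(frequency * x + speed * time)
        displaced.append((x, y + offset, z))
    return displaced


if __name__ == "__main__":
    quad = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
    for frame in range(3):
        print(displace_vertices(quad, time=frame * 0.5))
```

In a real vertex shader the same arithmetic would run once per vertex on the GPU, with `amplitude` and `frequency` exposed as material properties so they can be changed during a performance.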

For the music aspect of the SHDW project, I used Max/MSP to process some field recordings and build custom instruments. Sam Tarakajian’s online tutorials are a great resource; from his templates, I built my own step sequencer, drum machine and delay effects. I also made heavy use of the HSU-002 patch by Dub Russell to further randomise my samples. After building up a library of sounds in Max, I brought everything into my DAW of choice, Ableton Live, and began to arrange and master everything into songs.
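As an illustration of what a delay effect does under the hood, the following is a minimal Python sketch of a feedback delay line. It is not a transcription of the Max patches described above. The function name `feedback_delay` and its parameters are hypothetical, and audio is assumed to be a plain list of float samples.

```python
def feedback_delay(samples, delay_samples, feedback=0.5, mix=0.5):
    """Apply a simple feedback delay to a list of float samples.

    delay_samples: length of the delay in samples (must be positive).
    feedback:      how much of the delayed signal is fed back into the line.
    mix:           0.0 gives the dry signal only, 1.0 the delayed signal only.
    """
    if delay_samples <= 0:
        raise ValueError("delay_samples must be positive")

    buffer = [0.0] * delay_samples  # circular buffer holding past samples
    index = 0
    output = []
    for dry in samples:
        delayed = buffer[index]  # sample written delay_samples steps ago
        output.append((1.0 - mix) * dry + mix * delayed)
        # Write the input plus a scaled copy of the echo back into the line.
        buffer[index] = dry + feedback * delayed
        index = (index + 1) % delay_samples
    return output


if __name__ == "__main__":
    impulse = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
    print(feedback_delay(impulse, delay_samples=2, feedback=0.5, mix=1.0))
```

Feeding an impulse through the line shows the echoes directly: each repeat arrives `delay_samples` later and is scaled by `feedback`, so values below 1.0 make the echoes die away.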

Prototypes

After spending several months on the music, I returned to the visual aspect of the project. For a long time, I tried to use the “GetSpectrumData” function for FFT analysis to make the scene audio-reactive. Unfortunately, this never worked, as the only live audio input I could use was my laptop’s internal microphone, which caused feedback. To add further production value to my Unity project, I began to work with Keijiro Takahashi’s packages, in particular his KinoGlitch, KinoFringe and MidiJack libraries. There is not much documentation on how Unity can be used for computational art compared to Processing or openFrameworks, but it does have a supportive community that can be very helpful with code problems. This was very much the case when it came to mapping the vertex displacement shader’s controls to my MIDI controller, as someone on a forum helped me through every step of that hurdle.
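The general idea of mapping a MIDI controller to a shader control can be sketched as follows. This is an illustrative Python example, not the Unity/MidiJack code from the project. It assumes a standard 7-bit control change value (0 to 127), and the names `cc_to_param` and `SmoothedParam` are hypothetical.

```python
def cc_to_param(cc_value, low, high):
    """Linearly map a 7-bit MIDI CC value (0..127) onto the range [low, high]."""
    if not 0 <= cc_value <= 127:
        raise ValueError("MIDI CC values must be in the range 0..127")
    return low + (high - low) * (cc_value / 127.0)


class SmoothedParam:
    """Ease a parameter toward its target to hide the 128-step MIDI staircase."""

    def __init__(self, initial, smoothing=0.2):
        self.value = initial
        self.smoothing = smoothing  # fraction of the remaining gap closed per update

    def update(self, target):
        self.value += (target - self.value) * self.smoothing
        return self.value


if __name__ == "__main__":
    # Hypothetical use: a knob drives a displacement amplitude between 0 and 2.
    amplitude = SmoothedParam(initial=0.0, smoothing=0.5)
    for knob in (0, 64, 127, 127, 127):
        target = cc_to_param(knob, 0.0, 2.0)
        print(round(amplitude.update(target), 3))
```

In this sketch the smoothed value would be written to the shader property once per frame, so a fast knob turn produces a gradual change in displacement rather than a visible jump.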

I had left it too late to use the university’s motion capture suite so, on Theo’s advice, I took matters into my own hands and built a DIY mocap studio in my living room. Luckily, OpenNI skeleton tracking is not deprecated on my Windows PC, so I used this machine, a Kinect and a program called ipiMocap to record my movements. For background subtraction, I had to optimise the room for depth by covering objects with a sheet. After some hilarious failed attempts, I eventually had a take I was happy with and exported it as an FBX. Finally, I cleaned up the animation in Unity, mapped each scene to keyboard presses and set the clear flags on one camera to “Don’t Clear” for a trailing effect.

Blanca was kind enough to help us prototype the entire SIML setup as a small-scale model. During this time, we got to grips with the setup and learned how to use MadMapper in conjunction with each of our software and hardware. It was here that I realised my Unity executable could not cope with the huge output resolution, and eventually it crashed altogether. This was problematic, as it meant I could no longer run my game in real time. In the end, I had no choice but to pre-record everything from Unity as video files and bring them into my VJ software (VDMX) for playback. Though not as computational as I would have hoped, this worked out well, as I had no further technical hiccups during the two weeks the SIML space was set up. This meant I could focus more on rehearsals and on helping out my team wherever possible.

Self evaluation and future development

I am happy with the project and proud of myself for venturing out of my comfort zone to try new things. In particular, I am pleased with how the music turned out, as this was one of my first solo ventures into production, and I think the obsessive attention to detail ultimately paid off. However, I believe the project’s conceptual origins could have been better realised: the Shadow concept served as a starting point but was not adequately fleshed out in the final product.

If I were to build upon this project, I would want to introduce real-time motion capture using a Kinect on stage, with a dancer’s movements controlling the avatar.

I would like to thank Gim Chuang of the Audiovisual Unity 3D Facebook group for her help with implementing MIDI control, and to say a massive thank you to Friendred, Laurie, Ben, Annie and the technicians for all of their hard work in the lead-up to the degree show.

References

Jung, C.G. The Collected Works of C.G. Jung, Vol. 9, Pt. 1: The Archetypes and the Collective Unconscious. Edited by Sir Herbert Read [et al.]; translated by R.F.C. Hull.

Menkman, R., (2010) Glitch Studies Manifesto. [PDF] Amsterdam/Cologne. Available from: https://amodern.net/wp-content/uploads/2016/05/2010_Original_Rosa-Menkman-Glitch-Studies-Manifesto.pdf [Accessed: May 2018]

Conrad, T., (1966) The Flicker. [Film]

https://www.coursera.org/specializations/virtual-reality

https://assetstore.unity.com/packages/3d/characters/mutant-hunter-111207

https://github.com/hsab/GrowthNodes

https://docs.unity3d.com/Manual/SL-SurfaceShaderExamples.html

https://unity3d.com/learn/tutorials/topics/graphics/displacing-vertices-and-clipping-pixels

https://www.youtube.com/user/dude837

https://docs.unity3d.com/ScriptReference/AudioSource.GetSpectrumData.html

https://github.com/keijiro