Devolve

A generative, audiovisual performance.

produced by: Laurie Carter

Introduction

Devolve is inspired by the idea that everything is continually evolving from or devolving towards nothingness. This is the metaphysical backbone of the Japanese concept of Wabi Sabi, along with an aspiration to accept and find beauty in the imperfect, the incomplete and, particularly, the impermanent. I wanted to create a meditative space for an observer to pause for a short while, suspending their sense of time and allowing both sound and visuals to wash over them.

Within my professional life over the past 10 years, I have been involved in projects that laud technology's speed and its capacity to disrupt industry, or celebrate its ability to connect us to one another in ways never before seen. Whilst some of these aspects excited me, and to an extent still do, I have become fatigued by the amount of noise surrounding this kind of technological power. I wanted to explore the use of technology to create a solitary, meditative space for an individual to reflect within; tech to enable some time for oneself.

Concept, background research and inspiration

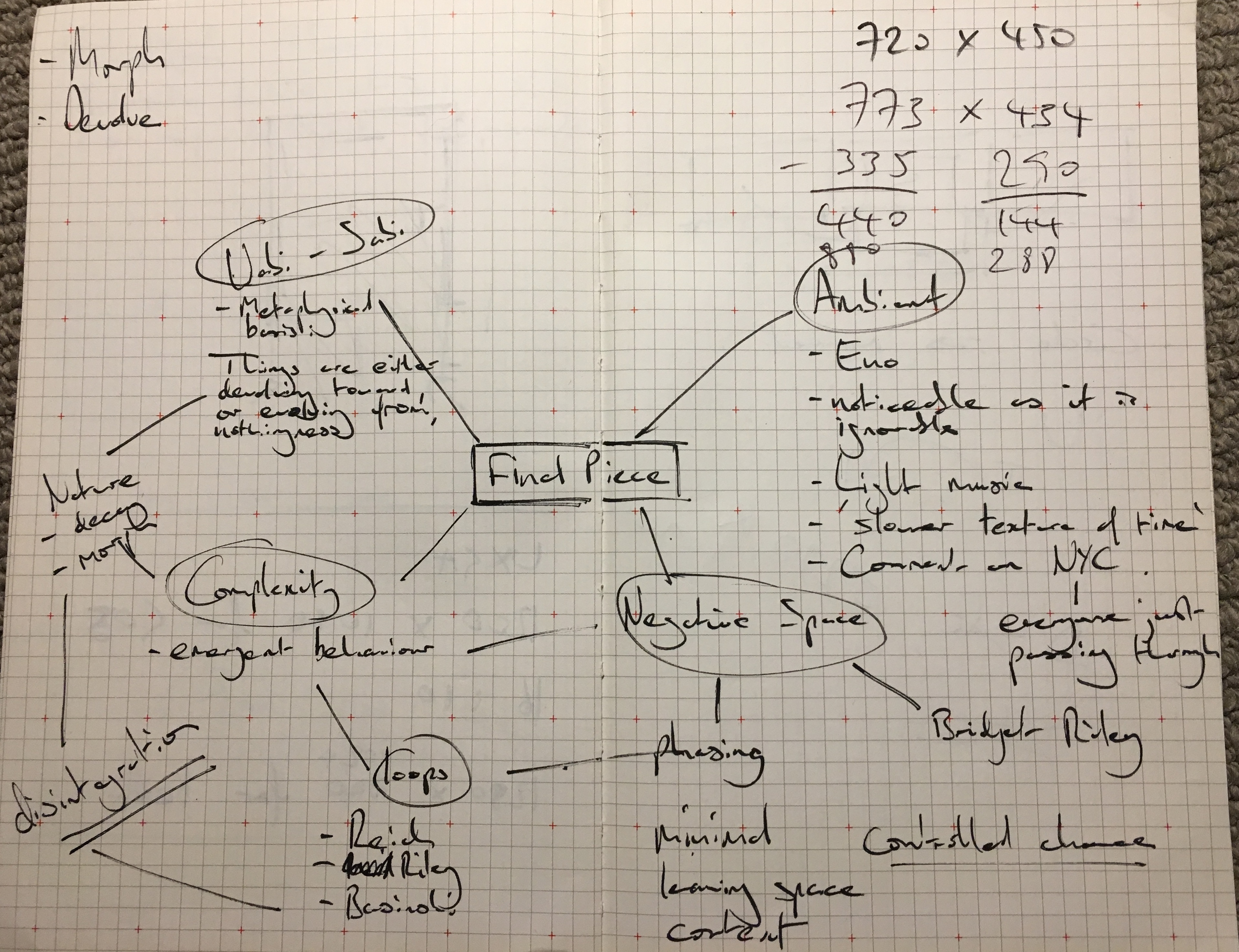

As a music student, I became interested in chance-based, generative compositional techniques; pieces where systems were established with sufficient randomness incorporated so as to create a new and unique experience with each performance. Terry Riley's In C changed my whole idea of what it was to be a composer due to the power he allowed his performers in choosing which phrases to play and when. The aesthetic created is exciting and alive, a certain complexity creating swarms of sound to immerse the listener. My aim was to develop my own techniques for generative composition but also to incorporate a visual element into my performances in an attempt to immerse the audience further.

Steve Reich and, later, William Basinski's tape loop techniques have been a great inspiration for my piece. In Reich's early work, It's Gonna Rain, he played the same recording on two analogue tape machines, allowing the two copies to gradually go out of phase with one another due to the imperfect nature of the machinery, before returning to unison, creating a rich soundscape. Basinski's piece, Disintegration Loops, explores the phenomenon of decay as a heavily worn tape loop gradually disintegrates as it rotates through the machine. The effect is an almost imperceptible decline in audio quality which suspends the listener's sense of time, creating a beautiful yet sombre mood.

Brian Eno's installation work is commonly accompanied by his musical compositions and his recent Light Music has inspired me for my piece. When interviewed on creating generative art, Eno likens his role to that of a gardener saying that the artist must 'surrender at the beginning of the project' (ref 1) and relinquish control once a system has been put in place. In Michael Bracewell's introduction to the accompanying book of the exhibition, he describes the work as '“a space for the contemplation of individual experience”, where the viewer/listener is “encouraged to engage with a generative sensory/aesthetic experience that reflects the ever-changing moods and randomness of life itself”' (ref 2).

Below is one of the mind maps I created at an early developmental stage.

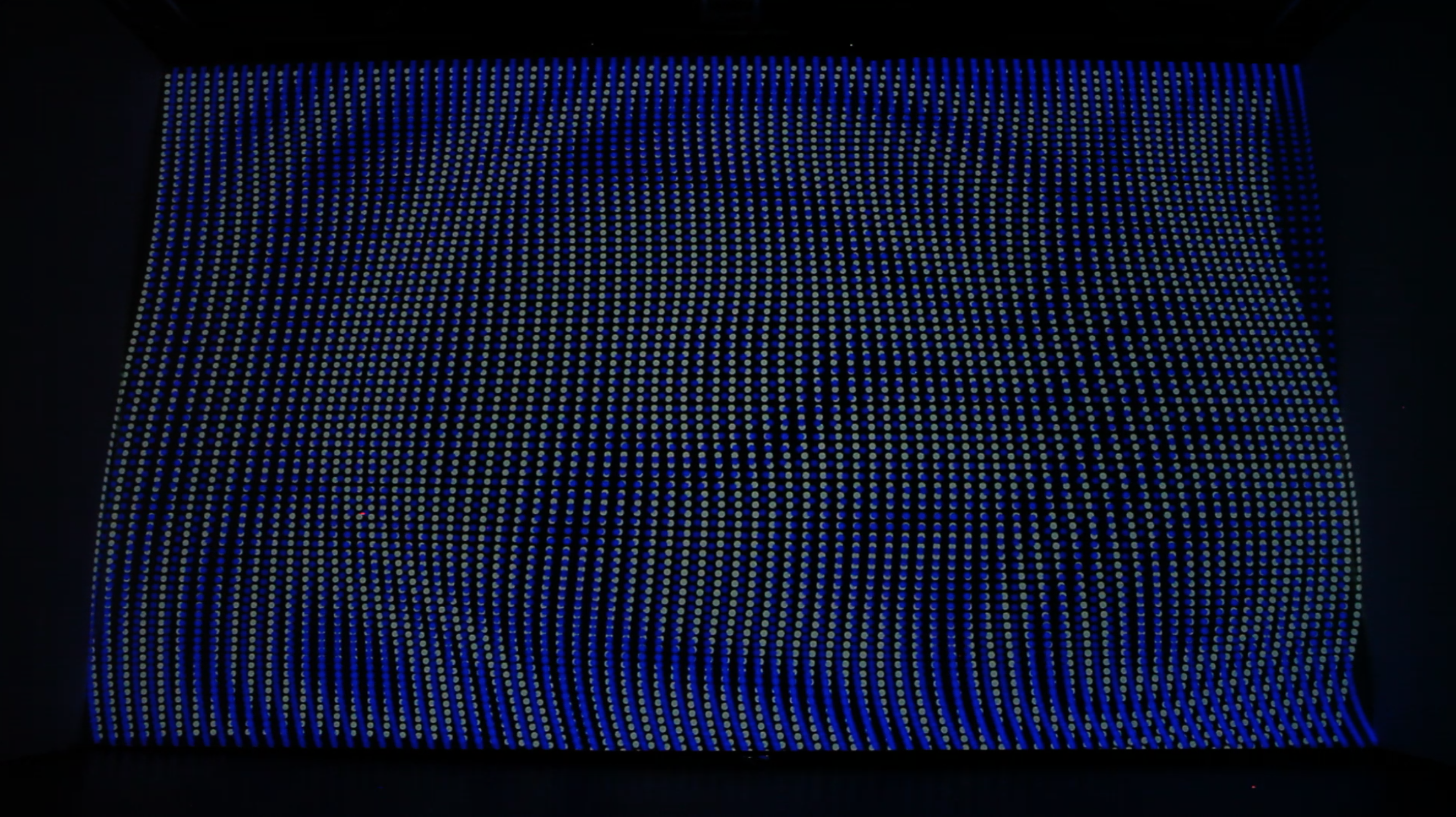

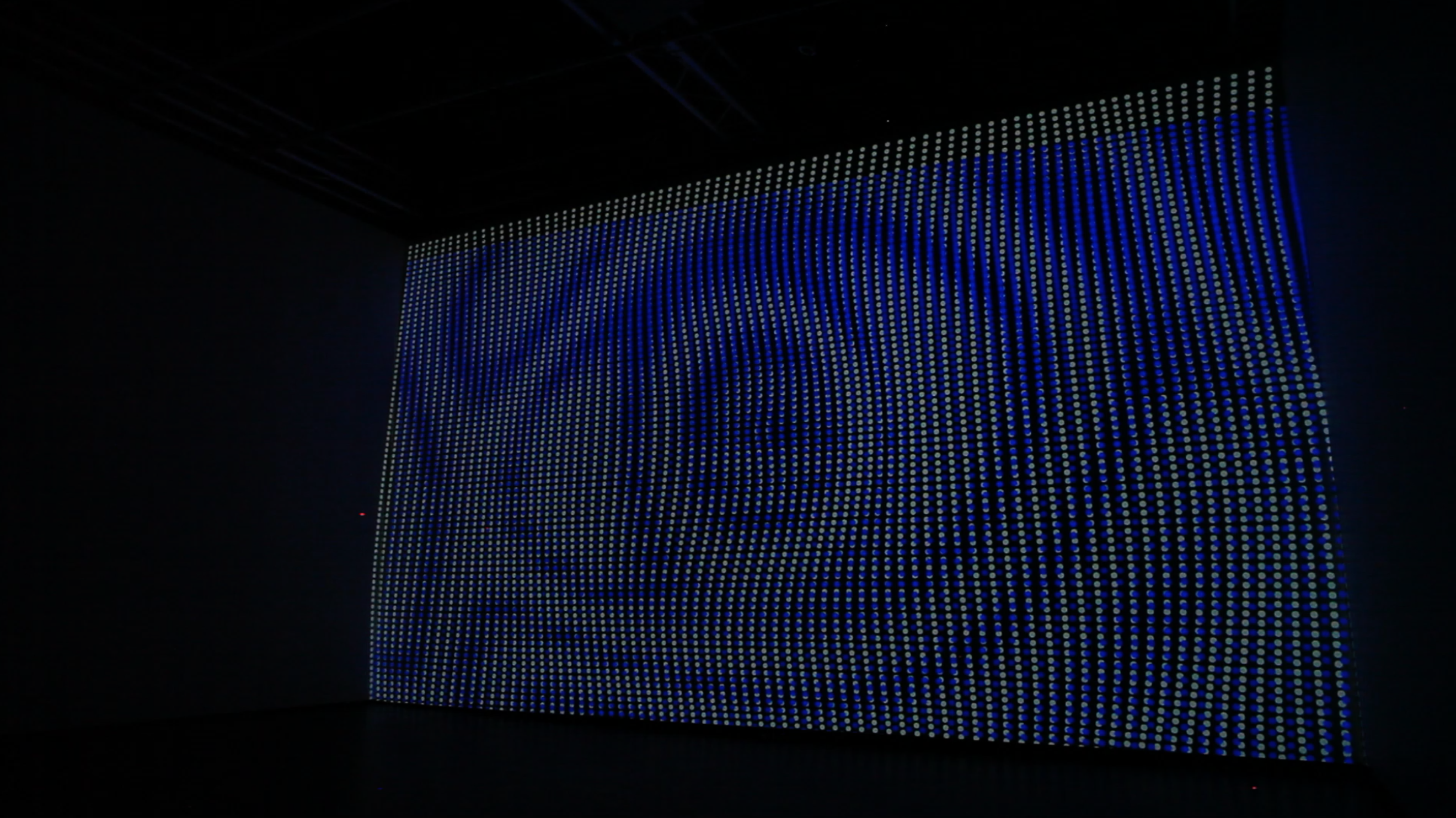

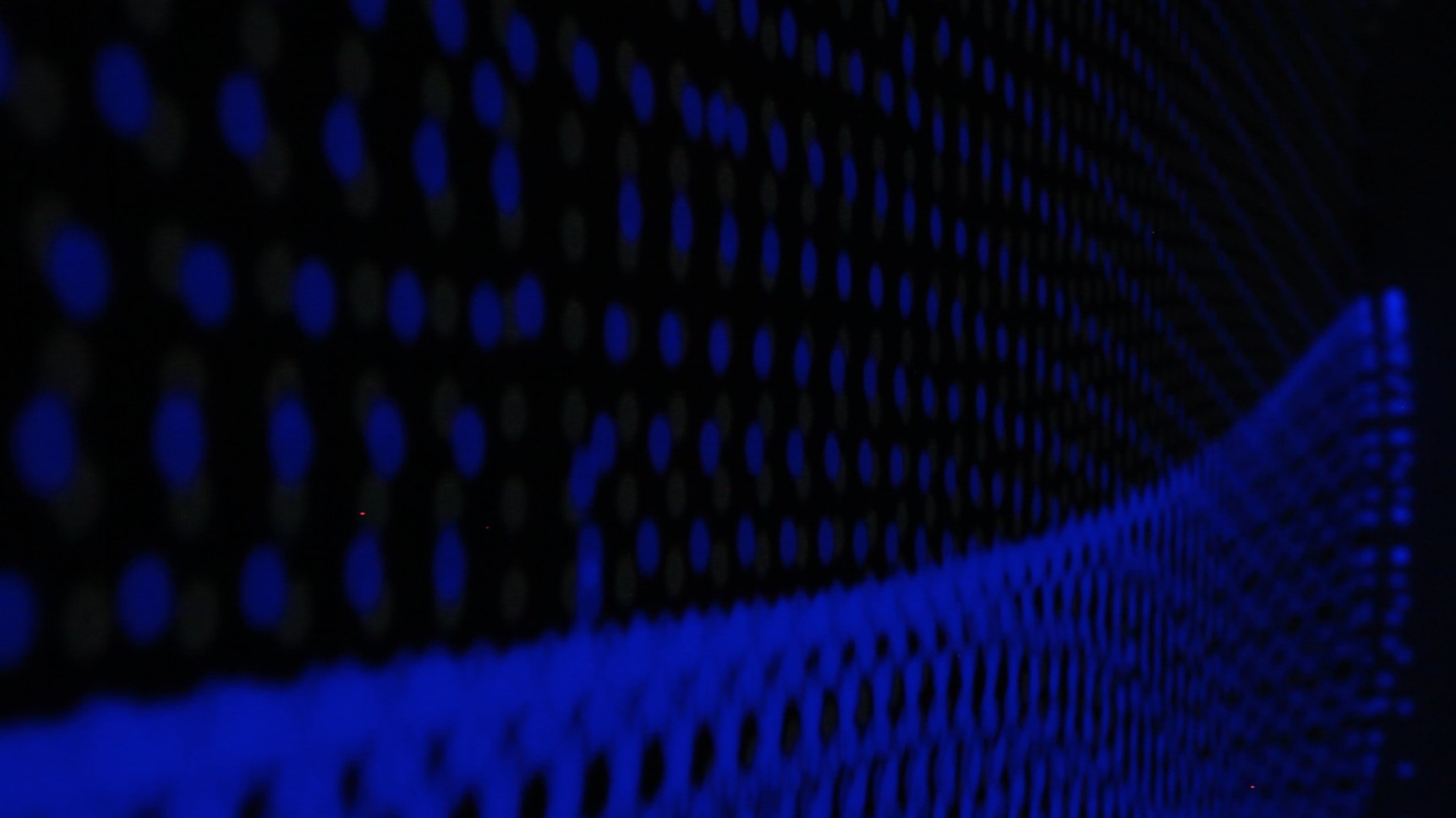

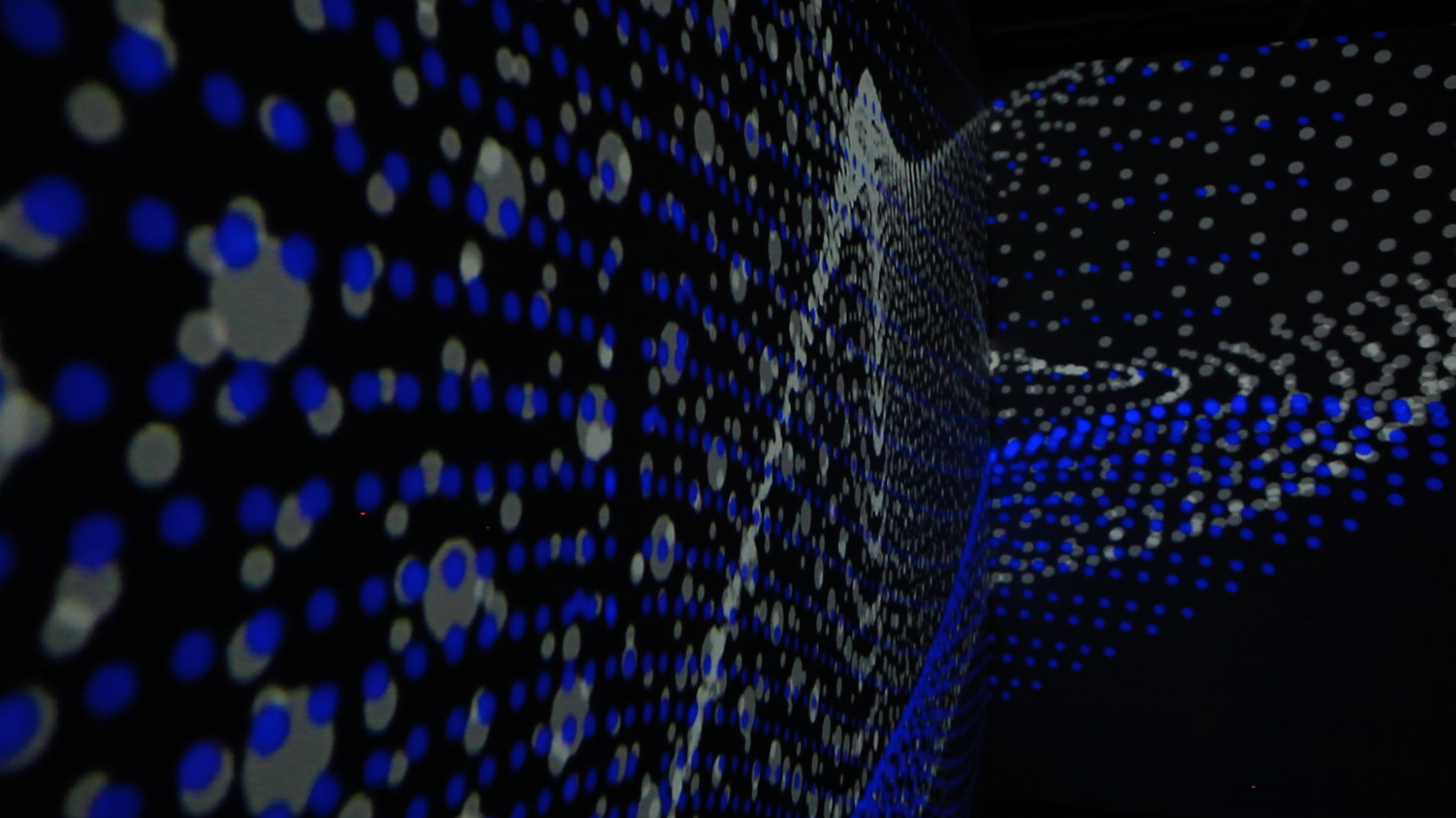

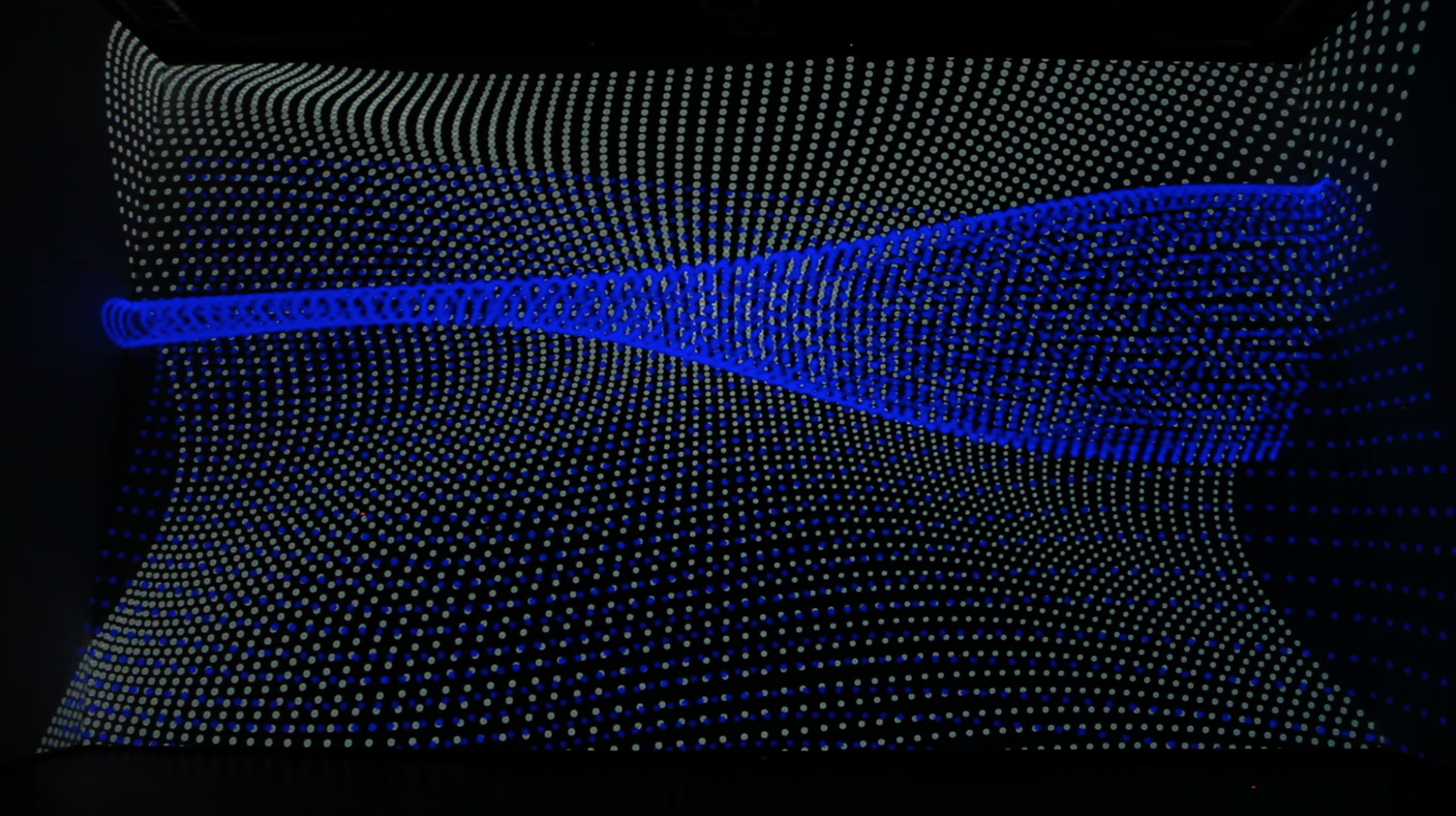

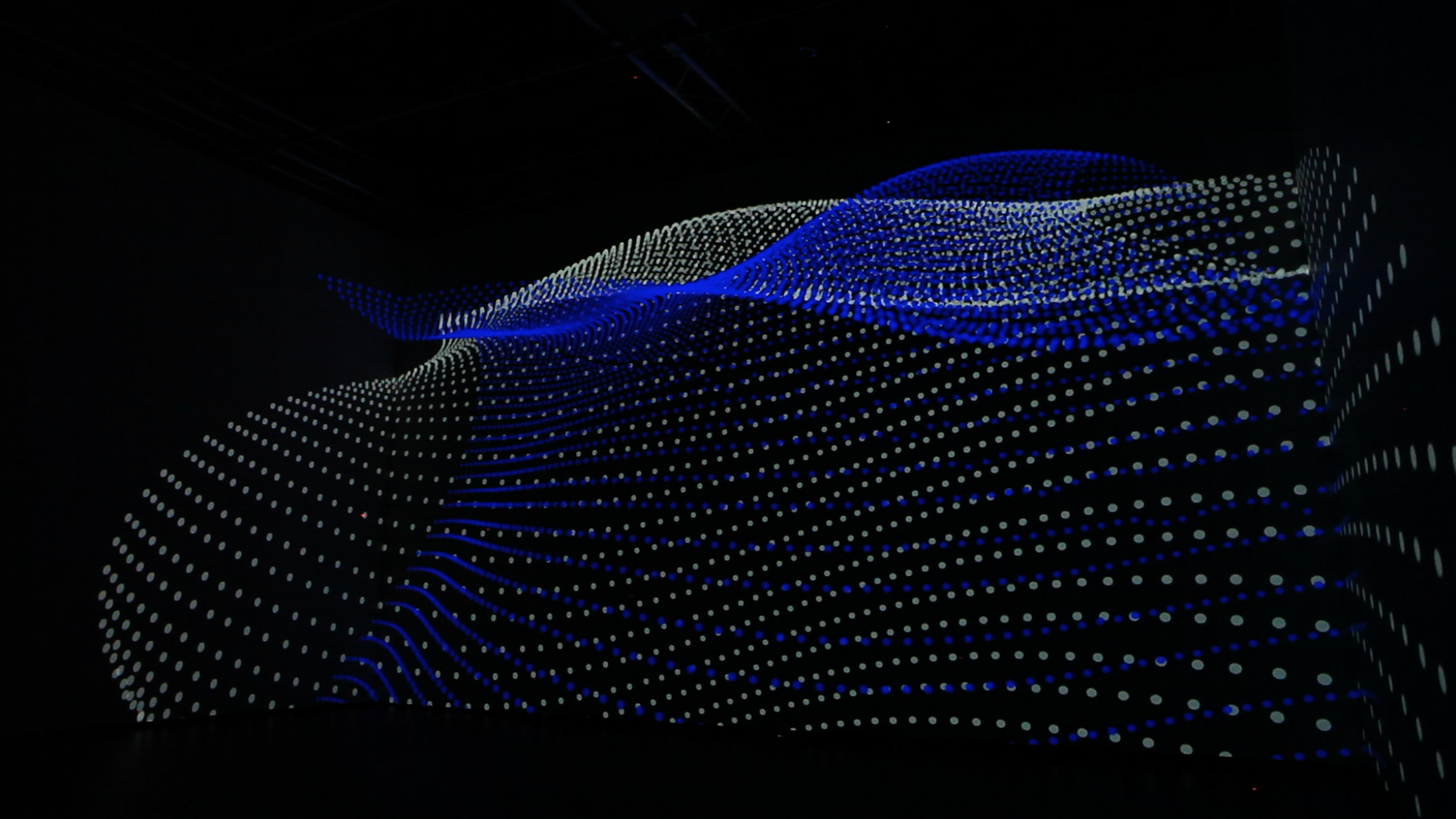

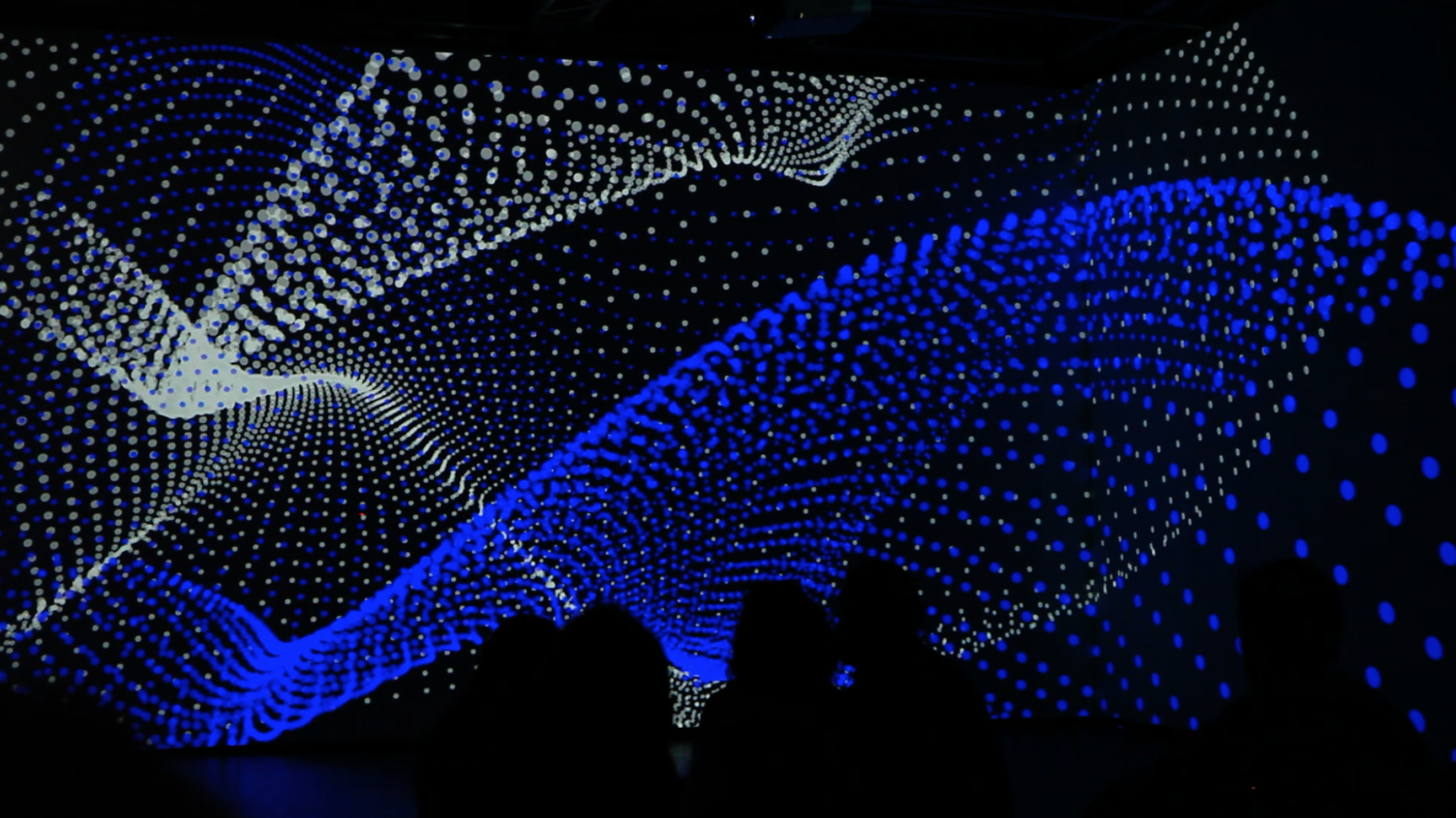

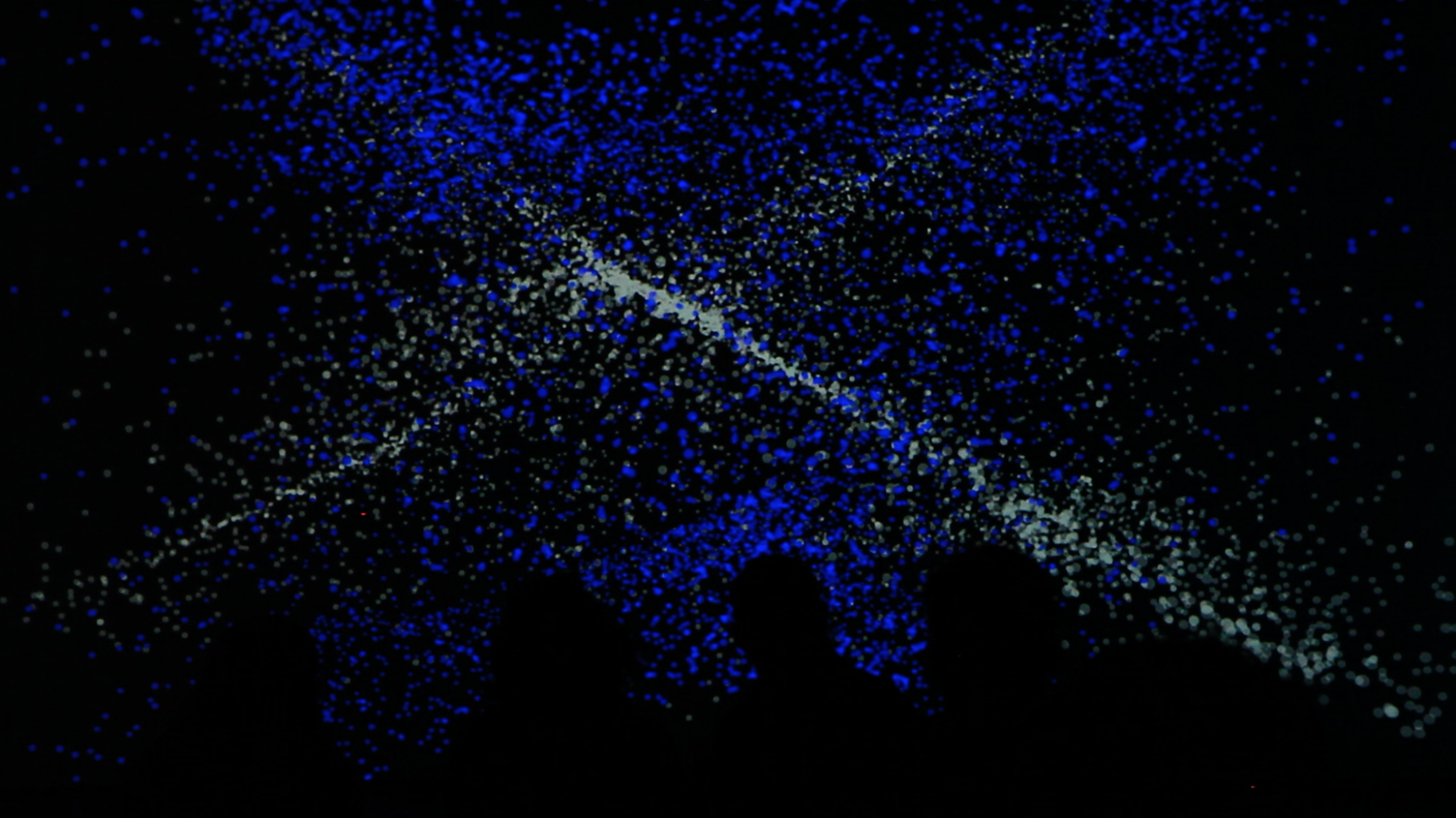

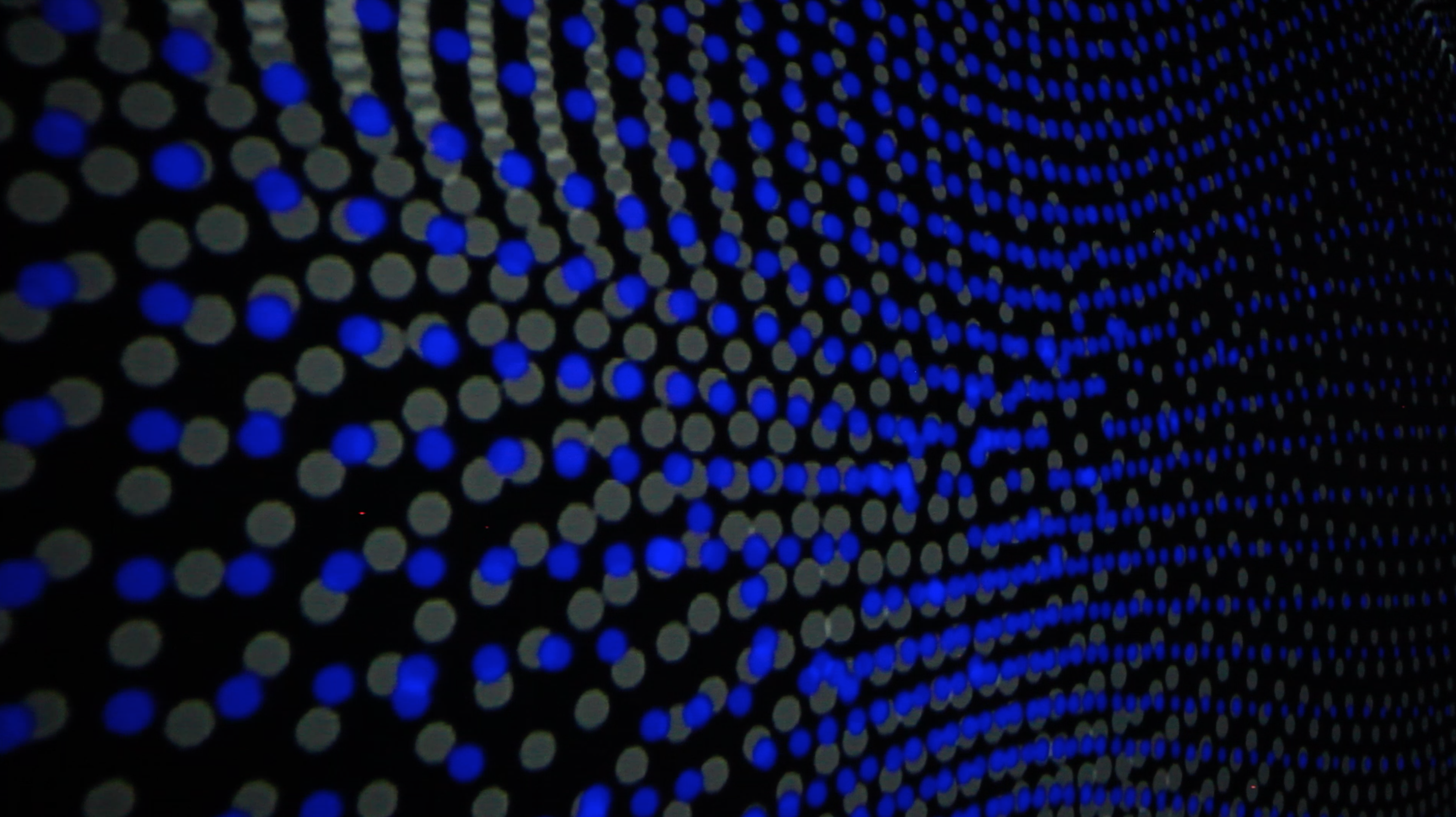

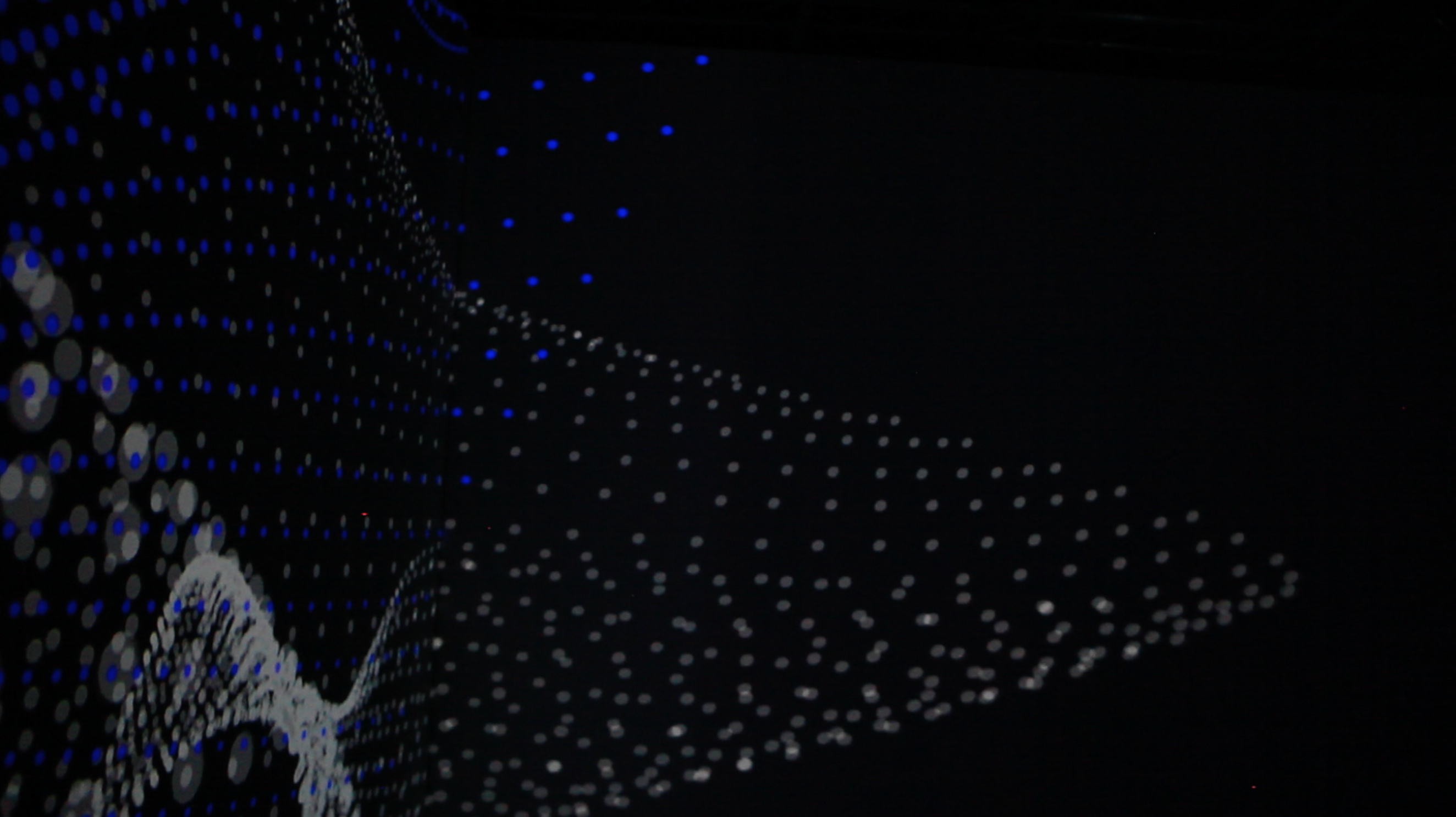

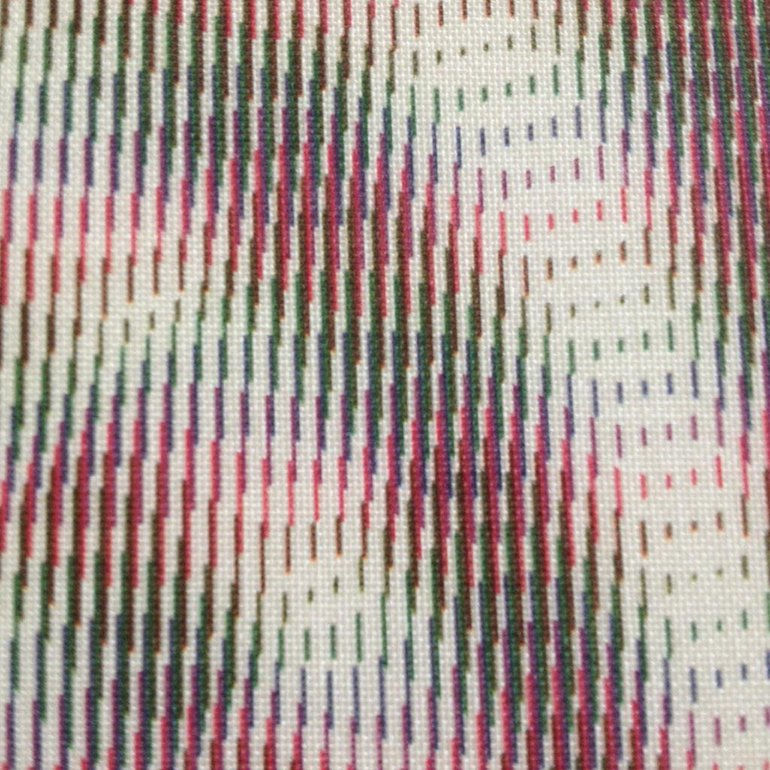

Just as the phasing technique used by Reich creates rhythmic and harmonic audio variation as the piece progresses, I accompanied my music visually with two grid patterns whose movements were powered by the two audio loops. Therefore, as the loops unfurled so too did the grids, creating more and more complex visual distortion. I used grid formations because their rigidity and unnatural form are interesting to transform, and they can be used to hint at natural simulations such as wave patterns and swarming. The blue tone that I used for one grid allowed for a calming effect which accompanied the music well, whilst the second, white grid was more utilitarian as it made it easier for the viewer to see the moiré-esque patterns emerging and disappearing. The work of designer Takahiro Kurashima in his book series Poemotion was of great influence for the visual aspect of my piece. His fascination with 'unseen things and unintentional forms' (ref 3) helped me in my direction along with the work of Refik Anadol, Ali Phi and Leo Villareal.

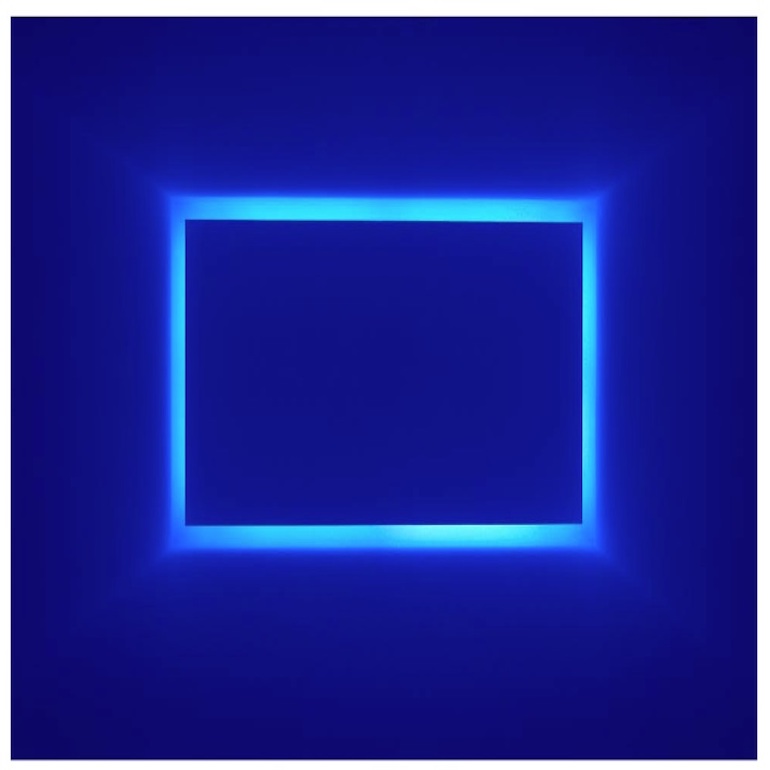

For the first half of the piece, I only projected on to one wall so that the audience would focus all of their attention towards one end of the room and feel confident in the dimensions of the space that they were in. Very slowly, however, I then began to wrap the two grids around the adjacent walls, making it seem as if the back wall had disappeared. I wanted the audience to feel as if they were almost staring into an ever-changing abyss, playing with their sense of time and space. I had experienced this feeling as a child and have been greatly influenced by spending large amounts of time in the Rothko Room at the Tate Modern and by viewing many works by James Turrell.

Inspirational images:

1. Takahiro Kurashima, Poemotion 2

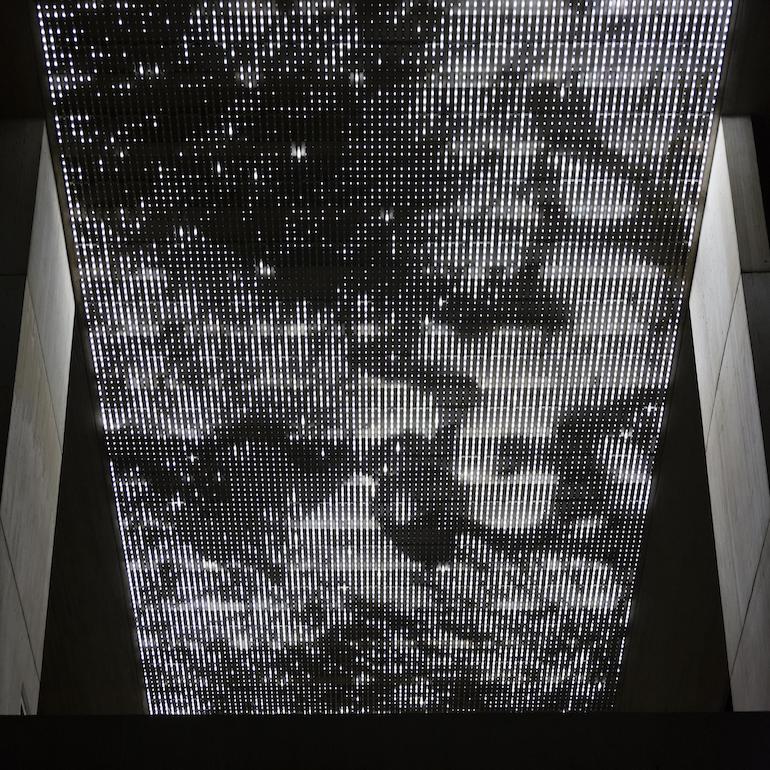

2. Refik Anadol, Infinity Room

3. Ali Phi, Transient Dream

4. Leo Villareal, Cosmos

5. James Turrell, Floater 99

Technical approach

At the heart of my piece is real-time audio analysis, which I then use to control a number of visual transformations. My two piano loops gradually go out of phase with one another and this is visualised by the audio signal of each loop controlling the movement of a separate coloured grid that is being projected. Via OSC, I have control of many different types of visual transformations and distortions of the circles that make up my grids, but the intensity of all of these is controlled by the amplitude of whichever piano loop drives that grid.
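The mapping from loop amplitude to distortion intensity can be illustrated with a minimal sketch. It is written in Python for brevity and is not the openFrameworks code used in the performance; the function names, the smoothing factor and the sine-wave distortion are all assumptions chosen for illustration.

```python
import math

def smooth(previous, target, factor=0.1):
    """Move a fraction of the way from the previous level towards the new one.

    Smoothing is an assumption here: it stops the grid from jittering
    when the measured amplitude jumps between audio buffers.
    """
    return previous + (target - previous) * factor

def displaced_grid(cols, rows, spacing, level, max_offset, time):
    """Return (x, y) circle centres for a grid distorted by an audio level.

    level is the loop's amplitude in the range 0.0 to 1.0. It scales the
    intensity of the distortion, so a silent loop leaves the grid rigid.
    """
    points = []
    for row in range(rows):
        for col in range(cols):
            x = col * spacing
            y = row * spacing
            # Hypothetical distortion: a sine wave travelling along x.
            offset = math.sin(time + col * 0.5) * max_offset * level
            points.append((x, y + offset))
    return points

# Each frame: ease the level towards the latest measured amplitude,
# then rebuild the grid with that level.
level = 0.0
for measured in [0.0, 0.8, 0.8, 0.2]:
    level = smooth(level, measured)
    grid = displaced_grid(cols=4, rows=3, spacing=10, level=level,
                          max_offset=20, time=0.0)
```

In this sketch, one such level would be kept per piano loop, each driving its own grid, so the two grids drift apart visually as the loops drift apart in time.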

I used Ableton Live for all of my audio recording and processing and for the performance itself, as it is my DAW of choice and a very comfortable environment for me to work in. It also allows for robust mapping of MIDI controllers and reliable sending of OSC messages, which was crucial for the audio and visual elements of my piece to interact successfully. I used OpenFrameworks to control the visuals as, throughout the course, I had found that it handled greater numbers of agents more successfully than, say, Processing when experimenting with generative, emergent behaviour. I was pleased with some of my earlier experiments controlling visuals via OSC in OpenFrameworks and I also wanted to give myself the challenge of producing my final piece in it as, moving forward, I hope to continue using the platform for more complex projects. Finally, MadMapper was used to control our projection setup as it had previously been used successfully in the theatre where we were to perform. We had a total of five artists performing in the same space, all with separate and complicated setups, over multiple days. We wanted to put on the best show, as a group, that we could, so it made sense to use a very flexible and robust program such as MadMapper that we could rely upon. Although on opening night we had some technical challenges, overall we managed to achieve very smooth changeovers between artists' performances.
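For readers unfamiliar with OSC, the sketch below shows what a single control message looks like on the wire under the OSC 1.0 encoding: a null-terminated address padded to a multiple of four bytes, a type tag string, then the arguments in big-endian form. It uses only the Python standard library and is an illustration, not the setup used in the piece; the address `/grid/1/intensity` is a hypothetical name.

```python
import struct

def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    padding = (4 - len(data) % 4) % 4
    return data + b"\x00" * padding

def osc_float_message(address, value):
    """Build an OSC message carrying a single 32-bit float argument."""
    # ",f" is the type tag string for one float argument.
    return osc_string(address) + osc_string(",f") + struct.pack(">f", value)

# Hypothetical address for the intensity of the first grid.
packet = osc_float_message("/grid/1/intensity", 0.5)
```

A packet like this would typically be sent over UDP (for example with `socket.sendto`) to whichever port the receiving application listens on; the port is a matter of configuration and is not specified here.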

Prototypes

I knew early on that I wanted to experiment with real-time, audio-controlled visualisation and began by exploring FFT Analysis. With the assistance of Mick Grierson, I used the OpenFrameworks addon ofxMaxim to do so but soon found that it became extremely computationally expensive when trying to manipulate the number of agents that I hoped to. I also found that I had a memory leak when using the addon so, after consulting with Theo Papatheodorou, I decided to simply analyse the volume of the audio signal rather than being concerned with the specific frequencies that were being passed in. This did not diminish the visual effect that I was hoping to achieve and, in fact, made the piece far more efficient and therefore stable for live performance.
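One common way to measure the volume of a buffer is its root-mean-square (RMS) level, which needs only a single pass over the samples and no frequency analysis. The sketch below is a Python illustration under that assumption; it is not necessarily the exact measure used in the piece, and the buffer size, sample rate and test tone are hypothetical.

```python
import math

def rms(samples):
    """Root-mean-square level of one audio buffer (floats in -1.0 to 1.0)."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))

# A hypothetical 512-sample buffer holding a 440 Hz sine at half amplitude.
SAMPLE_RATE = 44100
buffer = [0.5 * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE)
          for n in range(512)]
level = rms(buffer)
```

The result is one number per buffer, so the cost of the analysis stays small and constant however many agents the visuals contain.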

Below are two clips of early prototypes - firstly exploring a flocking simulation and secondly two grid patterns, both being controlled by FFT Analysis.

Self evaluation

I was happy with what I had produced in the weeks leading up to our final exhibition, but it wasn't until I was able to see the projection on the theatre walls that I made some important decisions that I feel made the piece stronger. Mainly this was to do with the amount of time that I waited for the piece to progress, as I was able to be more minimal with the evolution once I saw it at size. Also, I was initially only planning to project on to one wall but once I saw the power of slowly wrapping the piece around the three walls, I spent a lot of time working to get the pacing of this effect correct and I think that this was perhaps one of the strongest elements of the performance.

I would have loved to use more agents within my grids as this would have allowed me to produce even more interesting effects. However, I was not able to do so with the coding approach that I took without sacrificing my framerate, which would have led to a far less smooth effect. To be able to use more agents, I would have had to use my GPU more effectively, using GLSL and shaders. This is currently outside my skillset but certainly an area that I am interested in exploring in the future.

Future development

I would love to produce an installation version of my piece rather than just a performance. I can imagine the piece being around 30 minutes in duration, evolving at an extremely slow pace with the audience being able to walk around and experience it from other angles rather than just sitting in one place.

Also, and far more ambitiously, I would love to produce a mixed reality version of the piece where the audience feels as if they are truly engulfed in the ever-changing visual undulations. Despite using the three walls of the performance space and gradually extending the grids' z axis so that a sense of 3D is felt, it would be very powerful to be able to walk amongst the shapes, almost akin to experiencing an Anthony McCall work.

References

1. https://www.youtube.com/watch?v=GPhxZBRD8ug

2. https://fadmagazine.com/2017/03/16/brian-eno-light-music-book-launch-book-signing/

3. http://www.takahirokurashima.com/cv-.html

4. Light Show, Hayward Publishing

5. Wabi-Sabi Further Thoughts, Leonard Koren

6. Silence, John Cage

7. The Nature of Code, Daniel Shiffman

8. Brian Eno Visual Music, Christopher Scoates

9. Emergence: The Connected Lives of Ants, Brains, Cities and Software, Steven Johnson

Acknowledgements

Much support and code inspiration came from many classes in Workshops In Creative Coding by Theo Papatheodorou, particularly week 18, OSC messaging example code, and Programming for Artists 2 by Lior Bengai, particularly week 9, Emergent.

I wish to thank Hideyuki Saito for creating the ofxOSC addon, Mick Grierson for creating the ofxMaxim addon and Anthony Stellato for creating the ofxSyphon addon, along with the audio in example code that comes with OpenFrameworks as without these my project would not have been possible.

I'd also like to thank Annie, Chris, Ben and Friendred who all performed amazingly throughout the exhibition and worked so hard in the lead up. By the end we truly felt like a collective, as interested in and driven by the success of each other's work as much as our own.