Emotional Hat Matching Game

This project started from the intention to mix different types of input device in the same game. In this case I use a traditional physical console, with two potentiometers and two buttons, to control where the geometries drop, and a webcam with a face recognition algorithm to match facial expressions with the geometries.

produced by: Natthakit Kangsadansenanon

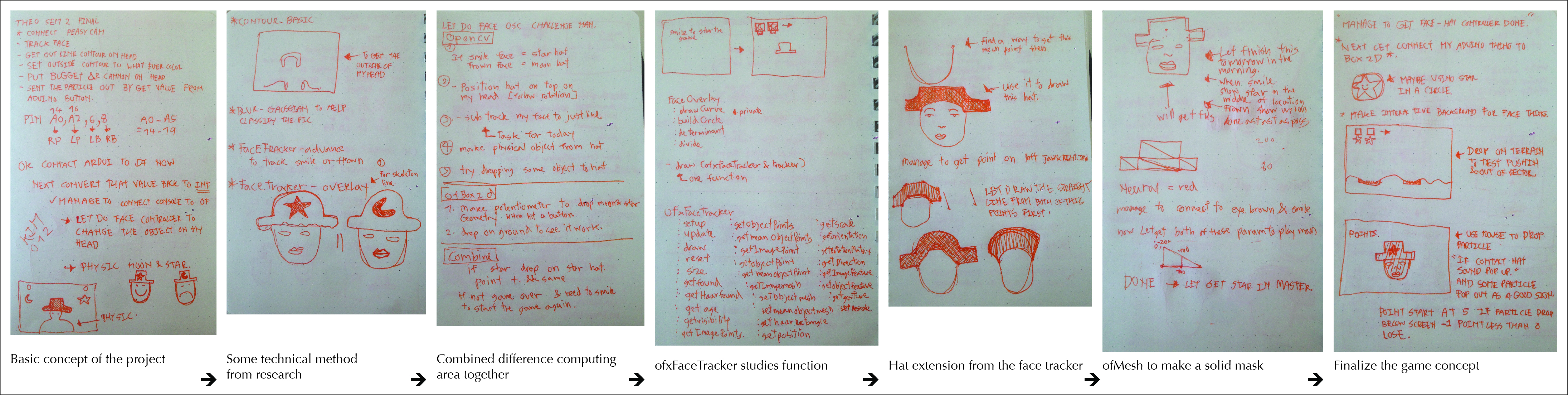

Start of the project

I started this project from the algorithm areas that I am interested in:

- The communication between Arduino and openFrameworks.

- Box2D, the physics engine behind games like Angry Birds. I heard about this library from a Daniel Shiffman video and became interested in it.

- ofxFaceTracker, the computer vision addon from Kyle McDonald, to use different facial expression parameters to control the output.

My approach was to learn from the project examples that come with each addon. I reviewed all of them, picked the parts that were useful for my project, combined them, and lastly modified the result to my preferences.

Below: The sketch of my project path.

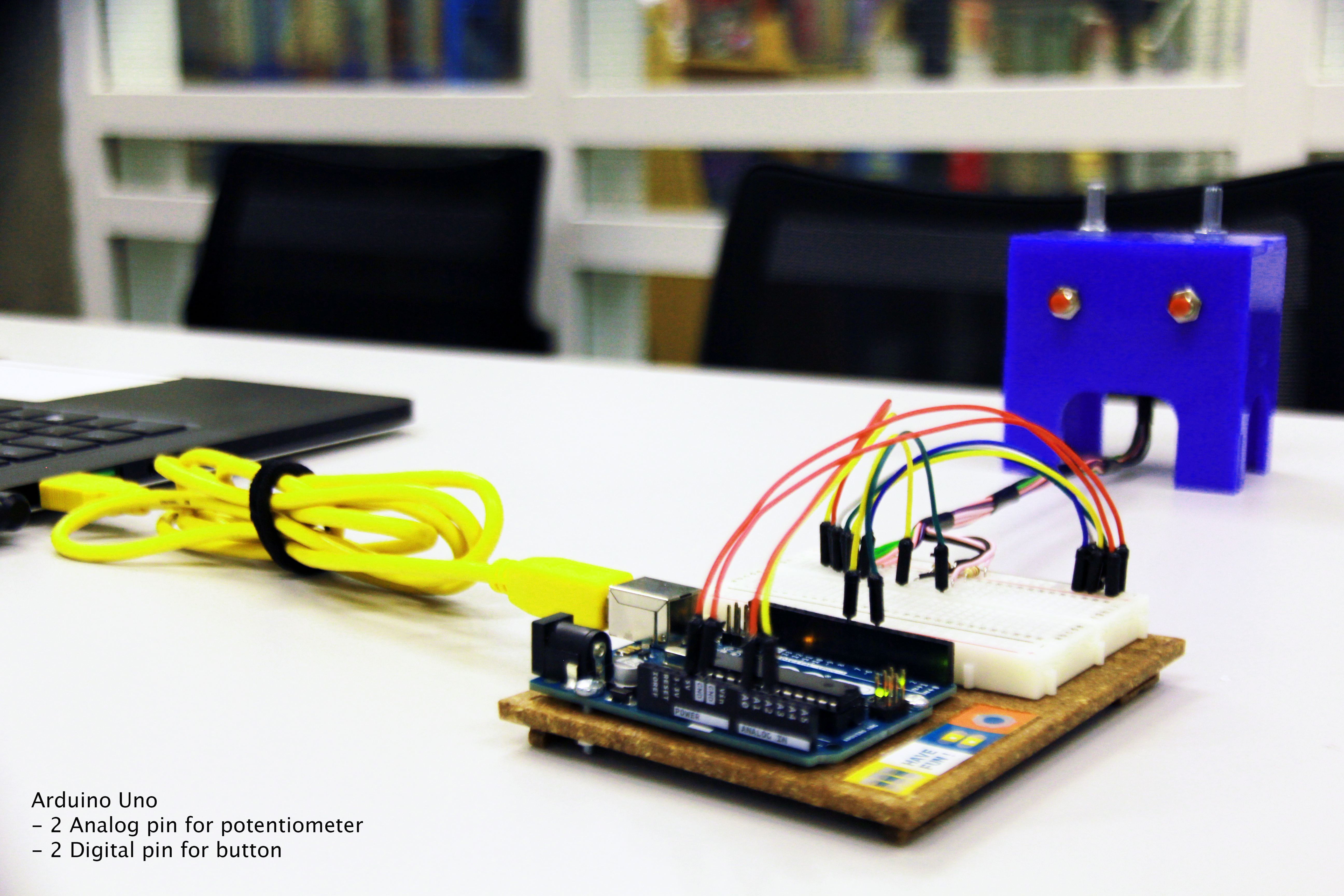

Arduino

Let's start with the Arduino console. I use ofSerial to communicate between the two components because ofArduino simply did not work on my laptop. My first step was in the Arduino IDE. Sending a value from one component is quite easy, but sending values from two potentiometers and two buttons requires more setup, because ofSerial does not distinguish between components: it only delivers one mixed stream of new values. So I needed to attach a string label before each value. On the openFrameworks side I check that label to decide which component each value belongs to. These values are later mapped to the interactivity of the project.
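The labelling idea can be sketched in plain C++ as follows. This is only an illustration under stated assumptions, not the code used in the project: the labels `P1`, `P2`, `B1`, `B2`, the `label:value` line format ended by a newline, and the names `ConsoleState`, `applyLine` and `LineReader` are all hypothetical.

```cpp
#include <cstdlib>
#include <string>

// Latest reading from each control on the console.
struct ConsoleState {
    int pot1 = 0;          // raw potentiometer value
    int pot2 = 0;
    bool button1 = false;  // true while pressed
    bool button2 = false;
};

// Apply one complete line such as "P1:512" to the state.
// Returns false when the label is unknown or no number follows it.
// The labels are hypothetical; any unique prefix per control would do.
bool applyLine(const std::string& line, ConsoleState& state) {
    const std::size_t colon = line.find(':');
    if (colon == std::string::npos || colon + 1 >= line.size()) return false;

    const std::string label = line.substr(0, colon);
    const std::string valueText = line.substr(colon + 1);

    char* end = nullptr;
    const long value = std::strtol(valueText.c_str(), &end, 10);
    if (end == valueText.c_str()) return false;  // no digits were parsed

    if (label == "P1") state.pot1 = static_cast<int>(value);
    else if (label == "P2") state.pot2 = static_cast<int>(value);
    else if (label == "B1") state.button1 = (value != 0);
    else if (label == "B2") state.button2 = (value != 0);
    else return false;
    return true;
}

// Collects raw serial bytes and applies each finished line.
// Serial data can arrive split at any point, so partial lines are buffered.
class LineReader {
public:
    // Returns the number of lines that were successfully applied.
    int feed(const std::string& bytes, ConsoleState& state) {
        int applied = 0;
        for (char c : bytes) {
            if (c == '\n') {
                if (applyLine(buffer_, state)) ++applied;
                buffer_.clear();
            } else if (c != '\r') {
                buffer_ += c;
            }
        }
        return applied;
    }

private:
    std::string buffer_;
};
```

In an openFrameworks app the bytes passed to `feed` would come from `ofSerial`, read once per frame while data is available. The potentiometer values in `ConsoleState` could then be mapped to screen coordinates for the drop point.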

Below: The image of the console.

Below: A demonstration of the input interaction that this console provides.

ofxBox2d

Box2D is a physics engine that calculates how 2D shapes collide within a simulated world. I adopted this library so that I don't need to write my own particle and particle system classes to move the geometry on the screen. After spending time working through the project examples, two of them caught my eye. The first is the bodies example, from which I learned the basic principles of the library. The second is the event listener example, which detects when objects collide on the screen. In that example the algorithm plays a sound and changes the color of an object on contact, then returns it to normal when the contact ends. I later implemented both of these lessons in my project.
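The contact behaviour described above can be sketched without the physics engine. The following is a minimal illustration, assuming the engine reports a start and an end callback for every pair of touching bodies. The names `Shape`, `onContactStart` and `onContactEnd`, and the color names, are hypothetical and are not part of ofxBox2d.

```cpp
#include <string>

// Minimal stand-in for a shape on screen.
struct Shape {
    int activeContacts = 0;  // number of bodies currently touching this one

    bool touching() const { return activeContacts > 0; }

    // Hypothetical color names; a real app would pick a draw color here.
    std::string color() const { return touching() ? "highlight" : "normal"; }
};

// Call when two shapes begin touching.
// Returns true when at least one of them was not touching anything before,
// which is a reasonable moment to trigger a sound.
bool onContactStart(Shape& a, Shape& b) {
    const bool firstTouch = !a.touching() || !b.touching();
    ++a.activeContacts;
    ++b.activeContacts;
    return firstTouch;
}

// Call when two shapes stop touching.
// A shape returns to its normal color once its last contact ends.
void onContactEnd(Shape& a, Shape& b) {
    if (a.activeContacts > 0) --a.activeContacts;
    if (b.activeContacts > 0) --b.activeContacts;
}
```

Counting contacts, rather than storing a single flag, keeps a shape highlighted while it still touches a second body after the first one has moved away.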

ofxFaceTracker

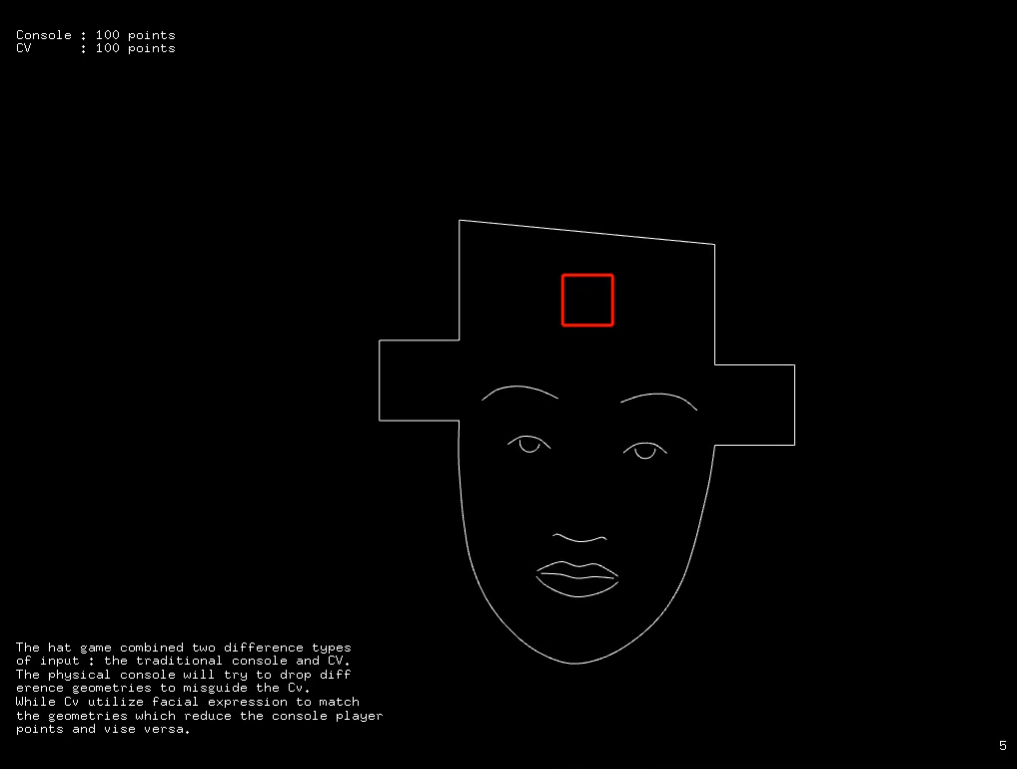

My first mission for this addon was to make a hat that follows my face and changes its style according to my expression. After working out how the library operates, I was able to extract the last points on the left and right jaw to use as the start and the end of the hat polyline. Lastly, I needed to match a geometry to each expression and put it in the middle of the hat. In this case, square = raised eyebrows, X = neutral face and O = smile.
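The expression-to-geometry mapping can be sketched as a small classifier. This is an illustration only: it assumes the tracker supplies a mouth width and an eyebrow height (ofxFaceTracker exposes gesture values of this kind through `getGesture`), and the threshold numbers, the rule that a smile takes priority over raised eyebrows, and the names `FaceMeasurements`, `Thresholds` and `classify` are all assumptions.

```cpp
// The three geometries shown in the middle of the hat.
enum class HatShape { Cross, Circle, Square };

// Measurements taken from the tracked face, in the tracker's own units.
struct FaceMeasurements {
    float mouthWidth;
    float eyebrowHeight;
};

// Hypothetical thresholds; real values would need tuning per face and camera.
struct Thresholds {
    float smileMouthWidth = 15.0f;
    float raisedEyebrowHeight = 8.5f;
};

// Map an expression to a geometry:
// smile -> O, raised eyebrows -> square, anything else -> X.
// A smile is checked first, so it wins when both conditions hold (an assumption).
HatShape classify(const FaceMeasurements& face,
                  const Thresholds& limits = Thresholds()) {
    if (face.mouthWidth > limits.smileMouthWidth) return HatShape::Circle;
    if (face.eyebrowHeight > limits.raisedEyebrowHeight) return HatShape::Square;
    return HatShape::Cross;
}
```

Keeping the thresholds in their own struct makes it easy to calibrate them per player without touching the classification logic.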

Below: The video demonstrates the input method.

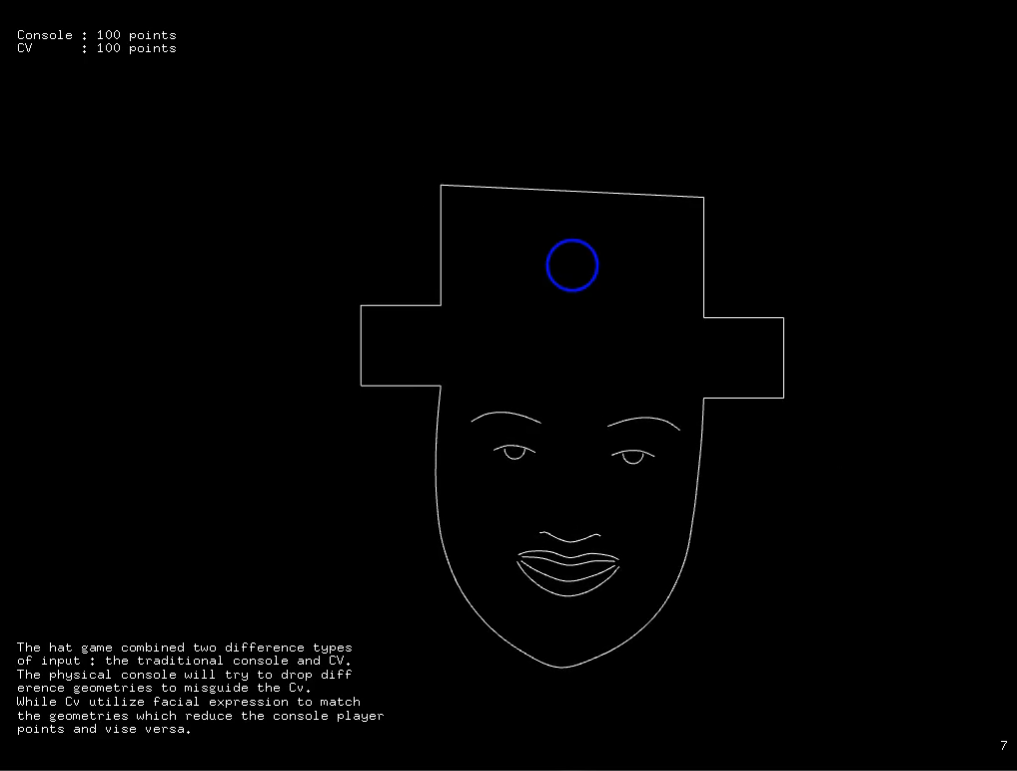

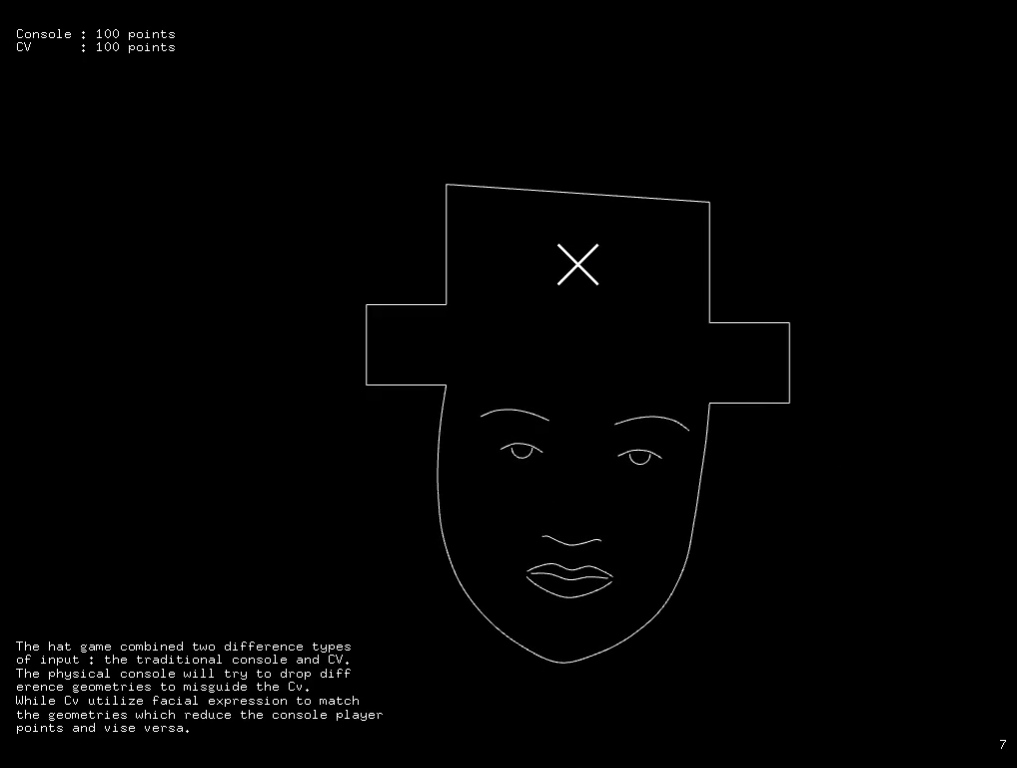

Combine it together

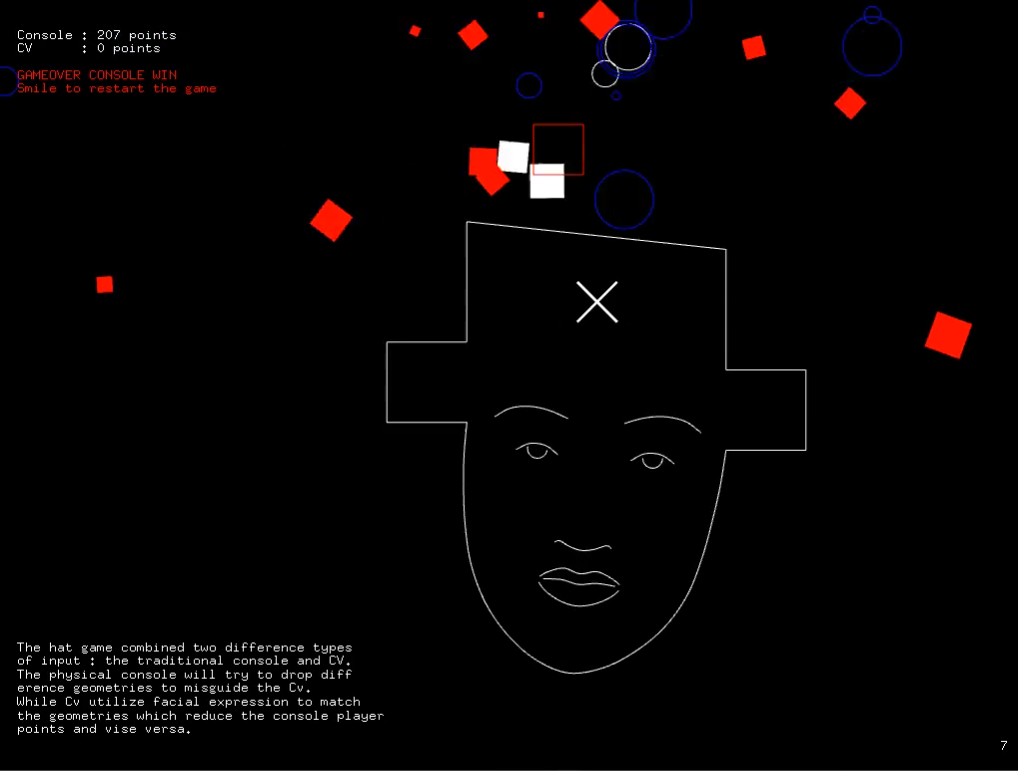

In the matching game, the console player gains points when the CV player fails to match the geometries, and loses points when the CV player manages to match their facial expression with the shape. Both players start with 100 points. Whoever reaches zero first loses the game. The game restarts when it detects a smile.

The physical console is designed to control the drop point of both the circle and the square geometries. The buttons release the geometries.

- The logic of the game is that the CV player only gains points when the geometries bounce off each other, so the CV player also needs to move to where the object drops so that it bounces off the top of the hat. Meanwhile, the console player moves the drop point away from where the face is.
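The scoring rules above can be sketched as a small state update. This is one possible reading of the rules, not the project's actual code: the number of points exchanged per drop (`stake`), the clamping at zero, and the names `Geometry`, `Score`, `resolveDrop` and `loser` are assumptions.

```cpp
#include <algorithm>

enum class Geometry { Cross, Circle, Square };
enum class Loser { None, Console, Cv };

// Both players start with 100 points.
struct Score {
    int console = 100;
    int cv = 100;
};

// Resolve one dropped geometry.
// The CV player scores only if the geometry bounced off the hat AND the
// expression shown on the hat matches it; otherwise the console player scores.
// `stake` is a hypothetical number of points exchanged per drop.
void resolveDrop(Score& score, Geometry dropped, Geometry expression,
                 bool hitHat, int stake = 10) {
    const bool cvScores = hitHat && dropped == expression;
    if (cvScores) {
        score.cv += stake;
        score.console -= stake;
    } else {
        score.console += stake;
        score.cv -= stake;
    }
    // Scores never go below zero.
    score.console = std::max(0, score.console);
    score.cv = std::max(0, score.cv);
}

// The first player to reach zero loses.
Loser loser(const Score& score) {
    if (score.console == 0) return Loser::Console;
    if (score.cv == 0) return Loser::Cv;
    return Loser::None;
}
```

Once `loser` returns something other than `Loser::None`, assigning a fresh `Score` when a smile is detected would restart the game.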

Below: Scene examples in the program

Vision for the future

I would like to explore more games in which each player uses a different input device to interact with the environment. Of course, not every device will offer the same advantage, but I believe software can help us balance this.

Reference:

1. Project examples from ofxBox2d and ofxFaceTracker, some of whose functions I modified for use in this project.